Leaderboard

Popular Content

Showing content with the highest reputation on 03/27/17 in all areas

-

This is not actually a feature request (something to add to the unRAID distro), but more of a request for an improvement in the unRAID release program. I doubt that Tom will like it(!), even if he recognizes its usefulness, because it clearly adds more work for him and his staff. But I think it's worth discussing.

Increasingly, I've been thinking that we may be getting too close to the bleeding edge. We have strongly competing interests, driving interests, that can't be fully reconciled. We want the long-tested stability of a base system, the NAS, the rock-solid foundation of our data storage. But we also want the latest technologies (the latest Docker features, the latest KVM/QEMU tech, full Ryzen support, etc.), and that means the latest kernels. In my view, it's impossible to do both well. We're trying now, not far behind the latest kernels, but we're seeing more and more issues that seem related to less-tested additions and changes to the kernel. For example, a whole set of HP machines is unsupported, and there are numerous Call Traces and other instabilities that I really don't think would be present in older but well-patched kernel tracks. But some users are now anxiously awaiting kernels 4.10 and 4.11!

So what I'm proposing for consideration is moving to two-track releases: a stable track and a 'bleeding edge' track (someone else can come up with a better name). Currently, 6.2.4 would be the stable release, and 6.3.2 would be the leading-edge release. 6.1.9 was a great release, considered very stable. It was based on kernel 4.1.18, the 18th patch release of Linux kernel 4.1. 6.2.4 was a great stable release. It was based on kernel 4.4.30, the 30th patch release of 4.4. We're currently on 6.3.2, using kernel 4.9.10. What I would like to see is the stable release stay farther back, and only move to a new kernel track when that track reaches its 15th or 20th patch release (just my idea). We can still backport easy features, cosmetic features, and CVE fixes.
Then Tom can be free to move ahead with whatever he wants to add to the latest 'leading edge' releases, with a little less concern for the effects, because there's always a safer alternative. A nice side effect is that the stable releases won't need betas, just an occasional RC or two when the move up is larger than usual, since practically everything added to them has already been tested in the newest releases. Small non-critical features can be added to both tracks, but larger or riskier features always go in the risky track, not the stable one, and are only added to the stable releases once they are clearly stable themselves.

I know this is more work, and that may make it impractical. I wonder if Tom could concentrate on the latest versions, while Eric maintains and builds the stable releases. Finding ways to automate the build processes could help too. But this could keep two very different sets of unRAID users happy: those who want stability first and foremost, and those who want the latest tech. Naturally, there are many who want both, but I personally think it can't be done, at least not done well.

4 points
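The kernel rule in the two-track proposal above is simple enough to express as a check. This is a hypothetical sketch, not anything Lime-Tech uses: the threshold of 15 is an assumption taken from the low end of the "15th or 20th" suggestion, and the function names are made up for illustration.

```python
# Hypothetical policy sketch: the stable track only moves to a new kernel
# series once that series has accumulated a minimum number of patch releases.
MIN_PATCH_LEVEL = 15  # assumption: low end of the "15th or 20th" idea


def parse_kernel(version: str) -> tuple[int, int, int]:
    """Split a version such as '4.9.10' into (major, minor, patch)."""
    parts = [int(p) for p in version.split(".")]
    while len(parts) < 3:  # treat '4.11' as '4.11.0'
        parts.append(0)
    return parts[0], parts[1], parts[2]


def ready_for_stable(version: str, min_patch: int = MIN_PATCH_LEVEL) -> bool:
    """True if the kernel series has reached the minimum patch level."""
    return parse_kernel(version)[2] >= min_patch


if __name__ == "__main__":
    # unRAID releases and their kernels, as cited in the post
    for release, kernel in [("6.1.9", "4.1.18"), ("6.2.4", "4.4.30"), ("6.3.2", "4.9.10")]:
        print(release, kernel, ready_for_stable(kernel))
```

By this rule, 4.1.18 and 4.4.30 would qualify for the stable track, while 4.9.10 would stay on the leading-edge track for now.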

-

Hi, guys. I was frustrated that we couldn't use the TechPowerUp vbios files in our VMs, and I have discovered it is due to a header added for later Nvidia cards, which isn't compatible with KVM (the header is for the NVFlash program). So in this video, you will see how to pass through an Nvidia GPU as primary in a KVM virtual machine on unRAID by removing the header from a vbios dumped by GPU-Z or downloaded from TechPowerUp, making it compatible with a KVM virtual machine. This is very useful, as techpowerup.com has an extensive database of GPU vbioses. Also, if you wanted to dump your own vbios, previously you needed 2 GPUs and 2 PCIe slots to do it. Now you can dump it easily using GPU-Z, then convert it for KVM. How to easily pass through an Nvidia GPU as primary without dumping your own vbios!

2 points
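As a rough illustration of the header-removal step: the approach commonly described for these dumps is to open the file in a hex editor, search for the text "VIDEO", and delete everything before the 0x55 0xAA option-ROM signature that precedes it. The sketch below automates that under the same assumption. It is my own approximation, not necessarily the exact procedure shown in the video, so compare the output against a hex view before handing it to a VM.

```python
import sys

ROM_SIGNATURE = b"\x55\xaa"  # standard PCI expansion-ROM signature
MARKER = b"VIDEO"            # text the manual hex-editor method searches for


def strip_nvflash_header(data: bytes) -> bytes:
    """Return the vbios with everything before the ROM signature removed.

    Assumes the real ROM starts at the last 0x55AA found before the first
    'VIDEO' marker; anything ahead of it is treated as the NVFlash header.
    """
    marker_pos = data.find(MARKER)
    if marker_pos == -1:
        raise ValueError("no 'VIDEO' marker found; is this an Nvidia vbios dump?")
    start = data.rfind(ROM_SIGNATURE, 0, marker_pos)
    if start == -1:
        raise ValueError("no 0x55AA signature before the 'VIDEO' marker")
    return data[start:]


def convert(src: str, dst: str) -> int:
    """Write the trimmed ROM to dst and return how many bytes were removed."""
    with open(src, "rb") as f:
        data = f.read()
    trimmed = strip_nvflash_header(data)
    with open(dst, "wb") as f:
        f.write(trimmed)
    return len(data) - len(trimmed)


if __name__ == "__main__" and len(sys.argv) == 3:
    removed = convert(sys.argv[1], sys.argv[2])
    print(f"removed {removed} header bytes")
```

Usage would be something like `python strip_header.py dump.rom trimmed.rom` (file names hypothetical). If the file has no header, the signature is found at offset 0 and nothing is removed.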

-

I'll chime in with a little-talked-about unRAID advantage. Since unRAID does not stripe data across multiple spindles, if a hard drive does fail and for whatever reason the files stored on it cannot be recovered, there is still a possibility of using a "data rescue" service to recover your files. The cost to do so for a single drive might be 4 figures, but the cost to recover files from the same situation with a typical RAID array might be up to 10x that. Granted, this level of protection is not worth the cost for lots of people, but backups do fail. Also, if you use a single parity disk, you can suffer one complete drive failure and still recover your data. If you use dual parity, you can suffer two complete simultaneous drive failures and still recover your data. If you suffer more simultaneous drive failures than can be recovered, at least you still have the data on the drives that didn't fail, whereas with a typical RAID array that could mean complete data loss. If you use Seagate IronWolf Pro drives, you get 2 years of free data rescue service from Seagate, so use those to store your wedding pictures.

2 points
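To make the difference concrete, here is a deliberately simplified model of which data survives when more disks fail than parity can cover. It is an illustration under my own assumptions (whole disks either work or don't, no filesystem damage, no partial reads), not how unRAID or any RAID controller is implemented; the function names are hypothetical.

```python
Disks = set[str]


def unraid_readable(data_disks: Disks, parity_count: int, failed: Disks) -> Disks:
    """Data disks whose files survive when each disk holds whole files.

    `failed` may include parity disk names as well as data disk names.
    """
    if len(failed) <= parity_count:
        return set(data_disks)        # parity can rebuild every failed disk
    return set(data_disks) - failed   # survivors are still readable on their own


def striped_readable(data_disks: Disks, parity_count: int, failed: Disks) -> Disks:
    """Same question for a striped array, where every file spans every disk."""
    if len(failed) <= parity_count:
        return set(data_disks)
    return set()                      # every file is missing some of its stripes


if __name__ == "__main__":
    disks = {"disk1", "disk2", "disk3", "disk4"}
    lost = {"disk1", "disk2"}         # two failures with single parity
    print(sorted(unraid_readable(disks, 1, lost)))
    print(sorted(striped_readable(disks, 1, lost)))
```

With two failures and single parity, the non-striped model still returns disk3 and disk4, while the striped model returns nothing.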

-

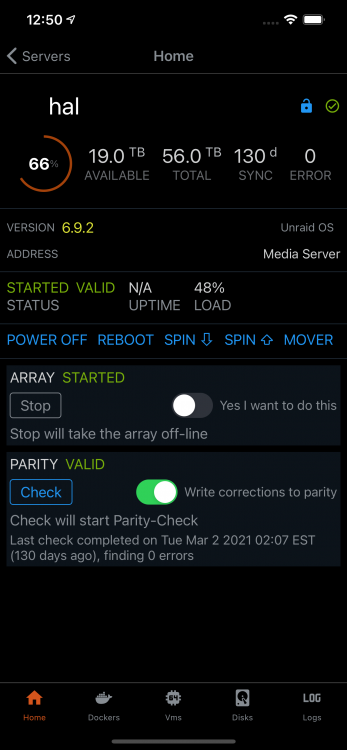

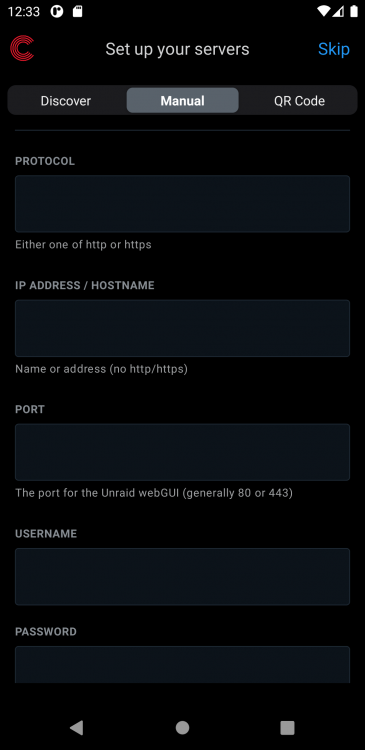

ControlR works from inside your local network and assists you in managing your unRAID servers.

Features:
- Manage multiple servers from a beautiful user interface
- Manage dockers and virtual machines (start, stop, remove and more)
- System theme support (light and dark mode)
- Power on/off a server
- Start/Stop an array
- Spin down/up a disk
- Show the banner for a server (including custom banners)
- Automatic server discovery (in a LAN environment)
- User confirmation for sensitive operations (configurable)
- And more!

Read the Privacy Policy before purchasing.
Purchase at https://www.apertoire.com/controlr/
Frequently Asked Questions
Request Features / Provide feedback
Screenshots

1 point

-

1 point

-

Hey gang, just wanted to drop a quick update. Those of you who have been reading the "Anybody planning a Ryzen build" thread know that I've purchased a Ryzen system to upgrade my server. You also know that there is a problem with Ryzen + unRAID, a combination that causes random crashes. For the past 2+ weeks, I've been troubleshooting these issues and trying to find a solution. This has consumed all my free time, and my unRAID server is offline due to my testing. Long story short, I got sidetracked and haven't been able to do anything with the Tunables Tester script. I plan to wrap up my Ryzen testing this week, regardless of the outcome; worst case, I will put the old motherboard back in to get my server back up. Then I will resume development of this script. Sorry for the delay after the big tease. -Paul

1 point

-

It depends on your hardware: dual parity depends on your CPU, and beyond that, even more so on your SATA connections. Going by the speeds reported on these forums, if you're using 8TB drives you will never see parity check times better than 16 hours, and I think most reported times were over 20 hours. If you want 12-13 hour parity checks, you will have to drop to 4TB 7200rpm drives.

1 point
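The arithmetic behind these estimates, assuming a parity check has to read the full capacity of the parity drive and that capacities are in decimal terabytes as drive makers quote them: a time target translates directly into a required average read speed across the whole platter. The function names are my own.

```python
SECONDS_PER_HOUR = 3600


def parity_check_hours(capacity_tb: float, avg_mb_s: float) -> float:
    """Hours to read capacity_tb (decimal TB) at an average speed in MB/s."""
    return capacity_tb * 1e12 / (avg_mb_s * 1e6) / SECONDS_PER_HOUR


def required_avg_speed(capacity_tb: float, hours: float) -> float:
    """Average MB/s needed to finish a full pass in the given number of hours."""
    return capacity_tb * 1e12 / (hours * SECONDS_PER_HOUR) / 1e6


if __name__ == "__main__":
    print(f"8TB in 16h needs {required_avg_speed(8, 16):.0f} MB/s")
    print(f"8TB in 20h needs {required_avg_speed(8, 20):.0f} MB/s")
    print(f"4TB in 12.5h needs {required_avg_speed(4, 12.5):.0f} MB/s")
```

So a 16-hour check on 8TB implies an average of about 139 MB/s, 20 hours about 111 MB/s, and a 12.5-hour check on 4TB only about 89 MB/s.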

-

Yes, I was running this plugin just fine on my motherboard. The driver for my motherboard is nct6779. I installed Perl (from NerdPack), plus the Dynamix Fans and System Temp plugins. One of them (I don't recall which) can search for the correct driver, but only if you have Perl installed. Once it finds the driver, you have to both "Save" and "Load" it. Some of the voltages appear wrong (like CPU and RAM), and my CPU and motherboard temps appeared to be swapped in the defaults, but otherwise it worked, and the fan speeds were accurate. I understand regarding your LAMP server; I'm just happy you're up and running. Luckily there are lots of other users around, so maybe one of them can try the test. Myself, I plan to install a Win10 VM... -Paul

1 point

-

Respectfully, that's what the min free space setting is for. As long as you set shares for stuff that you want to live on the cache drive to cache:prefer, set shares for stuff that will be moved to the array to cache:yes, and have a proper value for min free space, you will never run into this specific issue.

1 point

-

^^^^ This is why I went with unRAID over "faster" RAID setups. (Although I'd still like to have multiple cache pools.)

1 point

-

Wow, so that is big news: Lime-Tech is having issues too. While I had Memtest issues with my original memory, those problems didn't manifest in Windows, even under heavy stability testing. I've since replaced my memory with ASRock QVL'd memory, and have completed 16 passes of Memtest86 (and counting; it's been running for several days now) without a single issue. Yet I still get frequent crashes in unRAID. Only in unRAID.

I just reread this entire topic and gathered the following info. If anyone has any updates, please share:
* TType85 has an ASUS B350 Prime and has not reported any issues [presumed to be running a Windows VM]
* hblockx has a Gigabyte X370 Gaming 5 and says it is running great [presumed to be running Windows VMs on his 2 gamers 1 PC]
* jonp (Lime-Tech) has an ASRock X370 Killer SLI/ac and appears to be having issues, but has not shared any info with us [unclear if they are running any Windows VMs]
* Beancounter has an ASUS Prime B350 Plus; he was having crashes but is now running fine [running a patched Win10 VM]
* Naiqus has a Gigabyte X370 Gaming 5 and has not reported any issues [multiple VMs, did not list OSes]
* puddleglum has an ASRock AB350 Fatal1ty and has not reported any issues [running a Win10 VM]
* Bureaucromancer has an ASUS Crosshair VI Hero (X370 based) and has had 2 crashes when idle with no VMs, but no crashes with Windows VMs [both Linux and Windows VMs]
* johnnie.black borrowed an ASUS Prime B350M-A for a day and did not report any issues [did not report running any VMs]
* ufopinball has an ASUS Prime X370-Pro and has had 1 crash when only running a Linux VM [now running a Windows VM to test]
* chadjj has an ASUS Prime X370-Pro and is having issues similar to mine [ran a Windows VM before, but not now]
* I have an ASRock X370 Fatal1ty Professional Gaming, and I am having lots of issues, in unRAID only [no VMs]
* JIM2003 has an ASUS Prime B350M-A and had identical issues [no VMs]
* JIM2003 also has an ASRock Fatal1ty AB350 Gaming K4 and had identical issues [no VMs]
* Akio has ordered an ASUS Crosshair VI Hero and is having assembly challenges with his waterblock cooler
* urbanracer34 wants an ASRock X370 Taichi; not known if he bought it yet

Reading through the list (and everyone's posts), a few things popped out at me. First, so far only X370 chipset boards have been reported as having any problems. So we might be looking at a problem specific to the X370, and not present on the B350 or A320 (which no one here seems to have purchased). The one exception is Beancounter, who was experiencing issues on his B350, but those may have been caused by his Win10 VM, and recent Windows patches have fixed them.

Second, it seems like almost everyone immediately set up a Windows VM on their unRAID box. I seem to be one of the few who did not (I dual booted into Win10, but don't have a Win10 VM set up inside unRAID). This second observation becomes more interesting when you note that chadjj and ufopinball are both running the ASUS Prime X370-Pro, and chadjj is having issues while ufopinball is not. ufopinball does have a Win10 VM running. I don't know if chadjj ever got as far as a Windows VM, but his current testing has pared back the hardware and array for troubleshooting, so it seems he is not running one now. Add in Beancounter's note above that he was experiencing crashes (on a B350) that went away after his Win10 VM was recently patched. So now I am wondering: is running a Windows 10 VM somehow fixing the issue? And if so, how? -Paul

1 point

-

I would be surprised if any motherboard maker has released a new BIOS that addresses the lock-up issue; it's only been a few days since AMD announced the issue and a forthcoming fix. That said, I don't know if this issue is in any way related to our lock-up issue. The bug is in the FMA3 module, which performs Fused Multiply-Add operations, something that is typically only seen in certain benchmarks and scientific applications. I could be wrong, but I don't see how an idle unRAID server would be doing FMA3 operations.

Misery loves company, thanks for joining... Sorry to hear you are experiencing issues as well. Have you heard back from Lime-Tech? I never did, but maybe I communicated incorrectly somehow. Just to be sure it's not a hardware issue, have you run Memtest86 and also booted into Windows or other Linux distros? From your post, it reads as if you skipped those steps since I had done them on my hardware. If you haven't done these tests, I highly encourage them.

I took a look at your motherboard specs to see what your motherboard and mine have in common since, with several people here successfully running unRAID on Ryzen, I still think this is not a CPU issue. One thing unique about my motherboard is the Aquantia AQC108 5Gb LAN chip, which your motherboard doesn't have. There are also no drivers in 4.9.10 to support it (support comes in 4.11), so technically it is going unused by unRAID. I'm now thinking it is unlikely to be part of the problem.

Both motherboards have the Intel i211-AT gigabit LAN chip. I would expect an Intel LAN chip to be problem free, but who knows... I also noticed that both of our motherboards are running with a bonded network configuration: "Network: bond0: fault-tolerance (active-backup), mtu 1500". I've become increasingly suspicious of this, as I've never seen a bonded configuration before (just personal experience, which doesn't mean too much). In addition to the dual Ethernet ports, my motherboard also has Wi-Fi and Bluetooth.
I haven't been able to turn off the Wi-Fi or BT, and I was thinking that one of those might be bonding with the Ethernet port, something I don't want. It's doubtful that would cause a crash, but again, who knows... Your motherboard doesn't appear to have Wi-Fi or Bluetooth. In fact, you only seem to have a single network port, so I'm not sure how you're running a bonded configuration. Seems odd.

Both motherboards have the Realtek ALC S1220A audio codec chip. This chip doesn't have driver support in 4.9.10 (support comes in 4.11), so again I'm thinking it is just sitting there unused by unRAID and not part of the problem, but who knows... Both motherboards have USB 3.1 support, but I believe this is part of the X370 chipset, so it shouldn't be anything special. I tried disabling the ports in my BIOS, but flipping the switch did not actually turn them off. Your motherboard has 8 SATA3 ports, which I believe all come from the Ryzen CPU and the X370 chipset; the combination maxes out at 8. My motherboard has 10, with the extra 2 coming from an ASMedia ASM1061 chip. Since that is not a shared feature of our motherboards, I don't think this is the problem.

Both motherboards have dual M.2 ports. This is a fairly unusual feature, common on several ASRock boards but less common elsewhere. I'm not certain if anyone else here has dual M.2 ports. I am using one, the primary port, with a Samsung 960 in it. I installed Win10 on it and dual boot into it. In unRAID, the drive is visible but not usable without formatting. I guess I could remove it to see if it makes a difference, as that is about the only hardware change I haven't made, but I'm doubtful it would help. I also don't see how having dual M.2 ports would be a problem, especially if the 2nd port is empty, but who knows... One thing I could not determine is whether or not your motherboard has an external bus clock (BCLK) generator.
My motherboard has one (called the ASRock Hyper BCLK Engine II), and an external clock generator is required to take the bus above 100MHz. Typically only the highest-end motherboards have one, and it is for overclocking. I've wondered if it is related to the problem, since the Hangcheck error can supposedly be triggered by bad bus timings. Best I can tell, your motherboard doesn't have one.

I can't really see anything else that would be relevant. I need to go back through this long thread and reread which Ryzen motherboards are working okay with unRAID. I'm pretty sure there have been other X370 chipset motherboards, but I can't remember for certain. -Paul

1 point
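Rather than guessing which interfaces ended up in bond0, one way to check is to read the bonding driver's status file, which on Linux lives at /proc/net/bonding/bond0. The sketch below parses that text; the field names ("Bonding Mode", "Currently Active Slave", "Slave Interface") are what I'd expect from the standard bonding driver, but treat them as an assumption and compare against your own output.

```python
from pathlib import Path


def parse_bonding(text: str) -> dict:
    """Extract the mode, active slave and member interfaces from bonding status text."""
    info = {"mode": None, "active": None, "slaves": []}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank or separator line
        key, value = key.strip(), value.strip()
        if key == "Bonding Mode":
            info["mode"] = value
        elif key == "Currently Active Slave":
            info["active"] = value
        elif key == "Slave Interface":
            info["slaves"].append(value)
    return info


if __name__ == "__main__":
    status = Path("/proc/net/bonding/bond0")
    if status.exists():
        print(parse_bonding(status.read_text()))
    else:
        print("bond0 is not configured on this machine")
```

If only the wired port (e.g. eth0) shows up as a member, the Wi-Fi and Bluetooth hardware is not part of the bond.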

-

Added schedules. Schedules are set via a standard cron entry to force turbo mode on or off as requested. Turbo / Normal mode will stay set until a set time frame has expired, after which either Auto Turbo mode will be re-enabled (or unRAID's settings will take over if Auto Turbo is not enabled). In the event of overlapping schedules, a warning will be logged, and the later schedule will take precedence.

* If you were using the script a few posts up to implement scheduling via user.scripts, stop using it, as changes have been made.

@binhex - I added scheduling not because I particularly see a need for it (but you may, who knows), but because I needed to work out how to handle unlimited multiple schedules for another idea rolling around in my head.

1 point
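The precedence rule described above can be sketched as a small function. This is a hypothetical model, not the plugin's actual code: each schedule is reduced to a start minute, a duration and a mode, "later" is assumed to mean the schedule that started most recently, and schedules that wrap past midnight are ignored for simplicity.

```python
import logging
from dataclasses import dataclass

log = logging.getLogger("turbo_schedule")


@dataclass
class Schedule:
    start: int     # minute of the day at which the cron entry fires
    duration: int  # minutes the forced mode stays set
    mode: str      # "turbo" or "normal"

    def covers(self, minute: int) -> bool:
        return self.start <= minute < self.start + self.duration


def mode_at(minute: int, schedules: list[Schedule], auto_turbo: bool) -> str:
    """Mode in effect at a given minute of the day."""
    active = [s for s in schedules if s.covers(minute)]
    if not active:
        # no schedule in force: fall back to auto turbo or unRAID's own setting
        return "auto" if auto_turbo else "unraid-setting"
    if len(active) > 1:
        log.warning("overlapping schedules at minute %d; later one wins", minute)
    return max(active, key=lambda s: s.start).mode
```

For example, with a turbo window from 01:00 to 03:00 and a normal window starting at 02:00, minute 130 logs a warning and resolves to "normal".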

-

With all due respect, man, this is unwarranted. We take security very seriously. Case in point: we totally dropped development last month to incorporate CSRF protection as fast as possible, and it was a hell of a lot of work. We are a team of 2 developers in unRAID OS core, and one of us can only spend half time on it because of other hats that must be worn. The reality is that 99% of CVEs do not affect unRAID directly. Many are in components we don't use. Many apply only to internet-facing servers. We have always advised that unRAID OS should not be directly attached to the internet. The day is coming when we can lift that caveat, but for now VMs can certainly serve that role if you know what you are doing. If you find a truly egregious security vulnerability in unRAID, we would certainly appreciate an email. We see every one of those, whereas we don't read every single forum post. Send it to [email protected]

1 point

-

Did some power usage testing on my own rig.

Specs:
* ASUS Prime X370-PRO MB (BIOS 0504)
* AMD Ryzen 7 1800X 8-Core 3.6GHz
* Crucial CT16G4DFD8213 DDR4 2133 (4 x 16GB = 64GB)
* Seasonic SS-660XP2 660W 80 PLUS PLATINUM
* Asus Radeon 6450 1GB (Desktop) Graphics Card
* 4 x 4TB Hitachi/HGST Deskstar 7K4000
* Crucial C300 128GB SSD (Cache)
* SYBA USB 3.0 PCI-e x1 2.0 Card
* StarTech USB Audio Adapter
* unRAID 6.3.2

Standby: 1 watt

No drives, only CPU/RAM/GPU:
* BIOS Setup: 70 watts
* unRAID @ root login (idle): 44 watts

4 x 4TB drives (spun up) + SSD + SYBA USB card + USB sound:
* BIOS Setup: 106 watts
* unRAID @ root login (idle): 78 watts
* unRAID w/ Windows 10 VM (idle): 86 watts
* unRAID w/ Windows 10 VM (idle), HGST drives spun down: 68 watts
Note: HGST drives are connected to motherboard SATA ports.

4 x 4TB drives (spun up) + SSD + SYBA USB card + USB sound + SAS2LP controller + Seagate 2TB external + 32GB thumb drive:
* BIOS Setup: 118 watts
* unRAID @ root login (idle): 94 watts
* unRAID w/ Windows 10 VM (idle): 98 watts
* unRAID w/ Windows 10 VM (idle), HGST drives spun down: 68 watts
Note 1: HGST drives are connected to SAS2LP controller ports.
Note 2: The Seagate 2TB external and 32GB thumb drives are connected to the SYBA USB 3.0 card and are being passed through to the Windows 10 VM.

Power consumption was measured using a Kill-a-Watt, and tends to fluctuate +/- 5 watts, depending on what the system is doing in the background. Your results may vary, but the above rough results seem reasonable. Ultimately, this will become the new "Cortex" unRAID server and will need to support 16 SATA devices, which is why the SuperMicro AOC-SAS2LP-MV8 controller is included. I will take more power measurements once I make the full switchover, but due to other commitments, that won't happen for at least a week. For the moment, I'll run a parity check against the SAS2LP and see if there are still problems. See this thread for details.
In the end, I'll likely stick with the Dell HV52W PERC H310 8-Port controller since, when I last measured, it used slightly less power than the SAS2LP. Mainly, this test is out of curiosity as to whether a newer motherboard makes a difference with the SAS2LP issue. - Bill

1 point
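Differencing Bill's readings gives a rough idea of where the power goes. The numbers below are copied from the measurements above. Since the meter fluctuates by about 5 watts, each difference carries that uncertainty too, and the SAS2LP comparison also includes the extra USB external and thumb drive, so it is not the controller alone.

```python
# Idle readings from the post, in watts (Kill-a-Watt, roughly +/- 5 W)
readings = {
    "base_idle": 44,             # CPU/RAM/GPU only, unRAID idle at root login
    "onboard_idle": 78,          # + 4 HGST on motherboard SATA, SSD, USB card, USB sound
    "onboard_vm": 86,            # same, with an idle Windows 10 VM
    "onboard_vm_spun_down": 68,  # same, HGST drives spun down
    "sas2lp_idle": 94,           # HGST on the SAS2LP, plus USB external and thumb drive
}

deltas = {
    "drives, SSD and USB cards": readings["onboard_idle"] - readings["base_idle"],
    "idle Windows 10 VM": readings["onboard_vm"] - readings["onboard_idle"],
    "spinning down 4 HGST drives": readings["onboard_vm"] - readings["onboard_vm_spun_down"],
    "SAS2LP setup vs onboard SATA": readings["sas2lp_idle"] - readings["onboard_idle"],
}

for label, watts in deltas.items():
    print(f"{label}: {watts} W")

# Rough per-drive figure; meter noise is comparable at this scale
print(f"per spun-up HGST drive: about {deltas['spinning down 4 HGST drives'] / 4:.1f} W")
```

On these numbers, the drives and add-in cards account for about 34 W at idle, and spinning down the four HGST drives saves about 18 W, or roughly 4.5 W per drive.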

-

I'm on Ubuntu, so, for example, you can install Ubuntu 16.04 and then update it to kernel 4.9. Instructions here: http://ubuntuhandbook.org/index.php/2016/12/install-linux-kernel-4-9-ubuntu-linux-mint/ I did this in my test environment many times to compare hardware compatibility with unRAID.

1 point

-

This thread is reserved for Frequently Asked Questions concerning all things Docker: their setup, operation, management, and troubleshooting. Please do not ask for support here; such requests and anything off-topic will be deleted or moved, probably to the Docker FAQ feedback topic. If you wish to comment on the current FAQ posts, or have suggestions or requests for the Docker FAQ, please put them in the Docker FAQ feedback topic. Thank you!

Getting Started, Setup
* How do I get started using Docker containers?
* There's so much to learn! Are there any guides?
* What are the host volume paths and the container paths?
* How should I set up my appdata share?
* Why it's important to understand how folder mappings work ... and an example of why it can go wrong.
* How do I know if I've set up my containers (their paths, ports, etc.) correctly?
* Where are my downloads stored? Why can't I find my downloads from one Docker in another Docker?
* Why can't I see my (something) folder in the Docker?
* What do I fill out when a blank template appears?
* How do I pass through a device to a container?
* How do I limit the CPU resources of a Docker application?
* How do I limit the memory usage of a Docker application?
* How do I Stop/Start/Restart Docker containers via the command line?
* I want to run a container from Docker Hub, how do I interpret the instructions?
* I can see the dockerfile on Docker Hub, how do I use that information to create a template for unRAID?
* How can I run another Docker container from Docker Hub (part 3)?
* I want to change the port my Docker container is running on, or I have two containers that want to use the same port. How do I do that?

General Questions
* With 6.2, do I need to move my appdata and/or Docker image into unRAID's recommended shares?
* Why doesn't the "Check for Updates" button bring my Docker to the newest version of the application? How do I update to the newest version of the application?
* How do I move or recreate docker.img?
* How do I increase the size of my Docker image?
* I've recreated my docker.img file. How do I re-add all my old apps?
* Can I switch Docker containers, same app, from one author to another? How do I do it? For a given application, how do I change Docker containers, to one from a different author or group?
* Why can't (insert Docker app name here) see my files mounted on another server or outside the array?
* How do I create my own Docker templates?
* Why does Sonarr keep telling me that it cannot import a file downloaded by nzbGet? (AKA Linking between containers)
* Why can't I delete / modify files created by CouchPotato (or another Docker app)?
* I need to open a terminal inside a Docker container, how do I do this? How do I get a command prompt so I can run commands within a Docker container?

Troubleshooting and Maintenance
* I need some help! What info does the community need to help me?
* What do I do when I see 'layers from manifest don't match image configuration' during a Docker app installation?
* Why does my docker.img file keep filling up?
* Why does my docker.img file keep filling up while using Plex?
* Why does my docker.img file keep filling up still, when I've got everything configured correctly?
* Why did my files get moved off the Cache drive?
* Why do I keep losing my Docker container configurations?
* Why did my newly configured Docker just stop working? Where did my Docker files go?

unRAID FAQ's and Guides -
* Guides and Videos - comprehensive collection of all unRAID guides (please let us know if you find one that's missing)
* FAQ for unRAID v6 on the forums - general NAS questions, not for Dockers or VMs
* FAQ for unRAID v6 on the unRAID wiki - it has a tremendous amount of information, questions and answers about unRAID. It's being updated for v6, but much is still only for v4 and v5.
* Docker FAQ - concerning all things Docker, their setup, operation, management, and troubleshooting
* FAQ for binhex Docker containers - some of the questions and answers are of general interest, not just for binhex containers
* VM FAQ - a FAQ for VMs and all virtualization issues
* The old Docker FAQ (on the old forum). However, do not use the old index, as it points to the deleted entries. You will have to browse through the old FAQ to find the FAQ entries you want.

Know of another question that ought to be here? Please suggest it in the Docker FAQ feedback topic.

Suggested format for FAQ entries: clearly shape the issue as a question or as a statement of the problem to solve, then fully answer it below, including any appropriate links to related info or videos. Optionally, set the subject heading to be appropriate, perhaps the question itself. While a moderator could cut and paste a FAQ entry here, only another moderator could then edit it. It's therefore best if only knowledgeable and experienced Docker users/authors create the FAQ posts, so they can be the ones to edit them later, as needed. Later, the author may want to add new info to the post, or add links to new and helpful info. And the post may need to be modified if a new unRAID release changes the behavior being discussed.

Additional FAQ entries requested:
* linking between containers, i.e. between SAB and SickBeard - this might be best for specific author support FAQs, as to how their containers should be linked
* folder mapping - sufficiently covered yet? More needed?
* port mapping - probably need both a general FAQ here, and individual FAQs for many of the containers
* location for the image file - answered in the Upgrading to UnRAID v6 guide, but should be here too
* where to put appdata - probably need a general FAQ here, but could use individual FAQs for some containers

Moderators: please feel free to edit this post.

1 point

-

Can I switch Docker containers, same app, from one author to another? How do I do it? For a given application, how do I change Docker containers, to one from a different author or group?

This answer is based on Kode's work here. Some applications have several Docker containers built for them, and often they are interchangeable, with few or only small differences in style, users, permissions, Docker base, support provided, etc. For example, Plex has a number of container choices built for it, and with care you can switch between them.
* Stop your current Docker container.
* Click the Docker icon, select Edit on the current Docker, and take a screenshot of the current Volume Mappings; if there are Advanced settings, copy them too.
* Click the Docker icon, select Remove, and at the prompt select "Container and Image".
* Find the new version in Community Applications and click Add.
* Make the changes and additions necessary so that the volume and port mappings and advanced settings match your screenshots and notes.
* Click Create and wait (it may take a while); that's it, you're done! Test it, of course.

The last step may take quite a while, in some cases a half hour or more, because the setup may include special one-time tasks such as checking and correcting permissions.

1 point
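As an alternative to screenshots (my suggestion, not part of Kode's procedure), the existing mappings can be recorded from the command line before removing the old container. This sketch assumes the docker CLI is available on the server and the container still exists; it reads the Mounts and HostConfig.PortBindings fields of `docker inspect` output. The function names are hypothetical, and containers using host networking have no port bindings, so their port list will be empty.

```python
import json
import subprocess
import sys


def summarize_inspect(inspect_json: str) -> dict:
    """Volume and port mappings from `docker inspect` output for one container."""
    container = json.loads(inspect_json)[0]  # docker inspect prints a JSON array
    volumes = [
        (m.get("Source"), m.get("Destination"), m.get("Mode", ""))
        for m in container.get("Mounts") or []
    ]
    bindings = (container.get("HostConfig") or {}).get("PortBindings") or {}
    ports = {
        container_port: [b.get("HostPort") for b in (host_list or [])]
        for container_port, host_list in bindings.items()
    }
    return {"volumes": volumes, "ports": ports}


def capture(name: str) -> dict:
    """Run `docker inspect NAME` and summarize it (requires the docker CLI)."""
    result = subprocess.run(
        ["docker", "inspect", name], capture_output=True, text=True, check=True
    )
    return summarize_inspect(result.stdout)


if __name__ == "__main__" and len(sys.argv) == 2:
    print(json.dumps(capture(sys.argv[1]), indent=2))
```

Saving the printed JSON next to your notes gives you the same information as the screenshot, in a form you can copy from when filling in the new template.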

-

Dockers, there's so much to learn! Are there any guides?

First, check this whole FAQ topic (the first post has an index). It includes various guides to getting-started and installation issues. Then check the stickied topics on this board, the Docker Engine board. (links to stickies to be added here) Then check this list of guides, many of which are video guides:
* Guides and Videos

Application guides:
* Guide-How to install SABnzbd and SickBeard on unRAID with auto process scripts
* Plex: Guide to Moving Transcoding to RAM
* Get Started with Docker Engine for Linux
* Get Started with Docker for Windows

If you know of other helpful guides (whole topics or single posts), please let us know by posting about them in the Docker FAQ feedback topic. Moderators: please feel free to edit this post and its list of guides. I'm sure there's a better way to format it.

1 point

-

How should I set up my appdata share?

Assuming that you have a cache drive, the appdata share should be set to "use cache drive: prefer", not "use cache drive: only".

Why? What difference does it make? The difference lies in what happens if the cache drive fills up (due to downloads, or reaching the cache floor setting within Global Share Settings). If the appdata share is set to cache: only, then any Docker application writing to its appdata will fail with a disk full error (which may in turn have detrimental effects on your apps). If the appdata share is set to cache: prefer and the cache drive becomes full, any additional writes by the apps to appdata will be redirected to the array, and the app will not fail with an error. Once space is freed up on the cache drive, mover will automatically move those files back to the cache drive, where they belong.

1 point
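The difference between the settings can be summarized as a small decision function. This is a simplified model for illustration only: the function names are made up, it takes "cache is full" as a given rather than modelling how the floor setting is applied, and it is not unRAID's actual code. It also covers cache: yes, whose overflow behaves like prefer but whose files mover sends to the array instead.

```python
import errno


def place_write(use_cache: str, cache_full: bool) -> str:
    """Where a new write to the share lands (simplified, hypothetical model)."""
    if use_cache == "only":
        if cache_full:
            # no fallback location: the app sees a disk-full error
            raise OSError(errno.ENOSPC, "No space left on device")
        return "cache"
    if use_cache in ("prefer", "yes"):
        # overflow goes to the array instead of failing
        return "array" if cache_full else "cache"
    raise ValueError(f"unsupported use-cache setting: {use_cache!r}")


def mover_destination(use_cache: str):
    """Where mover relocates the share's files when it runs (None: left alone)."""
    return {"prefer": "cache", "yes": "array"}.get(use_cache)
```

With prefer, a write during a full-cache moment lands on the array and mover later brings it back to the cache; with only, the same write raises the disk-full error the FAQ warns about.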

-

How do I get started using Docker containers?

First things to view:
* All about Docker in unRAID - Docker Principles and Setup - video guide by gridrunner
* Guides and Videos - check out the Docker section, full of useful videos and guides

First things to read:
* unRAID V6 Manual - has a good conceptual introduction to Dockers and VMs
* LimeTech's Official Docker Guide
* Using Docker - unRAID Manual for v6, guide to creating Dockers
* Later, when you have time, check the other stickied threads here, at the top of the Docker board.

First suggestions:
* Turn on Docker support in the Settings page (there's more information in the Upgrading to UnRAID v6 guide).
* Then install the Community Applications plugin to obtain the entire list of containers, already categorized for you. You can select the containers you want and add them from this plugin.
* Next, read this entire FAQ! [not working yet: This post] includes examples of how to set up the appdata folder for the configuration settings of your Docker containers.

1 point

-

On the remote server sharing (exporting) the NFS volume:

```
root@nas1:~# cat /etc/exports
# See exports(5) for a description.
# This file contains a list of all directories exported to other computers.
# It is used by rpc.nfsd and rpc.mountd.
"/mnt/user/Movies" -async,no_subtree_check,fsid=101 *(sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash)
```

And on the server that needs to mount the remote NFS volume, we'd use something like the following (the mount point directory has to exist before you mount onto it):

```
root@Tower:~# mount -t nfs nas1:/mnt/user/Movies /mnt/disks/nas1_Movies/
```

Then verify:

```
root@Tower:~# mount | grep nas1
nas1:/mnt/user/Movies on /mnt/disks/nas1_Movies type nfs (rw,addr=192.168.0.138)
```

1 point
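If the mount is done from a script (for example, at array start), it can help to verify it programmatically rather than by eye. This sketch assumes a Linux host, where /proc/mounts lists one mount per line as source, mount point, filesystem type and options; the function name is my own.

```python
import os


def nfs_source(mounts_text: str, mountpoint: str):
    """Return the NFS source mounted at mountpoint, or None if there isn't one."""
    target = mountpoint.rstrip("/") or "/"
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        source, point, fstype = fields[0], fields[1], fields[2]
        point = point.replace("\\040", " ")  # /proc/mounts escapes spaces as \040
        if point == target and fstype in ("nfs", "nfs4"):
            return source
    return None


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    with open("/proc/mounts") as f:
        print(nfs_source(f.read(), "/mnt/disks/nas1_Movies/"))
```

For the example above, this should print nas1:/mnt/user/Movies once the mount succeeds, and None if it is missing.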

-

If you have one nearby, Micro Center is a great brick-and-mortar store for computer parts. Their prices are often pretty close to those of online stores, though you will get hit with sales tax. But I don't think I would order online from them. That's not really their main thing, and their customer support for online orders doesn't seem nearly as good as Newegg's.

1 point