Leaderboard

Popular Content

Showing content with the highest reputation on 01/13/20 in all areas

-

You've obviously got some ideas, so why not do it? The problem I see time and time again is people telling us what we should be doing and how quickly we should be doing it. Don't be offended, because this is a general observation rather than a personal one.

It's ten to one in the morning, I've just got back from work, I have a toddler who is going to get up in about five hours, and my wife is heavily pregnant. Unraid Nvidia and beta testing just aren't high on my list of priorities at this point. I've already looked at it and I need to look at compiling the newly added WireGuard out-of-tree driver. I will get around to it, but when I can. If that means some Unraid users have to stick with v6.8.0 for a week or two, or alternatively forfeit GPU transcoding for a week or two, then so be it.

When I was developing this I tried every way I could to avoid completely repacking Unraid, I really did; nobody wanted to do that less than me. But if we didn't do it this way, we just saw loads of seg faults.

I get a bit annoyed by criticism of turnaround time because, as this forum approaches 100,000 users, how many actually give anything back? And of all the people who tell us we should be quicker, how many step up and do it themselves?

TL;DR: It'll be ready when it's ready, not a moment sooner, and if my wife goes into labour, well, it's probably going to get delayed.

My life priority order:
1. Wife/kids
2. Family
3. Work (pays the mortgage and puts food on the table)

@Marshalleq The one big criticism I have is of comparing this to the ZFS plugin. No disrespect, but that's like comparing apples to oranges. Until you understand that (and my last lengthy post on this thread might give you some insight), please refrain from complaining. ZFS installs a package at boot; we replace every single file that makes up Unraid other than bzroot-gui.

I've said it before, I'll say it again. WE ARE VOLUNTEERS. Want enterprise-level turnaround times? Pay my wages.

3 points

-

I'm going to go out on a limb here and speak for @CHBMB. This plugin (and the DVB plugin) are built and maintained by him on a volunteer basis, and it isn't always convenient for him (or anyone else) to keep up with every release as soon as it's released. Building and testing each and every release takes significant time, which isn't always available because of other commitments that are probably far more important. Cut him some slack. To be honest, given the time required, I'm sometimes surprised that he even bothers to support RCs.

3 points

-

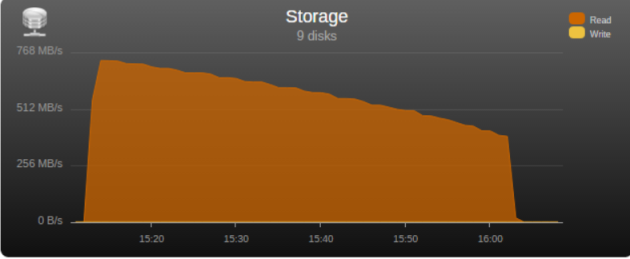

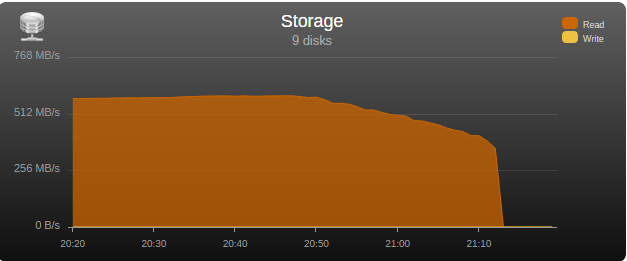

I had the opportunity to test the "real world" bandwidth of some controllers commonly used in the community, so I'm posting my results in the hope that they help some users choose a controller and help others understand what may be limiting their parity check/sync speed. Note that these tests are only relevant for those operations; normal reads and writes to the array are usually limited by hard disk or network speed.

Next to each controller is its maximum theoretical throughput, followed by my results for the number of disks connected. Each result is the observed parity/read check speed using a fast SSD-only array with Unraid V6. The wattage shown next to some controllers (e.g., 2w) is the measured controller power consumption with all ports in use.

2 Port Controllers

SIL 3132 PCIe gen1 x1 (250MB/s)
1 x 125MB/s, 2 x 80MB/s

Asmedia ASM1061 PCIe gen2 x1 (500MB/s) - e.g., SYBA SY-PEX40039 and other similar cards
1 x 375MB/s, 2 x 206MB/s

JMicron JMB582 PCIe gen3 x1 (985MB/s) - e.g., SYBA SI-PEX40148 and other similar cards
1 x 570MB/s, 2 x 450MB/s

4 Port Controllers

SIL 3114 PCI (133MB/s)
1 x 105MB/s, 2 x 63.5MB/s, 3 x 42.5MB/s, 4 x 32MB/s

Adaptec AAR-1430SA PCIe gen1 x4 (1000MB/s)
4 x 210MB/s

Marvell 9215 PCIe gen2 x1 (500MB/s) - 2w - e.g., SYBA SI-PEX40064 and other similar cards (possible issues with virtualization)
2 x 200MB/s, 3 x 140MB/s, 4 x 100MB/s

Marvell 9230 PCIe gen2 x2 (1000MB/s) - 2w - e.g., SYBA SI-PEX40057 and other similar cards (possible issues with virtualization)
2 x 375MB/s, 3 x 255MB/s, 4 x 204MB/s

IBM H1110 PCIe gen2 x4 (2000MB/s) - LSI 2004 chipset, results should be the same as for an LSI 9211-4i and other similar controllers
2 x 570MB/s, 3 x 500MB/s, 4 x 375MB/s

Asmedia ASM1064 PCIe gen3 x1 (985MB/s) - e.g., SYBA SI-PEX40156 and other similar cards
2 x 450MB/s, 3 x 300MB/s, 4 x 225MB/s

Asmedia ASM1164 PCIe gen3 x2 (1970MB/s) - NOTE - not actually tested, performance inferred from the ASM1166 with up to 4 devices
2 x 565MB/s, 3 x 565MB/s, 4 x 445MB/s

5 and 6 Port Controllers

JMicron JMB585 PCIe gen3 x2 (1970MB/s) - 2w - e.g., SYBA SI-PEX40139 and other similar cards
2 x 570MB/s, 3 x 565MB/s, 4 x 440MB/s, 5 x 350MB/s

Asmedia ASM1166 PCIe gen3 x2 (1970MB/s) - 2w
2 x 565MB/s, 3 x 565MB/s, 4 x 445MB/s, 5 x 355MB/s, 6 x 300MB/s

8 Port Controllers

Supermicro AOC-SAT2-MV8 PCI-X (1067MB/s)
4 x 220MB/s (167MB/s*), 5 x 177.5MB/s (135MB/s*), 6 x 147.5MB/s (115MB/s*), 7 x 127MB/s (97MB/s*), 8 x 112MB/s (84MB/s*)
* PCI-X 100MHz slot (800MB/s)

Supermicro AOC-SASLP-MV8 PCIe gen1 x4 (1000MB/s) - 6w
4 x 140MB/s, 5 x 117MB/s, 6 x 105MB/s, 7 x 90MB/s, 8 x 80MB/s

Supermicro AOC-SAS2LP-MV8 PCIe gen2 x8 (4000MB/s) - 6w
4 x 340MB/s, 6 x 345MB/s, 8 x 320MB/s (205MB/s*, 200MB/s**)
* PCIe gen2 x4 (2000MB/s)
** PCIe gen1 x8 (2000MB/s)

LSI 9211-8i PCIe gen2 x8 (4000MB/s) - 6w - LSI 2008 chipset
4 x 565MB/s, 6 x 465MB/s, 8 x 330MB/s (190MB/s*, 185MB/s**)
* PCIe gen2 x4 (2000MB/s)
** PCIe gen1 x8 (2000MB/s)

LSI 9207-8i PCIe gen3 x8 (4800MB/s) - 9w - LSI 2308 chipset
8 x 565MB/s

LSI 9300-8i PCIe gen3 x8 (4800MB/s with the SATA3 devices used for this test) - LSI 3008 chipset
8 x 565MB/s (425MB/s*, 380MB/s**)
* PCIe gen3 x4 (3940MB/s)
** PCIe gen2 x8 (4000MB/s)

SAS Expanders

HP 6Gb (3Gb SATA) SAS Expander - 11w

Single Link with LSI 9211-8i (1200MB/s*)
8 x 137.5MB/s, 12 x 92.5MB/s, 16 x 70MB/s, 20 x 55MB/s, 24 x 47.5MB/s

Dual Link with LSI 9211-8i (2400MB/s*)
12 x 182.5MB/s, 16 x 140MB/s, 20 x 110MB/s, 24 x 95MB/s

* Half of the 6Gb bandwidth, because it only links at 3Gb with SATA disks.

Intel® SAS2 Expander RES2SV240 - 10w

Single Link with LSI 9211-8i (2400MB/s)
8 x 275MB/s, 12 x 185MB/s, 16 x 140MB/s (112MB/s*), 20 x 110MB/s (92MB/s*)

* Avoid using disks with a slower link speed on expanders, as they bring the total speed down; in this example 4 of the SSDs were SATA2 instead of all SATA3.

Dual Link with LSI 9211-8i (4000MB/s)
12 x 235MB/s, 16 x 185MB/s

Dual Link with LSI 9207-8i (4800MB/s)
16 x 275MB/s

LSI SAS3 expander (included on a Supermicro BPN-SAS3-826EL1 backplane)

Single Link with LSI 9300-8i (tested with SATA3 devices, max usable bandwidth would be 2200MB/s, but with LSI's Databolt technology we can get almost SAS3 speeds)
8 x 500MB/s, 12 x 340MB/s

Dual Link with LSI 9300-8i (*)
10 x 510MB/s, 12 x 460MB/s

* Tested with SATA3 devices; max usable bandwidth would be 4400MB/s, but with LSI's Databolt technology we can get closer to SAS3 speeds. With SAS3 devices the limit here would be the PCIe link, which should be around 6600-7000MB/s usable.

HP 12G SAS3 EXPANDER (761879-001)

Single Link with LSI 9300-8i (2400MB/s*)
8 x 270MB/s, 12 x 180MB/s, 16 x 135MB/s, 20 x 110MB/s, 24 x 90MB/s

Dual Link with LSI 9300-8i (4800MB/s*)
10 x 420MB/s, 12 x 360MB/s, 16 x 270MB/s, 20 x 220MB/s, 24 x 180MB/s

* Tested with SATA3 devices; no Databolt or equivalent technology, at least not with an LSI HBA. With SAS3 devices the limit here would be around 4400MB/s with single link, and the PCIe slot with dual link, which should be around 6600-7000MB/s usable.

Intel® SAS3 Expander RES3TV360

Single Link with LSI 9308-8i (*)
8 x 490MB/s, 12 x 330MB/s, 16 x 245MB/s, 20 x 170MB/s, 24 x 130MB/s, 28 x 105MB/s

Dual Link with LSI 9308-8i (*)
12 x 505MB/s, 16 x 380MB/s, 20 x 300MB/s, 24 x 230MB/s, 28 x 195MB/s

* Tested with SATA3 devices; the PMC expander chip includes functionality similar to LSI's Databolt. With SAS3 devices the limit here would be around 4400MB/s with single link, and the PCIe slot with dual link, which should be around 6600-7000MB/s usable.

Note: these results were obtained after updating the expander firmware to the latest available at this time (B057); it was noticeably slower with the older firmware that came with it.

Sata 2 vs Sata 3

I often see users on the forum asking if changing to Sata 3 controllers or disks would improve their speed. Sata 2 has enough bandwidth (between 265 and 275MB/s according to my tests) for the fastest disks currently on the market. If you are buying a new board or controller you should buy Sata 3 for the future, but except for SSD use there's no gain in changing your Sata 2 setup to Sata 3.

Single vs. Dual Channel RAM

In arrays with many disks, and especially with low "horsepower" CPUs, memory bandwidth can also have a big effect on parity check speed. Obviously this only makes a difference if you're not hitting a controller bottleneck. Two examples with 24 drive arrays:

Asus A88X-M PLUS with AMD A4-6300 dual core @ 3.7GHz
Single Channel - 99.1MB/s
Dual Channel - 132.9MB/s

Supermicro X9SCL-F with Intel G1620 dual core @ 2.7GHz
Single Channel - 131.8MB/s
Dual Channel - 184.0MB/s

DMI

There is another bus that can be a bottleneck on Intel based boards, much more so than Sata 2: the DMI that connects the south bridge or PCH to the CPU. Socket 775, 1156 and 1366 use DMI 1.0; socket 1155, 1150 and 2011 use DMI 2.0; socket 1151 uses DMI 3.0.

DMI 1.0 (1000MB/s)
4 x 180MB/s, 5 x 140MB/s, 6 x 120MB/s, 8 x 100MB/s, 10 x 85MB/s

DMI 2.0 (2000MB/s)
4 x 270MB/s (Sata2 limit), 6 x 240MB/s, 8 x 195MB/s, 9 x 170MB/s, 10 x 145MB/s, 12 x 115MB/s, 14 x 110MB/s

DMI 3.0 (3940MB/s)
6 x 330MB/s (Onboard SATA only*), 10 x 297.5MB/s, 12 x 250MB/s, 16 x 185MB/s

* Despite being DMI 3.0**, Skylake, Kaby Lake, Coffee Lake, Comet Lake and Alder Lake chipsets have a max combined bandwidth of approximately 2GB/s for the onboard SATA ports.
** Except the low end H110 and H310 chipsets, which are only DMI 2.0. Z690 is DMI 4.0 and not yet tested by me, but expect the same result as the other Alder Lake chipsets.

DMI 1.0 can be a bottleneck using only the onboard Sata ports. DMI 2.0 can limit users with all onboard ports in use plus an additional controller, either onboard or in a PCIe slot that shares the DMI bus. On most home market boards only the graphics slot connects directly to the CPU and all other slots go through the DMI (more top of the line boards, usually with SLI support, have at least 2 such slots). Server boards usually have 2 or 3 slots connected directly to the CPU, and you should always use these slots first.

You can see below the diagram for my X9SCL-F test server board; for the DMI 2.0 tests I used the 6 onboard ports plus one Adaptec 1430SA in PCIe slot 4.

UMI (2000MB/s) - Used on most AMD APUs, equivalent to Intel DMI 2.0
6 x 203MB/s, 7 x 173MB/s, 8 x 152MB/s

Ryzen link - PCIe 3.0 x4 (3940MB/s)
6 x 467MB/s (Onboard SATA only)

I think there are no big surprises; most results make sense and are in line with what I expected. The exception may be the SASLP, which should have the same bandwidth as the Adaptec 1430SA but is clearly slower and can limit a parity check with only 4 disks.

I expect some variation in the results from other users due to different hardware and/or tunable settings, but would be surprised if there are big differences. Reply here if you can get significantly better speed with a specific controller.

How to check and improve your parity check speed

System Stats from Dynamix V6 Plugins is usually an easy way to find out if a parity check is bus limited. After the check finishes, look at the storage graph: on an unlimited system it should start at a higher speed and gradually slow down as it reaches the disks' slower inner tracks; on a limited system the graph will be flat at the beginning, or totally flat in a worst-case scenario. See the screenshots below for examples (arrays with mixed disk sizes will have speed jumps at the end of each size, but the principle is the same).

If you are not bus limited but still find your speed low, there are a couple of things worth trying:

Diskspeed - your parity check speed can't be faster than your slowest disk. A big advantage of Unraid is the possibility to mix disks of different sizes, but this can leave you with an assortment of disk models and sizes. Use this to find your slowest disks, and when it's time to upgrade replace these first.

Tunables Tester - on some systems it can increase the average speed by 10 to 20MB/s or more; on others it makes little or no difference.

That's all I can think of, all suggestions welcome.

1 point
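As a rough cross-check of the figures above, the per-disk ceiling during a parity check is approximately the bus bandwidth divided by the number of disks being read in parallel. The sketch below is only that arithmetic; the function name `per_disk_ceiling` is made up for illustration, and measured speeds land below the theoretical number because of bus and protocol overhead.

```shell
#!/bin/sh
# Hypothetical helper: theoretical per-disk ceiling in MB/s (integer division).
# $1 = bus bandwidth in MB/s, $2 = number of disks read in parallel.
per_disk_ceiling() {
    pdc_bus=$1
    pdc_disks=$2
    if [ "$pdc_disks" -le 0 ]; then
        echo "disk count must be positive" >&2
        return 1
    fi
    echo $(( pdc_bus / pdc_disks ))
}

# SIL 3114 on PCI (133MB/s) with 4 disks; the post measured 32MB/s per disk.
per_disk_ceiling 133 4
# Marvell 9215 on PCIe gen2 x1 (500MB/s) with 4 disks; the post measured 100MB/s.
per_disk_ceiling 500 4
```

If the speed you observe with N disks sits close to this ceiling, the controller or bus is the likely limit rather than the disks themselves.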

-

**Beta**

Theme Engine
A plugin for the unRAID webGui

Theme Engine lets you re-style the unRAID webGui. Instead of creating a whole new theme, it adjusts settings that override the theme you are already using. You can adjust as few or as many settings as you like. Mixing and matching the base themes with Theme Engine settings creates endless possibilities. Import one of the included styles, or remix and export your own.

---

How to install

Search for Theme Engine on Community Applications.

-OR-

The URL to install manually: https://github.com/Skitals/unraid-theme-engine/raw/master/plugins/theme.engine.plg

---

How to use

To load an included theme, select it under Saved Themes and hit Load.

To import a theme (zip file) from the community, paste the url under Import Zip and hit Import. The theme is now available to Load under Saved Themes.

To import a theme (zip file) from usb, place the zip file in the folder /boot/config/plugins/theme.engine/themes/, select it under Import Zip and hit Import. The theme is now available to Load under Saved Themes.

Note that when you Apply changes to a theme, it applies to your current configuration but does not overwrite the Saved Theme. If you want to update the saved theme, check the checkmark next to "Export to Saved Themes" before hitting Apply. To save it as a new theme, change the Theme Name, check the checkmark, and hit Apply.

To export your theme as a zip file, hit Apply to save any changes, toggle on Advanced View, and click "Download current confirguration as Zip File" at the bottom of the page.

---

Screenshots

Basic View:

Advanced View:

---

Advanced / Hidden Options

You can inject custom css by creating the following files and enabling custom.css under advanced view. The files, if they exist, are included on every page just above `</style>` in the html head. The custom.css files are independent of the theme engine theme you are using. If custom.css is enabled, it will be included even if theme engine is disabled.

/boot/config/plugins/theme.engine/custom.css
Will be included in all themes.

/boot/config/plugins/theme.engine/custom-white.css
/boot/config/plugins/theme.engine/custom-black.css
/boot/config/plugins/theme.engine/custom-gray.css
/boot/config/plugins/theme.engine/custom-azure.css
Will only be included when using the corresponding base theme.

1 point

-

Hi all, I've recently been using Huginn for a few things and wanted to share an unRAID template for it: http://vaughanhilts.me/blog/2018/04/26/setting-up-huggins-on-unraid-for-automation.html

I also wrote a guide on using it under unRAID for those less familiar with it, and a blog post on how to use it for something fun here. I hope the community gets some enjoyment out of it.

1 point

-

I started my Unraid journey in October 2019, trying out some stuff before I decided to take the plunge and buy 2 licences (one for backups and one for my daily use). I work in IT, and knowing how much noise rack equipment makes at work, I had no plans whatsoever to go that route.

My first attempt at an Unraid build used a large Nanoxia high tower case that could hold at most 23 3.5" drives, but once I reached around 15 drives the temperatures started to become a problem. What I also hadn't anticipated was how incredibly hot the HBA controllers become.

I decided to buy a 24-bay 4U chassis to see what I could get away with using just the case, switching out the fan wall for Noctua fans and such to reduce the noise levels. I got a 24-bay case that supports a regular ATX PSU, because I know server chassis usually go for redundant 2U PSUs and they sound like jet engines, so I wanted none of that. With everything installed in the 24-bay 4U chassis, the noise levels were almost twice as high as with the Nanoxia high tower case, even after I switched the fans out and used some nifty "low noise adapters".

Next up was the idea of a 12U rack that is soundproofed. Is it possible? How warm will stuff get? What can I find? I ended up taking a chance and bought a soundproofed 12U rack from a German company called "Lehmann". It wasn't cheap in any sense, but it was definitely worth it! I couldn't possibly be happier with my build.

From top to bottom:
10Gbps switch
AMD Threadripper VM host server
Intel Xeon Unraid server
Startech 19" rackmount KVM switch
APC UPS with Management Card

In total 12U of 12U used!

Temps: around 5°C higher than room temperature, Unraid disks average 27°C.

Noise level? Around 23dB, I can sleep in the same room as the rack!

1 point

-

I got a Quadro P2000, installed the Nvidia UnRAID Plugin, and then noticed that my power usage at idle was 18W. After some searching I found that the following command would fix this (by letting the power state go to P8, thus dropping power usage to 4W) by setting the Persistence Mode to "On":

nvidia-smi -pm 1

Will adding this to my "go" file hurt anything, or should I put it in as a cron job via the User Scripts Plugin instead as an "At First Array Start Only" task?

1 point
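Wherever the command ends up running, a guard around it avoids an error on boots where the driver is not loaded. This is a minimal sketch, not the plugin's own method: the function name `enable_persistence_mode` is hypothetical, and the tool name is a parameter only so the guard can be exercised on a machine without a GPU.

```shell
#!/bin/sh
# Hypothetical wrapper: turn persistence mode on only if the tool is present.
# $1 = name of the nvidia-smi binary (defaults to nvidia-smi).
enable_persistence_mode() {
    epm_bin=${1:-nvidia-smi}
    if ! command -v "$epm_bin" >/dev/null 2>&1; then
        echo "$epm_bin not found, skipping persistence mode" >&2
        return 1
    fi
    # -pm 1 is the same call quoted in the post above.
    "$epm_bin" -pm 1
}
```

In a startup script this would be called as `enable_persistence_mode || true`, so that a missing driver does not abort the rest of the script.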

-

I had Emby set up with just the m3u file from my IPTV provider, but the channel mappings are absolutely rubbish (no channel numbers in the m3u file, and I have no idea where to find the correct channel numbers and add them). More importantly, out of the tens of thousands of channels listed in the m3u file I want fewer than 30 (I really don't need Arab, Pakistani, most of the USA channels, etc. All I want is sports, well, motorsports, not football or American football, etc.) and I couldn't see an easy way to filter using the Emby channel data. Anyway, I will look more, but it's time to go home right now. Cheers.

1 point

-

Please start a new thread on the general support forum and post the diagnostics.

1 point

-

Starting with 6.8, writing to the flash device changed in 2 ways:

First, via /etc/fstab the flash device is mounted with the 'flush' mount option. The man page for that option says: "If set, the filesystem will try to flush to disk more early than normal. Not set by default." This was done to ensure data is completely written to the flash device sooner than with the normal Linux cache flush.

Second, after a release zip file has been downloaded, instead of unpacking to RAM and then copying files to the usb flash device, it extracts from the zip file directly to the usb flash device. This was done to ensure those with low memory servers could still upgrade.

FWIW - we routinely update several servers several times almost daily with various models of usb flash, and honestly in all the years I think I've seen one legit flash failure.

1 point
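To see whether the 'flush' option is actually in effect on a given server, the mount table can be checked for it. The helper below is a sketch under assumptions: the name `has_mount_option` is hypothetical, and it assumes the flash device is mounted at /boot and that the table has the usual /proc/mounts layout (device, mount point, filesystem type, options).

```shell
#!/bin/sh
# Hypothetical helper: succeed if a mount point carries a given mount option.
# $1 = mounts table (e.g. /proc/mounts), $2 = mount point, $3 = option name.
has_mount_option() {
    awk -v mp="$2" -v opt="$3" '
        $2 == mp {
            # Field 4 is the comma-separated option list.
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++) {
                if (opts[i] == opt) found = 1
            }
        }
        END { exit (found ? 0 : 1) }
    ' "$1"
}
```

Usage on a live system would look like `has_mount_option /proc/mounts /boot flush && echo "flush is set"`.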

-

Upgrade to v6.8.1 and the errors filling the log will go away; it's a known issue with some earlier releases and VMs/dockers with custom IPs.

1 point

-

Correct. In more recent BIOS versions there's usually an option to fix that without disabling C-states: look for "Power Supply Idle Control" (or similar) and set it to "typical current idle" (or similar).

1 point

-

I've seen Unraid crash during a parity check multiple times in v6.7.2 (IIRC only with dual parity), and this could usually be fixed by lowering a tunable. However, v6.8 uses very different code for check/sync, without that tunable, and I never saw those crashes with v6.8. That doesn't mean it isn't an Unraid problem that happens with specific hardware.

1 point

-

The vbios has to be specific to your exact specimen (brand + model + revision), so it's very likely that you used something that doesn't match your card. It is not uncommon for some models to not have a vbios on TPU. I have even seen a vbios dumped from the 2nd slot not working when the card is in the 1st slot, but that seems rare (I have only seen 1 report).

Given you don't have a 2nd GPU, the only thing you can do is try to get the right vbios from TPU (if it's available). I believe SIO has a guide on how to dump it with gpuz (which runs on Windows), so if you can somehow get a Windows installation up and running, you may be able to follow that guide. Alternatively, you can dump the vbios using another computer if you have one.

Passing through the GTX 1080 as the only GPU without a vbios is unlikely to work due to error code 43. Even with the right vbios, you might still need some workarounds in the xml, but we'll deal with that when we get there.

1 point

-

Cache "prefer" moves data from the array to the cache. For example, your C3PO share is set that way, so the mover is trying to move data to the cache instead of the other way around. Check all your shares: they need to be set to cache="yes" to move data from cache to array. See the GUI help for a description of each share cache setting.

1 point
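Checking every share by hand can be tedious, so here is a sketch that lists the shares set to "prefer". It rests on assumptions that should be verified on your own system: that each share has a .cfg file in a directory such as /boot/config/shares, and that the setting is stored as a line beginning `shareUseCache="prefer"`. The function name `list_prefer_shares` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every share configured with cache "prefer".
# $1 = directory holding the per-share .cfg files (assumed layout).
list_prefer_shares() {
    for lps_cfg in "$1"/*.cfg; do
        # Skip the unexpanded pattern when the directory has no .cfg files.
        [ -f "$lps_cfg" ] || continue
        if grep -q '^shareUseCache="prefer"' "$lps_cfg"; then
            basename "$lps_cfg" .cfg
        fi
    done
}
```

Called as `list_prefer_shares /boot/config/shares`, it prints one share name per line; the GUI remains the place to actually change the setting.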

-

This will be a network issue, it could be your host, it could be your ISP or it could be your VPN provider, you need to check all 3 for drops.

1 point

-

Interesting. I use three Squeezebox players at home (Radio, Receiver and SB3), but have had various alternatives over the years. One of them was a touch-screen O2 panel (which I meant to sell on, but forgot). Plus Softsqueeze machines. The radio still wakes me up every morning.

I use Volumio on a standalone Pi as a media player at gigs that can be controlled from a browser. However, I haven't looked at it in a while (just set it up and left it).

As mentioned earlier, the receivers are the way to go for a "just works" solution. Once they're connected to your wi-fi, they're perfect. I also echo the fact that the Controller is awful.

1 point

-

Mmm, not 100% sure. I don't remember how I dumped my vbios; maybe I used a second PC to connect to Unraid 🤨 Since you need to unbind the GPU, I think so. This way you are 100% sure the vbios is correct for your own GPU.

1 point

-

Why don't you dump your own vbios? SpaceInvaderOne explains how to do it. It's very simple and you can do it from Unraid itself.

1 point

-

Apparently yes. Though the link I posted above is for different models, I've since read on another forum that it also affects that model, and there's no firmware update from Seagate for now, so they shouldn't be used with LSI HBAs.

1 point

-

I've spoken to him and have some idea of what to do. It's a little more difficult for me than for him, as he uses a docker container. I will see what I can do when I get time. If anyone wants to implement it and send a PR, that's fine.

1 point

-

There have been constant errors on cache4 since early in the boot process. Try swapping cables; if the issue persists, replace the device.

1 point

-

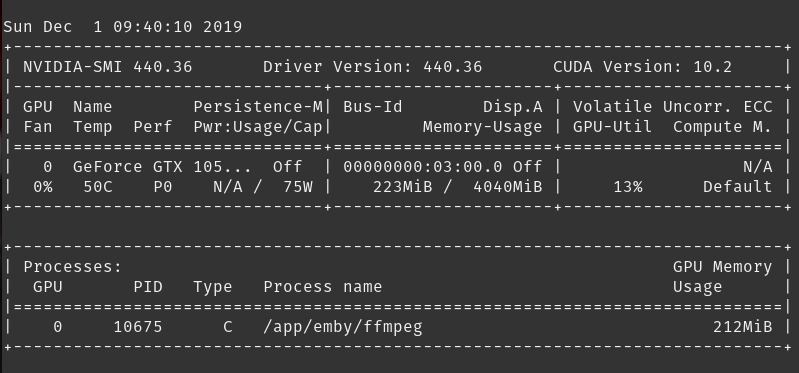

@CHBMB Appreciate your complicated plugin, take your time. Thanks! I use it for Emby transcoding and BOINC for Science United!

1 point

-

Have you updated to a BIOS based on AMD AGESA 1.0.0.4 B? That's a requirement for passthrough.

1 point

-

All of @overbyrns plugins wouldn't be compatible. For any and all apps compatible with your version, you should always install via the Apps page. If a particular plugin is not listed there, then there's probably a very good reason for it.

1 point

-

I just repulled the static binaries for ps3netsrv from webMAN MOD 1.47.26. If you want to use it, delete all images and only use mccloud/ps3netsrv, which pulls a minimal install with the binary. It now pulls the binaries straight from the webMAN github, so it should always be up to date as long as they stay active and don't change the folder structure. So in theory everything should work and stay up to date, but if what you have works, I'd stay with that.

1 point

-

Not 100% sure if this is the best way to do it or not, but here is what I did to migrate to the bitwardenrs docker.

!!!!! Stop your existing bitwarden docker and then make a backup of your existing bitwarden appdata folder !!!!!

I did this using the unraid web terminal as follows:

    cd /mnt/user/appdata/
    tar -cvzf bw.tgz /mnt/user/appdata/bitwarden

Copy that tgz file to at least 3 places. You should see it in the root of your appdata share.

Now that you've backed up your precious bitwarden data, let's do the migration. Go to CA and open the template to install the bitwardenrs docker, then:

- set the webui port to the same port you had previously used for bitwarden
- make sure the appdata folder matches your old appdata folder
- set your server admin email address
- lastly, set your admin token with a long random string. I used 'openssl rand -base64 48' to make the string.

You should be able to hit Apply now and it should create the new docker for you. Hope this helps.

1 point
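Before copying the archive to those 3 places, it is worth confirming that it can be read back. The sketch below wraps the backup step with that check; `backup_and_verify` is a hypothetical name, and it archives relative to the parent folder (via `-C`) instead of the absolute path used in the post.

```shell
#!/bin/sh
# Hypothetical helper: archive a directory, then confirm the archive is readable.
# $1 = directory to back up, $2 = archive path to create.
backup_and_verify() {
    bav_parent=$(dirname "$1")
    bav_name=$(basename "$1")
    # -C makes the stored paths relative (e.g. bitwarden/...) instead of absolute.
    tar -czf "$2" -C "$bav_parent" "$bav_name" || return 1
    # Listing the archive forces tar to read and decompress it end to end.
    tar -tzf "$2" >/dev/null || return 1
    echo "backup OK: $2"
}
```

For the migration described here, the call would be `backup_and_verify /mnt/user/appdata/bitwarden /mnt/user/appdata/bw.tgz`, run after the old container has been stopped.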

-

@bastl It worked on the 3rd attempt. On the 2nd try I installed a Q35 machine with the Red Hat drivers and tried the GPU driver: failure. After searching a bit for the error I was getting, I found the right way. I installed Windows with only the network driver for the VM, then went back to the VM settings and passed through the GPU and its sound part (without XML editing). I started the VM, connected to it using RDP and installed the driver. Everything went fine, and this machine is finally working.

1 point

-

Would be best to post in the appropriate support thread for wherever you're installing fail2ban from, since it's not included in 6.8.x.

1 point

-

This plugin looks really cool. Raz's post on Reddit brought me here. Next we'll 'need' a 'store' from where to preview and get the themes.

1 point

-

And I refer people to my last lengthy post on here to help put into perspective how much work this takes. And on a daily basis the other linuxserver guys and I are dealing with the other stuff we do, i.e. docker, and trying to answer support stuff. Just saying.....

1 point

-

If you think this is a simple compile-on-boot job, you have clearly not read much of this thread, nor do you understand how much work (manual work, that is) it takes to get this to compile. If it were a simple script job for each Unraid release, it would have been released shortly after. But it is not. If you want to test Unraid releases the second they are ready, run a clean Unraid build.

1 point

-

It's your router and/or port settings. I'm assuming you're running Unraid on port 443. Your router is redirecting internal (LAN) requests for your domain to the Unraid IP on the same port 443, so you're getting Unraid. You can move Unraid's https port to something else and run letsencrypt on 443 instead.

1 point

-

Easy. You can write 1 script for the whole thing as long as it's possible to differentiate 4k shows from TV shows, like having separate directories for each. If you don't, you'll need some criteria to tell them apart and we can create the script lines.

    #!/bin/bash
    lftp << EOF
    set ssl:verify-certificate no
    set sftp:auto-confirm yes
    open sftp://username:[email protected]
    mirror --use-pget-n=8 /home/username/downloads/deluge/4k /mnt/disk5/Downloads/incoming/4k
    mirror --use-pget-n=8 /home/username/downloads/deluge/TV /mnt/disk5/Downloads/incoming/TV
    quit
    EOF
    chmod -Rv 770 /mnt/disk5/Downloads/incoming/4k
    chown -Rv nobody:users /mnt/disk5/Downloads/incoming/4k
    chmod -Rv 770 /mnt/disk5/Downloads/incoming/TV
    chown -Rv nobody:users /mnt/disk5/Downloads/incoming/TV
    mv /mnt/disk5/Downloads/incoming/4k/* /mnt/disk5/Downloads/completed/4k
    mv /mnt/disk5/Downloads/incoming/TV/* /mnt/disk5/Downloads/completed/TV

I've created an incoming directory in my script above since you might not want to run chmod and chown on all the files in the 4k and TV folders, which takes forever on my system because the folders have a whole ton of assorted files and I only sort and remove every so often.

1 point
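The permission and move steps after the lftp transfer are the same for every category, so they can be looped instead of repeated. This is a sketch only: `move_completed` is a hypothetical name, it assumes the incoming/ and completed/ layout from the script above, and it leaves out the chown step, which needs root.

```shell
#!/bin/sh
# Hypothetical helper: move finished downloads from incoming/ to completed/.
# $1 = base directory; remaining arguments = category folder names (e.g. 4k TV).
move_completed() {
    mc_base=$1
    shift
    for mc_category in "$@"; do
        mc_src="$mc_base/incoming/$mc_category"
        mc_dst="$mc_base/completed/$mc_category"
        [ -d "$mc_src" ] || continue
        mkdir -p "$mc_dst"
        chmod -R 770 "$mc_src"
        # Loop over entries so mv is never handed a literal "*" for an empty folder.
        for mc_item in "$mc_src"/*; do
            [ -e "$mc_item" ] || continue
            mv "$mc_item" "$mc_dst"/
        done
    done
}
```

With the paths from the post, the call would be `move_completed /mnt/disk5/Downloads 4k TV`, and adding another category later only means adding another argument.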

-

See if just stopping all dockers also returns CPU usage to normal.

1 point

-

※Related to the "unexpected GSO type" issue on unRAID 6.8※

Yes, related to unRAID's specific workload, hence the reason I worded it the way I did and linked what Tom wrote on that topic. For clarification, Tom in his own words:

"This implies the bug was introduced with some code change in the initial 5.0 kernel. The problem is that we are not certain where to report the bug; it could be a kernel issue or a docker issue. Of course, it could also be something we are doing wrong, since this issue is not reported in any other distro AFAIK."

That link is related to passthrough of an NVMe device and was a separate issue that has been resolved, to my knowledge. The problem that we are discussing is related to IO_PAGE_FAULT being thrown when an NVMe device is trimmed, specifically for users who need to have IOMMU enabled. The current kernel patch information is located here: https://bugzilla.kernel.org/show_bug.cgi?id=202665#c105

Awesome! It looks like, from the latest update on https://bugzilla.kernel.org/show_bug.cgi?id=202665#c107, you need to build a custom kernel with the patch applied to it. I've never attempted that and, to be completely honest, I don't know how one would apply that to unRAID, www.

1 point

-

It is really up to the user to decide. The advantage of the router supporting it is that it does not require the Unraid server to be operational. You could of course use both, with different profiles on the remote client.

You might want to have a look at the WireGuard web site. You will see there that it is officially still classified as experimental by its developers, which is probably why it currently has limited real-world use. However, I can see that changing rapidly, and early feedback from those who have tried it already is very positive. Several of the major commercial VPN suppliers have stated that they are actively working on adding support for WireGuard to their offerings, which is quite a good endorsement.

1 point

-

Alternative approach to changing to SeaBIOS or switching to Cirrus:

1. Mount the iso for the 'live cd' and boot the vm.
2. Once you get to the grub menu, press 'e' and append the following; failure to do this will mean you won't be able to boot to X Windows:
   systemd.mask=mhwd-live.service
3. Press ctrl+x to save this change and the vm should boot.
4. Once booted, install to the vdisk and shut down (do not reboot, it will get stuck unmounting the iso). This may require 'Force Stop'.
5. Once shut down, edit the vm and remove the iso.
6. Start the vm. This will get stuck at a black screen; you now need to press ctrl+alt+F2 in order to get to a command prompt.
7. Log in with root and the password you defined.
8. Issue the following command to remove the symlink to mhwd (note this cannot be done at step 4, as the symlink does not exist yet):
   sudo rm -f /etc/X11/xorg.conf.d/90-mhwd.conf && reboot
9. Enjoy manjaro! Tested on the 'cinnamon' and 'openbox' editions of manjaro.

1 point

-

This was an interesting one. Builds completed and looked fine, but wouldn't boot, which was where the fun began.

Initially I thought it was just because we were still using GCC v8 and LT had moved to GCC v9; alas, that wasn't the case. After examining all the bits and watching the builds, I tried to boot with all the Nvidia files but using a stock bzroot, which worked. So then I tried to unpack and repack a stock bzroot, which also reproduced the error. Interestingly, the repackaged stock bzroot was about 15MB bigger.

I asked LT if anything had changed, as we were still using the same commands as when I started this back in ~June 2018. Tom denied anything had changed at their end recently. He just told us they were using `xz --check=crc32 --x86 --lzma2=preset=9` to pack bzroot. So I changed the packaging to use that for compression, and it still wouldn't work.

At one point I had a repack that worked, but when I tried a build again I couldn't reproduce it, which induced a lot of head scratching. I assumed my version control of the changes I was making must have been messed up, but damned if I could reproduce a working build. Both @bass_rock and I were trying to get something working, with no luck.

We ended up going down a rabbit hole of analysing bzroot with binwalk, and became fairly confident that the microcode prepended to the bzroot file was good, and that it must be the actual packaging of the root filesystem that was in error. We focused in on the two relevant lines. The problem was that LT had given us the parameters to pack with, but that step receives its input from cpio, so it couldn't be fully presumed to be good, and we still couldn't ascertain that the actual unpack was valid, although it looked to give us a complete root filesystem.

Yesterday @bass_rock and I were both running "repack" tests on a stock bzroot to try and get that working, confident that if we could do that the issue would be solved. Him on one side of the pond and me on the other, changing a parameter at a time and discussing it over Discord. Once again I managed to generate a working bzroot file, but when I tested the same script again it failed. Got to admit that confused the hell out of me.....

I had to go to the shops to pick up some stuff, which gave me a good hour in the car to think about things, and I had a thought. I did a lot of the initial repacking on my laptop rather than via an ssh connection to an Unraid VM, and I wondered if that may have been the reason I couldn't reproduce the working repack. The reason being, tab completion on my Ubuntu based laptop means I have to prepend any script with ./ whereas on Unraid I can just enter the first two letters of the script name and tab completion will work; obviously I will always take the easiest option. I asked myself whether the repack only worked when the script was run using ./ and perhaps I'd run it like that on the occasions it had worked.

I chatted to bass_rock about it and he kicked off a repackaging of a stock bzroot build with --no-absolute-filenames removed from the cpio bit, and it worked. We can only assume something must have changed on LT's side at some point. To put it into context, we've been using this cpio snippet since at least 2014/5, or whenever I started with the DVB builds.

The scripts to create an Nvidia build are over 800 lines long (not including the scripts we pull in from Slackbuilds) and we had to change 2 of them........ There are 89 core dependencies, which occasionally change, with an extra one added, or a version update of one of them breaks things.

I got a working Nvidia build last night and was testing it for 24 hours, then woke up to find, FML, Slackbuilds have updated the driver since. I have run a build again, and it boots in my VM. I need to test transcoding on bare metal, but I can't do that as my daughter is watching a movie, so it'll have to wait until either she goes for a nap or the movie finishes.

Just thought I'd give some background for context. Please remember all the plugin and docker container authors on here do this in our free time. People like us, Squid, dlandon, bonienl et al put a huge amount of work in, and we do the best we can.

Comments like this are not helpful, nor appreciated, so please read the above to get some insight into why you had to endure the "exhaustion" of constant reminders to upgrade to RC7.

Comments like this are welcome and make me happy.....

EDIT: Tested and working, uploading soon.

1 point
