Leaderboard

Popular Content

Showing content with the highest reputation on 11/28/20 in all areas

-

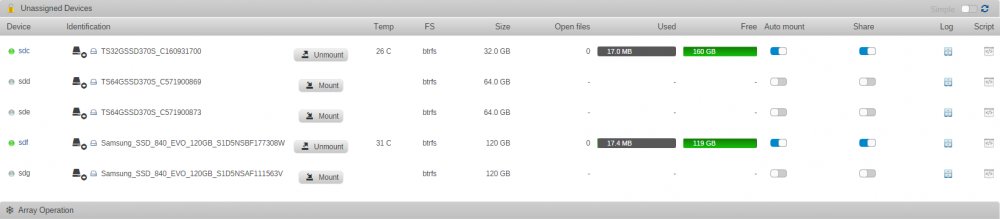

I just saw the release notes for the latest version of Unassigned Devices. Might that be it?

4 points

-

I come here to see what's new in development and find that there is a big uproar. Hate to say it, but I've been here a long time and community developers come and go, and that's just the way it is. This unRAID product opens the door to personalizations, both private and shared. Community developers do leave because they feel that unRAID isn't going in the direction they want it to go, or that the unRAID developers aren't listening to them, even though there is no obligation to do so. Some leave in a bigger fuss than others.

The unRAID developers do the best they can at trying to create a product that will do what the users want. They also do their best to support the product and the community development. The product is strong and the community support is strong, and new people willing to put in time supporting it will continue to appear.

Maybe some hint of what was coming might have eased tensions, but I just can't get behind users taking their ball and going home because unRAID development included something they used to personally support. That evolution has happened many times over the years, both incrementally and in large steps. That's the nature of this unRAID appliance type OS as it gets developed. There is no place for lingering bad feelings and continuing resentful posts. Hopefully, the people upset can realize that the unRAID developers are simply trying to create a better product, that they let you update for free, without any intent to purposely stomp on community developers.

4 points

-

Found the error. The SSDs were in the wrong order. Everything works now.

2 points

-

2 points

-

This is the support thread for multiple plugins, such as:

* AMD Vendor Reset Plugin
* Coral TPU Driver Plugin
* hpsahba Driver Plugin

Please always state which plugin you need help with, and include the Diagnostics from your server, plus a screenshot of your container template if your issue is related to a container.

If you like my work, please consider making a donation.

1 point

-

Application: borgmatic
Docker Hub: https://hub.docker.com/r/b3vis/borgmatic
Github: https://github.com/b3vis/docker-borgmatic
Template's repo: https://github.com/Sdub76/unraid_docker_templates

An Alpine Linux Docker container for witten's borgmatic by b3vis. Protect your files with client-side encryption. Back up your databases too. Monitor it all with integrated third-party services.

Getting Started:

* It is recommended that your Borg repo and cache be located on a drive outside of your array (via the Unassigned Devices plugin).
* Before you back up to a new repo, you need to initialize it first. Examples at https://borgbackup.readthedocs.io/en/stable/usage/init.html
* Place your crontab.txt and config.yaml in the "Borgmatic config" folder specified in the docker config. See examples below.
* A mounted repo can be accessed within Unraid using the "Fuse mount point" folder specified in the docker config. Example of how to mount a Borg archive at https://borgbackup.readthedocs.io/en/stable/usage/mount.html

Support: Your best bet for Borg/Borgmatic support is to refer to the following links, as the template author does not maintain the application.

* Borgmatic Source: https://github.com/witten/borgmatic
* Borgmatic Reference: https://torsion.org/borgmatic
* Borgmatic Issues: https://projects.torsion.org/witten/borgmatic/issues
* BorgBackup Reference: https://borgbackup.readthedocs.io

Why use this image?

Borgmatic is a simple, configuration-driven front-end to the excellent BorgBackup. BorgBackup (short: Borg) is a deduplicating backup program. Optionally, it supports compression and authenticated encryption. The main goal of Borg is to provide an efficient and secure way to back up data. The data deduplication technique used makes Borg suitable for daily backups, since only changes are stored. The authenticated encryption technique makes it suitable for backups to not fully trusted targets.

Other Unraid/Borg solutions require installation of software to the base Unraid image. Running these tools along with their dependencies is what Docker was built for. This particular image does not support rclone, but does support remote repositories via SSH. This docker can be used with the Unraid rclone plugin if you wish to mirror your repo to a supported cloud service.

1 point

-

***Update***: Apologies, it seems like there was an update to the Unraid forums which removed the carriage returns in my code blocks. This was causing people to get errors when typing commands verbatim. I've fixed the code blocks below and all should be Plexing perfectly now.

Granted this has been covered in a few other posts, but I just wanted to have it with a little bit of layout and structure. Special thanks to @Hoopster, whose post(s) I took this from.

What is Plex Hardware Acceleration?

When streaming media from Plex, a few things are happening. Plex will check against the device trying to play the media:

* Media is stored in a compatible file container
* Media is encoded in a compatible bitrate
* Media is encoded with compatible codecs
* Media is a compatible resolution
* Bandwidth is sufficient

If all of the above is met, Plex will Direct Play, i.e. send the media directly to the client without changing it. This is great in most cases, as there will be very little if any overhead on your CPU. However, you may be accessing Plex remotely or on a device that is having difficulty with the source media. You could either manually convert each file or get Plex to transcode the file on the fly into another format to be played.

A simple example: your source file is stored in 1080p. You're away from home and you have a crappy internet connection. Playing the file in 1080p is taking up too much bandwidth, so to get a better experience you can watch your media in glorious 240p without stuttering / buffering on your little mobile device by getting Plex to transcode the file first. This is because a 240p file requires considerably less bandwidth than a 1080p file.

The issue is that, depending on which format you're transcoding from and to, this can absolutely pin all your CPU cores at 100%, which means you're gonna have a bad time. Fortunately Intel CPUs have a little thing called Quick Sync, which is their native hardware encoding and decoding core. This can dramatically reduce the CPU overhead required for transcoding, and Plex can leverage this using their Hardware Acceleration feature.

How Do I Know If I'm Transcoding?

You're able to see how media is being served by first playing something on a device. Log into Plex and go to Settings > Status > Now Playing.

As you can see this file is being direct played, so there's no transcoding happening. If you see (throttled) it's a good sign. It just means that your Plex Media Server is able to perform the transcode faster than is necessary.

To initiate some transcoding, go to where your media is playing. Click on Settings > Quality > Show All > choose a Quality that isn't the Default one.

If you head back to the Now Playing section in Plex you will see that the stream is now being transcoded. I have Quick Sync enabled, hence the "(hw)", which stands for, you guessed it, Hardware. "(hw)" will not be shown if Quick Sync isn't being used in transcoding.

Prerequisites

1. A Plex Pass, if you require Plex Hardware Acceleration. Test to see if your system is capable before buying a Plex Pass.
2. An Intel CPU that has Quick Sync capability. Search for your CPU using Intel ARK.
3. A compatible motherboard.

You will need to enable the iGPU in your motherboard BIOS. In some cases this may require you to have the HDMI output plugged in and connected to a monitor in order for it to be active. If you find that this is the case on your setup, you can buy a dummy HDMI doo-dad that tricks your unRAID box into thinking that something is plugged in.

Some machines like the HP MicroServer Gen8 have iLO / IPMI, which allows the server to be monitored / managed remotely. Unfortunately this means that the server has 2 GPUs and ALL GPU output from the server is passed through the ancient Matrox GPU. So as far as any OS is concerned, even though the Intel CPU supports Quick Sync, the Matrox one doesn't. =/ You'd have better luck using the new unRAID Nvidia Plugin.

Check Your Setup

If your config meets all of the above requirements, give these commands a shot; you should know straight away if you can use Hardware Acceleration.

Log in to your unRAID box using the GUI and open a terminal window. Or SSH into your box if that's your thing. Type:

`cd /dev/dri`
`ls`

If the output lists the expected device nodes, your unRAID box has its Quick Sync enabled. The two items we're interested in specifically are card0 and renderD128. If you can't see them, not to worry, type this:

`modprobe i915`

There should be no return or errors in the output. Now again run:

`cd /dev/dri`
`ls`

You should see the expected items, i.e. card0 and renderD128.

Give your Container Access

Lastly we need to give our container access to the Quick Sync device. I am going to passive-aggressively mention that they are indeed called containers and not dockers. Dockers is a company that makes boots and pants and has nothing to do with virtualization or software development, yet. Okay, rant over.

We need to do this because the Docker host and its underlying containers don't have access to anything on unRAID unless you give it to them. This is done via Paths, Ports, Variables, Labels or, in this case, Devices. We want to provide our Plex container with access to one of the devices on our unRAID box. We need to change the relevant permissions on our Quick Sync device, which we do by typing into the terminal window:

`chmod -R 777 /dev/dri`

Once that's done, head over to the Docker tab and click on your Plex container. Scroll to the bottom and click on Add another Path, Port, Variable. Select Device from the drop down and enter the following:

Name: /dev/dri
Value: /dev/dri

Click Save followed by Apply.

Log back into Plex and navigate to Settings > Transcoder. Click on the button to SHOW ADVANCED and enable "Use hardware acceleration where available". You can now do the same test we did above by playing a stream, changing its Quality to something that isn't its original format, and checking the Now Playing section to see if Hardware Acceleration is enabled.

If you see "(hw)", congrats! You're using Quick Sync and Hardware Acceleration.

Persist your config

On reboot unRAID will not run those commands again unless we put them in our go file. So when ready, type into the terminal:

`nano /boot/config/go`

Add the following lines to the bottom of the go file:

`modprobe i915`
`chmod -R 777 /dev/dri`

Press Ctrl X, followed by Y, to save your go file. And you should be golden!

1 point
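The device check from the guide can be wrapped in a small script. This is only a sketch: `has_render_nodes` is a made-up helper name, and the optional directory argument exists purely so the check can be tried against a scratch directory instead of the real /dev/dri.

```shell
#!/bin/bash
# Sketch: report whether the Quick Sync device nodes named in the guide exist.
# has_render_nodes is a hypothetical helper; the directory argument defaults
# to /dev/dri and is only there to make the check easy to try elsewhere.
has_render_nodes() {
    local dri_dir="${1:-/dev/dri}"
    [ -e "$dri_dir/card0" ] && [ -e "$dri_dir/renderD128" ]
}

if has_render_nodes; then
    echo "card0 and renderD128 found: Quick Sync device nodes are present"
else
    echo "device nodes missing: try 'modprobe i915' and check again"
fi
```

Running it before and after `modprobe i915` shows whether loading the driver was what made the nodes appear.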

-

I'd like to move my development off of my unraid server. Has anyone worked through setting up TLS on the daemon, so that you can connect remotely?

Edit: I figured it out, so I'll edit this top post to be a HOWTO.

First, I didn't bother setting up security because docker is only exposed on my LAN. If you want security, check out the Docker docs.

* On your unraid server, edit /boot/config/docker.cfg, adding two -H options:
  DOCKER_OPTS="--storage-driver=btrfs -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375"
* On your remote machine, install the docker client. Then either add the -H tcp://tower:2375 option to your docker command, or put export DOCKER_HOST=tcp://tower:2375 into your ~/.bashrc.

When you're done, you can run "docker ps", "docker build" or whatever from your dev machine, with all the action happening on your unraid server. I like this better because I'm on my normal dev machine, with github credentials, my favorite GUI editor, etc.

1 point
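The client-side setting can be sketched like this. `docker_host_url` is a made-up helper name; "tower" and port 2375 are the values from the post, and as the post says, this setup has no TLS or authentication.

```shell
#!/bin/bash
# Sketch: build the DOCKER_HOST value from the post for a given server name.
# docker_host_url is a hypothetical helper; "tower" and 2375 come from the
# post, and this port carries no TLS or authentication.
docker_host_url() {
    local host="${1:-tower}" port="${2:-2375}"
    echo "tcp://${host}:${port}"
}

export DOCKER_HOST="$(docker_host_url tower 2375)"
echo "DOCKER_HOST=$DOCKER_HOST"
# With the variable exported, a plain "docker ps" on the dev machine talks to
# the daemon on the Unraid server instead of a local one.
```

Putting the export line in ~/.bashrc, as the post suggests, makes the setting apply to every new shell.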

-

Those following the 6.9-beta releases have been witness to an unfolding schism, entirely of my own making, between myself and certain key Community Developers. To wit: in the last release, I built in some functionality that supplants a feature provided by, and long supported with a great deal of effort by, @CHBMB with assistance from @bass_rock and probably others. Not only did I release this functionality without acknowledging those developers' previous contributions, I didn't even give them notification such functionality was forthcoming. To top it off, I worked with another talented developer who assisted with integration of this feature into Unraid OS, but who was not involved in the original functionality spearheaded by @CHBMB.

Right, this was pretty egregious and unthinking of me, and for that I deeply apologize for the offense. The developers involved may or may not accept my apology, but in either case, I hope they believe me when I say this offense was unintentional on my part. I was excited to finally get a feature built into the core product with what I thought was a fairly eloquent solution. A classic case of leaping before looking.

I have always said that the true utility and value of Unraid OS lies with our great Community. We have tried very hard over the years to keep this a friendly and helpful place where users of all technical ability can get help and add value to the product. There are many other places on the Internet where people can argue and fight and get belittled; we've always wanted our Community to be different. To the extent that I myself have betrayed this basic tenet of the Community, again, I apologize and commit to making every effort to ensure our Developers are kept in the loop regarding the future technical direction of Unraid OS.

sincerely,
Tom Mortensen, aka @limetech

1 point

-

Hello, I'm really frustrated: I would VERY MUCH like to buy an Unraid license, the PLUS one in this case, but it seems to be mission impossible. I'm certain that I have funds in the account I'd like to use for the purchase. I tried with my VISA card and with PayPal, but each time, at checkout, I get a message saying there was an error during the transaction. Is there really no way to make this payment some other way? Thanks to whoever can help me. I've put too much work into my Unraid config to lose it all. Have a good day, and take care of yourselves and of others.

1 point

-

I'm pondering a solution that no one will even notice. I'm thinking of mounting remote shares to /mnt/remotes and adding symlinks to the /mnt/disks/ mount point for remote shares, so all existing apps will still work. I'd recommend that, as people add new apps and modify older apps, they begin to use the /mnt/remotes mount point.

1 point
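The compatibility idea can be illustrated with a symlink. This is only a sketch of the concept, not how the plugin does it: `link_legacy_mount` and the share name "media" are made up, and the demonstration runs in a scratch directory so nothing under /mnt is touched.

```shell
#!/bin/bash
# Sketch: the share lives under the new root and a symlink keeps the old
# path working. link_legacy_mount is a hypothetical helper; the real plugin
# may implement this differently.
link_legacy_mount() {
    local share="$1" new_root="${2:-/mnt/remotes}" old_root="${3:-/mnt/disks}"
    ln -sfn "$new_root/$share" "$old_root/$share"
}

# Demonstration in a scratch directory.
scratch="$(mktemp -d)"
mkdir -p "$scratch/remotes/media" "$scratch/disks"
link_legacy_mount media "$scratch/remotes" "$scratch/disks"
readlink "$scratch/disks/media"   # prints the path under .../remotes/media
rm -rf "$scratch"
```

An app that still points at the old path follows the symlink to the new location, so existing container mappings keep working until they are updated.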

-

Thanks for the lesson @jonathanm, next time I will think about which file system is used before posting ... The go file is modified, usbreset will be copied to /usr/local/sbin, and I modified the user script accordingly. Thanks for the template @SimonF. We'll see tomorrow, as it's now 10pm. Good night all.

1 point

-

For an HDHR you don't need any DVB plugins, so yes, it's just as simple as that ... nothing to watch out for.

1 point

-

Read errors that are successfully calculated from parity and rewritten to the drive in question do not result in failing the drive. However... it's kind of misleading, and at first glance I'm hesitant to trust the result 100%, since the parity was forced to be correct at those addresses that failed the read. That's a whole can of worms that's a little above my pay grade. If @limetech isn't too busy, maybe he can pop by and provide some clarity on what happens when a read error occurs during a parity check.

My gut says parity is assumed to be correct for those specific sectors, with no way of verifying that unless you have 2 parity drives. That means it's possible for the data to be wrong at those addresses. Not particularly likely, just possible.

As far as the drive itself, I'd run the extended SMART test and see what results you get.

1 point

-

Phew, I have to install the board first, as I'm still missing a cooler... But I think 190 is already the maximum. I'll take a photo for you once it's in. The board I photographed above is exactly 191mm.

1 point

-

I just saw that my card is geo-restricted to Europe only for e-commerce payments. Where is your payment server located? I can change this geo-restriction; to which continent? Maybe that's it? Thanks, thanks.

1 point

-

Hi @ich777, as below. I'm starting to think it could be an i440fx (currently in use) vs q35 issue, but I'm just guessing really. I have 3 x Win 10 VMs: 1 x VNC only; the other two have 2070 Supers and USB 3 passed through.

Case: Corsair Obsidian 750d | MB: Asrock Trx40 Creator | CPU: AMD Threadripper 3970X | Cooler: Noctua NH-U14S | RAM: Corsair LPX 128GB DDR4 C16 | GPU: 2 x MSI RTX 2070 Super's | Cache: Intel 660p Series 1TB M.2 X2 in 2TB Pool | Parity: Ironwolf 6TB | Array Storage: Ironwolf 6TB + Ironwolf 4TB | Unassigned Devices: Corsair 660p M.2 1TB + Kingston 480GB SSD + Skyhawk 2TB | NIC: Intel 82576 Chip, Dual RJ45 Ports, 1Gbit PCI | PSU: Corsair RM1000i

1 point

-

Did you check Spaceinvader One's great tutorials? https://www.youtube.com/channel/UCZDfnUn74N0WeAPvMqTOrtA

1 point

-

Hi @latlantis, I see other payments coming through. Do you have ad blockers / pop-up blockers? No worries. I will figure this out for you well before December 5. Can you send me an email with screenshots of the error? Thanks

1 point

-

Hi @latlantis, unfortunately it's hard to run tests to help you. Are you sure it's not a browser issue? Have you tried another browser, preferably without any plugin that could cause a problem? If you still get the error, can you take a screenshot of it and share it on the forum? That could help Spencer.

1 point

-

In fact, remove everything from NerdPack that you don't use regularly. Looks like you installed all of it. Do you know what any of those are for or did you just install a bunch of stuff for no good reason?

1 point

-

I'm new to Grafana myself so I may be wrong, but I think if you go in to edit the panel, you can click on the Query Inspector button. In the pane that slides out you can click on the JSON tab. I think if you copy and paste that code into here, we can copy and paste it into our dashboard.

1 point

-

If you fix the filesystem on an sd device in the array you will invalidate parity. You must use the md device. So disk17 is md17, unless you use encryption. It's best to just go through the webUI to check the filesystem; then it will use the correct device: https://wiki.unraid.net/Check_Disk_Filesystems#Checking_and_fixing_drives_in_the_webGui

1 point

-

Probably best to run the file system check against disk 17 after reseating the cable to it.

1 point

-

To enter the nextcloud docker console, click on the docker icon and select the console option. Unfortunately the nextcloud docker doesn't include rsync, but you already know that krusader did the copy in about 10 seconds. In the docker's console, run cp input_path output_path, e.g. `cp /data/admin/files/testfile.mp4 /data/test/testfile.mp4`. If you want to copy a folder, do it like this: `cp -r /data/admin/files/ /data/test/`. If it takes a lot more than 10 seconds for the same file, something is probably up with the docker engine. I'm not sure what would cause that, but this will help you narrow it down.

1 point
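To compare against the 10-second reference, the copy can be timed. A minimal sketch, assuming `date` and `cp` are available in the container; `time_copy` is a made-up helper name and it only reports whole seconds.

```shell
#!/bin/bash
# Sketch: time a single file copy and print the elapsed whole seconds.
# time_copy is a hypothetical helper, not part of nextcloud or Unraid.
time_copy() {
    local src="$1" dst="$2" start end
    start="$(date +%s)"
    cp "$src" "$dst" || return 1
    end="$(date +%s)"
    echo "$(( end - start ))"
}

# Example with the paths from the post (run inside the container console):
# time_copy /data/admin/files/testfile.mp4 /data/test/testfile.mp4
```

Running the same copy from the container console and from krusader on the same file gives two numbers that can be compared directly.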

-

Aaahh there we go! Looks like the Fix Common Problems plugin hasn't been updated to accommodate this yet. Thanks! I'll flag the error as ignored for now.

1 point

-

There should be something useful here: https://www.raymond.cc/blog/how-to-use-one-ups-on-multiple-computers/ Especially the last paragraph.

As for the UPS: I don't think there's much difference between them. Even the ones with a "fake" sine wave seem to work without problems. The one thing I would watch for is that the replacement battery is easy to get hold of. So not exactly an import model. I might get the APC BX700U-GR. I think it would fit perfectly in a 10-inch rack. I need to measure again.

1 point

-

When you say it worked before, it should work the same way if the dependencies are the same ... now, first to clarify:

* DVB-T: do you have a DVB-T antenna plugged in?
* DVB-C: is cable, no antenna, usually just a simple cable from the wall outlet
* DVB-S: also a cable, going to a dish

Your tuner is recognized as an LG... in tvheadend, which looks weird to me, but who knows.

About dependencies: do you use the same Plex as before (official plex, lsio plex, binhex plex, ...)? Do you use the same driver version as before (libreelec, TBS, ...)?

1 point

-

Depends upon what you're trying to prioritize over another, but this is a start: https://forums.unraid.net/topic/57181-docker-faq/page/2/?tab=comments#comment-566087

1 point

-

I've just released a new version of UD. The main new features:

* You can now limit the scope of UD to disks only, shares only, or both. If you don't need remote shares, you can turn that feature off.
* Remote shares are now mounted at /mnt/remotes and not /mnt/disks, to separate the mount points for disk devices and remote shares.

If you use a remote share local mount point in a Docker container or VM, you should redo them to the new mapping. To verify where the mount point is mapped, mount the share and then click on the mount point text to browse the remote share. At the top of the page, you should see /mnt/remotes/... If the share is still mapping to /mnt/disks, you can unmount the share, then click on the mount point text to change it. Just click 'Change' and the mount point will be corrected.

1 point

-

I believe you need a leading /, so "/Downloads/incomplete", not "Downloads/incomplete". Also, "/Downloads/complete".

1 point

-

Attached are the OVMF files from the edk2 stable release 202011, released today. They work well for me.

edk2-202011.zip

1 point

-

To anyone still having this problem, I managed to resolve it by setting Tunable (support Hard Links) in Settings -> Global Share Settings to No.

1 point

-

The world is full of people who make mistakes. Every day, 24x7, and it will never stop. True progress and understanding come from those who honestly, publicly and without holding back apologise for their f-up, and I can only applaud. And it is never ever too late to apologise, as long as it's honest and fully from the heart. I hope the damage done is not too big and we can move on as one big happy hacking family. I so love unraid and all its amazing company and community developers who put time, blood and sweat into making this product great (again) for us humble end users. Thank you all, and please throw this whole ffing year in /dev/null where it belongs and let's make it an amazing 2021 to compensate. And if we compare what happened here to the worldwide pandemic and all its pain and suffering, we should be able to step over this comparatively little thing, right?

1 point

-

I know this likely won't matter to anyone but I've been using unraid for just over ten years now and I'm very sad to see how the nvidia driver situation has been handled. While I am very glad that custom builds are no longer needed to add the nvidia driver, I am very disappointed in the apparent lack of communication and appreciation from Limetech to the community members that have provided us with a solution for all the time Limetech would not. If this kind of corporate-esque "we don't care" attitude is going to be adopted then that removes an important differentiating factor between Unraid and Synology, etc. For some of us, cost isn't a barrier. Unraid has an indie appeal with good leaders and a strong community. Please understand how important the unraid community is and appreciate those in the community that put in the time to make unraid better for all of us.

1 point

-

As a user of Unraid I am very worried about the current trends. As a base, Unraid is a very good server operating system, but what makes it special are the community applications. I would be very sad if this breaks apart because of communication that may have been wrong or easy to misunderstand. I hope that everyone will get together again. You would make all of us users very happy. I have 33 docker containers and 8 VMs running on my system and I hope that my system will continue to be as usable as before. I have many containers from linuxserver.io. I am grateful for the support from limetech and the whole community and hope it will continue. Sorry for my English, I hope you could understand me.

1 point

-

The @linuxserver.io thing makes me very sad... I'm using many dockers from them... Currently the Nvidia Unraid driver settings page is already no longer working. This feels like a very sad moment. From my perspective this is a massive loss of functionality that is currently not available in Unraid itself. Maybe those two parties should calm down and talk to each other. I hope they are not completely done with developing for the community and that things can be clarified... I hope they're really not stopping all of their great community dockers. I would encourage anyone else who uses dockers or plugins from them to add their two cents, so the impact on the community gets more attention.

1 point

-

Great news. Crazy how they constantly focus on features benefiting more than 1% of the maximum userbase, right. 🤪

1 point

-

1 point

-

I'm not so much focusing on the niceness option; it's just the only option I had found that was not pinning to cores, and it seemed to be the Linux equivalent of priority in Windows. Got to remember that when someone doesn't have a clue what things are called in Linux, they don't even know what to search for to find the answers they need, and will latch on to the first thing that seems to do what they want, since by that point they are just glad to see something that might work.

I missed the cpu-shares option as it was on the 2nd page. I tried searching for CPU, priority etc. but nothing useful came up on the first page. CPU-shares does seem to be what I am looking for. Glad you mentioned that I can set the number higher than 1024; that is way easier than trying to change every other docker to a lower priority.

Will this also give higher priority vs non-docker processes? Not as big of a deal, but curious.

1 point
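A rough worked example of what raising the number does: Docker treats `--cpu-shares` as a relative weight (default 1024) that only matters while containers compete for CPU. The sketch below estimates the split between busy containers under full contention. `share_percent` is a made-up helper, and it says nothing about non-docker processes.

```shell
#!/bin/bash
# Sketch: approximate CPU percentage one container gets under full contention,
# given its --cpu-shares value followed by the values of the other busy
# containers. share_percent is a hypothetical helper; integer math, rounds down.
share_percent() {
    local mine="$1" total="$1" other
    shift
    for other in "$@"; do
        total=$(( total + other ))
    done
    echo $(( mine * 100 / total ))
}

# One container raised to 2048, two others left at the default 1024.
share_percent 2048 1024 1024   # prints 50
```

With everything at the default, the same three containers would each get about a third, so doubling one value is enough to favour that container without touching the others.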

-

Figured it out. No need to mount through /etc/fstab. What's missing are the entries in /etc/mtab, which are created when mounting from fstab. So a few echoes into /etc/mtab are the solution. This just needs to be done at boot. Each filesystem that is accessible by SMB (even through symlinks) needs a line in mtab to stop the spurious warning spam:

echo "[pool]/[filesystem] /mnt/[pool]/[filesystem] zfs rw,default 0 0" >> /etc/mtab

1 point
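For several filesystems the line can be generated in a loop. A sketch only: `mtab_line` is a made-up helper, "tank", "media" and "backups" are placeholder names, and the option string is copied from the post as-is. The lines are printed here rather than appended to /etc/mtab.

```shell
#!/bin/bash
# Sketch: generate the mtab line from the post for each pool/filesystem pair.
# mtab_line is a hypothetical helper; the option string is copied from the post.
mtab_line() {
    local pool="$1" filesystem="$2"
    echo "${pool}/${filesystem} /mnt/${pool}/${filesystem} zfs rw,default 0 0"
}

# "tank", "media" and "backups" are placeholders. At boot the output would be
# appended to /etc/mtab; here it is only printed for review.
for fs in media backups; do
    mtab_line tank "$fs"
done
```

Redirecting the loop's output with `>> /etc/mtab` from a boot-time script would reproduce what the post does by hand.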

-

I guess this is for anyone who finds this thread in the future. I finally FIXED IT. Special thanks to @peter_sm. I followed a bunch of his threads and the information from the Lv1Forums, where I found that adding "pcie_no_flr=1022:149c,1022:1487" would solve this issue. They (2 of them) suspected that this is related to the x79 platform (which is also what I have). Anyway, I have shut it down, restarted, and updated it more times than I care to count without issues. I've also left the VM on overnight and played heavy games on it, and there was still no issue. Now that everything is working as it should, I'm back to loving unraid.

1 point

-

1 point

-

1 point

-

A slightly better way to maintain the keys across reboots is to:

* copy the authorized_keys file to /boot/config/ssh/root.pubkeys
* copy /etc/ssh/sshd_config to /boot/config/ssh
* modify /boot/config/ssh/sshd_config to set the following line: AuthorizedKeysFile /etc/ssh/%u.pubkeys

This will allow you to keep the keys on the flash always and let the ssh startup scripts do all the copying.

1 point
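The three steps can be sketched as one function. Assumptions are labeled: `persist_ssh_keys` is a made-up helper, the edited file is taken to be the sshd_config copy under /boot/config/ssh, and the optional second argument is a root prefix so the steps can be tried in a scratch directory before touching the flash.

```shell
#!/bin/bash
# Sketch of the three steps from the post. persist_ssh_keys is a hypothetical
# helper; the optional second argument is a root prefix for dry runs.
persist_ssh_keys() {
    local keys="$1" root="${2:-}"
    local flash="$root/boot/config/ssh"
    [ -f "$keys" ] && [ -f "$root/etc/ssh/sshd_config" ] || return 1
    mkdir -p "$flash" || return 1
    cp "$keys" "$flash/root.pubkeys" || return 1
    # Copy sshd_config without any existing AuthorizedKeysFile line
    # (commented or not), then append the line from the post.
    grep -v '^#*AuthorizedKeysFile' "$root/etc/ssh/sshd_config" \
        > "$flash/sshd_config" || true
    echo 'AuthorizedKeysFile /etc/ssh/%u.pubkeys' >> "$flash/sshd_config"
}

# Example (the location of authorized_keys is an assumption):
# persist_ssh_keys /root/.ssh/authorized_keys
```

Dropping the old AuthorizedKeysFile line before appending avoids ending up with two conflicting settings in the copied file.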

-

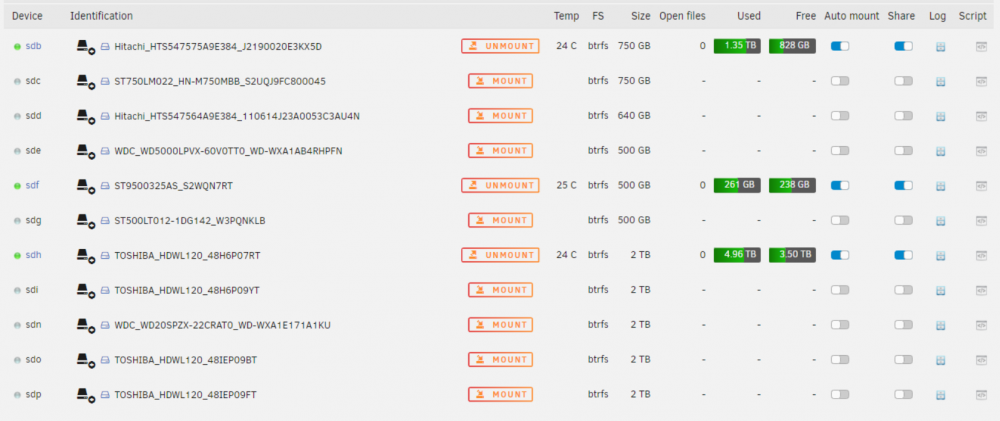

Can I manually create and use multiple btrfs pools?

Multiple cache pools are supported since v6.9, but for some use cases this can still be useful: you can also use multiple btrfs pools with the help of the Unassigned Devices plugin.

There are some limitations, and most operations for creating and maintaining the pool will need to be made using the command line, so if you're not comfortable with that, wait for LT to add the feature. If you want to use them now, here's how:

* If you don't have it yet, install the Unassigned Devices plugin.
* It's better to start with clean/wiped devices, so wipe them or delete any existing partitions.
* Using UD, format the 1st device using btrfs, choose the mount point name and optionally activate auto mount and share.
* Using UD, mount the 1st device; for this example it will be mounted at /mnt/disks/yourpoolpath
* Using UD, format the 2nd device using btrfs; there's no need to change the mount point name, and leave auto mount and share disabled.
* Now on the console/SSH add the device to the pool by typing: `btrfs dev add -f /dev/sdX1 /mnt/disks/yourpoolpath` Replace X with the correct identifier; note the 1 at the end to specify the partition (for NVMe devices add p1, e.g. /dev/nvme0n1p1).
* The device will be added and you will see the extra space on the 1st disk's free space graph. The whole pool will be accessible at the original mount point, in this example: /mnt/disks/yourpoolpath
* By default the disk is added in single profile mode, i.e., it will extend the existing volume. You can change that to other profiles, like raid0, raid1, etc. E.g., to change to raid1 type: `btrfs balance start -dconvert=raid1 -mconvert=raid1 /mnt/disks/yourpoolpath` See here for the other available modes.
* If you want to add more devices to that pool, just repeat the process above.

Notes

* Only mount the first device with UD; all other members will mount together despite nothing being shown on UD's GUI. Same for unmounting: just unmount the 1st device to unmount the pool.
* It appears that if you mount the pool using the 1st device, used/free space is correctly reported by UD, unlike if you mount using e.g. the 2nd device. Still, for some configurations the space might be incorrectly reported; you can always check it using the command line: `btrfs fi usage /mnt/disks/yourpoolpath`
* You can have as many unassigned pools as you want. Example of how it looks on UD: sdb+sdc+sdd+sde are part of a raid5 pool, sdf+sdg are part of a raid1 pool, and sdh+sdi+sdn+sdo+sdp are another raid5 pool. Note that UD sorts the devices by identifier (sdX), so if sdp was part of the first pool it would still appear last; UD doesn't reorder the devices based on whether they are part of a specific pool.

You can also see some of the limitations, i.e., no temperature is shown for the secondary pool members, though you can see temps for all devices on the dashboard page. Still, it allows you to easily use multiple pools until LT adds multiple cache pools to Unraid.

Remove a device:

To remove a device from a pool type (assuming there's enough free space): `btrfs dev del /dev/sdX1 /mnt/disks/yourpoolpath` Replace X with the correct identifier; note the 1 at the end.

Note that you can't go below the minimum number of devices for the profile in use, i.e., you can't remove a device from a 2 device raid1 pool. You can convert it to single profile first and then remove the device. To convert to single use: `btrfs balance start -f -dconvert=single -mconvert=single /mnt/disks/yourpoolpath` Then remove the device normally like above.

Replace a device:

To replace a device in a pool (if you have enough ports to have both old and new devices connected simultaneously), you need to partition the new device. To do that, format it using the UD plugin (you can use any filesystem), then type: `btrfs replace start -f /dev/sdX1 /dev/sdY1 /mnt/disks/yourpoolpath` Replace X with the source and Y with the target; note the 1 at the end of both. You can check replacement progress with: `btrfs replace status /mnt/disks/yourpoolpath`

If the new device is larger, you need to resize it to use all available capacity. You can do that with: `btrfs fi resize X:max /mnt/disks/yourpoolpath` Replace X with the correct devid, which you can find with: `btrfs fi show /mnt/disks/yourpoolpath`

1 point
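The partition naming rule in the steps above (append 1 for sdX devices, p1 for NVMe) is easy to get wrong, so here is a small sketch that derives it. `first_partition` is a made-up helper and the device names are examples; the btrfs commands are only printed for review, not executed.

```shell
#!/bin/bash
# Sketch: derive the first-partition path the btrfs commands expect.
# first_partition is a hypothetical helper: sdX devices get "1" appended,
# NVMe devices get "p1", as noted in the post.
first_partition() {
    local dev="$1"
    case "$dev" in
        /dev/nvme*) echo "${dev}p1" ;;
        *)          echo "${dev}1" ;;
    esac
}

pool=/mnt/disks/yourpoolpath
# Print the add command for review rather than running it.
echo "btrfs dev add -f $(first_partition /dev/sdc) $pool"
echo "btrfs dev add -f $(first_partition /dev/nvme0n1) $pool"
```

Printing the command first gives a chance to confirm the device identifier before anything is added to the pool.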