Leaderboard

Popular Content

Showing content with the highest reputation since 03/18/24 in Posts

-

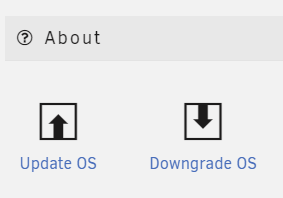

This is a bug fix release, resolving a nice collection of problems reported by the community; details below. All users are encouraged to read the release notes and upgrade.

Upgrade steps for this release

1. As always, prior to upgrading, create a backup of your USB flash device: "Main/Flash/Flash Device Settings" - click "Flash Backup".
2. Update all of your plugins. This is critical for the Connect, NVIDIA and Realtek plugins in particular.
3. If the system is currently running 6.12.0 - 6.12.6, we're going to suggest that you stop the array at this point. If it gets stuck on "Retry unmounting shares", open a web terminal and type: umount /var/lib/docker
   The array should now stop successfully.
4. If you have Unraid 6.12.8 or Unraid Connect installed: Open the dropdown in the top-right of the Unraid webgui and click Check for Update. More details in this blog post.
5. If you are on an earlier version: Go to Tools -> Update OS and switch to the "Stable" branch if needed. If the update doesn't show, click "Check for Updates".
6. Wait for the update to download and install.
7. If you have any plugins that install 3rd party drivers (NVIDIA, Realtek, etc), wait for the notification that the new version of the driver has been downloaded.
8. Reboot.

This thread is perfect for quick questions or comments, but if you suspect there will be back and forth for your specific issue, please start a new topic. Be sure to include your diagnostics.zip.

11 points
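For the "Retry unmounting shares" step, it can help to confirm that /var/lib/docker is actually still mounted before unmounting it. The following is only a sketch: the helper names `is_mounted` and `unmount_if_mounted` are made up for illustration, and it assumes a Linux system that exposes its mount table at /proc/mounts.

```shell
# Hypothetical helpers, not part of Unraid.
# is_mounted PATH: succeed (exit 0) only if PATH is listed as a mount point.
is_mounted() {
    # Field 2 of /proc/mounts is the mount point; require an exact match.
    awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' /proc/mounts 2>/dev/null
}

# unmount_if_mounted PATH: run umount only when PATH is currently mounted.
unmount_if_mounted() {
    if is_mounted "$1"; then
        umount "$1" && echo "unmounted: $1"
    else
        echo "not mounted: $1"
    fi
}
```

From the web terminal this would be called as `unmount_if_mounted /var/lib/docker`; if the path is not mounted, nothing is changed and the array stop can simply be retried.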

-

I think it's twofold. One, there is a group of individuals who do this for a living and want to play around with it to learn it for their job, or as a way to see if it's something they should deploy at their workplace. Secondly, I think others do it because of the "cool" factor. Don't get me wrong, I still think it's a mighty fine solution (I've played around with it myself), but it takes a lot more configuration/know-how to get it working. Like you said, it doesn't have docker by default, so you either have to create a VM and/or LXC, install docker and go from there. Personally I've played around with Jellyfin on Proxmox... I did it in a VM, I did it with docker in a VM, as well as an LXC, and while it was fun, it was nowhere near as simple as Unraid, where it's click install, change a few parameters and go.

I also see a lot of discussion around the "free" options out there. No offence, but these "free" options aren't new. They've been around just as long, if not longer than Unraid, and guess what, people still chose Unraid. Why? Because it fits its target market... "home users." Easy to upgrade storage, similarly easy to follow implementations for VMs and docker, a helpful/friendly community, plenty of good resources to help you along (e.g. Spaceinvader One), etc. There's more to Unraid than just the software and it's why people choose Unraid over the others. People will pay for convenience, it's not always about getting stuff for free. Does Unraid have every feature that the other solutions have... no, but it doesn't need to and IMHO most of them don't really matter in a home environment anyways.

Furthermore, there seem to be a lot of entitled individuals. I feel bad for software developers, because people want their stuff, they want them to make changes, add new features, etc... and oh btw, please do it for free, or charge someone else for it, thank you...

I've seen a lot of armchair business people here offering suggestions to just do what the others do: go make a business version, go sell hardware, etc. No offence, but those things sound way simpler than they actually are. Not to mention there's no guarantee that it will pay off in the long run, and it has the potential to put you in a worse situation compared to where you were before. For example, let's say they do offer a business solution and price it accordingly. Since they (business/enterprise customers) will essentially be the ones funding Unraid's development in this case, they will be the ones driving the decisions when it comes to new features, support, etc... it won't be us home users using it for free.

I want Lime Tech to focus on Unraid. I don't want them to focus on other avenues that can potentially make things worse in the long run because they have too much going on. Just focus on what they do best and that's Unraid.

We can go back and forth all day long. At the end of the day, if you are that unhappy then please just move on (not you MrCrispy, just speaking generally hehe). While I can understand the reasoning behind the dissatisfaction, to be honest, none of the naysayers have given Lime Tech a chance to prove themselves with this change. Sure we can talk about what "other" companies have done in the past, but in all honesty I don't care about "other" companies, all I care about is what Lime Tech does. Just because others have done terrible things, that doesn't mean every company will. It's ok to be skeptical, but you can be open minded as well. Give them a chance, don't just wish for them to shut down and close up shop. I mean they've already listened and are offering a solution to those who want security updates/fixes for a period of time after the fact. Furthermore they've also upped the Starter from 4 to 6 devices, so they are listening... yet people are still unhappy.

Which leads me to believe there will be a group of entitled individuals who will never be happy no matter what they do.

9 points

-

As previously announced, Unraid OS licensing and upgrade pricing is changing. As we finish testing and dialing in our new website and infrastructure, we aim to launch on Wednesday, March 27th, 2024.

What this Means

You have until March 27th, 2024, to purchase our current Basic, Plus, and Pro licenses or to upgrade your license at the current prices.

New License Types, Pricing, and Policies

We are introducing three new license types:

Starter $49 - Supports up to 6 attached storage devices.
Unleashed $109 - Supports an unlimited number of devices.
Annual Extension Fee for Starter and Unleashed: $36
Lifetime $249 - Unlimited devices. No extension fee.

Starter and Unleashed licenses include one year of software updates with purchase. After one year, customers can pay an optional extension fee to make them eligible for an additional year of updates. If you choose not to extend your license, no problem. You still own the license and have full access to the OS. If your license extension lapses (as in, you do not pay your annual fee), you can download patch releases within the same minor OS version that was available to you at the time of the lapse. Please review our FAQ section below for more on this security update policy.

Basic, Plus, and Pro Keys Will Become 'Legacy Keys'

These changes do not apply to current Basic, Plus, or Pro license holders. As promised, you can still access all updates for life and upgrade your Basic or Plus license. Once Starter, Unleashed, and Lifetime are available, Legacy licenses will no longer be sold.

Legacy License Upgrade Pricing Changes

Along with the new license types, we are increasing our Upgrade pricing for all legacy keys. Legacy key holders will also be able to move into the new system if they choose. The pricing will be as follows:

Basic to Plus: $59
Basic to Pro: $99
Basic to Unleashed: $49
Plus to Pro: $69
Plus to Unleashed: $19

FAQs

Please refer to our blog for a full list of FAQs.

8 points
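To compare the new license types over time, the prices above can be run through a little arithmetic. This is only a sketch: the function name `total_cost` is made up, and it assumes that the prices and the $36 extension fee stay unchanged and that the extension is paid every year after the first.

```shell
# Prices in USD, taken from the announcement above.
STARTER=49
UNLEASHED=109
LIFETIME=249
EXTENSION=36

# total_cost BASE YEARS: license price plus extensions for YEARS of updates.
# The first year of updates is included with purchase, so only YEARS-1
# extensions are paid (assumption: the fee never changes).
total_cost() {
    echo $(( $1 + EXTENSION * ($2 - 1) ))
}

for years in 1 3 5 7; do
    echo "$years yr: Starter \$$(total_cost "$STARTER" "$years"), Unleashed \$$(total_cost "$UNLEASHED" "$years"), Lifetime \$$LIFETIME"
done
```

Under these assumptions, Unleashed with continuous extensions passes the Lifetime price in the fifth year of updates ($253 vs $249), and Starter in the seventh ($265), keeping in mind that Starter is limited to 6 devices, so it is not a like-for-like comparison.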

-

There is a problem with 6.12.9 under very specific circumstances. Anyone running this configuration should not install 6.12.9 yet. It concerns CIFS/SMB remote mounts that are created in Unraid with Unassigned Devices (/mnt/remotes/xxx). In other words, Unraid is the client of a CIFS/SMB connection. As far as is currently known, Unraid acting as the server of a CIFS/SMB connection is not affected.

There are two current bug reports, one of them mine:

In addition, @dlandon has given a hint as to where the problem points (kernel/SAMBA):

You can clearly recognize the problem by the CIFS VFS errors in the syslog. When these show up, you or your software will see only some of the existing folders or files. That can cause considerable problems.

If you have already installed 6.12.9 over 6.12.8 and run this configuration or get the error --> a downgrade to 6.12.8 is possible at any time without problems:

7 points
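A quick way to look for the CIFS VFS errors mentioned above is to count matching lines in the syslog. This is a minimal sketch: the function name `count_cifs_vfs_errors` is made up, the pattern is a loose guess at what such lines contain (both "CIFS" and "VFS"), and the log path in the usage note is an assumption.

```shell
# Hypothetical helper.
# count_cifs_vfs_errors FILE: print the number of log lines that mention
# both CIFS and VFS (case-insensitive), or 0 if there are none.
count_cifs_vfs_errors() {
    grep -c -i 'CIFS.*VFS' "$1"
}
```

On a server this could be run as `count_cifs_vfs_errors /var/log/syslog`, assuming the syslog lives at that path. A non-zero count on a system with /mnt/remotes mounts would be a reason to look at the matching lines more closely.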

-

This release reverts to an earlier version of the Linux kernel to resolve two issues being reported in 6.12.9. It also includes a 'curl' security update and corner case bug fix. All users are encouraged to read the release notes and upgrade.

Upgrade steps for this release

1. As always, prior to upgrading, create a backup of your USB flash device: "Main/Flash/Flash Device Settings" - click "Flash Backup".
2. Update all of your plugins. This is critical for the Connect, NVIDIA and Realtek plugins in particular.
3. If the system is currently running 6.12.0 - 6.12.6, we're going to suggest that you stop the array at this point. If it gets stuck on "Retry unmounting shares", open a web terminal and type: umount /var/lib/docker
   The array should now stop successfully.
4. If you have Unraid 6.12.8 or Unraid Connect installed: Open the dropdown in the top-right of the Unraid webgui and click Check for Update. More details in this blog post.
5. If you are on an earlier version: Go to Tools -> Update OS and switch to the "Stable" branch if needed. If the update doesn't show, click "Check for Updates".
6. Wait for the update to download and install.
7. If you have any plugins that install 3rd party drivers (NVIDIA, Realtek, etc), wait for the notification that the new version of the driver has been downloaded.
8. Reboot.

This thread is perfect for quick questions or comments, but if you suspect there will be back and forth for your specific issue, please start a new topic. Be sure to include your diagnostics.zip.

6 points

-

Devs have said that 6.13 public testing should be ready to start soon (likely within another week or two unless a major bug is found); that will be the first version to bump to the newer kernel with inbuilt ARC support. It will likely be in testing for quite a while, as it is going to be a big kernel version jump, and this little patch update shows us that kernel upgrades can have all sorts of weird minor edge cases across the mess of mixed hardware people use.

6 points

-

Data Volume Monitor (DVM) for UNRAID

"A plugin that lets you monitor and act on your consumed data volume utilizing vnStat."

With much pride I'd like to present my latest project - the Data Volume Monitor (DVM). Dashboards, footer information, notifications, user actions (on VMs/Docker containers) and custom scripting. These are a few of the features being offered - all centred around the data volume consumption of your server.

Don't want your Syncthing container to pull in more than 50 GB a day? No problem, DVM will stop and restart the container for you within those limits.

Running a few data intensive VMs and want to pull the plug when it's been too much? No problem, DVM will disconnect and reconnect specific VMs from/to the network.

Want to move around some files and folders when you've pulled in too much data? No problem, set up a custom script and DVM will execute it for you when it's time.

Please report back if you are experiencing any problems! This plugin was developed with older machines in mind - it's been tested on 6.8.3 and above.

5 points
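The kind of limit described above (for example 50 GB a day for one container) boils down to a threshold check. The sketch below only illustrates that idea and is not how the plugin is implemented: the function names are made up, the byte count is assumed to come from some traffic counter such as vnStat, and 1 GB is taken as 1000^3 bytes.

```shell
# Hypothetical helpers, not taken from the DVM plugin.
# over_daily_limit USED_BYTES LIMIT_GB: succeed (exit 0) if usage exceeds the limit.
# Assumption: decimal gigabytes, 1 GB = 1000^3 bytes.
over_daily_limit() {
    [ "$1" -gt $(( $2 * 1000 * 1000 * 1000 )) ]
}

# enforce_limit CONTAINER USED_BYTES LIMIT_GB: print the action that would be
# taken; a dry run only, nothing is actually stopped.
enforce_limit() {
    if over_daily_limit "$2" "$3"; then
        echo "docker stop $1"
    else
        echo "keep $1 running"
    fi
}
```

For example, `enforce_limit syncthing 60000000000 50` prints the stop command because 60 GB is above the 50 GB limit, while a lower byte count leaves the container alone.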

-

I must say that the new update box, getting the update notes and known issues before updating (instead of needing to check them here), is a nice touch!

5 points

-

To be honest, based on the level of self-entitlement shown by many posters regarding the licence changes, I don't know why Lime Tech should even bother trying to make you happy. From my perspective, Lime Tech have bent over backwards in an attempt to be fair and reasonable to existing and new licence holders. Do people think that software development is free? The entire world is in the midst of massive inflation, and the cost of developing and maintaining software is not immune to this. Compared to the cost of hardware, the increases to licence costs are tiny. Some people need to grow up and get with the real world.

5 points

-

Unraid plugin that enables you to adjust your Unraid system's power profile to enhance performance or improve energy efficiency. Additionally, it fine-tunes the TCP stack settings and network interface card (NIC) interrupt affinities to optimize network performance. Please note: This plugin is not compatible with other plugins that alter the same settings.

4 points

-

Every plugin, no exceptions, is checked for security issues. This doesn't mean that there aren't going to be security flaws caused by libraries utilized, but you do know that any random plugin is not going to go ahead and outright delete your media etc.

Every single plugin available via Apps gets installed on my production server, with no exceptions, and a code audit is done on what is actually present instead of what may be showing on GitHub. I am notified about every single update to every plugin (within 2 hours), and plugins which are not maintained by a contractor of Limetech are then code audited again. Every update. And with only a few exceptions, this happens on an actual production server, not on a test server.

If something ever fails the checks, then everything else gets dropped (including the 9-5 job) to handle the issue. Whether that means simply getting the application out of Apps temporarily and asking the maintainer / author "WTF? You can't do this", or notifying everyone with it installed via FCP about any issue, or preventing the Auto Update plugin from installing any update to the plugin, or even taking more drastic measures, provisions are in place to protect the user.

Closed source applications, whether they are plugins or containers, may be frowned upon, but are not necessarily disallowed. With plugins, the standard for open-source vs closed-source is more strict, but it is not 100% a requirement that only open source be present.

4 points

-

Agree with what I've been doing for the last 5 years or not. I'm good with everyone's opinion and can understand both sides of the argument. But know that, like everything else in CA, these things get tested to death to ensure that they are completely harmless and that functionality isn't impeded, and I watch every posting like a hawk to ensure that nothing has gone awry. CA is a passion project.

Last year's tribute to the bat, if you chose to continue with the homage, didn't affect anything (unless you were unable to do a handstand). This year, even if you didn't "revert" to the author-dictated images, upon installation the author's images would appear in the docker tab, and in all cases it's a simple one click to restore that's clearly communicated.

My personal opinion regarding this stuff is that it shows that I actually do care about CA, its security, and this community. I'm not the type of person (probably few are) who could survive long in a coding factory fashioned after a sweatshop. (Pretty sure that no one else in a Spaceinvader One interview inadvertently dropped a dick joke that made it through the censors.)

But everyone did miss the biggest joke of all. While every app had a randomly selected photo (changed every 2 hours), right on the home page (Spotlight section), the "unbalanced" app had a specific picture pinned to it for the duration of the day.

While Tom et al did give a go-ahead for this, any and all blame / anger / misgivings should get thrown my way. I am glad, however, that I ultimately decided against my original idea / planning for this year. It was going to leverage a coding change which is being put into place for a different legitimate reason, but after looking at how the end result turned out I figured it would completely mess everyone up.

Either way, as far as I'm concerned, debate about yesterday's event should now be over. What's done is done and let's all get back to business.

4 points

-

Life is too short to not have some kind of sense of humor. I approved this little "prank" so come on, let me have it!

4 points

-

I know it's April 1, but please, PLEASE, in the future restrain yourselves from trying to come up with a funny hack for MY SERVER SOFTWARE. Write a funny blog post. Make a crazy product announcement. Whatever. But never, EVER, mess with the CRITICAL software that we depend on.

Would you enjoy waking up to find that all your phone's contacts were renamed, "just for fun"? Or all your Windows icons? Well, just imagine my joy late last night when I got the monthly "these things should be updated" email from my server, logged in, and saw RANDOM FACES instead of app icons. Was there a hack on the Docker servers? On Unraid? What the heck was happening?

Oh, yeah, sit there and chuckle at your great cleverness. Or don't. This isn't a toy, this isn't some unimportant little side-app. This is server software, and it should be immune to these kinds of too-clever-by-half "pranks". Find another way to prove to the world you have whimsy.

4 points

-

Reposting here from my original in General Support:

I know it's April 1, but please, PLEASE, in the future restrain yourselves from trying to come up with a funny hack for MY SERVER SOFTWARE. Write a funny blog post. Make a crazy product announcement. Whatever. But never, EVER, mess with the CRITICAL software that we depend on.

Would you enjoy waking up to find that all your phone's contacts were renamed, "just for fun"? Or all your Windows icons? Well, just imagine my joy late last night when I got the monthly "these things should be updated" email from my server, logged in, and saw RANDOM FACES instead of app icons. Was there a hack on the Docker servers? On Unraid? What the heck was happening?

Oh, yeah, sit there and chuckle at your great cleverness. Or don't. This isn't a toy, this isn't some unimportant little side-app. This is server software, and it should be immune to these kinds of too-clever-by-half "pranks". Find another way to prove to the world you have whimsy.

----

One of the things that REALLY bothers me about this is that they added NEW CODE to make this happen. At some point, somewhere, code was added to the system that enabled this. It looks like it was in the Community Apps plugin, but still that's code that is running on our Unraid instances.

As a professional software developer (yeah, appeal to authority, I know), this is deeply disturbing. This was essentially a "hidden feature" that nobody knew was being installed on our servers. Adding "joke" code is a bad idea in general. Adding it to a server can lead to potential security vulnerabilities. Pretty much ANY new code can lead to vulnerabilities through unintended consequences, so adding something like this rubs me the wrong way on so many levels.

4 points

-

Just a quick script you can run in your terminal to output container IDs and the corresponding xz version. Anything below 5.6.0 should be fine:

for containerId in $(docker ps -q); do echo "$containerId" && docker exec -it "$containerId" sh -c 'xz --version'; done

4 points
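To apply the "below 5.6.0" rule of thumb from the post automatically, the reported version can be compared numerically. This is a sketch under assumptions: the helper names are made up, only the first three dotted fields are compared, and the first line of `xz --version` is assumed to end with the version number (as in "xz (XZ Utils) 5.4.6").

```shell
# Hypothetical helpers.
# version_lt A B: succeed (exit 0) if dotted version A is strictly lower than B.
# Compares up to three numeric fields; missing fields count as 0.
version_lt() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        split(a, x, "."); split(b, y, ".")
        for (i = 1; i <= 3; i++) {
            if (x[i] + 0 < y[i] + 0) exit 0
            if (x[i] + 0 > y[i] + 0) exit 1
        }
        exit 1
    }'
}

# xz_version_from_output: read `xz --version` output on stdin and print the
# last word of the first line, assumed to be the version number.
xz_version_from_output() {
    awk 'NR == 1 { print $NF }'
}

# classify_xz_version VERSION: apply the threshold from the post above.
classify_xz_version() {
    if version_lt "$1" "5.6.0"; then
        echo "below 5.6.0"
    else
        echo "5.6.0 or newer, check advisory"
    fi
}
```

Inside the loop from the post, the output of `docker exec "$containerId" sh -c 'xz --version'` could be piped through `xz_version_from_output` and the result passed to `classify_xz_version`. Containers without xz installed will simply report an error from `sh`.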

-

DEVELOPER UPDATE - OFFICIAL RELEASE - Ultimate UNRAID Dashboard 1.7

Today is the release of the Ultimate UNRAID Dashboard version 1.7! Much has changed since the last official release of UUD 1.6. Various plugins and dependencies have been abandoned/deprecated, multiple unRAID OS updates have occurred, and my personal needs for the UUD have evolved over time. Nevertheless, I am very excited to share the new features, use cases, and updated design/architecture of this latest release with all of you. I feel it is the most refined and fine-tuned version yet, and I use it every single day. It is simply a pure joy to have in my life and I really love having all of this information at my fingertips. Please learn from this latest release and use it as a launch pad to create YOUR own Ultimate Dashboard. Without further delay, I bring to you the UUD 1.7!

Screenshots (Click for Hi-Res):

Deprecated:

unRAID API
  The unRAID API was abandoned/deprecated by its developer after the release of UUD 1.6
  It also contained a perpetual memory leak that was never patched
  All unRAID API panels have been removed from UUD 1.7
  If another alternative is developed in the future, I will consider adding it to a future version of the UUD
  Variable for Future API Data Source Remains
    I also left this in for people who still wish to use the unRAID API (understanding the known risks)

New Dependencies: None

New Features:

Plex Streams
  All Real-Time Plex Panels Have Been Moved to the Top of the UUD
  Provides an Overall Snapshot of Server/Media Use
  New/Reworked Panels
    Current LAN (Home Network) Numeric Transmit (TX) Bandwidth
    Current WAN (Internet) Numeric Transmit (TX) Bandwidth
    Current Transcode Streams
    Current Direct Play Streams
    Current Direct Streams
    Current Streams
      Provides a Detailed Real-Time Log of All Current Streams (Last 30 Seconds)
    Current Stream Types
    Current Stream Devices
    Historical Stream Heatmap
      Now (Last 1 Hour)
      Current Day (Last 24 Hours)
      Last Week (Last 7 Days)
      Last Month (Last 30 Days)
      Last Year (Last 365 Days)
    Current Stream Origination
      Detailed Stream Origination Map View via Geolocation by IP Address
    Stream Bandwidth
      Current WAN (Internet) / LAN (Home Network) Transmit (TX) Numeric Bandwidth

Array Growth
  Array Growth Chart
    Detailed Heatmap Graph of Array Growth Over Time (Timeline Adjustable)
  Array Total
  Array Available
  Array Utilized
  Array Utilized %

Overwatch "Cache Central"
  Completely redesigned array and cache overview section with net new panels with an increased focus on cache health, including real-time/historical disk statistics.
  Array Growth Day (Last 24 Hours)
  Array Growth Week (Last 7 Days)
  Array Growth Month (Last 30 Days)
  Array Growth Year (Last 365 Days)
  Array Growth Lifetime (Last X Number of Years - Adjustable)
  Cache Utilized
  Cache Available
  Cache Utilized %
  SSD Temperature
  SSD Life Used (Total)
  SSD Power On Time
  SSD Writes Current (Last 30 Seconds)
  SSD Writes Day (Last 24 Hours)
  SSD Writes Month (Last 30 Days)
  SSD Writes Lifetime (Total)
  SSD Reads Current (Last 30 Seconds)
  SSD Reads Day (Last 24 Hours)
  SSD Reads Month (Last 30 Days)
  SSD Reads Lifetime (Total)
  SSD I/O Current (Last Hour of Reads/Writes to Cache)
  SSD I/O Day (Last Day of Reads/Writes to Cache)
  SSD I/O Month (Last Month of Reads/Writes to Cache)
  SSD I/O Year (Last Year of Reads/Writes to Cache)

Plex History
  Moved Plex Historical History to Its Own Section
  New Panel: Cumulative Stream Volume by User
    Graph Showing Cumulative Stream Volume (Last Month) Stacked by User (Timeline & Number of Users Adjustable)
  Device Types
    Historical Pie Chart of Stream Devices with Hover Over Key (Last 1 Month)
  Stream Types
    Historical Pie Chart of Stream Types (Direct Stream, Transcode, etc.) With Hover Over Key (Last 1 Month)
  Media Qualities
    Historical Pie Chart of Media Qualities (4K, 1080P, etc.) With Hover Over Key (Last 1 Month)
  Media Types
    Historical Pie Chart of Media Types (Movies, Shows, etc.) With Hover Over Key (Last 1 Month)
  Detailed Historical Stream Origination
    Map View via Geolocation by IP Address (Last 30 Days - Timeline Adjustable)

Plex Stream Logs
  Standalone Panels Showing Detailed Plex Stream Logs
  Stream Log (Overview)
    Depicts an abbreviated log of all streams based on stream start times over the last 1 month
    Provides a Quick and Dirty List of What is Being Streamed By User Over Time
  Stream Log (Detailed)
    Depicts a full detailed log of all streams sorted by time over the last 1 week (Timeline Adjustable)
    Provides an in-depth look at all stream activity every 1 minute
    Can be used to track server issues/buffering/paused streams/abuse

Historical UUD Panels
  All other historical panels (except for API) remain and have been improved/modified as needed for Grafana updates and bug-fixes

Notes:

unRAID OS
  UUD 1.7 was developed and tested on unRAID 6.11.4
  It should be compatible with 6.12.X
  Please let me know if you run into any issues in this area

Varken
  Even though Varken is no longer in development by its developer (from what I last heard), I am still using the latest Varken release for the UUD. I have seen zero issues with it and it is stable. Unless this becomes unavailable as a docker from Community Apps, it will remain along with all of the Plex functionality via Tautulli.

Please refer to the first few posts in this thread for the download, installation instructions and release note history.

Direct Download Link: Ultimate UNRAID Dashboard 1.7 Download (JSON)

Install Instructions Are In Post #1 of This Thread:

There are a few people out there who have made great documentation and videos on how to install the UUD if you need a detailed tutorial (for those that are new). These are not official, but may help you. A quick web search of "UUD" or "Ultimate UNRAID Dashboard" should give you what you need.

Example Install Instructions (Not Official):
  Video Tutorial Playlist (4 Video Playlist) Based on UUD 1.5
  Text Instructions Based on UUD 1.5: https://liltrublmakr.github.io/Ultimate-UNRAID-Dashboard/1.5.0/install15/
  Someone Else's Alternate Docker Compose (Not Required) Method (Not Official): https://github.com/goodmase/Ultimate-Unraid-Dashboard-Guid
  Reddit Discussion (One of Several):

I hope you all enjoy! ~ Thanks, falconexe (Community Developer) @SpencerJ

4 points

-

Some of the images I have built MAY have the affected versions installed. I am currently running a build of the base image to perform the 'Resolution' (see link) and I will then kick off builds of subsequent images. For reference, here is the ASA for Arch Linux (base OS); pay attention to the 'Impact'. Also keep in mind that unless there is code calling xz, xz will not be running and therefore the risk is reduced. However, I am keen to get all images updated: https://security.archlinux.org/ASA-202403-1

EDIT - After further investigation into the way xz interacts with the system, it looks like in order for the exploit to be used you would need to have systemd operational (not the case with any of my images) and OpenSSH installed (not the case with any of my images), so in my opinion the risk is low here. But as I mentioned above I am keen to get all images updated, so please be patient as this can take a while and it's Easter time so my time is restricted.

4 points

-

There won't be one, at least not one with dates on it, and as someone else said either in this thread or another just recently, with good reason. If there was a time-frame for stuff, people would complain just as much if the dates were not correct. But here is what we know so far at least:

6.13
-ZFS part 2
-Will be the last version of Unraid 6

7.x
-ZFS expand zpool
-Multiple Unraid arrays
-Everything will be a pool: multiple Unraid pools, ZFS pools, btrfs pools
-Mover updated so you can use mover from pool to pool
-Requirement for an Unraid array to start, lifted
-VM snapshot and VM cloning
-WebGUI search, with multilingual support
-Intel ARC support

Future ideas:
-Hardware database: will my hardware work with Unraid? Database with users' configs, if they work or what you need to do for it to work. Users can choose to upload their setup anonymously
-My Friends Network: expansion of Unraid Connect. Backup files encrypted/unencrypted to other Unraid servers
-Responsive webgui
-Unraid API

4 points

-

We know that Dynamix File Manager is expected to be built-in for the 6.13 release.

4 points

-

As the thread starter, I would have credited the solution to the person who solved the problem (ich777) rather than to myself. There is a ranking here in the forum, after all.

4 points

-

I think it is just due to the nature of Unraid development and philosophy. Limetech historically has taken a very long time to develop and test new releases (even minor ones). There are a lot of variables in a product such as Unraid which tries to run on as many hardware platforms as possible. They also thoroughly test to make sure no data loss will result from a new version of the software. It all takes time. The product also seems to be driven a lot by user requests/needs and priorities can change. I think they would rather just "go with the flow" than publish roadmaps that may change a lot. I do not speak for Limetech.

If they did provide roadmaps, we would all likely be complaining about versions/features not making published deadlines. I was a software product manager for 25 years and was in charge of many roadmaps. We rarely ever made the published timelines due to unforeseen engineering roadblocks and trying to control "feature creep." It got to the point that I really had to pad the timelines, and then customers complained about excessively long development cycles. Roadmaps are doubly difficult for small companies with limited resources.

4 points

-

Seems fair to me. I likely will never have a need to change my current two Pro and one Plus licenses, but at least I know what the path is should I choose to go that way. Legacy users are protected and security updates within a minor release will be available even to those with lapsed support. I think this addresses most concerns and everyone has enough information to decide where they go with Unraid. Change is often difficult, but I feel this has been handled well and with more transparency than is often the case in these situations.

4 points

-

3.21.24 Update: We have a new blog up here that outlines our new pricing, timeline and some new FAQs.

4 points

-

Hey team! I have published another RomM CA template now. (It took a couple of days to appear / publish in CA, please excuse the delay.) When you are performing a fresh install, you will be presented with 2 options. Please select 'latest' in the option list to get the current v3.X, and the template will have all the current variables available for you. Note: v3.X requires a MariaDB container, which is quick to add in Unraid CA. FYI: @Green Dragon - please notify the Discord community if you feel it's appropriate.

4 points

-

After another 1.5 hrs of searching I finally managed to stumble across some more info, from user "frr" on superuser: https://superuser.com/questions/570110/how-to-make-the-linux-framebuffer-console-narrower

It really is as simple as adding video=<hres>x<vres>@<refresh> to the sysconfig! e.g. video=1600x1000@60

Leaving this hoping to help someone else in future as this took me forever! Unraid version 6.12.9.

3 points
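The parameter format above can be built and checked from a terminal. This is only a sketch: both function names are made up, and the assumption is that the parameter ends up on the kernel command line (on Unraid, boot parameters normally sit on the append line of the syslinux configuration on the flash drive, which is presumably what "sysconfig" refers to).

```shell
# Hypothetical helpers.
# video_param HRES VRES REFRESH: build the kernel parameter in the form
# video=<hres>x<vres>@<refresh> described in the post.
video_param() {
    echo "video=${1}x${2}@${3}"
}

# current_video_param [FILE]: print any video= parameter found in the given
# kernel command line file (defaults to /proc/cmdline on Linux).
current_video_param() {
    grep -o 'video=[^ ]*' "${1:-/proc/cmdline}"
}
```

After rebooting with the new setting, running `current_video_param` with no arguments should print the same string that `video_param 1600 1000 60` produces if the parameter was picked up.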

-

We use Keepass at work. Their browser plugin doesn't work. That, and the slow performance of the server, has now moved us, after I think 5-10 years or so, to switch to another password manager. I can recommend Bitwarden (Vaultwarden).

3 points

-

No. The whole purpose of the parity swap procedure is to replace a parity drive with a larger one and then rebuild the contents of the emulated drive onto the old parity drive that is replacing the disabled drive. It works in two phases, both of which have to run to completion without interruption if you do not want to have to restart from the beginning.

The first phase copies the contents of the old parity drive onto the new (larger) parity drive. During this phase the array is never available.

When that completes, the standard rebuild process starts running to rebuild the emulated drive onto the old parity drive. If you are doing this in Normal mode the array is available but with reduced performance (as is standard with rebuilding). If running in Maintenance mode the array will become available only when the rebuild completes and you restart the array in Normal mode.

3 points

-

@anknv @Aer @rubicon @ippikiookami (and those I forgot 😅) I just pushed a new version that should fix issues with InvokeAI. They removed almost all parameters when launching the UI, so the file "parameters.txt" is no longer used and will be renamed. Instead, a default config.yaml file will be placed in the "03-invokeai" folder; you can modify it to suit your preferences. I had a very short time to test, I hope I didn't leave too many bugs 🤞 Fixes I made for auto1111 path and Kohya are now also in the latest version.

3 points

-

Hello, thanks to the posts here in this thread: the correct CPU pinning got rid of the stuttering on my HTPC during playback via MPC-HC / MADVR. Regards, Andi
3 points

-

3 points

-

@TheCyberQuake Update the Repository: entry with "rommapp/romm:3.1.0-rc.1" - thank you @zurdi15!!
3 points

-

3 points

-

I'll just finish out by saying that April 1 is an opportunity, NOT an obligation. There are plenty of people to be pranked who are not your paid customers running your mission critical software on OUR hardware. Especially a prank that appears, at first glance, to indicate that something has gone seriously wrong with that software or the backing infrastructure. The tech world seems to take April 1 as some sort of "global challenge day". But it's not. It has turned into a day I dread, because every company/site wants to prove how clever and "fun" they are - yet often prove the opposite. Prank your friends. Prank your family. Prank your co-workers. But in the future, please leave my server out of it.
3 points

-

I mean sure, humor is great. There's a time and a place. The time is definitely April 1st. The place? I'm not sure that should be on mission critical servers (or any servers) that people and businesses trust and rely on. Especially when they have paid for the infrastructure that runs it.
3 points

-

I'm waiting on the upstream update. If it doesn't happen by the end of this week, I shall have to compile it myself and include it in the build.
3 points

-

3 points

-

It's an April Fools thing, and incredibly inappropriate to put onto our servers.
3 points

-

The bug has since been reproduced in the BUG thread and will surely be fixed soon. Thanks to everyone who helped. I'm marking the thread as solved. Happy holidays to you all. 🐰
3 points

-

Because many users want this plugin, I have forked the template to allow the Apps Tab to install it (and for FCP to not complain about it being installed). I am unlocking this thread because, whether or not the author has decided to go in a different direction for his OS needs, this is still the support thread for this plugin, and the plugin itself is unchanged. Note that there is ZERO support for this plugin from the author (or from me), and please feel free to fork this plugin yourself if you have the capability to support and maintain it. I personally do not agree with the fundamental nature of what this plugin does, so I will not be maintaining it.
3 points

-

I don't think Unraid is widely used in the commercial space. From its inception, it has been designed and built to target the home market. Unraid first and foremost is a NAS. Business/enterprise customers looking for a NAS want very fast, robust, tried and true solutions and unfortunately, Unraid doesn't meet those criteria currently. Unraid's default storage scheme is good for ease of expansion while still providing redundancy, but it's no speed demon. They've added caching to help with writes to the array, but when it comes to reads it's still slow. That being said, by adding ZFS Unraid is closing the gap. Whether or not that's enough to make it an option for businesses remains to be seen. The other big factor in all of this is that a lot of business/enterprise customers typically want support contracts and, to be honest, I don't know if Lime Tech really wants to go down that path atm. Maybe if they grow enough to support it, but business/enterprise customers are different animals entirely compared to home users. The needs, wants and expectations of businesses are very different from those of home users. So I'd say for now, Unraid will still remain largely a solution targeted at home users, but who knows, that could change down the road.

Yes and no. As I've said in my post above, I do understand the frustrations and skepticism. However, Lime Tech did in fact take some of that feedback and implemented some changes before the actual licensing terms changed (ie: increasing Starter from 4 to 6 devices, and offering support updates to those who do not wish to renew the update licensing each year). Yet there wasn't even an acknowledgement of any kind from some of these people to at least say "ok Lime Tech is taking feedback into consideration and trying to alleviate customer concerns." I fully understand and I do not blame them one bit if they still feel skeptical and unsure, but to constantly be overly negative no matter what Lime Tech does to help alleviate concerns is just complaining, plain and simple. Edited to make points a little clearer.
3 points

-

3 points

-

This is not without precedent. When fiber optic Internet came to my neighborhood 20+ years ago, we were all promised free installation ($2700 actual cost) and speed increases as they became available, with just a monthly ISP payment. I was among the first to sign up. For 15 years they kept their promise. About 7 years ago we "legacy" users were told that our speed would be capped at 100 Mbps. If we wanted more, we had to renounce our legacy status and either pay $30 a month to the fiber optic provider (a consortium formed by 15 cities) plus the ISP fee, or pay $2700 for the "installation" and skip the $30 a month fee. I have no crystal ball and have no idea what will happen in the future with legacy Unraid licences but, given their transparency up to this point, I don't think they will start penalizing legacy users until they pay up.
3 points

-

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+- |.|.|.|.|.|.|o|o|o|o|o|o|O|O|O|O|O|O|0|0|0|0|0|0|0|0|0|0|O|O|O|O|O|O|o|o|o|o|o|o||.|.|.|.|.|.| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+- ██████╗░███████╗██████╗░░█████╗░██████╗░███╗░░██╗ ██╔══██╗██╔════╝██╔══██╗██╔══██╗██╔══██╗████╗░██║ ██████╔╝█████╗░░██████╦╝██║░░██║██████╔╝██╔██╗██║ ██╔══██╗██╔══╝░░██╔══██╗██║░░██║██╔══██╗██║╚████║ ██║░░██║███████╗██████╦╝╚█████╔╝██║░░██║██║░╚███║ ╚═╝░░╚═╝╚══════╝╚═════╝░░╚════╝░╚═╝░░╚═╝╚═╝░░╚══╝ ░█████╗░██████╗░███████╗░██╗░░░░░░░██╗ ██╔══██╗██╔══██╗██╔════╝░██║░░██╗░░██║ ██║░░╚═╝██████╔╝█████╗░░░╚██╗████╗██╔╝ ██║░░██╗██╔══██╗██╔══╝░░░░████╔═████║░ ╚█████╔╝██║░░██║███████╗░░╚██╔╝░╚██╔╝░ ░╚════╝░╚═╝░░╚═╝╚══════╝░░░╚═╝░░░╚═╝░░ +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+- |.|.|.|.|.|.|o|o|o|o|o|o|O|O|O|O|O|O|0|0|0|0|0|0|0|0|0|0|O|O|O|O|O|O|o|o|o|o|o|o||.|.|.|.|.|.| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+- 🅟🅡🅞🅤🅓🅛🅨 🅟🅡🅔🅢🅔🅝🅣🅢 Docker tag: 8.1.113-unraid NEW: Current recommended Home User tag: 11notes/unifi:8.1.113-unraid NEW: Current Company/Corporate recommended tag: 11notes/unifi:8.1.113-unraid Current Critical Infrastructure (no downtime) tag: 11notes/unifi:7.5.187-unraid3 points

-

I'm still a bit green behind the ears on this myself, but as far as I understand it, this depends entirely on how the image of the container you want to start is built. Pretty much all of the Docker containers available in Community Applications are already built to listen to the PUID and PGID variables (and often UMASK as well, when the software in the container handles files, so that you can control the permissions on the files it creates). What the variables are then used for depends on what happens in the container's executing code. For example, a Dockerfile could use the PUID and PGID environment variables to fix the user and group ID of a Linux user that is created inside the container and runs further processes there. Especially when volumes are passed through from the host system to the container as persistent storage, all files that this Linux user creates inside those volumes get its own user and group ID by default. So it makes sense to use ID 99 for the user and 100 for the group inside the container, because on the Unraid host these stand for the user "Nobody" and the group "Users". That makes it easier to work with these files on the Unraid host itself, without having to operate as the root user in Unraid.

And what if you have ended up with a Docker container that doesn't use these environment variables at all? It may be that the container's code does not create a new user inside the container, but simply runs as the container's default user, which would then be "root" (ID = 0). You may then be able to help yourself with the "--user=99:100" flag in the Extra Parameters, which controls the IDs of the default user. That at least ensures that everything the container's default user creates in the way of files gets these IDs. That can work, but it doesn't have to. In particular, switching away from the container's "root" user can mean that it is suddenly no longer allowed to start certain processes ("root" may do anything, after all, but the flag means this user is no longer "root"). Then the container crashes and stops running.

Ultimately, it depends entirely on what kind of container you have and what it does during startup. The proper Unraid way is support for the PUID and PGID variables, but the container's code has to provide for that. Simply slapping PUID/PGID onto a container as environment variables is far from a guarantee that it will do anything with them.
3 points
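To make the two cases concrete, here is a minimal sketch that only assembles the docker run arguments for each one. The function name, the honors_puid_pgid switch and the image name "example/image" are made up for illustration. Whether a given image really evaluates PUID/PGID has to be checked in its documentation, and on Unraid the same values would normally be entered in the container template (variables or Extra Parameters) rather than on a command line.

```python
import shlex


def docker_run_args(image, uid=99, gid=100, honors_puid_pgid=True, umask=None):
    """Assemble docker run arguments for the two cases described above.

    honors_puid_pgid=True:  the image reads PUID/PGID (and maybe UMASK)
                            itself, so they are passed as environment
                            variables.
    honors_puid_pgid=False: the image ignores those variables, so the
                            default user is overridden with --user.
                            This may break images that need root.
    """
    args = ["docker", "run", "-d"]
    if honors_puid_pgid:
        args += ["-e", f"PUID={uid}", "-e", f"PGID={gid}"]
        if umask is not None:
            args += ["-e", f"UMASK={umask}"]
    else:
        args.append(f"--user={uid}:{gid}")
    args.append(image)
    return args


if __name__ == "__main__":
    # "example/image" is a placeholder, not a real recommendation.
    print(shlex.join(docker_run_args("example/image")))
    print(shlex.join(docker_run_args("example/image", honors_puid_pgid=False)))
```

The defaults 99 and 100 correspond to the "Nobody" user and "Users" group on the Unraid host mentioned above.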

-

ARC will be ready for the next stable 👍
3 points

-

3 points

-

3 points
