helpermonkey

-

Posts: 214

Posts posted by helpermonkey

-

On 12/28/2022 at 4:29 PM, Heppie said:

It's the DNS servers that are causing issues. I changed them to 8.8.8.8 and 8.8.4.4 and all is working well.

Where do you change the DNS servers for this?
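To confirm which DNS servers a container is actually using before and after a change like this, you can read the `nameserver` lines from its `/etc/resolv.conf`. Below is a minimal sketch with a hypothetical helper name (`list_nameservers` is not part of Unraid or Docker). It assumes the container writes its DNS settings to `/etc/resolv.conf`, as most Linux-based images do.

```shell
#!/bin/sh
# Hypothetical helper (not part of Unraid or Docker): print one DNS server
# per line from resolv.conf-style text read on standard input.
list_nameservers() {
  # Keep only "nameserver <address>" lines; comments, "search" and
  # "options" lines are skipped.
  awk '$1 == "nameserver" { print $2 }'
}

# Example (container name taken from this thread; requires Docker):
#   docker exec binhex-delugevpn cat /etc/resolv.conf | list_nameservers
```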

-

2 minutes ago, tjb_altf4 said:

That's correct

thanks!

-

12 minutes ago, tjb_altf4 said:

It's because your containers are using another container for networking, i.e. delugevpn.

When that main container gets updated the ID changes so it breaks subsequent updates of the others.

There was supposed to be a possible workaround coming in Unraid, but you could also try the rebuild-dndc container.

Thanks for that tip. A question about the instructions for rebuild-dndc:

https://github.com/elmerfdz/rebuild-dndc

On step 2, where it talks about ... `docker network create container:master_container_name`, do I need to change `master_container_name` to `binhex-delugevpn`?
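To see the dependency tjb_altf4 describes, you can ask Docker which network a container is attached to and whether the container it borrows its network from still exists. A minimal sketch, assuming a running Docker daemon and the container names from this thread (`jackett`, `binhex-delugevpn`); the function names are hypothetical. On the Docker versions I'm familiar with, a `--net=container:NAME` setting is stored with the parent's container ID, which would explain why recreating the parent breaks the others, but treat that as an assumption and check it with `docker inspect` on your own system.

```shell
#!/bin/sh
# Hypothetical helpers; check_network_parent assumes a running Docker daemon.

# Print the container named in a NetworkMode value such as
# "container:binhex-delugevpn"; print nothing for bridge, host or custom networks.
network_parent() {
  case "$1" in
    container:*) printf '%s\n' "${1#container:}" ;;
  esac
}

# Report whether the container that "$1" borrows its network from still exists.
check_network_parent() {
  mode=$(docker inspect --format '{{.HostConfig.NetworkMode}}' "$1") || return 1
  parent=$(network_parent "$mode")
  if [ -z "$parent" ]; then
    echo "$1: own network ($mode)"
  elif docker inspect "$parent" >/dev/null 2>&1; then
    echo "$1: network parent $parent exists"
  else
    echo "$1: network parent $parent no longer exists; recreate $1"
    return 1
  fi
}

# Example (container name from this thread):
#   check_network_parent jackett
```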

-

19 minutes ago, JGNiDK said:

Ahh... this is what you deleted. And then what? Rebooted?

Just deleting them is sufficient.

-

Still having this problem. Is there a paid support service available? This is quite problematic.

-

Just thought I'd throw this back up to the top.

-

Just a bump to see how to get this fixed.

-

Whenever an update for one of my docker images becomes available, my Unraid installation ends up with orphan images, which in turn cause high image disk utilization.

The only solution I've found is to log in every few days and delete the orphan images manually.

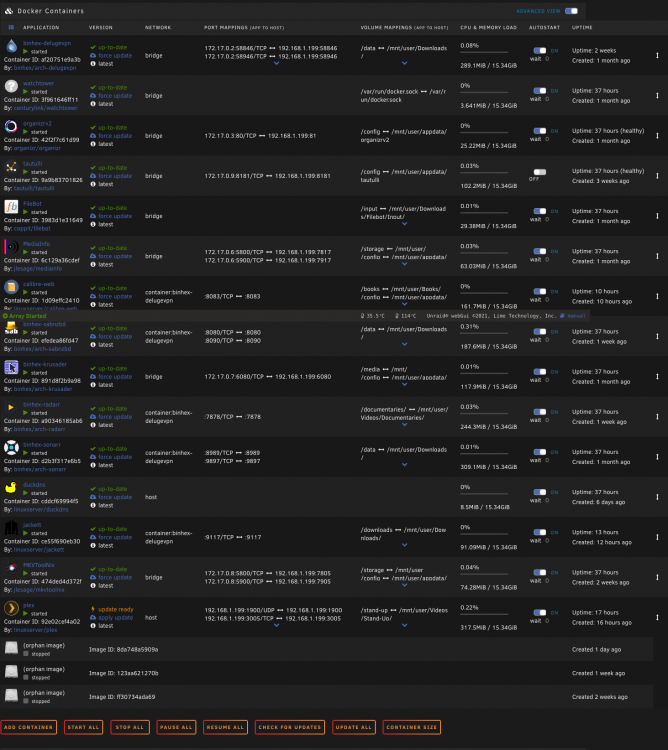

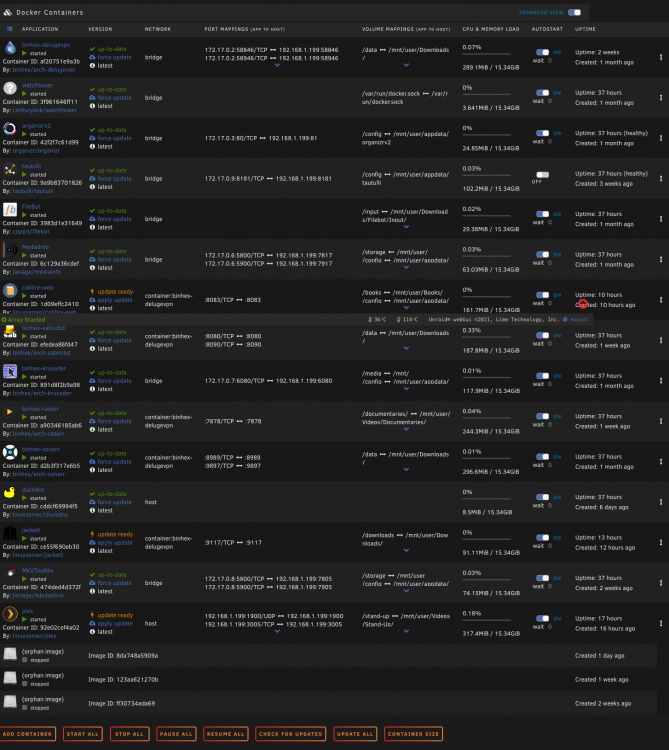

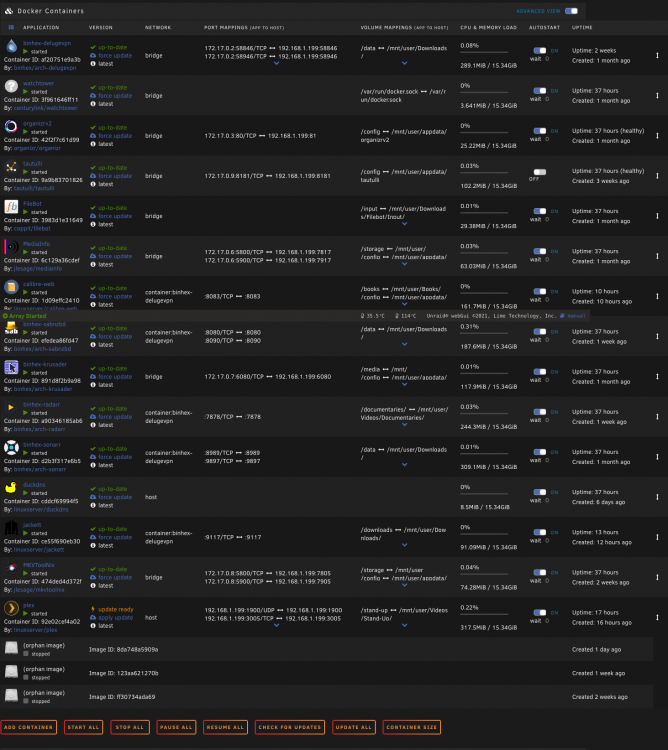

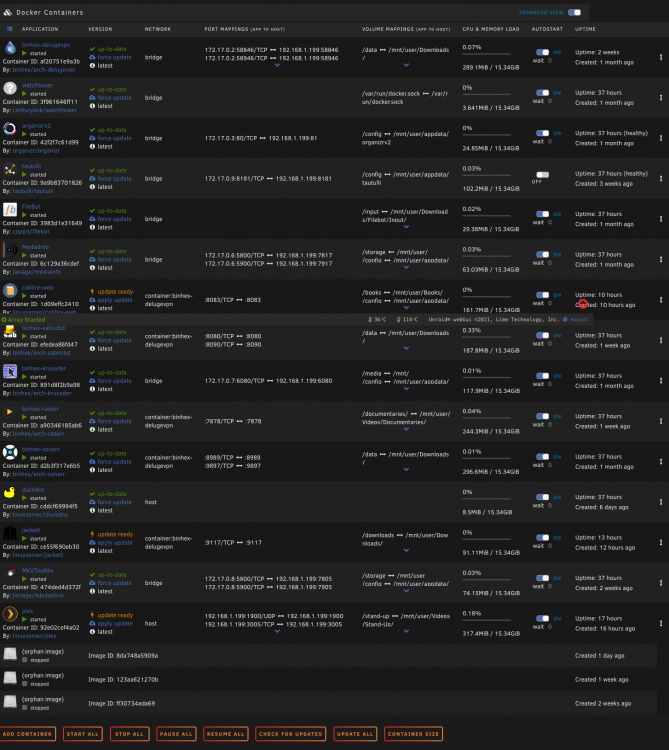

Attached are my diagnostics, as well as a notification list and my docker page. I deleted those orphan images and applied the updates after collecting the attached diagnostics. Is it possible this is a bug?

I have mentioned this problem here in the past but we have not been able to uncover a solution. Any suggestions appreciated.
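For the manual cleanup described above, the Docker CLI can do the deleting. A minimal sketch, assuming a running Docker daemon and that Unraid's "orphan image" label corresponds to Docker's dangling images (untagged images left behind once a newer pull takes over the `:latest` tag); the function names are hypothetical. This only automates the cleanup; it does not explain what is pulling the new images.

```shell
#!/bin/sh
# Hypothetical helpers; assume a running Docker daemon and that Unraid's
# "orphan images" are Docker's dangling (untagged) images.

# Print the IDs of dangling images.
list_orphans() {
  docker image ls --filter dangling=true --quiet
}

# Remove dangling images that no container is using.
# --force skips docker's interactive y/N confirmation.
prune_orphans() {
  count=$(list_orphans | wc -l | tr -d ' ')
  echo "found $count orphan image(s)"
  if [ "$count" -gt 0 ]; then
    docker image prune --force
  fi
}
```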

-

Rather than start a new thread, I thought I'd add to this one. For those who see this post, here's a short summary of my situation:

Whenever my server finds an update for an installed docker, I end up with an orphaned docker image. These dockers are not automatically updating. I have suggested it might be a bug, but I'm mindful that I am not technically capable of determining that.

Any suggestions on things to try would be appreciated.

-

@Squid, would you happen to have any thoughts?

-

16 hours ago, trurl said:

I'm out of ideas for now

Roger! Thanks for all your help to this point.

-

Would you have any thoughts on this? I was hoping some others might help take the weight off your shoulders.

I left the server alone for a few days, came back today, and there were 7 orphans with 5 images needing updates applied. I'm curious that the Total Data Pulled is always 0 B for any docker... Is this unrelated to the size of the file, or is it because the data is downloaded ahead of time and then the update is applied, so the docker run doesn't show the download? (Or something else? Again, out of my depth here.)
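On the 0 B question: `Status: Image is up to date for ...` is the message `docker pull` prints when the local copy of that tag already matches the registry, so nothing is downloaded at update time. The hypothetical helper below extracts the image name, the pull status and the data total from an update log like the ones pasted in this thread, which makes it easier to compare several updates side by side. It is a sketch that assumes the log wording stays exactly as shown in these posts.

```shell
#!/bin/sh
# Hypothetical helper: summarize an Unraid container-update log passed as $1.
# Prints "<image> <status> <data pulled>", where status is:
#   already-local  docker reported the image was already up to date locally
#   downloaded     docker reported it fetched a newer image
#   unknown        neither message was found
summarize_update_log() {
  log=$1
  image=$(printf '%s\n' "$log" | sed -n 's/.*Pulling image: \([^ ]*\).*/\1/p' | head -n 1)
  case "$log" in
    *"Status: Image is up to date"*) status="already-local" ;;
    *"Status: Downloaded newer image"*) status="downloaded" ;;
    *) status="unknown" ;;
  esac
  pulled=$(printf '%s\n' "$log" | sed -n 's/.*TOTAL DATA PULLED: \([0-9.]* [A-Za-z]*\).*/\1/p' | head -n 1)
  printf '%s %s %s\n' "$image" "$status" "$pulled"
}
```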

-

Okay, so when I logged in today, you will notice that I had 3 orphans and one image saying it needed to be updated:

After hitting "check for updates", there were indeed 3 that had updates ready.

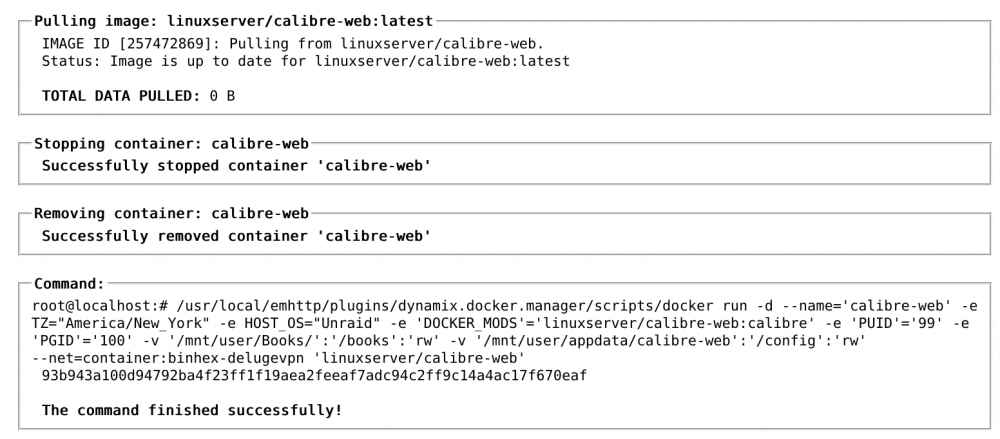

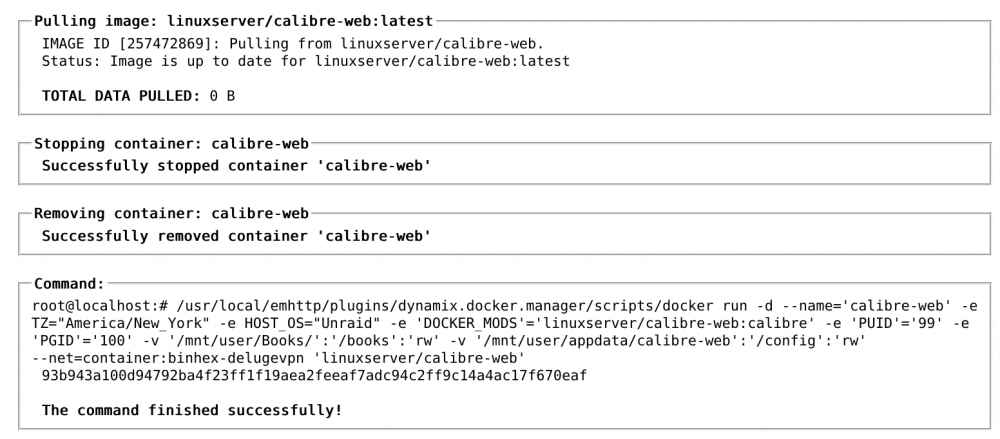

I deleted the orphan images, applied the updates and took this screen cap of the docker run for Calibre-Web...

I'm guessing it's tied to the way my containers are updating. I haven't changed any settings, and I have tried to show all the configurations in previous posts. Any suggestions?

-

9 hours ago, trurl said:

Do the orphans get created when the backup runs?

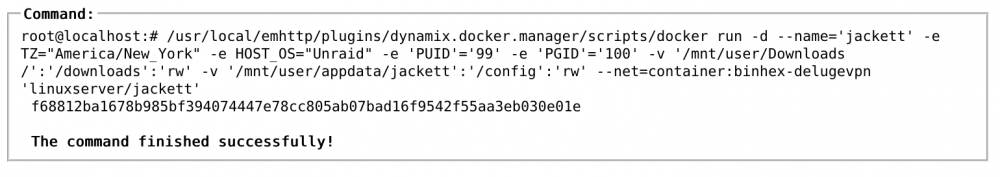

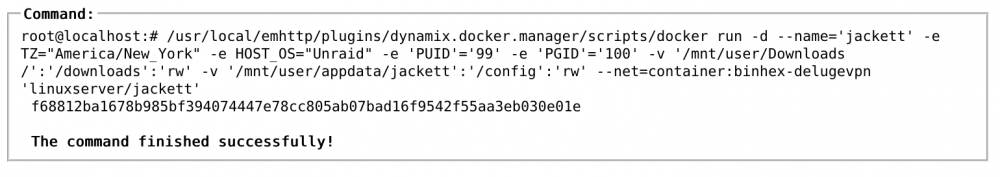

They don't appear to be. Okay, so interestingly enough, I just checked and a new orphan had appeared. I ran "check for updates" and Jackett had an update... Is it normal for a docker to have an update 3 days in a row? Here's the docker run from that install...

This is really strange, and I appreciate your continued help on it.
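To tell whether a daily update really brings a different image, one option is to record the local image's build time and registry digest each day and compare the entries. A minimal sketch with hypothetical function names, assuming a running Docker daemon and an image that was pulled from a registry (so `.RepoDigests` is not empty); `.Created` and `.RepoDigests` are fields of `docker image inspect` output.

```shell
#!/bin/sh
# Hypothetical helpers; image_fingerprint assumes a running Docker daemon.

# Print "<build time> <registry digest>" for the local copy of image "$1".
image_fingerprint() {
  docker image inspect --format '{{.Created}} {{index .RepoDigests 0}}' "$1"
}

# Compare two saved fingerprint lines by digest (second field) and print
# "changed" or "unchanged".
compare_fingerprints() {
  old_digest=$(printf '%s\n' "$1" | awk '{ print $2 }')
  new_digest=$(printf '%s\n' "$2" | awk '{ print $2 }')
  if [ "$old_digest" = "$new_digest" ]; then echo unchanged; else echo changed; fi
}

# Example (the file path is only an example):
#   image_fingerprint linuxserver/jackett:latest >> /tmp/jackett-digests.txt
```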

-

1 hour ago, trurl said:

If it is a bug it hasn't been reported by anyone else. I wonder if searching outside in the larger world of docker would find anything.

Yeah, I am all about using Google and forum search to turn up stuff, but if you don't know what to search for, that severely limits what I'm able to find. Is it possible this is tied to a plugin that is somehow checking for updates surreptitiously? Here are my plugins...

-

Okay, so some more information for you: I logged into my server a few minutes ago and saw that there were again 2 new orphan images. I then compared the IDs to the ones from the screenshot a few posts back, and they are indeed different (not sure if that's surprising or not). I then ran "check for updates" and found that two of the containers needed to be updated... so perhaps this is not a hacker and is in fact tied to some sort of "bug" in my system?

Here are the docker runs:

Pulling image: linuxserver/duckdns:latest IMAGE ID [1600041090]: Pulling from linuxserver/duckdns. Status: Image is up to date for linuxserver/duckdns:latest TOTAL DATA PULLED: 0 B Stopping container: duckdns Successfully stopped container 'duckdns' Removing container: duckdns Successfully removed container 'duckdns' Command: root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='duckdns' --net='host' -e TZ="America/New_York" -e HOST_OS="Unraid" -e 'SUBDOMAINS'='REMOVEDBYME' -e 'TOKEN'='REMOVEDBYME' -e 'PUID'='99' -e 'PGID'='100' 'linuxserver/duckdns' cddcf69994f595a1ce33e8d26098396b65978d772d49307284c96adf864282cf The command finished successfully!

And the other one was Jackett, which updated last night too, so I don't see much having changed there 🙂

-

1 minute ago, trurl said:

I'm beginning to wonder if some hacker isn't trying and failing to create containers on your system.

I would obviously say anything is possible, but I don't have any reason to believe that to be the case either... How would I "test" for that?
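One way to test that, and the plugin theory, is to ask the Docker daemon which image pulls it has seen recently. A sketch assuming a running Docker daemon; the function names are hypothetical. The daemon only keeps a limited backlog of past events in memory, so this is best run soon after a new orphan appears. It lists pulls, not who started them, but pulls at times when nobody clicked update would suggest something automated is doing the pulling.

```shell
#!/bin/sh
# Hypothetical helpers; recent_pulls assumes a running Docker daemon.

# Print "<unix time> <image>" for each image pull the daemon still remembers
# from the last $1 (a duration such as 24h or 48h; default 24h).
recent_pulls() {
  docker events --since "${1:-24h}" --until "$(date +%s)" \
    --filter type=image --filter event=pull \
    --format '{{.Time}} {{.Actor.ID}}'
}

# Count pulls per image from recent_pulls-style lines on standard input,
# sorted by image name.
count_pulls() {
  awk '{ n[$2]++ } END { for (img in n) print n[img], img }' | sort -k2
}

# Example:
#   recent_pulls 48h | count_pulls
```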

-

3 minutes ago, trurl said:

And I presume you have no orphans as a result of those updates.

I do not.

-

Just now, trurl said:

Delete the orphans first, then yes, I mean the docker run.

Here ya go:

PLEX

Pulling image: linuxserver/plex:latest IMAGE ID [1008960680]: Pulling from linuxserver/plex. Status: Image is up to date for linuxserver/plex:latest TOTAL DATA PULLED: 0 B Stopping container: plex Successfully stopped container 'plex' Removing container: plex Successfully removed container 'plex' Command: root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='plex' --net='host' -e TZ="America/New_York" -e HOST_OS="Unraid" -e 'VERSION'='latest' -e 'NVIDIA_VISIBLE_DEVICES'='' -e 'TCP_PORT_32400'='32400' -e 'TCP_PORT_3005'='3005' -e 'TCP_PORT_8324'='8324' -e 'TCP_PORT_32469'='32469' -e 'UDP_PORT_1900'='1900' -e 'UDP_PORT_32410'='32410' -e 'UDP_PORT_32412'='32412' -e 'UDP_PORT_32413'='32413' -e 'UDP_PORT_32414'='32414' -e 'PUID'='99' -e 'PGID'='100' -v '/mnt/user/Videos/Films/':'/movies':'rw' -v '/mnt/user/Videos/TV/':'/tv':'rw' -v '/mnt/user/Music/':'/music':'rw' -v '':'/transcode':'rw' -v '/mnt/user/Videos/Stand-Up/':'/stand-up':'rw' -v '/mnt/user/Videos/Trailers/':'/Trailers':'rw' -v '/mnt/user/Videos/Documentaries/':'/documentaries/':'rw' -v '/mnt/user/Videos/Sports/':'/sports':'rw' -v '/mnt/user/appdata/plexmediaserver':'/config':'rw' 'linuxserver/plex' e151a5ff03fa1baa766e27264c11ea2e1c65b36babce67931be1e9d8bd3faaf9 The command finished successfully!

Jackett

Pulling image: linuxserver/jackett:latest IMAGE ID [166390777]: Pulling from linuxserver/jackett. Status: Image is up to date for linuxserver/jackett:latest TOTAL DATA PULLED: 0 B Stopping container: jackett Successfully stopped container 'jackett' Removing container: jackett Successfully removed container 'jackett' Command: root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='jackett' -e TZ="America/New_York" -e HOST_OS="Unraid" -e 'PUID'='99' -e 'PGID'='100' -v '/mnt/user/Downloads/':'/downloads':'rw' -v '/mnt/user/appdata/jackett':'/config':'rw' --net=container:binhex-delugevpn 'linuxserver/jackett' d60dce3d0f7f7c0fcd12e0f48a1da6711428a7e09501dd3f05c579d275d2bbdb The command finished successfully!

-

4 hours ago, trurl said:

Have you tried manually updating any dockers? Be sure to capture the output.

Have you done memtest?

I have not applied the updates yet. Should I do that before or after deleting the orphans? When you say capture the output, I presume you mean the docker run; if not, let me know what that is. I have not run memtest. My understanding is that you have to do it at startup, but I don't have a monitor anymore, so I'm not sure if that's a problem here or not.

-

7 hours ago, trurl said:

Did those orphans already exist before you disabled autoupdate?

nope.

-

19 hours ago, trurl said:

When you update or edit a container, it gets recreated. The old container becomes an orphan, but normally it gets deleted automatically.

Okay, so I disabled auto-update as detailed here:

And there are two orphaned images, and both Jackett & Plex have updates ready to apply...

-

1 minute ago, trurl said:

When you update or edit a container, it gets recreated. The old container becomes an orphan, but normally it gets deleted automatically.

Gotcha, thanks much. More to follow.

[Support] binhex - DelugeVPN

in Docker Containers

Posted

I have a slew of entries in this field in my config (I don't remember why, as I set this up years ago). Is it safe to delete all of those and just go with 1.0.0.1 and 8.8.8.8? I am happy to post all the addresses I have in that key field if that would help.
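If the field in question is the container's comma-separated DNS list (as far as I know, binhex's VPN images use a `NAME_SERVERS` variable for this, but treat that name as an assumption), a quick sanity check before trimming it is to split the value and make sure every entry looks like an IPv4 address. The helper name is hypothetical, and it only checks the format of each entry, not whether that server actually answers queries.

```shell
#!/bin/sh
# Hypothetical helper: split a comma-separated DNS list (for example the value
# of a NAME_SERVERS-style setting) into one address per line.
# Returns non-zero if any entry is not a dotted-quad IPv4 address.
split_name_servers() {
  printf '%s\n' "$1" | awk -F',' '
    {
      for (i = 1; i <= NF; i++) {
        ns = $i
        gsub(/[ \t]/, "", ns)          # tolerate spaces after commas
        if (ns == "") continue         # tolerate trailing or doubled commas
        if (ns ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/) print ns
        else { print "invalid entry: " ns > "/dev/stderr"; bad = 1 }
      }
    }
    END { exit (bad ? 1 : 0) }'
}

# Example with the two servers mentioned in the post:
#   split_name_servers "1.0.0.1,8.8.8.8"
```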