celborn

Posts posted by celborn

-

My unRAID server started locking up when my Windows 11 VM is running. The VM has a 7900 XT and two NVMe drives passed through.

I've attached my diagnostics. Please let me know what other info I can provide.

Also, here is my VM's XML:

<?xml version='1.0' encoding='UTF-8'?> <domain type='kvm'> <name>Celborn-PC</name> <uuid>b0c0c166-b109-bf4c-8bbb-4c5eb6148833</uuid> <description>Gaming VM Q35-7.1 20230403</description> <metadata> <vmtemplate xmlns="unraid" name="Windows 11" icon="Win10_Radeon.png" os="windowstpm"/> </metadata> <memory unit='KiB'>33554432</memory> <currentMemory unit='KiB'>33554432</currentMemory> <memoryBacking> <nosharepages/> <source type='memfd'/> <access mode='shared'/> </memoryBacking> <vcpu placement='static'>12</vcpu> <cputune> <vcpupin vcpu='0' cpuset='10'/> <vcpupin vcpu='1' cpuset='26'/> <vcpupin vcpu='2' cpuset='11'/> <vcpupin vcpu='3' cpuset='27'/> <vcpupin vcpu='4' cpuset='12'/> <vcpupin vcpu='5' cpuset='28'/> <vcpupin vcpu='6' cpuset='13'/> <vcpupin vcpu='7' cpuset='29'/> <vcpupin vcpu='8' cpuset='14'/> <vcpupin vcpu='9' cpuset='30'/> <vcpupin vcpu='10' cpuset='15'/> <vcpupin vcpu='11' cpuset='31'/> </cputune> <os> <type arch='x86_64' machine='pc-q35-7.1'>hvm</type> <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi-tpm.fd</loader> <nvram>/etc/libvirt/qemu/nvram/b0c0c166-b109-bf4c-8bbb-4c5eb6148833_VARS-pure-efi-tpm.fd</nvram> </os> <features> <acpi/> <apic/> <hyperv mode='custom'> <relaxed state='on'/> <vapic state='on'/> <spinlocks state='on' retries='8191'/> <vendor_id state='on' value='none'/> </hyperv> </features> <cpu mode='host-passthrough' check='none' migratable='on'> <topology sockets='1' dies='1' cores='6' threads='2'/> <cache mode='passthrough'/> <feature policy='require' name='topoext'/> </cpu> <clock offset='localtime'> <timer name='hypervclock' present='yes'/> <timer name='hpet' present='no'/> </clock> <on_poweroff>destroy</on_poweroff> <on_reboot>restart</on_reboot> <on_crash>restart</on_crash> <devices> <emulator>/usr/local/sbin/qemu</emulator> <disk type='block' device='disk'> <driver name='qemu' type='raw' cache='none'/> <source dev='/dev/disk/by-id/nvme-ADATA_SX8200PNP_2K1220143762'/> <target dev='hdc' bus='virtio'/> 
<boot order='1'/> <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/> </disk> <disk type='block' device='disk'> <driver name='qemu' type='raw' cache='none'/> <source dev='/dev/disk/by-id/nvme-INTEL_SSDPEKNU020TZ_PHKA202105CQ2P0C'/> <target dev='hdd' bus='virtio'/> <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/> </disk> <controller type='usb' index='0' model='qemu-xhci' ports='15'> <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/> </controller> <controller type='pci' index='0' model='pcie-root'/> <controller type='pci' index='1' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='1' port='0x10'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0' multifunction='on'/> </controller> <controller type='pci' index='2' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='2' port='0x11'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x1'/> </controller> <controller type='pci' index='3' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='3' port='0x12'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x2'/> </controller> <controller type='pci' index='4' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='4' port='0x13'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x3'/> </controller> <controller type='pci' index='5' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='5' port='0x14'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x4'/> </controller> <controller type='pci' index='6' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='6' port='0x15'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x5'/> </controller> <controller type='pci' index='7' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='7' port='0x8'/> <address 
type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/> </controller> <controller type='pci' index='8' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='8' port='0x9'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/> </controller> <controller type='pci' index='9' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='9' port='0xa'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/> </controller> <controller type='pci' index='10' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='10' port='0xb'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/> </controller> <controller type='pci' index='11' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='11' port='0xc'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/> </controller> <controller type='pci' index='12' model='pcie-root-port'> <model name='pcie-root-port'/> <target chassis='12' port='0xd'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/> </controller> <controller type='pci' index='13' model='pcie-to-pci-bridge'> <model name='pcie-pci-bridge'/> <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/> </controller> <controller type='virtio-serial' index='0'> <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/> </controller> <controller type='sata' index='0'> <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/> </controller> <interface type='bridge'> <mac address='52:54:00:f9:99:ba'/> <source bridge='br0'/> <model type='virtio'/> <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/> </interface> <serial type='pty'> <target type='isa-serial' port='0'> <model name='isa-serial'/> </target> </serial> <console type='pty'> <target type='serial' port='0'/> </console> <channel type='unix'> <target 
type='virtio' name='org.qemu.guest_agent.0'/> <address type='virtio-serial' controller='0' bus='0' port='1'/> </channel> <input type='tablet' bus='usb'> <address type='usb' bus='0' port='4'/> </input> <input type='mouse' bus='ps2'/> <input type='keyboard' bus='ps2'/> <tpm model='tpm-tis'> <backend type='emulator' version='2.0' persistent_state='yes'/> </tpm> <audio id='1' type='none'/> <hostdev mode='subsystem' type='pci' managed='yes'> <driver name='vfio'/> <source> <address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/> </source> <rom file='/mnt/user/isos/vbios/XFX-7900xt-20230401.rom'/> <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0' multifunction='on'/> </hostdev> <hostdev mode='subsystem' type='pci' managed='yes'> <driver name='vfio'/> <source> <address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/> </source> <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x1'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x046d'/> <product id='0xc049'/> </source> <address type='usb' bus='0' port='1'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x1038'/> <product id='0x1612'/> </source> <address type='usb' bus='0' port='2'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x1532'/> <product id='0x0529'/> </source> <address type='usb' bus='0' port='3'/> </hostdev> <memballoon model='none'/> </devices> </domain>

-

I stumbled through this and finally got the XFX 7900 XT passed through properly to a VM.

Here's what I've got running.

I'm booting Unraid 6.12rc2 in legacy mode (will test in UEFI later).

Here are my current boot settings, which isolate several of the 5955WX's CPU threads for the VM's exclusive use:

kernel /bzimage
append initrd=/bzroot iommu=pt amd_iommu=on nofb nomodeset initcall_blacklist=sysfb_init isolcpus=9-15,25-31
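As a rough sanity check, the `isolcpus` list can be compared against the host CPUs a VM pins its vCPUs to. This is a minimal sketch, assuming the `<vcpupin>` values from the Windows 11 VM XML posted elsewhere on this page belong to the same host; the helper names `parse_cpu_list` and `unisolated_pins` are made up for illustration.

```python
def parse_cpu_list(spec: str) -> set[int]:
    """Expand a kernel-style CPU list such as '9-15,25-31' into a set of ints."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-", 1)
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def unisolated_pins(isolcpus: str, pinned: list[int]) -> list[int]:
    """Return the pinned host CPUs that are NOT covered by the isolcpus list."""
    isolated = parse_cpu_list(isolcpus)
    return sorted(cpu for cpu in pinned if cpu not in isolated)


# Values copied from the posts: the isolcpus= argument and the cpuset values
# of the <vcpupin> elements (assumed to describe the same host).
ISOLCPUS = "9-15,25-31"
VM_PINS = [10, 26, 11, 27, 12, 28, 13, 29, 14, 30, 15, 31]

if __name__ == "__main__":
    # An empty list means every pinned CPU is inside the isolated set.
    print(unisolated_pins(ISOLCPUS, VM_PINS))
```

With these values, CPUs 9 and 25 are isolated but not pinned to the VM, which may or may not be intentional.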

Here is my GPU's IOMMU group, with the two PCIe devices I am passing through to the VM:

IOMMU group 13:
[1022:1482] 40:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
[1022:1483] 40:03.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
[1002:1478] 44:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Upstream Port of PCI Express Switch (rev 10)
[1002:1479] 45:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Downstream Port of PCI Express Switch (rev 10)
*[1002:744c] 46:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Navi 31 [Radeon RX 7900 XT/7900 XTX] (rev cc)
*[1002:ab30] 46:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Device ab30

I set up a new VM as Q35-7.1 (the previous VM was i440fx), passing through the same bare-metal SSD.

I installed a fresh copy of Windows 11 Pro on the bare-metal SSD from the old VM.

At first I didn't assign a GPU to the VM and did the Win11 install via noVNC. Once the install was finished, I added the 7900 XT in place of the virtual display and added its sound device.

Here is the GPU portion of the XML from my fresh Win11 Pro install:

<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x46' slot='0x00' function='0x0'/>
  </source>
  <alias name='hostdev0'/>
  <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x46' slot='0x00' function='0x1'/>
  </source>
  <alias name='hostdev1'/>
  <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
</hostdev>

Hopefully this helps someone down the line who is having the same issues I was, and makes things easier for them. I spent 4-5 hours a day for 5 days straight tweaking settings, rebooting the server, rinse and repeat.

-

On 3/31/2023 at 7:59 PM, shpitz461 said:

Your GPU is on the same IOMMU group with other devices, you have to get it isolated to its own group (both xx.0 for video and xx.1 for audio).

If you have an option in the BIOS to enable SR-IOV try that. If that doesn't help you'll have to go the ACS-Override path...

I've tried SR-IOV and it hasn't helped.

Also, I enabled the ACS override (downstream) and each of the devices ended up in its own IOMMU group, but passing through the VGA and audio devices to the Win10 VM just got me a black screen on the monitor.

I was able to boot the VM's Windows install on bare metal with the 7900 XT, and it worked flawlessly.

The question I have is: I keep reading that everyone is passing all 4 devices through, but I'm only seeing two (23:00.0 & 23:00.1). What does your graphics card look like in the device view?

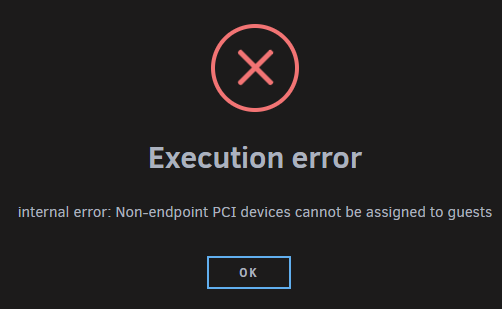

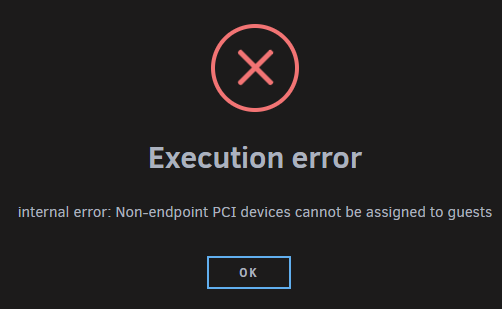

Also, when I try to manually pass through 21:00.0 & 22:00.0, I get a "Non-endpoint cannot be passed through to the guest" error.
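That error suggests the devices being added are bridges rather than endpoints. Here is a minimal sketch that splits a device listing into bridges and endpoints. It uses the IOMMU group 13 listing from one of the other posts as sample data and assumes that any class name containing "bridge" marks a non-endpoint. That is a heuristic based on the listing text, not a check of the actual PCI header type, and `split_endpoints` is a made-up helper name.

```python
import re

# Sample lines copied from the IOMMU group 13 listing in another post.
GROUP_13 = """\
[1022:1482] 40:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
[1022:1483] 40:03.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
[1002:1478] 44:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Upstream Port of PCI Express Switch (rev 10)
[1002:1479] 45:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Downstream Port of PCI Express Switch (rev 10)
*[1002:744c] 46:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Navi 31 [Radeon RX 7900 XT/7900 XTX] (rev cc)
*[1002:ab30] 46:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Device ab30
"""

# [vendor:device] bus:slot.function Class name: description
LINE_RE = re.compile(
    r"^\*?\[(?P<ids>[0-9a-f]{4}:[0-9a-f]{4})\]\s+"
    r"(?P<addr>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+"
    r"(?P<cls>[^:]+):",
    re.MULTILINE,
)


def split_endpoints(listing: str) -> tuple[list[str], list[str]]:
    """Return (endpoints, bridges) as lists of PCI addresses.

    Heuristic: a device whose class name contains 'bridge' is treated as a
    non-endpoint; everything else is treated as an endpoint.
    """
    endpoints: list[str] = []
    bridges: list[str] = []
    for match in LINE_RE.finditer(listing):
        target = bridges if "bridge" in match["cls"].lower() else endpoints
        target.append(match["addr"])
    return endpoints, bridges


if __name__ == "__main__":
    endpoints, bridges = split_endpoints(GROUP_13)
    print("candidates for passthrough:", endpoints)
    print("bridges (leave on the host):", bridges)
```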

-

On 1/4/2023 at 6:52 PM, Ancalagon said:

I'm using the original vbios dump, no edits.

Yes, I am binding all 4 devices to vfio. After adding them to the VM, I'm manually editing the XML to put them on the same bus and slot as a multifunction device.

Here's the GPU portion of my XML:

<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x0c' slot='0x00' function='0x0'/>
  </source>
  <alias name='hostdev0'/>
  <rom file='/mnt/user/domains/GPU ROM/AMD.RX7900XT.022.001.002.008.000001.rom'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0' multifunction='on'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x0c' slot='0x00' function='0x1'/>
  </source>
  <alias name='hostdev1'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x0c' slot='0x00' function='0x2'/>
  </source>
  <alias name='hostdev2'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x2'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x0c' slot='0x00' function='0x3'/>
  </source>
  <alias name='hostdev3'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x3'/>
</hostdev>
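The "same bus and slot as a multifunction device" layout described in the quote can be checked mechanically. The sketch below groups `<hostdev>` entries by their host bus and slot and reports how they land in the guest. The two sample fragments are trimmed versions of XML from these posts, and `layout_notes` is a hypothetical helper. A note from it is only an observation, not necessarily an error: the split-bus layout is the one reported as working elsewhere on this page.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Trimmed <hostdev> fragments modelled on the posts: one keeps both GPU
# functions on a single guest slot, the other uses two separate guest buses.
SAME_SLOT = """
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source><address domain='0x0000' bus='0x0c' slot='0x00' function='0x0'/></source>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0' multifunction='on'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source><address domain='0x0000' bus='0x0c' slot='0x00' function='0x1'/></source>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
</hostdev>
"""

SPLIT_BUSES = """
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source><address domain='0x0000' bus='0x46' slot='0x00' function='0x0'/></source>
  <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source><address domain='0x0000' bus='0x46' slot='0x00' function='0x1'/></source>
  <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
</hostdev>
"""


def layout_notes(fragment: str) -> list[str]:
    """Describe how functions of one host device are laid out in the guest."""
    # Wrap the fragment so that several sibling <hostdev> elements parse.
    root = ET.fromstring(f"<devices>{fragment}</devices>")
    by_host_slot = defaultdict(list)
    for hostdev in root.findall("hostdev"):
        if hostdev.get("type") != "pci":
            continue
        src = hostdev.find("source/address")  # address on the host
        dst = hostdev.find("address")         # address inside the guest
        if src is None or dst is None:
            continue
        by_host_slot[(src.get("bus"), src.get("slot"))].append(dst)

    notes: list[str] = []
    for (bus, slot), guests in by_host_slot.items():
        if len(guests) < 2:
            continue
        guest_slots = {(g.get("bus"), g.get("slot")) for g in guests}
        if len(guest_slots) > 1:
            notes.append(
                f"host {bus}:{slot} functions are spread over "
                f"{len(guest_slots)} guest slots"
            )
            continue
        fn0 = [g for g in guests if g.get("function") == "0x0"]
        if not fn0 or fn0[0].get("multifunction") != "on":
            notes.append(
                f"host {bus}:{slot} shares a guest slot but function 0x0 "
                "lacks multifunction='on'"
            )
    return notes


if __name__ == "__main__":
    print(layout_notes(SAME_SLOT))
    print(layout_notes(SPLIT_BUSES))
```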

Have you been able to boot the VM after booting the host in legacy CSM mode?

When you say that you are "binding all 4 devices to vfio" are you referring to your equivalent of?

Also, when I map all 4 of the devices to the Win10 VM, I get the below error.

Here is the GPU portion of my XML:

<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
  </source>
  <rom file='/mnt/user/isos/vbios/RX7900XT-20230331.rom'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x01' function='0x0' multifunction='on'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
  </source>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x01' function='0x1'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
  </source>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x01' function='0x2'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
  </source>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x01' function='0x3'/>
</hostdev>

Also, FWIW, I am running an XFX 7900 XT on a WRX80 Creator V2 with a 5955WX CPU on Unraid 6.12 rc2.

-

I just saw that my /var/log is full and I can't figure out why.

Hopefully one of the smarter individuals here can point me in the right direction.

Thank you in advance

-

Well, I think I fixed(ish) it by disabling Docker in Unraid and re-enabling it. When Docker went through its startup, it rebuilt all the iptables rules and everything is talking again.

Thank you for letting me vent!

-

Well, I think I borked myself accidentally: I flushed the iptables rules before I had a backup. Stupid noob mistake.

Can someone tell me the bare minimum iptables rules I would need to keep Unraid running with Docker containers and VMs, so this whole house of cards doesn't fall down? I would be forever grateful!

-

9 minutes ago, binhex said:

can you expand on this, are you using a custom bridge or macvlan, are any of them routed through another container?, do any of them use proxy's?.

Custom: br0. As far as I know, the containers aren't routed through other containers or proxies.

9 minutes ago, binhex said:

are you sure about that cidr notation?, see Q4:- https://github.com/binhex/documentation/blob/master/docker/faq/vpn.md

Sorry, it was 192.168.1.0/24.
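The CIDR question can be checked with Python's standard `ipaddress` module. This small sketch compares the corrected 192.168.1.0/24 with the 192.168.1.0/20 written in the other posts; `describe_cidr` is a made-up helper name.

```python
import ipaddress


def describe_cidr(cidr: str) -> str:
    """Report whether a CIDR string is a proper network address."""
    try:
        # strict=True rejects strings whose host bits are not all zero.
        net = ipaddress.ip_network(cidr, strict=True)
        return f"{cidr} is a valid network ({net.num_addresses} addresses)"
    except ValueError:
        # strict=False masks the host bits to find the enclosing network.
        net = ipaddress.ip_network(cidr, strict=False)
        return f"{cidr} has host bits set; the enclosing network is {net}"


if __name__ == "__main__":
    for candidate in ("192.168.1.0/24", "192.168.1.0/20"):
        print(describe_cidr(candidate))
```

With a /20 prefix, the network that contains 192.168.1.0 starts at 192.168.0.0, so 192.168.1.0/20 is not itself a network address.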

Here is a screenshot of my Radarr Docker settings for clarification.

-

I think something broke in my iptables rules, and now the Docker containers that don't use the bridge network type (they use br0 with a different IP address on the same 192.168.1.0/20 network) are no longer accessible.

Is there a way to revert to a previous version of the iptables rules, or just reset them to whatever the default would be?

Also, I'm not sure if this would cover it, but I do back up the USB and libvirt image with CA Backup / Restore Appdata.

-

2 hours ago, binhex said:

im not 100% sure on the question, but to be clear, you only need to specify web ui ports for ADDITIONAL_PORTS for containers that you are routing through one of the vpn images i produce, if you have a container that doesnt require a vpn then you dont need to change anything for that container

I guess the question would be, is there something done when the docker performs the "[debug] Waiting for iptables chain policies to be in place..." that would cause dockers that are assigned a different IP address (while on the same 192.168.1.0/20 network) to be no longer accessible?

-

49 minutes ago, binhex said:

correct on all accounts

Firstly, thank you for updating the docker and helping keep the community strong.

Secondly, would I need to do the same for dockers that are not using the same IP address and not using the VPN?

-

I was wondering if anyone else has had the same issue I am having. After installing and running this Docker container, I can no longer access any of my containers that don't use bridge network mode. That is quite an issue for my setup, and I would like to revert to my previously working setup.

Any help would be greatly appreciated, as I am not very familiar with setting up or using iptables on Unraid.

Below is the output of the following command:

iptables -L -v -n
Chain INPUT (policy ACCEPT 20812 packets, 5493K bytes)
pkts bytes target prot opt in out source destination
28M 18G LIBVIRT_INP all -- * * 0.0.0.0/0 0.0.0.0/0

Chain FORWARD (policy ACCEPT 25238 packets, 17M bytes)
pkts bytes target prot opt in out source destination
1888M 2261G LIBVIRT_FWX all -- * * 0.0.0.0/0 0.0.0.0/0
1888M 2261G LIBVIRT_FWI all -- * * 0.0.0.0/0 0.0.0.0/0
1888M 2261G LIBVIRT_FWO all -- * * 0.0.0.0/0 0.0.0.0/0
1888M 2261G DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0
1888M 2261G DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0
1084M 1363G ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED
7700 402K DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0
591M 88G ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0
14 1472 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

Chain OUTPUT (policy ACCEPT 18896 packets, 5692K bytes)
pkts bytes target prot opt in out source destination
27M 79G LIBVIRT_OUT all -- * * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER (1 references)
pkts bytes target prot opt in out source destination
40 2080 ACCEPT tcp -- !docker0 docker0 0.0.0.0/0 172.17.0.2 tcp dpt:8000
364 19136 ACCEPT tcp -- !docker0 docker0 0.0.0.0/0 172.17.0.3 tcp dpt:7878
654 34496 ACCEPT tcp -- !docker0 docker0 0.0.0.0/0 172.17.0.4 tcp dpt:8989
244 12736 ACCEPT tcp -- !docker0 docker0 0.0.0.0/0 172.17.0.5 tcp dpt:6789
0 0 ACCEPT tcp -- !docker0 docker0 0.0.0.0/0 172.17.0.6 tcp dpt:9117

Chain DOCKER-ISOLATION-STAGE-1 (1 references)
pkts bytes target prot opt in out source destination
591M 88G DOCKER-ISOLATION-STAGE-2 all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0
1888M 2261G RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER-ISOLATION-STAGE-2 (1 references)
pkts bytes target prot opt in out source destination
0 0 DROP all -- * docker0 0.0.0.0/0 0.0.0.0/0
591M 88G RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER-USER (1 references)
pkts bytes target prot opt in out source destination
1888M 2261G RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

Chain LIBVIRT_FWI (1 references)
pkts bytes target prot opt in out source destination
0 0 ACCEPT all -- * virbr0 0.0.0.0/0 192.168.122.0/24 ctstate RELATED,ESTABLISHED
0 0 REJECT all -- * virbr0 0.0.0.0/0 0.0.0.0/0 reject-with icmp-port-unreachable

Chain LIBVIRT_FWO (1 references)
pkts bytes target prot opt in out source destination
0 0 ACCEPT all -- virbr0 * 192.168.122.0/24 0.0.0.0/0
0 0 REJECT all -- virbr0 * 0.0.0.0/0 0.0.0.0/0 reject-with icmp-port-unreachable

Chain LIBVIRT_FWX (1 references)
pkts bytes target prot opt in out source destination
0 0 ACCEPT all -- virbr0 virbr0 0.0.0.0/0 0.0.0.0/0

Chain LIBVIRT_INP (1 references)
pkts bytes target prot opt in out source destination
0 0 ACCEPT udp -- virbr0 * 0.0.0.0/0 0.0.0.0/0 udp dpt:53
0 0 ACCEPT tcp -- virbr0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:53
0 0 ACCEPT udp -- virbr0 * 0.0.0.0/0 0.0.0.0/0 udp dpt:67
0 0 ACCEPT tcp -- virbr0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:67

Chain LIBVIRT_OUT (1 references)
pkts bytes target prot opt in out source destination
0 0 ACCEPT udp -- * virbr0 0.0.0.0/0 0.0.0.0/0 udp dpt:53
0 0 ACCEPT tcp -- * virbr0 0.0.0.0/0 0.0.0.0/0 tcp dpt:53
0 0 ACCEPT udp -- * virbr0 0.0.0.0/0 0.0.0.0/0 udp dpt:68
0 0 ACCEPT tcp -- * virbr0 0.0.0.0/0 0.0.0.0/0 tcp dpt:68

-
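A dump like this is easier to reason about once the chain headers are pulled out. The sketch below is an illustrative helper (`summarize_chains` is a made-up name) that lists each chain with either its default policy or its reference count. It only reads the `Chain ...` header text, so it works whether or not the line breaks survived.

```python
import re

# Matches "Chain NAME (policy ACCEPT ...)" and "Chain NAME (N references)".
CHAIN_RE = re.compile(
    r"Chain (?P<name>[\w-]+) "
    r"\((?:policy (?P<policy>\w+)[^)]*|(?P<refs>\d+) references)\)"
)

# A short excerpt of the output above, flattened the way it was pasted.
EXCERPT = (
    "Chain INPUT (policy ACCEPT 20812 packets, 5493K bytes) "
    "pkts bytes target prot opt in out source destination "
    "28M 18G LIBVIRT_INP all -- * * 0.0.0.0/0 0.0.0.0/0 "
    "Chain FORWARD (policy ACCEPT 25238 packets, 17M bytes) "
    "pkts bytes target prot opt in out source destination "
    "Chain DOCKER-USER (1 references) "
    "pkts bytes target prot opt in out source destination "
    "1888M 2261G RETURN all -- * * 0.0.0.0/0 0.0.0.0/0"
)


def summarize_chains(text: str) -> dict[str, str]:
    """Map each chain name to 'policy X' (built-in) or 'N references' (user chain)."""
    summary: dict[str, str] = {}
    for match in CHAIN_RE.finditer(text):
        if match["policy"] is not None:
            summary[match["name"]] = f"policy {match['policy']}"
        else:
            summary[match["name"]] = f"{match['refs']} references"
    return summary


if __name__ == "__main__":
    for name, info in summarize_chains(EXCERPT).items():
        print(f"{name}: {info}")
```

In the full dump, INPUT, FORWARD, and OUTPUT all show policy ACCEPT, so nothing is dropped by default policy in the filter table.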

On 7/9/2020 at 2:57 PM, Jerky_san said:

Btw if your wondering where you can get info on all this

Thank you that was a good read.

I ended up having to reset my BIOS (I messed up enabling UEFI to dual-boot off the VM's M.2 drive). Luckily, switching back to legacy had no negative effects on Unraid. I then created a 'new' Win10 VM with the old drives and, wouldn't you know it, the video card is acting just like it should and performing properly... *sigh* Not sure exactly which step 'fixed' the issue, but it's up and running now.

@Jerky_san thank you for your input. My brain hurts just a little from the added knowledge, but I enjoyed every minute of it.

-

Based on what you said, my additions should look like the below.

Update: added emulatorpin.

<cputune>
  <vcpupin vcpu='0' cpuset='6'/>
  <vcpupin vcpu='1' cpuset='18'/>
  <vcpupin vcpu='2' cpuset='7'/>
  <vcpupin vcpu='3' cpuset='19'/>
  <vcpupin vcpu='4' cpuset='8'/>
  <vcpupin vcpu='5' cpuset='20'/>
  <vcpupin vcpu='6' cpuset='9'/>
  <vcpupin vcpu='7' cpuset='21'/>
  <vcpupin vcpu='8' cpuset='10'/>
  <vcpupin vcpu='9' cpuset='22'/>
  <vcpupin vcpu='10' cpuset='11'/>
  <vcpupin vcpu='11' cpuset='23'/>
  <emulatorpin cpuset='6-11,18-23'/>
</cputune>
<resource>
  <partition>/machine</partition>
</resource>
<numatune>
  <memory mode='strict' nodeset='0,1'/>
  <memnode cellid='0' mode='strict' nodeset='0'/>
  <memnode cellid='1' mode='strict' nodeset='1'/>
</numatune>
<os>

and

<cpu mode='host-passthrough' check='none'>
  <topology sockets='1' dies='1' cores='6' threads='2'/>
  <cache mode='passthrough'/>
  <feature policy='require' name='topoext'/>
  <numa>
    <cell id='0' cpus='6-11' memory='4194304' unit='KiB'/>
    <cell id='1' cpus='18-23' memory='4194304' unit='KiB'/>
  </numa>
</cpu>

-

Just now, Jerky_san said:

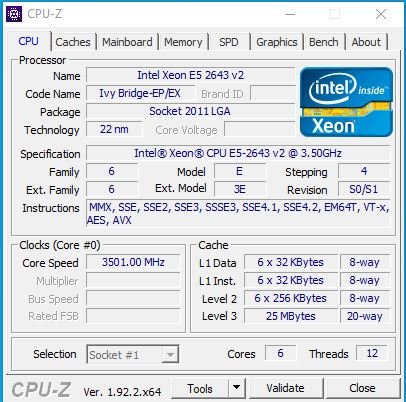

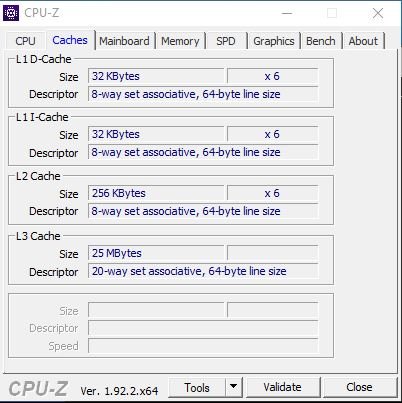

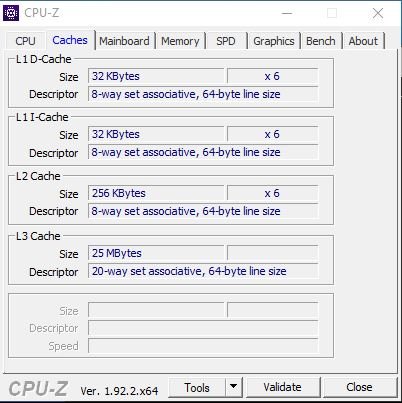

hmm I'd say if you could first make sure in CPUZ all the L caches appear correct(they should since it's an intel). Check GPUZ and make sure it's boosting properly when your running the passmark as well. Also I'd say run a CPUZ benchmark with and without the GPU passed through to see if the score changes at all. If so to what degree?

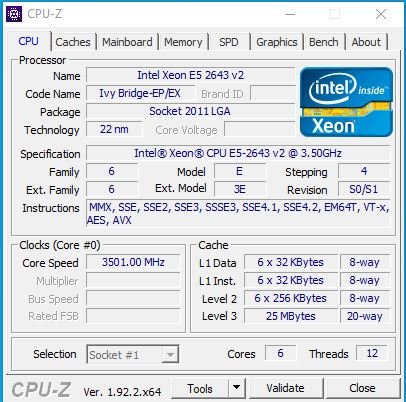

Here are the screenshots of CPU-Z and GPU-Z. It looks like the GPU clock speed peaks at 1905 MHz.

-

Also, in case it matters, here is the result of running numactl --hardware:

root@Tower:~# numactl --hardware
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 12 13 14 15 16 17
node 0 size: 32200 MB
node 0 free: 4389 MB
node 1 cpus: 6 7 8 9 10 11 18 19 20 21 22 23
node 1 size: 32253 MB
node 1 free: 31673 MB
node distances:
node 0 1
0: 10 21
1: 21 10

-
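The `numactl --hardware` output can be turned into a CPU-to-node map to see which NUMA node the VM's pinned CPUs sit on. This is a minimal sketch using the output above and the `<vcpupin>` values from the Gaming VM's XML; the helper names are illustrative.

```python
import re

# Output copied from the post (the distance table is omitted).
NUMACTL_OUTPUT = """\
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 12 13 14 15 16 17
node 0 size: 32200 MB
node 0 free: 4389 MB
node 1 cpus: 6 7 8 9 10 11 18 19 20 21 22 23
node 1 size: 32253 MB
node 1 free: 31673 MB
"""

# Host CPUs the VM's vCPUs are pinned to, in <vcpupin> order.
VM_PINS = [6, 18, 7, 19, 8, 20, 9, 21, 10, 22, 11, 23]


def cpu_to_node(output: str) -> dict[int, int]:
    """Build a {cpu: node} map from the 'node N cpus: ...' lines."""
    mapping: dict[int, int] = {}
    for match in re.finditer(r"node (\d+) cpus:((?: \d+)+)", output):
        node = int(match.group(1))
        for cpu in match.group(2).split():
            mapping[int(cpu)] = node
    return mapping


def nodes_for(pins: list[int], output: str = NUMACTL_OUTPUT) -> set[int]:
    """Return the set of NUMA nodes that the pinned CPUs belong to."""
    mapping = cpu_to_node(output)
    return {mapping[cpu] for cpu in pins}


if __name__ == "__main__":
    print("pinned CPUs live on node(s):", nodes_for(VM_PINS))
```

With these values every pinned CPU is on node 1, which is also the node with almost all of its memory free in the output above.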

Hello all,

I have been battling a GPU issue with a Windows 10 VM (poor performance: a PassMark score of 7413 instead of the 12000+ I should be getting).

My server specs are as follows.

Unraid 6.9.0-beta 22

Motherboard X9DRE-LN4F

Dual E5-2643 v2

16x4GB PC3-12800R

The VM has a 1TB M.2 SSD on a PCIe adapter (in a slot for CPU 2) assigned to it as the OS drive, and the latest Nvidia drivers.

8GB Ram

I have all the windows performance settings set to High/never sleep.

I believe I isolated all of the cores on CPU 2 and assigned most of them to the VM.

I've made sure that the video card is installed in the PCIe x16 slot assigned to CPU 2.

Can someone help me? I'm at a complete loss as to why the graphics card is so wildly underperforming.

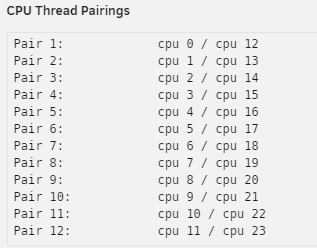

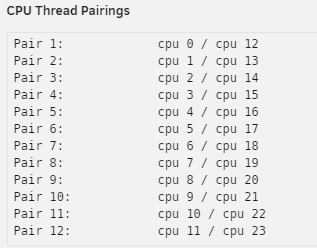

Also, here is what the CPU pairings are showing in Unraid:

Here is the VM's XML

<?xml version='1.0' encoding='UTF-8'?> <domain type='kvm' id='1'> <name>Gaming</name> <uuid>2d3c072c-ebaf-d861-862b-c0eb1270347d</uuid> <metadata> <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/> </metadata> <memory unit='KiB'>8388608</memory> <currentMemory unit='KiB'>8388608</currentMemory> <memoryBacking> <nosharepages/> </memoryBacking> <vcpu placement='static'>12</vcpu> <cputune> <vcpupin vcpu='0' cpuset='6'/> <vcpupin vcpu='1' cpuset='18'/> <vcpupin vcpu='2' cpuset='7'/> <vcpupin vcpu='3' cpuset='19'/> <vcpupin vcpu='4' cpuset='8'/> <vcpupin vcpu='5' cpuset='20'/> <vcpupin vcpu='6' cpuset='9'/> <vcpupin vcpu='7' cpuset='21'/> <vcpupin vcpu='8' cpuset='10'/> <vcpupin vcpu='9' cpuset='22'/> <vcpupin vcpu='10' cpuset='11'/> <vcpupin vcpu='11' cpuset='23'/> </cputune> <resource> <partition>/machine</partition> </resource> <os> <type arch='x86_64' machine='pc-i440fx-5.0'>hvm</type> <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader> <nvram>/etc/libvirt/qemu/nvram/2d3c072c-ebaf-d861-862b-c0eb1270347d_VARS-pure-efi.fd</nvram> </os> <features> <acpi/> <apic/> </features> <cpu mode='host-passthrough' check='none'> <topology sockets='1' dies='1' cores='6' threads='2'/> <cache mode='passthrough'/> </cpu> <clock offset='localtime'> <timer name='rtc' tickpolicy='catchup'/> <timer name='pit' tickpolicy='delay'/> <timer name='hpet' present='no'/> </clock> <on_poweroff>destroy</on_poweroff> <on_reboot>restart</on_reboot> <on_crash>restart</on_crash> <devices> <emulator>/usr/local/sbin/qemu</emulator> <disk type='block' device='disk'> <driver name='qemu' type='raw' cache='writeback'/> <source dev='/dev/disk/by-id/nvme-ADATA_SX8200PNP_2K1220143762' index='3'/> <backingStore/> <target dev='hdc' bus='sata'/> <boot order='1'/> <alias name='sata0-0-2'/> <address type='drive' controller='0' bus='0' target='0' unit='2'/> </disk> <disk type='file' device='disk'> <driver name='qemu' type='raw' cache='writeback'/> 
<source file='/mnt/user/domains/Gaming/vdisk2.img' index='2'/> <backingStore/> <target dev='hdd' bus='sata'/> <alias name='sata0-0-3'/> <address type='drive' controller='0' bus='0' target='0' unit='3'/> </disk> <disk type='file' device='disk'> <driver name='qemu' type='raw' cache='writeback'/> <source file='/mnt/disks/ST2000LM007-1R8174_ZDZ260WC/Gaming/vdisk3.img' index='1'/> <backingStore/> <target dev='hde' bus='virtio'/> <alias name='virtio-disk4'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/> </disk> <controller type='pci' index='0' model='pci-root'> <alias name='pci.0'/> </controller> <controller type='sata' index='0'> <alias name='sata0'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/> </controller> <controller type='virtio-serial' index='0'> <alias name='virtio-serial0'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/> </controller> <controller type='usb' index='0' model='qemu-xhci' ports='15'> <alias name='usb'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/> </controller> <interface type='bridge'> <mac address='52:54:00:80:a1:8e'/> <source bridge='br0'/> <target dev='vnet0'/> <model type='virtio-net'/> <alias name='net0'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/> </interface> <serial type='pty'> <source path='/dev/pts/0'/> <target type='isa-serial' port='0'> <model name='isa-serial'/> </target> <alias name='serial0'/> </serial> <console type='pty' tty='/dev/pts/0'> <source path='/dev/pts/0'/> <target type='serial' port='0'/> <alias name='serial0'/> </console> <channel type='unix'> <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-1-Gaming/org.qemu.guest_agent.0'/> <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/> <alias name='channel0'/> <address type='virtio-serial' controller='0' bus='0' port='1'/> </channel> <input type='tablet' bus='usb'> <alias 
name='input0'/> <address type='usb' bus='0' port='1'/> </input> <input type='mouse' bus='ps2'> <alias name='input1'/> </input> <input type='keyboard' bus='ps2'> <alias name='input2'/> </input> <hostdev mode='subsystem' type='pci' managed='yes'> <driver name='vfio'/> <source> <address domain='0x0000' bus='0x83' slot='0x00' function='0x0'/> </source> <alias name='hostdev0'/> <rom file='/mnt/user/isos/Videocard BIOS/Gigabyte.GTX1660Super.6144.190918-DumpedRom.rom'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/> </hostdev> <hostdev mode='subsystem' type='pci' managed='yes'> <driver name='vfio'/> <source> <address domain='0x0000' bus='0x83' slot='0x00' function='0x1'/> </source> <alias name='hostdev1'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/> </hostdev> <hostdev mode='subsystem' type='pci' managed='yes'> <driver name='vfio'/> <source> <address domain='0x0000' bus='0x83' slot='0x00' function='0x2'/> </source> <alias name='hostdev2'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/> </hostdev> <hostdev mode='subsystem' type='pci' managed='yes'> <driver name='vfio'/> <source> <address domain='0x0000' bus='0x83' slot='0x00' function='0x3'/> </source> <alias name='hostdev3'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x046d'/> <product id='0xc049'/> <address bus='2' device='4'/> </source> <alias name='hostdev4'/> <address type='usb' bus='0' port='2'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x046d'/> <product id='0xc226'/> <address bus='2' device='8'/> </source> <alias name='hostdev5'/> <address type='usb' bus='0' port='3'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x046d'/> <product id='0xc227'/> <address bus='2' device='9'/> </source> <alias name='hostdev6'/> <address type='usb' 
bus='0' port='4'/> </hostdev> <hostdev mode='subsystem' type='usb' managed='no'> <source> <vendor id='0x1b1c'/> <product id='0x0a4d'/> <address bus='2' device='3'/> </source> <alias name='hostdev7'/> <address type='usb' bus='0' port='5'/> </hostdev> <memballoon model='none'/> </devices> <seclabel type='dynamic' model='dac' relabel='yes'> <label>+0:+100</label> <imagelabel>+0:+100</imagelabel> </seclabel> </domain>

-

I tried upgrading my processors from E5-2620s to E5-2670 v2s, and after installing the new processors the server won't POST.

I have already upgraded my BIOS to v3.3 and the server still won't POST.

The server works just fine with the old processors installed.

I've also tried installing the CPUs one at a time in socket 1, with no luck.

Does anyone have any advice?

Parity and random disks keep disabling

in General Support

Posted

Hopefully the smart people here can help me out with an issue that has crippled my primary Unraid server.

Starting on Saturday, my Unraid server locked up and stopped responding, requiring me to reboot the machine.

When it finally came back up, I noticed disk 4 was disabled.

I followed the directions to stop the array, remove the offending disk, start in maintenance mode, stop, and re-add the disk.

When I did that, the array came back up, and I decided to run a parity check. That is when my parity disks started getting disabled.

Rinse and repeat, but no luck. I have attached my diagnostics file; if anyone can help me figure out which direction to start moving in, I would be very appreciative.

Also, FWIW, I looked at the SMART tests for the disks that kept being disabled and didn't see any errors there.

tower-diagnostics-20240331-1753.zip