Gnomuz

Posts posted by Gnomuz

-

Hi,

It seems a new version is available and an update of the docker image is required.

Thanks in advance.

-

Thanks for the update, running again

-

Hi there,

It seems a new version of CrashPlan has been out for 3 days or so, and it's repeatedly trying to update, of course without success.

Thanks in advance for an updated image !

-

Just updated the container, thanks for the quick support as usual

-

Hello,

An upgrade of CrashPlan to a new version, 15252000006882, is attempting to install. Thanks in advance for upgrading the container, in case the upgrade becomes mandatory later.

-

The latest version of the official telegraf container no longer runs as root, which explains the issue.

Here I used "telegraf:latest" (i.e. 1.20.3 currently), with apt-get update and install commands in the Post Argument, and of course that can't work without root privileges. Pulling "telegraf:1.20.2" instead (the previous version, which runs as root) solved the issue, but it's only a temporary workaround ...

-


Thanks for the feedback, I had noticed this "not-available" exit code but hadn't noticed it was a "Warning" log entry.

So I understand the problem would be in the scan phase. If I may, the warning line you point out relates to the first file, the one that unBALANCE will happily move :

I: 2021/09/16 22:15:26 planner.go:351: scanning:disk(/mnt/disk2):folder(XXXX/portables/1f7n0em0/plot-k32-2021-07-26-14-12-92fa0e550e27c898b01b7d7b839da2a60d7b2ad6f55a9ecf86f1a78627a62b4e.plot)
W: 2021/09/16 22:15:26 planner.go:362: issues:not-available:(exit status 1)
I: 2021/09/16 22:15:26 planner.go:380: items:count(1):size(108.83 GB)
I: 2021/09/16 22:15:26 planner.go:351: scanning:disk(/mnt/disk2):folder(XXXX/portables/1f7n0em0/plot-k32-2021-07-26-15-08-b9330f6ab9e903b464cc97adfecb410f678c90d51b702dbde33c9b58d36de453.plot)
W: 2021/09/16 22:15:26 planner.go:362: issues:not-available:(exit status 1)
I: 2021/09/16 22:15:26 planner.go:380: items:count(1):size(108.84 GB)
I: 2021/09/16 22:15:26 planner.go:110: scatterPlan:items(2)

The warnings are the same on both files, but in the end the result of the planning phase is that the first file is transferred, the second is not. And it's the same if I select 3 of them or more, only the first one is selected for transfer as expected, the next ones "will NOT be transferred", same warning on all files including the first one in the scan phase.

Permissions and timestamps seem fine

-rw-rw-rw- 1 nobody users 102G Jul 26 15:36 plot-k32-2021-07-26-14-12-92fa0e550e27c898b01b7d7b839da2a60d7b2ad6f55a9ecf86f1a78627a62b4e.plot
-rw-rw-rw- 1 nobody users 102G Jul 26 16:33 plot-k32-2021-07-26-15-08-b9330f6ab9e903b464cc97adfecb410f678c90d51b702dbde33c9b58d36de453.plot

As for a file corruption, these are chia plots as you have seen. There are chia specific tools which check the integrity of the plots and I'm positively sure these are not corrupted at application level, a fortiori at block level.

Other similar transfers worked just fine with unBALANCE on smaller files. I also transferred manually a few chia plots with CLI from disk2 to disk3 using the exact same rsync command as the one generated by unBALANCE, it worked like a charm. So I really suspect the unitary size of the files somehow raises an exception in the code behind the "scan" phase, which prevents the transfer of more than one file at a time.

Should you need any further information from me, do not hesitate.

-

Thanks for answering, but sorry, I didn't solve it in the meantime. I tried to set the reserved space to the minimum with no effect.

Here are the logs when I plan for transferring two ~109GB files to a drive (disk3) with 5.2 TB free :

I: 2021/09/16 22:15:26 planner.go:70: Running scatter planner ...
I: 2021/09/16 22:15:26 planner.go:84: scatterPlan:source:(/mnt/disk2)
I: 2021/09/16 22:15:26 planner.go:86: scatterPlan:dest:(/mnt/disk3)
I: 2021/09/16 22:15:26 planner.go:520: planner:array(8 disks):blockSize(4096)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk1):fs(xfs):size(3998833471488):free(2304975196160):blocksTotal(976277703):blocksFree(562738085)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk2):fs(xfs):size(3998833471488):free(509188435968):blocksTotal(976277703):blocksFree(124313583)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk3):fs(xfs):size(13998382592000):free(5195027685376):blocksTotal(3417573875):blocksFree(1268317306)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk4):fs(xfs):size(13998382592000):free(30423252992):blocksTotal(3417573875):blocksFree(7427552)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk5):fs(xfs):size(13998382592000):free(30410973184):blocksTotal(3417573875):blocksFree(7424554)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk6):fs(xfs):size(13998382592000):free(30273011712):blocksTotal(3417573875):blocksFree(7390872)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/disk7):fs(xfs):size(13998382592000):free(29930565632):blocksTotal(3417573875):blocksFree(7307267)
I: 2021/09/16 22:15:26 planner.go:522: disk(/mnt/cache):fs(xfs):size(511859089408):free(246631960576):blocksTotal(124965598):blocksFree(60212881)
I: 2021/09/16 22:15:26 planner.go:351: scanning:disk(/mnt/disk2):folder(Chia/portables/1f7n0em0/plot-k32-2021-07-26-14-12-92fa0e550e27c898b01b7d7b839da2a60d7b2ad6f55a9ecf86f1a78627a62b4e.plot)
W: 2021/09/16 22:15:26 planner.go:362: issues:not-available:(exit status 1)
I: 2021/09/16 22:15:26 planner.go:380: items:count(1):size(108.83 GB)
I: 2021/09/16 22:15:26 planner.go:351: scanning:disk(/mnt/disk2):folder(Chia/portables/1f7n0em0/plot-k32-2021-07-26-15-08-b9330f6ab9e903b464cc97adfecb410f678c90d51b702dbde33c9b58d36de453.plot)
W: 2021/09/16 22:15:26 planner.go:362: issues:not-available:(exit status 1)
I: 2021/09/16 22:15:26 planner.go:380: items:count(1):size(108.84 GB)
I: 2021/09/16 22:15:26 planner.go:110: scatterPlan:items(2)
I: 2021/09/16 22:15:26 planner.go:113: scatterPlan:found(/mnt/disk2/Chia/portables/1f7n0em0/plot-k32-2021-07-26-14-12-92fa0e550e27c898b01b7d7b839da2a60d7b2ad6f55a9ecf86f1a78627a62b4e.plot):size(108828032623)
I: 2021/09/16 22:15:26 planner.go:113: scatterPlan:found(/mnt/disk2/Chia/portables/1f7n0em0/plot-k32-2021-07-26-15-08-b9330f6ab9e903b464cc97adfecb410f678c90d51b702dbde33c9b58d36de453.plot):size(108835523381)
I: 2021/09/16 22:15:26 planner.go:120: scatterPlan:issues:owner(0),group(0),folder(0),file(0)
I: 2021/09/16 22:15:26 planner.go:129: scatterPlan:Trying to allocate items to disk3 ...
I: 2021/09/16 22:15:26 planner.go:134: scatterPlan:ItemsLeft(2):ReservedSpace(536870912)
I: 2021/09/16 22:15:26 planner.go:463: scatterPlan:1 items will be transferred.
I: 2021/09/16 22:15:26 planner.go:465: scatterPlan:willBeTransferred(Chia/portables/1f7n0em0/plot-k32-2021-07-26-14-12-92fa0e550e27c898b01b7d7b839da2a60d7b2ad6f55a9ecf86f1a78627a62b4e.plot)
I: 2021/09/16 22:15:26 planner.go:473: scatterPlan:1 items will NOT be transferred.
I: 2021/09/16 22:15:26 planner.go:479: scatterPlan:notTransferred(Chia/portables/1f7n0em0/plot-k32-2021-07-26-15-08-b9330f6ab9e903b464cc97adfecb410f678c90d51b702dbde33c9b58d36de453.plot)
I: 2021/09/16 22:15:26 planner.go:488: scatterPlan:ItemsLeft(1)
I: 2021/09/16 22:15:26 planner.go:489: scatterPlan:Listing (8) disks ...
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/disk1):no-items:currentFree(2.30 TB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/disk2):no-items:currentFree(509.19 GB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:492: =========================================================
I: 2021/09/16 22:15:26 planner.go:493: disk(/mnt/disk3):items(1)-(108.83 GB):currentFree(5.20 TB)-plannedFree(5.09 TB)
I: 2021/09/16 22:15:26 planner.go:494: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:497: [108.83 GB] Chia/portables/1f7n0em0/plot-k32-2021-07-26-14-12-92fa0e550e27c898b01b7d7b839da2a60d7b2ad6f55a9ecf86f1a78627a62b4e.plot
I: 2021/09/16 22:15:26 planner.go:500: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:501:
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/disk4):no-items:currentFree(30.42 GB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/disk5):no-items:currentFree(30.41 GB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/disk6):no-items:currentFree(30.27 GB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/disk7):no-items:currentFree(29.93 GB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:503: =========================================================
I: 2021/09/16 22:15:26 planner.go:504: disk(/mnt/cache):no-items:currentFree(246.63 GB)
I: 2021/09/16 22:15:26 planner.go:505: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:506: ---------------------------------------------------------
I: 2021/09/16 22:15:26 planner.go:507:
I: 2021/09/16 22:15:26 planner.go:511: =========================================================
I: 2021/09/16 22:15:26 planner.go:512: Bytes To Transfer: 108.83 GB
I: 2021/09/16 22:15:26 planner.go:513: ---------------------------------------------------------

It clearly states that the second file will NOT be transferred, without further obvious explanation.

I suspect the problem is due to the huge size of the files, as I managed to transfer multiple smaller files from disk2 to disk3 without any issue. Maybe some kind of overflow in an intermediate variable ?
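As a sanity check on the numbers in that log, here is a minimal Python sketch. It only redoes the arithmetic with the values the planner printed; the `fits` helper is hypothetical and is not unBALANCE's actual allocation logic. It shows that both files together are far below the free space on disk3 minus the reserved space, and that a single plot is already well beyond the 32-bit integer range, which is the kind of limit an overflow would involve.

```python
# Values copied from the planner log; the check itself is an assumption,
# NOT the real unBALANCE allocation code.
DISK3_FREE = 5_195_027_685_376       # free(...) reported for /mnt/disk3
RESERVED_SPACE = 536_870_912         # ReservedSpace(...) from the log
FILE_SIZES = [108_828_032_623, 108_835_523_381]  # size(...) of the two plots

INT32_MAX = 2**31 - 1
INT64_MAX = 2**63 - 1


def fits(free: int, reserved: int, sizes: list[int]) -> bool:
    """Return True if all files fit while keeping `reserved` bytes free."""
    return sum(sizes) <= free - reserved


total = sum(FILE_SIZES)
print(f"total to move   : {total} bytes")
print(f"usable on disk3 : {DISK3_FREE - RESERVED_SPACE} bytes")
print(f"both files fit  : {fits(DISK3_FREE, RESERVED_SPACE, FILE_SIZES)}")
print(f"one file exceeds the 32-bit range : {FILE_SIZES[0] > INT32_MAX}")
print(f"total fits in the 64-bit range    : {total <= INT64_MAX}")
```

So on paper the plan should accept both files; whatever rejects the second one is not a plain lack of space on disk3.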

Thanks in advance for your support.

-

Hi,

I've been using unBALANCE for quite some time now, it's really a great tool to reorganize data among disks.

I have recently upgraded some of my disks to a higher capacity, and I am now reallocating their usage.

And now I have an issue as I have some big files of ~100GiB to transfer in this example from disk2 to disk3.

disk2 has 509GB free, and disk3 has 5.2TB free.

Here's the output of the "Plan" phase when I try to transfer more than one file :

PLANNING: Found /mnt/disk2/<sharename>/file1 (108.83 GB)
PLANNING: Found /mnt/disk2/<sharename>/file2 (108.84 GB)
PLANNING: Trying to allocate items to disk3 ...
PLANNING: Ended: Sep 9, 2021 22:41:06
PLANNING: Elapsed: 0s
PLANNING: The following items will not be transferred, because there's not enough space in the target disks:
PLANNING: <sharename>/file2
PLANNING: Planning Finished

I can transfer the files one at a time, but if I try to select two or more files, unBALANCE refuses to move after the first "because there's not enough space in the target disks:". No disk is indicated.

Below a snapshot of the array :

The share is set to include disk2 to disk7. As can be seen, disk4 to disk7 don't have 100GB free, but of course I only target disk3 in the move operation, as the log shows. Also, I've never used unBALANCE on such big files before, so that may be another possible cause.

Any help or advice would be appreciated.

-

Hi,

I think a new version is available, as my logs have been mentioning unsuccessful upgrade attempts for ~18 hours.

-

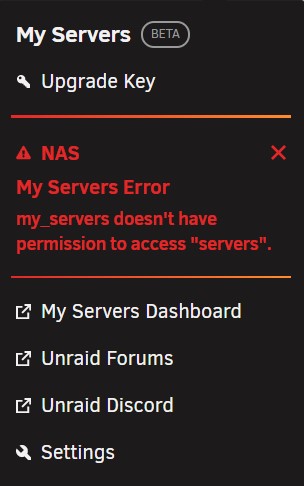

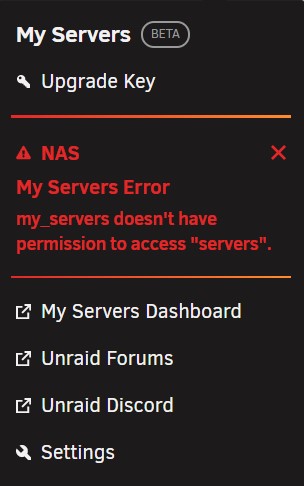

I just updated to 2021.06.07.1554. Everything seems fine, but I get the following error message in the dropdown :

-

11 hours ago, adminmat said:

Anyone found a way to map a new destination drive while the container / plotter is running?

My USB drive is full and I have 6 plots going... I hope I don't have to stop progress 🤓

I don't see any solution to change dynamically the final destination directory for a running plotting process. But if the final copy fails, due to destination drive full for instance, the process doesn't delete the final file in your temp directory, so you can just copy it manually to a destination with free space, and delete it from your temp directory once done.
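The manual rescue described above can be sketched in Python as follows. This is only an illustration under assumptions: the `rescue_plot` helper and any paths passed to it are hypothetical, and it simply checks free space, copies, verifies the size, and only then deletes the file left in the temp directory.

```python
import shutil
from pathlib import Path


def rescue_plot(plot: Path, dest_dir: Path) -> Path:
    """Copy a finished plot to dest_dir, then delete the original.

    Raises OSError if dest_dir lacks the space, leaving the plot untouched.
    """
    size = plot.stat().st_size
    free = shutil.disk_usage(dest_dir).free
    if free < size:
        raise OSError(f"{dest_dir} has {free} bytes free, {size} needed")
    target = dest_dir / plot.name
    shutil.copy2(plot, target)           # copy first ...
    if target.stat().st_size != size:    # ... verify the size ...
        raise OSError(f"size mismatch after copying {plot.name}")
    plot.unlink()                        # ... and only then delete the source
    return target
```

Deleting the source last means an interrupted copy never costs you the plot; at worst you are left with a partial file in the destination to clean up.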

-

I can't see then, but it's strange that your prompt is "#", like in unRAID terminal, where mine is "root@57ad7c0830ad:/chia-blockchain#" in the container console as you can see. Sorry, I can't help further, it must be something obvious, but it's a bit late here !

-

8 minutes ago, HATEME said:

# source ./activate

sh: 2: source: not found

# ./ .//activate

sh: 3: ./: Permission denied

# source./activate

sh: 4: source./activate: not found

# /activate

sh: 5: /activate: not found

# chia ./activate

sh: 6: chia: not found

# chia_harvester ./activate

sh: 7: chia_harvester: not found

# :./ .//activate

sh: 8: :./: not found

# qepkvcnmSDLJNKVC

sh: 9: qepkvcnmSDLJNKVC: not found

I feel like total boomer right now....

You have to be in the chia-blockchain directory, which happens to be a root directory in this container, for all that to work :

cd /chia-blockchain
source ./activate

Here's the result in my container :

root@57ad7c0830ad:/chia-blockchain# cd /chia-blockchain
root@57ad7c0830ad:/chia-blockchain# source ./activate
(venv) root@57ad7c0830ad:/chia-blockchain# chia version
1.1.7.dev0
(venv) root@57ad7c0830ad:/chia-blockchain#

Hey, btw, these commands must be entered in the container console, NOT in the unRAID terminal !

-

7 hours ago, HATEME said:

I got all the way to step Venv: "..activate" Umm Wut? Pardon my noobness, but what command do I enter? Tried every combination of ../activate, venv:../activate, activate venv, venv../activate. Even tried to google what a venv was... Everything just comes up as not found, not found, not found. The processes are running, but that's about it so far.

You tried ALMOST everything !

The right command is ". ./activate" (dot, space, dot, slash, activate). You can also type "source ./activate", it's the same.

-

8 minutes ago, adminmat said:

Interestingly when I installed 1.1.5 it showed up as 1.1.6dev0. There was a issue on GitHub about this as well with a direction to delete a .json file. The devs said it wasn't an issue. My 1.1.6 shows up as 1.1.7dev0 as well.

Note that I did not use the container template by Partition Pixel. I manually entered the official docker URL location

In fact it's a general issue of crappy versioning and/or repo management. On a baremetal Ubuntu, when trying to upgrade from 1.1.5 to 1.1.6 following the official wiki how-to it also built 1.1.7.dev0. And deleting the .json file is of no effect for building the main stuff, maybe the Linux GUI, I don't use it. And I think the docker image build process simply suffers from the same problem.

The only solution so far to build a "real" (?) 1.1.6 in Ubuntu is to delete the ~/chia-blockchain directory and make a fresh install. Not a problem, as all static data are stored in ~/.chia/mainnet, but still irritating.

They will improve over time, hopefully ...

-


Beware, the build process by chia dev team definitely needs improvement : I pulled both the (new) '1.1.6' tag as well as 'latest' (which is the default if you don't explicitly mention it). In both cases, the output of 'chia version' in the container console is 1.1.7.dev0 !

Check your own containers. It doesn't seem to raise issues for the moment, but when the pool protocol and the corresponding chia version are launched, it may lead to disasters, especially in setups where there are several machines (full node, wallet, harvester(s), ...)

I had already created an issue in github, I just complemented it with this new incident.

-

On 5/19/2021 at 5:51 PM, adminmat said:

@Gnomuz good points. I'll eventually move my farming plots to a segregated VLAN. Question: what network type are you using for the Chia container? Host or Bridge?

Also, how do you like the Asrock Rack X470D4U2-2T ? I just picked up a 5900x yesterday and was looking at the PCIe Gen4 version of that board (X570D4U-2L2T). Do you feel like you have enough expansion for your use? Would love to hear your thoughts.

Hi,

I used Host network type, as I didn't see any advantage in having a specific private IP address for the container.

So far, the X470D4U2-2T matches my needs. Let's say the BIOS support from Asrock Rack could be improved ... Support for Ryzen 5000 has been in beta for 3 months, and no sign of life for a stable release.

As for expansion, well, mATX is not the ideal form factor. If ever I were to add a second HBA, I would have to sacrifice the GPU, so no more hardware decoding for Plex. But it's more a form factor issue than something specific to the MB. In terms of reliability, not a single issue with the NAS running 24/7 for a bit more than a year now (fingers crossed), even if in summer the basement is too hot (I live in the south of France).

I had a look at the X570D4U-2L2T. For both MBs, I think their choice of two 10Gb RJ45 interfaces is questionable, I would really prefer to have one or two SFP+ cages, that would make the upgrade to 10Gb much more affordable with a DAC cable. And the X570 clearly privileges the M.2 slots in terms of PCIe lanes allocation if I got it well, that wouldn't be my first choice, but it highly depends on your target use ...

I hope that can help

-

2 hours ago, Trinity said:

Thank you so much, for the detailed reply

I ordered the pi4 with your recommended case I hope I get it installed easily ^^


May I ask if it would be okay to contact you, in case of questions? ^^"

Generally it should work, I'll use the sd card to install linux on the ssd and try to get it running - I hope I will be able to move my keys and everything from the docker container to the pi and it will still work. Not sure, if the plots are kind of bound to my keys or if I could just get new ones, since I am in the 0 XCH club anyway. Right now, it seems, my node is not getting all my plots right, maybe I broke something by changing the clock to my time zone, I still have some plots running, so I can't rebuild the container to get the default time back. In the worst case I have to delete 16 plots, if they are broken due to the wrong clock...


I hope the new setup will then just work, right now it's pretty frustrating. With every day it doesn't work, it will be less likely to ever win a coin

I can't add parity, since my system is full of HDDs, I am limited to 4x3,5" and thus I don't want to use a quarter of my available space for parity. It's risky, but I just hope the NAS HDDs are just working fine.

One thing I still didn't understand is the plotting with the -f <farmer key> - What key is that? The one the full node prints out when calling chia keys show? Or the one from the harvester container I'll be plotting at?

No problem, PM me if you find issues in setting the architecture up.

For the Pi installation, I chose Ubuntu Server 20.04.2 LTS. You may also choose Raspbian, that's up to you.

If you go for Ubuntu, this tutorial is just fine : https://jamesachambers.com/raspberry-pi-4-ubuntu-20-04-usb-mass-storage-boot-guide/ .

Note that apart from the enclosure, it's highly recommended to buy the matching power supply by Argon with 3.5A output. The default RPi4 PSU is 3.1A, which is fine with the OS on a SD card, but adding a SATA M.2 SSD draws more current, and 3.5A avoids any risk of instability. The Canakit 3.5A power adapter is fine also, but not available in Europe for me.

The general steps are :

- flash a SD card with Raspbian

- boot the RPi from the SD Card and update the bootloader (I'm on the "stable" channel with firmware 1607685317 of 2020/12/11, no issue)

- for the moment, just let the RPi run Raspbian from the SD card

- install your SATA SSD in the base of the enclosure (which is a USB to M.2 SATA adapter), and connect it to your PC

- flash Ubuntu on the SSD from the PC with Raspberry Pi Imager (or Etcher, Rufus, ...)

- connect the SSD base to the rest of the enclosure (USB-A to USB-A), and follow the tutorial from "Modifying Ubuntu for USB booting"

- shut down the RPi, remove the SD Card, and now you should boot from the SSD.

By default, the fan is always on, which is useless, as the Argon One M.2 will passively cool the RPi just fine. To control it, you can install the solution proposed by the manufacturer (see the doc inside the box), but I'm not sure it works with Ubuntu. Alternatively, there's a Raspberry Pi community package which is great and installs on all OSes, including Ubuntu, see https://www.raspberrypi.org/forums/viewtopic.php?f=29&t=275713&sid=cae3689f214c6bcd7ba2786504c6d017&start=250 . The install is a piece of cake, and the default params work well.

Once the RPi is setup and the fan management of the case installed, just install chia, and then try to copy the whole ~/.chia directory from the container (mainnet directory in appdata/chia share) onto the RPi. Remove the plots directory from the config.yaml as the local harvester on the RPi will be useless anyway. That should preserve your whole configuration and keys, and above all your sync will be much faster, as you won't start from the very beginning of the chain. Run 'chia start farmer' and check the logs. Connect the Unraid harvester from the container as already explained, and check the connection in the container logs. At that stage, you should farm from the RPi the plots stored in the Unraid array through the harvester in the container.

To see your keys, just type 'chia keys show' in the RPi, it shows your farmer public key (fk) and pool public key (pk). With these two keys, you can create a plot on any machine, even outside your LAN. Just run 'chia plots create <usual parameters> -f fk -p pk'. It signs the plot with your keys, and only a farmer with these keys can farm them. Once the final plot is copied into your array, it will be seen by the harvester, and that's all.
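To make the shape of that command concrete, here is a minimal Python sketch that assembles the argument list. The `plot_command` helper is hypothetical and the two keys are placeholders to replace with the values printed by 'chia keys show'. Only '-f', '-p' and '-k' (the plot size option of the CLI) are used; the remaining "usual parameters" depend on your setup and are deliberately left out.

```python
import shlex


def plot_command(farmer_pk: str, pool_pk: str, k: int = 32) -> list[str]:
    """Build the argument list for one 'chia plots create' run."""
    return ["chia", "plots", "create", "-k", str(k), "-f", farmer_pk, "-p", pool_pk]


# Placeholder keys: substitute the values printed by 'chia keys show'.
cmd = plot_command("<farmer public key>", "<pool public key>")
print(shlex.join(cmd))
# To actually launch it on the plotting machine: subprocess.run(cmd, check=True)
```

Because only the public keys are passed, nothing private ever needs to exist on the plotting machine.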

-


7 hours ago, Trinity said:

Yes, around 1-2 pages back, some of us discovered the same issue. Including myself.

I tried to enter the container and to run: apt-get systemd

to set the correct time. Another option would be installing: apt-get tzdata.

There is also an open Pull request on the official chia docker: https://github.com/Chia-Network/chia-docker/pull/50

Not sure if it will solve the issues tbh. Installing it inside the container is only a quick solution, which will be lost when ever the container is rebuild. So not the best solution. Additionally to that, it didn't fix my sync status. I am now kind of more than one hour off and it never reaches the synced status. So it seems to be something different causing these issues. So you can try to get you clock right, but I can't promise it will solve your sync issues.

Btw, the pull request is mine 😂. You could approve it, maybe it will draw attention from the repo maintainer ...

-

1 hour ago, dandiodati said:

Thanks for the details just one thing I'm not clear on is that with this command line how to you chose your plotting sizes ? Basically I saw in the UI the ability to choose different plotting sizes but with this command line tool does not seem to indicate it. I need to determine how many I can run on a SSD and not run out of space?

If you ever want to create something else than k-32 , the CLI tool also accepts the '-k 33' parameter for instance. If '-k' is not specified, it defaults to '-k 32' which is the current minimal plot size.

All options are documented here https://github.com/Chia-Network/chia-blockchain/wiki/CLI-Commands-Reference , and 'chia plots create -h' is a good reference also 😉

-


14 hours ago, Trinity said:

This sounds like a really nice setup, but I still didn't fully understand, what you need to extend the farm. Which services do you need running for plotting? Can you plot via the harvesters or via the farmer, which is running together with the full node or does it only require the full node? How many farmers you need, how many harvesters? Right now I am storing the plots on the xfs array, since I don't have unassigned devices yet. I'd maybe invest in a pi4, wanted to buy one for a long time anyway but had no project to use it for in mind, so using it as chia node would be a good enough project. I wasn't aware that the pi has a M.2 slot, are there other options to store the system, like a big sd card or is it better to use a ssd?

So if I get you right:

- There is a pi4 with a m.2 sdd running the full node to accept challenges, syncing the blockchain and handle the wallet and master keys

- You have a special rig for plotting, which stores the plots on your unraid server in a share folder of your array (which is optional) - What services does that rig need to run to plot?

- You are running harvesters on your unraid system via this container, which accesses the plot folder and are connected to the pi, so the pi running the farmer accepting challenges and the harvesters on your unraid server are responding to those?

- You can use the harvesters to create plots from there as well (which is optional)? Or how are you plotting there?

So if I wouldn't have a special plotting rig, I can use the unraid system harvesters to do so or do I need to run something else?

Hi,

Thanks for your interest in my post, I'll try to answer your numerous questions, but I think you already got the general idea of the architecture pretty well 😉

- The RPi doesn't have a M.2 slot out of the box. By default, the OS runs from a SD card. The drawback is that SD cards don't last so long when constantly written, e.g. by the OS and the chia processes writing logs or populating the chain database, they were not designed for this kind of purpose. And you certainly don't want to lose your chia full node because of a failing 10$ SD card, as the patiently created plots are still occupying space, but do not participate in any challenge... So, I decided running the RPi OS from a small SATA SSD was a much more resilient solution, and bought an Argon One M.2 case for the RPi. I had an old 120 GB SATA SSD which used to host the OS of my laptop before I upgraded it to a 512 GB SSD, so I used it. This case is great because it's full-metal and there are thermal pads between the CPU and RAM and the case. So, it's essentially a passive heat dissipation setup. There's a fan if temps go too high, but it never starts, unless you live in a desert I suppose. There are many other enclosures for RPis, but this one is my favorite so far.

- The RPi is started with the 'chia start farmer' command, which runs the following processes : chia_daemon, chia_full_node (blockchain syncing), chia_farmer (challenges management and communication with the harvester(s)) and chia_wallet. chia_harvester is also launched locally, but is totally useless, as no plots are stored on a storage accessible by the RPi. To get a view on this kind of distributed setup, have a look at https://github.com/Chia-Network/chia-blockchain/wiki/Farming-on-many-machines, that was my starting point. You can also use 'chia start farmer-no-wallet', and sync your wallet on another machine, I may do that in the future as I don't like having it on the machine exposed to the internet.

- The plotting rig doesn't need any chia service running on it, the plotting process can run offline. You just need to install chia on it, and you don't even need to have your private keys stored on it. You just run 'chia plots create (usual params) -f <your farmer public key> -p <your pool public key>' , and that's all. The created plots will be farmed by the remote farmer once copied into the destination directory.

- I decided to store the plots on the xfs array with one parity drive. I know the general consensus is to store plots on non-protected storage, considering you can replot them. But I hate the idea of losing a bunch of plots. You store them on high-density storage, let's say 12TB drives, which can hold circa 120 plots each. Elite plotting rigs with enterprise-grade temporary SSDs create a plot in 4 hours or less. So recreating 120 plots is circa 500 hours or 20 days. When you see the current netspace growth rate of 15% a week or more, that's a big penalty I think. If you have 10 disks, "wasting" one as a parity drive to protect the other 9 sounds like a reasonable trade-off, provided you have a spare drive around to rebuild the array in case of a faulty drive. To sum up, two extra drives (1 parity + 1 spare) reasonably guarantee the continuity of your farming process and prevent the loss of existing plots, whatever the size of your array is. Of course with a single parity drive, you are not protected against two drives failing together, but as usual it's a trade-off between available size, resiliency and costs, nothing specific to chia ... And the strength of Unraid is you won't lose the plots on the healthy drives, unlike other raid5 solutions.

- As for the container, it runs only the harvester process ('chia start harvester'), which must be setup as per the link above, nothing difficult. From the container console, you can also optionally run a plotting process, if your Unraid server has a temporary unassigned SSD available (you can also use your cache SSD, but beware of space ...). You will run it just like on your plotting rig : 'chia plots create (relevant params) -f <farmer key> -p <pool key>'. The advantage is that the final copy from the temp dir to the dest dir is much quicker, as it's a local copy on the server from an attached SSD to the Unraid share (10 mins copy vs 20/30 mins over the network for me).

- So yes, you can imagine running your plotting process from a container on the Unraid server if you don't have a separate plotting rig. But then I wouldn't use this early container, and would rather wait for a more mature one which would integrate a plotting manager (plotman or Swar), because managing all that manually is a nightmare in the long run, unless you are a script maestro and have a lot of time to spend on it 😉
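The replot estimate in the parity discussion above can be redone in a few lines of Python. This is a rough sketch under two assumptions of mine: plots are recreated one at a time on a single rig, and the netspace keeps growing at a constant, compounding 15% per week. The three input figures are the ones quoted above.

```python
PLOTS_PER_DRIVE = 120    # "circa 120 plots" on a 12 TB drive
HOURS_PER_PLOT = 4       # fast plotting rig, one plot at a time (assumption)
WEEKLY_GROWTH = 0.15     # netspace growth per week, assumed constant

replot_hours = PLOTS_PER_DRIVE * HOURS_PER_PLOT
replot_days = replot_hours / 24
# Factor by which the netspace grows while the lost drive is being replotted.
netspace_factor = (1 + WEEKLY_GROWTH) ** (replot_days / 7)

print(f"replot time     : {replot_hours} hours, i.e. {replot_days:.0f} days")
print(f"netspace growth : x{netspace_factor:.2f} over that period")
```

The exact product is 480 hours, consistent with the "circa 500 hours or 20 days" quoted above, and under these assumptions the netspace grows by roughly half again while the drive is being replotted.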

Happy farming !

-


-

-

15 minutes ago, adminmat said:

What is the general consensus on opening router port 8444 to your container for this? Do we feel the Chia software is secure enough?

I haven't opened the port on my router yet but I'm getting fewer peers than those who do.

Thoughts?

Without port 8444 forwarded to the full node, you'll have sync issues, as you will only have access to the peers which have their 8444 port accessible, and they are obviously a minority in the network....

Generally speaking, opening a port to a container is not such a big security risk, as any malicious code will only have access to the resources available to the container itself. But in the case of chia, the container must have access to your stored plots to harvest them. An attacker may thus be able to delete or alter your patiently created plots from within the container, which would be a disaster if you have hundreds or thousands of them ! And one of the ways to maximise one's netspace ownership could be the deletion of others' plots ...

That's one of the reasons why I decided to have a dedicated RPi to run the full-node, with the firewall allowing only the strictly required ports, both on the WAN and LAN sides. There's no zero risk architecture, but I think such a setup is much more robust than a fully centralised one where a single machine supports the full set of functions.
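
To check whether port 8444 is actually reachable once forwarded, a small TCP connection test is enough. This is only a sketch: the function name is made up, the IP in the example is a placeholder from the documentation range, and the test should be run from outside your LAN, as many routers won't loop a connection back to their own public address.

```python
import socket


def port_is_open(host: str, port: int = 8444, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered, timed out or unreachable: treated as closed.
        return False


if __name__ == "__main__":
    # 203.0.113.10 is a placeholder, replace it with your own public IP.
    print(port_is_open("203.0.113.10"))
```

A `True` result only says that something accepts connections on that port, not that it is a healthy full node.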

-


-

-

10 hours ago, Trinity said:

From the official chia docs: https://github.com/Chia-Network/chia-blockchain/wiki/FAQ#i-am-eeing-blocks-and-connections-but-my-node-says-not-synced

So it can be related, I think. I also have trouble syncing, but even after installing systemd it seems like it's not syncing correctly.

I have no idea why the whole docker is causing so much trouble, but I tried to run the GUI in a windows vm on unraid. This. Was. Horrible. The docker container was synced more than twice as fast as the one in the windows vm. So it doesn't seem to be an option. It must work with docker. Because if everything is too slow, one could have a bazillion plots, and would never win, because the reaction to a challenge is too slow...

But the docker feels very unstable. For me it syncs, then it stops, then it works, right now after fixing the time it's again not syncing... It's a nightmare. Oh and the wallet never ever synced on my end. Not sure what is going on there.

My understanding of the official wiki is that your system clock must not be off by more than 5 minutes, whatever your timezone is, bearing in mind that Linux manages both the software and hardware clocks as UTC by default.
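
If you want to check the clock by yourself, something like the sketch below compares the local time with an NTP server. It only uses the Python standard library; the 5-minute tolerance is my reading of the wiki as said above, and the server name (pool.ntp.org) and the function names are assumptions chosen for the example. Both timestamps are seconds since the Unix epoch in UTC, so the timezone plays no role.

```python
import socket
import struct
import time

NTP_EPOCH_OFFSET = 2208988800  # seconds between 1900-01-01 (NTP) and 1970-01-01 (Unix)
MAX_SKEW_SECONDS = 5 * 60      # 5-minute tolerance, as understood from the wiki


def skew_ok(local_ts: float, reference_ts: float,
            max_skew: float = MAX_SKEW_SECONDS) -> bool:
    """True if the two UTC timestamps differ by at most `max_skew` seconds."""
    return abs(local_ts - reference_ts) <= max_skew


def sntp_time(server: str = "pool.ntp.org", timeout: float = 3.0) -> float:
    """Ask an NTP server for the current time, returned as a Unix timestamp."""
    request = b"\x1b" + 47 * b"\0"  # LI=0, version 3, mode 3 (client)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(request, (server, 123))
        reply, _ = sock.recvfrom(512)
    # Integer part of the transmit timestamp (bytes 40 to 43 of the reply).
    seconds = struct.unpack("!I", reply[40:44])[0]
    return seconds - NTP_EPOCH_OFFSET


if __name__ == "__main__":
    reference = sntp_time()
    print("clock within tolerance:", skew_ok(time.time(), reference))
```

Run it inside the container as well as on the host if you suspect the two disagree.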

Anyway, I see that many have problems syncing when running the container as a full node.

Personally I went the Raspberry way and everything is working like a charm so far. The RPi4 4GB boots from an old 120GB SATA M.2 SSD under Ubuntu 20.04.2 (in an Argon One M.2 case which is great btw) and runs the full node. At first, I ran the harvester on the RPi and it accessed the plots stored on the Unraid server through an SMB share. Performance was horrible, average was 5-7 seconds to respond, with peaks at more than 30 sec ! I tried NFS which was a bit better, but still too slow, often > 3 sec.

With this container running only the harvester, and connected to the farmer on the RPi, response times are under 0.1 sec at farmer level, it has a negligible footprint on the server and it's rock solid. I also create plots in the container as a bonus, to help my main plotting rig.

If others want to replicate this architecture, I posted a step-by-step a few posts above on how to set up the container as harvester-only, and connect it to a remote farmer/full node.

An RPi is not a big investment, compared with the storage cost, and as chia was designed as a distributed and decentralised project, I think it's the way to go to have a resilient setup.

-


-

[Support] Djoss - CrashPlan PRO (aka CrashPlan for Small Business)

in Docker Containers

Posted

Hi there,

A new version seems to be available. An updated image would be appreciated.