Posts posted by scd780

-

22 hours ago, Mattti1912 said:

Hello

I've installed duckdns but when i type my dynamic dns, it keeps hitting my router.. Mikrotik..

How can i change that??

regards

Hi, you’ll need to direct that traffic to the appropriate location via a proxy. Most people use the SWAG or NGINX-Proxy-Manager (NPM) containers. I recommend NPM, more user friendly imo!

You’ll want to look into it more if this is brand new to you but the gist of it is that you forward ports 80 and 443 from your router to your proxy software and then your proxy software has NGINX config files and SSL certs to authoritatively direct traffic on your network.

The flow of data would be: someone visits your domain (subdomain1.duckdns.org); the DuckDNS service tells the user’s browser that the subdomain points to your public IP address; the browser then sends an HTTP/S request to your public IP; your router forwards that request to your proxy software via the port forwarding rule; the proxy software then looks at its own config files, sees that subdomain1 goes to X.X.X.X:XXXX on your network (whereas subdomain2 goes to Y.Y.Y.Y:YYYY), and forwards the traffic there.
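The last step of that flow, where the proxy picks a destination based on the requested subdomain, can be sketched roughly as a lookup table. This is only an illustration of the idea, not how NPM or SWAG are implemented; the IP addresses, ports, and the `pick_upstream` name are placeholders.

```python
# Hypothetical routing table: requested hostname -> (LAN IP, port).
# All addresses and ports here are placeholders.
ROUTES = {
    "subdomain1.duckdns.org": ("192.168.1.10", 8080),
    "subdomain2.duckdns.org": ("192.168.1.20", 9000),
}


def pick_upstream(host_header):
    """Return 'ip:port' for the request's Host header, or None if unknown."""
    # Drop an optional ':443' style suffix and normalise case.
    host = host_header.split(":")[0].strip().lower()
    target = ROUTES.get(host)
    if target is None:
        return None
    ip, port = target
    return f"{ip}:{port}"
```

A request for a hostname that is not in the table gets no upstream, which is roughly what happens when a subdomain has no proxy host configured.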

There are a few good videos and guides out there, so just search around and you’ll find something. The key word to use is “reverse proxy” for informational videos and “NPM” or “NGINX Proxy Manager” for guides. Just remember to NEVER open your Unraid webGUI to the internet through a proxy like this; it’s not secure enough for that. If you need remote access, set up WireGuard instead. It’s either a plug-in or built in to Unraid now, I forget which, but it’s very easy to set up and there are plenty of guides as well.

-

56 minutes ago, Elmojo said:

Thanks man, I'm getting there!

I have the port forwarding worked out, with the help of a FAQ from mullvad. My port is assigned and tests as open. I've plugged it into qBT, so that part should be done.

As for the pathing thing, does it have to be /data/....? When I was using the non-VPN qBT, I had everything going to a share called "downloads". All my downloader-type apps dump into subfolders of that share. Does this version not support that option?

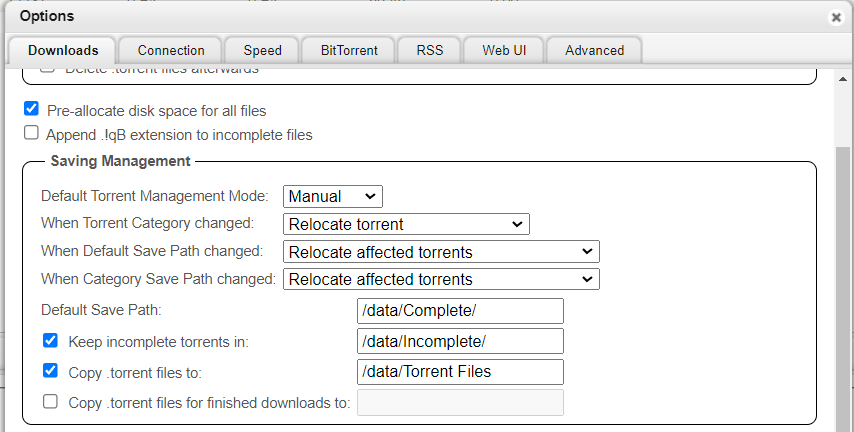

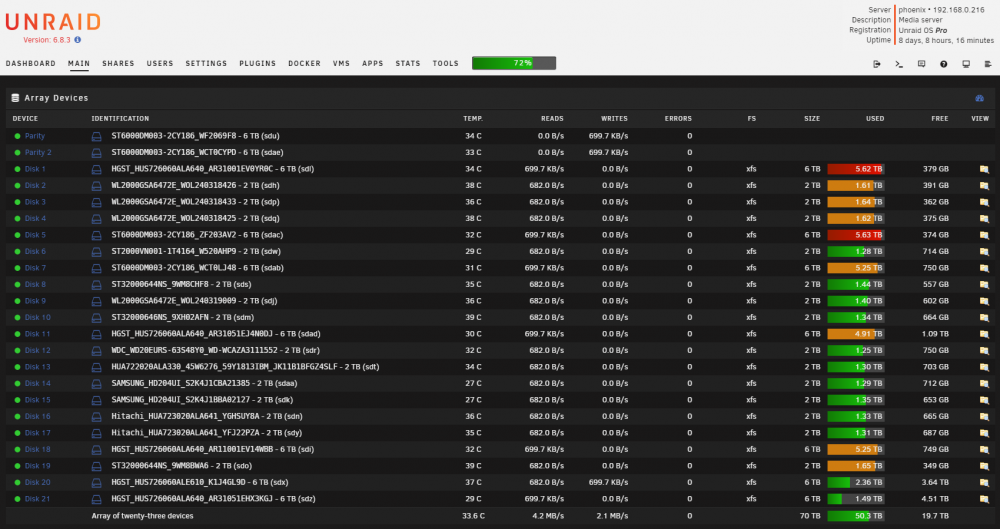

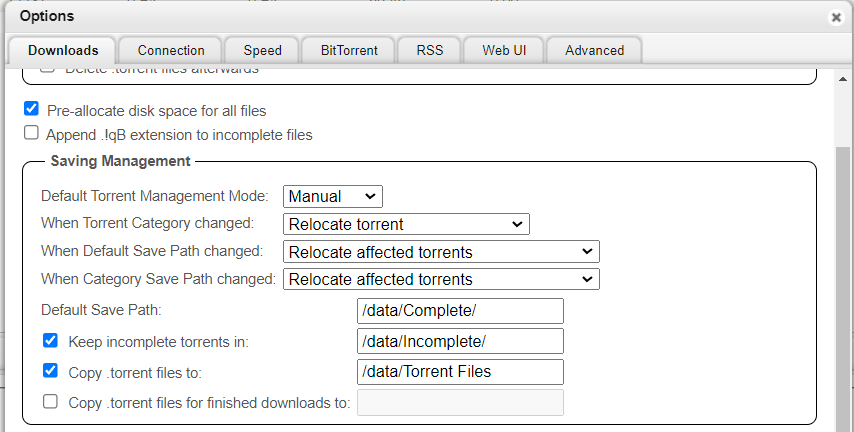

BTW, I think binhex used /data in his example because of the path mappings for the container setup. You can map whatever you'd like array wise to the /data path within the qbit container. For example:

Then within qbit I put these settings:

This makes the full path for completed torrents as seen from Unraid GUI or SMB share: /mnt/user/qBitTorrent_Downloads/Complete

You can map any share/path from your unraid array to the Host Path 2 variable in the first picture. So you can use your downloads share with the subfolders. I specifically mapped just the qBitTorrent_Downloads folder within my Downloads share instead of the whole thing because I didn't want qbit to be able to cause issues in my other download directories (very unlikely, but safer this way anyways so why not!).
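To make the mapping concrete, here is a minimal sketch of how a path inside the container translates to a path on the array. It assumes `/data` in the container is mapped to `/mnt/user/qBitTorrent_Downloads` on the host and that qbit is set to save completed torrents under `/data/Complete`; the `to_host_path` helper is made up for illustration.

```python
from pathlib import PurePosixPath


def to_host_path(container_path,
                 container_root="/data",
                 host_root="/mnt/user/qBitTorrent_Downloads"):
    """Translate a path seen inside the container to the matching host path.

    Raises ValueError if the path is not under the mapped container folder,
    because the container cannot see anything outside its mappings.
    """
    relative = PurePosixPath(container_path).relative_to(container_root)
    return str(PurePosixPath(host_root) / relative)
```

With these assumed values, `to_host_path("/data/Complete")` gives `/mnt/user/qBitTorrent_Downloads/Complete`. Pointing the host side at a different share only changes `host_root`; the paths configured inside qbit stay the same.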

-

Hi, I was running delugevpn and now need to run qbittorrentvpn as well due to private tracker requirements. I know I need to change the web UI access port for qBittorrent, so I'm following this documentation (https://github.com/binhex/arch-qbittorrentvpn):

Quote: Due to issues with CSRF and port mapping, should you require to alter the port for the webui you need to change both sides of the -p 8080 switch AND set the WEBUI_PORT variable to the new port.

I decided I needed to delete the "Host Port 3" and "Host Port 4" variables from the docker template, since I can't edit the container port value, and add new Port variables to map host 8070 to container 8070 (replacing 8080) and host 8119 to container 8119 (replacing 8118, privoxy). I also updated WEBUI_PORT from its default of 8080 to 8070.

The container works as expected, but the link in the Unraid dashboard still takes me to X.X.X.X:8080 instead of 8070. Obviously I can manually change the address in my browser, but I'm concerned I might be missing something important if that didn't update. Any thoughts?

-

I’m trying to have prowlarr proxy through delugevpn so that torrent magnet files and nzb files are accessed through the VPN. I have privoxy enabled and put in the correct info on the prowlarr side of things. But when I use the prowlarr console to check IP (curl ifconfig.me) I still get my own public IP from my ISP. If I do the same command through the delugevpn I get a different IP, presumably from PIA endpoint which is my VPN provider. I’ve looked through logs but see no errors related to proxy as far as I can tell.

I’m on the docker tag 2.0.4.dev38_g23a48dd01-3-01 if that makes a difference. I think I had swapped from latest a while ago when PIA had issues and it was recommended as a short term fix and I never swapped back.

These containers are on different docker networks but that shouldn’t have an impact right? Delugevpn is on bridge and prowlarr is on proxynet (I intend to make it accessible through reverse proxy once I get authelia up and running).

I’ve attached a picture of the proxy settings in prowlarr that don’t work.
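One thing worth ruling out: a `curl ifconfig.me` typed into the Prowlarr console would not necessarily go through the proxy configured in the Prowlarr app, because curl only uses a proxy when it is told to. The sketch below compares the public IP seen directly with the one seen through Privoxy. It is a rough check under assumptions: the proxy host is whatever address delugevpn's Privoxy is reachable on, 8118 is the Privoxy port, and the helper names are made up.

```python
import urllib.request


def make_opener(proxy_host=None, proxy_port=8118):
    """Build a URL opener that goes direct, or via an HTTP proxy if a host is given."""
    if proxy_host is None:
        # An empty mapping disables proxies, including any from the environment.
        handler = urllib.request.ProxyHandler({})
    else:
        proxy = f"http://{proxy_host}:{proxy_port}"
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


def public_ip(opener, url="https://ifconfig.me/ip", timeout=10):
    """Fetch the public IP as seen by the remote service through this opener."""
    with opener.open(url, timeout=timeout) as resp:
        return resp.read().decode().strip()
```

Calling `public_ip(make_opener())` and then `public_ip(make_opener("192.168.1.50"))` (placeholder address) should return two different IPs if Privoxy is reachable and tunnelling through the VPN.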

-

On 4/9/2021 at 1:47 AM, scd780 said:

So I'm back to seeing the fluctuation between 3.5 GB and 4 GB. I think there's only been exploring done in world since the last update, no major building. Is it also normal to see the 2% steady CPU usage when no one is online (CPU is 8 core/16 thread if it matters)?

Not really expecting a solution at this stage, just documenting it in case others have similar problems! No ill side effects have been seen so far. Plan to do a lot of building in world over the next week so we'll see how that affects RAM usage and/or world file size.

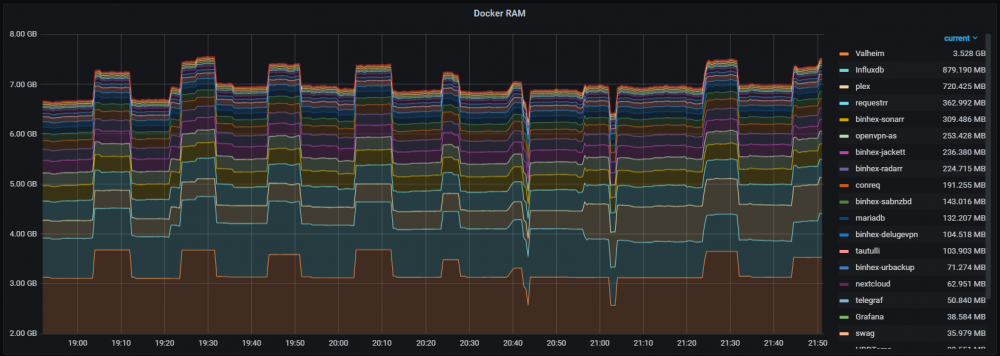

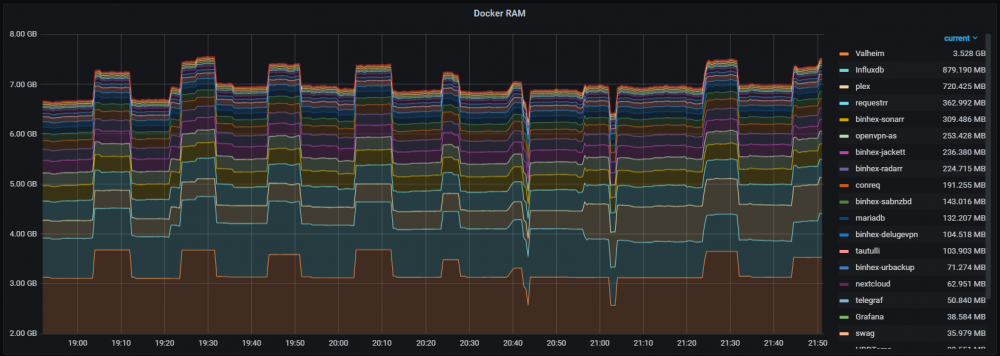

So we finally got a couple people back on the server playing at the same time. There was minimal building but mainly a long lasting boss fight. And weirdly enough the server recorded inverse RAM spikes during the time this was happening (from 20:00 to 2:00 with a small break at 23:00 ish)...

Other than that the RAM usage has continued the same pattern between 3.5 GB and 4 GB. At this point it really just seems like a Valheim server software 'feature' so I probably won't continue with updates here as there's nothing ich777 can do about that! Thanks

-

6 hours ago, der8ertl said:

is there any progress on this?

I have also tried to get it to work in a similar way, but no success..

if anyone has a working proxy config for swag, please share

thanks a lot!

Never got back around to it as life got busy. Still on my mind though and I might find some time to try this again sometime this week. I'll post back here and tag you if I'm able to get it working! @der8ertl

-

Not really a big issue, but does anybody know why my DuckDNS container would be misreporting a use of 9 exabytes of RAM? I'm not getting any errors in the log at the time these spikes happen so I'm not worried it's broken; it's just mildly annoying/funny because it makes my status graph unreadable as it scales the Y axis to account for the spike:

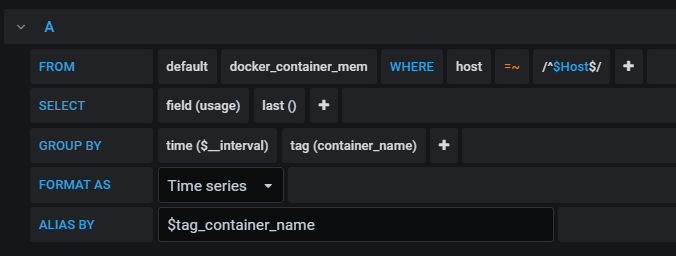

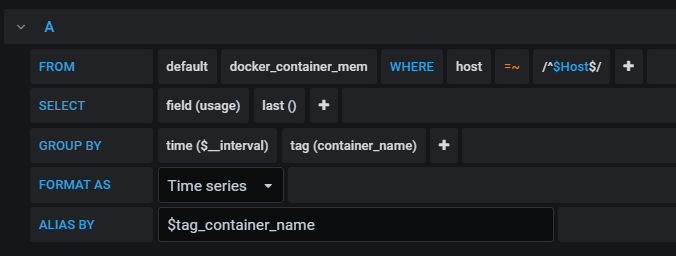

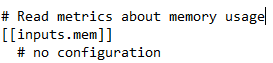
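A guess rather than a diagnosis: 9 exabytes is suspiciously close to 2^63 bytes (about 9.22 EB), the largest value a signed 64-bit counter can hold, so the spikes may be a sentinel or overflowed value rather than a real measurement. The sketch below only does that arithmetic and shows one way such samples could be flagged; `installed_bytes` is an assumed parameter, not something taken from the Telegraf config.

```python
EB = 10**18  # one decimal exabyte, in bytes


def to_exabytes(n_bytes):
    """Convert a byte count to decimal exabytes."""
    return n_bytes / EB


def is_plausible(sample_bytes, installed_bytes):
    """A memory reading above the installed RAM cannot be a real measurement."""
    return 0 <= sample_bytes <= installed_bytes


print(round(to_exabytes(2**63), 2))        # 9.22
print(is_plausible(2**63, 16 * 1024**3))   # False
```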

EDIT: This is the Grafana query used to source the data from Influxdb:

And I believe this is the only portion of the telegraf.conf file that would collect memory usage data:

-

On 4/7/2021 at 1:18 AM, ich777 said:

I think so, keep an eye on this.

Also please report back what happens over time if more things in the world are built and changed when the world size grows, I think the file size of the world is bound to the RAM usage, but as said, could be wrong about that...

So I'm back to seeing the fluctuation between 3.5 GB and 4 GB. I think there's only been exploring done in world since the last update, no major building. Is it also normal to see the 2% steady CPU usage when no one is online (CPU is 8 core/16 thread if it matters)?

Not really expecting a solution at this stage, just documenting it in case others have similar problems! No ill side effects have been seen so far. Plan to do a lot of building in world over the next week so we'll see how that affects RAM usage and/or world file size.

-

9 hours ago, ich777 said:

I think that can be the reason, the bigger the world gets or the more you change in the world and build the more RAM the game uses, at least from my experience.

In my case the world database is now 66MB and the game now uses about 2.5GB of RAM.

Also note that Valheim is in really early alpha state and it can also be that something that's in the world database is causing a memory leak or something like that, but from the restart of your server I see that the RAM usage quickly is going back to 4GB so in your case or for your world this seems pretty normal, but that's just all guesses...

Eventually try to ask on the Valheim Discord/Forums/Steam Community Hub if someone experience this too.

Finally got the chance to check and my world database is 108 MB. I think I'll remove the container image and re-pull it from your repo just in case, but if I see the same behavior I guess it's fine!

I wouldn't be surprised if it's something to do with in-game changes either, we ended up doing a lot of terraforming type activities before we heard that it has a huge impact on performance. Thanks for the help!

UPDATE: Blew away the docker image, re-pulled it, and put my backup files back in place. Still works great and RAM usage is down to 2.5 GB now! So I must've screwed up something before somehow... I'll keep an eye on it but looks to be resolved! Thanks again

UPDATE PART 2: After having it running for a few hours it's gone back to the same behavior as before. Guess it's fine?

-

7 hours ago, ich777 said:

The "Automatically Update Game:" variable has nothing to do with Steam, this is a script that I wrote because some people requested auto updates but as said this has nothing to do with the files that you mentioned.

What logs are you talking about exactly, Steam does nothing, it's the game that calls Steam and nothing you can do about that (a little Background to this: SteamCMD is only called on updates or better speaking at the start of the Container and then the game itself is started and everything that is updated within the mentioned logs is done from the game even if it's Steam related).

Hope that makes sense to you.

Haven't you turned on the Backup function?

How big is your world in terms of filesize?

Thanks for the quick reply! I’m not familiar at all with how this docker is setup to work so sorry if I point to the wrong things, I’ll try and use the folder paths to make it more clear!

I’m talking about all of the logs in the container mapping /serverdata/serverfiles/Steam/logs. There’s about 12 different log files there and at first it seemed like most of them were written to in sync with the RAM usage spikes I saw, though now I think the writes are synced with times I or friends logged onto the world, not 100% sure. So my initial line of troubleshooting may have been quite wrong.

I do have the backup function turned on and it works great! I just said that to mean copy the backup out before nuking everything and starting fresh.

I don’t have access at the moment for the exact file size, but the compressed backup is ~70 MB. I can update later today with uncompressed numbers when I have better access.

Probably hard to help me troubleshoot with just the info above so I’ll do some more poking myself and bring anything back that seems interesting!

If I do end up needing to nuke the container image and re-pull it, any tips? I think I just need to keep the latest backup archive (and Valheim Plus config) and plug that back in after initial setup.

-

Hi, does anybody have an explanation/fix for the RAM usage behavior by the Valheim docker seen below? Can still connect to the Valheim dedicated server from LAN and WAN and play with normal desync issues, just bugs me seeing this on my status page...

Previously, usage stayed at a flat 2.5-3 GB, don't remember exactly. Now it fluctuates a half GB on a very set timing. Possibly related to either an update to the Valheim game or Valheim Plus mod in the last few weeks but unsure. (The dip seen around 20:00 is due to restarting the docker to grab the newest Valheim Plus update.)

The unraid docker log doesn't seem to show anything useful for this. The steam appinfo_log shows this repeating every 5-10 minutes:

    [2021-04-05 20:04:37] RequestAppInfoUpdate: nNumAppIDs 1
    [2021-04-05 20:04:41] RequestAppInfoUpdate: nNumAppIDs 1
    [2021-04-05 20:04:41] RequestAppInfoUpdate: nNumAppIDs 1
    [2021-04-05 20:04:41] Change number 0->11151556, apps: 0/0, packages: 0/0
    [2021-04-05 20:04:41] Requesting 744 apps, 1 packages (meta data, 1024 prev attempts)
    [2021-04-05 20:04:42] Requested 79 app access tokens, 41 received, 38 denied
    [2021-04-05 20:04:42] Updated SHAs with 41 new access tokens
    [2021-04-05 20:04:42] Downloaded 28 apps via HTTP, 803 KB (181 KB compressed)
    [2021-04-05 20:04:42] Requesting 58 apps, 0 packages (full data, 1024 prev attempts)
    [2021-04-05 20:04:42] UpdatesJob: finished OK, apps updated 86 (1065 KB), packages updated 0 (0 KB)
    [2021-04-05 20:04:49] Starting async write /serverdata/serverfiles/Steam/appcache/appinfo.vdf (2697301 bytes)

It makes me think steam is constantly updating something but I have the "Automatically Update Game:" variable set to false. There's other logs from the Steam/logs location as well that seem to follow the same time-repeating pattern, such as store 'userlocal' and it failing to be read. Is this something fixable on my end? I can backup the world files and wipe the docker image to restart if necessary.
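To check the "repeating every 5-10 minutes" observation against the actual timestamps, a small parser along these lines could measure the gap between successive `UpdatesJob: finished` entries. The log format is inferred from the excerpt above, and the function names are made up for illustration.

```python
import re
from datetime import datetime

# One entry = "[YYYY-MM-DD HH:MM:SS] message", ending where the next
# timestamp starts (entries may share a line) or at the end of the text.
ENTRY = re.compile(
    r"\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\] (.*?)"
    r"(?=\s*\[\d{4}-\d{2}-\d{2} |\Z)",
    re.S,
)


def parse_entries(text):
    """Return a list of (timestamp, message) tuples from appinfo_log text."""
    entries = []
    for match in ENTRY.finditer(text):
        stamp = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
        entries.append((stamp, match.group(2).strip()))
    return entries


def gaps_between(entries, needle="UpdatesJob: finished"):
    """Seconds between consecutive entries whose message contains `needle`."""
    times = [stamp for stamp, message in entries if needle in message]
    return [(b - a).total_seconds() for a, b in zip(times, times[1:])]
```

Feeding it the whole log file and comparing the gaps with the timing of the RAM swings on the graph would show whether the two really line up.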

Can reply with more log files if that's helpful!

-

Hi all,

Wondering if anyone else has experienced this issue and/or knows what may be causing it!

I have been running pihole in a docker on Unraid for about a year now. Recently I've found that the speed of wifi on my iPhone 12 Pro Max is greatly reduced when loading items from many places (e.g. loading the Apollo feed is extremely slow). However I still get close to full speed when testing through fast.com, which has been very confusing. I'm on the latest iOS, have made sure I'm on the 5GHz band and I am definitely using pihole as my DNS. If I go into my phone settings and change DNS to, say, 1.1.1.1, then everything loads extremely fast again and it works perfectly. I don't see these issues on anything wired to the router.

So I think I've narrowed it down to a DNS issue, but I'm not sure if it's on the device or pihole side... Has anyone seen anything similar or have ideas? Might just nuke pihole and reinstall or use adguard home if I can't get this working..

Thanks

-

19 hours ago, snowmirage said:

Thanks for the advice. I'll try starting again from scratch. I know around the time I was first trying this I was also setting up timemachine, and some other stuff, and ended up filling my cache drive. That was where I had appdata and all my VMs.... they were not happy about that... I've since moved all my appdata and vms to a 2TB nvme ssd, but its possible some left over config either server or client side is still messing stuff up. So I'll nuke it all and start from scratch.

I also found a docker container in CA that will test each drives read and writes. I'll turn off everything else and give that a try, as well as just try to move some files to the array via a windows share. That should give me some comparisons between urbackup speeds and what I can verify the system can do. My end up pointing out some bigger system problem... though I can't imagine what atm.

Ah I did the same thing recently and maxed out my cache drive by accident.... really broke some of my dockers and I ended up having to nuke my docker image and reinstall. This was actually super easy though, because Community Apps saves your setup variable choices and the appdata config stuff is unaffected in the share. Only real *complicated* thing I had to do was remake a custom docker network type for my proxy. That may help you as well! Good luck!

-

1 hour ago, snowmirage said:

For those that have completed backups with this from a Windows Client to unraid

How long did it take?

What was the docker app pointing at to store the backups? Unassigned device / Unraid Cache / Unraid Array

I have this setup and it appears to be working but my 500GB SSD on my windows host has been running for nearly 24hrs now and its still listed in the webUI as "indexing"

I'm seeing writes to the array in the <1MB/s range

I have it set to a share that doesn't use the cache.

I have 2 Windows 10 clients hardwired on local gigabit LAN. Logs on one of them are showing an average speed of about 170 MBits/s during full image backups. This works out to about an hour and 15 min for 100 GBs of data. I'm writing straight to the array as well and I think it usually goes to my 5400 rpm drives in the array since they're the least full. Grafana shows my array disks getting hit with about 15MB/s from urbackup during backups. I also haven't had turbo write mode turned on for Unraid until yesterday so I might start to see higher speeds. Oh and I use the compressed format offered by urbackup which probably makes a difference! Seems this is only a single threaded workflow so speeds may vary depending on your CPU capabilities as well (I'm running a Ryzen 7 1700 Stock).
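For anyone wanting to compare their own numbers, the arithmetic behind that estimate is sketched below. It assumes decimal units (1 GB = 1000 MB) and a steady transfer rate; the function names are just for illustration.

```python
def transfer_minutes(size_gb, rate_mbit_s):
    """Minutes needed to move size_gb (decimal GB) at rate_mbit_s megabits/s."""
    megabits = size_gb * 1000 * 8  # GB -> MB -> megabits
    return megabits / rate_mbit_s / 60


def mbit_to_mbyte(rate_mbit_s):
    """Convert megabits per second to megabytes per second."""
    return rate_mbit_s / 8


print(round(transfer_minutes(100, 170)))  # 78 (minutes)
print(mbit_to_mbyte(170))                 # 21.25 (MB/s)
```

So 170 Mbit/s is roughly 21 MB/s on the wire, and 100 GB takes a little under 80 minutes. The lower 15 MB/s seen on the array disks may simply reflect the compressed format, though that is a guess.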

Here are my docker settings in unraid, I had a couple other things I wanted to keep in my Backups share so that's why /media is directed to a subfolder:

Just a couple thoughts you may want to check:

- I have my minimum free space set at 300GB for my Backups share, what is yours at? This might have something to do with it but I doubt it since you said it's stuck on indexing...

- Have you gone through the Client settings in the urbackup UI?

  - This one was big for me, I could not get it to back anything up for awhile because of default directories, excluded files, some windows file paths it didn't like that were hidden deep in AppData, among other things I think...

  - Would recommend using the documentation (link in WebUI) to populate all of your settings appropriately from the server side and then uncheck the option allowing clients to change settings. When I allowed clients to change settings and adjusted them client-side, it never seemed to want to sync up!

I'd probably recommend removing the docker image for urbackup completely, cleaning out its spot on your array, uninstalling the client-side software and starting over with a clean slate! Had to do this when I added my second client since I screwed it up somehow. I can share pictures of my server side settings if you'd like as well.

*Disclaimer: I am definitely not an expert on this, just happen to have it running smoothly on my setup so results may vary lol

-

On 10/1/2020 at 9:32 PM, Dent_ said:

Did you ever figure out a solution to this?

Hi Dent_,

Nope didn't get back to troubleshooting as it wasn't a huge concern at the time, just something I wanted to enable to backup my parents' devices. At this point my best guess is something to do with DNS and the proxy? I have a pihole container that is my DNS but not DHCP and it doesn't seem to recognize cnames no matter what I do (newbie so I hope I'm using the term correctly. Example of this behavior would be that I have to use the direct IP of my unRAID server instead of the given name to access the webGUI).

I seem to remember thinking that I may have set the wrong ports, or not all of the necessary ones, in the proxy config file. So that may be something to look into if you're troubleshooting yourself! Let me know if you figure it out.

If you do go this route, don't forget to set a password on your urbackup installation!

-

Can anyone help me to correctly configure urbackup (and other involved dockers) such that a remote computer can be backed up to my unraid array via internet?

Here's what I thought would work and tried so far:

- Used duckdns to create a subdomain for this use case (**urbackup.duckdns.org)

- Added this to my reverse proxy letsencrypt docker config under the subdomain variable (this letsencrypt reverse proxy setup already works for other services of mine)

- (THIS IS WHERE I THINK I GOT IT WRONG) Tried to copy other .conf file examples to create one for urbackup [copied at bottom]

- Modified the UrBackup settings on the admin page under General -> Internet

  - Added **urbackup.duckdns.org as the internet server name, added the LAN ip and port of my letsencrypt docker in the "Connect via proxy" section

  - Checked the boxes to allow backups over internet (including enabling internet mode at top)

- Restarted both docker containers

I assumed this didn't work because the computer I had backing up over LAN could no longer see the server, and following the **urbackup.duckdns.org URL got no response. I realized this was because the letsencrypt and urbackup containers were on different network types, so I changed urbackup's settings to put it on the right network and added 3 new variables to forward ports so I could still access the WebUI locally (apparently the ports only get mapped automatically if the network type is host?). I still could not reach it from the duckdns URL.

At this point my local computer still couldn't find the local urbackup server instance so I reverted all changes and here I am.

Is this something easy where I used the wrong port in the proxy config file below? Do I have to change local client settings to backup over internet as well? I'm quite new to unRaid and networking concepts so I probably made a few mistakes...

No idea where to go from here but I would appreciate any help! Thanks!

    # make sure that your dns has a cname set for urbackup and that your urbackup container is not using a base url
    server {
        listen 443 ssl;
        listen [::]:443 ssl;
        server_name **urbackup.*;
        include /config/nginx/ssl.conf;
        client_max_body_size 0;
        # enable for ldap auth, fill in ldap details in ldap.conf
        #include /config/nginx/ldap.conf;
        location / {
            include /config/nginx/proxy.conf;
            resolver 127.0.0.11 valid=30s;
            set $upstream_app binhex-urbackup;
            set $upstream_port 55415;
            set $upstream_proto http;
            proxy_pass $upstream_proto://$upstream_app:$upstream_port;
        }
    }

-

Hi,

I have been running unraid with no issues for ~7 months now. I use a Ryzen 7 1700 with 2x8GB sticks of RAM (on QVL list for the mobo and XMP not enabled because it has stability issues). This morning around 8:30am Telegraf data shown in Grafana indicates that 8GBs of RAM was "lost". Unraid's dashboard still indicates 16GBs with usage around 37%.

Has anyone seen this before?

I would troubleshoot by restarting but I currently have a preclear operation running on 2 new disks that won't finish for another day or so. I don't know if I can pause and resume that after a restart. Interestingly, 1 minute after the RAM disappears from telegraf's database, I get the following error on my preclear operation:

Jul 11 08:29:13 TheVault preclear_disk_VDKUMUDK[31360]: blockdev: ioctl error on BLKRRPART: Device or resource busy

I'm not sure if it's related. Just looking for someone to ease my mind... Hoping unraid doesn't crash with an out of memory error if it tries to use more than 8GBs and that RAM stick ends up being "gone" somehow. I'm fairly new to linux systems but happy to mess around in settings and terminal if log files would help!

Thanks

-

[Plugin] Plex Streams (in Plugin Support)

Edited by scd780: additional info

Not sure where/how to report this error but there seems to be an issue with how the plugin represents music streams. It almost seems like it's storing the information as an array and somehow the music streams add an empty entry that shifts all the content over by one column. Combine that with finished music streams not being cleared when playback reaches the next song and you get this behavior in the UI (song names highlighted):

Happy to provide any extra info if it would help with debugging! Other than this the plugin has been great, appreciate it!

EDIT: Additional info: everything is fixed on a page reload and I'm using the Brave browser, Unraid 6.12.8, and the latest plugin version 2023.11.27