
Posts posted by cappapp

-

13 minutes ago, dlandon said:

Are you using NFSv3 or NFSv4?

How do I check?

I'm running Unraid version 6.9.2.
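For anyone else asking the same question: on most Linux hosts the negotiated NFS version shows up as a `vers=` field in the mount options. Below is a minimal sketch that pulls it out of a `/proc/mounts`-style listing, assuming the share is already mounted. `nfs_versions` is a made-up helper name, not something the Unassigned Devices plugin provides.

```shell
#!/bin/sh
# Print "<mount point> <NFS version>" for every NFS mount found in a
# /proc/mounts-style listing read from stdin.
# nfs_versions is a hypothetical helper name used only for illustration.
nfs_versions() {
    awk '$3 ~ /^nfs4?$/ {
        ver = "unknown"
        n = split($4, opts, ",")          # field 4 holds the mount options
        for (i = 1; i <= n; i++)
            if (opts[i] ~ /^vers=/) ver = substr(opts[i], 6)
        print $2, ver
    }'
}

# Usage on a live system:
#   nfs_versions < /proc/mounts
```

Where it is installed, `nfsstat -m` reports the same mount options.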

-

Hi All,

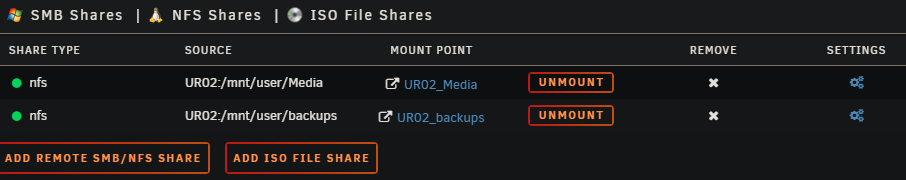

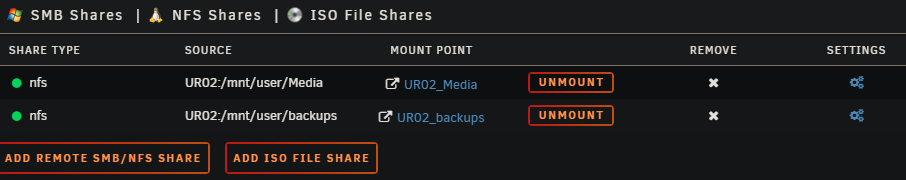

Recently I mapped NFS shares from another Unraid server to use in some dockers on this server. That all seems to be working in the dockers the way I want.

However, I recently tried to rsync some files from Server 1 to Server 2 using this mapped share, and it didn't like that at all. CPU usage slowly climbed to 100% and never came back down, to the point where the Unraid UI would crash or become extremely unresponsive. These files were old VMware VMs, so some of the individual files were over 1 TB. Not sure if that makes a difference?

Anyway, they are transferring just fine now in Windows using UNC paths. After this one is done, I'll try another one in rsync and grab logs. I was seeing red errors yesterday but forgot to save them. The server has a Threadripper with 24 cores and 32 GB of RAM. Most of the time it sits at 20% CPU and 40% RAM, but when I run rsync against those mapped shares it goes crazy. I think I also tried transferring the same files in Krusader using the mapped shares, and Krusader became unresponsive too and had to be killed.

So it crashed transferring like this:

rsync -rtvv /mnt/user/backups/EX1 /mnt/remotes/UR02_backups/EX1 --log-file=/mnt/user/Other/rsynclog.log --progress

I think some of the small files worked.
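For the retest, a more cautious run might be worth trying. This is only a sketch: `--inplace`, `--bwlimit` and `--dry-run` are standard rsync options, but whether they help with the CPU climb is a guess, and the 50000 KiB/s limit is an arbitrary placeholder. The trailing slash on the source is deliberate, because without it rsync creates an EX1 folder inside the destination EX1. `sync_ex1` is a made-up wrapper name.

```shell
#!/bin/sh
# Hypothetical wrapper around the rsync run above. The paths are the ones
# from this post; the extra flags are untested suggestions.
SRC="/mnt/user/backups/EX1/"          # trailing slash: copy the contents of EX1
DST="/mnt/remotes/UR02_backups/EX1/"
LOG="/mnt/user/Other/rsynclog.log"

sync_ex1() {
    # Extra options (for example --dry-run) are passed through as arguments.
    # RSYNC can be overridden (e.g. RSYNC=echo) to print instead of run.
    "${RSYNC:-rsync}" -rtv --inplace --bwlimit=50000 \
        --log-file="$LOG" --progress "$@" "$SRC" "$DST"
}

# Usage: preview first, then run for real.
#   sync_ex1 --dry-run
#   sync_ex1
```

Running it with `--dry-run` first shows what would be copied without touching the remote share.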

Currently transferring like this (Windows Explorer):

\\192.168.0.5\backups\EX1

\\192.168.0.8\backups\EX1

Maybe I have a config wrong somewhere, any ideas on what I should look at first?

Dockers seem OK using them.

Cheers

-

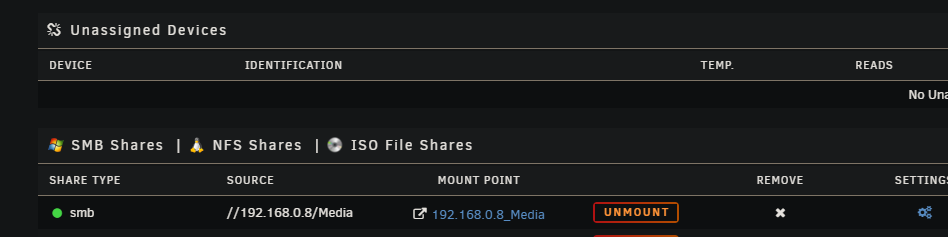

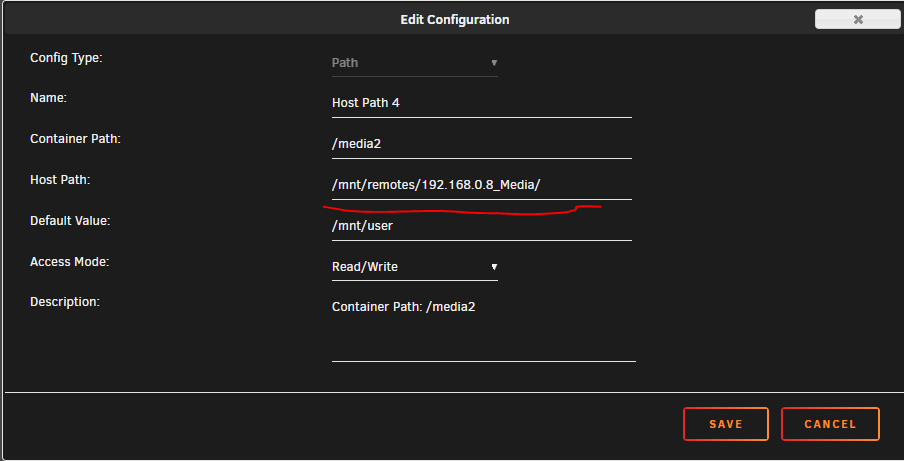

Has anyone had any experience using an Auto mapped share from a 2nd UnRaid server and passing it through to Emby or other dockers?

Emby can see and play media from there, but it can't update the metadata in that folder.

I'm getting permission errors:

*** Error Report ***

Version: 4.6.7.0

Command line: /system/EmbyServer.dll -programdata /config -ffdetect /bin/ffdetect -ffmpeg /bin/ffmpeg -ffprobe /bin/ffprobe -restartexitcode 3

Operating system: Linux version 5.10.28-Unraid (root@Develop) (gcc (GCC) 9.3.0, GNU ld version 2.33.1-slack15) #1 SMP Wed Apr 7 08:23:18 PDT 2021

Framework: .NET Core 3.1.21

OS/Process: x64/x64

Runtime: system/System.Private.CoreLib.dll

Processor count: 24

Data path: /config

Application path: /system

System.UnauthorizedAccessException: System.UnauthorizedAccessException: Access to the path '/mnt/remotes/192.168.0.8_Media/TV Shows/Buffy The Vampire Slayer/tvshow.nfo' is denied.

---> System.IO.IOException: Permission denied

--- End of inner exception stack trace ---

But the permissions look OK?
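One way to narrow this down is to run the same write test from the host and from inside the container and compare the results. A minimal sketch follows. `can_write` is a made-up helper name, and the path in the usage line is the folder from the error above.

```shell
#!/bin/sh
# Report whether the current user can create a file in the given directory.
# can_write is a hypothetical helper, not part of Emby or Unraid.
can_write() {
    dir="$1"
    probe="$dir/.write_test.$$"
    # Try to create an empty probe file; hide the shell's own error message.
    if ( : > "$probe" ) 2>/dev/null; then
        rm -f "$probe"
        echo "writable as uid $(id -u): $dir"
    else
        echo "NOT writable as uid $(id -u): $dir"
        return 1
    fi
}

# Usage:
#   can_write "/mnt/remotes/192.168.0.8_Media/TV Shows/Buffy The Vampire Slayer"
```

If the host can write but the container cannot, the uid printed in each case, compared against `ls -ln` on the folder, shows which account is being refused.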

-

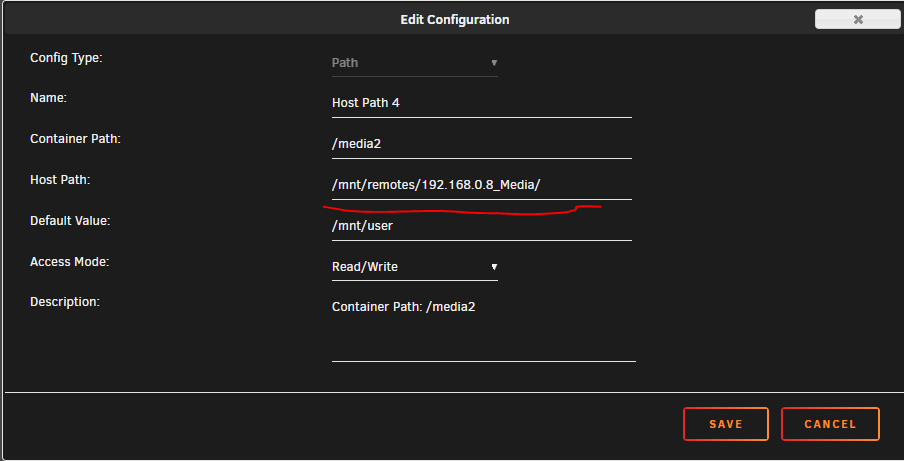

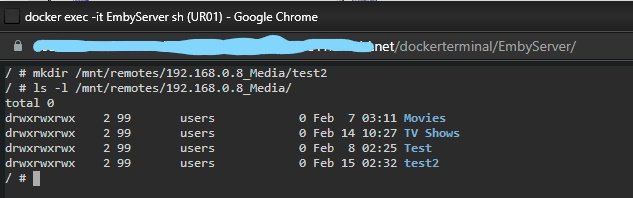

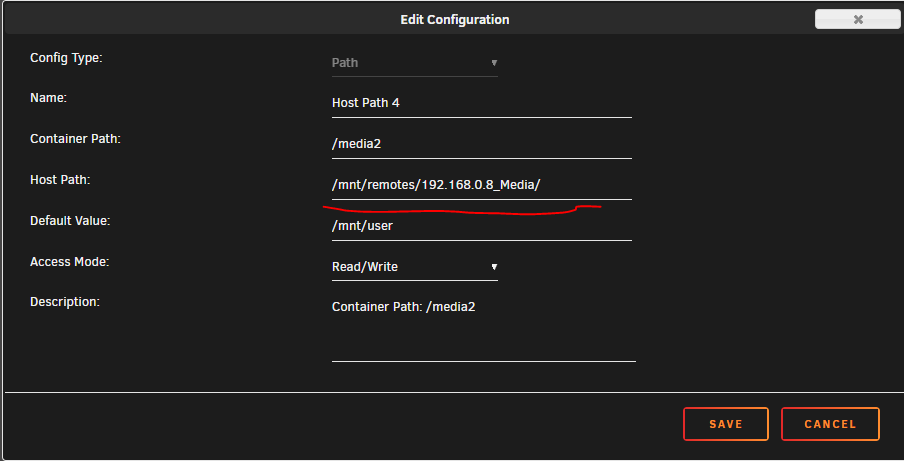

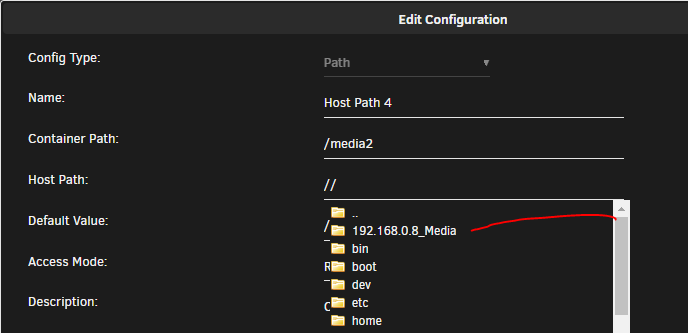

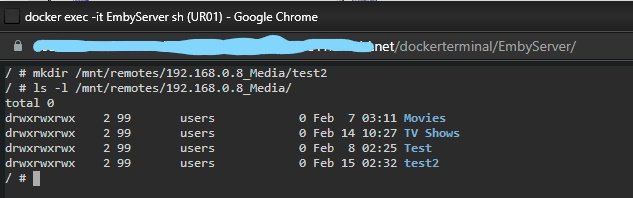

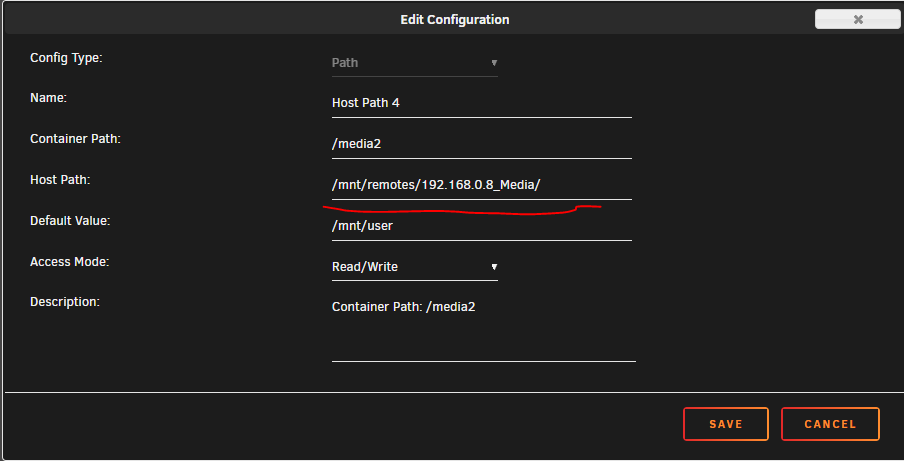

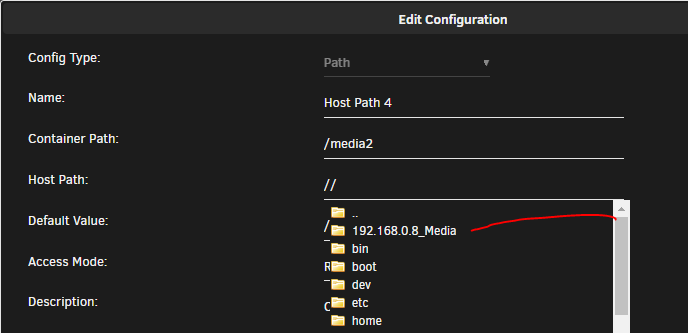

I just figured it out.

In the Docker container, if I use the full path rather than the 'shortcut path', it works the way I want.

The shortcut was listed back in the parent directory, so I had just chosen that by default.

Thanks for helping me check out more things.

Cheers

-

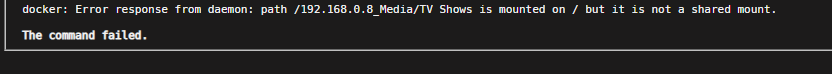

7 hours ago, trurl said:

Access Mode

It's currently read/write.

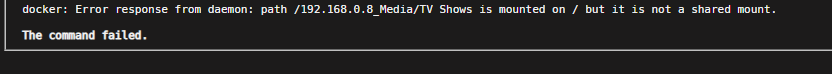

I tried 'read/write shared' and got this.

Then tried 'read/write slave'. The docker updated OK, but it fails to start.

The other share (which is local to that unraid server, so it's not the same I guess) is set to just read/write. But maybe it's not supposed to be the same when it's a share from a separate unraid server.

Edit:

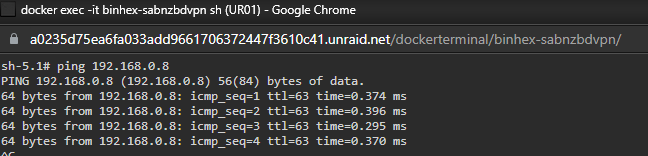

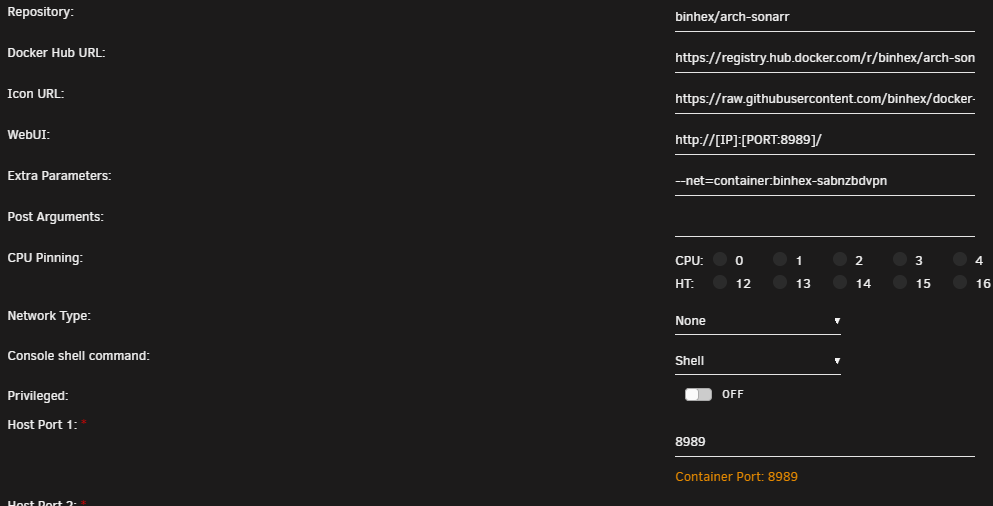

I should add... maybe this adds an extra level of complication to the setup as well, but the network for this docker runs through another docker, like this:

Maybe this will be my limitation, as I don't really want to change that.

Edit2:

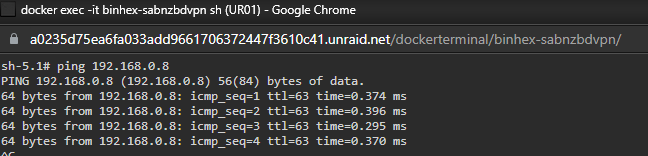

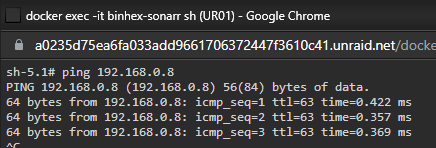

I can ping it from that Docker though:

hmmmm.

-

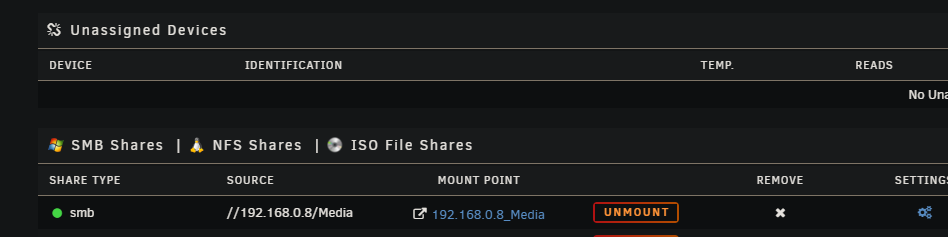

Hi,

I'm trying to make an Auto Mount share accessible to my dockers from a 2nd unraid server.

It seems to successfully auto mount and I can browse files from the 2nd unraid server on the main unraid server file browser.

But when I added the path to my docker, only the top-level path seems to be visible. I can't see any of the files inside when I try to use the path in the docker.

Any ideas or settings I need to check/double check?

-

Yep cool. Will do.

Without doing it this way, technically I should be able to use the UNC path directly in the docker, right?

It's a completely separate server. Even when I set the share to Public, the docker on the main server doesn't see files.

I seem to have a similar issue doing it that way too.

-

18 hours ago, itimpi said:

Why? level 3 is less restrictive than level 2.

Oops, yep... The other way. Thanks.

-

Ah yep, you're right. I have mine set to Top 2 levels. I guess I should go to Top 3 and just monitor to see if that helps. If not, then maybe consider Any Level.

Cheers

-

Hi All,

I've been using Unraid for quite some time. My array is 30 disks. The server is set up as a media center and everything is automated with the ARR dockers etc. Because I have 30 disks, I have lots of TBs, but I'm starting to get to the end of my free space. Sort of. I actually have about 20 TB free, but I keep running into the issue of a disk getting full. Thus far I have been moving data manually to free up space on that disk, and it's all good until it happens again. This would be because high water is preferring some files to stay together. But it seems like quite a lot of wasted space to use high water with a big array like this. Would there be a big downside to switching to fill-up or most-free this late in the game? Is there anything else I should consider?

Thanks

-

Hi,

I'm trying to make an Auto Mount share accessible to my dockers from a 2nd unraid server.

It seems to successfully auto mount and I can browse files from the 2nd unraid server on the main unraid server file browser.

But when I added the path to my docker, only the top-level path seems to be visible. I can't see any of the files inside when I try to use the path in the docker.

Any ideas?

-

8 minutes ago, binhex said:

you can put multiples in, simply comma separate the 'remote' names, e.g.:-

RCLONE_REMOTE_NAME=onedrive-business-encrypt,gsuite-encrypt

Awesome, thanks.
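For what it's worth, a comma-separated value like that is easy to walk through in a shell script. This is a rough sketch of the idea only. It is not binhex's actual code, and `split_remotes` is a made-up name.

```shell
#!/bin/sh
# Print one line per remote name found in a comma-separated list.
# split_remotes is a hypothetical helper, shown only to illustrate the format.
split_remotes() {
    printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r remote; do
        # Skip empty entries, e.g. from a trailing comma.
        if [ -n "$remote" ]; then
            echo "remote: $remote"
        fi
    done
}

# Usage:
#   split_remotes "$RCLONE_REMOTE_NAME"
```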

That'll make it interesting if you do update to copy the reverse way.

Good luck.

-

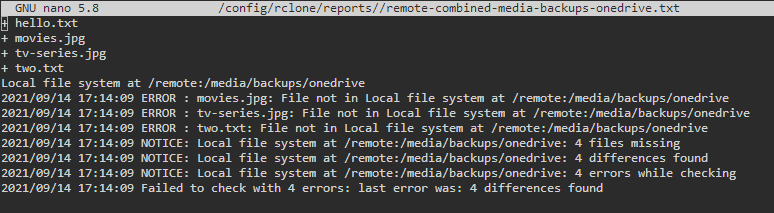

1 hour ago, binhex said:

RCLONE_MEDIA_SHARES is configured correctly, but RCLONE_REMOTE_NAME is not, this should be the name of the remote when you ran through the rclone wizard to create the rclone configuration file, if you cant remember what you set it to then open your rclone config file with a text editor and look at the value in square brackets, that is the remote name.

Oh, the name I set specifically for OneDrive. OK, that worked. I can see the files on OneDrive now
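The lookup binhex describes can also be scripted. Here is a small sketch that prints every name in square brackets from an rclone config file. `list_remotes` is a made-up name, and the config path depends on how the container is set up, so it is passed in as an argument.

```shell
#!/bin/sh
# Print the remote names (the [section] headers) from an rclone config file.
# list_remotes is a hypothetical helper; pass the path to your own config.
list_remotes() {
    sed -n 's/^\[\([^]]*\)\].*$/\1/p' "$1"
}

# Usage:
#   list_remotes /path/to/rclone.conf
```

Where the rclone binary is available, `rclone listremotes` prints the same names, each followed by a colon.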

Thanks.

So if I want to repeat the process for copying the same files to Google Drive too, does that mean I need another whole Docker?

Or can I put multiple entries in this field, for the other configs that are set up too?

Cheers

-

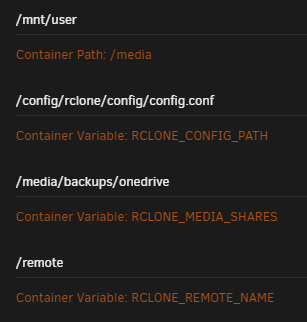

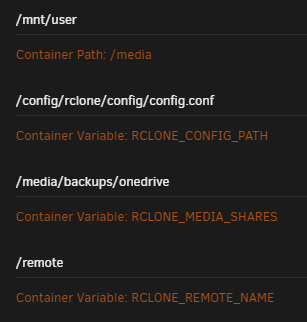

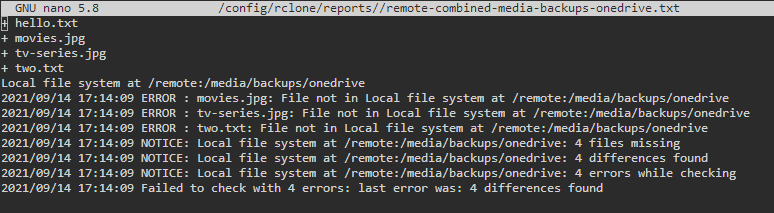

Do I have to choose a whole share or can I choose a folder within a share?

In my backups share, I have a folder called onedrive, and I want all files/folders within that to go to my OneDrive in a folder called remote.

Have I configured that right?

-

On 9/6/2021 at 9:52 PM, binhex said:

i get it, hmm interesting, so i think rclone can do this but i would need to do some additional coding, probably another env var something like RCLONE_DIRECTION which would have values of 'localtoremote', 'remotetolocal', or 'both', then have additional code to detect the env var and perform the required sync operation, it would take a bit of testing but should be possible i think, no promises but i might take a look in the next few days.

Is this option still coming? I work out of OneDrive on multiple PCs and would like to back up my OneDrive to Unraid, as I don't sync all files on the PCs I'm working on, to save space.
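To make the request concrete, here is how I picture the three values mapping onto rclone commands. This is purely illustrative: only the three direction values come from the quoted post. `plan_sync`, its arguments, and the use of `copy` rather than `sync` for 'both' are my own assumptions, and the function only prints the commands rather than running them.

```shell
#!/bin/sh
# Print the rclone command(s) a given direction could map to.
# plan_sync and its arguments are hypothetical; nothing here is binhex's code.
plan_sync() {
    direction="$1"
    local_dir="$2"
    remote="$3"
    case "$direction" in
        localtoremote) echo "rclone sync $local_dir $remote" ;;
        remotetolocal) echo "rclone sync $remote $local_dir" ;;
        both)
            # Assumption: copy (not sync) each way so neither side deletes files.
            echo "rclone copy $local_dir $remote"
            echo "rclone copy $remote $local_dir"
            ;;
        *)
            echo "unknown direction: $direction" >&2
            return 1
            ;;
    esac
}

# Usage (paths and remote name are placeholders):
#   plan_sync remotetolocal /mnt/user/backups/onedrive onedrive:remote
```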

Cheers

-

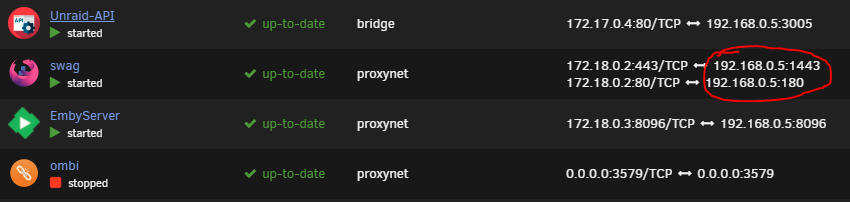

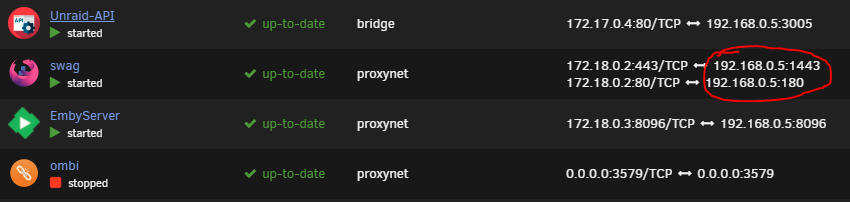

I have a second NIC in my server on the same subnet. I'm not trying to separate access to anything per se; I was just trying to offload some traffic to another NIC for external Emby users. My firewall rule points to the 2nd NIC.

Emby currently goes through Swag and all is working, but when I monitor traffic with Grafana, it looks like people are accessing/browsing via the second NIC, yet when Emby starts streaming out, all the traffic seems to go through the 1st NIC.

Can I change the mapped host IP on Swag? Maybe that would force all the streaming traffic for external users through the second NIC.

Or should I set up a second bridge network or something?

-

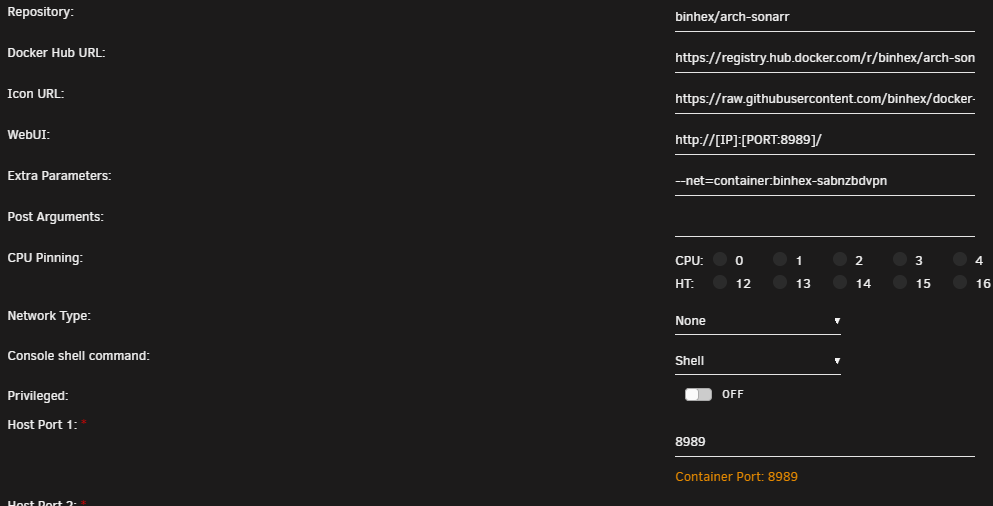

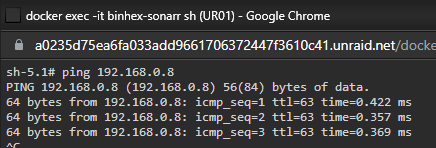

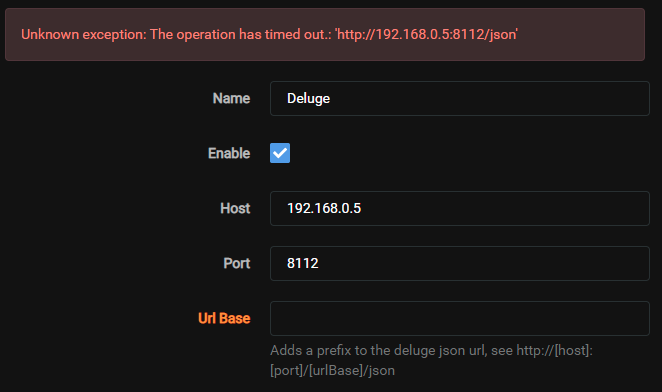

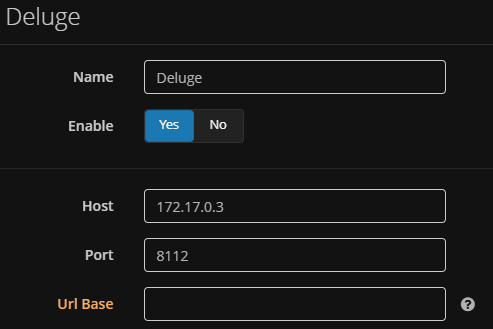

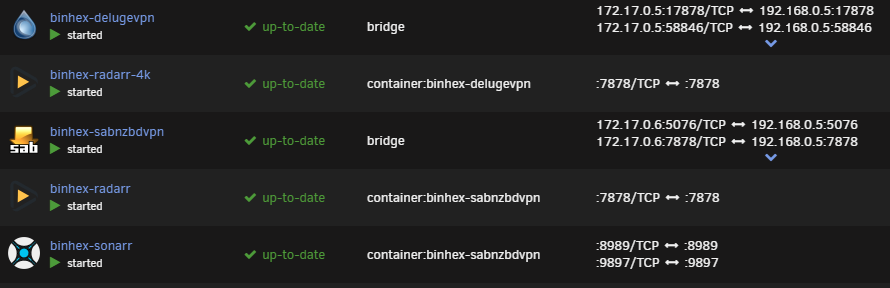

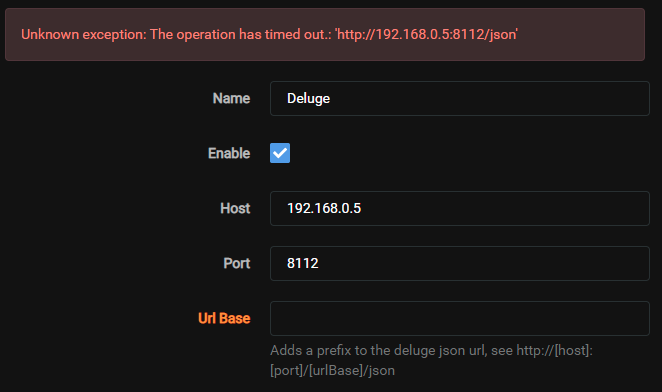

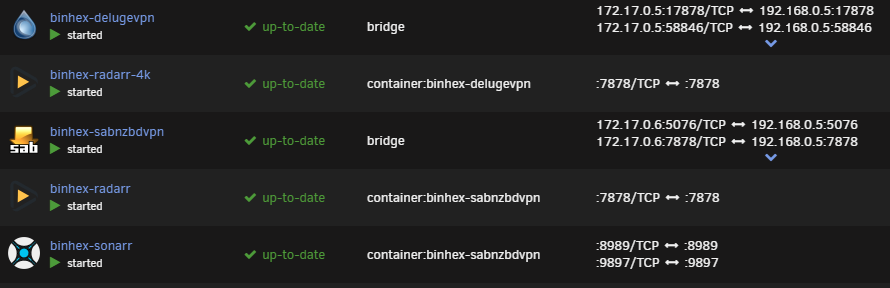

OK, one last thing. Is there something special I need to do to make Radarr/Sonarr talk to binhex-delugevpn when Radarr/Sonarr are going through binhex-sabnzbdvpn?

I keep getting:

Other than that, all containers are running and I can connect to them all. All indexers are working.

I just can't connect to Deluge in Radarr/Sonarr.

Edit: And connecting to SABNZ is all good too.

Edit2: I guess I could download the normal Deluge docker and route that through SABNZ. Then I could just use localhost like I did for Sonarr/Radarr connecting to SABNZ.

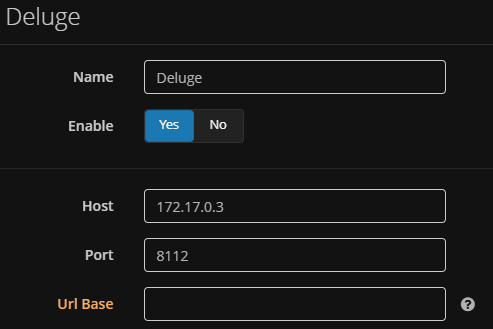

Edit3: I tried various combos for Host; Server IP, localhost. But oddly putting in the Docker IP works;

-


Yep. Cool. Thanks guys.

So booting to legacy is better if possible for utilizing the nVidia driver? Which in turn may help with transcode downsizing 4k to 1080p?

I generally don't do that anyway. But I'm interested in testing. I'll have a go later today.

Cheers

-

9 hours ago, ich777 said:

In general this is not different than before with the old Nvidia build.

Can you give me a little more information, on my System with a GTX1060 3GB with Emby/Jellyfin everything works flawlessly.

Are you booting with Legacy or UEFI? If you are booting with UEFI please try to boot with Legacy mode.

UEFI

Can I set it to boot in legacy mode from the Unraid interface, so I can do it remotely? Or will I need to be in front of it and do it in the BIOS?

-

I updated to 6.9 stable today. Previously I was using the old nvidia plugin on 6.8.3 for Emby HW transcoding and all was good.

Emby wouldn't start because the old plugin and settings were still there. I deleted it and cleared all the settings, and got it working without it.

Then I downloaded the new Nvidia plugin and set up all the settings again. It works... but it doesn't seem as good as before?

Maybe my memory is a little fuzzy, but I usually test by choosing a 4K movie and streaming it to a 1080p device, in this case Emby in Chrome. It starts and I can see hardware transcoding going, but before long the stream freezes. After a couple of reboots, looking around for other options, and testing other 4K movies, it still freezes. I tried a different device, a Samsung Note 10. It played a little longer but still froze.

When I change the settings in Emby to turn off HW transcoding, my CPU can play the whole 4K movie to a 1080p device with no problems.

Ultimately this shouldn't be a real issue for me, as I keep 4K in a separate library and don't share it out to my users, and I will only play 4K movies on a 4K device locally.

Anyway, I was just wondering if anyone else is having a similar issue?

Micro-Star International Co., Ltd. X399 SLI PLUS

AMD Ryzen Threadripper 2920X 12-Core @ 4150 MHz

32GB DDR4

NVIDIA GeForce GTX 1660

-

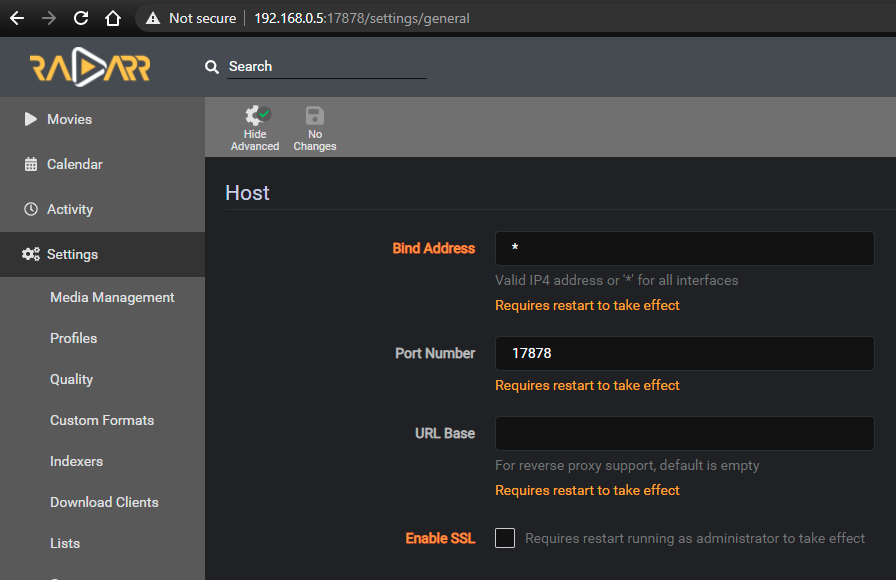

What are people doing when running multiple instances of a docker?

I have 2 Radarrs. One for 4k, to automatically keep the library separate. But the only way I could get it running through Privoxy was to run Radarr through SABNZ and Radarr-4k through Deluge.

After fixing this up as per Q24 to Q26 in the FAQ, all is working except that the Radarr going through SABNZ can't see Deluge and the Radarr-4k going through Deluge can't see SABNZ.

The reason they were set up going through different Privoxys is that even when I assigned a different port number for Radarr-4k, it still didn't want to load. Maybe I need to go back and revisit that?

But on my Docker page it looks like this;

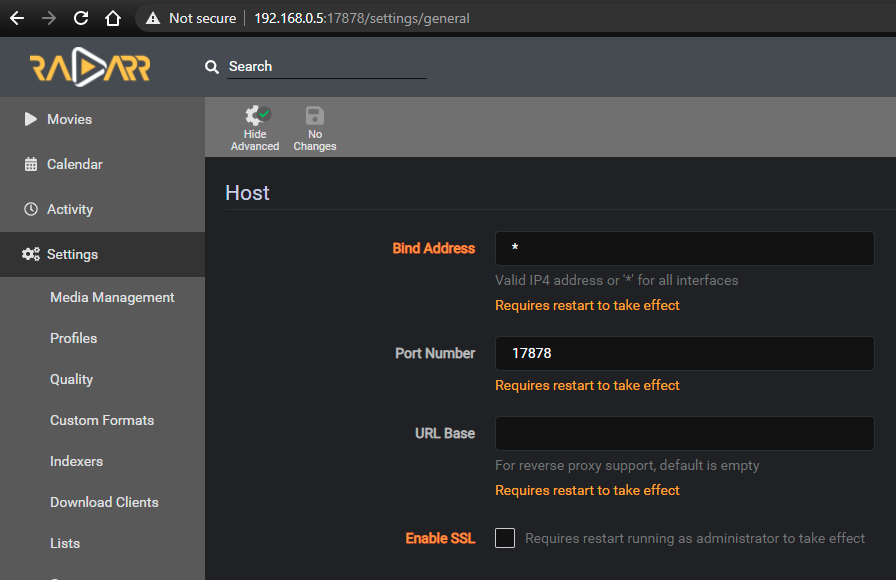

I'm not sure why port mapping still says 7878 and not 17878 as I have set in the docker and in Radarr-4k.

Hopefully others who have multiple docker instances too can help.

Clearly I'm not doing something properly.

Cheers

-

Unassigned Devices - Managing Disk Drives and Remote Shares Outside of The Unraid Array (in Plugin Support)

Ah OK. That makes sense. No problem. I can work around this for now and revisit it later.

Funny enough, because the 2nd server is not my primary, it's already 6.10. I probably should keep them at the same version moving forward.

Thanks for the update.