Shantarius

-

Posts

36 -

Joined

-

Last visited

Posts posted by Shantarius

-

-

Hello,

In my syslog I have more than 15,000 entries like this:

Oct 17 05:52:51 Avalon bunker: error: BLAKE3 hash key mismatch, /mnt/disk1/Backup_zpool/zpool/nextcloud_data/20231007_031906/nextcloud_data/christian/files/Obsidian_Vault/.git/objects/ba/edc24a7db2d2f8cc372d12a6cfaa5a7b3b9206 is corrupted

On 10 October the same happened for disk3. What happened here, and how can I fix it?

Thanks!
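To see how the mismatches are spread across the disks, the syslog entries can be tallied per disk. This is only a rough sketch: the function name `count_mismatches_per_disk` is made up for illustration, and it assumes the message format shown above and array disks mounted as /mnt/diskN.

```shell
#!/bin/bash
# Hypothetical helper: tally "hash key mismatch" syslog entries per array disk.
# Reads syslog text on stdin and prints "<disk path> <count>" for each disk.
count_mismatches_per_disk() {
  grep 'hash key mismatch' \
    | grep -o '/mnt/disk[0-9][0-9]*' \
    | sort \
    | uniq -c \
    | awk '{ print $2, $1 }'
}

# Usage (the syslog path is an assumption; adjust it to your system):
#   count_mismatches_per_disk < /var/log/syslog
```

If almost all entries point at one disk or one backup directory, that narrows down where to look first.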

-

On 9/30/2023 at 6:38 PM, ich777 said:

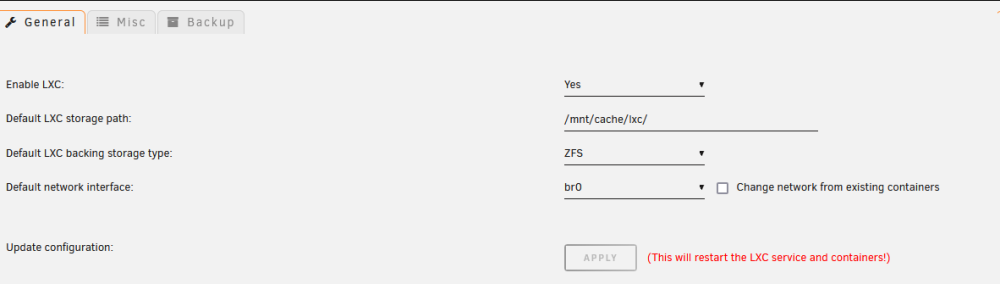

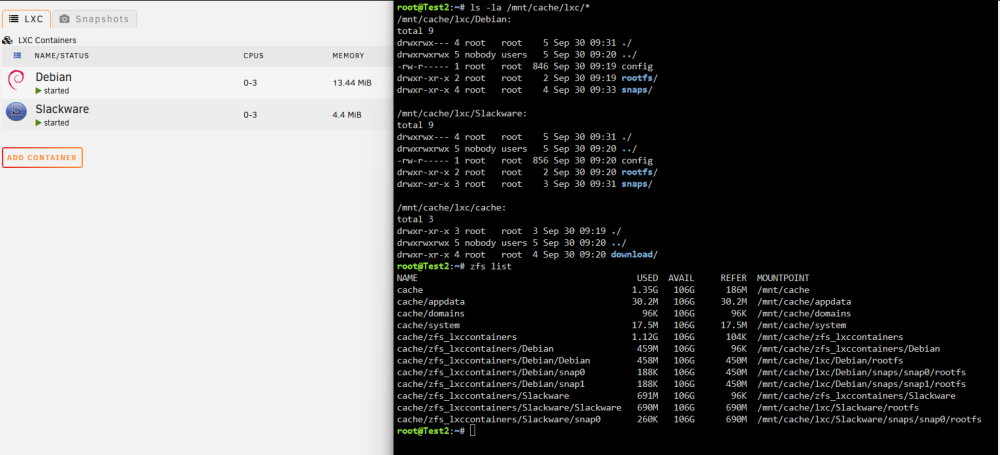

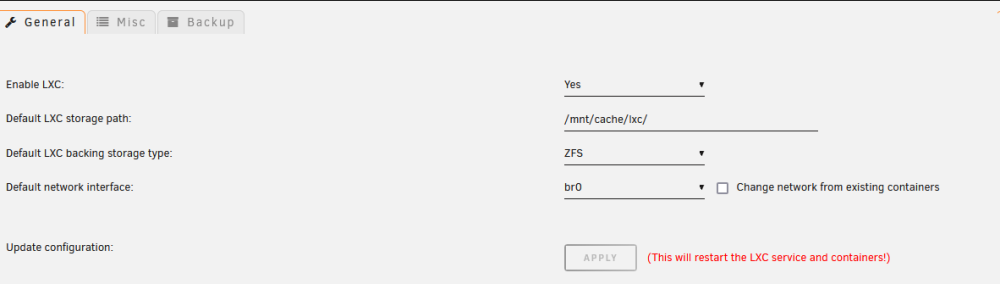

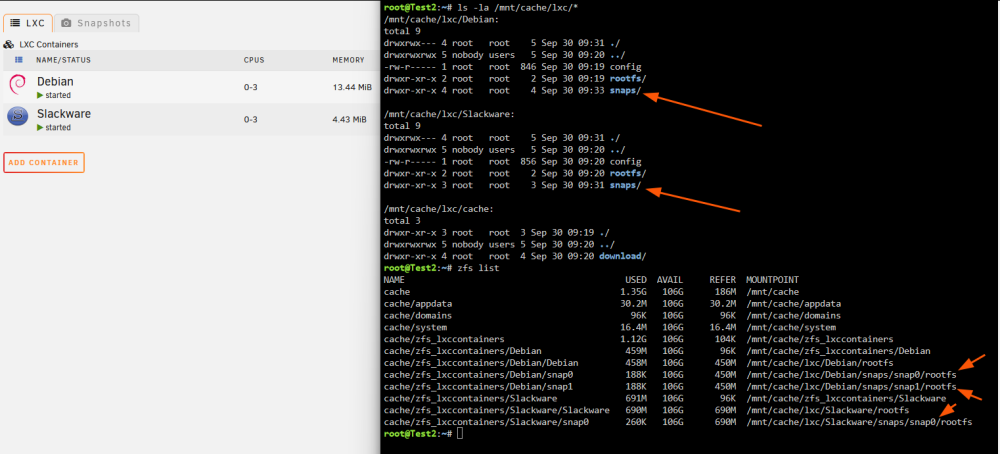

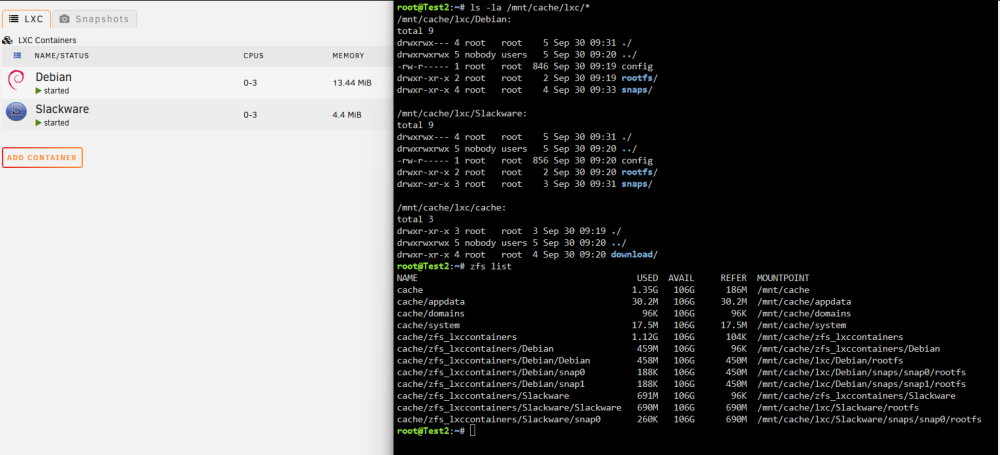

@Roalkege so now I've tested it, I completely wiped my cache drive and formatted it with ZFS and put my LXC directory in /mnt/cache/lxc/:

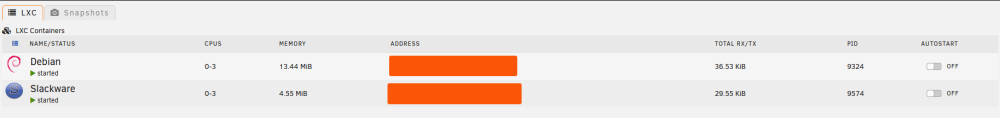

I then created two containers for testing:

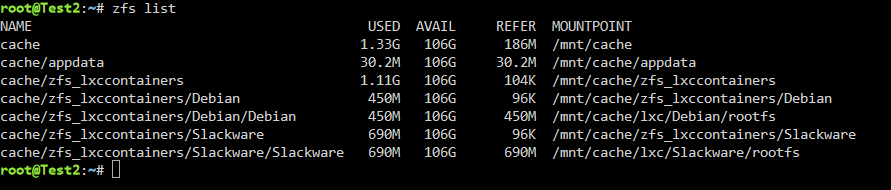

Then I queried the LXC directory (looking good so far with all the configs in there and also the rootfs):

After that I looked up all datasets:

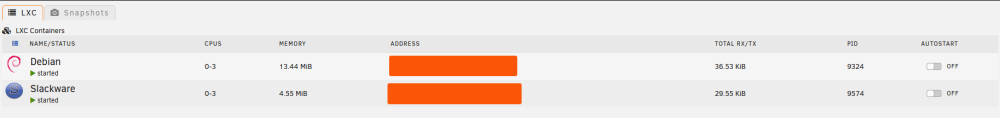

Then I did a reboot just for testing if everything is still working after a reboot:

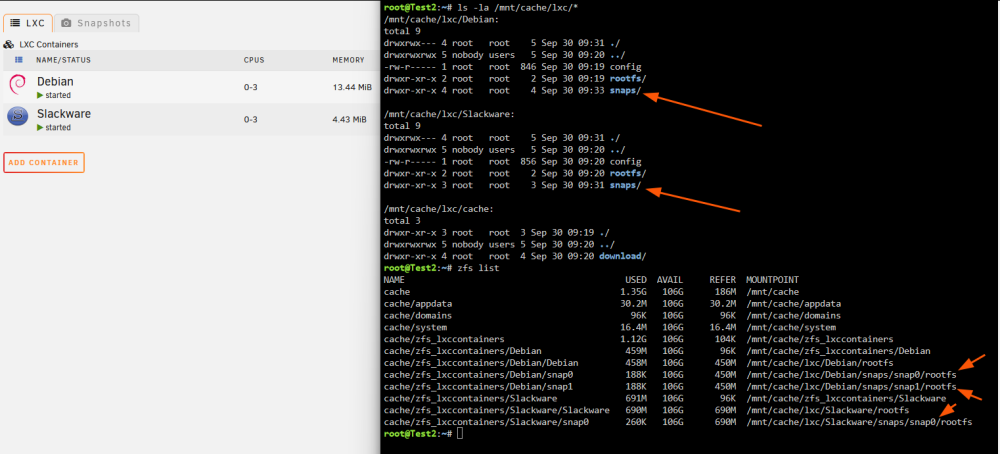

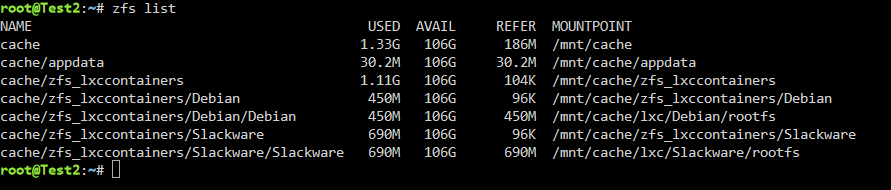

Then I've created two snapshots, one from Debian, then one From Slackware and then again one from Debian:

And everything seems still to work fine:

I really can't help with snapshotting within the ZFS Master plugin however if you are taking snapshots with the ZFS Master plugin I would recommend, at least from my understanding, that you leave LXC in Directory mode because you are taking a snapshot from the directory/dataset.

The ZFS backing storage type is meant to be used with the built in snapshot feature from LXC/LXC plugin itself.

EDIT: I even tried another reboot with the snapshots in place and everything is working as expected:

Does this only work with Unraid >= 6.12?

-

-

19 hours ago, defected07 said:

I think the problem was that the Unraid Samba service and the Timemachine Samba service had the same workgroup name. I have changed the workgroup name for the container and now everything is OK.

At Hetzner you get 1 TB of cloud storage for €3.81.

You can upload your data there via WebDAV, SFTP, SSH and so on, encrypted as well, depending on the software you use. Hetzner hosts in Germany and Finland, and you can choose which, so Germany is an option. For backing up my data I use the Duplicati Docker container, which can also upload the data fully encrypted. Duplicati is also available for Windows, macOS and, separately, for Linux. That means in an emergency you can download the uploaded data again from any OS with the matching Duplicati binary. Duplicati also gives you a decent (web) GUI, so you don't have to fiddle with scripts.

However, I don't think backing up 7 TB of data to the cloud makes sense. I only back up the most important things to the cloud, such as my DMS and other documents. Other user data goes onto two external hard drives that are rotated regularly and stored in the basement in a waterproof and fireproof box.

-

Hi,

The Timemachine Docker container is spamming my Unraid syslog. How can I prevent this? Which log level can I use in the container settings (it is currently set to 1)?

Feb 25 16:05:49 Avalon nmbd[64984]: *****
Feb 25 16:05:49 Avalon nmbd[64984]:
Feb 25 16:05:49 Avalon nmbd[64984]: Samba name server AVALON is now a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:05:49 Avalon nmbd[64984]:
Feb 25 16:05:49 Avalon nmbd[64984]: *****
Feb 25 16:17:27 Avalon nmbd[64984]: [2023/02/25 16:17:27.047518, 0] ../../source3/nmbd/nmbd_incomingdgrams.c:302(process_local_master_announce)
Feb 25 16:17:27 Avalon nmbd[64984]: process_local_master_announce: Server TIMEMACHINE at IP 192.168.2.139 is announcing itself as a local master browser for workgroup WORKGROUP and we think we are master. Forcing election.
Feb 25 16:17:27 Avalon nmbd[64984]: [2023/02/25 16:17:27.047676, 0] ../../source3/nmbd/nmbd_become_lmb.c:150(unbecome_local_master_success)
Feb 25 16:17:27 Avalon nmbd[64984]: *****
Feb 25 16:17:27 Avalon nmbd[64984]:
Feb 25 16:17:27 Avalon nmbd[64984]: Samba name server AVALON has stopped being a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:17:27 Avalon nmbd[64984]:
Feb 25 16:17:27 Avalon nmbd[64984]: *****
Feb 25 16:17:47 Avalon nmbd[64984]: [2023/02/25 16:17:47.344230, 0] ../../source3/nmbd/nmbd_become_lmb.c:397(become_local_master_stage2)
Feb 25 16:17:47 Avalon nmbd[64984]: *****
Feb 25 16:17:47 Avalon nmbd[64984]:
Feb 25 16:17:47 Avalon nmbd[64984]: Samba name server AVALON is now a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:17:47 Avalon nmbd[64984]:
Feb 25 16:17:47 Avalon nmbd[64984]: *****
Feb 25 16:29:28 Avalon nmbd[64984]: [2023/02/25 16:29:28.202819, 0] ../../source3/nmbd/nmbd_incomingdgrams.c:302(process_local_master_announce)
Feb 25 16:29:28 Avalon nmbd[64984]: process_local_master_announce: Server TIMEMACHINE at IP 192.168.2.139 is announcing itself as a local master browser for workgroup WORKGROUP and we think we are master. Forcing election.
Feb 25 16:29:28 Avalon nmbd[64984]: [2023/02/25 16:29:28.202973, 0] ../../source3/nmbd/nmbd_become_lmb.c:150(unbecome_local_master_success)
Feb 25 16:29:28 Avalon nmbd[64984]: *****
Feb 25 16:29:28 Avalon nmbd[64984]:
Feb 25 16:29:28 Avalon nmbd[64984]: Samba name server AVALON has stopped being a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:29:28 Avalon nmbd[64984]:
Feb 25 16:29:28 Avalon nmbd[64984]: *****
Feb 25 16:29:48 Avalon nmbd[64984]: [2023/02/25 16:29:48.037595, 0] ../../source3/nmbd/nmbd_become_lmb.c:397(become_local_master_stage2)
Feb 25 16:29:48 Avalon nmbd[64984]: *****
Feb 25 16:29:48 Avalon nmbd[64984]:
Feb 25 16:29:48 Avalon nmbd[64984]: Samba name server AVALON is now a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:29:48 Avalon nmbd[64984]:
Feb 25 16:29:48 Avalon nmbd[64984]: *****
Feb 25 16:41:30 Avalon nmbd[64984]: [2023/02/25 16:41:30.678485, 0] ../../source3/nmbd/nmbd_incomingdgrams.c:302(process_local_master_announce)
Feb 25 16:41:30 Avalon nmbd[64984]: process_local_master_announce: Server TIMEMACHINE at IP 192.168.2.139 is announcing itself as a local master browser for workgroup WORKGROUP and we think we are master. Forcing election.
Feb 25 16:41:30 Avalon nmbd[64984]: [2023/02/25 16:41:30.678645, 0] ../../source3/nmbd/nmbd_become_lmb.c:150(unbecome_local_master_success)
Feb 25 16:41:30 Avalon nmbd[64984]: *****
Feb 25 16:41:30 Avalon nmbd[64984]:
Feb 25 16:41:30 Avalon nmbd[64984]: Samba name server AVALON has stopped being a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:41:30 Avalon nmbd[64984]:
Feb 25 16:41:30 Avalon nmbd[64984]: *****
Feb 25 16:41:48 Avalon nmbd[64984]: [2023/02/25 16:41:48.997249, 0] ../../source3/nmbd/nmbd_become_lmb.c:397(become_local_master_stage2)
Feb 25 16:41:48 Avalon nmbd[64984]: *****
Feb 25 16:41:48 Avalon nmbd[64984]:
Feb 25 16:41:48 Avalon nmbd[64984]: Samba name server AVALON is now a local master browser for workgroup WORKGROUP on subnet 192.168.2.126
Feb 25 16:41:48 Avalon nmbd[64984]:
Feb 25 16:41:48 Avalon nmbd[64984]: *****

Thank you!

-

Question about LanCache Prefill

I have deselected one game in SteamPrefill select-apps. Does LanCache Prefill automatically delete the cached data for this game? What happens when the drive where LanCache stores its cache is full?

Thank You!

-

For that you need a Bitcoin wallet, and it then has to be configured in the miner, right? Or where else do the bitcoins end up? I know absolutely nothing about this 😱

-

3 minutes ago, dlandon said:

I'll have a fix in the next release. If you're not using UD preclear, uninstall it to stop the log messages.

Wow, cool. Thank you! When will the next release come out? Can you explain what the PHP warning means? 🙂

-

Hi,

For a few days now, UD Preclear has been producing PHP warnings in the syslog. My Unraid version is 6.9.2 and the UD Preclear plugin is the latest version.

Dec 22 19:45:00 Avalon rc.diskinfo[22433]: PHP Warning: strpos(): Empty needle in /usr/local/emhttp/plugins/unassigned.devices.preclear/scripts/rc.diskinfo on line 413
Dec 22 19:45:00 Avalon rc.diskinfo[22433]: PHP Warning: strpos(): Empty needle in /usr/local/emhttp/plugins/unassigned.devices.preclear/scripts/rc.diskinfo on line 413
Dec 22 19:45:00 Avalon rc.diskinfo[22433]: PHP Warning: strpos(): Empty needle in /usr/local/emhttp/plugins/unassigned.devices.preclear/scripts/rc.diskinfo on line 413
Dec 22 19:45:15 Avalon rc.diskinfo[28229]: PHP Warning: strpos(): Empty needle in /usr/local/emhttp/plugins/unassigned.devices.preclear/scripts/rc.diskinfo on line 413
Dec 22 19:45:15 Avalon rc.diskinfo[28229]: PHP Warning: strpos(): Empty needle in /usr/local/emhttp/plugins/unassigned.devices.preclear/scripts/rc.diskinfo on line 413
Dec 22 19:45:15 Avalon rc.diskinfo[28229]: PHP Warning: strpos(): Empty needle in /usr/local/emhttp/plugins/unassigned.devices.preclear/scripts/rc.diskinfo on line 413

What do these PHP warnings mean?

Thank you!

Christian

-

Hi,

I bought an Eaton Ellipse Pro 650. I measured it switched on with no load attached, using a Shelly running Tasmota firmware. The consumption fluctuates a lot, but on average the unit draws 14 W, about 330 Wh in total over 24 h. At 30 cents/kWh that comes to about 36 euros per year in idle costs for the Eaton.
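The yearly figure can be checked with a little integer arithmetic. A minimal sketch, assuming the measured energy per day and the price per kWh are the only inputs; the function name `yearly_cost_cents` is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: yearly idle cost in cents from the daily energy use.
# $1 = energy per day in Wh, $2 = price in cents per kWh.
yearly_cost_cents() {
  local wh_per_day=$1 cents_per_kwh=$2
  # Wh/day * 365 days * cents/kWh, divided by 1000 Wh per kWh (integer division).
  echo $(( wh_per_day * 365 * cents_per_kwh / 1000 ))
}

yearly_cost_cents 330 30   # prints 3613, i.e. about 36 euros per year
```

The result is in cents so the calculation stays in plain bash integer arithmetic.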

Regards

Chris

-

Hi,

After a looooong time without errors, today I found this error (only one message) in the syslog:

Jul 10 07:56:18 Avalon kernel: unraid-api[45682]: segfault at 371fed7016 ip 000014b5cc11a73c sp 000014b5b60d2e58 error 4 in libgobject-2.0.so.0.6600.2[14b5cc0ef000+33000]

Can anyone tell me what this is and whether it is critical? I am using Unraid version 6.9.2 (2021-04-07).

Thank You!

-

8 hours ago, SimonF said:

Are you on 6.10?

No, 6.9.2

Is this the reason?

-

Hi,

I cannot find the LXC plugin in the CA app. 😞

-

-

Hi,

have you managed to monitor Unraid Docker containers with the Checkmk Raw Docker container or the checkmk agent, as described here? I have installed the script mk_docker.py under

/usr/lib/check_mk_agent/plugins

but I cannot make it executable with chmod +x, and checkmk does not show any Docker monitoring for the Unraid host.

Python 2 and Python 3 (3.9) are installed via NerdTools.

Can someone give me the nudge I need to get this working?

Thanks and regards

-

On 1/4/2022 at 10:24 PM, mgutt said:

I'd say you need to use the relative path and not the absolute path. This means if you backup from "/mnt/zpool/" to /mnt/user/Backups, you need to add "VMs/Debian11_105/" to your exclude list file.

Hi mgutt,

thank you for your answer. This works for me!

source_paths=(
"/mnt/zpool/Docker"
"/mnt/zpool/VMs"
"/mnt/zpool/nextcloud_data"
)
backup_path="/mnt/user/Backup_zpool"
...
rsync -av --exclude-from="/boot/config/scripts/exclude.list" --stats --delete --link-dest="${backup_path}/${last_backup}" "${source_path}" "${backup_path}/.${new_backup}"

exclude.list:

Debian11_105/
Ubuntu21_102/
Win10_106/
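rsync matches exclude patterns against paths relative to the transfer root, not against absolute filesystem paths, which is why the absolute entries did not match. The sketch below converts an absolute path into the relative form mgutt described. It assumes the source directory is passed to rsync without a trailing slash, as in the script above, and the function name `relative_exclude` is made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: turn an absolute path into a path relative to the
# parent of the rsync source directory.
# $1 = source directory as passed to rsync (assumed: no trailing slash)
# $2 = absolute path that should be excluded
relative_exclude() {
  local source_path=${1%/} abs_path=$2
  local parent=${source_path%/*}      # e.g. /mnt/zpool for /mnt/zpool/VMs
  echo "${abs_path#"$parent"/}"
}

relative_exclude "/mnt/zpool/VMs" "/mnt/zpool/VMs/Debian11_105/"   # prints VMs/Debian11_105/
```

The shorter entries in the list above (for example Debian11_105/) also work, because a pattern without a leading slash is not anchored to the transfer root and matches a directory of that name at any depth.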

-

-

Hi mgutt,

I have changed the rsync command in the script (V0.6) to

rsync -av --stats --exclude-from="/boot/config/scripts/exclude.list" --delete --link-dest="${backup_path}/${last_backup}" "${source_path}" "${backup_path}/.${new_backup}"

The file exclude.list contains this:

/mnt/zpool/VMs/Debian11_105/
/mnt/zpool/VMs/Ubuntu21_102/
/mnt/zpool/VMs/Win10_106/
/mnt/zpool/Docker/Dockerimage/

But the script doesn't ignore the directories listed in exclude.list.

Can you tell me why?

Thanks!

-

Hi mgutt,

thank you for the superb script. I have a feature request: Is it possible to have an option for excluding files or paths?

Best regards

Chris

-

13 hours ago, SimonF said:

Have a look at my usb manager plugin as can auto connect a device when detected by the system. You can specify by port or device.

Wow, this is great and exactly what I need. Now I can use a Debian VM as an (AirPrint) print server. If the printer is off, the print job waits in the CUPS scheduler until I turn the printer on and it automatically connects to the VM 🙂 Very nice!

-

Hi,

I pass a USB printer through to a Debian VM with this plugin. I have checked the box in the VM settings to pass through the printer, and the printer is available in the VM:

root@debian11-103:~# lsusb
Bus 001 Device 004: ID 03f0:132a HP, Inc HP LaserJet 200 color M251n
Bus 001 Device 002: ID 0627:0001 Adomax Technology Co., Ltd QEMU USB Tablet
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 004 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub
Bus 003 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub
Bus 002 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub
root@debian11-103:~#

If I turn off the printer, it is no longer available in the VM. If I then turn the printer on again, it does not reconnect to the VM automatically. I have to add the printer to the VM manually in the VM tab.

How can I pass it through to the VM automatically after turning on the printer?

Thx

Chris

-

Hi,

thank you for your answer!

I have removed the LSI controller and now use the SSDs with brand-new SATA cables on the mainboard's SATA II controller. After removing the LSI controller I replaced an old SSD with a brand-new 870 EVO, and the errors occur again with it. With the new SSD the errors come up after a reboot, but while running overnight with Dockers and VMs there were no errors.

Today, after turning off NCQ, I ran an experiment to show the status:

1. Before reboot

root@Avalon:/mnt/zpool/Docker/Telegraf# zpool status
pool: zpool
state: ONLINE
scan: scrub repaired 0B in 00:06:59 with 0 errors on Sun Nov 7 17:05:41 2021
config:
NAME STATE READ WRITE CKSUM
zpool ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
ata-Samsung_SSD_850_EVO_1TB_S2RFNX0HA28280F ONLINE 0 0 0
ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M ONLINE 0 0 0
errors: No known data errors

/dev/sdg (EVO 870 new SSD)

| # | Attribute Name | Flag | Value | Worst | Threshold | Type | Updated | Failed | Raw Value |
|---|---|---|---|---|---|---|---|---|---|
| 5 | Reallocated sector count | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 9 | Power on hours | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 52 (2d, 4h) |
| 12 | Power cycle count | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 10 |
| 177 | Wear leveling count | 0x0013 | 099 | 099 | 000 | Pre-fail | Always | Never | 2 |
| 179 | Used rsvd block count tot | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 181 | Program fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 182 | Erase fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 183 | Runtime bad block | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 187 | Reported uncorrect | 0x0032 | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 190 | Airflow temperature cel | 0x0032 | 074 | 062 | 000 | Old age | Always | Never | 26 |
| 195 | Hardware ECC recovered | 0x001a | 200 | 200 | 000 | Old age | Always | Never | 0 |
| 199 | UDMA CRC error count | 0x003e | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 235 | Unknown attribute | 0x0012 | 099 | 099 | 000 | Old age | Always | Never | 5 |
| 241 | Total lbas written | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 347926153 |

2. After reboot, before starting the array

After reboot, before starting Array:

root@Avalon:~# zpool status
pool: zpool
state: ONLINE
scan: scrub repaired 0B in 00:06:59 with 0 errors on Sun Nov 7 17:05:41 2021
config:
NAME STATE READ WRITE CKSUM
zpool ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
ata-Samsung_SSD_850_EVO_1TB_S2RFNX0HA28280F ONLINE 0 0 0
ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M ONLINE 0 0 0
errors: No known data errors
root@Avalon:~#

/dev/sdg (EVO 870 new SSD)

| # | Attribute Name | Flag | Value | Worst | Threshold | Type | Updated | Failed | Raw Value |
|---|---|---|---|---|---|---|---|---|---|
| 5 | Reallocated sector count | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 9 | Power on hours | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 52 (2d, 4h) |
| 12 | Power cycle count | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 10 |
| 177 | Wear leveling count | 0x0013 | 099 | 099 | 000 | Pre-fail | Always | Never | 2 |
| 179 | Used rsvd block count tot | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 181 | Program fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 182 | Erase fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 183 | Runtime bad block | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 187 | Reported uncorrect | 0x0032 | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 190 | Airflow temperature cel | 0x0032 | 078 | 062 | 000 | Old age | Always | Never | 22 |
| 195 | Hardware ECC recovered | 0x001a | 200 | 200 | 000 | Old age | Always | Never | 0 |
| 199 | UDMA CRC error count | 0x003e | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 235 | Unknown attribute | 0x0012 | 099 | 099 | 000 | Old age | Always | Never | 5 |
| 241 | Total lbas written | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 347946967 |

3. After reboot and after a few minutes after the Array has started

root@Avalon:/mnt/zpool# zpool status
pool: zpool
state: ONLINE
status: One or more devices has experienced an unrecoverable error. An attempt was made to correct the error. Applications are unaffected.
action: Determine if the device needs to be replaced, and clear the errors using 'zpool clear' or replace the device with 'zpool replace'.
see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
scan: scrub repaired 0B in 00:06:59 with 0 errors on Sun Nov 7 17:05:41 2021
config:
NAME STATE READ WRITE CKSUM
zpool ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
ata-Samsung_SSD_850_EVO_1TB_S2RFNX0HA28280F ONLINE 0 0 0
ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M ONLINE 0 19 0
errors: No known data errors
root@Avalon:/mnt/zpool#

/dev/sdg (EVO 870 new SSD)

| # | Attribute Name | Flag | Value | Worst | Threshold | Type | Updated | Failed | Raw Value |
|---|---|---|---|---|---|---|---|---|---|
| 5 | Reallocated sector count | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 9 | Power on hours | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 52 (2d, 4h) |
| 12 | Power cycle count | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 10 |
| 177 | Wear leveling count | 0x0013 | 099 | 099 | 000 | Pre-fail | Always | Never | 2 |
| 179 | Used rsvd block count tot | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 181 | Program fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 182 | Erase fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 183 | Runtime bad block | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 187 | Reported uncorrect | 0x0032 | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 190 | Airflow temperature cel | 0x0032 | 078 | 062 | 000 | Old age | Always | Never | 22 |
| 195 | Hardware ECC recovered | 0x001a | 200 | 200 | 000 | Old age | Always | Never | 0 |
| 199 | UDMA CRC error count | 0x003e | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 235 | Unknown attribute | 0x0012 | 099 | 099 | 000 | Old age | Always | Never | 5 |
| 241 | Total lbas written | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 348067881 |

root@Avalon:/mnt/zpool# cat /var/log/syslog | grep 16:16:37
Nov 8 16:16:37 Avalon kernel: ata5.00: exception Emask 0x0 SAct 0x1e018 SErr 0x0 action 0x6 frozen
Nov 8 16:16:37 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 8 16:16:37 Avalon kernel: ata5.00: cmd 61/04:18:06:6a:00/00:00:0c:00:00/40 tag 3 ncq dma 2048 out
Nov 8 16:16:37 Avalon kernel: res 40/00:01:00:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 8 16:16:37 Avalon kernel: ata5.00: status: { DRDY }
Nov 8 16:16:37 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 8 16:16:37 Avalon kernel: ata5.00: cmd 61/36:20:57:6a:00/00:00:0c:00:00/40 tag 4 ncq dma 27648 out
Nov 8 16:16:37 Avalon kernel: res 40/00:01:01:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 8 16:16:37 Avalon kernel: ata5.00: status: { DRDY }
Nov 8 16:16:37 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 8 16:16:37 Avalon kernel: ata5.00: cmd 61/10:68:10:26:70/00:00:74:00:00/40 tag 13 ncq dma 8192 out
Nov 8 16:16:37 Avalon kernel: res 40/00:01:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 8 16:16:37 Avalon kernel: ata5.00: status: { DRDY }
Nov 8 16:16:37 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 8 16:16:37 Avalon kernel: ata5.00: cmd 61/10:70:10:24:70/00:00:74:00:00/40 tag 14 ncq dma 8192 out
Nov 8 16:16:37 Avalon kernel: res 40/00:01:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 8 16:16:37 Avalon kernel: ata5.00: status: { DRDY }
Nov 8 16:16:37 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 8 16:16:37 Avalon kernel: ata5.00: cmd 61/10:78:10:0a:00/00:00:00:00:00/40 tag 15 ncq dma 8192 out
Nov 8 16:16:37 Avalon kernel: res 40/00:ff:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 8 16:16:37 Avalon kernel: ata5.00: status: { DRDY }
Nov 8 16:16:37 Avalon kernel: ata5.00: failed command: SEND FPDMA QUEUED
Nov 8 16:16:37 Avalon kernel: ata5.00: cmd 64/01:80:00:00:00/00:00:00:00:00/a0 tag 16 ncq dma 512 out
Nov 8 16:16:37 Avalon kernel: res 40/00:01:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 8 16:16:37 Avalon kernel: ata5.00: status: { DRDY }
Nov 8 16:16:37 Avalon kernel: ata5: hard resetting link
Nov 8 16:16:37 Avalon kernel: ata5: SATA link up 3.0 Gbps (SStatus 123 SControl 300)
Nov 8 16:16:37 Avalon kernel: ata5.00: supports DRM functions and may not be fully accessible
Nov 8 16:16:37 Avalon kernel: ata5.00: supports DRM functions and may not be fully accessible
Nov 8 16:16:37 Avalon kernel: ata5.00: configured for UDMA/133
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=30s
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 Sense Key : 0x5 [current]
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 ASC=0x21 ASCQ=0x4
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 CDB: opcode=0x2a 2a 00 0c 00 6a 06 00 00 04 00
Nov 8 16:16:37 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 201353734 op 0x1:(WRITE) flags 0x700 phys_seg 2 prio class 0
Nov 8 16:16:37 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=103092063232 size=2048 flags=40080c80
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=30s
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 Sense Key : 0x5 [current]
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 ASC=0x21 ASCQ=0x4
Nov 8 16:16:37 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 CDB: opcode=0x2a 2a 00 0c 00 6a 57 00 00 36 00
Nov 8 16:16:37 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 201353815 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 8 16:16:37 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=103092104704 size=27648 flags=180880
Nov 8 16:16:37 Avalon kernel: ata5: EH complete
Nov 8 16:16:37 Avalon kernel: ata5.00: Enabling discard_zeroes_data
root@Avalon:/mnt/zpool#

4. After starting the docker service and the vm service (dockers and vms are on the zpool) no new errors.

5. After zpool scrub no new errors in syslog and zpool status

root@Avalon:/mnt/zpool# zpool status -v zpool
pool: zpool
state: ONLINE
status: One or more devices has experienced an unrecoverable error. An attempt was made to correct the error. Applications are unaffected.
action: Determine if the device needs to be replaced, and clear the errors using 'zpool clear' or replace the device with 'zpool replace'.
see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
scan: scrub repaired 0B in 00:05:29 with 0 errors on Mon Nov 8 16:34:53 2021
config:
NAME STATE READ WRITE CKSUM
zpool ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
ata-Samsung_SSD_850_EVO_1TB_S2RFNX0HA28280F ONLINE 0 0 0
ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M ONLINE 0 19 0
errors: No known data errors
root@Avalon:/mnt/zpool#

/dev/sdg (EVO 870 new SSD)

| # | Attribute Name | Flag | Value | Worst | Threshold | Type | Updated | Failed | Raw Value |
|---|---|---|---|---|---|---|---|---|---|
| 5 | Reallocated sector count | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 9 | Power on hours | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 52 (2d, 4h) |
| 12 | Power cycle count | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 10 |
| 177 | Wear leveling count | 0x0013 | 099 | 099 | 000 | Pre-fail | Always | Never | 2 |
| 179 | Used rsvd block count tot | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 181 | Program fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 182 | Erase fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 183 | Runtime bad block | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 187 | Reported uncorrect | 0x0032 | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 190 | Airflow temperature cel | 0x0032 | 073 | 062 | 000 | Old age | Always | Never | 27 |
| 195 | Hardware ECC recovered | 0x001a | 200 | 200 | 000 | Old age | Always | Never | 0 |
| 199 | UDMA CRC error count | 0x003e | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 235 | Unknown attribute | 0x0012 | 099 | 099 | 000 | Old age | Always | Never | 5 |
| 241 | Total lbas written | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 349169583 |

6. After zpool clear

root@Avalon:/mnt/zpool# zpool status -v zpool
pool: zpool
state: ONLINE
scan: scrub repaired 0B in 00:05:14 with 0 errors on Mon Nov 8 16:42:34 2021
config:
NAME STATE READ WRITE CKSUM
zpool ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
ata-Samsung_SSD_850_EVO_1TB_S2RFNX0HA28280F ONLINE 0 0 0
ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M ONLINE 0 0 0
errors: No known data errors
root@Avalon:/mnt/zpool#

What does "ata5: hard resetting link" in the syslog mean?

Is it possible that the partition "vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5" is damaged? Can I take the 870 EVO offline, delete the partitions on this SSD, and replace/add it back to the mirror?
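To compare the experiments above, it can help to count the failed ATA commands by type instead of reading the raw log. A minimal sketch, assuming one syslog entry per line in the kernel's "failed command: ..." format shown above; the function name `summarize_ata_failures` is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: count failed ATA commands by command type.
# Reads syslog text on stdin and prints "<count> <command>" per type.
summarize_ata_failures() {
  grep -o 'failed command: .*' \
    | sed 's/^failed command: //' \
    | sort \
    | uniq -c \
    | awk '{ count = $1; $1 = ""; sub(/^ /, ""); print count, $0 }'
}

# Usage (the syslog path is an assumption; adjust it to your system):
#   grep 'ata5' /var/log/syslog | summarize_ata_failures
```

Running it once per reboot gives a compact figure to set next to the WRITE column of zpool status.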

-

On 11/2/2021 at 12:29 PM, axipher said:

I'm running on the 'telegraf:alpine' default tag and had to make the following changes for it to work, this resulted in running 'Telegraf 1.20.3' per the docker logs.

1) Remove the following 'Post Arguments' under 'Advanced View'. This gets rid of the 'apk error' but also gets rid of 'smartmontools' which means you will lose out on some disk stats for certain dashboards in Grafana.

/bin/sh -c 'apk update && apk upgrade && apk add smartmontools && telegraf'

2) Open up '/mnt/user/appdata/telegraf/telegraf.conf' and comment out the following two lines. This gets rid of the 'missing smartctl' errors and also gets rid of the constant error for not being able to access 'docker.sock'. There might be a fix for the docker problem if someone can share it as I find it really useful to monitor the memory usage of each docker from Grafana but currently had to give that up on current version of Telegraf

#[[inputs.smart]]
#[[inputs.docker]]

As others stated, reverting to an older version works as well if the new Telegraf provides nothing new for your use case and would be the recommended route, but I just wanted to document the couple things I had to change to get the latest Telegraf docker running again alongside InfluxDB 1.8 (tag: influxdb:1.8) and Grafana v8 (tag: grafana/grafana:8.0.2).

At this point I will probably spend next weekend locking most of my dockers in to current versions and setup a trial Unraid box on an old Dell Optiplex for testing latest dockers before upgrading on my main system.

Hello there,

I have a solution for the problem with access to /var/run/docker.sock. For me it works with

telegraf:1.18.3 / telegraf:alpine.

You just add the following to the Extra Parameters value:

--user telegraf:$(stat -c '%g' /var/run/docker.sock)

Then Telegraf has access to docker.sock, and I get data from all Dockers in Grafana (CPU usage, RAM usage, network usage). It's pretty nice 🙂
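The Extra Parameters value works because `stat -c '%g'` prints the numeric group ID that owns the socket, so the container process runs as user telegraf with that group. A small sketch of what the expression expands to, assuming GNU stat (as on Linux); the function name `docker_user_flag` is made up for illustration:

```shell
#!/bin/bash
# Hypothetical helper: build the --user flag from the group that owns a socket.
# $1 = user name inside the container, $2 = path to the socket (or any file).
# Relies on GNU stat: -c '%g' prints the numeric group ID of the file.
docker_user_flag() {
  local user=$1 sock=$2
  echo "--user ${user}:$(stat -c '%g' "$sock")"
}

# Usage on the Unraid host:
#   docker_user_flag telegraf /var/run/docker.sock
```

Printing the flag first is a quick way to check which group ID the container will actually get.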

For the smartctl problem I have no solution. If I add

/bin/sh -c 'apk update && apk upgrade && apk add smartmontools && telegraf'

to Post Arguments, the Telegraf container doesn't start, and in the Docker log I find the error

ERROR: Unable to lock database: Permission denied
ERROR: Failed to open apk database: Permission denied

Does anyone have a solution for this?

🙂

-

-

-

Hello,

I have a problem with my zpool.

My pool (mirror) consists of one Samsung 850 EVO 1TB and one 870 EVO (1TB). These two SSDs were connected to an LSI controller in IT mode (version 19).

A few days ago I had some errors for one SSD (the Samsung 870 EVO) in the syslog, as well as some UDMA CRC errors and Hardware ECC recovered counts. I then disconnected both SSDs from the HBA and connected the Samsung 850 EVO 1TB and a brand-new 870 EVO (1TB) with new SATA cables to the onboard SATA connectors of my HP ProLiant ML310e Gen8 v1 (SATA II, not III). I replaced the old drive with the new 870 EVO (1TB) in the zpool and resilvered it, and everything was fine.

Yesterday, and again today after a reboot and after starting the Docker service and some Dockers, I again got errors for the new 870 EVO (1TB) in the syslog, and "zpool status" showed write errors. I then ran a zpool scrub, and during this scrub some cksum errors came up in zpool status. Then I ran zpool clear and all errors were gone. After that I ran a new scrub and got no new errors (read/write or cksum). The SMART values for the new 870 EVO 1TB look okay, with no CRC or other errors.

The server has now been running for a few hours and no new errors from the SSD have appeared in the syslog. I don't dare restart the server, because new errors could occur.

This is an excerpt of the syslog from a reboot this afternoon; /dev/sdg is the brand-new replacement Samsung 870 EVO 1TB:

Nov 7 16:39:45 Avalon kernel: ata5.00: exception Emask 0x0 SAct 0xfd901f SErr 0x0 action 0x6 frozen
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: SEND FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 64/01:00:00:00:00/00:00:00:00:00/a0 tag 0 ncq dma 512 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:e0:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: SEND FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 64/01:08:00:00:00/00:00:00:00:00/a0 tag 1 ncq dma 512 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:e0:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/08:10:4b:ab:0c/00:00:0e:00:00/40 tag 2 ncq dma 4096 out
Nov 7 16:39:45 Avalon kernel: res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/44:18:5b:83:03/00:00:0e:00:00/40 tag 3 ncq dma 34816 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:e0:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/44:20:73:bb:03/00:00:0e:00:00/40 tag 4 ncq dma 34816 out
Nov 7 16:39:45 Avalon kernel: res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/23:60:06:40:00/00:00:1c:00:00/40 tag 12 ncq dma 17920 out
Nov 7 16:39:45 Avalon kernel: res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/42:78:31:bb:03/00:00:0e:00:00/40 tag 15 ncq dma 33792 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:e0:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/4d:80:b7:bb:03/00:00:0e:00:00/40 tag 16 ncq dma 39424 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:e0:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/11:90:8f:b2:03/00:00:0e:00:00/40 tag 18 ncq dma 8704 out
Nov 7 16:39:45 Avalon kernel: res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/08:98:8d:f5:02/00:00:1c:00:00/40 tag 19 ncq dma 4096 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/70:a0:9a:9f:03/00:00:1c:00:00/40 tag 20 ncq dma 57344 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:00:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/18:a8:0a:a0:03/00:00:1c:00:00/40 tag 21 ncq dma 12288 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:01:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/42:b0:de:90:03/00:00:0e:00:00/40 tag 22 ncq dma 33792 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:09:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5.00: failed command: WRITE FPDMA QUEUED
Nov 7 16:39:45 Avalon kernel: ata5.00: cmd 61/30:b8:ab:6a:03/00:00:0e:00:00/40 tag 23 ncq dma 24576 out
Nov 7 16:39:45 Avalon kernel: res 40/00:01:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Nov 7 16:39:45 Avalon kernel: ata5.00: status: { DRDY }
Nov 7 16:39:45 Avalon kernel: ata5: hard resetting link
Nov 7 16:39:45 Avalon kernel: ata5: SATA link up 3.0 Gbps (SStatus 123 SControl 300)
Nov 7 16:39:45 Avalon kernel: ata5.00: supports DRM functions and may not be fully accessible
Nov 7 16:39:45 Avalon kernel: ata5.00: supports DRM functions and may not be fully accessible
Nov 7 16:39:45 Avalon kernel: ata5.00: configured for UDMA/133
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#2 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=42s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#2 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#2 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#2 CDB: opcode=0x2a 2a 00 0e 0c ab 4b 00 00 08 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 235711307 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120683140608 size=4096 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=30s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#3 CDB: opcode=0x2a 2a 00 0e 03 83 5b 00 00 44 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 235111259 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120375916032 size=34816 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=30s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#4 CDB: opcode=0x2a 2a 00 0e 03 bb 73 00 00 44 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 235125619 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120383268352 size=34816 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#12 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=42s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#12 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#12 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#12 CDB: opcode=0x2a 2a 00 1c 00 40 06 00 00 23 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 469778438 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=240525511680 size=17920 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#15 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=30s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#15 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#15 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#15 CDB: opcode=0x2a 2a 00 0e 03 bb 31 00 00 42 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 235125553 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120383234560 size=33792 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#16 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=30s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#16 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#16 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#16 CDB: opcode=0x2a 2a 00 0e 03 bb b7 00 00 4d 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 235125687 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120383303168 size=39424 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#18 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=42s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#18 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#18 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#18 CDB: opcode=0x2a 2a 00 0e 03 b2 8f 00 00 11 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 235123343 op 0x1:(WRITE) flags 0x700 phys_seg 2 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120382103040 size=8704 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#19 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=35s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#19 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#19 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#19 CDB: opcode=0x2a 2a 00 1c 02 f5 8d 00 00 08 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 469955981 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=240616413696 size=4096 flags=180880
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#20 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=35s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#20 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#20 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#20 CDB: opcode=0x2a 2a 00 1c 03 9f 9a 00 00 70 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 469999514 op 0x1:(WRITE) flags 0x700 phys_seg 14 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=240638702592 size=57344 flags=40080c80
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#21 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=35s
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#21 Sense Key : 0x5 [current]
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#21 ASC=0x21 ASCQ=0x4
Nov 7 16:39:45 Avalon kernel: sd 6:0:0:0: [sdg] tag#21 CDB: opcode=0x2a 2a 00 1c 03 a0 0a 00 00 18 00
Nov 7 16:39:45 Avalon kernel: blk_update_request: I/O error, dev sdg, sector 469999626 op 0x1:(WRITE) flags 0x700 phys_seg 1 prio class 0
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=240638759936 size=12288 flags=180880
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120377687040 size=33792 flags=180880
Nov 7 16:39:45 Avalon kernel: zio pool=zpool vdev=/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M-part1 error=5 type=2 offset=120372680192 size=24576 flags=180880
Nov 7 16:39:45 Avalon kernel: ata5: EH complete
Nov 7 16:39:45 Avalon kernel: ata5.00: Enabling discard_zeroes_data

Current SMART values of the brand-new replacement Samsung 850 EVO 1TB after the reboot and the error in the syslog:

| # | Attribute Name | Flag | Value | Worst | Threshold | Type | Updated | Failed | Raw Value |
|---|---|---|---|---|---|---|---|---|---|
| 5 | Reallocated sector count | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 9 | Power on hours | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 32 (1d, 8h) |
| 12 | Power cycle count | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 10 |
| 177 | Wear leveling count | 0x0013 | 099 | 099 | 000 | Pre-fail | Always | Never | 1 |
| 179 | Used rsvd block count tot | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 181 | Program fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 182 | Erase fail count total | 0x0032 | 100 | 100 | 010 | Old age | Always | Never | 0 |
| 183 | Runtime bad block | 0x0013 | 100 | 100 | 010 | Pre-fail | Always | Never | 0 |
| 187 | Reported uncorrect | 0x0032 | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 190 | Airflow temperature cel | 0x0032 | 075 | 062 | 000 | Old age | Always | Never | 25 |
| 195 | Hardware ECC recovered | 0x001a | 200 | 200 | 000 | Old age | Always | Never | 0 |
| 199 | UDMA CRC error count | 0x003e | 100 | 100 | 000 | Old age | Always | Never | 0 |
| 235 | Unknown attribute | 0x0012 | 099 | 099 | 000 | Old age | Always | Never | 5 |
| 241 | Total lbas written | 0x0032 | 099 | 099 | 000 | Old age | Always | Never | 291524814 |

Does anyone have an idea what the problem is here? Is the brand-new drive faulty, or do I have a problem with the zpool?
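As a sanity check on how the syslog entries relate to each other, here is a minimal Python sketch that decodes the WRITE(10) CDB logged for tag#2 and compares it with the `blk_update_request` sector and the `zio` offset from the same failure. All three values are copied from the log above. The 512-byte sector size and the reading of the leftover gap as the start offset of `-part1` are assumptions, not something the log states.

```python
# Values copied from the tag#2 failure in the syslog above.
cdb_hex = "2a 00 0e 0c ab 4b 00 00 08 00"
logged_sector = 235711307          # from blk_update_request
logged_zio_offset = 120683140608   # from the zio line (bytes)
logged_zio_size = 4096             # from the zio line (bytes)

SECTOR_BYTES = 512  # assumption: 512-byte logical sectors


def decode_write10(cdb_text):
    """Decode a SCSI WRITE(10) CDB into (lba, sector_count)."""
    cdb = bytes.fromhex(cdb_text)
    if len(cdb) != 10 or cdb[0] != 0x2A:
        raise ValueError("not a WRITE(10) CDB")
    lba = int.from_bytes(cdb[2:6], "big")    # bytes 2-5: logical block address
    count = int.from_bytes(cdb[7:9], "big")  # bytes 7-8: transfer length
    return lba, count


lba, count = decode_write10(cdb_hex)

# Gap between the whole-disk byte address and the zio offset.
# Assumption: this is where partition 1 starts on the disk.
partition_start = lba * SECTOR_BYTES - logged_zio_offset

print("LBA matches logged sector:", lba == logged_sector)
print("write size matches zio size:", count * SECTOR_BYTES == logged_zio_size)
print("implied partition start (bytes):", partition_start)
```

For this entry the decoded LBA equals the logged sector, the transfer length equals the zio size, and the gap comes out at 1048576 bytes (1 MiB). So the ATA, SCSI, block-layer and ZFS messages all describe the same write to the 870 EVO that timed out on ata5.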

root@Avalon:~# zpool status
  pool: zpool
 state: ONLINE
  scan: scrub repaired 0B in 00:06:59 with 0 errors on Sun Nov 7 17:05:41 2021
config:

        NAME                                             STATE     READ WRITE CKSUM
        zpool                                            ONLINE       0     0     0
          mirror-0                                       ONLINE       0     0     0
            ata-Samsung_SSD_850_EVO_1TB_S2RFNX0HA28280F  ONLINE       0     0     0
            ata-Samsung_SSD_870_EVO_1TB_S626NF0R226283M  ONLINE       0     0     0

errors: No known data errors

Thanks

Chris

-

-

Mover error: move: error: move, 392

in General Support

Posted

Hello,

For a few days now, the mover has been logging errors to the syslog:

Can anyone please help me solve this problem?

Thank you!

Chris