Posts posted by wildfire305

-

This is what I mean when the command to sleep the disks isn't showing up. Usually if I have something keeping the disks awake, I see a lot of "spinning down" followed by "read SMART" when the disks are awoken by the process reading files. I'm not seeing the spinning down and the reads/writes are not increasing in count.
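A rough way to check this from a saved log is to count both messages per disk. This is only a sketch: it assumes the log lines contain the literal strings "spinning down" and "read SMART" followed by a device path such as /dev/sdb, and the function name `spin_summary` is made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: count spin-down and SMART-read messages per device.
# Assumption: relevant log lines contain "spinning down /dev/sdX" or
# "read SMART /dev/sdX"; adjust the pattern if your log format differs.
spin_summary() {
    local log="$1"
    # Extract just the message and device, then count identical pairs.
    grep -oE '(spinning down|read SMART) /dev/[a-z0-9]+' "$log" | sort | uniq -c
}
```

Calling `spin_summary` on a copy of the syslog prints one count per message and device. Empty output would match what is described above: no spin-down commands are being logged at all.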

-

The one server I was considering downgrading (cvg05) has now started sleeping on its own. That server only has an rsnapshot container and a speed test container running. The other containers are not used unless the primary server is down, and they are started manually. It has no external shares enabled, and it pulls backups over SSH from other servers on the network once a day. Nothing is able to write to it by design because it is a backup server, so the disks sleep after the backups complete and stay asleep until the next daily backup. It uses a small SSD cache for appdata, domains, and system. It has one VM that boots Debian and displays a webpage on a monitor.

The other two servers' disks remain awake, and according to the disk logs the drives are not being commanded to sleep. When something is keeping the disks awake, I can usually see it in the disk logs as repeated spin-ups and spin-downs, but that isn't showing up. I had previously moved all highly active file activity to an always-on ZFS pool, and the array now holds only WORM-type data. My array disks on both servers are only supposed to wake up during media streaming activity. This has been a working design for me for the six months prior to the 6.12.9 upgrade.

-

cvg02 and cvg05 have been power cycled since the upgrade; tonyserver has not.

cvg02-diagnostics-20240329-2344.zip cvg05-diagnostics-20240329-2343.zip tony-diagnostics-20240329-2344.zip

-

After updating to 6.12.9 on all three of my servers, their drives will not spin down automatically. These servers previously spun down as expected after the time-out. Two of them are used once a day and only need to spin up at that time. No configuration changes were made besides updating all plugins before upgrading. Considering downgrading one as part of the diagnosis. Is there a bug in 6.12.9 for disk sleep?

Getting diagnostics soon...

-

I'm going to mark this as solved. I never would have suspected a plugin for wake on lan to have that much influence on the system stability. I believe it should have a caution label on the plugin. It didn't immediately cause problems, but removing it has resolved the issues I was having. I could imagine folks that like to fire parts cannons at problems being extremely upset at replacing hardware over a silly plugin. I'm not using "server grade" hardware, but I think it's close enough to it when you look at the base chips. And it is standardized enough that everything so far has "just worked".

-

3 hours ago, JorgeB said:

Not necessarily, it's been known to cause unexpected shutdowns/crashes.

Why then would it not be pulled from the app store or at least have an incompatibility warning? It wasted a lot of my time if it ends up being the cause - so far stable as a rock today and I've been running it at about 400 watts worth of processes.

-

The last one I installed...about a week ago... was the WOL plugin - which appeared to be partially broken. I removed it and performed the same tests and the server did not crash. I have a hard time trusting that as the "fix" though. I would assume that plugin does nothing until you push for it to wake a computer.

-

I was able to reliably get the server to crash when writing to the cache drive SSD: in 4 out of 4 tries, dd'ing 100-200 GB to the cache drive, it locked up and rebooted every time. This was done while running parity checks on the main array and the ZFS array. The cache drive (and three of the hard drives) isn't connected to the HBA. I rebooted and checked the RAM with 4 passes of MemTest86 v10: the 64 GB of ECC DDR4 passed. I then rebooted into Unraid safe mode (selected from the thumb drive) and have written 500 GB to the cache SSD with no hiccups. I was simultaneously scrubbing the cache drive to hammer that disk as hard as I could. No lockups. SMART attributes are clean on that SSD, BTRFS device stats are all 0, and the scrub is clean.

So then I started recreating the same load in safe mode - started a scrub of the unraid array, imported my ZFS pool and started a scrub on it and continued to hammer everything. No lockups whatsoever. All dockers that normally run - running fine (didn't test the others - irrelevant).

So, are plugins the primary difference between safe mode and regular mode? If so, I may have a rogue plugin.
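One way to narrow down a suspect plugin is to list the plugin files newest first and start with the most recent. This is a sketch under an assumption: that installed plugins are stored as `*.plg` files under `/boot/config/plugins` on the flash drive, and that a file's modification time roughly reflects when it was last installed or updated. The function name `recent_plugins` is illustrative.

```shell
#!/bin/bash
# Hypothetical helper: list plugin definition files, most recently modified first.
# Assumption: plugins live as *.plg files in /boot/config/plugins (pass another
# directory as the first argument if yours differs).
recent_plugins() {
    local dir="${1:-/boot/config/plugins}"
    # ls -t sorts by modification time, newest first.
    ls -t "$dir"/*.plg 2>/dev/null
}
```

Removing the plugins at the top of that list one at a time, and repeating the same load test after each removal, would show which one makes the difference.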

-

Maybe that was my fault. I changed the command to "dd if=/dev/random of=test.img bs=1M count=1000000 status=progress" and it has written almost a terabyte so far, while also performing a full ZFS scrub. I think my previous command ran me out of RAM.
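For repeatable runs, the smaller-block write can be wrapped in a function. This is a minimal sketch assuming GNU dd (for `iflag=fullblock`); the name `write_test` and its arguments are made up here. dd allocates a buffer of `bs` bytes, so `bs=1M` keeps that buffer at 1 MiB where `bs=1G` needs roughly 1 GiB.

```shell
#!/bin/bash
# Hypothetical helper: write COUNT MiB of random data to FILE in 1 MiB blocks.
# Assumes GNU dd (iflag=fullblock) and a readable /dev/urandom.
write_test() {
    local file="$1" count_mib="$2"
    # iflag=fullblock makes dd retry short reads so each block is a full 1 MiB.
    dd if=/dev/urandom of="$file" bs=1M count="$count_mib" iflag=fullblock 2>/dev/null \
        && sync   # flush cached writes to disk before returning
}
```

For example, `write_test /mnt/cache/test.img 100000` would write about 100 GB. The path is only an example; point it at the pool under test and delete the file afterwards.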

-

Well....that DD command crashed it...looks like maybe I've got a clue.

-

Started this command on the ZFS array to try to rule out write issues with the HBA: "dd if=/dev/random of=test.img bs=1G count=500 status=progress", while running a ZFS scrub. This oughta tax it.

-

The server seems to be crashing nearly every day after running mostly solid for a couple of years. Where can I start to look? The syslog is mirrored to flash and available if desired. The only event leading up to the crash is the flash backup plugin running every 30 minutes, which seems excessive to me. Sometimes the crash reboots the server; sometimes I have to reboot it manually. Connecting a monitor displays a black screen.

The only recent hardware change was a slightly different HBA card (external connectors vs internal). It ran for a couple of weeks after that before the crashing started, so I doubt that is the cause. How can I start to look for clues? I would like to rule out the HBA quickly because it is still returnable. I let the parity checks run after the crashes (4 data + 1 parity + 1 cache on the primary array, plus a 6-disk ZFS array), so I think this rules out read issues. Write issues might be ruled out by the nightly backups: the main array and cache disk back up to the ZFS array.

The only real new addition is a second server that pulls a backup from this server over an NFS share on the ZFS filesystem. I switched from a btrfs pool to the ZFS pool a couple of months ago. The new backup puts a heavy read load on that ZFS share, but it still completed last night with no errors. Then, 30 minutes later, the primary server locked up and rebooted at 4:30am, and again at 6:30am. The only scheduled task during that time is a remote server outside my local network backing up to this server through an rsync docker that has a static IP. I recently found a forum post about switching from macvlan to ipvlan when running dockers with custom IPs, and I made that change this morning.
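Since the syslog is mirrored to flash, one starting point is to pull out the last lines written before each boot. This is a sketch, not a definitive method: it assumes each boot logs a kernel line containing "Linux version", and the function name `lines_before_boot` is hypothetical. The mirrored log is assumed to be at a path like `/boot/logs/syslog`; pass whatever path yours actually uses.

```shell
#!/bin/bash
# Hypothetical helper: print the N lines logged just before each boot.
# Assumption: every boot writes a kernel line containing "Linux version".
lines_before_boot() {
    local log="$1" n="${2:-20}"
    # -B prints N lines of leading context before each matching line.
    grep -B "$n" 'Linux version' "$log"
}
```

If the lines before every unplanned reboot show the same task or driver message, that is a clue worth following. If they show nothing unusual, a hard lockup (hardware, power, or a kernel hang) becomes more likely.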

-

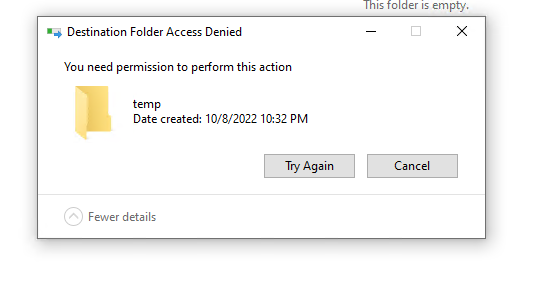

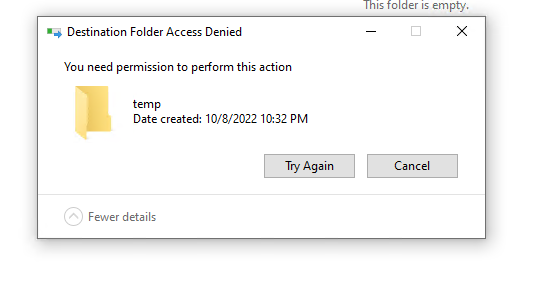

Certainly the new integration of VirtioFS is exciting. I upgraded to 6.11.1 with no problems and got VirtioFS working on a Windows 10 VM. I get an unexpected result: I can read all the data I want from the share, I can delete anything I want, and I can create folders, but I can't create files. I tried this on both a BTRFS and an XFS filesystem. The VM user is one of the approved users for those shares (although that is likely irrelevant because this would bypass all user permissions). Where would I look to start diagnosing this?

-


On 5/17/2022 at 5:03 AM, Masterwishx said:

Do you mean -v /mnt/user/Backup:/media in container?

Yes, but I used Unraid to create a share called Backup first and then I pointed the container at it.

-

13 hours ago, Masterwishx said:

No its made in /mnt/user/urbackup_tmp_files after install.

i used only default paths in container :

-v /mnt/user:/media \

-v /mnt/user/appdata/binhex-urbackup:/config \

i saw some tmp option in urBackup maybe its used for this...

I created a share called Backup and I pointed my docker container to that so I could control access to that folder specifically. The urbackup_tmp_files is in my mnt/user/backup/ directory. That's probably why I haven't noticed it before.

-


1 hour ago, Masterwishx said:

After first install of docker i have automaticly share created "urbackup_tmp_files" do i need it ? for what its used?

That is probably in your /mnt/user/appdata/urbackup/config folder? It's probably used for temporary files needed by urbackup. Did you specify the location for the backups when installing the docker?

-

20 minutes ago, Masterwishx said:

i understand now we can use image backup for windows?

Yes, I use it for 6 Windows computers and have tested/restored two of them. Interesting side note: all of the Windows computers I run have VeraCrypt-encrypted hard drives as the primary drive that urbackup backs up. When restored, the drive is unencrypted until it is re-encrypted with VeraCrypt. But the important thing is that it still works!

-


4 minutes ago, trurl said:

I thought you

Original array disk, no. Vibrating disk I was testing, yes

-

Do I...stop the array, switch the emulated disk to none, start the array, stop the array, put the original disk back in that slot, and start the array, and it will rebuild from parity?

-

I was testing some 2TB and 3TB non-array disks in the same enclosure as my array disks. Docker and VMs were already disabled prior to testing. One of the non-array test disks started vibrating wildly and throwing UDMA CRC errors left and right. Then some array disks started accumulating CRC errors and one of them became disabled. I stopped all of my processes (badblocks on 5 disks) and tried to power down gracefully with the powerdown command. The system hung and could not be pinged. I waited 15 minutes and manually powered off everything.

I removed the offending drive and promptly put two .22 caliber bullet holes into it for a quick, easy, and satisfying wipe before putting it in the trash.

Now the server is restarted, and I'm testing the non-array disks again. The array came up with one disabled/emulated disk. I started it leaving the configuration alone as it said it would do a read test of all the other disks. I figured that would be a good thing and it continued gracefully with no weird format prompts.

Questions/Options:

Is it possible to get this disabled disk back in the array, or do I have to rebuild it onto itself?

Do I have to stop the array to run an XFS filesystem check on the disabled disk? It won't mount read only because I assume the emulated disk is using its uuid.

If I were successful at getting it back into the array (possibly using new config), would I need to rebuild/recheck the parity?

Ideally, I would like to get this disk mounted somehow, copy its contents to another disk, and then put it in the array, letting parity rebuild it. Someone helped me before with an XFS command that generated a new UUID (I found my previous post), but I know if I do that, I probably can't bring it back into the array.

All the data is backed up...to B2. The disks I'm testing are for my onsite backup pool, and I had to destroy the data on them to retest them. I got some larger disks and freed up slots in the server (12 total) so I could move my backup disks out of eSATA/USB3 enclosures and into the main server box.

Diagnostics attached for posterity. If y'all have any better paths than what I've thought of, I'm open to suggestions.

-

3 hours ago, gowg said:

Did you ever figure this out? I'm having the same issue.

Unfortunately, no I didn't. I haven't had much free time to dig into it lately - started a new job in January and I'm still learning the ropes. I just tried it again and it did not succeed, but I didn't get an error in the log. I can confirm that the backups absolutely work awesome, even when the client system is using a veracrypt hard drive.

-


2 minutes ago, stayupthetree said:

Perhaps someone here can explain how with zero devices passed through to the container, the container still shows both drives?

That's where I got to with it. I couldn't understand how it was still seeing sr0 as sr0 when I intentionally tried to map it to a different sr(x) for testing purposes. This docker works excellently with one drive. My server has one built-in (laptop style) DVD burner, and I have a USB Blu-ray burner. In order to rip Blu-rays I have to use the makemkv docker, a VM, or my W10 laptop. I could never get ripper to ignore sr0 and go for the external. Mapping sr5 (external burner) to sr0 caused it to try to read from both drives simultaneously. I've been dabbling with Linux since 1999 (Red Hat 5.2), but only really got stuck in last year, so I'm just chalking it up to my own inexperience. I can follow complex directions, but I need more directions.

-

6 hours ago, rix said:

b) ship me a secondary optical drive for free (ideally your exact model) so I can try and diagnose this

@rix Are you in the USA? I have a spare internal SATA or external USB optical drive (choose one) I'd donate and ship to you. DM me if interested.

Three servers on 6.12.9 drives no longer sleep after upgrade from 6.12.8

in General Support

Posted · Edited by wildfire305

This worked on two of the three servers. I added a reboot in between disable/enable.

The third one was resolved by watching the disks that woke up from manual spin down command with

This showed that some metadata on existing old files was being modified by an hourly rclone process. Not sure why OneDrive has started modifying metadata on files, but since it gets modified, rclone syncs the changes. If there are no changes the disks don't wake up.
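The command used for this is not shown in the post. As one hypothetical way to look for the same symptom, the sketch below lists files whose inode change time (ctime, which is updated on metadata changes as well as content changes) falls within the last N minutes. The function name `recently_changed` and its defaults are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: list files whose ctime (inode change time) is within
# the last N minutes. ctime changes on metadata updates as well as writes.
recently_changed() {
    local dir="$1" minutes="${2:-60}"
    # -cmin -N matches files whose status changed less than N minutes ago.
    find "$dir" -type f -cmin "-$minutes"
}
```

Note that walking a share with `find` will itself spin up the disks holding it, so this is best run right after an unexpected wake-up, not while waiting for the disks to sleep.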