flaggart

Posts: 119

Posts posted by flaggart

-

That sucks. But thanks for helping.

-

Thank you both for your help with this so far.

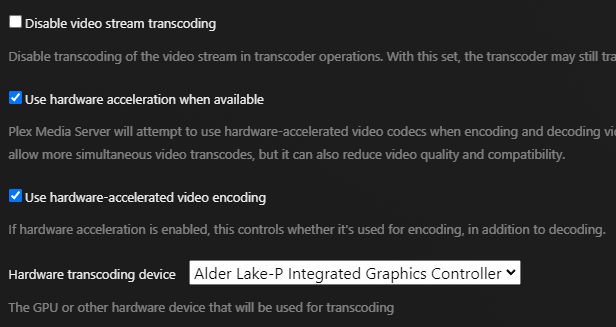

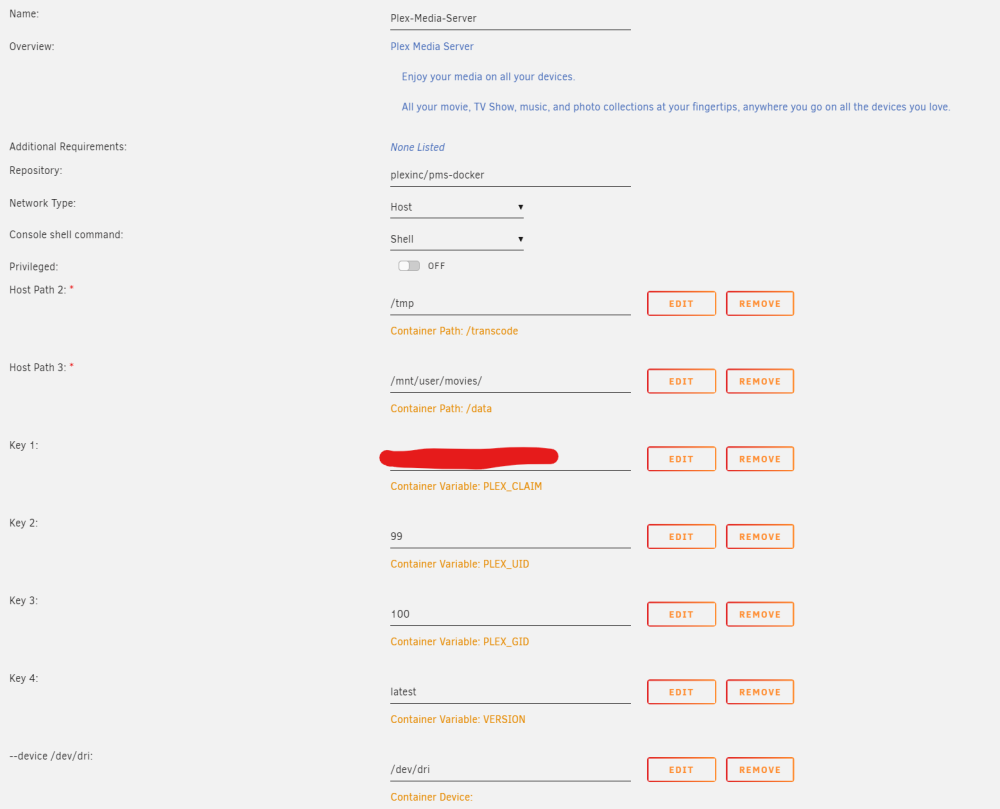

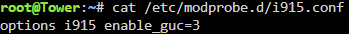

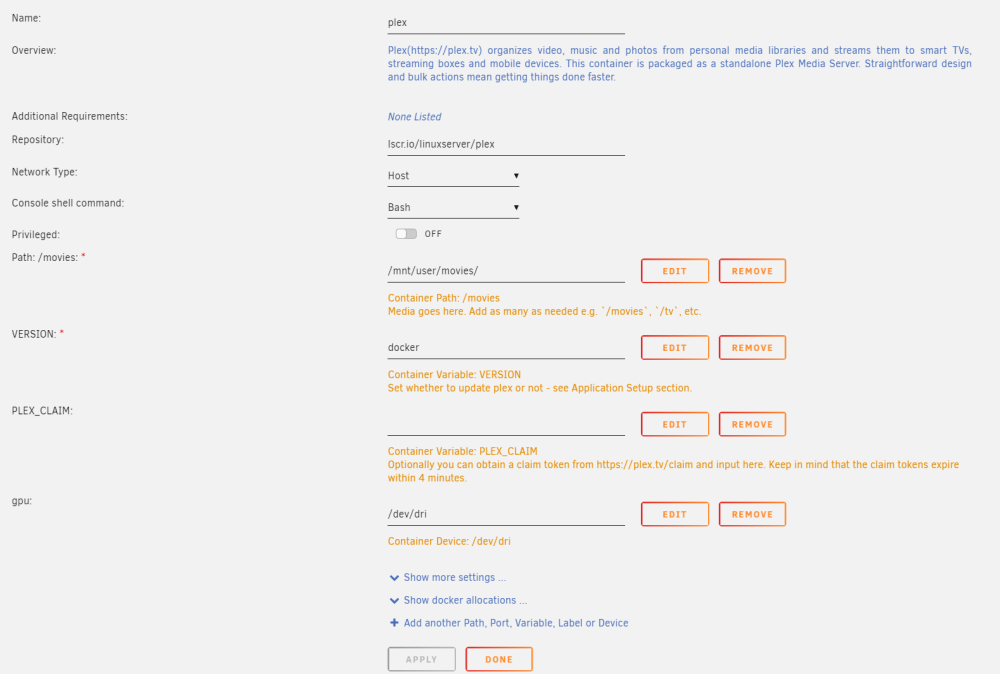

@ich777 I created i915.conf and rebooted. No change. Here is the Docker config. I am using the linuxserver image, but I also tried the official Docker image with the same result:

I don't know what steps are needed for the Plex claim. The Plex server web config shows it is claimed under my Plex account, which has Plex Pass.

-

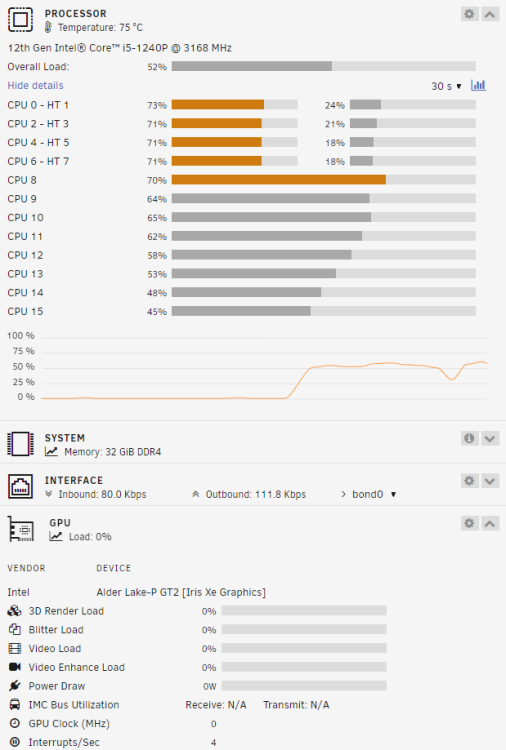

Running a single transcode in Plex hits the CPU, with no GPU usage:

Visible in Plex:

Errors in Plex log:

Jan 22, 2024 09:09:53.968 [22679530531640] DEBUG - [Req#367/Transcode] Codecs: testing h264 (decoder) with hwdevice vaapi

Jan 22, 2024 09:09:53.968 [22679530531640] DEBUG - [Req#367/Transcode] Codecs: hardware transcoding: testing API vaapi for device '/dev/dri/renderD128' (Alder Lake-P Integrated Graphics Controller)

Jan 22, 2024 09:09:53.968 [22679530531640] ERROR - [Req#367/Transcode] [FFMPEG] - Failed to initialise VAAPI connection: -1 (unknown libva error).

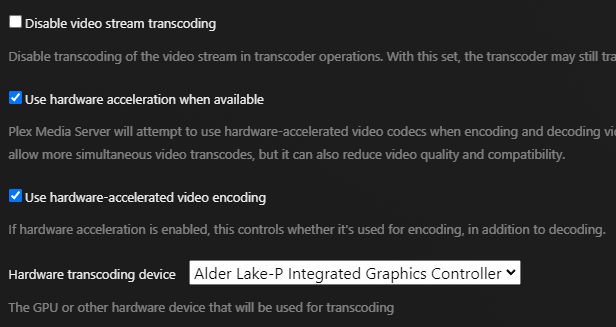

Yes, it is in the Docker config and I am able to select the GPU in the Plex web config, but it can never actually initialise or use it.
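When Plex can see the GPU but FFmpeg fails with "Failed to initialise VAAPI connection", one generic thing worth ruling out is whether the process can actually open the DRM nodes. The Python sketch below uses a hypothetical helper, `check_render_nodes` (not part of Plex or Unraid); it only lists the `card*`/`renderD*` nodes under `/dev/dri` and whether the current process can read and write them. It says nothing about whether the VAAPI driver itself loads, so treat it as a first check, ideally run inside the container if Python is available there.

```python
import os
import stat
from pathlib import Path

def check_render_nodes(dri_dir="/dev/dri"):
    """List card*/renderD* nodes in dri_dir with this process's access rights.

    Hypothetical diagnostic: checks existence and permissions only, not
    whether the VAAPI driver can initialise on the device.
    """
    results = []
    path = Path(dri_dir)
    if not path.is_dir():
        return results  # nothing passed through / directory missing
    for node in sorted(path.iterdir()):
        # Skip helper entries such as the by-path directory
        if not (node.name.startswith("renderD") or node.name.startswith("card")):
            continue
        results.append({
            "node": node.name,
            "readable": os.access(node, os.R_OK),
            "writable": os.access(node, os.W_OK),
            "mode": oct(stat.S_IMODE(node.stat().st_mode)),
        })
    return results

if __name__ == "__main__":
    nodes = check_render_nodes()
    if not nodes:
        print("No /dev/dri nodes visible; is the device passed into the container?")
    for n in nodes:
        print(f"{n['node']}: readable={n['readable']} writable={n['writable']} mode={n['mode']}")
```

A node that exists but is not writable by the user Plex runs as would point at permissions rather than drivers; if both are fine, the problem is more likely in the driver stack.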

-

Hello

I am unable to get hardware transcoding working at all with an i5-1240P (Alder Lake CPU on a Framework mainboard). I am able to select the GPU in the Plex web config, but it can never actually initialise or use it:

Jan 22, 2024 09:09:53.968 [22679530531640] DEBUG - [Req#367/Transcode] Codecs: testing h264 (decoder) with hwdevice vaapi

Jan 22, 2024 09:09:53.968 [22679530531640] DEBUG - [Req#367/Transcode] Codecs: hardware transcoding: testing API vaapi for device '/dev/dri/renderD128' (Alder Lake-P Integrated Graphics Controller)

Jan 22, 2024 09:09:53.968 [22679530531640] ERROR - [Req#367/Transcode] [FFMPEG] - Failed to initialise VAAPI connection: -1 (unknown libva error).

Unraid 6.12.6

I tried both the linuxserver and the official Plex Docker images.

Any ideas?

Thanks

-

Hello, I am considering a project with a 12th-gen Framework laptop mainboard - https://frame.work/gb/en/products/mainboard-12th-gen-intel-core?v=FRANGACP06

I currently have a reasonably large Unraid NAS whose main purpose is running a Plex server. It consists of the Unraid host, in an unremarkable ATX PC case, and 2x 4U cases for the drives (up to 15 drives in each). The drives in each 4U case are connected to 4 ports on an internal SAS expander, which then connects via two external mini-SAS cables to the Unraid box. The Unraid box has a single HBA with 4 external ports (9206-16e).

I have upgraded my Framework laptop to AMD, and have the 12th-gen mainboard in the Cooler Master case, waiting for something interesting to do. I am interested in using it for Unraid because of its low power usage, because the Intel CPU is good for Plex transcoding, and because it has 4x Thunderbolt ports.

To connect my drives, I am considering going Thunderbolt -> NVMe -> pci-e by buying 2x Thunderbolt NVMe adapters and 2x ADT-Link NVMe to pci-e adapters (such as https://www.adt.link/product/R42SR.html; there are lots of variants). I can then place one of these inside each of my 4U cases, and put a 2-port SAS HBA card (e.g. 9207-8i) in each ADT-Link pci-e slot to connect to the drives via the SAS expander.

I am not looking for ultimate speed, just ease of connectivity and ultra-low power usage. Reviews put some Thunderbolt NVMe adapters quite low, e.g. 1 to 1.5 GB/s, but others approach 2.5 GB/s, which would be sufficient (across 15 drives this would be about 166 MB/s per drive during an Unraid parity check).

I'd be interested to hear whether anyone has thought of doing something similar, or has experience of the speeds achievable with Thunderbolt -> NVMe -> pci-e or similar.
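As a rough check on the adapter figures, the Python sketch below divides an adapter's throughput evenly across the drives being read at once. It assumes the adapter is the only bottleneck and that the review figures are in gigabytes per second: 2.5 GB/s over 15 drives gives about 166 MB/s per drive, whereas 2.5 Gbit/s would only give about 21 MB/s per drive.

```python
def per_drive_mb_s(adapter_gb_s: float, drives: int) -> float:
    """Share an adapter's throughput (decimal GB/s) evenly across drives, in MB/s.

    Assumes every drive is read at the same time, as during a parity check,
    and that the adapter is the only bottleneck.
    """
    if drives <= 0:
        raise ValueError("drives must be positive")
    return adapter_gb_s * 1000 / drives

for rate in (1.0, 1.5, 2.5):
    print(f"{rate} GB/s over 15 drives: {per_drive_mb_s(rate, 15):.1f} MB/s per drive")
```

Real parity checks will also be limited by the slowest drive and the HBA/expander path, so this is an upper bound.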

(PS. Why not Thunderbolt to pci-e directly? Well, these options seem less than ideal, as most solutions are geared towards external GPU usage and are either very expensive / bulky, or less easy to power (e.g. requiring a PSU connected via ATX 24-pin, assuming the card is high power). Maybe I am wrong and someone can share a good solution for this approach?)

-

https://www.highpoint-tech.com/rs6430ts-overview

1x 8-Bay JBOD Tower Storage

It seems cheaper for me to buy this directly from Highpoint with international shipping than it is to source the older 6418S locally.

-

I think I was overthinking it. Any hard link created by these apps will initially be on the cache, and mover will move it onto the array disks. Presumably mover is clever enough to move the file and then recreate the hard link on the same disk's filesystem. That seems to be the case from the output of "find /mnt/disk1/ -type f -not -links 1", so I can just use rsync with the -H flag to move files from one disk to another while preserving hard links. I think it is unlikely there are any hard links that span different disks but are linked through the array filesystem.
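To see which files on a disk are hard-linked together (roughly what `find /mnt/disk1/ -type f -links +1` prints, but grouped by inode), a small Python sketch like the following could be used. `hardlink_groups` is a hypothetical helper: it walks one directory tree, keeps regular files whose link count is above 1, and groups them by `(st_dev, st_ino)`. If a group has fewer paths than its link count, the remaining links live elsewhere on the same filesystem, outside the scanned tree; hard links cannot cross filesystems, so they cannot be on another disk.

```python
import os
import stat
from collections import defaultdict

def hardlink_groups(root):
    """Map (st_dev, st_ino) -> (link_count, [paths under root]) for hard-linked files.

    Hypothetical helper: only regular files with a link count above 1 are
    kept, and symlinks are not followed.
    """
    groups = defaultdict(lambda: [0, []])
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.lstat(path)  # lstat so symlinks are not followed
            if not stat.S_ISREG(st.st_mode) or st.st_nlink < 2:
                continue
            entry = groups[(st.st_dev, st.st_ino)]
            entry[0] = st.st_nlink
            entry[1].append(path)
    return {key: (nlink, paths) for key, (nlink, paths) in groups.items()}
```

For example, `hardlink_groups("/mnt/disk1")` would return one entry per linked inode on that disk; comparing `len(paths)` with the link count shows whether any links sit outside the tree you scanned.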

-

Hello

I use the usual tools such as Sonarr, Radarr etc., which keep the downloaded files and create a hard link in the final library location. I want to move all data off one disk in the array to free it up so that it can be used as a second parity disk instead. I realize I can't just naively copy all of the files from that disk to other disks, as this would break the hard links and take up more disk space as a result.

I know that the mover script checks files on the cache disk to see if they are hard links, and I assume if they are, it then finds the original file, and instead of moving from the cache to the destination disk, it instead creates a new hard link.

My question is: is there an existing tool I can use to empty one of my disks in this way, or will I need to write a similar script that checks each file to see if it is a hard link, and if so finds the original file, etc.?
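For reference, the core of such a script is small. The Python sketch below (`copy_tree_preserving_hardlinks` is a hypothetical name, not an existing Unraid tool) copies the first path it sees for each inode and hard-links every later path with the same inode to that copy, which is the same idea behind `rsync -H`. It assumes the source and destination are whole disks (for example one `/mnt/diskN` to another) so that all links of a file sit under the source, and it ignores symlinks, extended attributes and interrupted runs. It only copies; deleting the source is left for after you have verified the result.

```python
import os
import shutil
import stat

def copy_tree_preserving_hardlinks(src_root, dst_root):
    """Copy regular files from src_root to dst_root, recreating hard links.

    The first path seen for each inode is copied; later paths with the same
    inode are hard-linked to that copy instead. Returns (copied, linked).
    Hypothetical sketch: skips symlinks/special files, no xattrs or resume.
    """
    first_copy = {}  # (st_dev, st_ino) -> destination path of the first copy
    copied = linked = 0
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        dst_dir = os.path.normpath(os.path.join(dst_root, rel_dir))
        os.makedirs(dst_dir, exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(dst_dir, name)
            st = os.lstat(src)
            if not stat.S_ISREG(st.st_mode):
                continue  # skip symlinks, sockets, device files, etc.
            key = (st.st_dev, st.st_ino)
            if st.st_nlink > 1 and key in first_copy:
                os.link(first_copy[key], dst)  # same inode: link, don't copy
                linked += 1
            else:
                shutil.copy2(src, dst)  # copies data plus timestamps/mode
                first_copy[key] = dst
                copied += 1
    return copied, linked
```

In practice `rsync -aH` does the same job with far more robustness; the sketch is only meant to show why preserving links needs a map from inode to the first destination path.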

Thanks

-

On 9/21/2015 at 3:47 PM, JorgeB said:

Intel® SAS2 Expander RES2SV240 - 10W

Single Link with LSI 9211-8i (2400MB/s)

8 x 275MB/s

12 x 185MB/s

16 x 140MB/s (112MB/s*)

20 x 110MB/s (92MB/s*)

* Avoid using slower linking speed disks with expanders, as it will bring total speed down, in this example 4 of the SSDs were SATA2, instead of all SATA3.

Dual Link with LSI 9211-8i (4000MB/s)

12 x 235MB/s

16 x 185MB/s

Dual Link with LSI 9207-8i (4800MB/s)

16 x 275MB/s

Hello

This information is really useful and has inspired me to revamp my setup. I wonder if someone could explain why the single-link speed between the HBA and the expander would be 2400MB/s rather than 3000MB/s (6Gbit * 4 lanes = 24Gbit, or 3000MB/s).

Intended setup:

- 1x 9207-8e in a PCI-e 3.0 8x slot (max 7.877 GB/s)

- Single links in to 2x RES2SV240

- 16 disks per expander, all support SATA3

- Would this not provide 3000MB/s to each expander, and therefore up to 187.5MB/sec per disk - rather than 2400MB/s and 150MB/s?
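One likely factor behind the 2400MB/s figure is line encoding: 6Gbit/s SAS2 and SATA3 links use 8b/10b encoding, so each lane carries at most 600MB/s of data rather than 750MB/s. The Python sketch below only does that arithmetic, assuming a 4-lane wide port and ignoring any further protocol overhead.

```python
def wide_port_mb_s(lanes: int = 4, line_rate_gbit: float = 6.0,
                   data_bits: int = 8, line_bits: int = 10) -> float:
    """Usable MB/s of a SAS wide port; 8b/10b sends 8 data bits per 10 line bits."""
    return lanes * line_rate_gbit * 1000 * data_bits / line_bits / 8

raw = wide_port_mb_s(data_bits=10, line_bits=10)  # ignores encoding: 3000 MB/s
usable = wide_port_mb_s()                          # with 8b/10b: 2400 MB/s

for label, total in (("raw", raw), ("8b/10b", usable)):
    print(f"{label}: {total:.0f} MB/s per link, {total / 16:.1f} MB/s per disk (16 disks)")
```

With 16 disks per expander this gives 150MB/s per disk rather than 187.5MB/s, which matches the single-link numbers quoted above.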

Thanks

-

1 minute ago, ich777 said:

Can you replug the USB Tuner while the server is running? Some people had a problem with the DVB-Driver Plugin where it was not working, but if they replugged the USB Tuner it started working.

Can you give me the output from 'lsusb' or at least which tuners those are? I think the firmware or something else is missing.

Replugged; the Unraid-Kernel-Helper Plugin still says no custom loaded modules were found.

Output of "lsusb -v" attached

-

4 hours ago, ich777 said:

This is a USB Tuner or am I wrong?

Maybe try to replug it and it will hopefully show up, have you rebooted?

I can try and compile you a version with the TBS drivers.

Yes, it is USB. I've rebooted several times and tried all USB ports - I thought I was going mad and not overwriting the boot files properly!! There seem to be a few reports of the 5990 not being detected regardless of drivers, so I'm not sure the TBS drivers would make any difference, but if it is no hassle for you it would be nice to test.

The device is listed under lsusb but /dev/dvb does not exist and it does not show up under your plugin.

-

2 hours ago, ich777 said:

6.8.3 is not available anymore, sorry...

But you can download the prebuilt 6.8.3 image that has LibreELEC drivers builtin (first post at the bottom), they should also work fine with the TBS cards.

Hope that's good enough?

Ah, I see. I tried LibreELEC but it does not detect my TBS 5990. Thanks anyway.

-

Hello

Sorry if I am being a bit thick here, but all I want to do is build 6.8.3 with TBS drivers. There is no mention of how to specify this using environment variables on Docker Hub or in the first post here. Can someone please advise?

Thanks

-

On 8/7/2020 at 7:11 PM, rs5050 said:

I saw someone else post this same error a few days ago, but no additional messages were related to it.

I've been running rTorrentvpn under a docker container for months now with zero problems. I updated earlier this morning to the latest image and restarted and now my ruTorrent web won't open up correctly. I know my rtorrent client is running, I've got 1000+ torrents seeding and I can see them validated on the trackers without problems.

[07.08.2020 13:53:11] WebUI started. [07.08.2020 13:53:25] JS error: [http://rutorrent:[email protected]:9080/ : 5145] Uncaught TypeError: Cannot read property 'flabel_cont' of undefined

Log attached.

I had the same issue after update. Browser (Vivaldi) would not load the client and had this JS error. It worked fine in Chromium, and was fine after I unpinned the tab in Vivaldi and reloaded the page, ensuring adblock+tracker protection was off.

-

7 hours ago, jdlancaster13 said:

Hi Flaggart,

Reviving this thread again to ask you a question about your space management with the HDD bay directly above the PSU. I currently have 8 drives installed, and can't get the drive bay above the PSU to fit. What kind of cables are you using to make this work? I'd really appreciate it if you could drop a link.

I am not sure which drive area you mean.

If you mean the white 4x drive bay directly above the PSU, there should not be any issues with this regardless of cables.

If you mean the space with the velcro strap to the left of the PSU in the images above, I think I had to orient the drive lengthways so that the connectors were pointing towards the cable-management hole through to the other chamber. This matches the more recent image from stuntman83 above.

-

On 3/27/2020 at 6:09 AM, doowstados said:

How has your build done in terms of cooling with all of those drives over the years? Have you seen any degraded performance/thermal issues due to how close the drives are to each other/how the cooling in the case is configured? How stable are the drive cages (i.e. do they vibrate much when full of drives)?

I retired it towards the end of 2019, so it had a good 4.5 years. I had three fans in the drive chamber using Fan Auto Control to ramp up with drive temperature and never had an issue. If anything, the 4 drives in the main chamber were the ones that got the hottest, so I'd suggest putting drives there that get less use or have fewer platters, whatever makes them less likely to heat up.

In terms of vibration / noise, I had the box in the same room I slept in for a few years. I would not describe it as quiet but it wasn't bothersome.

-

Same here unfortunately

-

Hi all

Over the past few months I have been experiencing complete hard lockups of Unraid and have had to power cycle. Each time, it happens as a direct result of attempting to reboot the same Windows 10 VM (via the shutdown menu inside the VM, not using the web GUI). Syslog as follows:

May 4 11:17:41 SERVER kernel: mdcmd (552): spindown 10
May 4 12:25:40 SERVER kernel: mdcmd (553): spindown 0
May 4 13:22:59 SERVER kernel: mdcmd (554): spindown 10
May 4 13:23:00 SERVER kernel: mdcmd (555): spindown 5
May 4 13:52:18 SERVER kernel: mdcmd (556): spindown 9
May 4 16:04:17 SERVER kernel: rcu: INFO: rcu_sched detected stalls on CPUs/tasks:
May 4 16:04:17 SERVER kernel: rcu: 24-...0: (1 GPs behind) idle=a12/1/0x4000000000000000 softirq=109480389/109480389 fqs=14466
May 4 16:04:17 SERVER kernel: rcu: (detected by 25, t=60002 jiffies, g=587531517, q=76713)
May 4 16:04:17 SERVER kernel: Sending NMI from CPU 25 to CPUs 24:
May 4 16:04:17 SERVER kernel: NMI backtrace for cpu 24
May 4 16:04:17 SERVER kernel: CPU: 24 PID: 307 Comm: CPU 1/KVM Tainted: P O 4.19.107-Unraid #1
May 4 16:04:17 SERVER kernel: Hardware name: ASUSTek Computer INC. TS700-E7-RS8/Z9PE-D16 Series, BIOS 5601 06/11/2015
May 4 16:04:17 SERVER kernel: RIP: 0010:qi_submit_sync+0x154/0x2db
May 4 16:04:17 SERVER kernel: Code: 30 02 0f 84 40 01 00 00 4d 8b 96 b0 00 00 00 49 8b 42 10 83 3c 30 03 75 0b 41 bc f5 ff ff ff e9 27 01 00 00 49 8b 06 8b 48 34 <f6> c1 10 74 68 49 8b 06 8b 80 80 00 00 00 c1 f8 04 41 39 c3 75 57
May 4 16:04:17 SERVER kernel: RSP: 0018:ffffc90006a8bb50 EFLAGS: 00000093
May 4 16:04:17 SERVER kernel: RAX: ffffc9000001f000 RBX: 0000000000000100 RCX: 0000000000000000
May 4 16:04:17 SERVER kernel: RDX: 0000000000000001 RSI: 000000000000006c RDI: ffff88903f418a00
May 4 16:04:17 SERVER kernel: RBP: ffffc90006a8bba8 R08: 0000000000640000 R09: 0000000000000000
May 4 16:04:17 SERVER kernel: R10: ffff88903f418a00 R11: 000000000000001a R12: 00000000000001b0
May 4 16:04:17 SERVER kernel: R13: ffff88903f418a00 R14: ffff88903f40f400 R15: 0000000000000086
May 4 16:04:17 SERVER kernel: FS: 000014bc64dff700(0000) GS:ffff88a03fa00000(0000) knlGS:0000000000000000
May 4 16:04:17 SERVER kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
May 4 16:04:17 SERVER kernel: CR2: ffffe4881907d478 CR3: 000000108a6ba005 CR4: 00000000001626e0
May 4 16:04:17 SERVER kernel: Call Trace:
May 4 16:04:17 SERVER kernel: modify_irte+0xf0/0x136
May 4 16:04:17 SERVER kernel: intel_irq_remapping_deactivate+0x2d/0x47
May 4 16:04:17 SERVER kernel: __irq_domain_deactivate_irq+0x27/0x33
May 4 16:04:17 SERVER kernel: irq_domain_deactivate_irq+0x15/0x22
May 4 16:04:17 SERVER kernel: __free_irq+0x1d8/0x238
May 4 16:04:17 SERVER kernel: free_irq+0x5d/0x75
May 4 16:04:17 SERVER kernel: vfio_msi_set_vector_signal+0x84/0x231
May 4 16:04:17 SERVER kernel: ? flush_workqueue+0x2bf/0x2e3
May 4 16:04:17 SERVER kernel: vfio_msi_set_block+0x6c/0xac
May 4 16:04:17 SERVER kernel: vfio_msi_disable+0x61/0xa0
May 4 16:04:17 SERVER kernel: vfio_pci_set_msi_trigger+0x44/0x230
May 4 16:04:17 SERVER kernel: ? pci_bus_read_config_word+0x44/0x66
May 4 16:04:17 SERVER kernel: vfio_pci_ioctl+0x52d/0x9a2
May 4 16:04:17 SERVER kernel: ? vfio_pci_config_rw+0x209/0x2a6
May 4 16:04:17 SERVER kernel: ? __seccomp_filter+0x39/0x1ed
May 4 16:04:17 SERVER kernel: vfs_ioctl+0x19/0x26
May 4 16:04:17 SERVER kernel: do_vfs_ioctl+0x533/0x55d
May 4 16:04:17 SERVER kernel: ksys_ioctl+0x37/0x56
May 4 16:04:17 SERVER kernel: __x64_sys_ioctl+0x11/0x14
May 4 16:04:17 SERVER kernel: do_syscall_64+0x57/0xf2
May 4 16:04:17 SERVER kernel: entry_SYSCALL_64_after_hwframe+0x44/0xa9
May 4 16:04:17 SERVER kernel: RIP: 0033:0x14bc687a54b7
May 4 16:04:17 SERVER kernel: Code: 00 00 90 48 8b 05 d9 29 0d 00 64 c7 00 26 00 00 00 48 c7 c0 ff ff ff ff c3 66 2e 0f 1f 84 00 00 00 00 00 b8 10 00 00 00 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d a9 29 0d 00 f7 d8 64 89 01 48
May 4 16:04:17 SERVER kernel: RSP: 002b:000014bc64dfe2e8 EFLAGS: 00000246 ORIG_RAX: 0000000000000010
May 4 16:04:17 SERVER kernel: RAX: ffffffffffffffda RBX: 000014b84150a200 RCX: 000014bc687a54b7
May 4 16:04:17 SERVER kernel: RDX: 000014bc64dfe2f0 RSI: 0000000000003b6e RDI: 000000000000004b
May 4 16:04:17 SERVER kernel: RBP: 000014b84150a200 R08: 000000000000006c R09: 00000000ffffff00
May 4 16:04:17 SERVER kernel: R10: 000014b82cd8406b R11: 0000000000000246 R12: 000000000000006a
May 4 16:04:17 SERVER kernel: R13: 0000000000000080 R14: 0000000000000002 R15: 000014b84150a200

The VM's output at this point is the Windows shutdown screen saying "Restarting...". If I try to use virsh to shut it down I get the following, and shortly after, a total Unraid lockup:

virsh # destroy "Windows 10"
error: Failed to destroy domain Windows 10
error: Timed out during operation: cannot acquire state change lock (held by monitor=remoteDispatchDomainReset)

General info:

Unraid 6.8.3

Platform: Asus Z9PE-D16 with 2x Xeon 2667 v2

RAM: 128GB ECC

The VM is pinned to the second physical CPU, which is isolated from Unraid. It has an RTX 2070 Super and an NVMe drive passed through to it.

<?xml version='1.0' encoding='UTF-8'?>
<domain type='kvm' id='2'>
  <name>Windows 10</name>
  <uuid>39ac96f3-a777-0c5e-419f-596878b407e9</uuid>
  <description>Win-10</description>
  <metadata>
    <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
  </metadata>
  <memory unit='KiB'>17301504</memory>
  <currentMemory unit='KiB'>17301504</currentMemory>
  <memoryBacking>
    <nosharepages/>
  </memoryBacking>
  <vcpu placement='static'>16</vcpu>
  <iothreads>2</iothreads>
  <cputune>
    <vcpupin vcpu='0' cpuset='8'/>
    <vcpupin vcpu='1' cpuset='24'/>
    <vcpupin vcpu='2' cpuset='9'/>
    <vcpupin vcpu='3' cpuset='25'/>
    <vcpupin vcpu='4' cpuset='10'/>
    <vcpupin vcpu='5' cpuset='26'/>
    <vcpupin vcpu='6' cpuset='11'/>
    <vcpupin vcpu='7' cpuset='27'/>
    <vcpupin vcpu='8' cpuset='12'/>
    <vcpupin vcpu='9' cpuset='28'/>
    <vcpupin vcpu='10' cpuset='13'/>
    <vcpupin vcpu='11' cpuset='29'/>
    <vcpupin vcpu='12' cpuset='14'/>
    <vcpupin vcpu='13' cpuset='30'/>
    <vcpupin vcpu='14' cpuset='15'/>
    <vcpupin vcpu='15' cpuset='31'/>
    <emulatorpin cpuset='0,16'/>
    <iothreadpin iothread='1' cpuset='1,17'/>
    <iothreadpin iothread='2' cpuset='2,18'/>
  </cputune>
  <numatune>
    <memory mode='preferred' nodeset='1'/>
  </numatune>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type arch='x86_64' machine='pc-i440fx-3.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
    <nvram>/etc/libvirt/qemu/nvram/39ac96f3-a777-0c5e-419f-596878b407e9_VARS-pure-efi.fd</nvram>
  </os>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state='on'/>
      <vapic state='on'/>
      <spinlocks state='on' retries='8191'/>
      <vendor_id state='on' value='1278467890ab'/>
    </hyperv>
  </features>
  <cpu mode='host-passthrough' check='none'>
    <topology sockets='1' cores='8' threads='2'/>
    <cache mode='passthrough'/>
    <feature policy='require' name='topoext'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='hypervclock' present='yes'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/local/sbin/qemu</emulator>
    <controller type='pci' index='0' model='pci-root'>
      <alias name='pci.0'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <alias name='virtio-serial0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </controller>
    <controller type='usb' index='0' model='ich9-ehci1'>
      <alias name='usb'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci1'>
      <alias name='usb'/>
      <master startport='0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci2'>
      <alias name='usb'/>
      <master startport='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci3'>
      <alias name='usb'/>
      <master startport='4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
    </controller>
    <interface type='bridge'>
      <mac address='52:54:00:be:8c:35'/>
      <source bridge='br0'/>
      <target dev='vnet1'/>
      <model type='virtio'/>
      <alias name='net0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </interface>
    <serial type='pty'>
      <source path='/dev/pts/2'/>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
      <alias name='serial0'/>
    </serial>
    <console type='pty' tty='/dev/pts/2'>
      <source path='/dev/pts/2'/>
      <target type='serial' port='0'/>
      <alias name='serial0'/>
    </console>
    <channel type='unix'>
      <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-2-Windows 10/org.qemu.guest_agent.0'/>
      <target type='virtio' name='org.qemu.guest_agent.0' state='connected'/>
      <alias name='channel0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='tablet' bus='usb'>
      <alias name='input0'/>
      <address type='usb' bus='0' port='1'/>
    </input>
    <input type='mouse' bus='ps2'>
      <alias name='input1'/>
    </input>
    <input type='keyboard' bus='ps2'>
      <alias name='input2'/>
    </input>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x83' slot='0x00' function='0x0'/>
      </source>
      <alias name='hostdev0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x83' slot='0x00' function='0x1'/>
      </source>
      <alias name='hostdev1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x00' slot='0x1d' function='0x0'/>
      </source>
      <alias name='hostdev2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x82' slot='0x00' function='0x0'/>
      </source>
      <boot order='1'/>
      <alias name='hostdev3'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x83' slot='0x00' function='0x2'/>
      </source>
      <alias name='hostdev4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x83' slot='0x00' function='0x3'/>
      </source>
      <alias name='hostdev5'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
    </hostdev>
    <memballoon model='none'/>
  </devices>
  <seclabel type='dynamic' model='dac' relabel='yes'>
    <label>+0:+100</label>
    <imagelabel>+0:+100</imagelabel>
  </seclabel>
</domain>

Can anyone advise what the issue might be, or at least on a way to deal with this without it taking down the entire system?

Thanks

-

Surprised there is not more activity from others in this thread; it would be amazing to be able to virtualise integrated graphics in the same way as the rest of the CPU and have multiple VMs benefit from it. I would ask you for more details so I could try it myself, but I didn't think something like this would be possible, and now I am running Xeons.

-

I would also like this. In its absence I have been referring to this post which suggests the same information is available without the tool.

-

Hi all

Like many here (I assume), I am planning on a Ryzen 3000 series build in the next couple of months, to replace my existing setup. This brings with it some choices around chipsets, pci-e lanes, etc. My current setup is:

- Node 804 (limited to Micro ATX or smaller)

- i7-2600k

- Supermicro Micro ATX

- Broadcom 9207-8i 8 port HBA (PCI-e 3.0 8x)

- TBS dual DVB tuner (PCI-e 2.0 1x)

Switching to Ryzen has certain limitations:

- Lack of integrated graphics - meaning I have to fit a GPU on a mATX board reducing the number of available slots for other cards

- Only 6 (B450) or 8 (X470) PCI-e 2.0 lanes from chipset.

So the best case would be PCI-e 2.0 4x for the 9207-8i (in a 16x mechanical slot), meaning it will only be running at 2GB/s total instead of 8GB/s. Across 8 drives this works out to ~2Gbit/s per drive, so it is above SATA1 speed and I assume unlikely to limit performance much for mechanical disks - can anyone confirm? Will the card even function like this?
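The per-drive figure can be checked with a little arithmetic. The Python sketch below assumes the commonly quoted usable per-lane rates after line encoding (about 500MB/s for PCIe 2.0 and about 985MB/s for PCIe 3.0) and an even split across the 8 drives an 8-port HBA can attach directly, ignoring other protocol overhead.

```python
# Approximate usable bandwidth per PCIe lane in MB/s, after line encoding:
# PCIe 2.0 = 5 GT/s with 8b/10b; PCIe 3.0 = 8 GT/s with 128b/130b (~984.6, rounded).
PCIE_LANE_MB_S = {2: 500, 3: 985}

# SATA1: 1.5 Gbit/s line rate with 8b/10b encoding -> 150 MB/s of data.
SATA1_MB_S = 1.5 * 1000 * 8 / 10 / 8

def pcie_share_per_drive(gen: int, lanes: int, drives: int) -> float:
    """Even share of a PCIe link's usable bandwidth per drive, in MB/s."""
    return PCIE_LANE_MB_S[gen] * lanes / drives

for gen, lanes in ((2, 4), (3, 8)):
    share = pcie_share_per_drive(gen, lanes, drives=8)
    print(f"PCIe {gen}.0 x{lanes}: {share:.0f} MB/s per drive "
          f"({share * 8 / 1000:.1f} Gbit/s); SATA1 is {SATA1_MB_S:.0f} MB/s")
```

At 250MB/s per drive the x4 link would sit above what most mechanical disks sustain, though all drives running flat out at once (e.g. a parity check) is where any limit would show.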

Generally, will Ryzen boards boot (and will unRaid function) if no GPU is present?

I am aware that new boards with X570 chipset may solve this problem (12 Gen 4 lanes from chipset), but trying to clarify if it is possible to go with a cheaper chipset.

-

I just used the velcro straps that were already there - I think they are supposed to be for cable management, it wasn't the greatest arrangement. I just settled for one drive there.

Intel 12th generation Alder Lake / Hybrid CPU (in Motherboards and CPUs)

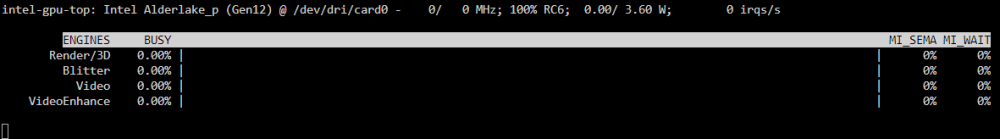

I tested Unraid with kernel 6.7.5 today and still have the same issue.

Intel Alderlake_p (Gen12) @ /dev/dri/card0 shows up in intel_gpu_top, and it is selectable in the Plex settings, but it is not used when transcoding.