

Everything posted by boxer74

-

I am already using Tailscale through a Docker container, and it is working as I would like. What is the advantage of the plugin? I suppose it would stay connected even if the array is down. Is that about it?

-

Sanoid/Syncoid (ZFS snapshots and replication)

boxer74 replied to steini84's topic in Plugin Support

Any reason to keep using this? Will these features become built into 6.12?

Great, thanks!

-

Will this be updated to work with Unraid 6.12?

-

Will this be updated to support Unraid 6.12? I tried the new RC2, and this plugin isn't working and may be causing the dashboard to be blank.

-

How does one join the beta program?

-

Got this running nicely. I noticed that the resolution is capped at 1600x900, even with a dummy HDMI dongle inserted. Any idea how to increase the resolution? This is fine for handhelds, but it's not ideal if I want to play on my 65" 4K TV using Android TV. Thanks.

-

I think it would be a bit much to expect ZFS feature parity with a project like TrueNAS, which has been ZFS-focused for years. Allowing ZFS in pools is a nice complement to Unraid. Snapshot/replication management would be nice, for BTRFS as well as ZFS, but I think the existing LUKS encryption implementation is a suitable compromise for now.

-

It would be great if ZFS snapshot and replication management were available natively through the web GUI, but I understand if this comes in a later update.

-

ZFS has been around much longer and is battle-tested in production at many businesses. I would trust my data long-term to ZFS more than to BTRFS. Other than that, they are pretty similar in feature set.

-

Exciting to hear some official details on this. I will keep my media on a traditional Unraid array but migrate important stuff (documents, family photos, etc.) to a ZFS pool, likely a mirror of two 4TB hard drives. I will likely also migrate Docker and VMs to a ZFS pool of two NVMe disks. Loving the potential here.

-

Thanks for this container. What a slick setup. While waiting for my new GTX 1650 to arrive (yes, I know, not a high-end card, but still better than what's in the Steam Deck, and it should be fine for streaming), I was about to set up a Windows VM, but then I saw this. I tested Hades using a Quadro P400 that I have, and it actually worked reasonably well, though that card is obviously no good for larger games. I also ordered a Retroid Pocket 3+ to use as a streaming handheld. The Deck is too large and loud (fan), and with its poor battery life I don't feel the urge to use it. I use my Nintendo Switch more often, but the lack of AAA titles is tough to take at times. I think this could be the ideal setup. It's also nice having a nearly bottomless pit of Unraid storage to hold all the games.

-

I'm seeing the same, but my situation is unusual: I'm running Unraid as a VM in Proxmox. I'd like to use the IPMI plugin to control the host's fan speeds based on the temperatures of the hard drives, which are attached to an LSI HBA passed through to the VM via PCI passthrough. I can't get any sensors to show up.
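Once drive temperatures are readable, the control logic itself is simple: take the hottest drive and map it onto a fan duty cycle. A minimal sketch of a linear fan curve, assuming hypothetical thresholds (35 °C to 45 °C mapped onto 30% to 100% duty); this is not the IPMI plugin's own logic, and actually sending the duty cycle to the BMC is board-specific and not shown.

```python
def fan_duty_for_temps(drive_temps_c, min_temp=35, max_temp=45,
                       min_duty=30, max_duty=100):
    """Map the hottest drive temperature (deg C) to a fan duty cycle (percent).

    All threshold defaults are hypothetical examples, not recommended values.
    """
    hottest = max(drive_temps_c)
    if hottest <= min_temp:
        return min_duty          # cool drives: run fans at the floor
    if hottest >= max_temp:
        return max_duty          # hot drives: full speed
    # Linear interpolation between the two thresholds.
    frac = (hottest - min_temp) / (max_temp - min_temp)
    return round(min_duty + frac * (max_duty - min_duty))


if __name__ == "__main__":
    print(fan_duty_for_temps([38, 40, 36]))
```

Keying off the hottest drive rather than the average means a single hot disk is enough to raise fan speed.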

-

For this to work, I had to add --dns=X.X.X.X to the Plex container. I've now found that my 'arr containers can't connect to Plex except through an external address. They are on different subnets, but my firewall allows them to communicate. I think this might be due to Docker networking. What is the ideal way to set this up? I assume most people have a single subnet and don't need to worry about this.
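Whether two containers can talk directly or must go through the router (and its firewall rules) depends on whether their addresses fall in the same subnet. A small sketch using Python's standard `ipaddress` module, assuming hypothetical /24 networks `192.168.2.0/24` (LAN) and `192.168.6.0/24` (Docker VLAN) and hypothetical container addresses:

```python
import ipaddress

# Hypothetical example networks; substitute the real LAN and VLAN subnets.
NETWORKS = [
    ipaddress.ip_network("192.168.2.0/24"),  # LAN (assumed)
    ipaddress.ip_network("192.168.6.0/24"),  # Docker VLAN (assumed)
]


def same_subnet(a, b, networks=NETWORKS):
    """Return True if addresses a and b both fall inside one of the given networks."""
    ip_a, ip_b = ipaddress.ip_address(a), ipaddress.ip_address(b)
    return any(ip_a in net and ip_b in net for net in networks)


if __name__ == "__main__":
    # Two hypothetical container IPs, one on each subnet.
    print(same_subnet("192.168.2.50", "192.168.6.10"))
```

If the check returns False, traffic between the two containers has to be routed through the gateway, so both the firewall rules and any DNS answers they rely on come into play.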

-

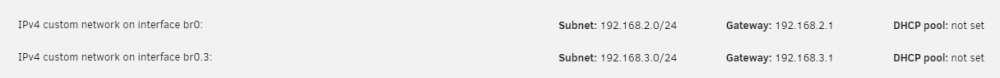

I believe I've correctly enabled the settings to add a VLAN and configured it in the Docker settings, but when I assign a custom IP in that VLAN to my Plex container, it has no DNS. I also see an error message during boot saying that eth0.3 can't be found. Any advice?

-

Every few hours, I see the following messages in my syslog:

Nov 24 09:36:59 ur1 rsync[6282]: connect from 192.168.2.1 (192.168.2.1)
Nov 24 09:36:59 ur1 vsftpd[6281]: connect from 192.168.2.1 (192.168.2.1)
Nov 24 09:36:59 ur1 rsyncd[6282]: forward name lookup for DreamMachine.localdomain failed: Name or service not known
Nov 24 09:36:59 ur1 rsyncd[6282]: connect from UNKNOWN (192.168.2.1)
Nov 24 09:37:10 ur1 smbd[6284]: [2021/11/24 09:37:10.442874, 0] ../../source3/smbd/process.c:341(read_packet_remainder)
Nov 24 09:37:10 ur1 smbd[6284]: read_fd_with_timeout failed for client 192.168.2.1 read error = NT_STATUS_END_OF_FILE.
Nov 24 09:39:22 ur1 vsftpd[7804]: connect from 192.168.6.1 (192.168.6.1)
Nov 24 09:39:22 ur1 rsync[7805]: connect from 192.168.6.1 (192.168.6.1)
Nov 24 09:39:23 ur1 rsyncd[7805]: forward name lookup for DreamMachine.localdomain failed: Name or service not known
Nov 24 09:39:23 ur1 rsyncd[7805]: connect from UNKNOWN (192.168.6.1)
Nov 24 09:39:33 ur1 smbd[7807]: [2021/11/24 09:39:33.981382, 0] ../../source3/smbd/process.c:341(read_packet_remainder)
Nov 24 09:39:33 ur1 smbd[7807]: read_fd_with_timeout failed for client 192.168.6.1 read error = NT_STATUS_END_OF_FILE.

192.168.2.1 is my LAN gateway IP. 192.168.6.1 is the gateway IP for the VLAN on my UniFi network that all my Docker containers are isolated on. I have firewall rules that prevent communication from the Docker VLAN to my LAN. I have WireGuard running on Unraid and set up a static route, and I also allowed host communication with Docker containers using custom networks, as recommended in the setup instructions. Any ideas what is causing these constant connection attempts?
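To see the pattern at a glance, the `connect from` lines can be tallied by daemon and source IP. A minimal Python sketch, assuming the relevant syslog lines have been pasted into a string (on Unraid the live log is usually `/var/log/syslog`, but reading it directly is left out here):

```python
import re
from collections import Counter

# The "connect from" entries copied from the syslog excerpt above.
SAMPLE = """\
Nov 24 09:36:59 ur1 rsync[6282]: connect from 192.168.2.1 (192.168.2.1)
Nov 24 09:36:59 ur1 vsftpd[6281]: connect from 192.168.2.1 (192.168.2.1)
Nov 24 09:36:59 ur1 rsyncd[6282]: connect from UNKNOWN (192.168.2.1)
Nov 24 09:39:22 ur1 vsftpd[7804]: connect from 192.168.6.1 (192.168.6.1)
Nov 24 09:39:22 ur1 rsync[7805]: connect from 192.168.6.1 (192.168.6.1)
Nov 24 09:39:23 ur1 rsyncd[7805]: connect from UNKNOWN (192.168.6.1)
"""

# Matches "<daemon>[<pid>]: connect from <name> (<ip>)".
CONNECT_RE = re.compile(r"\s(\w+)\[\d+\]: connect from \S+ \(([\d.]+)\)")


def count_connects(log_text):
    """Count 'connect from' entries per (daemon, source IP) pair."""
    counts = Counter()
    for line in log_text.splitlines():
        m = CONNECT_RE.search(line)
        if m:
            counts[(m.group(1), m.group(2))] += 1
    return counts


def count_by_ip(counts):
    """Collapse (daemon, ip) counts into per-IP totals."""
    totals = Counter()
    for (_daemon, ip), n in counts.items():
        totals[ip] += n
    return totals


if __name__ == "__main__":
    for (daemon, ip), n in sorted(count_connects(SAMPLE).items()):
        print(f"{ip:15} {daemon:8} {n}")
```

Running it over a full day of log makes it easier to tell whether each gateway touches the same set of services (rsync, vsftpd, smbd) on a fixed schedule.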

-

Thanks. That's a good script to have running. Are there any other recommended scripts to schedule when using BTRFS? I already have scrubs scheduled.

-

Thanks. I'll keep an eye on things. I have a scrub scheduled for Dec 1st. Does Unraid send a notification for BTRFS errors? I didn't get one for this recent error.

-

OK, I found the affected file, deleted it, and restored it from a backup. I assume the process now is to run a scrub and see if it comes back clean for disk 1? Not sure what caused the file corruption.

-

My server recently locked up during an update of a few Docker containers. I was able to save a diagnostic. It appears that my docker.img had some corruption. I've since deleted it, created a new one, and added back all my containers. I also ran a BTRFS scrub on my cache drive, and it came back clean. What is concerning is that disk 1 in my array, which has a BTRFS filesystem, reported 1 corruption:

Nov 21 08:09:19 ur1 kernel: BTRFS warning (device dm-0): csum failed root 273 ino 9248 off 6311936 csum 0x1a753a94 expected csum 0x58a4bd31 mirror 1
Nov 21 08:09:19 ur1 kernel: BTRFS error (device dm-0): bdev /dev/mapper/md1 errs: wr 0, rd 0, flush 0, corrupt 1, gen 0

btrfs dev stats -c /mnt/disk1
[/dev/mapper/md1].write_io_errs 0
[/dev/mapper/md1].read_io_errs 0
[/dev/mapper/md1].flush_io_errs 0
[/dev/mapper/md1].corruption_errs 1
[/dev/mapper/md1].generation_errs 0

I'm currently running a BTRFS scrub on disk 1 to find out which file is affected, though I'm not sure a scrub will report that. I can restore from a backup if necessary. This experience has me questioning my choice to use BTRFS. Advice would be greatly appreciated. Thanks.

ur1-diagnostics-20211122-0833.zip
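The `btrfs dev stats` output is easy to check mechanically, for example from a scheduled script that flags any non-zero counter. A minimal Python sketch, assuming the output format quoted above (`[device].counter value`); `read_dev_stats` wraps the real `btrfs device stats <mount>` command, while the parsing and reporting helpers are hypothetical names for illustration. (The `-c` flag used above already makes the command itself exit non-zero when any counter is non-zero, which may be enough for a shell script.)

```python
import re
import subprocess

# Output of `btrfs dev stats -c /mnt/disk1` as quoted above.
SAMPLE = """\
[/dev/mapper/md1].write_io_errs    0
[/dev/mapper/md1].read_io_errs     0
[/dev/mapper/md1].flush_io_errs    0
[/dev/mapper/md1].corruption_errs  1
[/dev/mapper/md1].generation_errs  0
"""

# Matches "[<device>].<counter> <value>".
STAT_RE = re.compile(r"^\[(?P<dev>[^\]]+)\]\.(?P<name>\w+)\s+(?P<value>\d+)$")


def parse_dev_stats(text):
    """Return {device: {counter_name: value}} from `btrfs device stats` output."""
    stats = {}
    for line in text.splitlines():
        m = STAT_RE.match(line.strip())
        if m:
            stats.setdefault(m["dev"], {})[m["name"]] = int(m["value"])
    return stats


def nonzero_counters(stats):
    """Return {(device, counter): value} for every counter that is not zero."""
    return {
        (dev, name): value
        for dev, counters in stats.items()
        for name, value in counters.items()
        if value
    }


def read_dev_stats(mount_point):
    """Run `btrfs device stats` on a mount point (needs root) and parse it."""
    result = subprocess.run(
        ["btrfs", "device", "stats", mount_point],
        capture_output=True, text=True, check=True,
    )
    return parse_dev_stats(result.stdout)


if __name__ == "__main__":
    for (dev, name), value in nonzero_counters(parse_dev_stats(SAMPLE)).items():
        print(f"{dev}: {name} = {value}")
```

Note that these counters are cumulative and persist until reset (for example with `btrfs device stats -z`), so a check like this keeps reporting the same old error until the counters are cleared.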

-

I have a G10+. I'm currently reducing the size of my media collection to try to consolidate onto 2 data disks with 1 parity disk. This will leave 1 of the 4 drive bays open for an SSD cache. My PCIe slot will be occupied by an Nvidia P400 GPU for Plex and Tdarr. I love the small form factor and build quality of the G10+, even though it limits drive expansion. With 16TB and 18TB drives now available, this is less of an issue.
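The capacity arithmetic behind a 2-data + 1-parity layout is straightforward in an Unraid array: parity contributes no usable space, each data disk contributes its full size, and every parity disk must be at least as large as the largest data disk. A small sketch of that rule, using hypothetical drive sizes in TB:

```python
def unraid_array_usable_tb(data_disks_tb, parity_disks_tb):
    """Usable capacity (TB) of an Unraid array.

    Data disks add their full size; parity disks add nothing but must each be
    at least as large as the largest data disk. Sizes passed in are examples.
    """
    if not data_disks_tb:
        raise ValueError("need at least one data disk")
    if parity_disks_tb and min(parity_disks_tb) < max(data_disks_tb):
        raise ValueError("each parity disk must be at least as large as the largest data disk")
    return sum(data_disks_tb)


if __name__ == "__main__":
    # Hypothetical: two 18TB data disks protected by one 18TB parity disk.
    print(unraid_array_usable_tb([18, 18], [18]))  # 36
```

With 18TB drives, the three array bays would yield 36TB usable while leaving the fourth bay for the SSD cache.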

-

This wouldn't be an issue for me. I may give this a go.

-

If this were used only for Docker, would it be a concern? I have an HPE Gen10 Plus with only 4 storage bays, and its single PCIe slot will be populated with my GPU for hardware transcoding, so there are no additional drive slots other than USB. Luckily it has two USB 3.2 ports on the front. I would just use a single USB SSD for Docker as an unassigned device and back it up weekly to the array. People use these as OS boot drives, so I'm thinking it would be okay. Thoughts?

-

I figured out my issue. All is well. Thanks.

-

I've tried everything to get this working between two Unraid servers, but no matter what I try, I keep getting prompted for a password. Did something change in 6.9 that no longer allows this for the root user?