Posts posted by storagehound

-

-

6 hours ago, mgutt said:

As you already replaced the power supply: Did you replace the cables, too? Then I would say your motherboard is dead.

Or do you maybe have a problem with temps?

Simply reboot in safe mode, so Plugins are all disabled. And press "c" while top is running to see the full command.

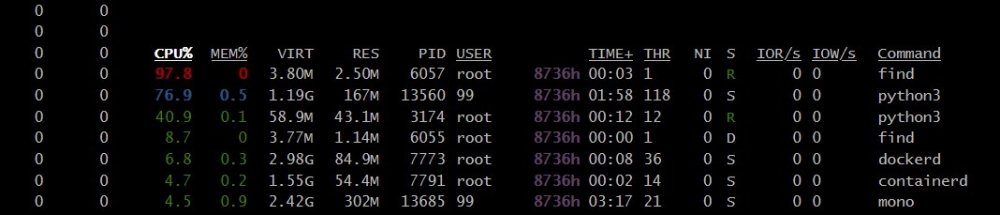

Thank you for the reply, mgutt. I have not replaced the cables yet. The temperatures look good. I put the server in safe mode before bed, and it has been up for over 8 hours now. I plan to leave it in safe mode for a couple of days to see if it stays up. Is "top" the same as "htop"?

-

Hello, unRAIDers

Not sure if I am posting in the right place. I could use your help with what I should do next. Diagnostics are attached. Here's what I did so far.

SPEC:

unRAID version 6.11.5 server.

Hardware specs:

Model: AVS-10/4-X-6

M/B: Supermicro - X10SL7-F

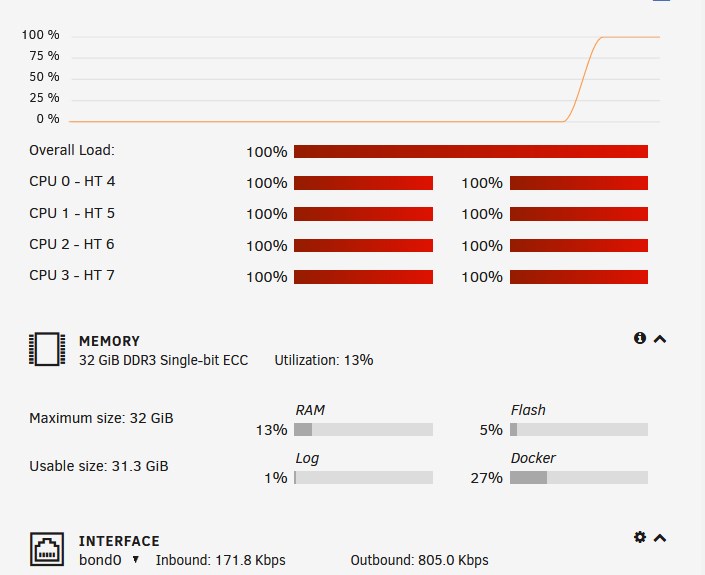

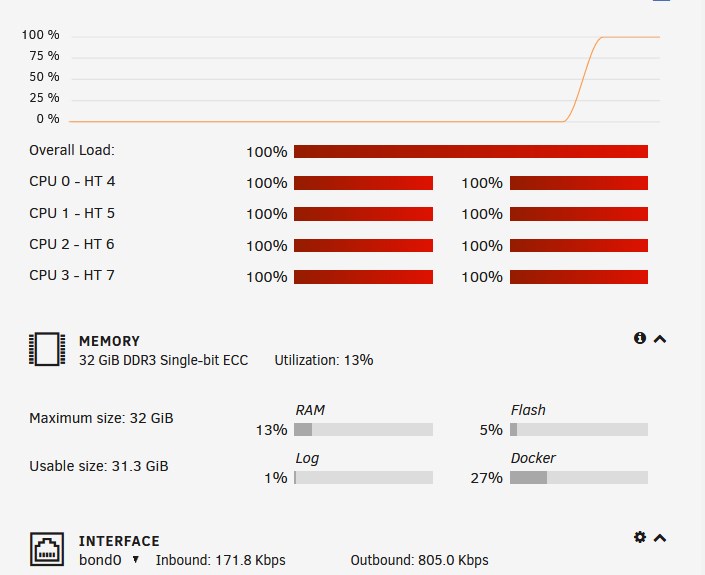

CPU: Intel® Xeon® CPU E3-1230 v3 @ 3.30GHz

The server just powered off on its own Friday. After several tries I got it to reboot. I saw each CPU core sit in the red almost the entire time I was in it.

It stayed up for maybe an hour, then shut down again and refused to come back up. I saw that the LE6 light on the motherboard was red, which indicated that the power supply needed to be replaced (according to the motherboard manual: https://www.supermicro.com/en/products/motherboard/X10SL7-F).

So Sunday I got a Seasonic 750 PSU to replace my Seasonic 650 (why not upgrade?). I replaced it and the server started. I noticed the crazy utilization again. Not sure that this wasn't contributing to the issue, I began trying some things from the unRAID forum. I installed "Glance" from the app store and saw CPU utilization in the red at 99% and higher. shfs, find, OpenVPN and a few Docker containers were popping up, but "shfs" was the worst and most consistent offender.

I followed a good idea from one of @mgutt's threads and checked my Docker paths. I updated anything I had missed from "/mnt/user/appdata" to "/mnt/cache/appdata". I also saw other suggestions and revisited the settings in the Tips & Tweaks plugin. I also updated some settings in Dynamix Cache Directories and turned off Plex's post credit scanning. After that, not every CPU was red the majority of the time. There were still spikes... and times they would go orange... but not the consistent red 90+ to 100% utilization across every core. "find" will spike in Glance more than "shfs", but not as often or as long as before. I shut the system down gracefully a few times and it came back up easily (unlike with the 650 PSU).

I thought I was good... but then the system powered off again. I am at a loss. It did come back up again. Things were red on the initial boot but eventually settled down. It was up for over 2 hours. I still didn't trust it. I wanted to eliminate other things before I considered replacing the motherboard.

I checked for swollen capacitors (?). I double-checked the cables. unRAID's tools didn't indicate any hardware issues. The OS logs seem reasonable to me. My Docker containers and plugins were up to date. *Pause* Then it shut down again. 😵 I also noticed that if I bring it down gracefully, the red light is still there. When I turn the server on, the red light (LE6) goes green. I'm a bit discouraged because I thought I had figured everything out.

Attached are my diagnostics (again, just to eliminate an OS component to this).

Thank you! 🤞

......

-

2 hours ago, Lolight said:

Their site is still up - http://greenleaf-technology.com/our-servers/

Have you tried to contact them?

"The builds on the website are out of date, I am working on a new website so the custom server email form is the best way to get the build you need and want."

Hi, @Lolight. Thanks for the reply. I did. I'm waiting on an e-mail reply assuming they are still active. However, I am still interested in other recommendations from our unRAID community.

-

Hello, People

I am at the point where I may be forced to replace my server. I would appreciate some advice on trusted sources for getting a pre-built server. My current one... which is failing... was purchased through unRAID (then Lime Tech) years ago when they had Greenleaf building systems for them. My hope is to just transfer the license and drives over to a new system with minimal fuss. Maybe even get something robust enough for current VM and transcoding needs.

Thank you

-

11 hours ago, binhex said:

so containers talking to each other in the SAME network can be done via localhost and the port the application uses, you should not be defining ports in VPN_OUTPUT_PORTS unless you want an application using the VPN network to communicate OUTSIDE of the VPN network to another application.

see A24 note 1 for details about localhost:- https://github.com/binhex/documentation/blob/master/docker/faq/vpn.md

see A27 for details about correct usage of VPN_OUTPUT_PORTS https://github.com/binhex/documentation/blob/master/docker/faq/vpn.md

you should be able to use a custom network - at least it did work in testing a long time ago!, what you cannot use is specific ip addresses for the vpn container, that will not work.

p.s. i do not like the usage of VPN_OUTPUT_PORTS or VPN_INPUT_PORTS actually, its a little confusing for the average user, however its a necessary evil (havent found a better way) to guarantee zero ip leakage which of course is the most important thing, so for now thats how it is.

Thank you, @binhex. I had only used VPN_OUTPUT_PORTS to get Overseerr to see Plex. I don't have Plex running through DelugeVPN (I think that is not recommended). I don't like its usage either... but I'm so appreciative that you implemented it.

I was only looking at custom networks and other things in the SpaceinvaderOne and Ibracorp videos because I am looking at expanding the functionality of my unRAID server in the near future (reverse proxies, etc.), as so many of you have.

Thanks again...

-

22 hours ago, JonathanM said:

BTW, I'm not sure that is a tested configuration. It may or may not work without modifying other parts of the container because of how locked down it is, @binhex would know.

Really cool of you to not only reply but to tag Binhex.

-

DelugeVPN and a Custom Network

Hi, Gurus

My setup: I have DelugeVPN set up with several containers connected to it by adding "--net=container:binhex-delugevpn" to their extra parameters. I set up ADDITIONAL_PORTS for those containers. To let the containers that needed to communicate with each other do so, I added their port numbers to VPN_OUTPUT_PORTS for DelugeVPN.

If I create a custom network and add DelugeVPN and those other containers to it, will I still need to use VPN_OUTPUT_PORTS for those containers? In an Ibracorp video it sounds like they are saying I will no longer need to specify ports for containers to communicate with each other (just the container name). I think I'm misunderstanding him.

Thanks

-

2 hours ago, Alex.b said:

Hey !

Thanks for your hard work ! I tried to install GluetunVPN to work with Windscribe but I have an error :

docker: Error response from daemon: driver failed programming external connectivity on endpoint GluetunVPN (fe8fb5c60c0ba952bd0b14eade9f1ecd97c9be5fe936e49f8e221241a9e36559): Bind for 0.0.0.0:8888 failed: port is already allocated.

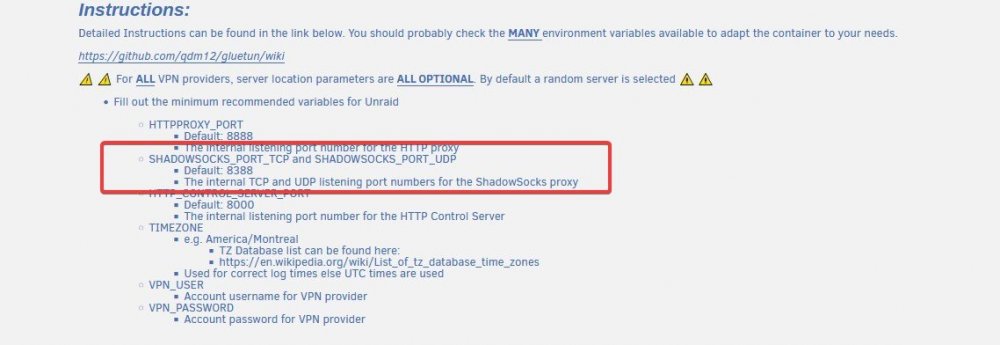

By default, these 3 ports are mapped to 8888, is it intended ?

I checked, no other container uses port 8888

Thank you !

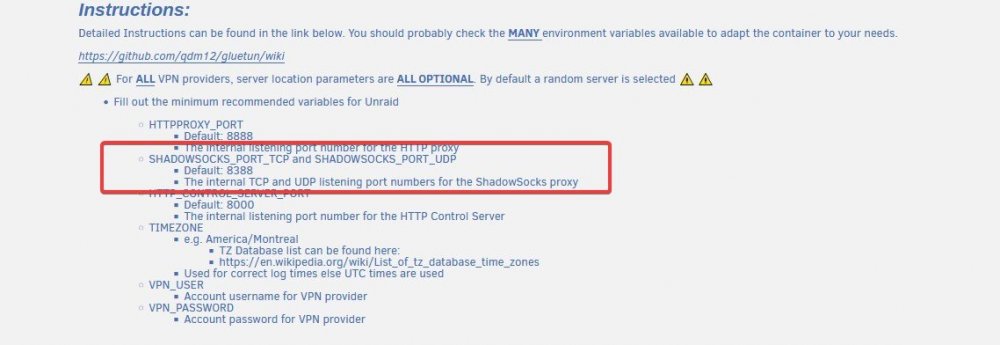

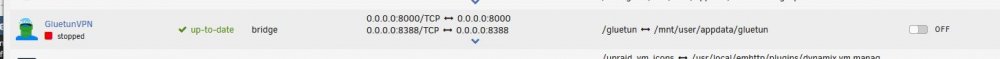

This got me too. The template does not default to the actual port numbers that the developer lists at the top of the template. Just scroll up and you'll see. I'll include a screenshot. The template should probably be updated to avoid that confusion. The last time I did a complete delete and rebuild, it still defaulted to 8888.
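For anyone hitting the same "port is already allocated" error, here is a minimal Python sketch of the check I ended up doing by eye: look for host ports that are mapped more than once with the same protocol. The function name and the three example mappings are hypothetical and only illustrate the situation described above (every entry defaulting to 8888); they are not copied from the actual template.

```python
from collections import Counter


def duplicate_host_ports(mappings):
    """Return (host_port, protocol) pairs that are mapped more than once.

    Each mapping is a (host_port, protocol, label) tuple. The same host
    port may be used once for tcp and once for udp, so the protocol is
    part of the key.
    """
    counts = Counter((host_port, proto) for host_port, proto, _ in mappings)
    return sorted(key for key, seen in counts.items() if seen > 1)


# Hypothetical defaults: every entry points at host port 8888.
template_defaults = [
    (8888, "tcp", "first port field"),
    (8888, "tcp", "second port field"),
    (8888, "udp", "third port field"),
]

# After changing the second and third fields to 8388, as the note at the
# top of the template suggests.
corrected = [
    (8888, "tcp", "first port field"),
    (8388, "tcp", "second port field"),
    (8388, "udp", "third port field"),
]

if __name__ == "__main__":
    print("defaults :", duplicate_host_ports(template_defaults))
    print("corrected:", duplicate_host_ports(corrected))
```

This only checks the mappings of one container against each other; it does not tell you whether some other container or service on the host already holds the port.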

-

On 8/13/2021 at 7:36 PM, JonathanM said:

Torrent clients need an open incoming port to function as designed. I don't know how you would manage that with a dedicated VPN container, it's handled for you in this container.

I thought I would be able to route DelugeVPN (what I currently use) or the standard Binhex Deluge through GluetunVPN (available in our app store), much like I currently connect other dockers to DelugeVPN for that functionality. I'll review.

-

Hi, Everyone

I'm evaluating a dedicated VPN docker. If I decide to go that route, is it wiser to disable the VPN features on the Binhex DelugeVPN, or should I switch over to the regular version of Deluge (sans VPN)?

Thank you

-

Thanks. I'll try to remember this when there are updates or if I ever need to recreate the docker.

-

Hello.

I noticed something curious. I have shut down and restarted my unRAID server twice, and GluetunVPN starts up automatically each time. I have to stop it manually. This is strange because I don't have it set to "AUTOSTART." None of my other apps that have this feature disabled start automatically on reboot, and it's not happening with any other Docker. I don't know why that is happening.

-

3 minutes ago, biggiesize said:

The Web port isn't a traditional WebUI. It's more of an API for advanced automation in obtaining and modifying the container without restarting it.

https://github.com/qdm12/gluetun/wiki/HTTP-Control-server

I didn't catch it earlier but your Timezone needs to be in IANA format (example. America/New_York)

https://en.m.wikipedia.org/wiki/List_of_tz_database_time_zones

The dev has some decent notes on connecting other containers to Gluetun if you need help with that.

Good to know, biggiesize

You've been a big help.

I did catch the time error and corrected it. The container connection directions look like what I've read from Binhex, so I am feeling good about getting that working.

Again... Thank you.
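Since the timezone value tripped me up, here is a small Python sketch for checking whether a string is an IANA timezone name before pasting it into the template. It assumes Python 3.9 or newer and a time zone database on the machine running it; `is_iana_timezone` is just a name I made up for the helper.

```python
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError


def is_iana_timezone(name: str) -> bool:
    """Return True if `name` resolves in the local IANA time zone database."""
    try:
        ZoneInfo(name)
    except (ZoneInfoNotFoundError, ValueError, OSError):
        # Unknown key, malformed key, or a key that is not a zone file.
        return False
    return True


if __name__ == "__main__":
    for candidate in ("America/New_York", "Eastern Time"):
        print(candidate, "->", is_iana_timezone(candidate))
```

The result depends on the time zone data installed where the script runs, so treat a False as "check the spelling against the tz database list" rather than as proof the name is wrong.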

-

Here is a modified version of my log if you'd like to see it. I can already tell that I might want to at least get the VPN to use a set of regions for servers for efficiency. I'm reading through this and looking things up for a little better understanding.

Thank you!

2021/08/11 20:50:11 INFO storage: creating /gluetun/servers.json with 11007 hardcoded servers

2021/08/11 20:50:11 INFO routing: default route found: interface eth0, gateway 172.xx.xx.1

2021/08/11 20:50:11 INFO routing: local ethernet link found: gretap0

2021/08/11 20:50:11 INFO routing: local ethernet link found: erspan0

2021/08/11 20:50:11 INFO routing: local ethernet link found: eth0

2021/08/11 20:50:11 INFO routing: local ipnet found: xxx.x.xx.xxx/16

2021/08/11 20:50:11 INFO routing: default route found: interface eth0, gateway 172.xx.xx.1

2021/08/11 20:50:11 INFO routing: adding route for 0.0.0.0/0

2021/08/11 20:50:11 INFO firewall: firewall disabled, only updating allowed subnets internal list

2021/08/11 20:50:11 INFO routing: default route found: interface eth0, gateway 172.xxx.xx.1

2021/08/11 20:50:11 INFO routing: adding route for 192.xxx.x.0/24

2021/08/11 20:50:11 INFO openvpn configurator: checking for device /dev/net/tun

2021/08/11 20:50:11 WARN TUN device is not available: open /dev/net/tun: no such file or directory

2021/08/11 20:50:11 INFO openvpn configurator: creating /dev/net/tun

2021/08/11 20:50:11 INFO firewall: enabling...

2021/08/11 20:50:11 INFO firewall: enabled successfully

2021/08/11 20:50:11 INFO dns over tls: using plaintext DNS at address 1.1.1.1

2021/08/11 20:50:11 INFO http server: listening on :8000

2021/08/11 20:50:11 INFO healthcheck: listening on 127.0.0.1:9999

2021/08/11 20:50:11 INFO firewall: setting VPN connection through firewall...

2021/08/11 20:50:11 INFO openvpn configurator: starting OpenVPN 2.5

2021/08/11 20:50:11 INFO openvpn: 2021-08-11 20:50:11 DEPRECATED OPTION: ncp-disable. Disabling cipher negotiation is a deprecated debug feature that will be removed in OpenVPN 2.6

2021/08/11 20:50:11 INFO openvpn: OpenVPN 2.5.2 x86_64-alpine-linux-musl [SSL (OpenSSL)] [LZO] [LZ4] [EPOLL] [MH/PKTINFO] [AEAD] built on May 4 2021

2021/08/11 20:50:11 INFO openvpn: library versions: OpenSSL 1.1.1k 25 Mar 2021, LZO 2.10

2021/08/11 20:50:11 INFO openvpn: TCP/UDP: Preserving recently used remote address: [AF_INET]109xxx.xxx.xx:443

2021/08/11 20:50:11 INFO openvpn: UDP link local: (not bound)

2021/08/11 20:50:11 INFO openvpn: UDP link remote: [AF_INET]109xxx.xxx.xx:443

2021/08/11 20:50:12 WARN openvpn: 'link-mtu' is used inconsistently, local='link-mtu 1601', remote='link-mtu 1549'

2021/08/11 20:50:12 WARN openvpn: 'auth' is used inconsistently, local='auth SHA512', remote='auth [null-digest]'

2021/08/11 20:50:12 INFO openvpn: [ams-229.windscribe.com] Peer Connection Initiated with [AF_INET]109xxx.xxx.xx:443

2021/08/11 20:50:13 INFO openvpn: TUN/TAP device tun0 opened

2021/08/11 20:50:13 INFO openvpn: /sbin/ip link set dev tun0 up mtu 1500

2021/08/11 20:50:13 INFO openvpn: /sbin/ip link set dev tun0 up

2021/08/11 20:50:13 INFO openvpn: /sbin/ip addr add dev tun0 10.xxx.xxx.xx/23

2021/08/11 20:50:13 INFO openvpn: Initialization Sequence Completed

2021/08/11 20:50:13 INFO VPN routing IP address: 109xxx.xxx.xx

2021/08/11 20:50:13 INFO dns over tls: downloading DNS over TLS cryptographic files

2021/08/11 20:50:13 INFO healthcheck: healthy!

2021/08/11 20:50:15 INFO dns over tls: downloading hostnames and IP block lists

2021/08/11 20:50:17 INFO dns over tls: init module 0: validator

2021/08/11 20:50:17 INFO dns over tls: init module 1: iterator

2021/08/11 20:50:18 INFO dns over tls: start of service (unbound 1.13.1).

2021/08/11 20:50:18 INFO dns over tls: generate keytag query _ta-4a5c-4f66. NULL IN

2021/08/11 20:50:19 INFO dns over tls: ready

2021/08/11 20:50:19 INFO You are running on the bleeding edge of latest!

2021/08/11 20:50:21 INFO ip getter: Public IP address is 109.xxx.xxx.xx (Netherlands, North Holland, Amsterdam)

2021/08/11 20:53:59 INFO http server: 404 GET wrote 41B to 192.xxx.x.xxx:50323 in 31.62µs

2021/08/11 20:53:59 INFO http server: 404 GET /favicon.ico wrote 41B to 192.xxx.x.xxx:50323 in 19.55µs

2021/08/11 20:56:32 INFO http server: 404 GET wrote 41B to 192.xxx.x.xxx:50390 in 41.461µs

-

Got it! I uninstalled the Docker and made sure there were no remnants. When I reinstalled it, I noticed an additional field, and some of the ports had changed. When I first ran it, it failed again. Then I noticed the template defaults to a different port than the one you recommend at the top of the template. I changed the 8888 to 8388 as the template directions state. So it's running now. I see no errors in the log! 😁 👍

However, when I try to call up the web page for the VPN I get an error (see below). Do I need to be concerned about that? Otherwise there are no log errors. I'll wait until tomorrow to begin testing disabling the VPN portion of DelugeVPN and using your product. 🙂

When I click on the "webUI" link....

Error I see when I try to open the page.

-

On 8/9/2021 at 9:03 PM, biggiesize said:

No worries. Any VPN with the amount of variables and options like this one is a bit challenging to set up.

Feel free to post a screenshot of your settings screen and any errors you are seeing. I will gladly help out. 🙂

Here you go. I thought it was pretty straightforward. But I notice I have that error at the bottom of the log (last image) and it shuts down. I'm missing something obvious, aren't I?

Thank you

-

I'm struggling with getting this to work. But it's honestly been a horrendous few weeks. I'll take a break and come back and try again. I did notice there is updated documentation so I am sure it will all make sense once my brain clears.

-

48 minutes ago, biggiesize said:

Thank you for your interest. I was using binhex's PrivoxyVPN container and was happy. This one is a little more flexible and really light-weight. The dev is also amazing to work with. Kudos to him on the effort he put into it.

Very good to read. I am not as savvy as some unRAID users, so I tend to be a bit cautious about adopting things that are security related.

-

Ooooooh. 😲 A docker that recognizes specific VPNs like Windscribe? I'm going to have to check this out and see if I gain anything from using that functionality compared to DelugeVPN (I tend to lean heavily/happily on binhex). Thank you.

-

Thanks for the fix. I'm up and running. I was thinking last week how cool it would be if it got an upgrade like Radarr and Bazarr. And I'm already playing around with some of the updates. I'm going back to re-read the doc to see if I missed any.

-

1 hour ago, gordonempire said:

This is the exact reason I was asking.

Reading that back it sounded kinda snarky. Not intended. I wasn't going to mention the Privoxy starting and stopping initially, but forgot that I did include it.

Personally, I didn't think it was snarky. I thought it was a good question.

-

19 minutes ago, gordonempire said:

If other containers are being passed into the DelugeVPN using the "--net=container:<name of vpn container>" option, does Privoxy need to be used? I'm thinking it doesn't but I just want to be sure. I seem to have everything working but the logs are showing that Privoxy stops and keeps being started.

Looking at my logs I am seeing this as well.

2021-02-26 18:45:14,261 DEBG 'watchdog-script' stdout output:

[info] Attempting to start Privoxy...

2021-02-26 18:45:15,272 DEBG 'watchdog-script' stdout output:

[info] Privoxy process started

[info] Waiting for Privoxy process to start listening on port 8118...

2021-02-26 18:45:15,278 DEBG 'watchdog-script' stdout output:

[info] Privoxy process listening on port 8118

2021-02-26 18:45:45,335 DEBG 'watchdog-script' stdout output:

[info] Privoxy not running
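To see how often this start/stop cycle happens, a rough Python sketch like the one below can pull the Privoxy events out of the container log. It assumes the log layout shown above, where the timestamp is on the line before each `[info]` message; the function name is hypothetical and the sample is just the excerpt from this post.

```python
import re
from datetime import datetime

# Matches the leading "2021-02-26 18:45:14,261 " style timestamp.
STAMP = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),\d{3} ")

SAMPLE = """\
2021-02-26 18:45:14,261 DEBG 'watchdog-script' stdout output:
[info] Attempting to start Privoxy...
2021-02-26 18:45:15,272 DEBG 'watchdog-script' stdout output:
[info] Privoxy process started
[info] Waiting for Privoxy process to start listening on port 8118...
2021-02-26 18:45:15,278 DEBG 'watchdog-script' stdout output:
[info] Privoxy process listening on port 8118
2021-02-26 18:45:45,335 DEBG 'watchdog-script' stdout output:
[info] Privoxy not running
"""


def privoxy_events(lines):
    """Yield (timestamp, event) for Privoxy "started" / "not running" lines.

    The most recent timestamp line seen is attached to each event.
    """
    current = None
    for raw in lines:
        line = raw.strip()
        match = STAMP.match(line)
        if match:
            current = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
        elif line == "[info] Privoxy process started":
            yield current, "started"
        elif line == "[info] Privoxy not running":
            yield current, "not running"


if __name__ == "__main__":
    for stamp, event in privoxy_events(SAMPLE.splitlines()):
        print(stamp, event)
```

This only counts the events; it does not explain why Privoxy stops, which is the part I'm still hoping someone can answer.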

-

8 hours ago, carnivorebrah said:

I tried those steps, but they didn't work for me. See screenshots in a few posts above.

Also, when I point any physical device to the DelugeVPN proxy, I cannot connect to the Unraid web UI on that device.

Carnivorebrah,

I totally missed your post. I must have been pretty fried at that point. In fact, if I'd noticed it, I'm sure it would have helped me get mine up sooner. The only thing different with mine is that I didn't use the proxy on Jackett, since I had decided to route my three containers through DelugeVPN. I'm going to triple-check my connections... I think I don't need the proxy setting in some of those other areas.

-

I think I got it. Seeing this screenshot from Torih helped turn on a few brain cells. There were multiple steps to be done.

I searched for "ADDITIONAL_PORTS" and found Binhex's entry in another forum. So many steps.

UGLY DIRECTIONS FOLLOW:

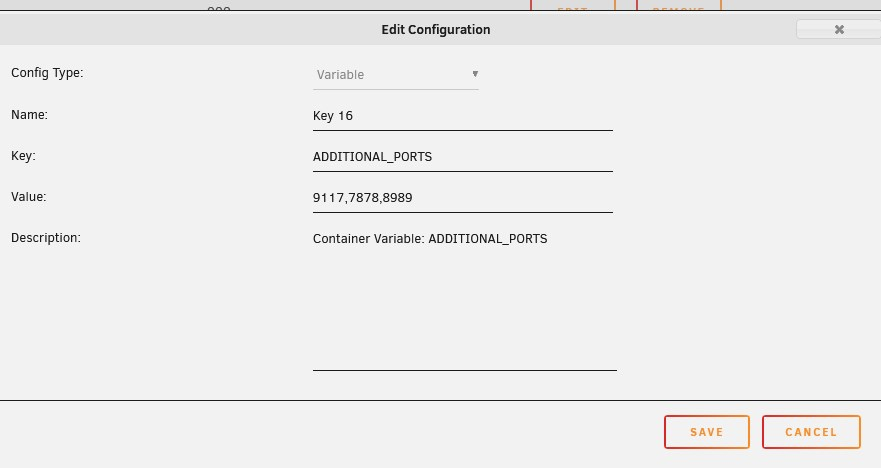

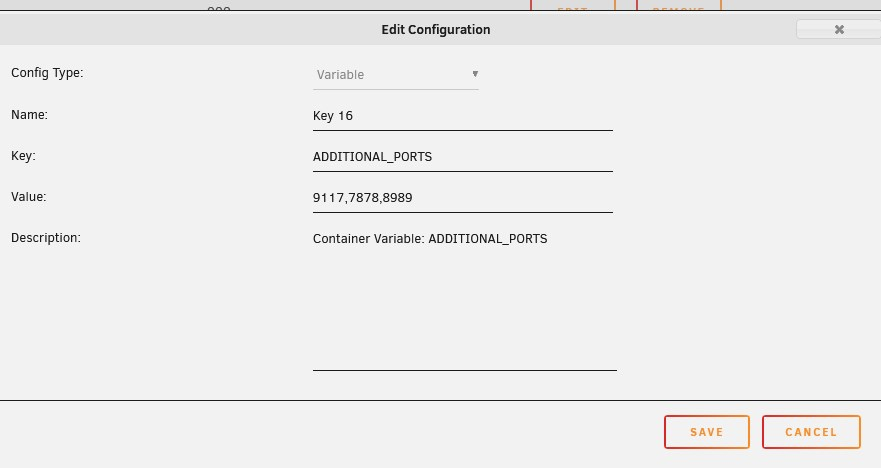

1) I routed Jackett, Sonarr and Radarr (all Binhex versions) through DelugeVPN. Following SpaceinvaderOne's YouTube tutorial, "How to route any docker container through a VPN container", will get you most of the way there (link below). But you'll need to add the "ADDITIONAL_PORTS" variable if your DelugeVPN template does not already have it. I'll screenshot mine. Those 3 values you see are Jackett, Radarr and Sonarr. This will allow you to access them in the browser (remember, follow the video first).

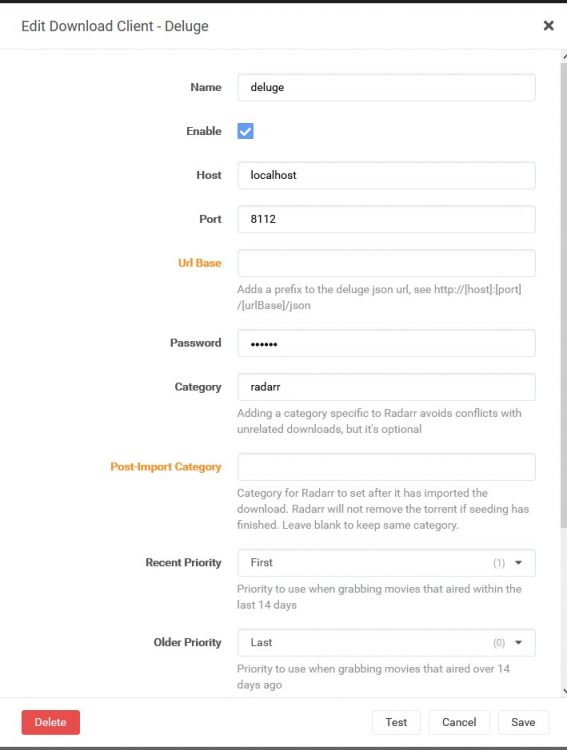

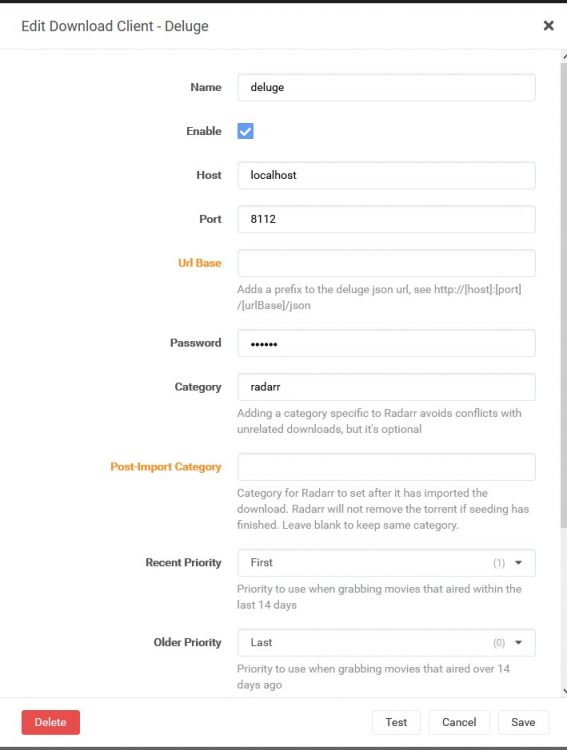

2) Once you are able to access your web pages, you should go through them and replace any explicit host address for your server (like 192.167.1.11) with localhost. I would start with Jackett (if you are using it), since you may have other programs pointing to it. In the case of Radarr, I had to do this for all of my indexers, the proxy settings under General, and the download client. Here's a screenshot of me doing the "Download Client" in Radarr.

After I had done all of this, my containers could be reached in the browser on the appropriate port: http://192.xxx.x.12:9117 for Jackett, etc. I saw that Radarr and Sonarr were able to access their indexers and DelugeVPN.
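Here is a rough Python sketch of the two steps, in case it helps to see them written down. It assumes ADDITIONAL_PORTS takes a comma-separated list of web UI ports. Jackett's 9117 comes from my setup above; the Radarr and Sonarr numbers are assumed defaults, so use whatever your containers actually listen on. The function names are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit

# Extra parameter added to each container routed through the VPN container.
EXTRA_PARAMETERS = "--net=container:binhex-delugevpn"

# 9117 is from the post above; 7878 and 8989 are assumed defaults.
WEB_UI_PORTS = {"jackett": 9117, "radarr": 7878, "sonarr": 8989}


def additional_ports(web_ui_ports):
    """Step 1: build the value for the ADDITIONAL_PORTS variable."""
    return ",".join(str(port) for port in web_ui_ports.values())


def to_localhost(url):
    """Step 2: swap an explicit server address for localhost, keeping the port.

    Any user:password part of the URL is dropped in this simple sketch.
    """
    parts = urlsplit(url)
    netloc = "localhost" if parts.port is None else f"localhost:{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc))


if __name__ == "__main__":
    print("ADDITIONAL_PORTS =", additional_ports(WEB_UI_PORTS))
    print(to_localhost("http://192.168.10.10:8112/json"))
```

The localhost swap only applies to settings inside the containers that share DelugeVPN's network; from a browser on another machine you still use the server's address and port.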

I know these directions are not great. I am exhausted. I only wrote them for those of you who are driving yourselves crazy and just need a hint. I know one of our better explainers will probably put something more useful and cohesive together.

Thank you, Binhex and Torih, for giving me enough to figure out what to do and actually clean up my server.

2 hours ago, Torih said:

Hi, sorry another person getting stuck with this new update here. Not really that great with unraid so guessing something maybe setup wrong in the first place which is why its not working. (but has been fine for 2 years ish)

Didn't have additional_ports so added this:

Assume that's correct?

Sonarr/Radarr/Jacket all on same IP as deluge. Get this error in those when trying to test download client Unknown exception: The operation has timed out.: 'http://192.168.10.10:8112/json'

I have the host in Sonarr/Radarr/Jacket set to the same IP as deluge/server, tried localhost and it instantly says unable to connect on test.

Also tried setting the proxy to localhost but no change to test results

-

My unRAID server keeps dying

in General Support

Posted

UPDATE 04/02:

I ran the server in safe mode for about 3 days. Then I decided to start the array. My dockers have been running for over 2 days without the system shutting down. The plugin service is not running at all. So far I have not seen all CPUs go red for seconds to minutes. Using Glance, I still see some utilization that goes from the high 90s to well over 100%, but it tends to settle down quickly. I noticed some errors when running a short SMART test on my parity drive and one other drive. From what I'm reading they are minor, but I am still going to look into replacing the drives. Later this week I will try turning plugins back on to see if that triggers the unclean shutdown.