Posts posted by archedraft

-

-

Hey All, been awhile!

I recently updated my server to 6.12.6 and got a message from "Fix Common Problems" saying that having macvlan and bridging both enabled should be corrected.

From what I was reading, I left the docker setting on macvlan and turned off bonding and bridging. I restarted the system and the error message went away. My dockers appear to be working correctly. I went to use Wireguard today and I can connect and access the unRAID web GUI, but I no longer have access to my local network, whereas yesterday everything was working fine. I must have messed something up with the network changes. Do I need to recreate the Wireguard network now that I updated the unRAID network? Or should I change the docker macvlan to ipvlan and turn bonding and bridging back on?

-

Reading the documentation is helpful

Manual/Security - Unraid | Docs

Redirects

When accessing http://[ipaddress] or http://[servername].[localTLD] , the behavior will change depending on the value of the Use SSL/TLS setting:

If Use SSL/TLS is set to Strict, you will be redirected to https://[lan-ip].[hash].myunraid.net. However, this behavior makes it more difficult to access your server when DNS is unavailable (i.e., your Internet goes down). If that happens, see the note under "Https with Unraid.net certificate - with no fallback URL".

If Use SSL/TLS is set to Yes, you will be redirected to https://[ipaddress] or https://[servername].[localTLD] as that will likely work even if your Internet goes down.

If Use SSL/TLS is set to No, then the http url will load directly.

Note: for the redirects to work, you must start from http urls not https urls.
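
As a rough illustration of the redirect rules quoted above, here is a minimal Python sketch. It is not how Unraid implements the redirect; the function name `redirect_target` and its parameters are made up for this example, and `[lan-ip]` and `[hash]` are left as the same placeholders the docs use.

```python
def redirect_target(use_ssl: str, host: str,
                    lan_ip: str = "[lan-ip]", cert_hash: str = "[hash]") -> str:
    """Return the URL an http:// request ends up at, per the quoted docs.

    use_ssl   -- value of the Use SSL/TLS setting: "Strict", "Yes" or "No"
    host      -- what was typed after http://, e.g. an IP or servername.localTLD
    lan_ip, cert_hash -- placeholders for the myunraid.net URL parts (hypothetical args)
    """
    mode = use_ssl.strip().lower()
    if mode == "strict":
        # Strict: always sent to the myunraid.net certificate URL (needs working DNS)
        return f"https://{lan_ip}.{cert_hash}.myunraid.net"
    if mode == "yes":
        # Yes: sent to the https version of the same address
        return f"https://{host}"
    if mode == "no":
        # No: the http URL loads directly, no redirect
        return f"http://{host}"
    raise ValueError(f"unknown Use SSL/TLS value: {use_ssl!r}")


if __name__ == "__main__":
    for setting in ("Strict", "Yes", "No"):
        print(setting, "->", redirect_target(setting, "tower.local"))
```

So with the setting on Yes, typing the IP address only gets you to https://[ipaddress]; the myunraid.net redirect only happens on Strict.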

-

Updated to 6.10 today from 6.9. When I first typed the server's IP address, it told me it was not secured. In 6.9 it used to redirect the IP to the long string of numbers and letters followed by .unraid.net.

I saw that the new cert added wildcards so I updated my cert and then changed my pfSense DNS resolver from unraid.net to myunraid.net

server:
    private-domain: "myunraid.net"

If I click the blue Certificate subject link under Management Access, I can log into unRAID through that address and it is secure. If I type in my IP address, though, it doesn't redirect me.

Is that no longer an option, or is there another setting I am missing?

-

This is an application-specific question, but read the first sentence here:

https://docs.photoprism.org/user-guide/organize/folders/

I appreciate the link. Thank you.

-

Is there any way to get PhotoPrism to auto scan the photo folder for new photos and add them?

-

Does Nextcloud compress images from the phone when it uploads them? The same pictures that get auto uploaded via Dropbox are 2-3x larger than the ones from the Nextcloud app.

-

Hey guys, I want to use External Storages to link an unRAID share into NC. I have read through here that you can do this either with "local", if I pass the path through in the docker settings, or with "SMB / CIFS", which seems to be preferred so that the folder permissions are respected.

My issue is that my NC docker is on a custom br0 network with a fixed IP address so that I could give letsencrypt that IP address. Since NC is on the br0 network, I am unable to ping my unRAID IP address from within the NC docker and thus I am unable to connect to my unRAID share.

Does anyone know of a way to allow NC to see my unRAID IP?

EDIT: Another interesting development, I can ping any other device on my local network from within the NC docker, except my unRAID IP... hmmm

EDIT #2: As it turns out, it was not an interesting development that I could not access my unRAID IP. It is very well documented that this is by design. Anyway, I decided I would just test out using the local option and it appears to be working just fine.

-

I like how user friendly setting up a server is and the great community.

Honestly, I am having a hard time coming up with a new feature, it’s pretty fantastic just the way it is, but I would say having built-in data integrity functionality would be nice.

-

14 hours ago, Benson said:

I don't think so and you haven't problem on 6.7.2

Case seems interesting.

Correct, my windows 10 VM is stored on an UD mount.

So far 6.7.2 hasn’t caused any noticeable loading delays when I open Plex like 6.8 does for me.

I would be interested to see if disabling all of the mitigation fixes would help. Been wondering if my i7 would eventually get so “nerfed” that I would notice it over time but I suspect others would have said something if that was the case.

Other than that, I don’t really have any ideas on what it might be. I restarted Plex, docker, and Unraid a bunch of times on 6.8 so it’s not that.

-

Hey All,

I upgraded to 6.8 last week and have been noticing that every time I attempt to load Plex, it takes forever to get a list of my media. When I look at the server, the issue appears to be that the CPU is maxing out. I downgraded to 6.7.2 today and everything appears to be working just fine. I have attached diagnostics from both 6.8 and 6.7.2.

Another note: I also run a Windows 10 VM, and on 6.8 all my cores would turn red during a stress test, whereas on 6.7.2 only 4 of the 8 cores turned red. I have 4 of the 8 CPUs pinned to this VM, so maybe 6.8 is giving this VM all 8 cores and that is causing my CPU issue?

Is anyone else experiencing any issues like this?

pithos-diagnostics-20191221-1245.zip pithos-diagnostics-20191221-1914.zip

-

-

I don't know who I was kidding, if I actually followed the "if it ain't broke" motto I would still be using WD external hard drives to store my data...

Anyway, after messing with the VM last night, I believe the underlying issue was that Hibernation was still enabled. I also removed a few Red Hat drivers that were not needed, since Windows gave an error message about one of them when I updated to 1903. I think when I originally created the VM I bulk added all the drivers from the VirtIO driver ISO.

-

Thanks, I gave disabling that setting a try but no luck. I just rolled back and I can shut down and restart again. I suppose "if it ain't broke"...

-

My Windows 10 VM fails to shut down or restart after Windows Update 1903 was installed. Prior to this update, everything worked as expected. Has anyone else experienced this?

-

On 5/22/2017 at 7:26 AM, CHBMB said:

For those that upgrade to v12. Nextcloud have changed the config slightly. You will need to go to your nextcloud appdata and edit the default file in /nginx/site-confs/ and hash the line #add_header X-Frame-Options "SAMEORIGIN";

Woot! Thanks for your help!

Also, I am ashamed that it is 2019 and as of this morning I was still on v12...
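
For anyone who would rather script the edit CHBMB describes above than open the file by hand, here is a minimal Python sketch. It only works on the text of the config; the helper name `hash_out` is made up for this example, and actually reading and writing the default file under /nginx/site-confs/ in your own appdata is left to you since that path differs per setup.

```python
def hash_out(conf_text: str, directive: str = "add_header X-Frame-Options") -> str:
    """Comment out every line whose first token sequence matches `directive`.

    Lines that are already hashed start with '#', so they do not match and
    are left alone, which makes the function safe to run more than once.
    """
    out = []
    for line in conf_text.splitlines(keepends=True):
        stripped = line.lstrip()
        if stripped.startswith(directive):
            indent = line[: len(line) - len(stripped)]  # keep original indentation
            line = f"{indent}#{stripped}"
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    sample = (
        '    add_header X-Frame-Options "SAMEORIGIN";\n'
        "    add_header X-Content-Type-Options nosniff;\n"
    )
    print(hash_out(sample), end="")
```

Back up the file first and restart the container afterwards so nginx picks up the change.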

-

I love this community, half of 'em are screaming like hellfire when a vulnerability goes longer than 30 seconds unpatched, the other half want to switch it off.

Me, I'm in the latter camp.

I was chuckling about exactly this the other day!

But hey it’s a scary place out there...

-

Another uneventful update... THANKS! 😁 Those are the best kinds. Dashboard is looking great.

-

A software update broke a previously working system is my point. I rolled back and will try a future update. Throwing out working hardware is wasteful. A software update could break another card also. Blame the user?

Maybe I can offer a different perspective. First let’s go to the “1,000-foot bird's-eye view” and ask the question: what are we trying to accomplish? Likely the answer is to protect data. The next question is: what are you willing to do to protect said data? Well, we built a dedicated computer to store our important files, so we are going above and beyond what most “common” folks do to store files. When you start adding up the money spent on the computer hardware plus the actual storage drives, there is some serious money invested.

I think if you look at it in that regard and ask whether your data is worth an extra $100 for a piece of hardware that is known to work great and lets you stay on up-to-date software with the latest security patches, or whether it is worth using a piece of hardware with known issues that will likely not get better... well, I know what my decision would be.

Anyways, just something to think about.

-

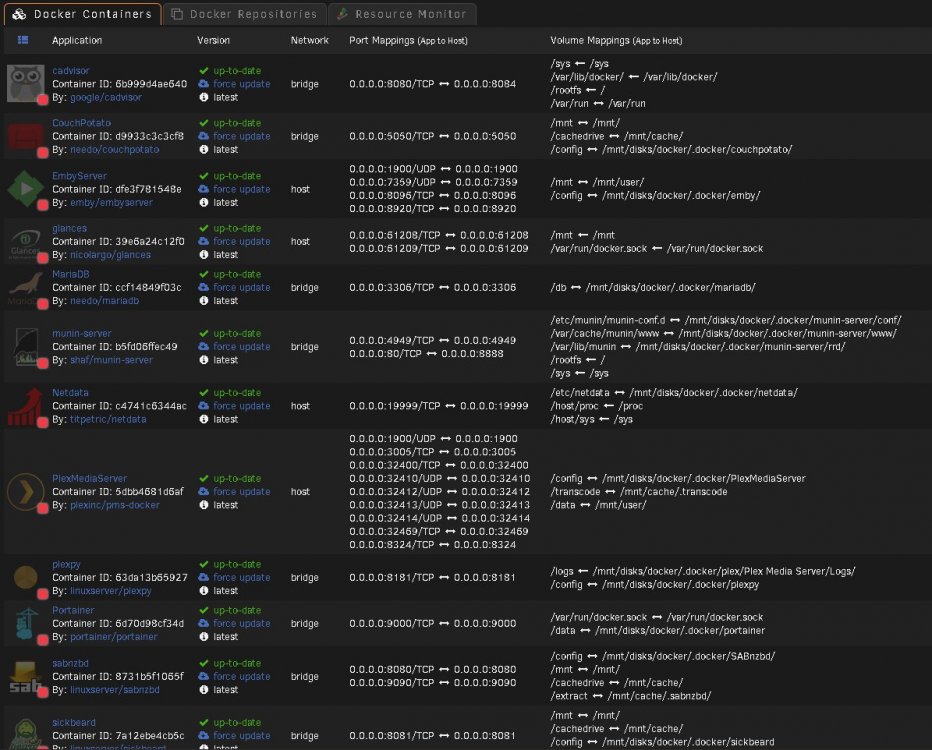

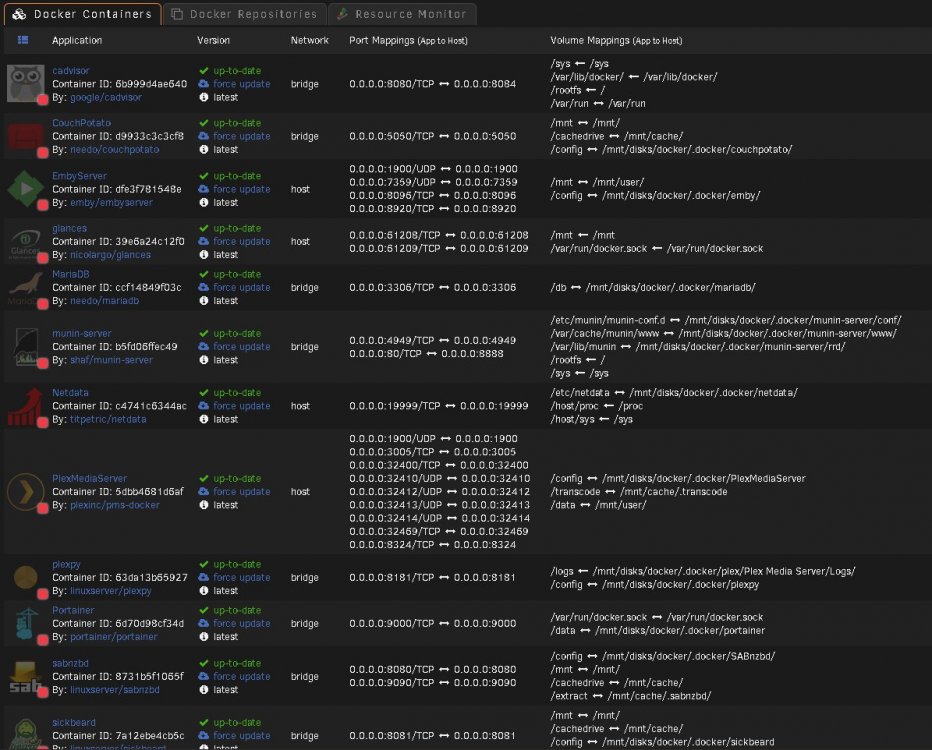

I upgraded yesterday from 6.6.6 to 6.6.7 and now all my docker containers are not showing IP addresses in the docker console and none of my containers are network stable. SAB will open after a while and display the interface. Hitting F5 once will cause the interface to go away with a message in the browser stating that the page cannot be reached. Hitting F5 again might or might not display the interface again, and it cycles through this. This happens on all containers.

This happened on all my custom networked (br0) dockers. I edited the network on each one to bridge, let it reconfigure itself, then changed it back to custom (br0), and they are back up and running.

-

Use an SSD instead of a spinner.

Indeed, my main VM is on an SSD and does not seem to have this issue. Unfortunately it doesn’t have enough space to share. Does there happen to be a known issue with spinners? I wasn’t expecting the spinner to be stellar but I was hoping for a little more...

-

Does anyone have any suggestions on how to improve the disk performance of a Windows 10 VM? The VM is on a WD Red hard drive (WDC_WD30EFRX) and the VM is the only file accessing this hard drive. I took a screenshot of the read and write speed, which seems pretty slow. The Windows 10 VM was created using unRAID's default template (see screenshot).

-

Updated my server last week from 6.5. Everything is functioning as it was before (server, dockers and VMs). Thanks!

P.S. loving the new look!

-

7 hours ago, archedraft said:

Has anyone had any luck setting up Let's Encrypt to work with Blue Iris and Stunnel?

For anyone wondering, the answer is yes! I had to edit my Let's Encrypt config and make "blueiris" a subdomain. As soon as I changed that it started working immediately. I was also able to close my stunnel port forwarding rule in my router! Let's Encrypt is pretty cool stuff. 😎

server {
    listen 443 ssl;
    root /config/www;
    index index.html index.htm index.php;
    server_name blueiris.random.server.name.org;
    ssl_certificate /config/keys/letsencrypt/fullchain.pem;
    ssl_certificate_key /config/keys/letsencrypt/privkey.pem;
    ssl_dhparam /config/nginx/dhparams.pem;
    ssl_ciphers 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA';
    ssl_prefer_server_ciphers on;
    client_max_body_size 0;
    location / {
        include /config/nginx/proxy.conf;
        proxy_pass https://192.168.1.100:8777; # NOTE: Port 8777 is the stunnel port number and not the blue iris http port number
    }
}

-

Wireguard Local Network Issues After Bonding & Bridging Changes in 6.12.6

in General Support

Posted · Edited by archedraft

After rereading the upgrade notes, I noticed that "Host access to custom networks" was set to disabled in the docker settings, and it seemed like if you are using macvlan you probably want it enabled. I enabled it and now I have local network access via Wireguard. Not exactly sure why a docker setting would affect the Wireguard network, but hey, it's working again... For anyone who knows more about this, feel free to share the knowledge.