spasszeit

-

Posts

62 -


Posts posted by spasszeit

-

-

Yes, I tried another NIC. I pulled a working Intel Gigabit CT from my workstation. It works fine with that motherboard when booting into Windows 10, but it is not recognized by Unraid for some reason.

-

-

I upgraded my very old Unraid servers to X11SCA-F and X12SCA-5F boards. The X11SCA-F server is great, but the X12SCA-5F is giving me issues and I am having a hard time understanding whether they are driver or hardware related, so I need your help, guys. I am deciding whether I should exchange the board (not easy with me being in Canada and the board having come from the US) while I still have a few days to do so.

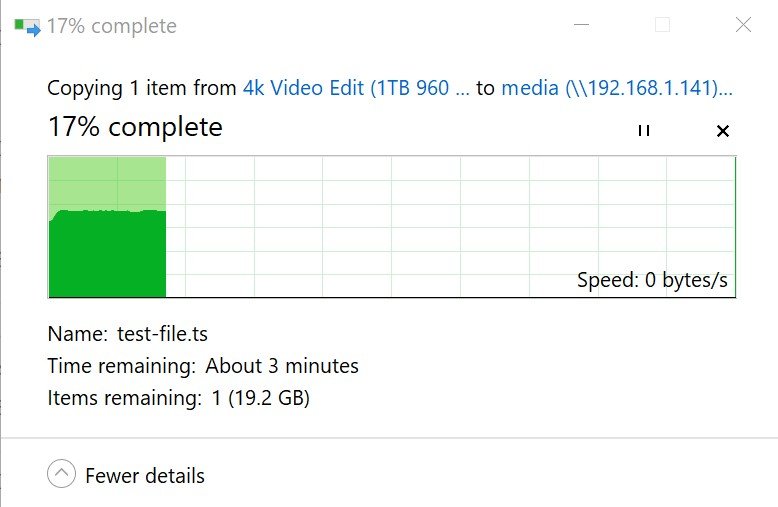

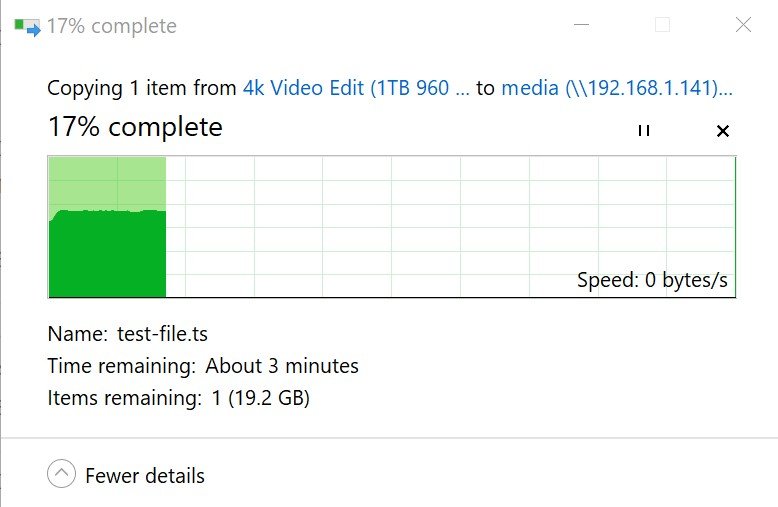

The X12SCA-5F has three NICs: an IPMI NIC plus Intel's i225LM and I219LM (PHY) NICs, both of which should be supported by Unraid. The issue is that Unraid doesn't recognize the I219LM NIC (tested with 6.9.2 and next RC1). The i225LM is recognized and works, but file transfers to the Unraid server are erratic: they start at around 113MB/s, drop to 40-50, climb to 80-90, fall to zero, go back to 40-50, up to 113, then 10-20, etc. From the server to Windows it's a perfect ~113MB/s line. Why could this be happening? On the other server it's a perfect 113MB/s line for a while, then it drops to ~69MB/s and stays there until the file transfer is done. Any thoughts? Defective board or driver issue?

P.S. I installed Windows on this machine too and updated the drivers to the latest. The i225LM NIC works fine, whether transmitting or receiving traffic. The I219LM gets speeds of 300 Kbps for a minute, then errors out. Accessing the internet is fine though, getting max speeds with speedtest.net.
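For context on why ~113MB/s counts as a "perfect" line: that figure is roughly the payload ceiling of a single TCP stream on gigabit Ethernet when the speed is reported in binary units, as Windows Explorer does. A rough sketch of the arithmetic, assuming a standard 1500-byte MTU, IPv4, and no TCP options (none of which are stated above):

```python
# Back-of-the-envelope payload ceiling for one TCP stream on gigabit Ethernet.
# Assumptions (not from the post): 1500-byte MTU, IPv4, no TCP options.
LINK_BITS_PER_SEC = 1_000_000_000
MTU = 1500
WIRE_OVERHEAD = 14 + 4 + 8 + 12   # Ethernet header + FCS + preamble + inter-frame gap
IP_TCP_HEADERS = 20 + 20          # IPv4 header + TCP header


def gigabit_payload_ceiling():
    """Return the payload ceiling as (decimal MB/s, binary MiB/s)."""
    payload = MTU - IP_TCP_HEADERS
    on_wire = MTU + WIRE_OVERHEAD
    bytes_per_sec = LINK_BITS_PER_SEC / 8 * payload / on_wire
    return bytes_per_sec / 1e6, bytes_per_sec / 2**20


mb, mib = gigabit_payload_ceiling()
print(f"{mb:.1f} MB/s (decimal), {mib:.1f} MiB/s (binary)")
```

Under those assumptions the ceiling works out to a little over 113 MiB/s, so a transfer sitting at ~113 is link-limited, and anything well below that points to something other than the wire.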


-

Thanks. Much appreciated.

-

Can someone help me understand how to connect the LSI SAS9305-24i to a SAS3 backplane?

The backplane, a 24-port BPN-SAS3-846EL1, has 2 primary SAS ports and 2 secondary SAS ports.

The HBA has 6 ports.

The cables recommended by Supermicro and Broadcom have one SFF-8643 connector on each end. There are no 1-to-6 breakout cables. Am I to understand that I can plug the cable into one of the primary ports on the backplane and into any one port on the HBA to get all 24 drives connected?

Broadcom cable reference for this card - https://docs.broadcom.com/doc/12354774

Supermicro backplane connectivity reference - https://www.supermicro.com/manuals/other/BPN-SAS3-846EL.pdf

Thanks.

-

All good here. I have been running two LSI SAS 9207-8i cards for over a week now with no issues. Very happy with the speeds I am getting.

-

Looking for a 9305-24i myself and wondering the same thing. I am leaning toward buying from Alibaba though as I've had very good experience with various sellers there lately. Let me know if you decide to buy off eBay and how it goes.

-

Anyone experienced a problem running two LSI SAS cards in X11SCA-F? Thinking of getting this motherboard but need to make sure that two LSI cards can run simultaneously in it. Someone in another thread mentioned that he had a problem with running an LSI card in the slot nearest the CPU but it could have just been a temporary glitch.

-

A bit off topic but the best thing I did for myself a few years back was to ditch BI in favor of Milestone xProtect.

-

I have two Unraid servers that were upgraded exactly 7 years ago. Both run on Supermicro X8SIL-F motherboards and Xeon processors. The only things that have been upgraded since are fans, PSUs and drives. Both are running fine, so I am thinking: why change anything if it ain't broke? Or am I missing something?

-

Thank you guys. I've been doing some investigating on my end, and it looks like Sickbeard caused that. The mover ran pretty much when the errors began. I recall this happening to me a few years back. In both cases I was running a parity check. I now disable Sabnzbd and Sickbeard when I run checks, but forgot to do that this time. Anyway, all drives are fine; I ran SMART checks and all passed. Performing the restore procedure now.

-

Ahhh... another problem. After I fixed the new 6TB drive size issue with a reboot, I decided to do a parity check before upgrading to v6. That did not go too well. I am seeing a multitude of errors on the parity drive and other drives. Disk 17 is redballed. I am quite unsure what I need to do at this point. I could replace drive 17, which was totally fine until now, but I am concerned about the errors on the parity drive. Any help would be much appreciated.

By the way, the reboot did not go too well. The server did not come back up. I had to shut it down via telnet (saw some kind of error message there, forget what it said exactly) and start again.

Thanks very much in advance.

-

Thank you Rob. Rebooted and all is fine now. I've been hotplugging my drives for a long time now, never an issue. However, I will stop doing that. Thank you for your help.

-

Hi,

I replaced a 2TB drive with a precleared 6TB drive. The rebuild completed fine (one error on Disk 3 though). The problem is that the new 6TB drive shows 23.38GB of free space, when it should be 4TB. Any ideas why this is and how to fix it?

Unraid Main reports the drive size as 6TB.

Unmenu Main reports the drive as 2TB and shows Used space on that 6TB drive as 1.98TB and Free only 23.28GB.

The disk has a warning HPA detected, though the size is fine and is the same as the rest of the 6TB drives.

Thanks for your help.

-

Hi Tom,

I have been using 4.7 on my old rig for just over 2 years.

I built it as a copy of your default build at the time (Supermicro C2SEE), using parts available to Australia.

I would love to see a 5.0 final for the following reasons:

1) Support for 3Tb drives

This is important as the drives are cost effective, and it will eliminate expensive upgrade path (bigger PSU, bigger case, additional SATA = >$300)

2) Updated SMB (v2)

There have been considerable updates to SMB on Windows clients (eg Win8), but also from my media players (Openelec). Newer SMB support should mean faster read performance.

3) Chasing your tail

It seems that unRaid development has been chasing its tail for a while now. Upgrade the kernel to support x... then y breaks. This could go on forever.

4) Could you please summarise the "Slow write" issue? I started reading the 12 page thread, but it might be easier for everyone if it was easily quantified who might be affected.

I would be happy to see V5, then see v5.1 betas with experimental kernels for those with performance issues or who would like to be on the bleeding edge.

I'm with you, I need 3GB support. I'm tired of buying additional drives when I've got 3GB drives already in the array with 1/3 of their space being unutilized. Plus, the longer this goes on and the more 3GB drives I use as 2GB drives, the more time it is going to take me to convert them all to 3GB drives to recover the lost space. I'm really not looking forward to that as it is.

Look, the people with that hardware or thinking of buying that hardware are already SOL and need to wait for a solution. Why make all of us wait? Release 5.0 with a disclaimer for the known issue please.

3GB drives are fully supported in v4.7, as well as 4GB, and all drives up to 2000GB;-)

-

Have 5 CM Stacker 4-in-3 cages for sale. Four are original design, the fifth one is the new design.

All five are in perfect condition and come with 120mm fans; the fans are working fine. Selling due to an upgrade. $18 apiece. Located in Toronto. Shipping across Canada or the US. Can also ship from Buffalo, NY to minimize shipping costs. Buyer pays actual shipping. PM for more details.


-

In case someone still wants to order, Provantage.com ships overseas. $99 plus $40 shipping to European countries.

Cheaper than 140 Euro plus shipping on Amazon.de.

-

Except for shipping....$24!

The seller can ship within the US at a lower fee if you can wait a couple of weeks.

-

These are the original design, which I like better in my CM Stacker cases and which is not manufactured any more.

-

But is it a single rail PSU? That matters far more than the wattage. There are 850 W PSUs with multiple rails that can't handle as many drives as a 500 W PSU with a single rail...

If you aren't sure, just post the make and model of the PSU and we can look it up.

Single rail.

-

Are you sure your power supply is powerful enough to handle all the drives you are running? If you have two servers I'm guessing that at least one of them is full.

The tower where one drive dropped off last night has 13 drives, most are green. Running on a 650W high efficiency PSU, well below its capacity.

-

I downloaded the full log, there are two entries:

Apr 14 23:05:01 Tower2 kernel: mvsas 0000:03:00.0: mvsas: driver version 0.8.4

Apr 14 23:05:01 Tower2 kernel: mvsas 0000:03:00.0: PCI INT A -> GSI 16 (level, low) -> IRQ 16

Apr 14 23:05:01 Tower2 kernel: mvsas 0000:03:00.0: setting latency timer to 64

Apr 14 23:05:01 Tower2 kernel: /usr/src/sas/trunk/mvsas_tgt/mv_spi.c 491:Init flash rom ok,flash type is 0x101.

Apr 14 23:05:01 Tower2 kernel: mvsas 0000:02:00.0: mvsas: driver version 0.8.4

Apr 14 23:05:01 Tower2 kernel: mvsas 0000:02:00.0: PCI INT A -> GSI 16 (level, low) -> IRQ 16

Apr 14 23:05:01 Tower2 kernel: mvsas 0000:02:00.0: setting latency timer to 64

Apr 14 23:05:01 Tower2 kernel: /usr/src/sas/trunk/mvsas_tgt/mv_spi.c 491:Init flash rom ok,flash type is 0x101.

Both have the same (old) firmware.
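For anyone wanting to make the same comparison on a saved syslog, here is a minimal sketch that pulls the per-controller `mvsas` driver version lines out of the log text. The regular expression is modelled only on the lines quoted above, and the function name `mvsas_driver_versions` is made up for illustration. Note that these lines report the driver version, which is what the sketch compares.

```python
import re

# Matches lines such as:
#   Apr 14 23:05:01 Tower2 kernel: mvsas 0000:03:00.0: mvsas: driver version 0.8.4
VERSION_RE = re.compile(
    r"kernel: mvsas (?P<pci>[0-9a-f:.]+): mvsas: driver version (?P<ver>\S+)"
)


def mvsas_driver_versions(lines):
    """Map each controller's PCI address to the mvsas driver version it logged."""
    versions = {}
    for line in lines:
        match = VERSION_RE.search(line)
        if match:
            versions[match.group("pci")] = match.group("ver")
    return versions


sample = [
    "Apr 14 23:05:01 Tower2 kernel: mvsas 0000:03:00.0: mvsas: driver version 0.8.4",
    "Apr 14 23:05:01 Tower2 kernel: mvsas 0000:03:00.0: setting latency timer to 64",
    "Apr 14 23:05:01 Tower2 kernel: mvsas 0000:02:00.0: mvsas: driver version 0.8.4",
]
print(mvsas_driver_versions(sample))
```

If the two PCI addresses map to the same version string, both cards are being handled identically by the driver.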

Supermicro X12SCA-5F Networking Performance Issues (RESOLVED)

in General Support

Posted

In case someone runs into this: it was not NIC related. In another thread, I saw someone with similar issues reporting that copying files directly to hard drives or the cache drive did not cause the problem. I ran a test by copying the same large file to every disk share and found that in about half the cases I'd get perfect file transfers that start right away, stay at 113MB/s, and then drop to 69MB/s once RAM is filled up. In the other half, file transfers to the server would have delayed starts, and once they started, the speed would swing wildly, all the way down to zero. Interestingly, these wild speed swings happened on every drive that was formatted with reiserfs. None of the XFS-formatted drives had this issue. There's got to be a solution to this, as my other server has a mix of reiserfs- and XFS-formatted drives but doesn't have the same issues when copying files to the share spanning all disks, but I don't know what it is. My solution is to reformat all the remaining drives on the server with the issues to XFS.
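The per-disk test above was done by copying a file by hand. A rough way to script something similar is sketched below: it writes a test file in chunks to one destination and records the speed of each chunk, so stalls and swings show up as a wide gap between the minimum and maximum. The function names and the example paths in the comments are hypothetical, and writing locally on the server is not the same as copying over the network from Windows, so treat the numbers as a relative comparison between disks only.

```python
import os
import time


def write_throughput(dest_path, total_mb=64, chunk_mb=4):
    """Write total_mb of random data to dest_path; return per-chunk speeds in MB/s."""
    chunk = os.urandom(chunk_mb * 1024 * 1024)
    speeds = []
    with open(dest_path, "wb") as f:
        for _ in range(total_mb // chunk_mb):
            start = time.perf_counter()
            f.write(chunk)
            f.flush()
            # Force the data out of the page cache so RAM buffering
            # does not hide stalls on the underlying disk.
            os.fsync(f.fileno())
            elapsed = time.perf_counter() - start
            speeds.append(chunk_mb / elapsed if elapsed > 0 else float("inf"))
    return speeds


def summarize(speeds):
    """Return min, max and mean of the per-chunk speeds."""
    return {"min": min(speeds), "max": max(speeds), "avg": sum(speeds) / len(speeds)}


# Example use (hypothetical paths; adjust to wherever each disk is mounted):
# for disk in ["/mnt/disk1", "/mnt/disk2"]:
#     target = os.path.join(disk, "throughput_test.bin")
#     print(disk, summarize(write_throughput(target, total_mb=1024)))
#     os.remove(target)
```

A disk where the minimum drops close to zero while the maximum stays high would match the erratic behaviour described for the reiserfs drives.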