dertbv

Posts: 220

Posts posted by dertbv

-

Yes, it kept giving me the errors above. It cleared itself after a few hours. Upgraded without further issues.

-

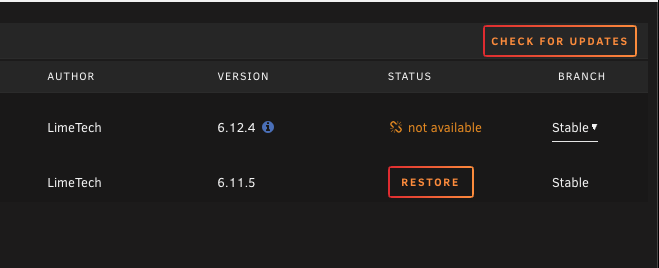

Currently running 6.12.4 and trying to update to 6.12.6; however, I am seeing the broken-link icon with "not available". All other plugins are updating correctly. Update Assistant reports that I am ready to update. Any pointers on how to correct this?

-

Updated the cache drive to ZFS and now my Docker containers download extremely slowly. Everything else seems to be running well, except that CPU usage has climbed. I have attached a diagram hoping someone can tell me where I messed up. The containers don't show that they have been updated.

-

52 minutes ago, Jammy B said:

hey!

been running absolutely fine. i've added a load of files to the folder it monitors and it doesn't do anything.

i've deleted the image and started again, nothing has changed my end except..... i rebooted unraid.

any suggestions?

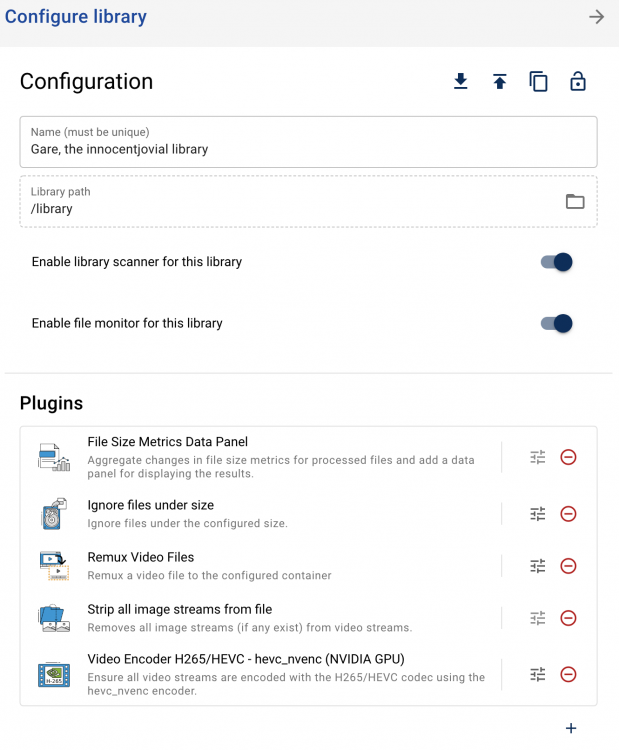

I had a similar issue with the latest release. There are a couple of new steps that have to be done. What I did was remove the container and then delete the folder in the app directory. This will allow you to reinstall with all of your container settings. Once installed, go to the Unmanic webpage and open Settings. In the libraries directory you need to edit the library to turn on the scanning options, and then reinstall your plugins on the Library Configuration page.

-

Just installed the latest version (0.2.0~a3bd45a). Changed the settings to scan libraries; I only have one, and it is nested. It starts to scan, does about 20 files, then says it is complete. I know there are files that have not been processed, but scanning is not finding them.

Resolved.

I had to remove the unmanic folder from the app directory and configure from scratch.

-

4 minutes ago, Squid said:

what is d-----s is set to "only", but it exists also on 3&4. You'd need to set that to prefer to move it to that cache

T-----s and T-----s are both set to No, but files exist on the cache. You'd have to set that to "yes"

For d-----s I will manually copy the files back to the cache drive.

However, I have changed the setting on the T....'s shares back and forth and it does not matter either way: the files are not moving off the cache drive. I am in the process of manually moving them and will let it run for 24 hours to see if the next batch gets moved correctly.

-

I have about 50 GB of TV shows that have not moved over. It looks like it stopped working about a month ago. I have checked, and the files are on the cache drive and not on the storage drive.

-

It looks like it has not been running for a while. Any hints as to what might be wrong?

-

On 9/1/2021 at 10:56 PM, Josh.5 said:

There is also a plugin specifically for remuxing video files. Search for "remux" or "container" in the plugin installer.

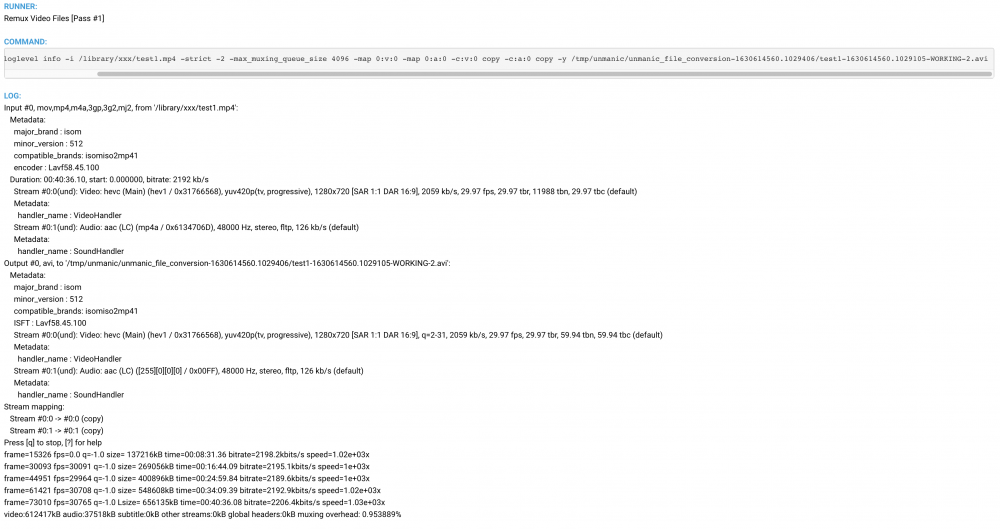

I have the remux plugin installed; however, it is not changing the file name.

2021-09-02T16:19:40:INFO:Unmanic.TaskHandler - [FORMATTED] - Adding inotify job to queue - /library/xxx/test.mp4

2021-09-02T16:19:41:INFO:Unmanic.Foreman - [FORMATTED] - Processing item - /library/xxx/test.mp4

2021-09-02T16:19:41:INFO:Unmanic.Worker-W1 - [FORMATTED] - Picked up job - /library/xxx/test.mp4

2021-09-02T16:19:45:INFO:Unmanic.Worker-W1 - [FORMATTED] - Successfully ran worker process 'video_remuxer' on file '/library/xxx/test.mp4'

2021-09-02T16:19:45:INFO:Unmanic.Worker-W1 - [FORMATTED] - Successfully converted file '/library/xxx/test.mp4'

2021-09-02T16:19:45:INFO:Unmanic.Worker-W1 - [FORMATTED] - Moving final cache file from '/library/xxx/test.mp4' to '/library/xxx/test-1630613985.7216587.mp4'

2021-09-02T16:19:45:INFO:Unmanic.EventProcessor - [FORMATTED] - MOVED_TO event detected: - /library/xxx/test.mp4

2021-09-02T16:19:45:INFO:Unmanic.Worker-W1 - [FORMATTED] - Finished job - /library/xxx/test.mp4

2021-09-02T16:19:45:ERROR:Unmanic.FileTest - [FORMATTED] - Exception while carrying out plugin runner on library management file test 'ignore_under_size' - [Errno 2] No such file or directory: '/library/xxx/test.mp4'

Traceback (most recent call last):

File "/usr/local/lib/python3.8/dist-packages/unmanic/libs/filetest.py", line 135, in should_file_be_added_to_task_list

plugin_runner(data)

File "/config/.unmanic/plugins/ignore_under_size/plugin.py", line 67, in on_library_management_file_test

if check_file_size_under_max_file_size(data.get('path'), minimum_file_size):

File "/config/.unmanic/plugins/ignore_under_size/plugin.py", line 45, in check_file_size_under_max_file_size

file_stats = os.stat(os.path.join(path))

FileNotFoundError: [Errno 2] No such file or directory: '/library/xxx/test.mp4'

2021-09-02T16:19:46:INFO:Unmanic.PostProcessor - [FORMATTED] - Post-processing task - /library/xxx/test.mp4

2021-09-02T16:19:46:INFO:Unmanic.PostProcessor - [FORMATTED] - Copying file /library/xxx/test-1630613985.7216587.mp4 --> /library/xxx/test.mp4

2021-09-02T16:19:54:INFO:Unmanic.EventProcessor - [FORMATTED] - CLOSE_WRITE event detected: - /library/xxx/test.mp4

2021-09-02T16:19:54:INFO:Unmanic.TaskHandler - [FORMATTED] - Skipping inotify job already in the queue - /library/xxx/test.mp4

Side note: since this is copying the file with a new name rather than overwriting it, it runs over and over, creating copy after copy.
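The traceback above shows `os.stat` being called on a path that had just been moved, so the size test raised `FileNotFoundError`. A minimal sketch of a size check that tolerates a file vanishing between the inotify event and the test is below. This is not the plugin's actual code; `file_size_or_none` and `is_under_size` are hypothetical names used only for illustration.

```python
import os
from typing import Optional


def file_size_or_none(path: str) -> Optional[int]:
    """Return the file size in bytes, or None if the file no longer exists."""
    try:
        return os.stat(path).st_size
    except FileNotFoundError:
        # The file was moved or deleted after the event was queued.
        return None


def is_under_size(path: str, minimum_file_size: int) -> bool:
    """True only when the file exists and is smaller than the threshold."""
    size = file_size_or_none(path)
    if size is None:
        # Nothing left to test; report "not under size" instead of raising.
        return False
    return size < minimum_file_size
```

Whether a missing file should count as "skip" or "retry later" is a design choice; the sketch only avoids the unhandled exception.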

-

I love the new look and the way the plugins are working. I have run into a problem with the output format on the h254 nvidia plugin: I am not seeing a way to make it default to mkv files. I have a few mp4 files that it appears to work on, but they are still mp4 when completed.

A nice-to-have would be the ability to unpause all workers at the same time rather than doing them one at a time.

-

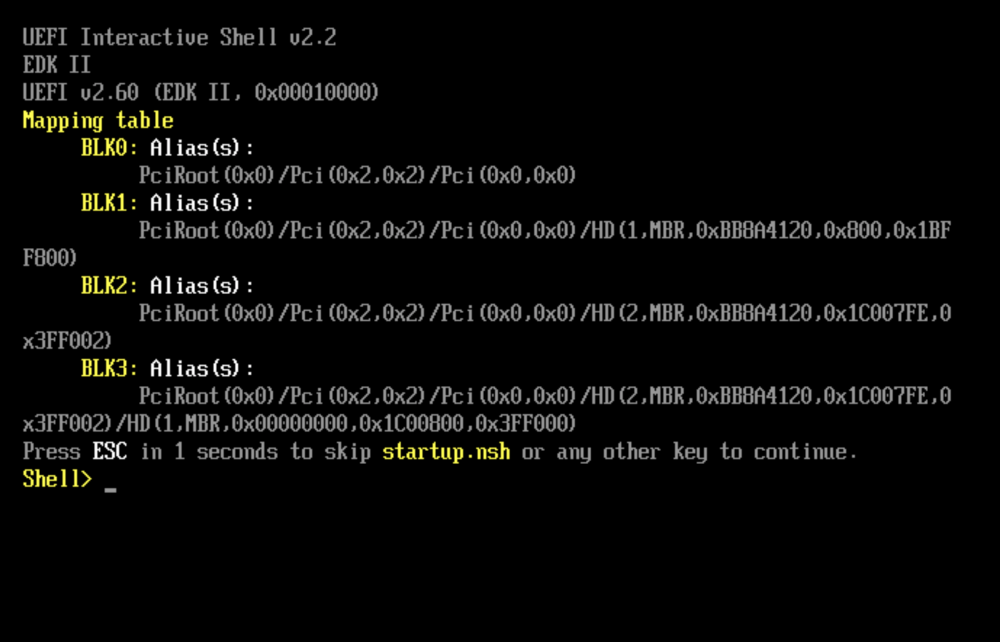

Going to answer my own question. It appears that I had to choose SeaBIOS from the Windows flavor in the drop-down. This resolved my Linux VMs and Windows. See this page for info.

-

2 hours ago, Squid said:

If you were running the appdata backup plugin with the libvirt backup option enabled, there's a copy in your backup share

I thought I had it checked, but apparently it was not. It looks like it is the only file that I do not have a backup of. :( I back up the USB once a day, but not that file. DOH!

-

So I made a stupid mistake deleting the file and lost all of my VMs. I still have the img files and am looking for a way to import them back in. Is this possible? I have tried to set up a few by choosing the appropriate OS and making the changes in the GUI, linking the img file as the hard drive. However, I get a start shell screen when I launch them. Any ideas?

-

Thinking it is about time to upgrade my unraid box.

https://www.newegg.com/intel-core-i9-10900k-core-i9-10th-gen/p/N82E16819118122?Item=N82E16819118122

https://www.newegg.com/g-skill-64gb-288-pin-ddr4-sdram/p/N82E16820232939?Item=N82E16820232939

Do you see any issues with these?

Thanks

-

Has anyone had an issue where Unmanic runs correctly for a short period of time and then stops processing files? It shows them in the queue to be processed, but it is just not grabbing them. If I restart the container, it will process them again for a little while and then stop again at some point.

-

17 minutes ago, Josh.5 said:

Thanks for the report and the logs.

A fix will be available in the 'staging' tag again for download in about 10 mins.

Thanks, that fixed it.

-

I have been using the staging branch for a while. Just did a pull and I am not getting the dashboard. Getting:

Traceback (most recent call last):

File "/usr/local/lib/python3.6/dist-packages/tornado/web.py", line 1697, in _execute

result = method(*self.path_args, **self.path_kwargs)

File "/usr/local/lib/python3.6/dist-packages/unmanic/webserver/main.py", line 64, in get

self.render("main/main.html", historic_task_list=self.historic_task_list, time_now=time.time(), session=self.session)

File "/usr/local/lib/python3.6/dist-packages/tornado/web.py", line 856, in render

html = self.render_string(template_name, **kwargs)

File "/usr/local/lib/python3.6/dist-packages/tornado/web.py", line 1005, in render_string

return t.generate(**namespace)

File "/usr/local/lib/python3.6/dist-packages/tornado/template.py", line 361, in generate

return execute()

File "main/main_html.generated.py", line 34, in _tt_execute

elif session.level > 0: # page_layout.html:119

TypeError: '>' not supported between instances of 'NoneType' and 'int'
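The final line of that traceback says `session.level` was `None` when the template compared it with `0`. A minimal sketch of that failure mode and a defensive comparison follows. The `Session` class and `has_access` helper are hypothetical stand-ins, not Unmanic's code, and this is not a description of how the project fixed it.

```python
from typing import Optional


class Session:
    """Hypothetical stand-in for a session object whose level may be unset."""

    def __init__(self, level: Optional[int] = None):
        self.level = level


def has_access(session: Session) -> bool:
    """Compare the level only when it is set, treating None as no access."""
    level = getattr(session, "level", None)
    return level is not None and level > 0
```

With an unset level, `Session().level > 0` raises the same `TypeError`, while `has_access(Session())` simply returns `False`.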

-

My Current setup is

Supermicro X10SRH-CF Version 1.00A - s/n: NM163S019478

CPU: Intel® Xeon® CPU E5-2673 v3 @ 2.40GHz, with 32GB RAM

1660 Ti for video conversion

I am thinking of upgrading to either

Intel Core i7-10700K C

ASUS ROG STRIX Z490-E

or

Intel Core i9-10850KA

ASUS ROG STRIX Z490-E

Which would be better, and do you see any known issues?

-

I am showing

"Traceback (most recent call last):

File "/usr/local/lib/python3.6/dist-packages/unmanic/libs/ffmpeg.py", line 191, in set_file_in

self.file_in['file_probe'] = self.file_probe(vid_file_path)

File "/usr/local/lib/python3.6/dist-packages/unmanic/libs/ffmpeg.py", line 156, in file_probe

probe_info = unffmpeg.Info().file_probe(vid_file_path)

File "/usr/local/lib/python3.6/dist-packages/unmanic/libs/unffmpeg/info.py", line 55, in file_probe

return cli.ffprobe_file(vid_file_path)

File "/usr/local/lib/python3.6/dist-packages/unmanic/libs/unffmpeg/lib/cli.py", line 67, in ffprobe_file

raise FFProbeError(vid_file_path, info)"

in my log file. What can I do to resolve this?

-

Anyone have a down-and-dirty way to remove the attachments from the files before processing them? They are causing Unmanic to fail. Looking for a bulk way to do it, as I have over 6k that are failing.
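One possible bulk approach, sketched under assumptions: the files are Matroska (`.mkv`), `ffmpeg` is on the PATH, and writing cleaned copies to a separate output folder is acceptable. ffmpeg's `-map 0 -map -0:t -c copy` keeps every stream except attachments without re-encoding. The function names and the folder layout are hypothetical, and the script defaults to a dry run that only builds the commands.

```python
import subprocess
from pathlib import Path
from typing import List


def build_strip_command(src: Path, dst: Path) -> List[str]:
    """Build an ffmpeg command that copies all streams except attachments."""
    return [
        "ffmpeg", "-n",      # -n: never overwrite an existing output file
        "-i", str(src),
        "-map", "0",         # start with every stream from the input
        "-map", "-0:t",      # then exclude attachment streams
        "-c", "copy",        # copy streams as-is, no re-encoding
        str(dst),
    ]


def strip_attachments(library: Path, out_dir: Path, dry_run: bool = True) -> List[List[str]]:
    """Walk the library and build (optionally run) one command per .mkv file."""
    commands = []
    for src in sorted(library.rglob("*.mkv")):
        dst = out_dir / src.relative_to(library)
        cmd = build_strip_command(src, dst)
        commands.append(cmd)
        if not dry_run:
            dst.parent.mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)
    return commands
```

Running it with `dry_run=True` first and checking a few of the generated commands by hand is a cheap safety step before touching 6k files.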

-

Any idea how i can resolve this error?

"2020-08-01T22:00:59:ERROR:Unmanic.Worker-2 - [FORMATTED] - Exception in processing job with Worker-2: - 'utf-8' codec can't decode byte 0xb2 in position 24: invalid start byte"

This was caused by the audio. I had to change the settings to just pass through.
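For context on the message itself: byte `0xb2` can only appear as a continuation byte in UTF-8, so a strict decode fails when it turns up where a character should start. A minimal sketch of the difference between strict and lenient decoding is below. The sample bytes and the `decode_tool_output` helper are made up for illustration; which part of Unmanic does the decoding is not shown in the log.

```python
def decode_tool_output(raw: bytes) -> str:
    """Decode bytes as UTF-8, replacing undecodable bytes instead of raising."""
    return raw.decode("utf-8", errors="replace")


# Hypothetical metadata containing a stray non-UTF-8 byte.
sample = b"title: \xb2 track"

try:
    sample.decode("utf-8")  # strict decoding raises on the stray byte
except UnicodeDecodeError as exc:
    print(f"strict decode failed: {exc}")

print(decode_tool_output(sample))
```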

-

2 minutes ago, Josh.5 said:

4 hours ago, dertbv said:

When opening the webpage, it takes a while to populate "see all records". Then it takes more time to actually view details on a completed item. Choosing failures means a massive wait time.

The issue is the page being loaded does not paginate the query. And the query is not optimised as an SQL dataset. Switching to mysql will not improve page load times. The orm calls for those pages need to be optimised. This is something already on my to-do list and will probably be a few hours of work to do properly when I get the time. No ETA ATM.

Not complaining, thank you for all that you do! I just turned on the Nvidia piece and have completed more in 10 days than I have in the last year.
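The pagination point in the quoted reply can be illustrated with a small sketch: fetch one page of rows at a time rather than loading the whole history. This uses Python's built-in `sqlite3` module with a made-up `historic_tasks` table; the table, columns, and function names are assumptions for illustration and not Unmanic's actual schema or ORM calls.

```python
import sqlite3
from typing import List, Tuple


def make_demo_db(rows: int = 100) -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical task-history table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE historic_tasks (id INTEGER PRIMARY KEY, task_label TEXT)"
    )
    conn.executemany(
        "INSERT INTO historic_tasks (task_label) VALUES (?)",
        [(f"file-{i}.mkv",) for i in range(1, rows + 1)],
    )
    return conn


def fetch_page(conn: sqlite3.Connection, page: int, page_size: int = 20) -> List[Tuple[int, str]]:
    """Return one page of rows, newest first, using LIMIT/OFFSET."""
    offset = (page - 1) * page_size
    cur = conn.execute(
        "SELECT id, task_label FROM historic_tasks "
        "ORDER BY id DESC LIMIT ? OFFSET ?",
        (page_size, offset),
    )
    return cur.fetchall()
```

Each page load then touches only `page_size` rows, which is why pagination helps regardless of the database engine behind it.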

-

2 hours ago, chiefo said:

I've seen something similar. Mine has gotten to the point twice where the page will never load when i try to view all records. I've had to blow away the database to restore functionality to that page. I can almost never get it to open an individual record even after starting with a fresh database. The entire tab locks up

If you blow away the entire database, won't it go through every file that you have ever done?

6.12.6 - DASHBOARD IS NO LONGER ACCESSIBLE

in General Support

Removed the Disk Location plugin and the dashboard is still not showing in Safari on my Mac. Chrome shows the dashboard and all drives are spun up, but I really prefer Safari, so any help would be appreciated.

tower2-diagnostics-20231208-1948.zip