Report Comments posted by hawihoney

-

I'll add my link here:

-

Sure. I didn't post diagnostics because I thought the difference would be obvious. Diagnostics are attached now; sorry for the delay. Here is what I did:

My plan was to upgrade Unraid from 6.10.3 to 6.11.1 on my bare-metal server this morning. I saw that two container updates were pending (swag and homeoffice), so I set both containers to "don't autostart" and then did the upgrade.

After rebooting the bare-metal server with the array set not to autostart, I checked everything and then started the array. After the array had started, I updated both containers. Both update dialogs (swag/homeoffice) ended at "Please wait", yet the "Done" button was shown at the bottom of the dialog.

After the update I set both containers back to "Autostart".

That's all. If I had to offer a guess: updating containers that are set to "don't autostart" looks like a good candidate.

-

3 hours ago, itimpi said:

I believe this a restriction built into the hardware (although I could be wrong).

Yes, that's what I thought. Think about the "usual" environment for this type of HBA: they are built for disks that run all the time.

-

On 4/8/2020 at 4:48 PM, ptr727 said:

Seems highly unlikely that this is a LSI controller issue.

My guess is the user share fuse code locks all IO while waiting for a disk mount to spin up.

My Unraid servers use LSI/Broadcom 9300-8i and 9300-8e HBAs (latest firmware). User Shares (FUSE) are not enabled; only Disk Shares are used here. The effect has occurred in every Unraid release I can remember: whenever a disk spins up, all activity on the other disks attached to the same HBA stops for a short time.

-

Got it. So my VMs only autostart after an Unraid upgrade. That's what I know now.

My servers only reboot for Unraid updates; they run 24/7, all year. All disk additions or replacements can be done with a stopped array, without a shutdown or reboot. With "Autostart Array = No" (IMHO the more important point), only the first manual array start after a boot triggers VM autostart; later array starts, such as after a disk replacement, never trigger it again until the next boot.

That's what I learned, and that's what I have to live with ...

-

Quote

I tested with Autostart of the array set to No, and the VM still autostarted when I manually started the array. It appears that it only works once until you next reboot.

That's exactly what I described:

Autostart Array = Off, boot, start the array manually --> VM autostart works.

Now stop the array and start it again --> VM autostart does not work. That was my problem and the reason for this bug report.

It's simply confusing, it isn't documented on the VM page, and I still don't see a reason for it.

Autostart means autostart. Don't change that. Just my two cents.

-

And you think average users like me will understand this logic? There's an option, "Autostart VM", that only works if another option, "Autostart Array", is set, and only on the first array start after boot, not on a second or later start of the array.

Please take a step back and look at this from a distance. I already gave up and closed the report, because I don't see anybody acknowledging that the "Autostart VM" option will be a source of confusion in the future. It will NOT always autostart VMs, and it works differently from other autostart options (e.g. Docker container autostart).

I'm not here to change the logic; I was here to make it more transparent.

Let's stop here. I have already closed the report.

-

Changed Status to Closed

-

1 hour ago, itimpi said:

No

So Unraid behaves differently in your environment than in mine.

Trust me, I've been working with Unraid long enough to know what I'm doing and which settings have been in place for years (I guess).

The machine had been running since Monday (upgrade from 6.10.2 to 6.10.3). "Autostart Array" was off, as always. Today I had to replace a disk in the array. I manually stopped all running VMs, manually stopped all running Docker containers, stopped the array, replaced the disk, and started the array to rebuild the replacement disk, and the VMs marked "Autostart" did not start. That's all.

In the meantime I've set "Autostart Array" to On, so that my VMs honor the "Autostart VM" setting in the future.

I'm not satisfied, but I will close this bug report. It seems to be intended behaviour.

-

1 hour ago, itimpi said:

In my experience this works if the array is auto started on boot.

58 minutes ago, JorgeB said:

It will autostart the VMs at first array start if array autostart is enabled.

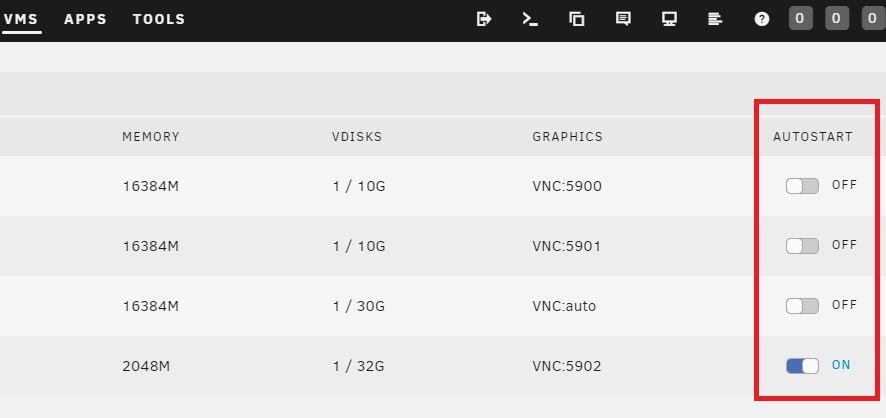

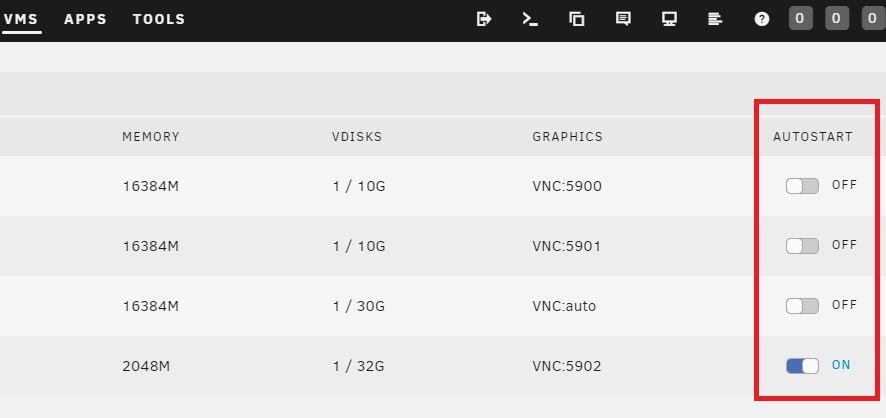

So "Autostart VM" only works if "Autostart Array" is enabled during the boot process.

But "Autostart VM" does not work if "Autostart Array" is disabled, the machine has already booted, and the array is started afterwards.

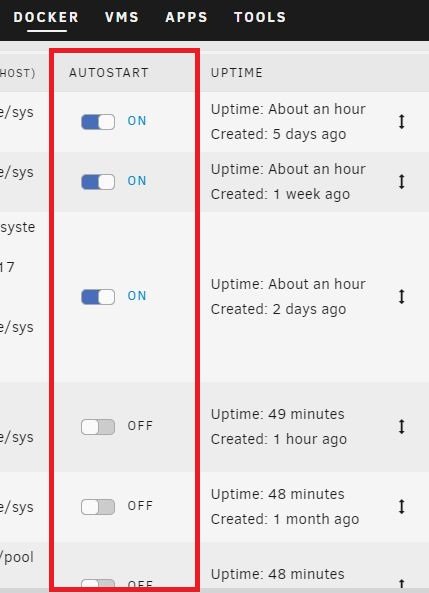

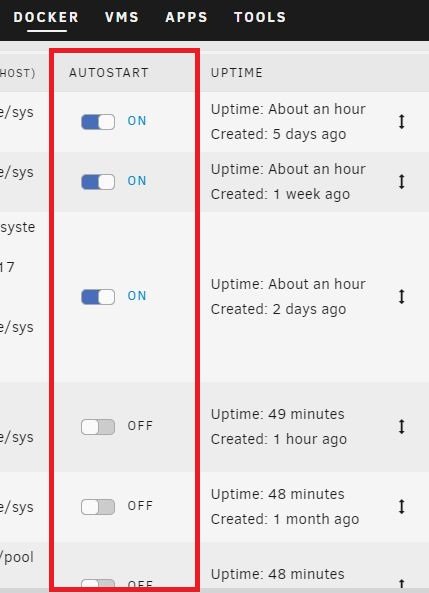

So the "Autostart" options on the VM tab depend on both "Autostart Array" and the per-VM setting, with "Autostart Array" being the main factor. This differs from the "Autostart" option on the Docker tab, which does not care about "Autostart Array" at all.

Got it. Very confusing, but got it.

IMHO, this should be mentioned on the VM page. Several hours passed without running VMs before I finally noticed that my VMs with Autostart had not started. After that I scanned through all the logs this morning, because I thought the VMs had hit errors during startup.

If an option promises autostart, it should not be bound to a different option behind the scenes.

Consider what I did: I stopped the array (no shutdown, no reboot, simply a stop) to replace a disk. After that I started the array again, and my VMs did not start. I guess nobody would expect that in this situation.

-

1 hour ago, JorgeB said:

This is done on purpose

What does Autostart on the VM page do, then? Perhaps I don't understand your answer:

1.) Autostart on the VM page does not autostart VMs. Why is this option available?

2.) Autostart on the VM page does not autostart VMs on array start, but does work .... (when?)

Whichever it is: "Autostart" then has two different meanings on two different pages (Docker, VM). IMHO this is a bad GUI decision.

*** EDIT *** I'm talking about these two settings:

How to reproduce:

- On Settings > Disk Settings > Enable auto start --> Off

- On Docker > [Container of your choice] > Autostart --> On

- On VM > [VM of your choice] > Autostart --> On

- Stop a running array (no reboot, no shutdown, simply stop the array)

- Start the array

--> Docker Containers with Autostart=On will start

--> VMs with Autostart=On will NOT start
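To make the reported behaviour easier to reason about, here is a toy Python model of it, assuming what the "Autostart Array = Off, boot, start manually" report in this thread describes: Docker autostart is honoured on every array start, while VM autostart fires only on the first array start after a boot. This is a sketch of the observed behaviour, not Unraid's code; the class, its methods, and the names `swag`/`win10` are hypothetical. Other replies in the thread tie VM autostart to the "Autostart Array" setting instead, which the model does not cover.

```python
# Toy model of the VM/Docker autostart behaviour reported in this thread.
# Hypothetical names throughout; this is not Unraid's implementation.
from dataclasses import dataclass


@dataclass
class ArrayAutostartModel:
    # True once the array has been started at least once since the last boot.
    started_since_boot: bool = False

    def boot(self) -> None:
        # Only a reboot resets the "first array start" flag.
        self.started_since_boot = False

    def stop_array(self) -> None:
        # Stopping the array leaves the flag untouched, which is the
        # behaviour the bug report complains about.
        pass

    def start_array(self, docker_autostart: set, vm_autostart: set) -> list:
        # Docker containers with Autostart=On start on every array start.
        started = sorted(docker_autostart)
        # VMs with Autostart=On start only on the first array start after boot.
        if not self.started_since_boot:
            started += sorted(vm_autostart)
        self.started_since_boot = True
        return started


if __name__ == "__main__":
    server = ArrayAutostartModel()
    server.boot()
    print(server.start_array({"swag"}, {"win10"}))  # ['swag', 'win10']
    server.stop_array()  # e.g. to replace a disk
    print(server.start_array({"swag"}, {"win10"}))  # ['swag']
```

In the model the only thing that resets VM autostart is `boot()`, which is exactly why a stop/start cycle for a disk replacement leaves the VMs down.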

-

14 minutes ago, itimpi said:

Are you trying to do something that is not already supported by the Parity Check Tuning plugin?

I don't want to put too much text here in the Bug Reports section. I created a post in the General Support forum and would be happy if you'd follow me over there:

-

@itimpi I know that. The disks did spin down after the configured spin-down delay.

To answer the "doesn't make sense": I don't know where you live, but I live in Germany, and my power company has raised its price by 100% (EUR 0.49/kWh) because of the current situation in Europe. I will run parity checks only when my solar roof produces enough power during the day. It doesn't make sense to me to run parity checks when I have to buy power from the grid.

So please expect my feature request within a day about how to start/stop/pause/resume/query parity checks from within user scripts via URL 😉
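The reasoning above boils down to simple arithmetic: grid energy in kWh is watts × hours / 1000, multiplied by the price per kWh. A minimal sketch, assuming a hypothetical extra draw of 100 W over a hypothetical 20-hour parity check (illustrative figures, not measurements); only the EUR 0.49/kWh price comes from the post. How a check would actually be started or paused is not shown, and no URL API is assumed.

```python
# Back-of-the-envelope helpers for scheduling parity checks around solar output.
# All wattages and durations are illustrative assumptions, not measurements.
PRICE_EUR_PER_KWH = 0.49  # grid price quoted in the post


def grid_cost_eur(server_watts: float, hours: float,
                  price_eur_per_kwh: float = PRICE_EUR_PER_KWH) -> float:
    """Cost of powering the server's draw from the grid for `hours` hours."""
    kwh = server_watts * hours / 1000.0
    return kwh * price_eur_per_kwh


def solar_covers_check(solar_watts: float, house_load_watts: float,
                       server_watts: float) -> bool:
    """True if the current solar surplus covers the server's draw during a check."""
    surplus = solar_watts - house_load_watts
    return surplus >= server_watts


if __name__ == "__main__":
    # Hypothetical: 100 W extra draw during a 20-hour parity check -> 2 kWh.
    print(f"{grid_cost_eur(100, 20):.2f} EUR")  # 0.98 EUR
    print(solar_covers_check(3000, 800, 100))  # True
    print(solar_covers_check(500, 800, 100))  # False
```

A user script could poll the inverter's output and only start (or resume) the check while `solar_covers_check` holds; how to read the inverter is also left open here.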

-

Quote

while the NETWORK graph is a new option under Interface (see the 'General Info' selection)

I can't find where to activate the network graph. Where is this General Info selection?

-

I think it's my fault that this was introduced.

Only those pages/lists that have action buttons at the end of each row (Main, Shares, File Manager) and/or a checkbox at the front should toggle the background color, IMHO. I know the list design already has alternating backgrounds, but these lists have grown in content and detail, so it is hard to find the correct button at the end.

I was fine with the old design until File Manager and its button were introduced. I never used the old button and used MC on the command line instead. With File Manager I changed my mind, and now I use it a lot. That's when I found that I have a hard time hitting the correct button on the far right.

-

6 minutes ago, Squid said:

My answer was to how to handle "private" repositories.

These six XML files, currently (6.9.2) stored automatically on /boot, are my private repository.

Time to take screenshots instead of using saved container configurations with 6.10 and above.

-

9 hours ago, Squid said:

You can still handle your own "private" repositories by placing the xml files within

How? While VM configurations can easily be exported with "virsh dumpxml ...", I never found a way to do this with Docker containers.
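One way to keep container configurations alongside VM definitions is to copy the saved template XMLs (the files on /boot mentioned above) and dump each VM with `virsh`. A minimal sketch, assuming the user templates live as `my-*.xml` in `/boot/config/plugins/dockerMan/templates-user`; that path is an assumption and should be checked on your release. `virsh list --all --name` and `virsh dumpxml` are standard libvirt commands; `TEMPLATE_DIR`, the function names, and any destination folder are hypothetical.

```python
# Sketch: back up container templates and VM definitions to a folder.
# ASSUMPTION: user templates live in TEMPLATE_DIR as my-*.xml; verify on your release.
import shutil
import subprocess
from pathlib import Path

TEMPLATE_DIR = Path("/boot/config/plugins/dockerMan/templates-user")  # assumed


def backup_templates(src: Path, dest: Path) -> list:
    """Copy every my-*.xml container template from src to dest; return file names."""
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for xml_file in sorted(src.glob("my-*.xml")):
        shutil.copy2(xml_file, dest / xml_file.name)
        copied.append(xml_file.name)
    return copied


def backup_vms(dest: Path) -> list:
    """Export each libvirt domain's XML via `virsh dumpxml`; skip if virsh is missing."""
    if shutil.which("virsh") is None:
        return []
    dest.mkdir(parents=True, exist_ok=True)
    listing = subprocess.run(["virsh", "list", "--all", "--name"],
                             capture_output=True, text=True, check=True).stdout
    # One domain name per line; skip the blank trailing line.
    names = [line.strip() for line in listing.splitlines() if line.strip()]
    for name in names:
        xml = subprocess.run(["virsh", "dumpxml", name],
                             capture_output=True, text=True, check=True).stdout
        (dest / f"{name}.xml").write_text(xml)
    return names
```

Called from a user script, e.g. `backup_templates(TEMPLATE_DIR, Path("/mnt/user/backup/docker"))` with a destination of your choice. For VMs, the reverse direction is `virsh define <file>`.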

-

I have a question regarding the mount command.

On 6.9.2, the output of the mount command for my own mounts, issued through user scripts, looks like this:

//192.168.178.35/disk1 on /mnt/hawi/192.168.178.35_disk1 type cifs (rw)

On 6.10 RC3, the output for the same mount looks like this:

//192.168.178.35/disk1 on /mnt/hawi/192.168.178.35_disk1 type cifs (rw,relatime,vers=3.0,cache=strict,username=hawi,uid=99,noforceuid,gid=100,noforcegid,addr=192.168.178.35,file_mode=0777,dir_mode=0777,iocharset=utf8,soft,nounix,serverino,mapposix,rsize=4194304,wsize=4194304,bsize=1048576,echo_interval=60,actimeo=1,_netdev)

Is this intended behaviour, and will it stay like this? Is this a change within the mount command itself, or does Unraid set different default values for the mount command?
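To see exactly which options differ between the two outputs, the lines can be parsed and compared. A minimal Python sketch, assuming the `SOURCE on TARGET type FSTYPE (OPTIONS)` layout shown above and paths without spaces; the function names are hypothetical, and the sketch does not explain why the output changed between releases.

```python
# Sketch: parse `mount` output lines and list options that only appear in the newer one.
# Assumes the "SRC on TARGET type FSTYPE (OPTS)" layout and paths without spaces.
import re

MOUNT_LINE = re.compile(
    r"^(?P<source>\S+) on (?P<target>\S+) type (?P<fstype>\S+) \((?P<opts>[^)]*)\)$"
)


def parse_mount_line(line: str) -> dict:
    """Split one line of `mount` output into its parts and an options dict."""
    match = MOUNT_LINE.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognised mount line: {line!r}")
    options = {}
    for item in match.group("opts").split(","):
        key, sep, value = item.partition("=")
        # Flags such as "rw" or "soft" carry no value; store True for them.
        options[key] = value if sep else True
    return {
        "source": match.group("source"),
        "target": match.group("target"),
        "fstype": match.group("fstype"),
        "options": options,
    }


def added_options(old_line: str, new_line: str) -> dict:
    """Options present in new_line's mount but absent from old_line's."""
    old = parse_mount_line(old_line)["options"]
    new = parse_mount_line(new_line)["options"]
    return {key: value for key, value in new.items() if key not in old}
```

Applied to the two lines above, every option except `rw` shows up as new. If particular values matter (e.g. `vers=3.0` or `uid=99,gid=100`), passing them explicitly with `-o` in the user script's mount command keeps them independent of whatever defaults a release applies.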

Thanks.

-

Why was there no RC2 announcement in the Announcements thread? I came here by accident.

-

1 hour ago, ich777 said:

Look at the my servers page

Oh, please. This is a naive argument. Not everything that's stored gets shown. Google holds trillions of data points about you; look at their dashboard. It doesn't show, e.g., a single line about relationships to other Google users.

I suggest we stop here and wait for some clarification from the developers.

-

9 minutes ago, ich777 said:

And the installation from the My Servers plugin too

Don't forget UPC. AFAIK, it is mandatory for users (trial and upgrade). I have mixed feelings about that.

With the current procedure (pre-6.10), the key is sent to my e-mail address. What Limetech knows is my e-mail address, the GUID of the stick, payment details, and address details (name, ...). Only optionally does Limetech know my forum account. Currently not all users have a forum account; a happy user with no problems won't create one.

Starting with 6.10, new or upgrading users need a forum account (is this correct?). And the far more interesting part: what is additionally known about my servers through UPC if the My Servers plugin is not installed?

As said previously here, it's a matter of communication. Cloud services have a bad reputation and are tolerated only when they bring personal benefits. So, what are the cool benefits?

Currently I cannot sign out offline servers. Currently I cannot manually upload spare key files (not currently in use). Currently I can't rename the server tiles. You get the idea.

-

37 minutes ago, ich777 said:

don't install the My Servers plugin

In fact, I signed out and removed the My Servers plugin RC from my 6.9.2 systems this morning, because all my Unraid servers suddenly stopped working: connecting to the unraid.net URL of these servers ended in DNS ERR_NAME_NOT_RESOLVED. I'm back to local IP connections now and will keep it that way. My 6 license keys are stored in /config on all sticks, and I hope it will keep working that way in 6.10 and beyond.

1 hour ago, alturismo said:

when i think about it that pretty sure almost all here using a smartphone, facebook, ...

It's a decision that users make. If they want Facebook but not Google (or whatever), that's their personal decision. IMHO it's not a valid argument to say "If you 'trust' Facebook you must 'trust' Google as well".

-

I don't know what happened. Without any further action on my part, my post was published and I can't edit it any longer.

---

Now compare the CPU usage of my system with your screenshot. My VMs are heavily in use; are your VMs not actively used? I guess the VMs are busy with themselves.

I would check what the VMs are doing, what they are writing and where to, whether there is enough RAM for the VMs, etc.

This may be locking SMB out.

can't reformat array disks to zfs encryption V6.12.0-rc2

in Prereleases

Excuse my stupid question, but what does that mean exactly? Is data preserved during that process, or does it simply mean that XFS-formatted disks can be reformatted to ZFS without preserving data?