Posts posted by jortan

-

It does look like some progress in decoding AX1600i output has been made recently:

-

26 minutes ago, giganode said:

I want to know why you need to chmod the executable.

The other question is why the executable is being placed in the cpsumon directory, and not in /usr/local/bin as with other users?

This means the executable is also not in path. I wonder if these are related (is Unraid automatically chmod +x'ing plugin files placed in /usr/local/bin but not a subfolder?)

I don't have corsair psu connected currently so my diagnostics will not be useful.

-

1 hour ago, giganode said:

Just to clarify.. the plugin is now working as expected? Values are shown in the dashboard widget?

Which exact version of unraid are you running?

I'm running the latest RC: Unraid Version: 6.12.0-rc6

Plugin: 2023.03.26e

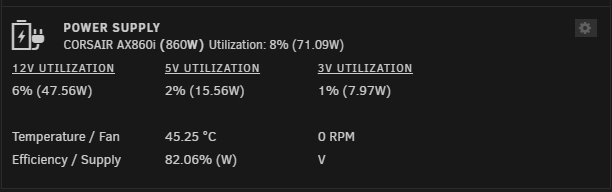

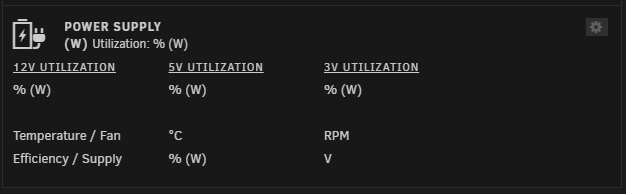

It doesn't survive a reboot, so I get this:

Until I run:

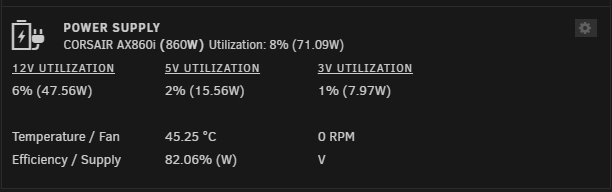

chmod +x /usr/local/bin/cpsumon/cpsumoncli

Then it starts working again:

-

48 minutes ago, giganode said:

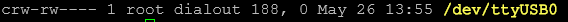

Please post the output of cpsumoncli /dev/ttyUSB0

Thank you

So the plugin was installed, but the command cpsumoncli was not found.

Uninstalling from Apps didn't do anything, and the plugin would still show as installed. I uninstalled it from the Plugins page

and then reinstalled it from Apps. It's still not available in path and the dashboard is still not working:

    root@servername:~# /usr/local/bin/cpsumon/cpsumoncli
    -bash: /usr/local/bin/cpsumon/cpsumoncli: Permission denied

    ls -alh /usr/local/bin/cpsumon/
    total 16K
    drwxr-xr-x 3 root root  80 Mar 27 01:41 ./
    drwxr-xr-x 1 root root 100 Mar 27 01:41 ../
    -rw-rw-rw- 1 root root 16K Mar 27 01:41 cpsumoncli
    drwxr-xr-x 2 root root  60 Mar 27 01:41 libcpsumon/

Added executable permission:

chmod +x /usr/local/bin/cpsumon/cpsumoncli

Now it's working! Not sure if this is a new requirement in the latest RCs?

ps: I still can't execute cpsumoncli without specifying the full path: /usr/local/bin/cpsumon/cpsumoncli
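
For anyone hitting the same thing, here is a rough sketch of the two checks involved: whether the binary has its executable bit, and whether its directory is in PATH. The function names are made up for illustration, and the default path is just the one from my system above.

```bash
#!/usr/bin/env bash
# Sketch only: restore the executable bit if it is missing.
# Default path is the one from the post above; pass another path to override.
ensure_executable() {
    local target="${1:-/usr/local/bin/cpsumon/cpsumoncli}"
    if [ ! -e "$target" ]; then
        echo "missing: $target" >&2
        return 1
    fi
    if [ ! -x "$target" ]; then
        chmod +x "$target" || return 1
        echo "added +x: $target"
    else
        echo "already executable: $target"
    fi
}

# Return 0 if the given directory is listed in PATH (hypothetical helper).
dir_in_path() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

Running `ensure_executable` with no arguments repeats the chmod from above only when it is needed, and `dir_in_path /usr/local/bin/cpsumon` shows whether the shell could find cpsumoncli without the full path. Since the permission is lost on reboot, calling this from some boot-time script might work around it, but that is an assumption I haven't tested.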

-

Any idea what the issue might be here?

Unraid 6.12.0-rc6

Corsair plugin:

###2023.03.26e

- Repo forked from CyanLabs/corsairpsu-unraid. Made the necessary changes for UnRAID v.6.12.0-beta7 while maintaining backwards compatibility.

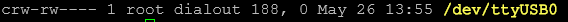

ttyUSB0 does appear when the AX860i is connected:

Apologies if I have missed something obvious

-

6 minutes ago, ashman70 said:

Right so if I use ZFS as I intend to, convert all my disks to ZFS and just have them operate independently, with no vdevs then I will get the detection and self healing features of ZFS?

Strictly speaking, you're talking about each disk being a separate pool with a single vdev containing one disk.

As everyone else has pointed out, in this scenario ZFS can detect errors thanks to checksums, but will have no ability to repair them because you have no redundancy in each pool.

If you want to pool dissimilar disks, Unraid arrays are perfect for this. If you want the performance and resilience of a ZFS pool, you may want to invest in some new disks.

-

1 hour ago, ashman70 said:

I have a backup server running the latest RC of unRAID 6.12. I am contemplating wiping all the disks and formatting them in ZFS so each disk is running ZFS on its own. Would I still get the data protection from bit rot from ZFS by doing this?

Is there any reason you don't want to create a single pool of your disks? Less total capacity? Concerns around being able to expand the array? ZFS has supported expanding raidz pools for some time now and Unraid will be adding GUI features for this at some point.

-

29 minutes ago, Marshalleq said:

I have ... way more than 4 drives

Are you referring to this?

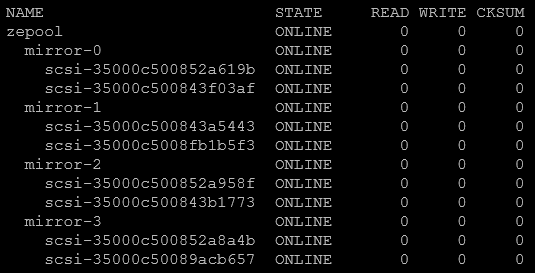

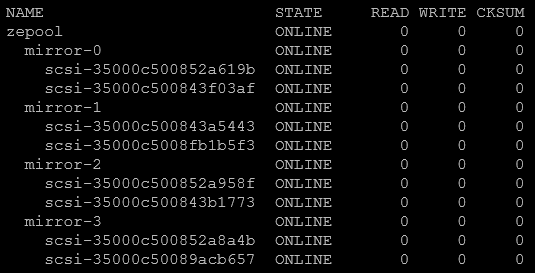

Up to 4 devices supported in a mirror vdev.

If I'm understanding correctly, this should almost never be an issue. Even a mirrored pool of 8 drives will typically only have 2 per vdev. Example:
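
To make the 8-drive case concrete, here is a small sketch that only prints the zpool create command for a pool built from 2-way mirror vdevs. It does not run it. The pool name (tank), the device names (sda to sdh) and the function name are placeholders.

```bash
#!/usr/bin/env bash
# Sketch: print (not run) a zpool create command that groups disks
# into 2-way mirror vdevs. Pool and device names are placeholders.
mirror_pairs_cmd() {
    local pool="$1"
    shift
    if [ $# -eq 0 ] || [ $(( $# % 2 )) -ne 0 ]; then
        echo "need an even, non-zero number of disks" >&2
        return 1
    fi
    local cmd="zpool create $pool"
    while [ $# -gt 0 ]; do
        cmd="$cmd mirror $1 $2"   # one mirror vdev per pair of disks
        shift 2
    done
    echo "$cmd"
}

mirror_pairs_cmd tank sda sdb sdc sdd sde sdf sdg sdh
```

With 8 disks this gives four mirror vdevs of 2 devices each, well under the 4-device limit per mirror vdev.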

-

31 minutes ago, 0edge said:

I underclocked it to 3200mhz, it's a 3600mhz kit.

It's still a reasonable diagnostic step to run this all the way back to 2133/2400 and see if the issue persists. If it does and if your memory passes memtest for a reasonable amount of time, then you can move on to other potential causes.

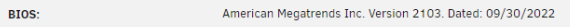

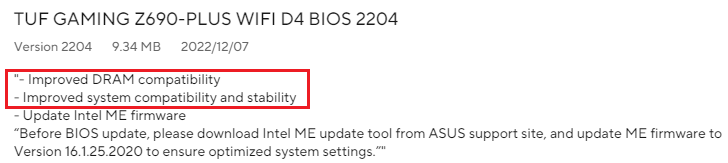

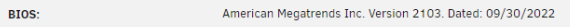

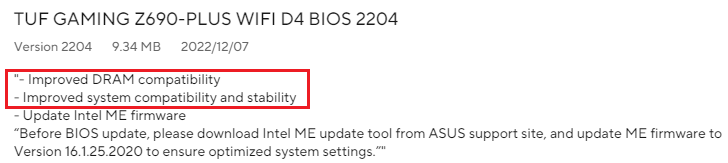

Also:

-

On 3/4/2023 at 1:57 AM, Xdj said:

I'm going to start over and try again with the new information!

ps: if you're still looking to build a second server for backups, probably the best option is sanoid to manage snapshots of your main ZFS pool, and syncoid to pull/replicate those snapshots on your backup server.

I have this set up so that on startup, my backup server will replicate snapshots from my main server, send an email summary and then shut itself down.

-

3 hours ago, Xdj said:

I'm just curious why in the video guides they don't mention the config file.

I haven't watched any of the video guides, but this includes some explanation of what's going on in the above SMB (windows share) configuration examples:

https://forum.level1techs.com/t/zfs-on-unraid-lets-do-it-bonus-shadowcopy-setup-guide-project/148764

3 hours ago, Xdj said:

I'm building a second server to back up the first so I'm trying to figure out the best solution to protect 30+ years of photos and videos.

One thing to consider is something like borg to back up your data offsite.

-

On 2/28/2023 at 5:46 PM, wout4007 said:

yes it does. /mnt/disk1/pool_name

I would suggest mounting your ZFS pool elsewhere, ie. /mnt/poolname

/mnt/diskx is where Unraid mounts disks for the Unraid array. Seems likely that is the problem?
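
As a rough illustration, this sketch flags a mountpoint that sits under /mnt/disk1, /mnt/disk2 and so on, and prints (does not run) a zfs set command that would move the pool to /mnt/poolname instead. The function names are made up; the paths are the ones from this thread.

```bash
#!/usr/bin/env bash
# Sketch: detect mountpoints under Unraid array disk paths (/mnt/disk1, /mnt/disk2, ...).
is_array_disk_path() {
    case "$1" in
        /mnt/disk[0-9]*) return 0 ;;
        *) return 1 ;;
    esac
}

# Print a suggested command rather than changing anything.
suggest_mountpoint() {
    local pool="$1" current="$2"
    if is_array_disk_path "$current"; then
        echo "zfs set mountpoint=/mnt/$pool $pool"
    else
        echo "ok: $current"
    fi
}

suggest_mountpoint poolname /mnt/disk1/pool_name
```

The current mountpoint can be read with zfs get mountpoint poolname and passed in as the second argument.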

-

On 2/23/2023 at 12:37 AM, wout4007 said:

hi i have a problem every time I restart my server my mount point disappears. does someone know how to solve this?

Does the pool have a mountpoint configured?

zfs get mountpoint poolname

-

11 hours ago, wout4007 said:

hi i am new at zfs but have managed until this point. I have zfs pool set up and a mountpoint to an unraid share but every time I restart the server my data disappears. but in the command line it still says it is mounted at that location.

can someone please help me

I don't know what method people are using to try and have Unraid's own array system mount ZFS shares, but I don't believe that is supported.

If you're happy to have an open share you can add something like this in /boot/config/smb-extra.conf

    [sharename]
    path = /mnt/poolname/dataset
    comment = share comment
    browseable = yes
    public = yes
    writeable = yes

(edit: ZFS support is coming to Unraid soon and presumably this will include creating shares in the GUI for ZFS pools/datasets)

-

5 hours ago, Xxharry said:

This is from the SMART test. does that yellow line mean the disk is buggered?

Depends on your risk profile. Your disk may fail soon; on the other hand, I've had disks continue to operate for tens of thousands of hours with a handful of "reported uncorrect" errors.

-

2 hours ago, nathan47 said:

I've now tried resilvering a drive in my pool twice now, and this is the second time I've run into this error:

Any ideas? I'd love to finish the resilver, but after this it seems that all i/o to the pool is hung and ultimately leads to services and my server hanging.

    Jan 10 20:53:59 TaylorPlex kernel: BUG: unable to handle page fault for address: 0000000000003da8
    Jan 10 20:53:59 TaylorPlex kernel: #PF: supervisor write access in kernel mode
    Jan 10 20:53:59 TaylorPlex kernel: #PF: error_code(0x0002) - not-present page

Potentially bad memory:

-

15 minutes ago, Marshalleq said:

That may then lead me to think that you have a similar issue if you have a lot of entries - though I could be completely off here as I'm just guessing. But it's likely worth googling why you might get this in your log and can it happen when ZFS is detecting errors.

I don't think it's indicative of a faulty pool; something is issuing a command to import (bring online and mount) all detected zfs pools. I think 1WayJonny's point is that there could be situations where a plugin randomly issuing a command to import all ZFS pools might not be desirable.

-

7 minutes ago, Marshalleq said:

I assume by your post this is just printing live in the console?

You can check this with:

zpool history | grep import

I have several of these entries, but none since around 6 months ago, and those may have actually been me running the commands. I don't use these plugins, but I've just installed zfs-companion to see if that's causing these commands to be run.

-

5 hours ago, miketew said:

in this case, unraid's ZFS plugin essentially renders part of unraid's draw (mix and match disks) somewhat moot, as it behaves just like a normal ZFS zpool with the inability to scale out or scale up. is that right?

The ZFS plugin isn't intended to replace the Unraid array (with its flexibility to mix and match disks), but rather to replace the functionality otherwise provided by the cache drive/pool (ie. BTRFS)

When ZFS is officially supported in Unraid (which has been hinted at), that will still be the case.

edit: That said, ZFS raidz expansion is now a thing, and there has always been the option to fairly easily expand arrays with mirrored pairs (which is generally regarded as the best pool topology)

-

1 hour ago, Defendos said:

When setting those mount points does that also mean that i have to first create the corresponding smb/nfs shares in unraid to link them to my zfs pool/disks or will the shares show up in windows explorer for example when i create the mounting points as you have described?

The Unraid "Shares" GUI is really designed for sharing Unraid array/pools. I would also recommend sharing a ZFS dataset using smb-extra.conf (or zfs set) rather than trying to do this in the Unraid Shares GUI.

If you want a share that's open to anonymous (unauthenticated) access on your network:

chown nobody:users /mnt/poolname/dataset

chmod 777 /mnt/poolname/dataset

In /boot/config/smb-extra.conf add a new share:

    [sharename]
    path = /mnt/poolname/dataset
    comment = this is a comment
    browseable = yes
    public = yes
    writeable = yes

Restart samba service to load the new config

/etc/rc.d/rc.samba restart

sharename should now be visible at \\unraidhostname or \\unraid.ip.address
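
If you'd rather script the config step, here is a minimal sketch that appends the stanza to a config file only when it isn't already there. The function name is made up, and it takes the config file path as an argument so you can try it on a scratch file first. It does not run the chown/chmod or restart samba.

```bash
#!/usr/bin/env bash
# Sketch: append a public share stanza to an smb-extra.conf-style file,
# skipping the write if a [name] section is already present.
add_public_share() {
    local conf="$1" name="$2" path="$3"
    if [ -f "$conf" ] && grep -qF "[$name]" "$conf"; then
        echo "share [$name] already present in $conf"
        return 0
    fi
    cat >> "$conf" <<EOF
[$name]
    path = $path
    browseable = yes
    public = yes
    writeable = yes
EOF
    echo "added [$name] to $conf"
}

# Example (not run here):
#   add_public_share /boot/config/smb-extra.conf sharename /mnt/poolname/dataset
```

Running it twice with the same share name leaves a single stanza in the file, so it should be safe to re-run.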

-

On 10/13/2022 at 11:34 PM, ph0b0s101 said:

Any chance for english translation or written instructions?

You can turn on closed captions and then enable translation to English in the video settings. It was pretty easy to follow:

Thanks for this @UnRAID_ES

-

38 minutes ago, Valiran said:

No, what I call a high bitrate is more like 25-30MB/sec

50Mbit/sec = ~6.25MB/sec <- typical 4K Bluray

edit: it's typically a bit higher than this: https://en.wikipedia.org/wiki/Ultra_HD_Blu-ray

Still, the array is probably not your issue with Plex + 4K
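
For reference, the conversion is just a factor of 8 (bits per byte). A quick sketch, with made-up function names:

```bash
#!/usr/bin/env bash
# Convert megabits per second to megabytes per second (8 bits per byte).
mbit_to_mbyte() {
    awk -v mbit="$1" 'BEGIN { printf "%.2f\n", mbit / 8 }'
}

# Convert megabytes per second to megabits per second.
mbyte_to_mbit() {
    awk -v mbyte="$1" 'BEGIN { printf "%.0f\n", mbyte * 8 }'
}

mbit_to_mbyte 50   # prints 6.25
mbyte_to_mbit 25   # prints 200
mbyte_to_mbit 30   # prints 240
```

So 25-30MB/sec would be 200-240Mbit/sec, several times the 50Mbit/sec figure above.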

-

52 minutes ago, Valiran said:

Hello!

Plex metadata and stuff were on cache only.

Plex libraries were on unraid array and that's where I had perf issues, I barely could direct play 1 4k movie, and 4k movies with high bitrate were always buffering that's why I moved to Xpenology that works as expected EXCEPT that HDD hibernation doesn't work so HDD always spin and power consumption is high

But that was like 2 years ago, I don't know if Unraid Arrays are better now!

A high bitrate 4K file is likely to be around 5-10MB/sec, I doubt this was an issue with array performance. Plex has always struggled with 4K where transcoding is required. Worth another try, things may have improved.

ZFS plugin for unRAID

in Plugin Support

Posted

Once installed you will need to restart Unraid. I think it will automatically attempt to mount any ZFS pools found on startup.