tr0910

-

Posts

1449 -

Posts posted by tr0910

-

-

Thanks. The best advice when something goes wrong is to take a deep breath and do nothing until you've thought it all through. I should have this backed up, but it will be difficult to access. I'll have to think carefully...

-

I have been doing this for over 10 years with unRaid and this is my first big user error. I was moving a bunch of full array disks between servers and instead of recalculating parity, I made a mistake and unRaid started wiping the disks.

These 5 disks are all XFS. I only let it run for less than 10 seconds, but that was enough to wipe the file system, and now unRaid reports them as unmountable. I would really like to bring back the XFS file system.

Does anyone have any suggestions? (while I beat myself with a wet noodle)

-

On 7/29/2021 at 10:41 AM, glennv said:

Cool. It's the test of all tests. If ZFS passes this with flying colours, you will be a new ZFS fanboy, I would say

I am a total ZFS fanboy and proud of it

Keep us posted

@jortan After a week, this dodgy drive had only got up to 18% resilvered, and I got fed up with the process. I dropped a known good drive into the server and pulled the dodgy one. This is what it said:

    zpool status
      pool: MFS2
     state: DEGRADED
    status: One or more devices is currently being resilvered. The pool will
            continue to function, possibly in a degraded state.
    action: Wait for the resilver to complete.
      scan: resilver in progress since Mon Jul 26 18:28:07 2021
            824G scanned at 243M/s, 164G issued at 48.5M/s, 869G total
            166G resilvered, 18.91% done, 04:08:00 to go
    config:

            NAME                       STATE     READ WRITE CKSUM
            MFS2                       DEGRADED     0     0     0
              mirror-0                 DEGRADED     0     0     0
                replacing-0            DEGRADED   387 14.4K     0
                  3739555303482842933  UNAVAIL      0     0     0  was /dev/sdf1/old
                  5572663328396434018  UNAVAIL      0     0     0  was /dev/disk/by-id/ata-WDC_WD30EZRS-00J99B0_WD-WCAWZ1999111-part1
                  sdf                  ONLINE       0     0     0  (resilvering)
                sdg                    ONLINE       0     0     0

    errors: No known data errors

It passed the test, 4 hours later we have this:

    zpool status
      pool: MFS2
     state: ONLINE
    status: Some supported features are not enabled on the pool. The pool can
            still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(5) for details.
      scan: resilvered 832G in 04:09:34 with 0 errors on Tue Aug 3 07:13:24 2021
    config:

            NAME        STATE     READ WRITE CKSUM
            MFS2        ONLINE       0     0     0
              mirror-0  ONLINE       0     0     0
                sdf     ONLINE       0     0     0
                sdg     ONLINE       0     0     0

    errors: No known data errors
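The "04:08:00 to go" figure in the resilver output above can be roughly reproduced from the `scan:` numbers as (total - issued) / issue rate. The sketch below is only an illustration: the helper names `eta_seconds` and `fmt_duration` are made up here, and it assumes the `G`, `M` and `K` suffixes printed by `zpool status` are binary units. The issue rate is an average, so the result is a ballpark figure at best.

```shell
#!/bin/sh
# Rough resilver ETA from `zpool status` figures: (total - issued) / issue rate.
# Assumption: G/M/K suffixes are binary units (GiB, MiB/s, KiB/s).

# eta_seconds TOTAL_G ISSUED_G RATE   (RATE per second, e.g. "48.5M" or "473K")
eta_seconds() {
    awk -v total="$1" -v issued="$2" -v rate="$3" 'BEGIN {
        unit  = substr(rate, length(rate))
        value = substr(rate, 1, length(rate) - 1) + 0
        if (unit == "K")      { mib_per_s = value / 1024 }
        else if (unit == "M") { mib_per_s = value }
        else if (unit == "G") { mib_per_s = value * 1024 }
        else                  { print "unknown"; exit 1 }
        if (mib_per_s <= 0)   { print "unknown"; exit 1 }
        # Remaining GiB converted to MiB, divided by MiB per second.
        printf "%d\n", (total - issued) * 1024 / mib_per_s
    }'
}

# fmt_duration SECONDS -> "Nd HHh MMm"
fmt_duration() {
    awk -v s="$1" 'BEGIN {
        printf "%dd %02dh %02dm\n", s / 86400, (s % 86400) / 3600, (s % 3600) / 60
    }'
}

# Known good drive: 164G issued at 48.5M/s out of 869G total.
fmt_duration "$(eta_seconds 869 164 48.5M)"    # prints: 0d 04h 08m

# Dodgy drive, from the earlier status on this page: 58.6G issued at 473K/s.
fmt_duration "$(eta_seconds 869 58.6 473K)"    # about 20 days
```

At the 473K/s rate reported for the dodgy drive, the same arithmetic gives roughly three weeks, which is in line with how slowly that resilver was progressing.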

-

3 hours ago, glennv said:

Should finish in a week or so

Yep, and just as it passed 8% we had a power blink from a lightning storm, and I intentionally did not have this plugged into the UPS. It failed gracefully, but restarted from zero. I have perfect drives that I will replace this with, but why not experience all of ZFS's quirks while I have the chance? If the drive fails during resilvering I won't be surprised. If ZFS can manage resilvering without getting confused on this dingy hard drive, I will be impressed.

-

@glennv @jortan I have installed a drive that is not perfect and started the resilvering (this drive has some questionable sectors). Might as well start with a worst possible case and see what happens if resilvering fails. (grin)

I have docker and VM running from the degraded mirror while the resilvering is going on. Hopefully this doesn't confuse the resilvering.

How many days should a resilver take to complete on a 3TB drive? It's been running for over 24 hours now.

    zpool status
      pool: MFS2
     state: DEGRADED
    status: One or more devices is currently being resilvered. The pool will
            continue to function, possibly in a degraded state.
    action: Wait for the resilver to complete.
      scan: resilver in progress since Mon Jul 26 18:28:07 2021
            545G scanned at 4.30M/s, 58.6G issued at 473K/s, 869G total
            49.1G resilvered, 6.75% done, no estimated completion time
    config:

            NAME                       STATE     READ WRITE CKSUM
            MFS2                       DEGRADED     0     0     0
              mirror-0                 DEGRADED     0     0     0
                replacing-0            DEGRADED     0     0     0
                  3739555303482842933  FAULTED      0     0     0  was /dev/sdf1
                  sdi                  ONLINE       0     0     0  (resilvering)
                sdf                    ONLINE       0     0     0

    errors: No known data errors

-

(bump) Has anyone done the zpool replace? What is the unRaid syntax for the replaced drive? Zpool status is reporting strange device names above.
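For reference, the general OpenZFS form is `zpool replace pool old-device [new-device]`, so the pool name comes first. The long number in the status output is the GUID that ZFS shows in place of a device it can no longer find, and it can be used as the old-device argument. The sketch below only prints the command rather than running it. The new device `/dev/sdi` is taken from the posts on this page, the helper name `replace_cmd` is made up for illustration, and nothing here has been checked against the unRaid ZFS plugin specifically.

```shell
#!/bin/sh
# Dry-run sketch: build and print the replace command, do not execute it.
POOL="MFS2"                        # pool name from the status output
OLD_GUID="3739555303482842933"     # GUID shown for the missing mirror member
NEW_DEV="/dev/sdi"                 # replacement disk (assumed from this thread)

# replace_cmd POOL OLD NEW -> prints "zpool replace POOL OLD NEW"
replace_cmd() {
    printf 'zpool replace %s %s %s\n' "$1" "$2" "$3"
}

replace_cmd "$POOL" "$OLD_GUID" "$NEW_DEV"
# After running the printed command by hand, `zpool status MFS2`
# reports the resilver progress.
```

Because `sdX` letters can change between reboots, a `/dev/disk/by-id/...` path for the new disk may be a more stable choice than `/dev/sdi`.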

-

unRaid has a dedicated following, but there are some areas of general data integrity and security that unRaid hasn't developed as far as it has Docker and VM support. I would like OpenZFS baked in at some point, and I have seen some interest from the developers, but they have to get around the Oracle legal bogeyman.

I have seen no discussion around snapraid.

Check out ZFS here.

-

I need to do a zpool replace but what is the syntax for using with unRaid? I'm not sure how to reference the failed disk?

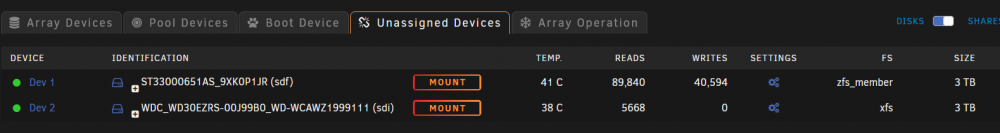

I need to replace the failed disk without trashing the ZFS mirror. A 2 disk mirror has dropped one device. Unassigned devices does not even see the failing drive at all any more. I rebooted and swapped the slots for these 2 mirrored disks, and the same problem remains. The failure follows the missing disk.

    zpool status -x
      pool: MFS2
     state: DEGRADED
    status: One or more devices could not be used because the label is missing or
            invalid. Sufficient replicas exist for the pool to continue
            functioning in a degraded state.
    action: Replace the device using 'zpool replace'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
      scan: scrub repaired 0B in 03:03:13 with 0 errors on Thu Apr 29 08:32:11 2021
    config:

            NAME                     STATE     READ WRITE CKSUM
            MFS2                     DEGRADED     0     0     0
              mirror-0               DEGRADED     0     0     0
                3739555303482842933  FAULTED      0     0     0  was /dev/sdf1
                sdf                  ONLINE       0     0     0

My ZFS pool had 2 x 3TB Seagate spinning rust for VMs and Docker. Both the VMs and Docker seem to continue to work with a failed drive, but aren't working as fast. I have installed another 3TB drive that I want to replace the failed drive with.

Here are the unassigned devices, showing the current ZFS disk and a replacement disk that was previously in the array. I will do the zpool replace, but what is the syntax for using it with unRaid? Is it

    zpool replace 3739555303482842933 sdi

-

9 hours ago, Hoopster said:

Well, since there is a multi-page thread dedicated to that topic that is over 5 years old, I assumed there was interest in passing through an iGPU and many were doing so.

Whether or not it is needed/required depends on the use case as you say and, so far, I have not found a use case for it.

Yeah, same here.

-

1 hour ago, Hoopster said:

Sorry, I do not pass through the iGPU to a VM. I use RDP and/or VNC for Windows VM access as it is more or less just for testing.

There are many who do pass through an Intel iGPU and it is possible but I cannot offer any tips on this unfortunately.

Pass through of iGPU is not often done, and not required for most Win10 use via RDP. Mine was not passed through.

-

On 7/5/2021 at 10:26 AM, learnin2walk said:

I also went with this combo. I'm interested in using the iGPU with a Windows 10 VM. Is this possible? Do I need a second/dummy card? @Hoopster any advice on this?

I don't have this combo, but a similar one. Windows 10 will load and run fine with the integrated graphics on mine. I'm using Windows RDP for most VM access.

The only downside is that video performance is nowhere near bare metal. It is perfectly usable for Office applications and Internet browsers, and totally fine for programming, but weak for anything where you need quick response from keyboard and mouse, such as gaming. The upside is that RDP runs over the network, so there is no separate cabling for video or mouse. For bare metal performance a dedicated video card for each VM is required, and then you need video and keyboard/mouse cabling.

-

Every drive has a death sentence.

But just like Mark Twain, "the rumors of my demise are greatly exaggerated". It's not so much the number of reallocated sectors that is worrying, but whether the drive is stable and is not adding more reallocated sectors on a regular basis. Use it with caution (maybe run a second preclear to see what happens), and if it doesn't grow any more bad sectors, put it to work.

I have had 10 year old drives continue to perform flawlessly, and I have had them die sudden and violent deaths much younger. Keep your parity valid, and also back up important data separately. Parity is not backup.

-

11 hours ago, Marshalleq said:

Ah yeah, that annoying app data folder that sometimes insists on creating itself in a location not set in the defaults!

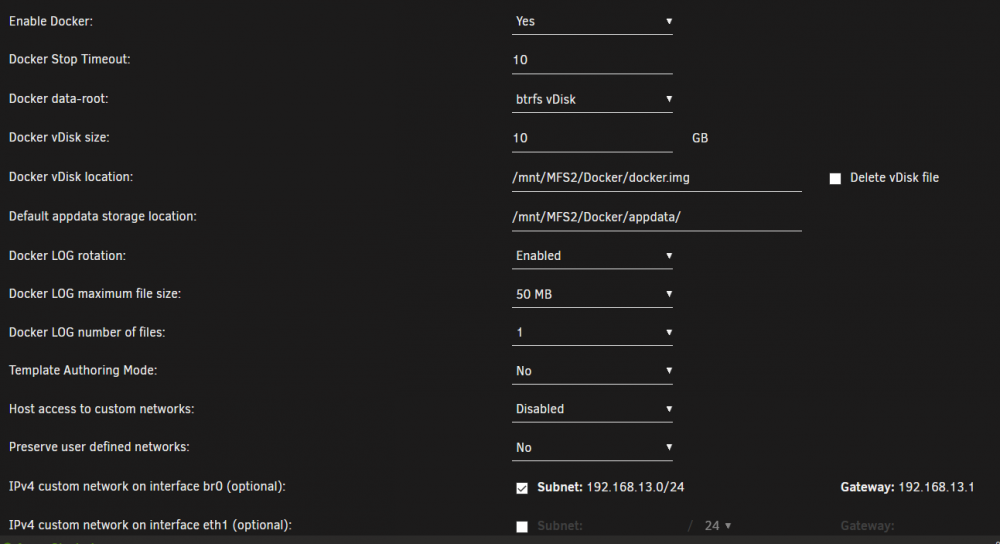

I've attempted to move the docker image to ZFS along with appdata.

VM's are working. Docker refuses to start. Do I need to adjust the BTRFS image type?

Correction, VM's are not working once the old cache drive is disconnected.

-

2 hours ago, Marshalleq said:

That doesn't seem to make a lot of sense does it. I assume you know about zpool upgrade and that shouldn't be impacting this. Assuming you're using the plugin, perhaps just uninstall and reinstall the zfs plugin and reboot. Also make sure this is the latest unraid version as recent versions were problematic. Also, if your docker is in a folder rather than in an image I would suggest trying it as an image. Docker folders on ZFS seem to be hit and miss depending on your luck. Can't think of anything else to try right now.

ZFS was not responsible for the problem. I have a small cache drive, and some of the files that Docker and the VMs need at startup still come from there. This drive didn't show up on boot. Powering down and making sure this drive came up resulted in the VMs and Docker behaving normally. I need to get all appdata files moved to ZFS and off this drive, as I am not using it for anything else.

-

I have had one server on 6.9.2 since initial release and a pair of ZFS drives are serving Docker and VM's without issue.

I just upgraded a production server from 6.8.3 to 6.9.2, and now Docker refuses to start and the VMs on the ZFS pool are not available.

Zpool status looks fine

      pool: MFS2
     state: ONLINE
    status: Some supported features are not enabled on the pool. The pool can
            still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(5) for details.
      scan: scrub repaired 0B in 02:10:49 with 0 errors on Fri Mar 26 10:24:18 2021
    config:

            NAME        STATE     READ WRITE CKSUM
            MFS2        ONLINE       0     0     0
              mirror-0  ONLINE       0     0     0
                sdp     ONLINE       0     0     0
                sdo     ONLINE       0     0     0

The mount points for Docker are visible in /mnt and everything looks similar to the working 6.9.2 server. This server has been running 6.8.3 for an extended period of time with ZFS working fine.

-

22 minutes ago, ConnectivIT said:

Unless I'm misunderstanding something, I have a disk with Reported Uncorrect sectors in my main unRAID array and unRAID does not appear to reset these during parity checks?

This information can be useful to determine whether this was a one-off event or whether the disk is continuing to degrade.

If I understand you right, you are suggesting that I just monitor the error and don't worry about it. As long as it doesn't deteriorate, it's no problem. Yes, this is one approach. However, if these errors are spurious and not real, resetting them to zero is also OK. I take it there is no unRaid parity check equivalent for ZFS? (In my case, the disk with these problems is generating phantom errors. The parity check just confirms that there are no errors.)

-

I have a 2 disk ZFS mirror being used for VMs on one server. These are older 3TB Seagates, and one is showing 178 pending and 178 uncorrectable sectors. An unRaid parity check usually finds these errors are spurious and resets everything to zero. Is there anything similar to do with ZFS?
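The closest ZFS counterpart to a parity check is a scrub, which reads every allocated block in the pool and verifies it against its checksum. The result appears on the `scan:` line of `zpool status`, as in the outputs elsewhere on this page. The sketch below pulls the error count out of that line. The helper name `scrub_error_count` is made up for illustration, and whether the drive's SMART pending count drops after a scrub depends on the drive firmware, not on ZFS.

```shell
#!/bin/sh
# scrub_error_count: read `zpool status` text on stdin and print the error
# count from a completed scrub or resilver ("... with N errors ...").
scrub_error_count() {
    sed -n 's/^[[:space:]]*scan:.* with \([0-9][0-9]*\) errors.*/\1/p'
}

# Typical use on a live system (not executed here):
#   zpool scrub MFS2                        # start a scrub of the pool
#   zpool status MFS2 | scrub_error_count   # once the scrub has finished

# Demonstration using a scan line quoted on this page:
printf '  scan: scrub repaired 0B in 02:10:49 with 0 errors on Fri Mar 26 10:24:18 2021\n' \
    | scrub_error_count
```

A non-zero count, or growing READ/WRITE/CKSUM columns in the `config:` section, would suggest the errors are real rather than phantom.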

-

Thx, using ZFS for VMs and Docker now. Yes, it's good. The only issue is updating ZFS when you update unRaid.

Regarding unraid and enterprise, it seems that the user base is more the bluray and DVD hoarders. There are only a few of us that use unRaid outside of this niche. I'll be happy when ZFS is baked in.

-

A few months ago, there was chatter about ZFS being part of unRaid supported file systems. @limetech was expressing frustration with btrfs. What is the current status of this?

-

Likely the top one will perform best if you can get all the cores busy, but I have never used this Dell. What processor does it have?

The second machine has higher clock rate so individual core performance will be better. It will really depend on your planned usage, and whether your apps will be able to utilize all the cores. Often, you find that some utilities or apps are single core only, so having massive numbers of cores is useless for that app.

I am using Xeon 2670v1 based servers and find that sticking a second cpu in with another 16 cores is not that much of a benefit for what I do.

-

8 hours ago, Marshalleq said:

Yeah, I used to run it that way, but right now the performance aspects of using ZFS will keep me from going back there for a while. I really forgot what proper storage was meant to perform like.

Well, in your case you want all your storage in the fast zone. I also want to have ZFS continue to work and VMs continue to run even if the unRaid array is stopped and restarted. Then unRaid will be perfectly able to run our firewalls and pfSense without the "your firewall shuts down if the array is stopped" problem.

-

4 hours ago, Marshalleq said:

Hey, I just wanted to share my experiences with ZFS on unraid now that I've migrated all my data (don't use the unraid array at all now).

The big takeaway is the performance improvements and simplicity are amazing.

I've been running my VMs off a pair of old 2TB spinners using ZFS. I have been amazed that it just works, and I don't notice the slowness of the spinners when running VMs. The reason I switched was for the snapshot backups. I love the ability to just roll my Windows VM back to a known good snapshot. I look forward to having ZFS baked in more closely to unRaid.

I still have one VM on the SSD with BTRFS, but I can't see any speed benefit to the SSD compared with the ZFS spinners. I have a Xeon 2670 with 64GB ECC RAM. Less RAM and/or non-ECC RAM may not be such a good option.

I can see benefits via a 2 stage storage system in the future, with ZFS for the speed, and unRaid array for the near line storage that can be spun down most of the time.
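The snapshot workflow mentioned above comes down to two commands, `zfs snapshot dataset@name` and `zfs rollback dataset@name`. The sketch below only prints them. The dataset name `MFS2/vm/win10` and the label `known-good` are hypothetical, since the posts do not say how the VM storage is laid out, and the helper names are made up for illustration.

```shell
#!/bin/sh
# Dry-run sketch: print the snapshot and rollback commands for one VM dataset.
DATASET="MFS2/vm/win10"   # hypothetical dataset holding the VM disk
LABEL="known-good"        # hypothetical snapshot label

snapshot_cmd() { printf 'zfs snapshot %s@%s\n' "$1" "$2"; }
rollback_cmd() { printf 'zfs rollback %s@%s\n' "$1" "$2"; }

snapshot_cmd "$DATASET" "$LABEL"   # take this while the VM is in a good state
rollback_cmd "$DATASET" "$LABEL"   # run later, with the VM shut down
```

A rollback discards everything written to the dataset since the snapshot. Rolling back past newer snapshots needs `zfs rollback -r`, which destroys those newer snapshots.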

-

So the process for those who have 6.8.3 and some version of your plugin is update ZFS plugin then update unRaid to 6.9rc1 then reboot?

My unRaid only finds an update to the ZFS plugin from November, not your December one.

-

Here is the new diagnostics file: robin-diagnostics-20201130-0353.zip. Disk is WDC - 7804.

I clobbered a bunch of disks

in General Support

Posted

xfs_repair was able to find a secondary superblock on 5 of the 6 affected disks. But only about 2/3 of the files were recovered, and they were all put in lost+found. It will be a dog's breakfast to get anything good out of it. But at least we don't have a total failure.

I do have another backup, which I will be pulling from far away, that should be able to recover all files.

Moral of the story: your backups matter. Second moral: human error is a much bigger source of data loss than anything else.
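For anyone in the same spot, `xfs_repair -n` runs in no-modify mode and only reports what it would change, which makes it a safer first step than a real repair. Files that lose their directory entry end up in `lost+found`, named by inode number. The sketch below prints the check command and counts recovered files. The device `/dev/md1` and the mount point in the comment are hypothetical, the helper names are made up for illustration, and which device node is correct on unRaid should be confirmed against the unRaid documentation.

```shell
#!/bin/sh
# check_cmd DEVICE -> prints a read-only xfs_repair check command (not executed).
check_cmd() {
    printf 'xfs_repair -n %s\n' "$1"
}

# count_orphans DIR -> number of regular files under DIR (e.g. a lost+found).
count_orphans() {
    find "$1" -type f | wc -l | tr -d ' '
}

check_cmd /dev/md1    # hypothetical device node

# After a real repair and a successful mount (not executed here):
#   count_orphans /mnt/disk1/lost+found
```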