niietzshe

-

Posts

71 -

Posts posted by niietzshe

-

-

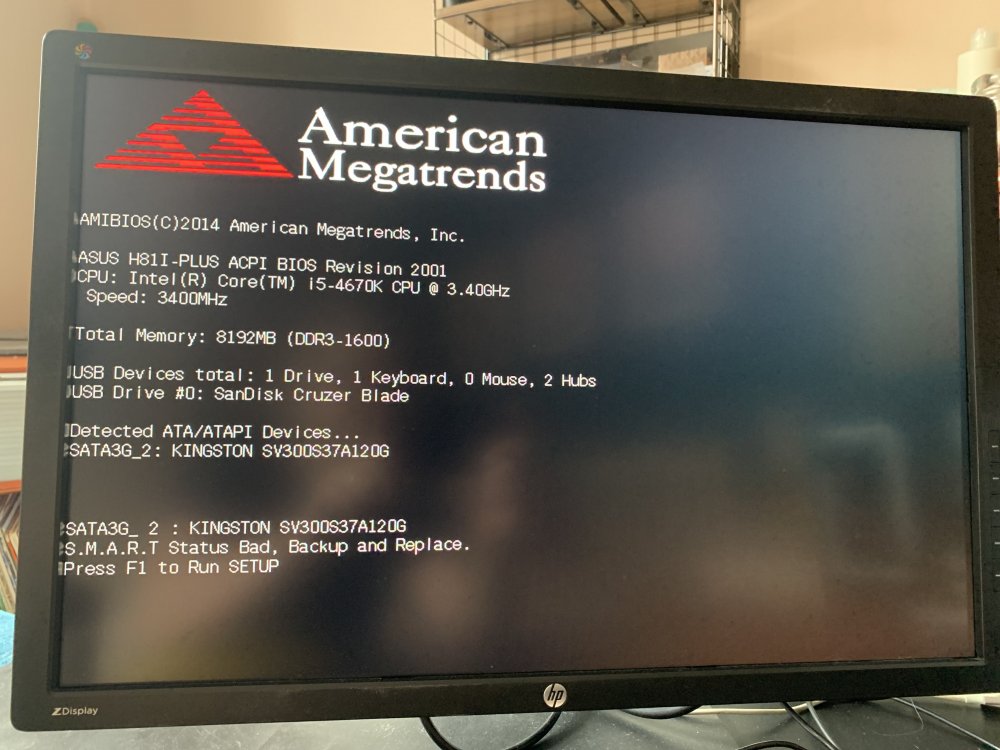

Thanks all. I mean I’ve had a good run of at least 8 years without a failure, so it’s a good thing I’ve never had to deal with this I guess.

I’ve tried the SSD in multiple ports just to make sure, and it’s certainly knackered. I’ve bought a new SSD and will be shopping for a UPS and a little monitor to go in the cupboard.

Thanks for the advice

Christian

Sent from my iPhone using Tapatalk -

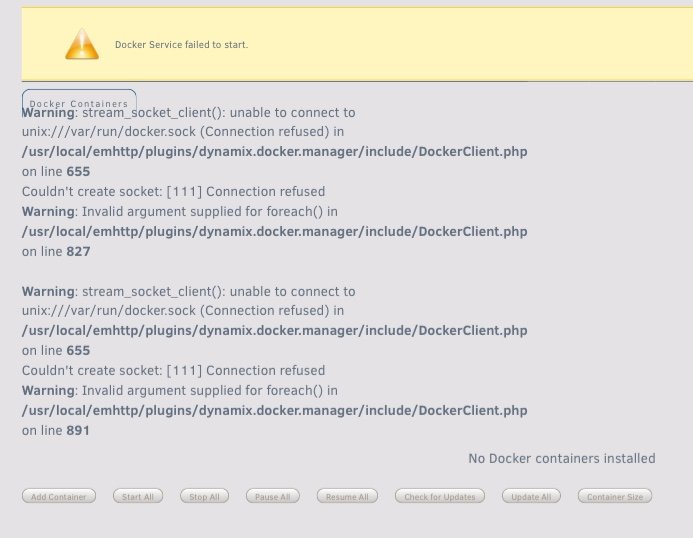

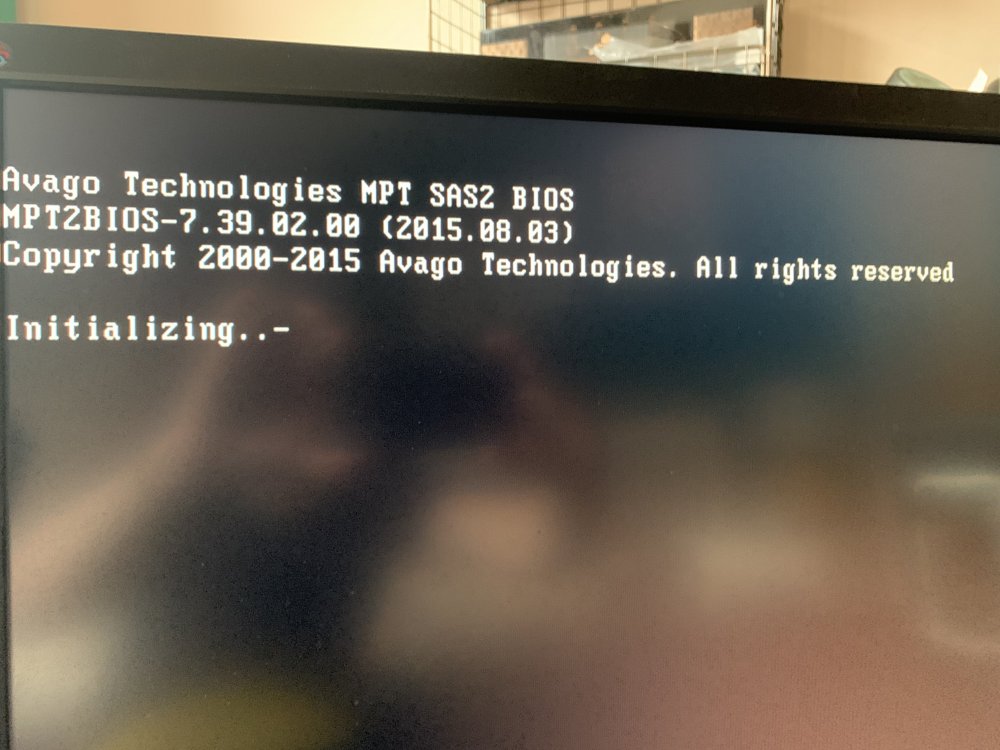

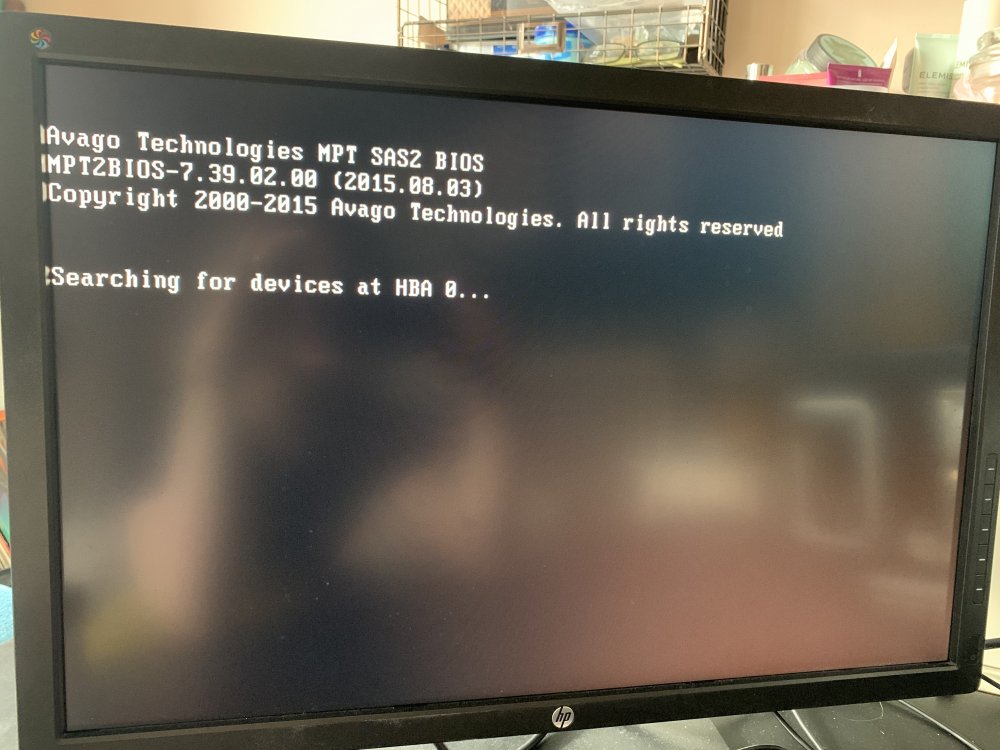

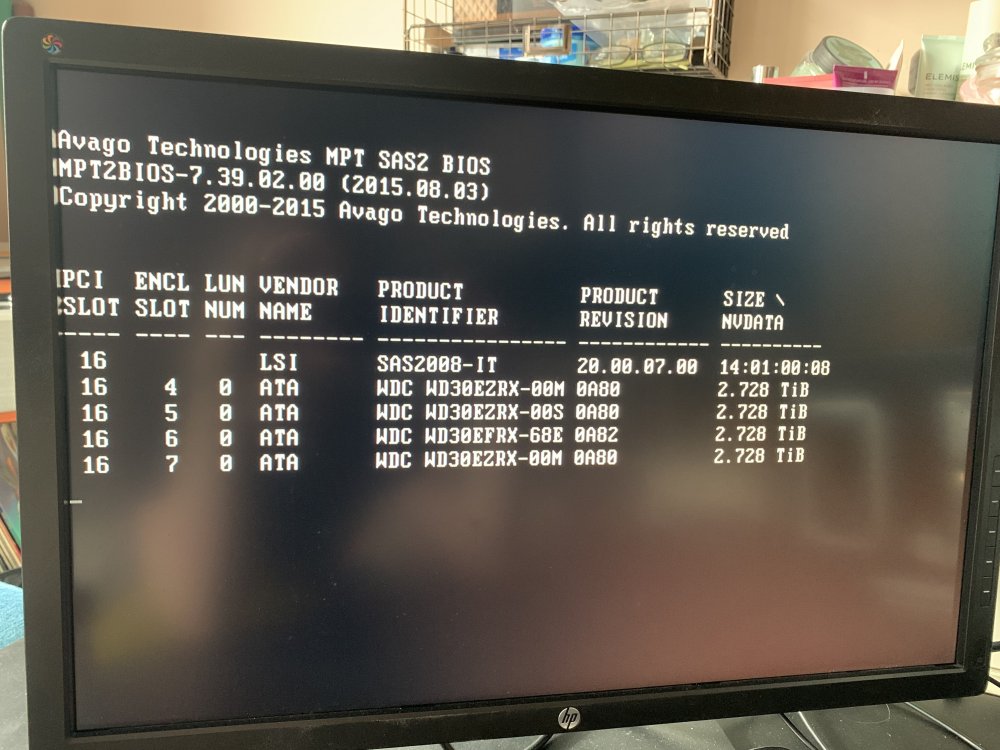

Ohh yeah sure, I know this isn’t Unraid’s fault. I’m just wondering if the BIOS or the HBA is getting in the way, and how to avoid this on a hardware/firmware basis.

-

Thanks Michael. I quickly disconnected the SSD before I ran out and it booted straight into Unraid, so I have ordered a new SSD.

Now here’s a question:

Seeing as Unraid is on the USB flash drive, why didn’t this boot into Unraid, where I would be able to see that it’s a busted drive? I’m thinking of getting a second SSD for redundancy, but this wouldn’t really help me, as a failed drive would still stop Unraid from booting to discover that I need to replace the disk. As it’s totally headless, I don’t see the BIOS boot until I move the server into my office and hook it up to a monitor.

-

Ahhh.

So the Kingston drive is just my stash drive or whatever it’s called. The SSD. It might hold my VM, which will be a pain, but I can sort that out.

I’ll remove it when I get back and see if that helps. I have turned off the SMART detection but that didn’t resolve the issue.

Thanks for the tips. I’ll report back. Time to get a UPS!

Christian

-

Hey all,

My server's been up and running for like 8 years with no real problems. I probably only restarted it once or twice last year; uptime has been great.

The other day we had a power cut and now I can't get it to boot into the UnRaid flash drive automatically. When it fails I can force it to start and everything is fine, but I've had to move the server away from the cupboard to do that and get a monitor connected.

Any tips on how to debug this? I've tried to force it to the SanDisk in the BIOS, but the HBA always takes over and tries to boot from the RAID drives.

Thanks

Christian

-

Thanks for this.

I'd upgraded my CPU to a supported chip and was going to spend money on a full build just to get an extra PCI slot for a graphics card, so this has saved me a lot of bother and money.

C

-

Thanks. I honestly can’t remember, but I’m sure I connected to the header on the motherboard. I’ve checked the ambient temp; it’s 25C a few inches in front of the case.

I’ll check my fans and your suggestions, although it’s a pain to get to the BIOS as I have to rip it all out and connect it to my TV. But I’ll have a go.

I was going to upgrade the case and move to mATX for a graphics card, but apart from Plex hardware decoding, I no longer have much of a need for it (I was going to do some 3D work at home, but now I can just VPN into the studio thanks to Covid). But a bigger/better case would have given me better airflow.

-

No, there isn’t a HEPA filter.

-

Hi all. This is long overdue. My Lian-Li build has had its fans on constantly for years now. I’ve always meant to look into why, but thought it was probably just its environment (in a large cupboard under the stairs). But we recently opened up said cupboard to change its configuration and haven’t noticed any change in temps or noise.

Build:

LianLi q25b

ST30SF PSU

2 Gelid silent pwm fans

ASUS H81I-Plus motherboard

8GB RAM

120GB SSD

4x 3TB drives (WD greens/reds)

i5 4670k

Applications:

usual plex/nzb dockers

home assistant vm

Average drive temps are 45C

CPU is 48C at 6% load

Fans are at 2700 and 860 RPM

I’m open to suggestions. It’s just something I know very little about. If it’s a software tweak, or a hardware change, just throw ideas at me.

When we build up the under stairs again I’m going to have a little area at the top. So I might install in and out vents under there to bring the temps down if I can.

Thanks for your time.

-

I replaced my data card with a Dell PERC H310 in IT mode and my problems seem to be resolved.

Thanks for all the help.

-

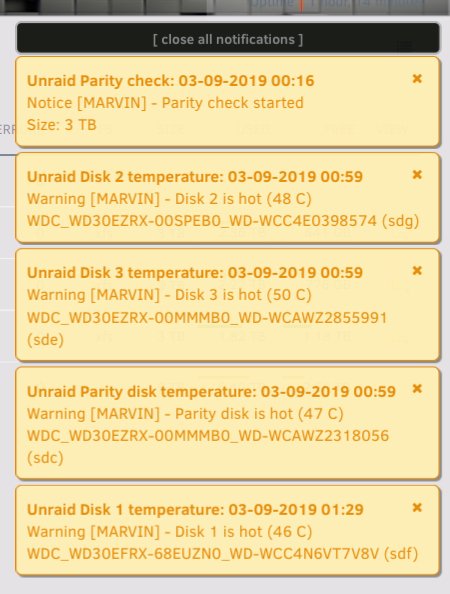

Well, after a night of parity checks I normally have an endless list of notifications telling me all disks have overheated, normally well into the 50s or even 60s sometimes.

This morning was a lot better!

96% done and fans still running at 100%.

I’m still interested in making this better if anyone has any tips/thoughts/comments.

-

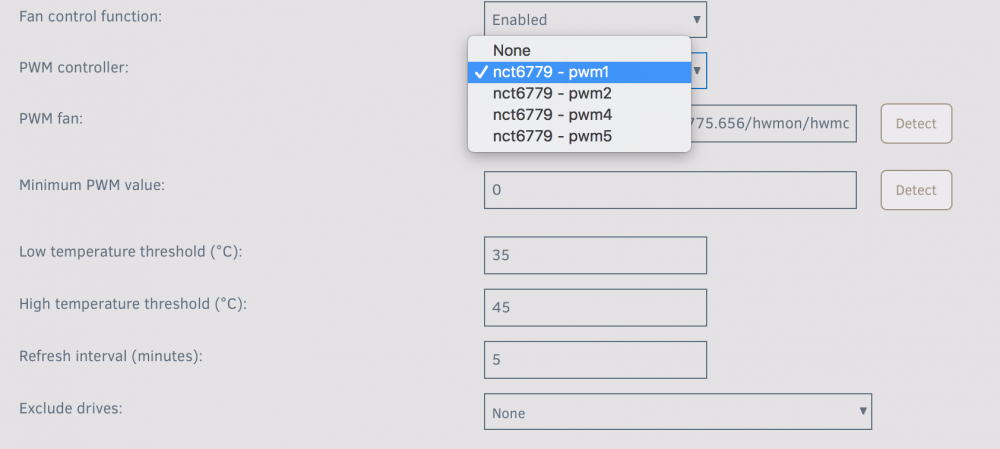

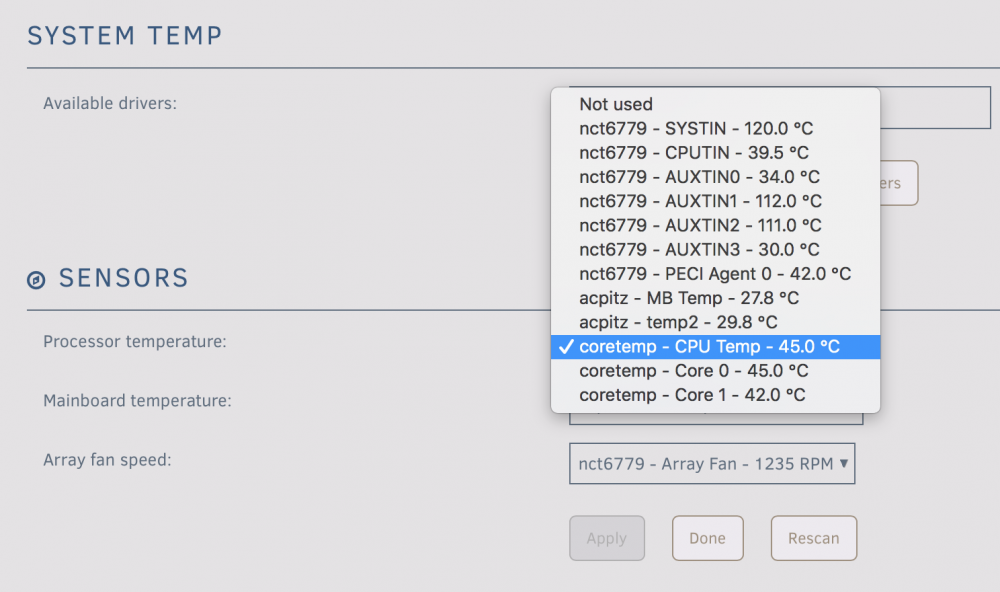

Hey all, sorry, I just do not know anything about PWM fans, so I have to ask some pretty basic questions as I've got hot-running drives. Or had; they might be more stable now.

My motherboard is an Asus H81I-PLUS. I have upgraded the fans to PWM fans and installed the Dynamix temp and fan control plugins. They seem to be working. I have an airflow field on my dashboard now, with one fan running at 1521 RPM and the other at 1236 RPM. I then have a CPU fan, but that's not registering anywhere.

Processor temperature (Pentium G3420) is at 45C.

My drives (4 spinners, 1 SSD) are running at an average of 37C, SSD at 26C.

I think previously my fans just weren't working, so those drives would hit 60C easily under load.

So I just want to double check I have everything set up correctly.

Under Fan Auto Control - PWM controller, I have selected nct6779-pwm1, but there's also pwm2, 4 and 5.

The fan it's found is '/sys/devices/platform/nct6775.656/hwmon/hwmon2/fan1_input' but why only one fan?
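One rough way to check what the kernel itself exposes, independent of the plugin, is to walk the hwmon tree and list every fan tachometer input and PWM output per chip. This is only a sketch, assuming the standard Linux hwmon sysfs layout under `/sys/class/hwmon`; the function name `list_hwmon_fans` is made up for illustration and is not part of Unraid or the Dynamix plugins.

```python
from pathlib import Path


def list_hwmon_fans(root="/sys/class/hwmon"):
    """Map each hwmon device to its chip name, fan inputs (RPM) and PWM outputs.

    Assumes the usual sysfs naming: a `name` file, `fanN_input` files and
    `pwmN` files inside each hwmonX directory.
    """
    report = {}
    for dev in sorted(Path(root).glob("hwmon*")):
        name_file = dev / "name"
        chip = name_file.read_text().strip() if name_file.exists() else "unknown"

        fans = {}
        for fan in sorted(dev.glob("fan*_input")):
            try:
                fans[fan.name] = int(fan.read_text().strip())
            except (OSError, ValueError):
                fans[fan.name] = None  # file present but not readable

        # Keep only the bare pwmN outputs, not pwmN_enable / pwmN_mode etc.
        pwm = sorted(p.name for p in dev.glob("pwm[0-9]*") if p.name[3:].isdigit())

        report[dev.name] = {"chip": chip, "fans": fans, "pwm": pwm}
    return report


if __name__ == "__main__":
    for dev, info in list_hwmon_fans().items():
        print(dev, info["chip"])
        for fan, rpm in info["fans"].items():
            print(f"  {fan}: {rpm} RPM")
        print("  pwm outputs:", ", ".join(info["pwm"]) or "none")
```

If a chip lists several `pwmN` outputs but only one `fanN_input` reports a non-zero speed, that would suggest the other headers either have nothing plugged in or are not getting a tach signal, rather than the plugin missing them.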

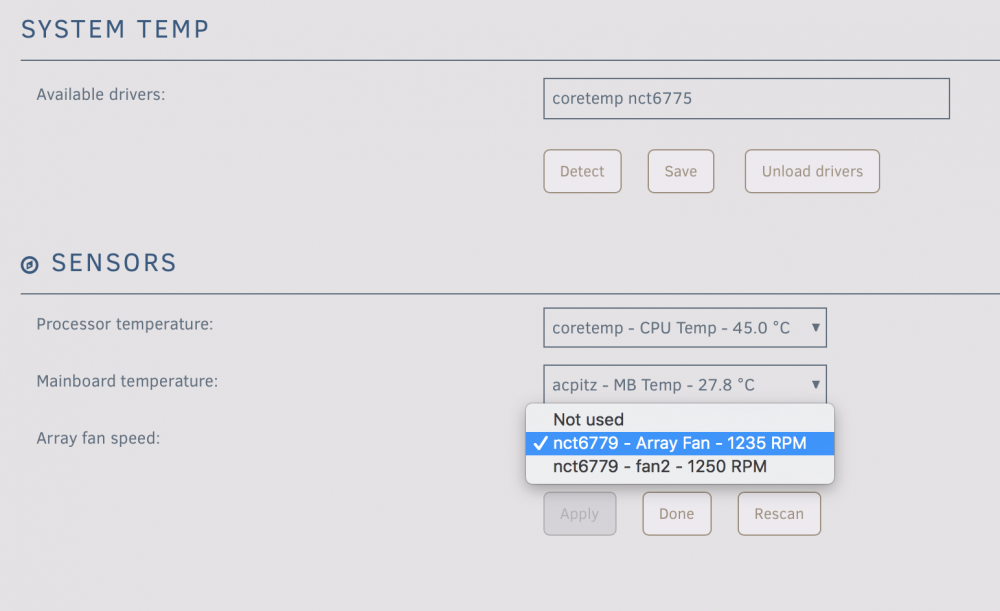

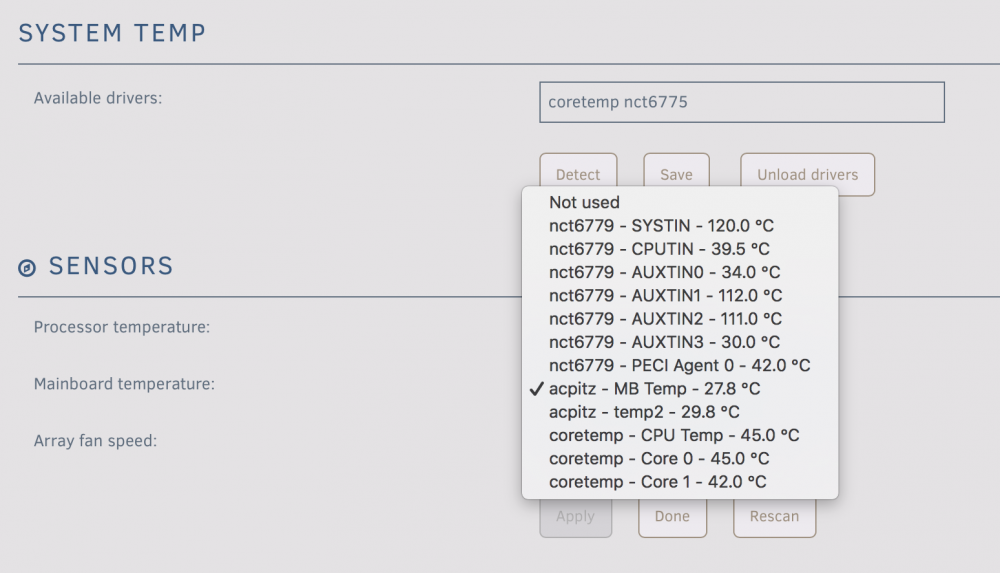

Under the temp plugin, the available drivers are 'coretemp nct6775'.

Processor temp = coretemp - CPU Temp - 44C

Mainboard temp = acpitz - MB Temp - 27.8C

Array fan speed = nct6779 - Array Fan - 1508 RPM

Because these have different options and I'm slightly confused why it's only dealing with one fan, I'm here asking for guidance. Is what I've done correct? Could I change things to make it better?

The case is quite small, a Lian-Li q25B m-itx case.

Additional info:

MB = Asus H81I-PLUS

CPU = Intel Pentium G3420

PSU = SilverStone SST-ST30SF

Fan1 = Gelid Solutions Silent 14 PWM

Fan2 = Gelid FN-PX12-15 Silent

I've included a diagnostic and some screenshots of the drop-down choices, just in case that's helpful.

Thanks for any advice.

Christian

marvin-diagnostics-20190902-2305.zip

-

Thanks again!

I’ll look for an LSI HBA. In the meantime I’ll try moving the SSD to the motherboard if it’s on the card and see if that helps.

-

Great thanks for the info! I was more worried it was a power supply issue.

I can get same day delivery on this:

Semlos 4 Ports Sil3114 PCI Sata Raid Controller Card with 2 Sata Cables https://www.amazon.co.uk/dp/B00L2X6DE6/ref=cm_sw_r_cp_api_i_SqPtDbWJ9X274

It uses a Silicon Image Sil3114 host controller chip.

Or have you got any other suggestions?

Thanks

Christian

-

On 8/9/2019 at 7:03 AM, johnnie.black said:

Syslog is just after rebooting, nothing to see, next time post after the problem happens, and please post the full diagnostics (tools -> diagnostics)

Sorry about that. A reboot resolves everything and I didn't realise the logs would reset; it then took a while overnight to fail again. Here are the full logs. Thanks!

-

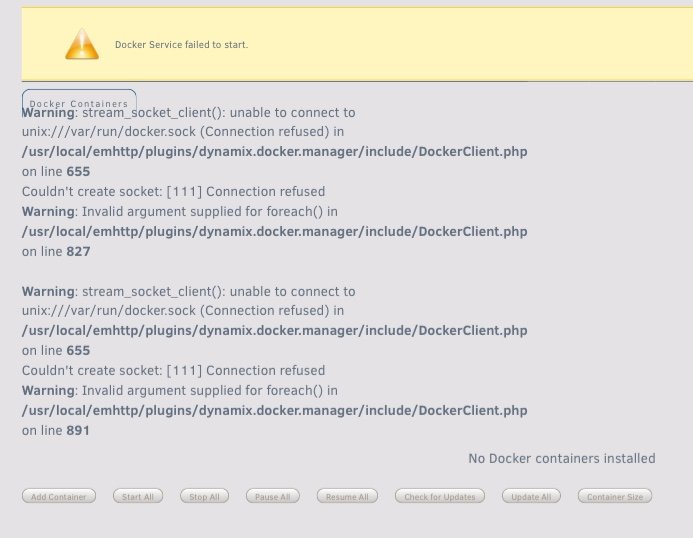

Hey all, I've had a consistent problem for the past 5+ years with UnRaid taking down the cache drive when a parity check happens (monthly). For this reason I've been running a NUC for my home automation dockers, as these need to stay up, or at least be back up by the morning. I'd like to put an i7 in and return everything back to UnRaid, but I'll have to solve this issue first. I'm currently running 6.7.2, but it's been happening for a long time.

I've attached my syslog, it would be great to get this resolved.

Thanks

Christian

-

Consumer user. Been using UnRaid for about 6-7 years. All important backups go here as well as media, my photography workflow and a docker stack for whole-house automation, serving the web UIs, media and various VMs for VFX work.

-

Really?

I've been looking at building a new machine to upgrade an mITX Intel Pentium build. Should I be avoiding Ryzen still?

I was looking at the same motherboard but with a 1600 CPU, for ESXi with Unraid as a guest along with some other VMs and dockers (the most intensive being Plex, of course).

-

+1000009 from me

-

I did a parity check last night just to make sure I wasn’t going mad:

-

Sorry that’s when I start a parity check every month. I’ll check my settings.

-

If parity checks do cause a problem then you can schedule them to only be run at times when the server would normally be idle.

I normally have to reboot after a parity check (3am). It takes dockers down...

There’s enough power in the NUC for what I need, it stays at 40%. I was just wondering if I should start a new path.

-

That’s true,

I’m currently monitoring Plex with Tautulli through Home Assistant to see what’s going on.

To be fair, the easiest thing to do is replace my router with an R7800, and put an Apple TV upstairs to replace the Nexus Player and hardwire it. Then I probably wouldn’t be transcoding at all.

-

Hey all, bear with me while I slightly ramble.

I've been running UnRaid on a mini-ITX board in a LianLi Q25b case for about 5 years. It's a Pentium 3.2GHz build with 8GB DDR3 RAM, 3x3TB data, 1x3TB parity and a 120GB SSD for cache. I run a number of dockers, of which Plex is the biggie, and no VMs. I also run an Intel NUC Celeron on Ubuntu for home automation. This is also up 24/7 and runs lots of dockers like Home-Assistant, Node-RED, Mosquitto, MariaDB etc.

The reason I moved all that stuff to a NUC instead of keeping it in UnRaid was because of parity checks taking it down, which wasn't good for the WAF.

I'm pretty happy with the setup. I do see Plex spiking the CPU, but I mainly just don't like having two places to maintain dockers, and the NUC won't run Plex very well. I also need to update my router (a Linksys E4200... old!).

So I started thinking I could upgrade my UnRaid server, run Proxmox, virtualize UnRaid as a guest, run my dockers in another VM and even pfSense in another. This would require me to rebuild: get a Fractal Node 8, new MB, new CPU, probably another 8GB of RAM and a new NIC card. I'm hoping that would be all.

What do you guys/gals think of this approach? Would you do it? Is it a big no-no bringing it all together into one? Any alternatives or suggestions? I'm thinking of building it slowly over a few months, so I could look out for eBay deals. I'm not after blazing, just enough+.

Thanks for any thoughts on the matter.

Christian

Asus motheboard wont boot into os drive

in General Support

Posted

With the new SSD installed everything booted up as normal. Thanks all.
