allanp81

Posts: 333

Posts posted by allanp81

-

Yes. Within the motionEye appdata directory you'll see files named "thread-1.conf", "thread-2.conf", etc., one per camera. In theory you can stop the container, work out which config file belongs to which camera, back up that file, change the properties you need in it, and then restart the container.
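A minimal sketch of the "back up and identify" steps, run with the container stopped. It assumes each file carries the camera name on a line starting with `camera_name` or `# @name`; that is an assumption about the file format, so check one of your own files first. The appdata path in the usage line is hypothetical.

```bash
#!/bin/bash
# Back up every thread-*.conf in a motionEye appdata directory and print
# which camera each file appears to belong to.
# ASSUMPTION: the camera name sits on a line starting with "camera_name" or "# @name".
backup_and_identify() {
    local dir="$1"
    local stamp conf name
    stamp=$(date +%Y%m%d-%H%M%S)
    for conf in "$dir"/thread-*.conf; do
        [ -e "$conf" ] || continue             # no matches: the glob stays literal
        cp -p "$conf" "$conf.$stamp.bak"       # timestamped backup next to the original
        name=$(grep -m1 -E '^(camera_name|# @name)[[:space:]]' "$conf" \
               | sed -E 's/^(camera_name|# @name)[[:space:]]+//')
        printf '%s\t%s\n' "$(basename "$conf")" "${name:-<unknown>}"
    done
}
```

Usage: `backup_and_identify /mnt/user/appdata/motioneye` prints one line per file (file name, then camera name), and leaves a `.bak` copy of each file you can restore if an edit goes wrong.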

-

Has anyone managed to get this working as a subfolder yet, i.e. https://myserver/nextcloud?

-

Has anyone managed to get this working as a subfolder instead of the default? I've gone through the Nextcloud documentation but it doesn't seem to work. If I make the changes in config.php to add /nextcloud, so that it's http://192.168.x.x:8443/nextcloud instead of just http://192.168.x.x:8443/, it doesn't work, which means I can't get it to work via a reverse proxy either.

-

On 10/6/2023 at 8:19 PM, ashkasto said:

Can anyone help me with the Radarr and Sonarr containers? When new folders are created by the containers they don't have the correct permissions, and I get errors when Sonarr and Radarr try to move files to the Plex server. When I reset permissions in the Tools section of Unraid it's fine again, but the following day the same thing happens. Thank you.

Under Settings > Media Management (make sure you enable Show Advanced), scroll down and there's a section where you can set permissions.
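To check whether that setting is taking effect on newly created folders, a quick sketch that lists directories whose mode differs from the one you chose. The mode 775 and the path `/mnt/user/media` are example values, and `-printf` assumes GNU find (which Unraid ships).

```bash
#!/bin/bash
# List directories under a root whose permission bits are not exactly the
# expected mode, showing current mode, owner:group and path.
find_wrong_perms() {
    local root="$1" want="${2:-775}"
    find "$root" -type d ! -perm "$want" -printf '%m %u:%g %p\n'
}
```

Usage: `find_wrong_perms /mnt/user/media 775`. An empty result the day after an import suggests the Sonarr/Radarr permission settings are doing their job.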

-

I think my problem with getting this working again via a reverse proxy is that I can't get it to work even internally with a changed webroot. If I set this in config.php:

'overwritewebroot' => '/nextcloud'

It doesn't work for some reason. I can't see any obvious errors in error.log, or really anything in access.log, that would suggest why it fails once the webroot is changed.
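Rather than editing config.php by hand, the same settings can be applied with Nextcloud's `occ` command, which the linuxserver.io image makes available inside the container. A minimal sketch, assuming the container is named `nextcloud` and the public URL is `https://my.domain.com/nextcloud` (both placeholders). It sets `overwritewebroot` and `overwrite.cli.url`, then reads them back so you can see exactly what Nextcloud stored.

```bash
#!/bin/bash
# Set the subfolder webroot and CLI URL through occ, then print them back.
# ASSUMPTIONS: container name and URL values are placeholders.
set_nextcloud_webroot() {
    local container="$1" webroot="$2" cli_url="$3"
    docker exec "$container" occ config:system:set overwritewebroot --value="$webroot"
    docker exec "$container" occ config:system:set overwrite.cli.url --value="$cli_url"
    # Read the values back to confirm what ended up in config.php
    docker exec "$container" occ config:system:get overwritewebroot
    docker exec "$container" occ config:system:get overwrite.cli.url
}
```

Usage: `set_nextcloud_webroot nextcloud /nextcloud https://my.domain.com/nextcloud`.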

-

3 hours ago, hblockx said:

Does anyone have an idea how to change the webroot since the big update of this container? No matter what I try, I get nginx issues whenever I use 'overwritewebroot' => '/nextcloud',

Nope, I've tried everything and have given up trying to get it working externally again. It worked perfectly before, but I've spent hours and days trying to get it working again.

-

It seems something might have changed. I used to use the /nextcloud webroot, but since the container was updated I can't get it working anymore. I've tried replicating all of the settings I had before with swag and Nextcloud, but it just refuses to work.

-

17 hours ago, MikaelTarquin said:

Did you upgrade the Nextcloud version inside the web UI before upgrading the container? I finally got mine working again last night and pinned the container to release 27 so that it doesn't break again. This page helped me a lot:

Originally I tried to get my container to update etc., but I gave up, renamed the appdata folder, let it create a fresh one, and then copied my config.php back.

My instance works fine internally and syncs etc., but I can't get external access to work anymore when I try to replicate the settings I had applied to nginx and Nextcloud. The main issue in the logs appears to be an SSL handshake problem, but I don't recall having to do anything fancy other than change the URL base to /nextcloud and add IPs and hostnames to the trusted domains, trusted proxies, etc.
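The trusted domains and trusted proxies can likewise be set with `occ`. Both are arrays, so each value goes at an index, and reusing an index overwrites the entry already there; that's why it's worth running `occ config:system:get trusted_domains` first. A sketch in which the container name, hostname, IP and indexes are all placeholders:

```bash
#!/bin/bash
# Add a hostname to trusted_domains and a proxy IP to trusted_proxies via occ,
# then print both arrays.
# ASSUMPTIONS: all arguments are placeholders; choose indexes that are free.
add_trusted_entries() {
    local container="$1" domain="$2" domain_idx="$3" proxy_ip="$4" proxy_idx="$5"
    docker exec "$container" occ config:system:set trusted_domains "$domain_idx" --value="$domain"
    docker exec "$container" occ config:system:set trusted_proxies "$proxy_idx" --value="$proxy_ip"
    # Show the final state of both arrays
    docker exec "$container" occ config:system:get trusted_domains
    docker exec "$container" occ config:system:get trusted_proxies
}
```

Usage: `add_trusted_entries nextcloud my.domain.com 1 192.168.7.10 0`, where 192.168.7.10 stands in for the hypothetical IP of the swag container.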

-

I still can't get my Nextcloud instance to work with swag. I've tried everything I can think of.

-

Does anyone else have their Nextcloud container set up for external access via a subfolder? Mine worked perfectly before Nextcloud was upgraded, and now I can't get it to work no matter what I try. I used to be able to use https://my.domain.com/nextcloud and have that forward to the internal container running at 192.168.7.11:8443, but now it fails with lots of errors about SSL handshakes etc.

Previously I had this in my default.conf within swag, and it worked fine for years:

```nginx
location ^~ /nextcloud {
    proxy_pass https://192.168.7.11:8443;
    include /config/nginx/proxy.conf;
}
```

-

Does anyone have a definitive guide to reverse proxying with swag? Before the changes to the Nextcloud container I had this all working perfectly, but now I can't for the life of me get it working again, which renders my Nextcloud container essentially useless to me.

I have to have mine set up as https://my.domain.com/nextcloud because I can't create subdomains with my DDNS provider, but this was never an issue before.

My Nextcloud server works fine internally, so I know the server part is working; it's just the proxy side that I can't get working like before.
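One way to confirm which side is failing is to request Nextcloud's `status.php` both directly, from inside the swag container (the same path nginx takes), and through the public subfolder URL. A sketch, assuming the proxy container is named `swag` and has `curl` available; the URLs from this thread are used as placeholders. A code of `000` means no HTTP response at all, which is what a failed TLS handshake looks like to curl.

```bash
#!/bin/bash
# Probe status.php directly (from inside swag) and via the public URL,
# printing the HTTP status code for each.
# ASSUMPTIONS: container named "swag" with curl installed; URLs are placeholders.
probe_nextcloud() {
    local upstream="$1" public="$2"
    # -k: the container's certificate is self-signed; we only care whether TLS completes
    printf 'upstream: %s\n' "$(docker exec swag curl -sk -o /dev/null -w '%{http_code}' "$upstream/status.php")"
    printf 'public:   %s\n' "$(curl -s -o /dev/null -w '%{http_code}' "$public/status.php")"
}
```

Usage: `probe_nextcloud https://192.168.7.11:8443 https://my.domain.com/nextcloud`. If the upstream line shows 200 and the public one does not, the problem is in the swag location block rather than in Nextcloud itself.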

-

I managed to get mine working internally again, but I can't get external access to work anymore.

I can't set up a subdomain on my DDNS, so I've always had this in my swag default.conf:

```nginx
location ^~ /nextcloud {
    proxy_pass https://192.168.7.11:8443;
    include /config/nginx/proxy.conf;
}
```

I have overwritehost etc. set in config.php within Nextcloud itself, and I can tell it partially works because I get a Nextcloud error page when accessing it externally.

-

Is anyone able to help me get this working again? I'd been using it for years without issue, so it's incredibly annoying to have it not working now.

I have what used to be the letsencrypt container (now swag) running fine and had a reverse proxy set up for Nextcloud, but now I don't know where the problem lies: whether it's the new Nextcloud setup or the reverse proxy.

-

Mine is running the latest version, but I can't access it even though it was all working properly beforehand.

The log on starting the container shows:

```
using keys found in /config/keys
**** The following active confs have different version dates than the samples that are shipped. ****
**** This may be due to user customization or an update to the samples. ****
**** You should compare the following files to the samples in the same folder and update them. ****
**** Use the link at the top of the file to view the changelog. ****
┌────────────┬────────────┬────────────────────────────────────────────────────────────────────────┐
│ old date │ new date │ path │
├────────────┼────────────┼────────────────────────────────────────────────────────────────────────┤
│ │ 2023-04-13 │ /config/nginx/nginx.conf │
│ │ 2023-06-23 │ /config/nginx/site-confs/default.conf │
└────────────┴────────────┴────────────────────────────────────────────────────────────────────────┘
[custom-init] No custom files found, skipping...
[ls.io-init] done.
```

I have a reverse proxy set up that I haven't changed, so can anyone give me some pointers on what else I can do to get this working again?

I've followed the guide here: https://info.linuxserver.io/issues/2023-06-25-nextcloud/ and still no joy.
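The startup warning is asking you to compare each listed file with the sample shipped beside it. A sketch that prints the version line of both (an empty "old date" suggests the active file has no such line) and then diffs them. It assumes the samples sit in the same folder with a `.sample` suffix and that version lines start with `## Version`; the host path in the usage line is hypothetical.

```bash
#!/bin/bash
# Show the "## Version" line of an active swag conf and of its shipped sample,
# then a unified diff (lines prefixed "+" exist only in the sample).
# ASSUMPTIONS: sample = "<conf>.sample"; version lines start with "## Version".
compare_with_sample() {
    local conf="$1" sample="$1.sample"
    printf 'active: %s\n' "$(grep -m1 '^## Version' "$conf" || echo '<none>')"
    printf 'sample: %s\n' "$(grep -m1 '^## Version' "$sample" || echo '<none>')"
    diff -u "$conf" "$sample"
}
```

Usage: `compare_with_sample /mnt/user/appdata/swag/nginx/site-confs/default.conf`, then repeat for `nginx.conf`. Merging your own location blocks into a fresh copy of the sample is usually less error-prone than patching an old file.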

-

In case anyone ever asks, I got this working quite simply with the following script:

```bash
#!/bin/bash
# Watch a text file that lives in Nextcloud and start or stop a VM
# depending on whether the file contains "on" or "off".
f=/path/to/file/on/nextcloud/instance
vm="VM Name"   # the VM's name as shown by "virsh list --all"

inotifywait -m -e modify "$f" --format "%e" | while read -r event; do
    if [ "$event" == "MODIFY" ]; then
        # Strip whitespace so a trailing newline doesn't break the comparison
        contents=$(tr -d '[:space:]' < "$f")
        if [ "$contents" == "on" ]; then
            echo "Starting VM"
            virsh start "$vm"
        elif [ "$contents" == "off" ]; then
            echo "Stopping VM"
            virsh shutdown "$vm"
        fi
    fi
done
```

So basically: create a text file on your Nextcloud instance, then find its path via the terminal. If you then edit the file via the Nextcloud web interface or mobile app and set its contents to "on" or "off", the script will power the VM on or off accordingly.

-

I only need my VM to be accessible two days a week, so is there a simple way I could trigger it to power on?

I have Nextcloud, so I wondered whether there's a way to use a script that watches for the presence of a file, or a change to a file, as the trigger.

-

So does this container support NVIDIA transcoding? I found some info quite a few pages back, but I can't get it to work. I have installed the NVIDIA drivers etc. and have tried adding NVIDIA_DRIVER_CAPABILITIES and NVIDIA_VISIBLE_DEVICES as container variables, but nothing happens.

*Edit: never mind, I got it working by adding "--runtime=nvidia" to Extra Parameters.*
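For anyone else setting this up: besides `--runtime=nvidia` in Extra Parameters, the usual container variables are `NVIDIA_VISIBLE_DEVICES` (the GPU's UUID, or `all`) and `NVIDIA_DRIVER_CAPABILITIES` (e.g. `all`). A quick sketch to check that the GPU is actually visible inside the container, with `plex` as a placeholder container name:

```bash
#!/bin/bash
# Confirm a GPU is visible inside a container started with the NVIDIA runtime.
# ASSUMPTION: the container name is a placeholder.
check_container_gpu() {
    local container="$1"
    if docker exec "$container" nvidia-smi -L; then
        echo "GPU visible in $container"
    else
        echo "No GPU visible in $container (check --runtime=nvidia and the NVIDIA_* variables)" >&2
        return 1
    fi
}
```

Usage: `check_container_gpu plex` lists the GPUs the container can see, or reports that none are visible.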

-

2 minutes ago, Kilrah said:

It's in Plugins like the older one.

Hmm, I found it by choosing the Backup category on the left, but for some reason it wouldn't appear even when searching for the word "backup".

-

What am I doing wrong? I uninstalled the old version, but I don't see the new version when I search for it in Apps.

-

It seems that reapplying thermal paste has made zero difference. There's nothing wrong with the CPU fan either, so it's a very odd problem. Hopefully something will show up in the logs.

-

Cool, thanks. I've set it up to log to my main server, so I'll be able to collect the logs and see what they say.

-

I'll try applying some new thermal paste. I don't know whether ambient conditions could affect it, as my backup server is in my loft (attic).

-

The server reboots, so I can't get diagnostics. Nothing has physically changed on the hardware, and I used to run this script daily without fuss, so I feel like something in Unraid itself has changed.

-

I have an rsync job that backs up my main server to a backup server. This used to work absolutely fine, but recently it has started making the second server reboot: it seems to max out one of the CPU cores, and the server starts overheating.

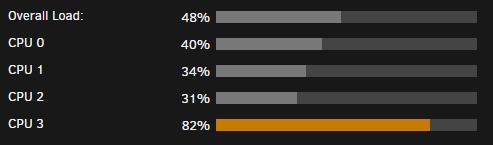

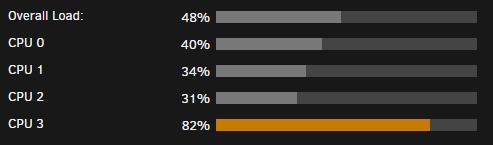

You can see how oddly it spreads the load across the cores. It appears to be sshd that causes most of the CPU usage.

Sometimes the transfer rate drops from about 110 MB/s to much, much lower, and the CPU usage drops right down; the temperatures are then fine, but obviously the backup takes a crazy amount of time. I have the turbo write plugin enabled and set to automatic, but it doesn't seem to make much difference.

Does anyone have any ideas why this is happening? The second server is a Core i5, but it's only used to hold a copy of my main server.
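Since sshd is what's burning the CPU, one thing worth trying is making the SSH leg of the rsync cheaper. An AES-GCM cipher is usually among the fastest on CPUs with AES-NI, and you can explicitly keep SSH compression off. Optionally, rsync's `--bwlimit` can cap throughput, and with it heat. A sketch with placeholder paths and host; it uses only `-a`, so add whatever other rsync options your current job uses.

```bash
#!/bin/bash
# Run rsync over SSH with a cheaper cipher, SSH compression off, and an
# optional bandwidth cap, to reduce sshd CPU load on the receiving server.
# ASSUMPTIONS: paths/host are placeholders; aes128-gcm@openssh.com must be
# allowed by both ends' OpenSSH configuration.
backup_to_server() {
    local src="$1" dest="$2" bwlimit="${3:-}"
    local -a cmd=(rsync -a -e "ssh -c aes128-gcm@openssh.com -o Compression=no")
    if [ -n "$bwlimit" ]; then
        cmd+=("--bwlimit=$bwlimit")   # in KiB/s by default; omit for no cap
    fi
    cmd+=("$src" "$dest")
    "${cmd[@]}"
}
```

Usage: `backup_to_server /mnt/user/data/ root@backup-server:/mnt/user/data/ 60000` caps the transfer at roughly 60 MB/s. Leaving off the last argument runs it uncapped.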

[Support] Linuxserver.io - Nextcloud

in Docker Containers


Has anyone managed to get this working via swag as a subfolder rather than a subdomain? It used to work fine, but since the changes last year I just can't get it to work anymore, and I've followed the guides here and in the Nextcloud docs as best I can.