Posts posted by SpaceInvaderOne

-

-

I am not sure whether the Nvidia drivers would be a problem with a single GPU. They might be, as they are loaded before the GPU is passed through.

Try uninstalling the drivers and try again.

If it still doesn't work, then go to Tools/System Devices, stub the P2000 (both its graphics and its sound parts) and reboot.

You mention Splashtop showing a black screen. Can you test with a monitor directly connected to the GPU whilst testing?

-

Are you booting the server by legacy boot or UEFI? You can check this on the Main tab: click Flash and look at the bottom of the page.

I find that booting legacy works better for me with passthrough, so if you are not booting legacy you could try that.

Also you can try adding this to your syslinux config file:

video=efifb:off

So for example

label Unraid OS
  menu default
  kernel /bzimage
  append initrd=/bzroot

with it added would be

label Unraid OS
  menu default
  kernel /bzimage
  append video=efifb:off initrd=/bzroot

Hope this may help.
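If you would rather see the change as code, here is a minimal Python sketch of the same edit. It works on the config text only and does not touch the flash drive. The function name `add_append_param` and the second label block in the example are made up for illustration, so treat this as a sketch and not as how Unraid itself edits the file.

```python
def add_append_param(cfg_text, param, label="Unraid OS"):
    """Return cfg_text with `param` added to the append line of one label block."""
    out = []
    in_label = False
    for line in cfg_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("label "):
            # Track whether we are inside the label block we want to change.
            in_label = stripped[len("label "):].strip() == label
        elif in_label and stripped.startswith("append "):
            args = stripped.split()[1:]
            if param not in args:  # leave the line alone if it is already there
                indent = line[: len(line) - len(line.lstrip())]
                line = indent + " ".join(["append", param] + args)
        out.append(line)
    return "\n".join(out)


# Example config text (the second label is only here to show it is left alone).
example = """label Unraid OS
  menu default
  kernel /bzimage
  append initrd=/bzroot
label Unraid OS GUI Mode
  kernel /bzimage
  append initrd=/bzroot,/bzroot-gui"""

print(add_append_param(example, "video=efifb:off"))
```

Running it a second time on its own output changes nothing, so it is safe to apply more than once.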

-

Just now, Linus said:

Hi. I have a Intel Xeon D-1528 CPU.

Ah okay, zenstates in the go file isn't necessary for your CPU.

-

-

-

What CPU is in your server? If it is a Ryzen, do you have zenstates in your go file?

-

12 hours ago, Kich902 said:

Hi Spaceinvader. First of I'm new to Unraid and started on the trail for a few days now and way before been going through -and downloaded-your videos on necessary topics I'm interested in especially VMs. You , Sir, are a God given gift! 😃. Now, onto my issue: i tried this and it worked for a minute but then the VM shuts down. The windows in on a SATA SSD. What could be the issue?

It's very hard to say without more info. Please post the XML of the VM here. Also try a different machine type, i.e. if you are using i440fx try Q35 and vice versa.

-

On 4/5/2021 at 4:06 AM, RevelRob said:

macOS Big Sur 11.2.3 is 071-14766. Bear in mind, it does take awhile to download though. check the logs in:

mnt/user/appdata/macinabox/macinabox/macinabox_BigSur.log

I thought I had posted here about the update a few days ago but it seems I didn't!

Well, I pushed out a fix for Macinabox over Easter and now it will pull Big Sur correctly, so if you update the container all should be good.

Now both download methods in the docker template will pull Big Sur. Method 1 is quicker as it downloads the base image, as opposed to method 2 which pulls the InstallAssistant.pkg and extracts that.

-

-

-

Ever wanted more storage in your Unraid server? See what 2 petabytes of storage looks like using Nimbus Data 100TB SSDs.

(check today's date)

-

-

-

8 hours ago, Rikul said:

My problem with Unraid is that I have a dynamic IP and I'm looking forward to find the best and easy way to forward all my domains to my public IP. I'm already using swag and duckdns to access remotly to my running dockers and everything work like a charm.

I have studied the @SpaceInvaderOne and ibracorp ( @Sycotix)videos' dealing with domain names and webproxy with cloudflare. I think it can do the trick for me but I have some more research to do to deal with the cloudflare docker to be able to manage more than one domain.

If you want to have 3 domains all point to your public WAN IP it is very easy to do.

The Cloudflare DDNS container would update the IP for your main domain (yourdomain1.com) by means of a subdomain.

For example, you would set the container to update the subdomain dynamic.yourdomain1.com to be your public WAN IP.

So then dynamic.yourdomain1.com will always be pointing to your public WAN IP.

Then for any other domains or subdomains that you want to point to your public WAN IP, you just make a CNAME in your Cloudflare account which points to dynamic.yourdomain1.com.

So for example you could point any subdomain, for example www.yourdomain2.com, to dynamic.yourdomain1.com.

However, many sites like to use a "naked" domain (which is a regular URL just without the preceding www), so for example you can type google.com and go to Google without having to type www.google.com.

This "naked" domain is the root of the domain. The DNS spec expects the root to be pointing to an IP with an A record.

However, Cloudflare allows the use of a CNAME at the root (without violating the DNS spec) by using something called CNAME flattening. This lets us use a CNAME at the root but still follow the RFC and return an IP address for any query for the root record. So you can point the "naked" root domain to a CNAME, and could have yourdomain2.com pointing by CNAME to dynamic.yourdomain1.com.

So with your 3 domains you can point any subdomain or the root domain to dynamic.yourdomain1.com (which is kept updated by the Cloudflare DDNS docker container on Unraid).

But you don't need to use Cloudflare DDNS if you don't want to. Instead of using the Cloudflare DDNS container you could use the DuckDNS container.

Then for the subdomain dynamic.yourdomain1.com, instead of having its A record updated by the Cloudflare container, you would use a CNAME for dynamic.yourdomain1.com to point to yourdomain.duckdns.org (or whatever your DuckDNS name is).

So basically it's just a chain of things that eventually resolves to an IP, which is your public WAN IP.
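To picture the chain, here is a small Python sketch that models the records as a plain dictionary and follows CNAMEs until it reaches an A record. It is only a toy model, not a real DNS lookup, and it ignores how Cloudflare flattens the root CNAME on its side. The `resolve` helper and the 203.0.113.x addresses (a range reserved for documentation) are assumptions for illustration.

```python
def resolve(name, records, max_hops=10):
    """Follow CNAME records until an A record (an IP address) is reached."""
    for _ in range(max_hops):
        rtype, value = records[name]
        if rtype == "A":
            return value
        name = value  # CNAME: keep following the chain
    raise RuntimeError("CNAME chain too long or looping")


# Cloudflare DDNS variant: the container keeps this one A record up to date.
records = {
    "dynamic.yourdomain1.com": ("A", "203.0.113.10"),
    "www.yourdomain2.com": ("CNAME", "dynamic.yourdomain1.com"),
    "yourdomain2.com": ("CNAME", "dynamic.yourdomain1.com"),
    "yourdomain3.com": ("CNAME", "dynamic.yourdomain1.com"),
}

# DuckDNS variant: dynamic.yourdomain1.com is itself a CNAME to the DuckDNS name.
duck_records = dict(records)
duck_records["dynamic.yourdomain1.com"] = ("CNAME", "yourdomain.duckdns.org")
duck_records["yourdomain.duckdns.org"] = ("A", "203.0.113.10")

print(resolve("www.yourdomain2.com", records))
print(resolve("yourdomain2.com", duck_records))
```

The point is that only one record ever needs updating when your WAN IP changes. Everything else just points at it.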

Also Swag reverse proxy allows you to make letsencrypt certs for not just one domain but multiple domains too.

I hope that makes sense

-

-

-

Here is a guide for setting up Binhex's Minecraft container.

In addition to what I say in this video:

It actually isn't necessary to use an SRV record to make the connection, as Minecraft will try port 25565 by default. But I think using an SRV record is still a good idea, especially if you want to use a 'non standard' port for Minecraft, because then you don't have to set the port in the Minecraft client. I think it is better not to use the common port for the Minecraft server and to use an unknown port instead, as this is potentially more secure.
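For anyone who has not seen one, this is roughly what the SRV record looks like in zone-file form. The Python helper below just builds the text. The function name, the port 25570, and the priority, weight and TTL values are placeholders I picked for the example. In the Cloudflare dashboard you would enter the same pieces as separate fields.

```python
def minecraft_srv_record(name, target, port, priority=0, weight=5, ttl=3600):
    """Build a zone-file style SRV record line for a Minecraft server."""
    # _minecraft._tcp is the service/protocol prefix the Minecraft client looks up.
    return f"_minecraft._tcp.{name}. {ttl} IN SRV {priority} {weight} {port} {target}."


# Players would type mc.yourdomain1.com and be sent to port 25570 on the target host.
print(minecraft_srv_record("mc.yourdomain1.com", "dynamic.yourdomain1.com", 25570))
```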

Also if you only want people you know to be able to access the server, then edit the server properties config file and change whitelist to true. Restart the container.

Adding and removing players to the access list:

1. Open the webui on the container to see the console.

2. In the console, type “whitelist add playername”. For example, “whitelist add spaceinvaderone”.

3. After you type this command, you will see a message stating “player added to whitelist”.

4. To remove a player, type “whitelist remove playername”.
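The config change itself is a one line edit. As a sketch, this Python function flips the setting in the text of a server.properties file. I am assuming the key is written `white-list`, as it is in the Java edition file, so check your own file before relying on this. The function name is made up for the example.

```python
def enable_whitelist(properties_text):
    """Return the server.properties text with the whitelist switched on."""
    out = []
    found = False
    for line in properties_text.splitlines():
        if line.strip().startswith("white-list="):
            line = "white-list=true"
            found = True
        out.append(line)
    if not found:
        # Key missing entirely: add it at the end.
        out.append("white-list=true")
    return "\n".join(out)


props = "motd=A Minecraft Server\nwhite-list=false\nserver-port=25565"
print(enable_whitelist(props))
```

Remember the container still needs a restart afterwards for the change to take effect.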

-

-

-

-

Here is a guide that I have made showing how to set up Guacamole. Hope it's useful.

-

On 12/26/2020 at 6:28 PM, reporrted said:

I have been unsuccessful dumping a GTX 570 Rev. 2 vbios. I also receive the cat: rom: Input/output error. I thought this may have been due to the SMB security, but even set as public it had no change. When it does go through the initial steps it sometimes reports 70Kb seems to small and does the sleep/wake method but always ends with the Input/output error.

Is there a deeper log I can look into for this?

Love the video's SpaceInvaderOne.

No, sorry, no more logs are available. If you get it to dump and it says it's less than 70kb, you could just stop the script at that point and see if that vbios is in fact okay. Some older GPUs may have a smaller vbios size (I have a very old card, a Cirrus Logic from the 1990s. It is a PCI card that I use in a PCIe to PCI bridge, and its vbios is only 30kb!)

-

-

-

Some extra things you can try.

1. Stub the GPU.

2. Shut the server down fully, then start it again (not a reboot).

3. Make a temp VM using OVMF, attach the GPU you want the vbios from, start the VM up, then after a couple of seconds force stop it. (The script makes one with SeaBIOS.)

4. Run the script.

If you are still getting an error, repeat the above, then edit the script, look for the variable forcereset="no" and change it to yes. This will force the script to always disconnect the GPU, sleep the server, then reconnect before dumping the vbios.

-

-

-

Hi guys. So I have found that recently I have had to use a vbios with both the GPUs in my server, whether primary or secondary.

So I thought I would try and write a script that would automatically dump the vbios from a GPU in the server, even if it is a primary GPU, without having to do any command line work and without having to download a vbios from TechPowerUp and then hex edit it.

I have tested this in about half a dozen servers and it has worked for all of them. Before running the script I recommend shutting down the server completely (not rebooting) then starting it back up. Make sure no containers are running (disable the Docker service) and make sure no VMs are running. This is because if the GPU is primary, the script will need to temporarily put the server to sleep for a few seconds to allow the GPU to be in a state where it can have the vbios dumped from it. If a lot of containers are running the server doesn't always go to sleep and the script fails.

How the script works.

It will list the GPUs in the server, showing their ids. Then with your chosen GPU id the script will dump the vbios using these steps. It will make a temporary SeaBIOS VM with the card attached, then quickly start and stop the VM with the GPU passed through. This will put the GPU in the correct state to dump the vbios.

It will then delete the temporary VM as it is no longer needed.

It will try to dump the vbios of the card, then check the size of the vbios.

If the vbios looks correct (which it normally will be if the GPU is NOT primary) it will finish the process and put the vbios in the location specified in the script (default /mnt/user/isos/vbios).

However, if the vbios looks incorrect and is under 70kb, then it was probably dumped from a primary GPU and will need an additional stage. This is because the vbios was shadowed during the boot process of the server, so the resulting vbios is a small file which is not correct. So the script will now disconnect the GPU, then put the server to sleep. Next it will prompt you to press the power button to resume the server from its sleep state. Once the server is woken, the script will rescan the PCI bus, reconnecting the GPU. This now allows the primary GPU to have its vbios dumped correctly. The script will then dump the vbios again, putting it in the location specified in the script (default /mnt/user/isos/vbios).
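The size check is the part that decides which path is taken. The Python sketch below only illustrates that decision and is not the code from the actual script. The function names are made up, and treating 70kb as 70 * 1024 bytes is my assumption.

```python
SMALL_VBIOS_KB = 70  # threshold described above


def looks_shadowed(size_bytes, threshold_kb=SMALL_VBIOS_KB):
    """True if the dump is small enough that it was probably shadowed at boot."""
    return size_bytes < threshold_kb * 1024


def next_step(size_bytes):
    """Describe what happens after the first dump attempt."""
    if looks_shadowed(size_bytes):
        return "disconnect GPU, sleep server, wake, rescan PCI bus, dump again"
    return "keep dump"


print(next_step(60 * 1024))   # small file, likely from a primary GPU
print(next_step(256 * 1024))  # normal sized dump
```

The threshold is a parameter because a very old card can have a vbios that really is that small, in which case the small dump may be fine as it is.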

Here is a video showing how to use the script

Of course you will need Squid's excellent User Scripts plugin to use this script. You will find the dump script on my github here https://github.com/SpaceinvaderOne/Dump_GPU_vBIOS

I was hoping that if this script works for most people then we could get a big repository of dumped vbioses (not hex edited) and I will put them in the github. I have made a start with a few of my GPUs. So if you want to contribute then please post a link to your vbios and I will add it. Please name the vbios like this:

With type, vendor and model, and memory size. For example RTX 2080ti - Palit GAMING PRO - 11G

The vbios collection is here https://github.com/SpaceinvaderOne/Dump_GPU_vBIOS/tree/master/vBIOSes

Well, I hope the script works for you and you find it useful. Oh, and as it's now 12 midnight here in the UK, it's Christmas Day. So merry Christmas fellow Unraiders, I hope you have a great day and a good new year.

-

-

-

7 hours ago, SpencerJ said:

I will look into this Ed.

Edit: Sent PM for test.

Perfect, all working now. Thank you.

-

-

-

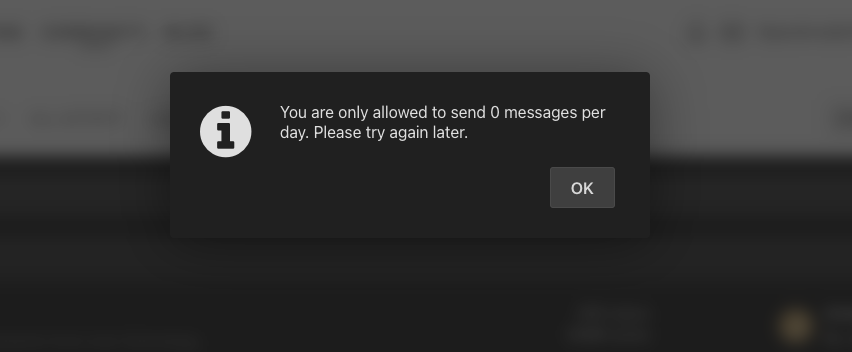

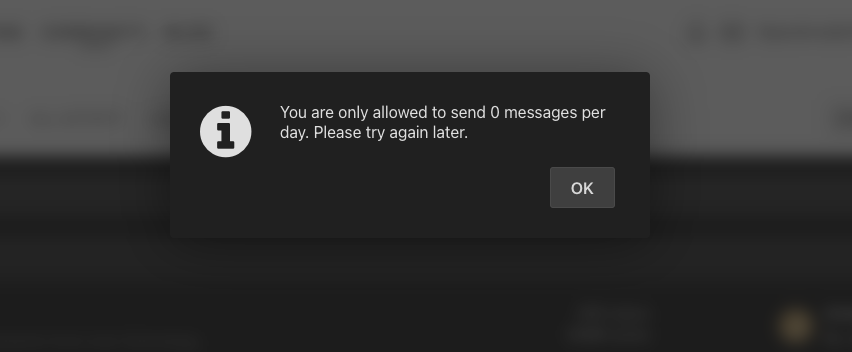

Hi

For some reason I have recently been unable to send PMs.

I get the following error.

-

-

-

17 minutes ago, alturismo said:

i will once i would get the macos to boot again, i tried to add the EFI stuff and wrecked it (again)

You can just run the VM with the OpenCore vdisk as the first vdisk. You don't have to transfer it into the main vdisk.

-

6 minutes ago, alturismo said:

sadly i didnt get any audio device from nvidia

Try setting the nvidia gpu as a multifunction device as in this video here

-

15 minutes ago, czarci said:

unfortunately it's stuck on "Updating..." I waited like 5-10 minutes, then if I try to remove it, it's the same. Funny enough if I go to Tools -> Docker Safe New Perms, the VM disappeared? and then I just removed the file on cache...

If a VM is "stuck on updating", this is normally because something was added to it previously (like a USB device passed through) and the device is no longer there, or because you have removed the vdisk etc. Basically Unraid can't see all the devices that are defined in the template, so when you click update it just hangs.

Have you removed anything from the vm since creating it?

-

22 hours ago, erasor2010 said:

Looks more like the servers are down, if you choose method 2 there is this error

working on a solution

-

-

-

23 minutes ago, czarci said:

If you want to delete the VM and it moans about not being able to remove the nvram, please do the following:

1. In the Unraid VM manager, click to edit the VM.

2. Scroll to the bottom and click update. (Don't run the helper script afterwards.)

3. Now you can delete the VM from the Unraid VM manager.

-

13 hours ago, nlash said:

Using High Sierra and intending to pass through a 1080ti...what needs to be done to the config.plist in OpenCore to allow pass through?

You shouldn't need to do anything in the OpenCore config.

You will just need to download the Nvidia drivers once booted into macOS.

Run this in Terminal and it will download the correct Nvidia drivers for High Sierra:

bash <(curl -s https://raw.githubusercontent.com/Benjamin-Dobell/nvidia-update/master/nvidia-update.sh)

-

13 minutes ago, arkestler said:

So if I create a Big Sur VM when I first install this docker, how can I create a second VM with Catalina?

After installing Big Sur, go to the docker template and choose Catalina, then go to the bottom of the template and click apply. It will then install Catalina.

-

7 hours ago, susnow said:

hi... i've try it again and i faild again and again.... i'm not sure is there some steps wrong or just i'm unlucky🥺

my step at first i bind immo device at unraid system device and reboot unraid

then i choose other pcie device at vm edit view

after these step i run macinabox_helper user script and fix xml automatic

finally i run the mac os big sur vm and faild to start...

i can run macos just do not select the pcie device

maybe the device is not work for macos? i think...

Try passing the same device to another VM, a Windows or Linux one, to see whether the hardware is just not passing through or whether the problem is OS specific.

Nvidia P2000 Passthrough to Win 10 VM

in VM Engine (KVM)

Glad you have it working. By the way, SI1 and TAFKA Gridrunner are the same person. My original username on the forums many years ago was gridrunner, hence the tag TAFKA Gridrunner!! 😉

If you want to be able to have no monitor plugged in, you will need an HDMI or DisplayPort dummy plug like this one: https://amzn.to/3mH5e3I