Rich

Members

Posts: 268


Everything posted by Rich


Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

That's the reason I've been waiting a week between tests. However, my VM and Docker backup cron job ran last night and wrote at least 200GB to the array, so I think waiting only 5 days should be OK this time.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Well, the first parity check after disabling VT-d has come back with zero errors of any kind. I'm going to wait until the weekend and then run another check. If it comes back clear, I'll try re-enabling VT-d and adding iommu=pt to syslinux.conf. After that, I'm going to re-flash the BIOS of the new controller and see what that does. Fingers crossed!
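For reference, a minimal sketch of the `iommu=pt` change. It assumes the stock unRAID layout, where the boot entries live in /boot/syslinux/syslinux.cfg (the file called syslinux.conf above) and the default entry is labelled `unRAID OS`; both the path and the label name are assumptions to check against your own flash drive. The hypothetical `add_kernel_param` helper only edits the `append` line of the named entry and leaves other entries (such as safe mode) alone.

```python
import sys


def add_kernel_param(cfg_text, param, label):
    """Add `param` to the `append` line of the syslinux entry named `label`.

    Assumes the usual syslinux layout: a "label <name>" line followed by
    indented "kernel"/"append" lines that belong to that entry.
    """
    out = []
    current = None  # label of the entry we are currently inside
    for line in cfg_text.splitlines():
        stripped = line.strip()
        if stripped.lower().startswith("label "):
            current = stripped[len("label "):].strip()
        elif current == label and stripped.lower().startswith("append "):
            # Only add the parameter once, so running this twice is harmless.
            if param not in stripped.split()[1:]:
                line = line.rstrip() + " " + param
        out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # Hypothetical usage: print the edited config for review instead of
    # overwriting the original file.
    if len(sys.argv) != 2:
        print("usage: add_iommu_pt.py /boot/syslinux/syslinux.cfg")
    else:
        with open(sys.argv[1]) as fh:
            print(add_kernel_param(fh.read(), "iommu=pt", "unRAID OS"), end="")
```

Printing the result and copying it back by hand, after backing up the original file, is probably safer than writing to the flash drive in place.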

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Lol, no worries.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Both lspci outputs were taken today. I swapped the second card for its RMA'd replacement on Thursday, so it's definitely not a card mix-up. I'm going to let this parity check run, as so far it's error free, and then I'll re-enable VT-d and see what lspci says. It's a bit weird though, isn't it?

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

OK, this seems strange. I've just disabled VT-d and am running another parity check (so far, so good). I decided to run lspci again and the output has changed.

Before (VT-d enabled):
01:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9485 SAS/SATA 6Gb/s controller (rev c3)
06:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9480 SAS/SATA 6Gb/s RAID controller (rev c3)

Now (VT-d disabled):
01:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9485 SAS/SATA 6Gb/s controller (rev c3)
06:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9485 SAS/SATA 6Gb/s controller (rev c3)

The chip number and description for 06:00.0 have changed to 88SE9485 SAS/SATA 6Gb/s controller (rev c3). Should this change randomly? I assumed it was effectively the model number and name of the card. I haven't attempted to update anything; I simply disabled VT-d and rebooted.
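As far as I understand, lspci looks up the name it prints from the vendor and device ID the card reports, so a changed description suggests the card presented a different device ID after the reboot. A small sketch for diffing two saved lspci snapshots makes that kind of change easy to spot; the helper names are hypothetical, and saving `lspci -nn` output (which adds the numeric [vendor:device] IDs) would make the comparison more explicit.

```python
import re
import sys

# Matches lines such as
# "06:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9480 ... (rev c3)"
# with an optional PCI domain prefix ("0000:") as printed by `lspci -D`.
LSPCI_LINE = re.compile(
    r"^(?:[0-9a-f]{4}:)?(?P<slot>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+(?P<desc>.+)$"
)


def parse_lspci(text):
    """Map each PCI slot (bus:device.function) to its lspci description."""
    devices = {}
    for line in text.splitlines():
        m = LSPCI_LINE.match(line.strip())
        if m:
            devices[m.group("slot")] = m.group("desc")
    return devices


def diff_lspci(before, after):
    """Return {slot: (old_desc, new_desc)} for every slot whose entry changed."""
    old, new = parse_lspci(before), parse_lspci(after)
    changed = {}
    for slot in sorted(old.keys() | new.keys()):
        if old.get(slot) != new.get(slot):
            changed[slot] = (old.get(slot), new.get(slot))
    return changed


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: lspci_diff.py before.txt after.txt")
    else:
        with open(sys.argv[1]) as a, open(sys.argv[2]) as b:
            for slot, (old, new) in diff_lspci(a.read(), b.read()).items():
                print(f"{slot}\n  before: {old}\n  after:  {new}")
```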

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

OK, I'll give that a go too. Is updating it via the standard method (booting into DOS, etc.) the recommended way to go, or is there another way to do it?

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

So much for waiting a week! For some reason, this replacement controller takes a lot less time to start generating errors. A few hours into a parity check, I got errors and timeouts on one of the disks connected to the new SAS2LP:

Jan 15 15:16:45 unRAID kernel: drivers/scsi/mvsas/mv_94xx.c 625:command active 00000336, slot [0].
Jan 15 15:17:17 unRAID kernel: sas: Enter sas_scsi_recover_host busy: 1 failed: 1
Jan 15 15:17:17 unRAID kernel: sas: trying to find task 0xffff8801b6907900
Jan 15 15:17:17 unRAID kernel: sas: sas_scsi_find_task: aborting task 0xffff8801b6907900
Jan 15 15:17:17 unRAID kernel: sas: sas_scsi_find_task: task 0xffff8801b6907900 is aborted
Jan 15 15:17:17 unRAID kernel: sas: sas_eh_handle_sas_errors: task 0xffff8801b6907900 is aborted
Jan 15 15:17:17 unRAID kernel: sas: ata18: end_device-8:3: cmd error handler
Jan 15 15:17:17 unRAID kernel: sas: ata15: end_device-8:0: dev error handler
Jan 15 15:17:17 unRAID kernel: sas: ata16: end_device-8:1: dev error handler
Jan 15 15:17:17 unRAID kernel: sas: ata17: end_device-8:2: dev error handler
Jan 15 15:17:17 unRAID kernel: sas: ata18: end_device-8:3: dev error handler
Jan 15 15:17:17 unRAID kernel: ata18.00: exception Emask 0x0 SAct 0x40000 SErr 0x0 action 0x6 frozen
Jan 15 15:17:17 unRAID kernel: ata18.00: failed command: READ FPDMA QUEUED
Jan 15 15:17:17 unRAID kernel: ata18.00: cmd 60/00:00:10:9e:1a/04:00:69:00:00/40 tag 18 ncq 524288 in
Jan 15 15:17:17 unRAID kernel: res 40/00:ff:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Jan 15 15:17:17 unRAID kernel: ata18.00: status: { DRDY }
Jan 15 15:17:17 unRAID kernel: ata18: hard resetting link
Jan 15 15:17:17 unRAID kernel: sas: sas_form_port: phy3 belongs to port3 already(1)!
Jan 15 15:17:20 unRAID kernel: drivers/scsi/mvsas/mv_sas.c 1430:mvs_I_T_nexus_reset for device[3]:rc= 0
Jan 15 15:17:20 unRAID kernel: ata18.00: configured for UDMA/133
Jan 15 15:17:20 unRAID kernel: ata18: EH complete
Jan 15 15:17:20 unRAID kernel: sas: --- Exit sas_scsi_recover_host: busy: 0 failed: 1 tries: 1

So it's on to disabling VT-d now. I'll post back my findings, John_M.

Squid, just so I'm clear: are you saying to update the card with the most up-to-date firmware version (which it's already on) to see if the ID changes, or to see if it helps fix the problem?
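To see which ports the error handler touched, and whether each episode finished, the libata messages above can be tallied per port. A rough sketch, assuming the syslog keeps the one-line-per-message format quoted here; `summarize` is a hypothetical helper. As I understand libata, an `EH complete` after a `hard resetting link` means the error handler finished its recovery for that port; it does not by itself prove that the retried read returned good data.

```python
import re
import sys
from collections import Counter, defaultdict

# libata error-handling messages, as they appear in the logs in this thread.
# The "kernel: ataN" anchors deliberately skip libsas lines ("kernel: sas: ataN: ...").
PATTERNS = {
    "exception": re.compile(r"kernel: (ata\d+)\.\d+: exception"),
    "reset": re.compile(r"kernel: (ata\d+): hard resetting link"),
    "eh_complete": re.compile(r"kernel: (ata\d+): EH complete"),
}


def summarize(lines):
    """Count exceptions, link resets and completed EH runs per ATA port."""
    counts = defaultdict(Counter)
    for line in lines:
        for event, pattern in PATTERNS.items():
            m = pattern.search(line)
            if m:
                counts[m.group(1)][event] += 1
    return {port: dict(c) for port, c in counts.items()}


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: ata_events.py /path/to/syslog")
    else:
        with open(sys.argv[1], errors="replace") as fh:
            for port, events in sorted(summarize(fh).items()):
                print(port, events)
```

A port where `reset` keeps climbing without a matching `eh_complete` would be the one to look at first.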

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

I have two SAS2LPs. The older one works perfectly, but since installing the newer controller (and its subsequent RMA replacement) I have started to see the problems mentioned. So I could at least differentiate between a functional SAS2LP and a potentially dodgy one.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

That's frustrating, same chipset but a variation of symptoms. If it is the Linux driver, is this something Limetech could address, or at least flag to the right party? If you'd like me to try anything else to help you with your controller diagnosis, just let me know. I'll post back my results as soon as I have an update. -

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

These are mine:

01:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9485 SAS/SATA 6Gb/s controller (rev c3)
06:00.0 RAID bus controller: Marvell Technology Group Ltd. 88SE9480 SAS/SATA 6Gb/s RAID controller (rev c3)

Yeah, I will do. Unless I get instant errors, I've been running each change for a week before moving on to the next one, so I'll do the same with the power cable, then disabling VT-d, and then adding iommu=pt to syslinux.conf. I'm really hoping it's the power cable, lol.

Thanks for pitching in, I appreciate the help. Are you aware of anyone contacting either Supermicro or Marvell regarding the potential chipset bug?

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Well, it certainly looks like it could be the same issue. I've checked the Marvell chipsets on the cards: one has a 9485, which is on the list, and one has a 9480, which isn't. If my first card is the 9480, that would certainly explain why I've only started seeing errors since the second card was installed. I've just swapped over the power cables and am waiting to see what happens; if I get errors, I will disable VT-d. I pass through a NIC and USB connections from a UPS and a Corsair Link PSU, so if it's this, it's going to mean selling the card.
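The 9480/9485 distinction can also be read without opening the case: Linux exposes each PCI function's vendor and device IDs in /sys/bus/pci/devices/*/vendor and /device. A rough sketch, assuming Marvell's vendor ID is 0x1b4b (as `lspci -nn` reports it) and that the device ID matches the chip number lspci prints; `identify` and `marvell_controllers` are hypothetical helpers. It only reports which chip each controller uses, not whether that chip is on the list of affected ones.

```python
import glob
import os

MARVELL_VENDOR = 0x1B4B  # Marvell's PCI vendor ID (lspci -nn shows it as 1b4b)
# Assumed mapping: the device ID matches the chip number lspci printed in this thread.
CHIP_NAMES = {0x9480: "88SE9480", 0x9485: "88SE9485"}


def identify(vendor_text, device_text):
    """Return a chip label for a Marvell PCI function, or None for other vendors."""
    vendor = int(vendor_text.strip(), 16)  # sysfs files contain e.g. "0x1b4b\n"
    device = int(device_text.strip(), 16)
    if vendor != MARVELL_VENDOR:
        return None
    return CHIP_NAMES.get(device, f"Marvell device 0x{device:04x}")


def marvell_controllers(sysfs_root="/sys/bus/pci/devices"):
    """Yield (pci_address, chip_label) for every Marvell function under sysfs_root."""
    for dev_dir in sorted(glob.glob(os.path.join(sysfs_root, "*"))):
        try:
            with open(os.path.join(dev_dir, "vendor")) as fh:
                vendor_text = fh.read()
            with open(os.path.join(dev_dir, "device")) as fh:
                device_text = fh.read()
        except OSError:
            continue  # not a readable PCI function
        label = identify(vendor_text, device_text)
        if label is not None:
            yield os.path.basename(dev_dir), label


if __name__ == "__main__":
    for address, label in marvell_controllers():
        print(address, label)
```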

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Agreed. I'm definitely going to try it.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Oh, that's interesting, I hadn't come across that. Thanks for the link, John_M. The only strange thing about that, though, is that I've been running with one SAS2LP controller for years; it's only since I added another that I've been seeing problems. Do you think it's possible that this problem could occur with one card and not the other? They're both running the same, most up-to-date firmware version.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Awesome, thank you. I'll try that if swapping the cable doesn't help.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

I run Docker containers and a Windows 10 VM, so it's not ideally what I want to do, but I can try it for a while. VT-d has been enabled for well over a year without causing any issues, though; it's only since the new PSU and the additional controller and cabling that I've started seeing errors. I know I'll have to stop using the VM while VT-d is disabled, but will it affect Docker as well?

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

So swapping the controller seems to be causing more and/or more frequent errors, as if it has somehow aggravated the issue. I am now seeing endpoint connection errors:

Jan 14 22:01:45 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:02:07 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:02:07 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:02:07 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:04:07 unRAID emhttp: cmd: /usr/local/emhttp/plugins/dynamix/scripts/tail_log syslog
Jan 14 22:04:31 unRAID emhttp: cmd: /usr/local/emhttp/plugins/dynamix/scripts/tail_log syslog
Jan 14 22:07:39 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:07:39 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:08:14 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected
Jan 14 22:08:17 unRAID php: /usr/local/emhttp/plugins/advanced.buttons/script/plugin 'check' 'advanced.buttons.plg' 'ca.cleanup.appdata.plg' 'ca.update.applications.plg' 'community.applications.plg' 'dynamix.active.streams.plg' 'dynamix.local.master.plg' 'dynamix.plg' 'dynamix.ssd.trim.plg' 'dynamix.system.buttons.plg' 'dynamix.system.info.plg' 'dynamix.system.stats.plg' 'dynamix.system.temp.plg' 'unassigned.devices.plg' 'unRAIDServer.plg' &>/dev/null &
Jan 14 22:08:58 unRAID emhttp: err: need_authorization: getpeername: Transport endpoint is not connected

I am way out of my depth trying to diagnose this, so I would appreciate any help anyone can give. I have yet to swap out the power cables feeding the drives that are generating errors, but if it's not that, I'm out of ideas. After all the previously listed symptoms and errors, is anyone able to suggest anything else it could be?
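The emhttp lines above use the standard Linux error text for ENOTCONN, which getpeername() returns when a socket has no connected peer. A minimal illustration (`peer_name_or_error` is a hypothetical helper, and the message text is the Linux/glibc one) reproduces the same message with a socket that was never connected. As far as I can tell, that points at a web-UI network connection that had already gone away rather than at a disk.

```python
import errno
import os
import socket


def peer_name_or_error(sock):
    """Return the peer address, or the symbolic errno name if getpeername() fails."""
    try:
        return sock.getpeername()
    except OSError as exc:
        return errno.errorcode.get(exc.errno, str(exc.errno))


if __name__ == "__main__":
    # A TCP socket that was never connected has no peer, so getpeername() fails.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print(peer_name_or_error(s))
    # The human-readable text for that errno is what emhttp logged.
    print(os.strerror(errno.ENOTCONN))
```

On Linux this prints `ENOTCONN` followed by `Transport endpoint is not connected`.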

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Well, looks like I don't have to wait a week after all :'( I ran an initial parity check (the first parity check after a reboot has traditionally been fault free) and got 0 errors, but saw a timeout within the first hour of the check:

Jan 13 00:13:40 unRAID kernel: sas: Enter sas_scsi_recover_host busy: 1 failed: 1
Jan 13 00:13:40 unRAID kernel: sas: trying to find task 0xffff88038c341400
Jan 13 00:13:40 unRAID kernel: sas: sas_scsi_find_task: aborting task 0xffff88038c341400
Jan 13 00:13:40 unRAID kernel: sas: sas_scsi_find_task: task 0xffff88038c341400 is aborted
Jan 13 00:13:40 unRAID kernel: sas: sas_eh_handle_sas_errors: task 0xffff88038c341400 is aborted
Jan 13 00:13:40 unRAID kernel: sas: ata15: end_device-8:0: cmd error handler
Jan 13 00:13:40 unRAID kernel: sas: ata15: end_device-8:0: dev error handler
Jan 13 00:13:40 unRAID kernel: sas: ata16: end_device-8:1: dev error handler
Jan 13 00:13:40 unRAID kernel: ata15.00: exception Emask 0x0 SAct 0x0 SErr 0x0 action 0x6 frozen
Jan 13 00:13:40 unRAID kernel: sas: ata17: end_device-8:2: dev error handler
Jan 13 00:13:40 unRAID kernel: sas: ata18: end_device-8:3: dev error handler
Jan 13 00:13:40 unRAID kernel: ata15.00: failed command: READ DMA EXT
Jan 13 00:13:40 unRAID kernel: ata15.00: cmd 25/00:00:08:2b:f4/00:04:27:00:00/e0 tag 29 dma 524288 in
Jan 13 00:13:40 unRAID kernel: res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)
Jan 13 00:13:40 unRAID kernel: ata15.00: status: { DRDY }
Jan 13 00:13:40 unRAID kernel: ata15: hard resetting link
Jan 13 00:13:40 unRAID kernel: sas: sas_form_port: phy0 belongs to port0 already(1)!
Jan 13 00:13:42 unRAID kernel: drivers/scsi/mvsas/mv_sas.c 1430:mvs_I_T_nexus_reset for device[0]:rc= 0
Jan 13 00:13:42 unRAID kernel: ata15.00: configured for UDMA/133
Jan 13 00:13:42 unRAID kernel: ata15.00: device reported invalid CHS sector 0
Jan 13 00:13:42 unRAID kernel: ata15: EH complete
Jan 13 00:13:42 unRAID kernel: sas: --- Exit sas_scsi_recover_host: busy: 0 failed: 1 tries: 1

Now (it's not even been 24 hours since installing the new controller and rebooting) I'm getting kernel crashes again (below).

So, to recap: I've changed the controller that all the disks getting errors are attached to. I've changed the SAS cable running from the controller to the four disks. I've run a 24-hour memtest and found 0 errors. That leaves the power cables as the only easy thing left to swap out, so I'm going to try that next. The disks experiencing errors are all fed from the same output on the PSU, but the cable has an extension, so it also powers other drives (connected to the other controller). I'm not sure how likely it is to be the cable, as I would have expected more disks to be experiencing problems.

What are the odds of this being motherboard or PCIe slot related? I'm struggling to think logically of what else it could be, as it's only these four drives (connected to the same controller, which I've now replaced) that are experiencing parity errors. All the drives connected to the motherboard SATA ports and to the other controller are fine.

Jan 13 18:00:23 unRAID kernel: ------------[ cut here ]------------
Jan 13 18:00:23 unRAID kernel: WARNING: CPU: 0 PID: 9493 at arch/x86/kernel/cpu/perf_event_intel_ds.c:334 reserve_ds_buffers+0x110/0x33d()
Jan 13 18:00:23 unRAID kernel: alloc_bts_buffer: BTS buffer allocation failure
Jan 13 18:00:23 unRAID kernel: Modules linked in: xt_CHECKSUM ipt_REJECT nf_reject_ipv4 ebtable_filter ebtables vhost_net vhost macvtap macvlan tun iptable_mangle xt_nat veth ipt_MASQUERADE nf_nat_masquerade_ipv4 iptable_nat nf_conntrack_ipv4 nf_nat_ipv4 iptable_filter ip_tables nf_nat md_mod ahci x86_pkg_temp_thermal coretemp kvm_intel mvsas i2c_i801 kvm e1000 i2c_core libahci libsas r8169 mii scsi_transport_sas wmi
Jan 13 18:00:23 unRAID kernel: CPU: 0 PID: 9493 Comm: qemu-system-x86 Not tainted 4.4.30-unRAID #2
Jan 13 18:00:23 unRAID kernel: Hardware name: ASUS All Series/Z87-K, BIOS 1402 11/05/2014
Jan 13 18:00:23 unRAID kernel: 0000000000000000 ffff880341663920 ffffffff8136f79f ffff880341663968
Jan 13 18:00:23 unRAID kernel: 000000000000014e ffff880341663958 ffffffff8104a4ab ffffffff81020934
Jan 13 18:00:23 unRAID kernel: 0000000000000000 0000000000000001 0000000000000006 ffff880360779680
Jan 13 18:00:23 unRAID kernel: Call Trace:
Jan 13 18:00:23 unRAID kernel: [<ffffffff8136f79f>] dump_stack+0x61/0x7e
Jan 13 18:00:23 unRAID kernel: [<ffffffff8104a4ab>] warn_slowpath_common+0x8f/0xa8
Jan 13 18:00:23 unRAID kernel: [<ffffffff81020934>] ? reserve_ds_buffers+0x110/0x33d
Jan 13 18:00:23 unRAID kernel: [<ffffffff8104a507>] warn_slowpath_fmt+0x43/0x4b
Jan 13 18:00:23 unRAID kernel: [<ffffffff810f7501>] ? __kmalloc_node+0x22/0x153
Jan 13 18:00:23 unRAID kernel: [<ffffffff81020934>] reserve_ds_buffers+0x110/0x33d
Jan 13 18:00:23 unRAID kernel: [<ffffffff8101b3fc>] x86_reserve_hardware+0x135/0x147
Jan 13 18:00:23 unRAID kernel: [<ffffffff8101b45e>] x86_pmu_event_init+0x50/0x1c9
Jan 13 18:00:23 unRAID kernel: [<ffffffff810ae7bd>] perf_try_init_event+0x41/0x72
Jan 13 18:00:23 unRAID kernel: [<ffffffff810aec0e>] perf_event_alloc+0x420/0x66e
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00bf58e>] ? kvm_dev_ioctl_get_cpuid+0x1c0/0x1c0 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffff810b0bbb>] perf_event_create_kernel_counter+0x22/0x112
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00bf6d9>] pmc_reprogram_counter+0xbf/0x104 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00bf92b>] reprogram_fixed_counter+0xc7/0xd8 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa03ca987>] intel_pmu_set_msr+0xe0/0x2ca [kvm_intel]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00bfb2c>] kvm_pmu_set_msr+0x15/0x17 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00a1a57>] kvm_set_msr_common+0x921/0x983 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa03ca400>] vmx_set_msr+0x2ec/0x2fe [kvm_intel]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa009e424>] kvm_set_msr+0x61/0x63 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa03c39c4>] handle_wrmsr+0x3b/0x62 [kvm_intel]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa03c863f>] vmx_handle_exit+0xfbb/0x1053 [kvm_intel]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa03ca105>] ? vmx_vcpu_run+0x30e/0x31d [kvm_intel]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00a7f92>] kvm_arch_vcpu_ioctl_run+0x38a/0x1080 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00a2938>] ? kvm_arch_vcpu_load+0x6b/0x16c [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa00a29b5>] ? kvm_arch_vcpu_load+0xe8/0x16c [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa0098cff>] kvm_vcpu_ioctl+0x178/0x499 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffffa009b152>] ? kvm_vm_ioctl+0x3e8/0x5d8 [kvm]
Jan 13 18:00:23 unRAID kernel: [<ffffffff8111869e>] do_vfs_ioctl+0x3a3/0x416
Jan 13 18:00:23 unRAID kernel: [<ffffffff8112070e>] ? __fget+0x72/0x7e
Jan 13 18:00:23 unRAID kernel: [<ffffffff8111874f>] SyS_ioctl+0x3e/0x5c
Jan 13 18:00:23 unRAID kernel: [<ffffffff81629c2e>] entry_SYSCALL_64_fastpath+0x12/0x6d
Jan 13 18:00:23 unRAID kernel: ---[ end trace 9a1ea458c732cff8 ]---
Jan 13 18:00:23 unRAID kernel: qemu-system-x86: page allocation failure: order:4, mode:0x260c0c0
Jan 13 18:00:23 unRAID kernel: CPU: 0 PID: 9493 Comm: qemu-system-x86 Tainted: G W 4.4.30-unRAID #2
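The `cmd` field in these timeout messages is a dump of the ATA taskfile registers, so it can be decoded into the command, starting LBA and sector count. A sketch based on my reading of the field order libata prints (command/feature:nsect:lbal:lbam:lbah/hob_feature:hob_nsect:hob_lbal:hob_lbam:hob_lbah/device); `decode_ata_cmd` is a hypothetical helper, and the register layout is an assumption worth double-checking against the kernel source.

```python
# Assumed field order of libata's "cmd"/"res" dumps:
# command/feature:nsect:lbal:lbam:lbah/hob_feature:hob_nsect:hob_lbal:hob_lbam:hob_lbah/device
FPDMA_COMMANDS = {0x60: "READ FPDMA QUEUED", 0x61: "WRITE FPDMA QUEUED"}
COMMAND_NAMES = {0x25: "READ DMA EXT", 0x35: "WRITE DMA EXT", **FPDMA_COMMANDS}


def decode_ata_cmd(field):
    """Decode a libata 'cmd' taskfile string into (name, lba, sector_count)."""
    head, low, high, _device = field.split("/")
    command = int(head, 16)
    feature, nsect, lbal, lbam, lbah = (int(x, 16) for x in low.split(":"))
    hob_feature, hob_nsect, hob_lbal, hob_lbam, hob_lbah = (
        int(x, 16) for x in high.split(":")
    )
    # 48-bit LBA: the "hob" (high order byte) registers hold bits 24-47.
    lba = (
        (hob_lbah << 40) | (hob_lbam << 32) | (hob_lbal << 24)
        | (lbah << 16) | (lbam << 8) | lbal
    )
    if command in FPDMA_COMMANDS:
        # NCQ commands carry the sector count in the feature registers.
        count = (hob_feature << 8) | feature
    else:
        count = (hob_nsect << 8) | nsect
    if count == 0:
        count = 65536  # in 48-bit commands a count of 0 means 65536 sectors
    return COMMAND_NAMES.get(command, f"0x{command:02x}"), lba, count
```

Decoding the two `cmd` strings quoted in this thread gives 1024-sector (512 KiB) reads, which matches the `524288` byte count in the same log lines, starting at LBA 0x27F42B08 (ata15) and 0x691A9E10 (ata18). Comparing the LBAs across several timeouts could show whether they cluster in one spot on a disk or are scattered.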

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

That's OK then.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

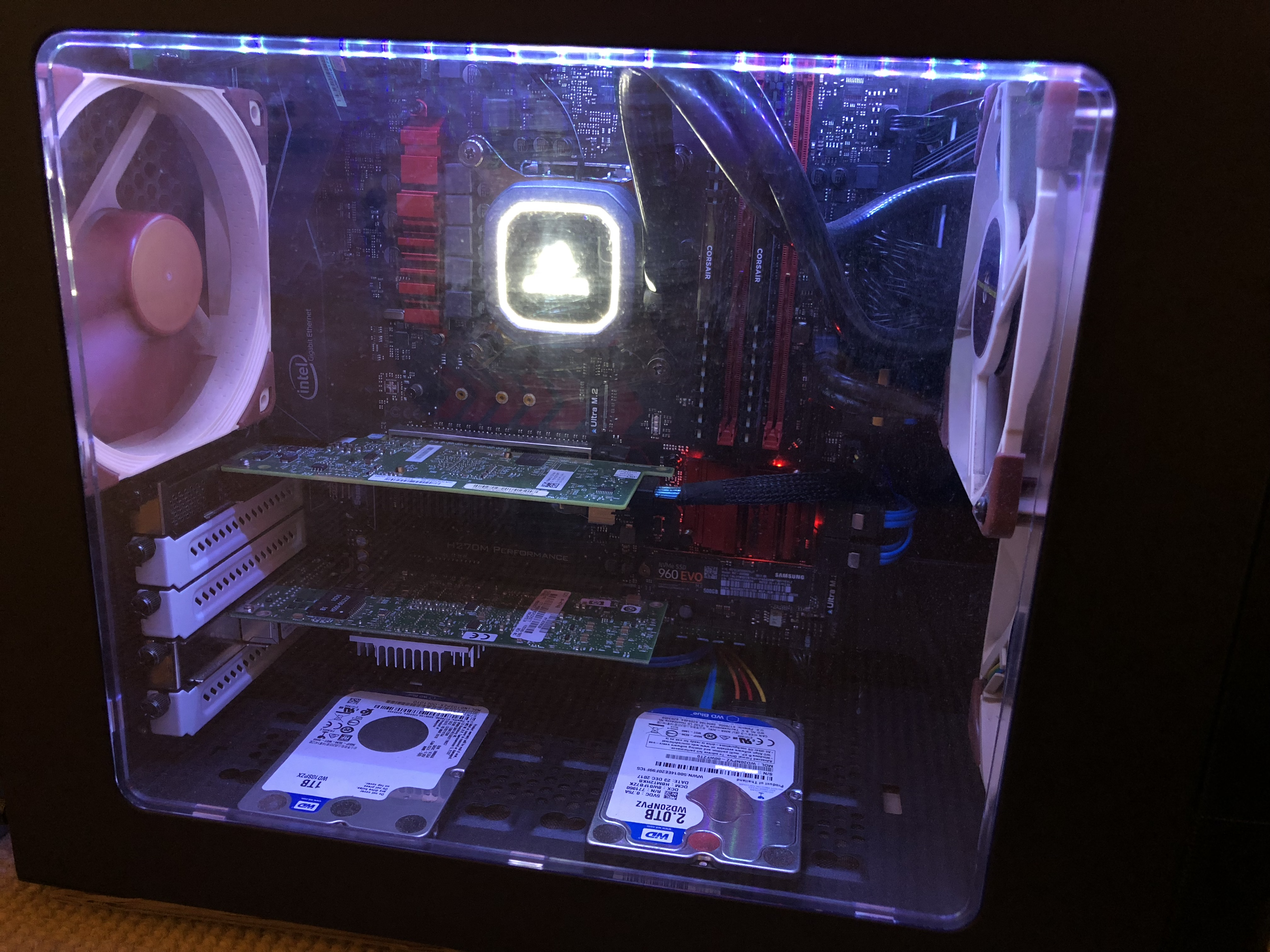

Sorry for the random and probably odd question, but with dual parity, should the reads and writes be the same for both parity disks (see pic)?

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Yeah, I did that after reading other posts regarding the error, but I'm running the most up-to-date version. It's really odd that they never occurred before, though!? Harmless is good. I'll keep an eye on them and see what happens after the next parity check. Thank you.

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

I'm still at it! Lol. I've just received a new controller and swapped it over. I'm going to wait a week, then run a parity check and see what happens. I checked the syslog after boot-up to make sure nothing strange appeared, and I noticed the errors below. These errors never appeared before, and I never changed anything in the BIOS as far as ACPI is concerned, so could they be another symptom of my problem? Could these errors signify a problem with the motherboard?

Thank you,
Rich

Jan 12 21:07:47 unRAID kernel: ata1: SATA max UDMA/133 abar m2048@0xf7c1a000 port 0xf7c1a100 irq 28
Jan 12 21:07:47 unRAID kernel: ata2: DUMMY
Jan 12 21:07:47 unRAID kernel: ata3: SATA max UDMA/133 abar m2048@0xf7c1a000 port 0xf7c1a200 irq 28
Jan 12 21:07:47 unRAID kernel: ata4: SATA max UDMA/133 abar m2048@0xf7c1a000 port 0xf7c1a280 irq 28
Jan 12 21:07:47 unRAID kernel: ata5: SATA max UDMA/133 abar m2048@0xf7c1a000 port 0xf7c1a300 irq 28
Jan 12 21:07:47 unRAID kernel: ata6: SATA max UDMA/133 abar m2048@0xf7c1a000 port 0xf7c1a380 irq 28
Jan 12 21:07:47 unRAID kernel: i801_smbus 0000:00:1f.3: SMBus using PCI interrupt
Jan 12 21:07:47 unRAID kernel: ata4: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
Jan 12 21:07:47 unRAID kernel: ata1: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
Jan 12 21:07:47 unRAID kernel: ata5: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
Jan 12 21:07:47 unRAID kernel: ata3: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
Jan 12 21:07:47 unRAID kernel: ata6: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
Jan 12 21:07:47 unRAID kernel: ata4.00: ATA-9: WDC WD60EZRZ-00GZ5B1, WD-WX21D36PPX7X, 80.00A80, max UDMA/133
Jan 12 21:07:47 unRAID kernel: ata4.00: 11721045168 sectors, multi 16: LBA48 NCQ (depth 31/32), AA
Jan 12 21:07:47 unRAID kernel: ACPI Error: [DSSP] Namespace lookup failure, AE_NOT_FOUND (20150930/psargs-359)
Jan 12 21:07:47 unRAID kernel: ACPI Error: Method parse/execution failed [\_SB.PCI0.SAT0.SPT5._GTF] (Node ffff88040f0629b0), AE_NOT_FOUND (20150930/psparse-542)
Jan 12 21:07:47 unRAID kernel: ACPI Error: [DSSP] Namespace lookup failure, AE_NOT_FOUND (20150930/psargs-359)
Jan 12 21:07:47 unRAID kernel: ACPI Error: Method parse/execution failed [\_SB.PCI0.SAT0.SPT4._GTF] (Node ffff88040f062938), AE_NOT_FOUND (20150930/psparse-542)
Jan 12 21:07:47 unRAID kernel: ata6.00: supports DRM functions and may not be fully accessible
Jan 12 21:07:47 unRAID kernel: ata1.00: ATA-9: WDC WD10EZRX-00A3KB0, WD-WCC4J2NH9DDT, 01.01A01, max UDMA/133
Jan 12 21:07:47 unRAID kernel: ata1.00: 1953525168 sectors, multi 16: LBA48 NCQ (depth 31/32), AA
Jan 12 21:07:47 unRAID kernel: ata5.00: ATA-9: WDC WD5000LPVX-22V0TT0, WD-WX21A152AR3V, 01.01A01, max UDMA/133
Jan 12 21:07:47 unRAID kernel: ata5.00: 976773168 sectors, multi 16: LBA48 NCQ (depth 31/32), AA
Jan 12 21:07:47 unRAID kernel: ata3.00: ATA-9: WDC WD60EZRX-00MVLB1, WD-WX21D9421D3V, 80.00A80, max UDMA/133
Jan 12 21:07:47 unRAID kernel: ata3.00: 11721045168 sectors, multi 16: LBA48 NCQ (depth 31/32), AA
Jan 12 21:07:47 unRAID kernel: ata4.00: configured for UDMA/133
Jan 12 21:07:47 unRAID kernel: ata1.00: configured for UDMA/133
Jan 12 21:07:47 unRAID kernel: ACPI Error:
Jan 12 21:07:47 unRAID kernel: scsi 2:0:0:0: Direct-Access ATA WDC WD10EZRX-00A 1A01 PQ: 0 ANSI: 5
Jan 12 21:07:47 unRAID kernel: [DSSP]<5>sd 2:0:0:0: [sdb] 1953525168 512-byte logical blocks: (1.00 TB/932 GiB)
Jan 12 21:07:47 unRAID kernel: sd 2:0:0:0: [sdb] 4096-byte physical blocks
Jan 12 21:07:47 unRAID kernel: sd 2:0:0:0: Attached scsi generic sg1 type 0
Jan 12 21:07:47 unRAID kernel: sd 2:0:0:0: [sdb] Write Protect is off
Jan 12 21:07:47 unRAID kernel: sd 2:0:0:0: [sdb] Mode Sense: 00 3a 00 00
Jan 12 21:07:47 unRAID kernel: sd 2:0:0:0: [sdb] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
Jan 12 21:07:47 unRAID kernel: Namespace lookup failure, AE_NOT_FOUND (20150930/psargs-359)
Jan 12 21:07:47 unRAID kernel: ACPI Error: Method parse/execution failed [\_SB.PCI0.SAT0.SPT4._GTF] (Node ffff88040f062938), AE_NOT_FOUND (20150930/psparse-542)
Jan 12 21:07:47 unRAID kernel: ata5.00: configured for UDMA/133
Jan 12 21:07:47 unRAID kernel: ata3.00: configured for UDMA/133
Jan 12 21:07:47 unRAID kernel: scsi 4:0:0:0: Direct-Access ATA WDC WD60EZRX-00M 0A80 PQ: 0 ANSI: 5
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: [sdc] 11721045168 512-byte logical blocks: (6.00 TB/5.46 TiB)
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: Attached scsi generic sg2 type 0
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: [sdc] 4096-byte physical blocks
Jan 12 21:07:47 unRAID kernel: scsi 5:0:0:0: Direct-Access ATA WDC WD60EZRZ-00G 0A80 PQ: 0 ANSI: 5
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: [sdc] Write Protect is off
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: [sdc] Mode Sense: 00 3a 00 00
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: [sdc] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: [sdd] 11721045168 512-byte logical blocks: (6.00 TB/5.46 TiB)
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: Attached scsi generic sg3 type 0
Jan 12 21:07:47 unRAID kernel: scsi 6:0:0:0: Direct-Access ATA WDC WD5000LPVX-2 1A01 PQ: 0 ANSI: 5
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: [sde] 976773168 512-byte logical blocks: (500 GB/466 GiB)
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: [sde] 4096-byte physical blocks
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: Attached scsi generic sg4 type 0
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: [sde] Write Protect is off
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: [sde] Mode Sense: 00 3a 00 00
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: [sde] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
Jan 12 21:07:47 unRAID kernel: ata6.00: disabling queued TRIM support
Jan 12 21:07:47 unRAID kernel: ata6.00: ATA-9: Crucial_CT512MX100SSD1, 15020E592B9D, MU01, max UDMA/133
Jan 12 21:07:47 unRAID kernel: ata6.00: 1000215216 sectors, multi 16: LBA48 NCQ (depth 31/32), AA
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: [sdd] 4096-byte physical blocks
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: [sdd] Write Protect is off
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: [sdd] Mode Sense: 00 3a 00 00
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: [sdd] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
Jan 12 21:07:47 unRAID kernel: ACPI Error: [DSSP] Namespace lookup failure, AE_NOT_FOUND (20150930/psargs-359)
Jan 12 21:07:47 unRAID kernel: ACPI Error: Method parse/execution failed [\_SB.PCI0.SAT0.SPT5._GTF] (Node ffff88040f0629b0), AE_NOT_FOUND (20150930/psparse-542)
Jan 12 21:07:47 unRAID kernel: ata6.00: supports DRM functions and may not be fully accessible
Jan 12 21:07:47 unRAID kernel: ata6.00: disabling queued TRIM support
Jan 12 21:07:47 unRAID kernel: ata6.00: configured for UDMA/133
Jan 12 21:07:47 unRAID kernel: scsi 7:0:0:0: Direct-Access ATA Crucial_CT512MX1 MU01 PQ: 0 ANSI: 5
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: Attached scsi generic sg5 type 0
Jan 12 21:07:47 unRAID kernel: ata6.00: Enabling discard_zeroes_data
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: [sdf] 1000215216 512-byte logical blocks: (512 GB/477 GiB)
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: [sdf] 4096-byte physical blocks
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: [sdf] Write Protect is off
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: [sdf] Mode Sense: 00 3a 00 00
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: [sdf] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
Jan 12 21:07:47 unRAID kernel: ata6.00: Enabling discard_zeroes_data
Jan 12 21:07:47 unRAID kernel: sdf: sdf1
Jan 12 21:07:47 unRAID kernel: ata6.00: Enabling discard_zeroes_data
Jan 12 21:07:47 unRAID kernel: sd 7:0:0:0: [sdf] Attached SCSI disk
Jan 12 21:07:47 unRAID kernel: sdb: sdb1
Jan 12 21:07:47 unRAID kernel: sd 2:0:0:0: [sdb] Attached SCSI disk
Jan 12 21:07:47 unRAID kernel: sdc: sdc1
Jan 12 21:07:47 unRAID kernel: sd 4:0:0:0: [sdc] Attached SCSI disk
Jan 12 21:07:47 unRAID kernel: sdd: sdd1
Jan 12 21:07:47 unRAID kernel: sd 5:0:0:0: [sdd] Attached SCSI disk
Jan 12 21:07:47 unRAID kernel: sde: sde1
Jan 12 21:07:47 unRAID kernel: sd 6:0:0:0: [sde] Attached SCSI disk
Jan 12 21:07:47 unRAID kernel: random: nonblocking pool is initialized
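Pulling out just the failing ACPI methods makes it easier to see which hardware these boot errors refer to. A minimal sketch (`failing_acpi_methods` is a hypothetical helper). Run against the boot log above, every failure is a `_GTF` method under `\_SB.PCI0.SAT0`. `_GTF` is the ACPI "get task file" method used for SATA devices, and SAT0 appears to be the motherboard's own SATA controller, so as far as I can tell these errors concern the onboard SATA ports rather than the SAS2LP cards.

```python
import re
import sys
from collections import Counter

# Captures the ACPI object path from lines such as
# ACPI Error: Method parse/execution failed [\_SB.PCI0.SAT0.SPT5._GTF] (Node ...), AE_NOT_FOUND
METHOD_FAILURE = re.compile(r"Method parse/execution failed \[(?P<path>[^\]]+)\]")


def failing_acpi_methods(log_text):
    """Count how many times each ACPI method path failed to execute."""
    return Counter(m.group("path") for m in METHOD_FAILURE.finditer(log_text))


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: acpi_errors.py /path/to/syslog")
    else:
        with open(sys.argv[1], errors="replace") as fh:
            for path, count in failing_acpi_methods(fh.read()).most_common():
                print(count, path)
```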

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Cool, thank you. They're both disks that are connected to the suspect controller. I'm going to wait another week, run another check, see what happens and go from there. Merry Christmas!

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

I see. So, as I've swapped out a SAS cable for a brand-new one and haven't got any errors but did get timeouts, does that suggest the problem is still there and it's probably not the cable? I'm assuming the timeouts previously led to the errors, so the catalyst is potentially still there despite the cable change. Or could it be a coincidence and unrelated? What are the odds? Lol. Also, is it possible to ascertain which disk the timeouts relate to?
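On tying the timeouts to a disk: the libsas lines in these logs already pair each ATA port with a SAS end device (for example `sas: ata15: end_device-8:0: cmd error handler`). A sketch, assuming the resolved /sys/block/<disk> path of a disk behind the SAS2LP contains that `end_device-H:P` component (and, for onboard ports, an `ataN` component); the helper names and the script name are hypothetical.

```python
import os
import re
import sys

# libsas prints lines like "sas: ata15: end_device-8:0: cmd error handler",
# which tie an ATA port number to a SAS end device.
ATA_TO_END_DEVICE = re.compile(r"sas: (ata\d+): (end_device-\d+:\d+):")


def ata_end_devices(lines):
    """Map ataN -> end_device-H:P using libsas error-handler messages."""
    mapping = {}
    for line in lines:
        m = ATA_TO_END_DEVICE.search(line)
        if m:
            mapping[m.group(1)] = m.group(2)
    return mapping


def find_disk(component, block_paths):
    """Return the block device whose resolved sysfs path contains `component`.

    block_paths maps a device name (e.g. "sdb") to its resolved sysfs path.
    """
    for name, path in sorted(block_paths.items()):
        if f"/{component}/" in path:
            return name
    return None


def sysfs_block_paths(root="/sys/block"):
    """Resolve the /sys/block/* symlinks (assumes the usual Linux sysfs layout)."""
    if not os.path.isdir(root):
        return {}
    return {n: os.path.realpath(os.path.join(root, n)) for n in os.listdir(root)}


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: which_disk.py /var/log/syslog")
    else:
        with open(sys.argv[1], errors="replace") as fh:
            ends = ata_end_devices(fh)
        paths = sysfs_block_paths()
        for ata, end in sorted(ends.items()):
            print(ata, end, find_disk(end, paths) or find_disk(ata, paths))
```

The sdX name can then be matched against the disk assignments in the unRAID web GUI. Device names can change between boots, so this is best run on the same boot as the timeouts.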

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Ah, OK. Thank you. As there were only two timeout cycles and no errors (plus no errors over the rest of the parity check), does that mean it eventually found / did what it was trying to do? I assume that if it hadn't been able to complete what it was trying to do, that would have created an error?

Repeated parity sync errors after server upgrade SAS2LP-MV8

Rich replied to Rich's topic in General Support

Any help would be greatly appreciated.