Posts: 1608

Days Won: 1


Report Comments posted by StevenD

-

2 hours ago, jbartlett said:

The plugin "Disable Mitigation Settings" might help you troubleshoot that.

I have been running that for a long time. Maybe that's why I haven't seen this issue.

I don't have a video card to pass through on my test unRAID VM, so I can't do any additional testing without taking down my main unRAID box.

-

17 minutes ago, hawihoney said:

6.8.3 is a stable release and there's a prebuilt image with NVIDIA 450.66 available.

Not anymore. The Nvidia builds are no longer available for download. I forgot about @ich777's build:

-

49 minutes ago, TexasUnraid said:

Except that the speed issues I was experiencing only showed up with really small files, around 4 KB, and the speed would drop to abysmal levels. As the packet captures showed, the server side was producing lots of errors for each file.

This issue is still present in the 6.9 beta.

I don't believe I quoted you or addressed my comment to you.

-

35 minutes ago, Womabre said:

I also have this problem. I get around 36 Mbps directly to the cache disk share or to a user share that uses the cache.

But when I mount /mnt/user with CloudMounter over SFTP, I get around 1,300 Mbps!! That is a 36x speed difference.

Both tests were run from a Mac with LAN Speed Test, using a 500 MB file.

You really should use a much larger file for testing, at least bigger than your RAM. I typically use 50-100 GB files for testing.
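For the larger-file advice, a minimal sketch, assuming a Linux machine with /proc/meminfo and GNU dd (the Mac in the quoted test would need `sysctl hw.memsize` instead). It sizes the test file at twice the installed RAM so neither end's page cache can absorb it. The helper names, the 2x factor, and the example path are illustrative assumptions, not part of LAN Speed Test or Unraid.

```bash
#!/bin/bash
# Sketch: size a throughput test file well beyond RAM so the page cache on
# either end cannot hide the real disk/network speed.
# Assumptions: the 2x-RAM factor, helper names, and example path are hypothetical.

# Convert a MemTotal value (KiB) into a test-file size in MiB (2x RAM).
test_file_mib() {
  local mem_kib=$1
  echo $(( mem_kib * 2 / 1024 ))
}

# Read MemTotal (KiB) from /proc/meminfo; Linux only.
mem_total_kib() {
  awk '/^MemTotal:/ { print $2 }' /proc/meminfo
}

# Write a zero-filled file of <mib> MiB to <path>.
# Zeros overstate speed if the target compresses data; use /dev/urandom there.
make_test_file() {
  local path=$1 mib=$2
  dd if=/dev/zero of="$path" bs=1M count="$mib" status=progress
}

# Example (hypothetical share path):
#   make_test_file /mnt/user/scratch/bigfile.bin "$(test_file_mib "$(mem_total_kib)")"
```

On a box with 16 GB of RAM this lands around 32 GB, in line with the 50-100 GB files mentioned above for larger systems.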

-

27 minutes ago, Wavey said:

@StevenD What is that output you are using to monitor the amount of writes?

There is probably a better way, but I just have a script that runs at the top of every hour:

```bash
date >> /mnt/cache/cache1.txt
smartctl -a -d nvme /dev/nvme0n1 | grep "Units Written" >> /mnt/cache/cache1.txt
date >> /mnt/cache/cache2.txt
smartctl -a -d nvme /dev/nvme1n1 | grep "Units Written" >> /mnt/cache/cache2.txt
```
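To turn those hourly readings into a write rate, note that NVMe reports Data Units Written in units of 1,000 x 512 bytes (512,000 bytes per unit). A minimal sketch, assuming the log alternates a `date` line with the grep'd `Data Units Written:` line exactly as the commands above produce; the function names are hypothetical.

```bash
#!/bin/bash
# Sketch: summarize a log written by the hourly smartctl commands.
# Assumes each "Data Units Written: N [x TB]" line is preceded by a `date` line.
# One NVMe data unit = 1,000 x 512 bytes = 512,000 bytes.

# Convert a counter such as "21,981,884" into bytes.
units_to_bytes() {
  local units=${1//,/}            # drop thousands separators
  echo $(( units * 512000 ))
}

# Print "<date>  <GB written since the previous sample>" for each sample.
write_deltas() {
  awk '
    /Units Written/ {
      n = $4; gsub(/,/, "", n)    # 4th field holds the counter
      if (prev != "") printf "%s  %.1f GB\n", when, (n - prev) * 512000 / 1e9
      prev = n
      next
    }
    { when = $0 }                 # any other line is treated as a date stamp
  ' "$1"
}

# Example: write_deltas /mnt/cache/cache1.txt
```

Applied to the first two July 19 readings posted later in this thread (52,349,318 and 52,423,388), the delta is 74,070 units, or about 37.9 GB in that hour.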

-

Upgrading to 6.9.0-beta25 and wiping and rebuilding the cache seems to have fixed the excessive drive writes. I updated at 1 PM yesterday.

Thanks, @limetech!

```
Sun Jul 19 00:00:01 CDT 2020  52,349,318 [26.8 TB]
Sun Jul 19 01:00:01 CDT 2020  52,423,388 [26.8 TB]
Sun Jul 19 02:00:01 CDT 2020  52,489,648 [26.8 TB]
Sun Jul 19 03:00:01 CDT 2020  52,555,542 [26.9 TB]
Sun Jul 19 04:00:01 CDT 2020  52,620,891 [26.9 TB]
Sun Jul 19 05:00:02 CDT 2020  52,704,944 [26.9 TB]
Sun Jul 19 06:00:02 CDT 2020  52,781,371 [27.0 TB]
Sun Jul 19 07:00:01 CDT 2020  52,857,676 [27.0 TB]
Sun Jul 19 08:00:01 CDT 2020  52,969,998 [27.1 TB]
Sun Jul 19 09:00:01 CDT 2020  53,060,428 [27.1 TB]
Sun Jul 19 10:00:02 CDT 2020  53,143,267 [27.2 TB]
Sun Jul 19 11:00:01 CDT 2020  53,226,597 [27.2 TB]
Sun Jul 19 12:00:01 CDT 2020  53,302,735 [27.2 TB]
Sun Jul 19 13:00:02 CDT 2020  53,370,136 [27.3 TB]
Sun Jul 19 14:00:01 CDT 2020  53,497,045 [27.3 TB]
Sun Jul 19 15:00:01 CDT 2020  53,570,280 [27.4 TB]
Sun Jul 19 16:00:02 CDT 2020  53,660,287 [27.4 TB]
Sun Jul 19 17:00:01 CDT 2020  53,757,767 [27.5 TB]
Sun Jul 19 18:00:01 CDT 2020  53,843,113 [27.5 TB]
Sun Jul 19 19:00:01 CDT 2020  54,494,403 [27.9 TB]
Sun Jul 19 20:00:01 CDT 2020  54,591,716 [27.9 TB]
Sun Jul 19 21:00:01 CDT 2020  54,684,939 [27.9 TB]
Sun Jul 19 22:00:01 CDT 2020  54,769,497 [28.0 TB]
Sun Jul 19 23:00:01 CDT 2020  54,881,700 [28.0 TB]
Mon Jul 20 00:00:01 CDT 2020  54,962,156 [28.1 TB]
Mon Jul 20 01:00:01 CDT 2020  55,012,101 [28.1 TB]
Mon Jul 20 02:00:01 CDT 2020  55,114,507 [28.2 TB]
Mon Jul 20 03:00:01 CDT 2020  55,199,643 [28.2 TB]
Mon Jul 20 04:00:01 CDT 2020  55,285,523 [28.3 TB]
Mon Jul 20 05:00:01 CDT 2020  55,390,072 [28.3 TB]
Mon Jul 20 06:00:01 CDT 2020  55,492,177 [28.4 TB]
Mon Jul 20 07:00:01 CDT 2020  55,562,868 [28.4 TB]
Mon Jul 20 08:00:01 CDT 2020  55,641,502 [28.4 TB]
Mon Jul 20 09:00:01 CDT 2020  55,709,571 [28.5 TB]
Mon Jul 20 10:00:01 CDT 2020  55,778,340 [28.5 TB]
Mon Jul 20 11:00:01 CDT 2020  55,855,175 [28.5 TB]
Mon Jul 20 12:00:01 CDT 2020  55,937,448 [28.6 TB]
Mon Jul 20 13:00:01 CDT 2020  56,014,597 [28.6 TB]
Mon Jul 20 14:00:01 CDT 2020  56,092,328 [28.7 TB]
Mon Jul 20 15:00:01 CDT 2020  56,156,565 [28.7 TB]
Mon Jul 20 17:00:01 CDT 2020  56,273,142 [28.8 TB]
Mon Jul 20 18:00:01 CDT 2020  56,344,795 [28.8 TB]
Mon Jul 20 19:00:01 CDT 2020  56,364,160 [28.8 TB]
Mon Jul 20 20:00:01 CDT 2020  56,407,275 [28.8 TB]
Mon Jul 20 21:00:01 CDT 2020  56,447,405 [28.9 TB]
Mon Jul 20 22:00:01 CDT 2020  56,471,394 [28.9 TB]
Mon Jul 20 23:00:02 CDT 2020  56,544,547 [28.9 TB]
Tue Jul 21 00:00:01 CDT 2020  56,558,841 [28.9 TB]
Tue Jul 21 01:00:01 CDT 2020  56,572,818 [28.9 TB]
Tue Jul 21 02:00:01 CDT 2020  56,588,893 [28.9 TB]
Tue Jul 21 03:00:01 CDT 2020  56,619,137 [28.9 TB]
Tue Jul 21 04:00:01 CDT 2020  56,649,114 [29.0 TB]
Tue Jul 21 05:00:01 CDT 2020  56,694,088 [29.0 TB]
Tue Jul 21 06:00:01 CDT 2020  56,734,883 [29.0 TB]
Tue Jul 21 07:00:01 CDT 2020  56,740,772 [29.0 TB]
Tue Jul 21 08:00:01 CDT 2020  56,764,329 [29.0 TB]
Tue Jul 21 09:00:01 CDT 2020  56,791,261 [29.0 TB]
Tue Jul 21 10:00:01 CDT 2020  57,390,492 [29.3 TB]
Tue Jul 21 11:00:02 CDT 2020  57,481,471 [29.4 TB]
Tue Jul 21 12:00:01 CDT 2020  57,522,137 [29.4 TB]
Tue Jul 21 14:00:01 CDT 2020  58,216,955 [29.8 TB]
Tue Jul 21 15:00:01 CDT 2020  58,222,173 [29.8 TB]
Tue Jul 21 16:00:01 CDT 2020  58,235,354 [29.8 TB]
Tue Jul 21 17:00:01 CDT 2020  58,270,523 [29.8 TB]
Tue Jul 21 18:00:01 CDT 2020  58,300,798 [29.8 TB]
Tue Jul 21 19:00:01 CDT 2020  58,346,858 [29.8 TB]
Tue Jul 21 20:00:01 CDT 2020  58,382,861 [29.8 TB]
Tue Jul 21 21:00:01 CDT 2020  58,403,922 [29.9 TB]
Tue Jul 21 22:00:01 CDT 2020  58,420,439 [29.9 TB]
Tue Jul 21 23:00:01 CDT 2020  58,493,227 [29.9 TB]
Wed Jul 22 00:00:02 CDT 2020  58,494,926 [29.9 TB]
Wed Jul 22 01:00:01 CDT 2020  58,529,097 [29.9 TB]
Wed Jul 22 02:00:01 CDT 2020  58,556,746 [29.9 TB]
Wed Jul 22 03:00:01 CDT 2020  58,574,415 [29.9 TB]
Wed Jul 22 04:00:01 CDT 2020  58,605,297 [30.0 TB]
Wed Jul 22 05:00:01 CDT 2020  58,632,079 [30.0 TB]
Wed Jul 22 06:00:01 CDT 2020  58,655,069 [30.0 TB]
Wed Jul 22 07:00:01 CDT 2020  58,672,137 [30.0 TB]
Wed Jul 22 08:00:01 CDT 2020  58,689,196 [30.0 TB]
Wed Jul 22 09:00:01 CDT 2020  58,712,601 [30.0 TB]
Wed Jul 22 10:00:01 CDT 2020  58,731,743 [30.0 TB]
```

-

1 hour ago, boomam said:

ok.

Another 20-minute test:

Before any change: 1,600 Mb in 20 min.

After the "sed" change: 1,500 Mb in 20 min.

After the "/mnt/cache" change: 300 Mb in 20 min

(mount -o remount -o space_cache=v2 /mnt/cache)

That's the difference between 115 Gb/day (3.34 Tb/month) and 21 Gb/day (648 Gb/month).

That's a major improvement! Still not perfect, but noticeably better than it was.

I'll see if I can find a way to script that to run on array start each time until 6.9 rolls around.

Thanks for the input!

Either put it in your go file (in the config folder on your flash drive), or add it to the User Scripts plugin.
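A minimal sketch of such a script, assuming the cache pool mounts at /mnt/cache. It waits for the pool to be mounted and then runs the same remount command quoted above. The wait loop, the 60-second default timeout, and the function name are assumptions. With the User Scripts plugin it could be scheduled to run at array start; the go file is processed during boot, likely before the array is started, so there it would need to be defined and then called in the background.

```bash
#!/bin/bash
# Sketch: re-apply space_cache=v2 to the btrfs cache pool once it is mounted.
# Assumptions: mount point /mnt/cache, 60 s default wait, hypothetical name.

# Usage: remount_space_cache_v2 [mount point] [max seconds to wait]
remount_space_cache_v2() {
  local mnt=${1:-/mnt/cache} tries=${2:-60}
  # Poll once per second until <mnt> is an active mount point.
  until mountpoint -q "$mnt"; do
    if (( tries-- <= 0 )); then
      echo "$mnt is not mounted; skipping remount" >&2
      return 1
    fi
    sleep 1
  done
  mount -o remount -o space_cache=v2 "$mnt"
}

# Example (User Scripts, at array start):   remount_space_cache_v2
# Example (go file, so boot is not blocked): remount_space_cache_v2 &
```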

-

15 minutes ago, limetech said:

Also could use 'space_cache=v2'.

Upcoming -beta23 has these changes to address this issue:

- set 'noatime' option when mounting loopback file systems

- include 'space_cache=v2' option when mounting btrfs file systems

- default partition 1 start sector aligned on 1MiB boundary for non-rotational storage. This will require wiping the partition structure on existing SSD devices first to make use of it.

I have another 2TB NVMe installed, so I can easily back up, wipe, and restore the cache pool.
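To confirm those changes after upgrading and restoring the pool, a minimal sketch: read the live mount options with findmnt and the partition start sector from sysfs, where 1 MiB equals 2,048 sectors of 512 bytes. The device names (nvme0n1, nvme0n1p1), the /var/lib/docker mount point for docker.img, and the function names are assumptions about a typical setup.

```bash
#!/bin/bash
# Sketch: verify the beta23 mount/partition changes on a live system.
# Assumptions: device and mount names in the examples; hypothetical helper names.

# Succeeds if the filesystem mounted at <target> lists mount option <opt>.
has_mount_opt() {
  local target=$1 opt=$2
  findmnt -no OPTIONS "$target" | tr ',' '\n' | grep -qx -- "$opt"
}

# Start sector of a partition as sysfs reports it (always 512-byte units).
part_start() {
  local disk=$1 part=$2
  cat "/sys/block/$disk/$part/start"
}

# Succeeds if a start sector sits on a 1 MiB boundary (1 MiB / 512 B = 2048).
is_1mib_aligned() {
  (( $1 % 2048 == 0 ))
}

# Examples:
#   has_mount_opt /mnt/cache space_cache=v2 && echo "free space tree (v2) in use"
#   has_mount_opt /var/lib/docker noatime && echo "docker.img mounted noatime"
#   is_1mib_aligned "$(part_start nvme0n1 nvme0n1p1)" && echo "partition 1 aligned"
```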

-

1 minute ago, johnnie.black said:

So that's still a good result, was close to 1TB a day before, correct?

Correct. Certainly better.

-

Looks like about 400 GB was written yesterday, even though nothing was written except normal Docker appdata activity.

22,685,135 [11.6 TB]

22,687,899 [11.6 TB]

-

Looks like that worked. I will check it again tomorrow. I would expect to see it over 12TB tomorrow.

Cache1:

22,040,574 [11.2 TB]

Cache2:

22,039,620 [11.2 TB]

-

I suppose I can run that command and see where it sits 24 hours from now.

-

How would I see what is being written?

Eleven days ago, I replaced my cache with 2 x 1TB NVMe drives in a BTRFS RAID1. Since then, more than 1 TB per day has been written to the drives. That seems excessive, since I am only using them for Docker, appdata, and a couple of shares (which have had only ~400 GB written to them in that time).
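One way to answer that at the device level is to sample the kernel's per-device counters in /proc/diskstats, where column 3 is the device name and column 10 is sectors written (always 512-byte units). A minimal sketch with hypothetical function names: loop devices, such as the one backing docker.img, show up as well, which can hint at whether Docker is the writer. It does not attribute writes to processes; a per-process tool such as iotop, if installed, covers that.

```bash
#!/bin/bash
# Sketch: measure how much each block device writes over an interval.
# /proc/diskstats: column 3 = device name, column 10 = sectors written (512 B).

# Snapshot: one "<device> <sectors written>" line per device.
sectors_written() {
  awk '{ print $3, $10 }' /proc/diskstats
}

# Compare two snapshot files and print MB written for each device that changed.
diff_sectors() {
  awk 'NR == FNR { start[$1] = $2 + 0; next }
       ($1 in start) && $2 + 0 > start[$1] {
         printf "%s %.1f MB\n", $1, ($2 - start[$1]) * 512 / 1e6
       }' "$1" "$2"
}

# Take two snapshots <secs> apart (default 60) and report the difference.
write_rate() {
  local secs=${1:-60} before
  before=$(sectors_written)
  sleep "$secs"
  diff_sectors <(printf '%s\n' "$before") <(sectors_written)
}

# Example: write_rate 300   # five-minute sample; look at nvme0n1, nvme1n1, loop*
```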

Cache 1:

```
smartctl 7.1 2019-12-30 r5022 [x86_64-linux-4.19.107-Unraid] (local build)
Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       Samsung SSD 970 PRO 1TB
Serial Number:
Firmware Version:                   1B2QEXP7
PCI Vendor/Subsystem ID:            0x144d
IEEE OUI Identifier:                0x002538
Total NVM Capacity:                 1,024,209,543,168 [1.02 TB]
Unallocated NVM Capacity:           0
Controller ID:                      4
Number of Namespaces:               1
Namespace 1 Size/Capacity:          1,024,209,543,168 [1.02 TB]
Namespace 1 Utilization:            719,635,980,288 [719 GB]
Namespace 1 Formatted LBA Size:     512
Namespace 1 IEEE EUI-64:            002538 540150134f
Local Time is:                      Thu Jun 25 11:50:58 2020 CDT
Firmware Updates (0x16):            3 Slots, no Reset required
Optional Admin Commands (0x0037):   Security Format Frmw_DL Self_Test Directvs
Optional NVM Commands (0x005f):     Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp
Maximum Data Transfer Size:         512 Pages
Warning Comp. Temp. Threshold:      81 Celsius
Critical Comp. Temp. Threshold:     81 Celsius

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     6.20W       -        -    0  0  0  0        0       0
 1 +     4.30W       -        -    1  1  1  1        0       0
 2 +     2.10W       -        -    2  2  2  2        0       0
 3 -   0.0400W       -        -    3  3  3  3      210    1200
 4 -   0.0050W       -        -    4  4  4  4     2000    8000

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        47 Celsius
Available Spare:                    100%
Available Spare Threshold:          10%
Percentage Used:                    0%
Data Units Read:                    2,930,203 [1.50 TB]
Data Units Written:                 21,981,884 [11.2 TB]
Host Read Commands:                 25,097,587
Host Write Commands:                411,986,950
Controller Busy Time:               4,473
Power Cycles:                       14
Power On Hours:                     281
Unsafe Shutdowns:                   6
Media and Data Integrity Errors:    0
Error Information Log Entries:      0
Warning Comp. Temperature Time:     0
Critical Comp. Temperature Time:    0
Temperature Sensor 1:               47 Celsius
Temperature Sensor 2:               57 Celsius

Error Information (NVMe Log 0x01, max 64 entries)
No Errors Logged
```

Cache 2:

```
smartctl 7.1 2019-12-30 r5022 [x86_64-linux-4.19.107-Unraid] (local build)
Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       Samsung SSD 970 PRO 1TB
Serial Number:
Firmware Version:                   1B2QEXP7
PCI Vendor/Subsystem ID:            0x144d
IEEE OUI Identifier:                0x002538
Total NVM Capacity:                 1,024,209,543,168 [1.02 TB]
Unallocated NVM Capacity:           0
Controller ID:                      4
Number of Namespaces:               1
Namespace 1 Size/Capacity:          1,024,209,543,168 [1.02 TB]
Namespace 1 Utilization:            719,635,988,480 [719 GB]
Namespace 1 Formatted LBA Size:     512
Namespace 1 IEEE EUI-64:            002538 510150a811
Local Time is:                      Thu Jun 25 11:53:44 2020 CDT
Firmware Updates (0x16):            3 Slots, no Reset required
Optional Admin Commands (0x0037):   Security Format Frmw_DL Self_Test Directvs
Optional NVM Commands (0x005f):     Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp
Maximum Data Transfer Size:         512 Pages
Warning Comp. Temp. Threshold:      81 Celsius
Critical Comp. Temp. Threshold:     81 Celsius

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     6.20W       -        -    0  0  0  0        0       0
 1 +     4.30W       -        -    1  1  1  1        0       0
 2 +     2.10W       -        -    2  2  2  2        0       0
 3 -   0.0400W       -        -    3  3  3  3      210    1200
 4 -   0.0050W       -        -    4  4  4  4     2000    8000

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        45 Celsius
Available Spare:                    100%
Available Spare Threshold:          10%
Percentage Used:                    0%
Data Units Read:                    4,319,316 [2.21 TB]
Data Units Written:                 21,981,076 [11.2 TB]
Host Read Commands:                 38,573,640
Host Write Commands:                412,195,982
Controller Busy Time:               4,469
Power Cycles:                       27
Power On Hours:                     278
Unsafe Shutdowns:                   13
Media and Data Integrity Errors:    0
Error Information Log Entries:      5
Warning Comp. Temperature Time:     0
Critical Comp. Temperature Time:    0
Temperature Sensor 1:               45 Celsius
Temperature Sensor 2:               47 Celsius

Error Information (NVMe Log 0x01, max 64 entries)
No Errors Logged
```

-

22 minutes ago, ptr727 said:

Looks to me like a disk IO problem in Unraid, not a Samba problem.

https://blog.insanegenius.com/2020/01/18/unraid-vs-ubuntu-smb-performance/

Definitely disk I/O, as my parity checks run at almost half the speed they did under 6.6.

-

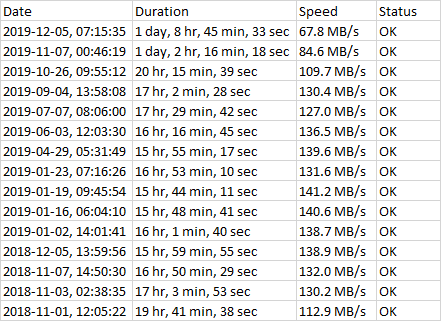

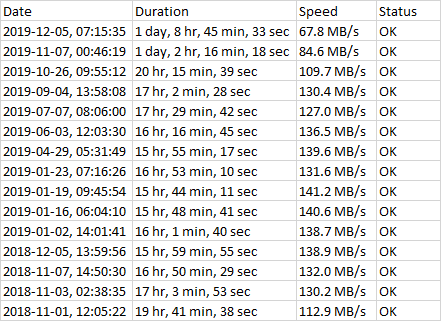

Unfortunately, I don't have a record of which Unraid version each check ran on; that would be nice to have in the Parity Check History. But I usually update fairly shortly after a release comes out, with the exception of 6.7, which took me a while, IIRC. I was using "tuned" settings but reverted to the defaults with 6.8.

For a couple of years, I have had the same size array: 2 parity + 13 data drives, all HGST 8TB HDN728080ALE604.

-

4 minutes ago, scubieman said:

I wish i could get RC3

https://s3.amazonaws.com/dnld.lime-technology.com/next/unRAIDServer-6.8.0-rc3-x86_64.zip

-

21 minutes ago, Kaveh said:

Has anyone tried the 6.7.3 release candidates? It looks like a new kernel and md/unraid version in rc3.

It’s certainly not fixed in rc1 or rc2.

-

8 minutes ago, bonienl said:

Of course I wasn't talking about concurrent drives access...

(disclosure: I am testing on a newer kernel)

So, I'm guessing a new kernel fixes this problem?

I see similar speeds on 6.6.x, but not on any version of 6.7.x.

-

6 minutes ago, Marshalleq said:

And that's not the latest RC - there's been RC2 out for a while now.

But the only change from rc1 to rc2 was the Docker version, which was specifically targeted at users with SQLite corruption.

-

9 minutes ago, craigr said:

Does anyone know if the new beta with the new Linux kernel solves this?

Thanks,

craigr

It does not.

-

I, too, reverted to 6.6.7 last night. All is working as expected again.

-

Unraid OS version 6.9.0-rc2 available (in Prereleases)

Posted · Edited by StevenD

I finally got a chance to test this.

I have a VM on ESXi 7.0u1. It boots directly from USB and is running v6.9.0-rc2. It boots just fine with or without hypervisor.cpuid.v0 = FALSE.

I am not passing through any hardware on this test VM.

Also, this VM is NOT running the "Disable Mitigation Settings" plugin.
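To confirm which case a given boot is actually in, the guest can check whether the CPU advertises the "hypervisor" CPUID flag in /proc/cpuinfo; with hypervisor.cpuid.v0 = FALSE, ESXi is expected to hide that bit. A minimal sketch; the function name is hypothetical.

```bash
#!/bin/bash
# Sketch: report whether the guest CPU advertises the "hypervisor" CPUID flag.
# Assumption: with hypervisor.cpuid.v0 = FALSE in the VM's configuration,
# ESXi hides this bit, so the flag should be missing from the flags line.

# Succeeds if a "flags" line in the given cpuinfo file lists "hypervisor".
hypervisor_flag_visible() {
  awk '/^flags/ { for (i = 3; i <= NF; i++) if ($i == "hypervisor") found = 1 }
       END { exit !found }' "${1:-/proc/cpuinfo}"
}

# Example:
#   if hypervisor_flag_visible; then
#     echo "hypervisor bit visible (hypervisor.cpuid.v0 not set to FALSE)"
#   else
#     echo "hypervisor bit hidden"
#   fi
```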