Report Comments posted by Ryonez

-

Is there any feedback on this? Has it been looked into?

-

Reporting in: this is still a severe issue for me.

-

Issue is present in version 6.12.0 as well.

It is getting very tedious to baby docker along... I can't even do a backup because it'll trigger this.

Here's the latest diagnostics.

-

So, for some behavioural updates.

Noticed my RAM usage was at 68%, not the 60% it normally rests at. The server isn't really doing anything, so I figured it was from the two new containers, diskover and elasticsearch, mostly from elasticsearch. So I shut that stack down.

That caused docker to spike the CPU and RAM a bit.

The containers turned off in about 3ish seconds, and RAM usage dropped to 60%. Then, docker started doing its thing:

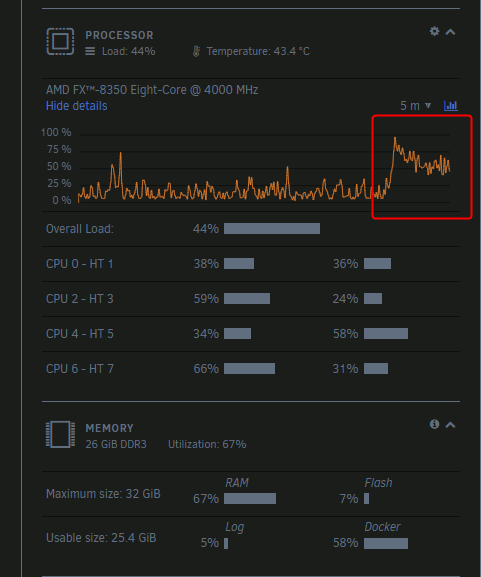

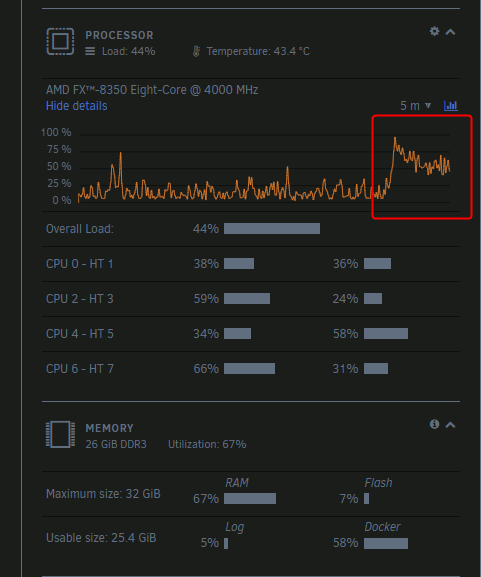

RAM usage started spiking up to 67% in this image, and CPU usage is spiking as well.
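Spikes like this are easier to compare across docker operations with timestamped samples than with dashboard screenshots. Below is a minimal sketch, assuming a Linux host where `/proc/meminfo` is readable and assuming "used" means `MemTotal` minus `MemAvailable` (the dashboard may calculate its percentage differently). The function names are illustrative, not part of any Unraid or docker tooling.

```python
import time


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (mostly kB)."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[key.strip()] = int(parts[0])
    return values


def used_percent(info):
    """Used RAM in percent. Assumption: used = MemTotal - MemAvailable."""
    total = info["MemTotal"]
    available = info["MemAvailable"]
    return 100.0 * (total - available) / total


def sample(path="/proc/meminfo"):
    """Read the current used-RAM percentage from the given meminfo file."""
    with open(path) as fh:
        return used_percent(parse_meminfo(fh.read()))


def watch(seconds=60, interval=1.0):
    """Print one timestamped reading per interval for the given duration."""
    end = time.monotonic() + seconds
    while time.monotonic() < end:
        print(f"{time.strftime('%H:%M:%S')} used={sample():.1f}%")
        time.sleep(interval)
```

Calling `watch()` just before stopping a stack would log the drop and any spike that follows, so the readings can be attached to the report alongside the diagnostics.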

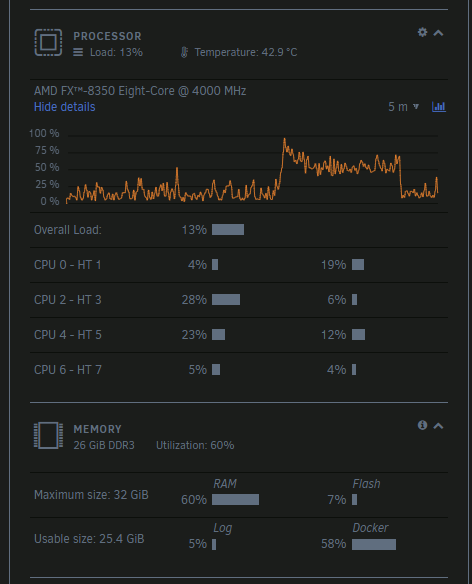

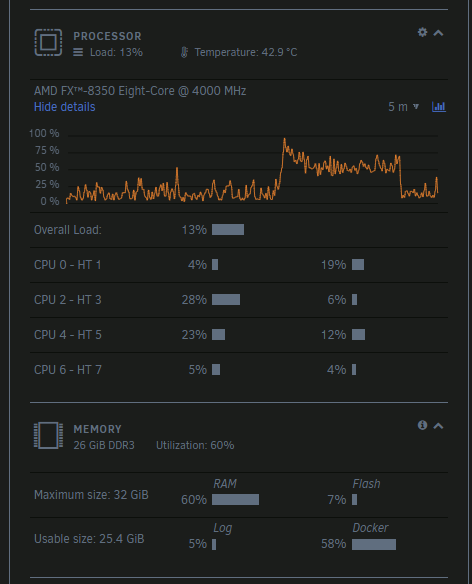

After docker settles with whatever it's doing:

This is from just two containers being turned off. This gets worse the more docker operations you perform.

-

5 minutes ago, Vr2Io said:

Diskover ( indexing ) may use up all memory suddenly. Once OOM happen, system crash also expected. I run all docker in /tmp ( RAM ), even CCTV's recording, as memory usage really steady so haven't trouble.

Diskover just happened to be what I was trying out yesterday when the symptoms occurred. It has not been present during the earlier times the symptoms occurred. After rebuilding docker yesterday I got diskover working and had it index the array, with minimal memory usage (I don't think I saw diskover go above 50 MB).

8 minutes ago, Vr2Io said:

Try below method to identify which folder will use up memory and best map out to a SSD.

I'm not really sure how this is meant to help with finding folders using memory. It's actually suggesting moving log folders to RAM, which would increase the RAM usage. If the logs were causing a blowout of data usage, that usage would go to the cache/docker location, not memory.
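If disk usage under the docker location were the suspect, it could be checked directly rather than by remapping folders. A rough sketch, assuming the docker data lives in a plain directory that can be walked; the base path is whatever your setup uses and is not filled in here. It sums apparent file sizes, so hard links or shared layers may be counted more than once.

```python
import os


def dir_size(path):
    """Total apparent size in bytes of regular files under path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            try:
                # Skip symlinks so link targets are not counted.
                if not os.path.islink(full):
                    total += os.lstat(full).st_size
            except OSError:
                pass  # file vanished or is unreadable; ignore it
    return total


def largest_subdirs(base, top=10):
    """Return (size_bytes, name) for immediate subdirectories, largest first."""
    sizes = []
    with os.scandir(base) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                sizes.append((dir_size(entry.path), entry.name))
    sizes.sort(reverse=True)
    return sizes[:top]
```

This only reports disk usage. It says nothing about which process is holding RAM, which is the actual symptom here.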

It might be good to note I use docker in the directory configuration, not the .img, so I am able to access those folders if needed.

-

Just a heads up on my progress from this morning.

My containers are back up and didn't pass 50% of my RAM during this. So I'm currently up and running until docker craps itself again.

-

13 minutes ago, Squid said:

I don't believe this to be an issue with the docker containers' memory usage.

For example, the three images I was working on today:

- scr.io/linuxserver/diskover

- docker.elastic.co/elasticsearch/elasticsearch:7.10.2

- alpine (elasticsearch-helper)

diskover wasn't able to connect to elasticsearch, elasticsearch was crashing due to perm issues I was looking at, and elasticsearch-helper runs a line of code and shuts down.

I don't see any of these using 10+ GB of RAM in a non-functional state. The system, when running steadily, uses 60% of 25 GB of RAM. And this wasn't an issue with the set of containers I was using until the start of this month.
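To spell out where the 10+ GB figure comes from, here is the arithmetic as a small sketch, using only the numbers quoted above (25 GB total, 60% steady-state usage). The variable and function names are illustrative.

```python
TOTAL_GB = 25            # total RAM quoted in the post
STEADY_FRACTION = 0.60   # steady-state usage quoted in the post


def headroom_gb(total_gb, used_fraction):
    """RAM left over, in GB, once the steady-state load is accounted for."""
    return total_gb * (1.0 - used_fraction)


steady_gb = TOTAL_GB * STEADY_FRACTION
free_gb = headroom_gb(TOTAL_GB, STEADY_FRACTION)

print(f"steady-state usage: {steady_gb:.1f} GB")
print(f"headroom before exhaustion: {free_gb:.1f} GB")
```

Steady-state usage works out to 15 GB, leaving about 10 GB of headroom, so the three non-functional containers would have to consume roughly that much between them to exhaust memory on their own.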

I believe this to be an issue in docker itself currently.

-

1 hour ago, trurl said:

Just changing the SSH port is not a way to secure this. Bots will just find whatever other port you configure. You need a VPN or proxy

Also, Diagnostics REQUIRED for bug reports.

Changing the port was not done to secure it. It was done so a docker container could use it.

Only specific ports are exposed, wireguard is used to connect when out of network for management, as management ports are not meant to be exposed.

The issue is unRaid starts it with default configs, creating a window of attack until it restarts services with the correct configs. This is not expected behaviour.

Diagnostics have been added to the main post.

[6.11.5 - 6.12.0] Docker is Maxing out CPU and Memory, Triggering `oom-killer`

in Stable Releases

Another check in, still a huge issue for me.