Connor Moloney

Posts: 48


Posts posted by Connor Moloney

-

On my Unraid server I have started receiving errors on one of my WD drives, but there are no reallocated sectors as of yet. I have only had the drive for about 9 months and it is already showing 'old-age' and 'pre-fail' in the SMART reports run on the server. How should I proceed? Should I request an RMA while it is still under warranty, or leave it until it starts reporting sector errors? I can provide the latest SMART reports if necessary.
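For context on those labels: in the attribute table printed by `smartctl -A`, 'Pre-fail' and 'Old_age' appear in the TYPE column and describe what kind of attribute each row is, not the drive's current condition. A problem shows up in the WHEN_FAILED column or in rising raw values of attributes such as reallocated and pending sectors. A minimal sketch, assuming that text format and a hypothetical watch list, that pulls out the watched attributes whose raw value is non-zero. The sample table is made up, not taken from this drive.

```python
"""Pick out the SMART attributes most often used to judge a failing disk."""

# Hypothetical watch list for illustration; not an official or exhaustive set.
WATCHED = {
    "Reallocated_Sector_Ct",
    "Current_Pending_Sector",
    "Offline_Uncorrectable",
    "UDMA_CRC_Error_Count",
}


def parse_smart_attributes(text: str) -> dict[str, dict[str, str]]:
    """Parse the attribute table printed by `smartctl -A /dev/sdX`.

    Returns {attribute_name: {"type": ..., "raw": ...}} for each table row.
    """
    attrs: dict[str, dict[str, str]] = {}
    in_table = False
    for line in text.splitlines():
        if line.startswith("ID#"):
            in_table = True  # header row; data rows follow
            continue
        fields = line.split()
        if not in_table or len(fields) < 10 or not fields[0].isdigit():
            continue  # text before the table, blank lines, trailing notes
        # Columns: ID NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE...
        attrs[fields[1]] = {"type": fields[6], "raw": " ".join(fields[9:])}
    return attrs


def nonzero_watched(attrs: dict[str, dict[str, str]]) -> dict[str, str]:
    """Return watched attributes whose first raw-value token is not "0"."""
    result = {}
    for name in attrs.keys() & WATCHED:
        raw = attrs[name]["raw"]
        if raw.split()[0] != "0":
            result[name] = raw
    return result


# Illustrative sample in smartctl's format (made-up values).
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   200   200   140    Pre-fail  Always       -       0
  9 Power_On_Hours          0x0032   092   092   000    Old_age   Always       -       6543
197 Current_Pending_Sector  0x0032   200   200   000    Old_age   Always       -       3
199 UDMA_CRC_Error_Count    0x0032   200   200   000    Old_age   Always       -       0
"""

if __name__ == "__main__":
    print(nonzero_watched(parse_smart_attributes(SAMPLE)))
```

Feeding it the output of `smartctl -A /dev/sdX` (device name hypothetical) gives a quick view of whether reallocated or pending sectors have started to appear, independent of the Pre-fail/Old_age labels.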

-

5 minutes ago, CHBMB said:

Anyways, has it fixed your problem?

Yes, thank you so much for your help.

-

2 minutes ago, CHBMB said:

I'm not sure you could accomplish that with a click...

Generally takes the command line to do something like that. You always used our container? Or migrated from somebody else's version?

Yeah, I have always used your Sonarr version, and I have recently moved to your Plex Media Server and Tautulli builds as well. I was in the process of configuring them exactly as I had them previously when I noticed that hundreds of my films had disappeared, so I'm using Radarr to get them back and will then finish configuring the server.


-

3 minutes ago, CHBMB said:

I dunno, but nowhere in our container is www-data even referenced as a user, so something has changed it.

How strange. I must have clicked something by accident.

-

10 minutes ago, CHBMB said:

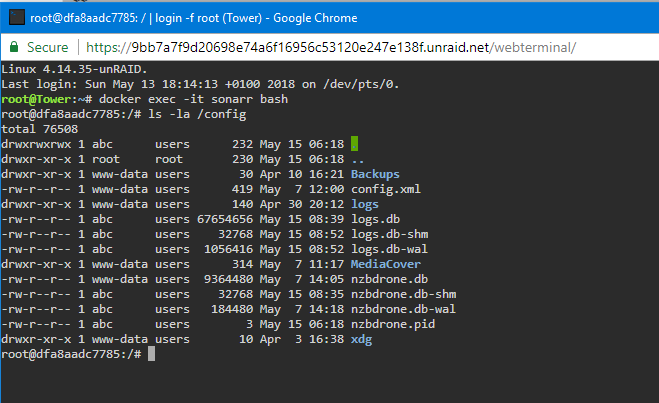

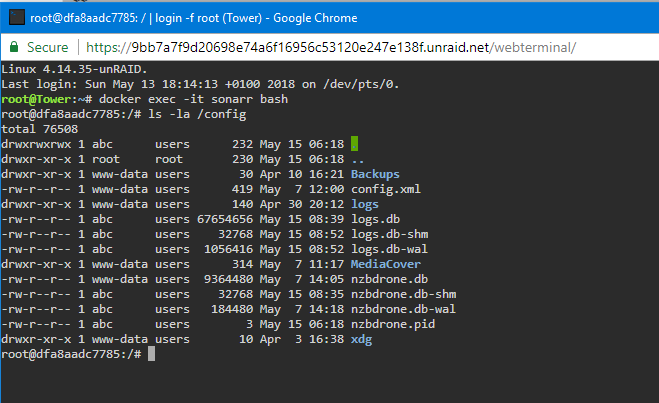

I dunno what you've done, but you've changed permissions on some pretty important files there.

Run this, then restart the container:

chown -R abc:users /config

You need to work out what the hell changed those perms to www-data and make sure it doesn't happen again.

Okay, so I ran this, restarted the container, and then re-ran the commands you gave me earlier. This is the output now:

Is this normal?
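To see which files ended up with the wrong owner before and after running the `chown` above, a minimal sketch that walks a folder and lists everything not owned by an expected numeric UID and GID. It assumes that `abc:users` inside the container corresponds to the PUID/PGID values (99 and 100) in the docker run command posted further down this thread; that mapping is an assumption, so check it against your own container settings.

```python
"""List paths under a folder whose owner differs from an expected UID/GID."""

import os


def find_wrong_owner(root: str, uid: int, gid: int) -> list[str]:
    """Return sorted paths under `root` (including `root`) not owned by uid:gid.

    os.lstat is used so symlinks are checked themselves rather than followed,
    and os.walk does not descend into symlinked directories by default.
    """
    paths = [root]
    for dirpath, dirnames, filenames in os.walk(root):
        paths.extend(os.path.join(dirpath, name) for name in dirnames + filenames)
    mismatched = []
    for path in paths:
        try:
            st = os.lstat(path)
        except FileNotFoundError:
            continue  # removed while the walk was in progress
        if st.st_uid != uid or st.st_gid != gid:
            mismatched.append(path)
    return sorted(mismatched)
```

For example, calling `find_wrong_owner("/mnt/cache/appdata/sonarr", 99, 100)` as root on the Unraid host (the appdata path is the one mapped to /config in that docker run command) would list any files still owned by something else, such as www-data.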

-

4 minutes ago, CHBMB said:

I'm gonna guess you've followed some tutorial on a webserver blindly as www-data is commonly used in that context. Either that or migrated data from somewhere else.

I don't think I have done either. The only thing I have done recently that could remotely be connected to this is setting up DNS for my OpenVPN, and that's about it. So how do you think it happened?

-

7 minutes ago, CHBMB said:

Have you run "New Perms" or changed permissions on your Unraid box at all? Just a hunch.

Try running these two commands and posting the output of the second one.

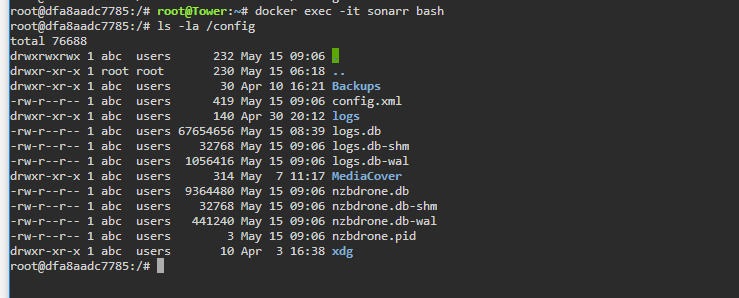

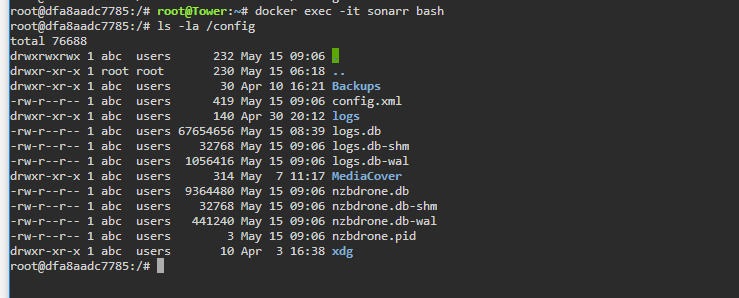

docker exec -it sonarr bash

ls -la /config

I ran that and got this output:

-

6 minutes ago, CHBMB said:

My gut feeling, looking at those logs, is some sort of database corruption tbh. SQLite is read-only, which is a problem.

You may want to grab some debug logs and post over at the Sonarr forums, I'm not as convinced as @saarg that the remote mount is the issue, although it's a possibility. Be interesting to see @hayleraid's logs etc because until we see them, to assume it's the same problem is a little too speculative for my liking.

I just tried switching Sonarr's log level to debug and it said "failed to save general settings".

I think you are right about the database corruption, as everything in the log table is about the database and errors. How can I fix that?
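Since the log's "attempt to write a readonly database" and the suspected corruption point in different directions (file permissions versus a damaged file), a minimal sketch that checks both: whether the current user can write to the database file and its folder, and what SQLite's `PRAGMA integrity_check` reports. The database location is an assumption: Sonarr v2 is expected to keep its database in /config (for example as nzbdrone.db), which this thread's docker run command maps to /mnt/cache/appdata/sonarr on the host; confirm the actual file name before relying on it.

```python
"""Check whether an SQLite database file is writable and passes an integrity check."""

import os
import sqlite3
from pathlib import Path


def check_database(path: str) -> dict[str, object]:
    """Report write access for the file and its folder, plus PRAGMA integrity_check.

    os.access answers for the user running this script, so run it as the same
    user the container uses. SQLite creates its journal (or WAL) files next to
    the database, so the folder normally has to be writable as well.
    """
    db = Path(path).resolve()
    report: dict[str, object] = {
        "file_writable": os.access(db, os.W_OK),
        "dir_writable": os.access(db.parent, os.W_OK),
    }
    # Open read-only through a URI so the check never modifies the database.
    conn = sqlite3.connect(db.as_uri() + "?mode=ro", uri=True)
    try:
        rows = conn.execute("PRAGMA integrity_check").fetchall()
    finally:
        conn.close()
    report["integrity"] = [row[0] for row in rows]  # ["ok"] when no problems are found
    return report
```

With the container stopped, calling `check_database("/mnt/cache/appdata/sonarr/nzbdrone.db")` (file name assumed) as the container's user would show `file_writable` or `dir_writable` as False if permissions are the problem, and something other than `["ok"]` under `integrity` if the file itself is damaged.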

-

Okay, so I tried to reconfigure Sonarr to work with Deluge (the container I used to use for downloads). I set it all up correctly and clicked Test; it worked, and the notification in the corner popped up saying it had succeeded. So I pressed Save, waited for the spinning circle to disappear, then pressed the 'x' in the top right, and Deluge isn't there in the download clients. Like I said earlier, it's almost as if Sonarr just isn't responding. I have tried restarting the container and turning Docker off and on, but I don't know what else to try.

-

2 minutes ago, saarg said:

Can you test downloading to Unraid, to rule out the SMB share being the issue?

Yeah, will test it now.

-

6 minutes ago, saarg said:

Have you tried to use unraid for completed storage?

When I first started using this container I had the download client on my Unraid server, so yes, I have done that in the past. Now I download using my desktop (over VPN), so the download location is on that computer.

-

7 hours ago, CHBMB said:

Docker run commands please, you're the only two reports of this that we've seen, nothing in Discord or Github about it.

Stopping container: sonarr

Successfully stopped container 'sonarr'

Removing container: sonarr

Successfully removed container 'sonarr'

Command:root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='sonarr' --net='bridge' -e TZ="Europe/London" -e HOST_OS="unRAID" -e 'PUID'='99' -e 'PGID'='100' -p '8989:8989/tcp' -v '/dev/rtc':'/dev/rtc':'ro' -v '/mnt/user/Media/TV Shows/':'/tv':'rw' -v '/mnt/disks/192.168.1.72_My_Pc_-_Spare_Storage/Movies/completed/':'/downloads':'rw,slave' -v '/mnt/cache/appdata/sonarr':'/config':'rw' 'linuxserver/sonarr'

dfa8aadc778577e64943c3ac6fc3a814925d814b2eda5fd9be4a90ed98e52abe

The command finished successfully!

-

I'm having a few issues with this container. It had been working for ages, then 7 days ago it stopped working. It's still running, but none of the automated tasks run: it doesn't send requests to look for new episodes, it doesn't recognise when new episodes are added to the array, and the log file hasn't been updated in 7 days. Any thoughts?

In the log shown in the Docker window, this is repeated God knows how many times:

[v2.0.0.5163] System.Data.SQLite.SQLiteException (0x80004005): attempt to write a readonly database

attempt to write a readonly database

at System.Data.SQLite.SQLite3.Reset (System.Data.SQLite.SQLiteStatement stmt) [0x00083] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at System.Data.SQLite.SQLite3.Step (System.Data.SQLite.SQLiteStatement stmt) [0x0003c] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at System.Data.SQLite.SQLiteDataReader.NextResult () [0x0016b] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at System.Data.SQLite.SQLiteDataReader..ctor (System.Data.SQLite.SQLiteCommand cmd, System.Data.CommandBehavior behave) [0x00090] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at (wrapper remoting-invoke-with-check) System.Data.SQLite.SQLiteDataReader..ctor(System.Data.SQLite.SQLiteCommand,System.Data.CommandBehavior)

at System.Data.SQLite.SQLiteCommand.ExecuteReader (System.Data.CommandBehavior behavior) [0x0000c] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at System.Data.SQLite.SQLiteCommand.ExecuteScalar (System.Data.CommandBehavior behavior) [0x00006] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at System.Data.SQLite.SQLiteCommand.ExecuteScalar () [0x00006] in <61a20cde294d4a3eb43b9d9f6284613b>:0

at Marr.Data.QGen.InsertQueryBuilder`1[T].Execute () [0x00046] in M:\BuildAgent\work\b69c1fe19bfc2c38\src\Marr.Data\QGen\InsertQueryBuilder.cs:140

at Marr.Data.DataMapper.Insert[T] (T entity) [0x0005d] in M:\BuildAgent\work\b69c1fe19bfc2c38\src\Marr.Data\DataMapper.cs:728

at NzbDrone.Core.Datastore.BasicRepository`1[TModel].Insert (TModel model) [0x0002d] in M:\BuildAgent\work\b69c1fe19bfc2c38\src\NzbDrone.Core\Datastore\BasicRepository.cs:111

at NzbDrone.Core.Messaging.Commands.CommandQueueManager.Push[TCommand] (TCommand command, NzbDrone.Core.Messaging.Commands.CommandPriority priority, NzbDrone.Core.Messaging.Commands.CommandTrigger trigger) [0x0013d] in M:\BuildAgent\work\b69c1fe19bfc2c38\src\NzbDrone.Core\Messaging\Commands\CommandQueueManager.cs:82

at (wrapper dynamic-method) System.Object.CallSite.Target(System.Runtime.CompilerServices.Closure,System.Runtime.CompilerServices.CallSite,NzbDrone.Core.Messaging.Commands.CommandQueueManager,object,NzbDrone.Core.Messaging.Commands.CommandPriority,NzbDrone.Core.Messaging.Commands.CommandTrigger)

at NzbDrone.Core.Messaging.Commands.CommandQueueManager.Push (System.String commandName, System.Nullable`1[T] lastExecutionTime, NzbDrone.Core.Messaging.Commands.CommandPriority priority, NzbDrone.Core.Messaging.Commands.CommandTrigger trigger) [0x000b7] in M:\BuildAgent\work\b69c1fe19bfc2c38\src\NzbDrone.Core\Messaging\Commands\CommandQueueManager.cs:95

at NzbDrone.Core.Jobs.Scheduler.ExecuteCommands () [0x00043] in M:\BuildAgent\work\b69c1fe19bfc2c38\src\NzbDrone.Core\Jobs\Scheduler.cs:42

at System.Threading.Tasks.Task.InnerInvoke () [0x0000f] in <71d8ad678db34313b7f718a414dfcb25>:0

at System.Threading.Tasks.Task.Execute () [0x00010] in <71d8ad678db34313b7f718a414dfcb25>:0

[Error] TaskExtensions: Task Error

But the log section in the actual container shows it hasn't been updated for 7 days?

-

13 hours ago, NGMK said:

Well, I built my server. The initial learning curve can be overwhelming, but I am so glad I decided to go this route rather than the Synology way. I was able to find cheap (169.99) WD Red NAS drives from Bestbuy, 8TB each. Question: I was initially planning on having 16GB of RAM, but one of my RAM sticks failed. The server is running fine now with 12GB of DDR3. At the moment I'm only running ruTorrent, Plex and PiHole, but I do plan to play around with a couple more dockers and possibly even a VM. Should I be looking at a 32GB RAM kit or not? RAM is expensive, but if I need it then it is what it is.

I haven't played around with VMs yet, but I guess it depends on what you want to run on it. If it's just a small one for browsing the internet, you won't need much RAM dedicated to it, but if you want to do anything more demanding on it you should probably put more in.

-


32 minutes ago, Tward2 said:

Thanks, that did it. I disabled the Plex docker and used mover before, but I didn't disable the Docker service. After I did that and pressed Mover, they are all spinning down now except the cache disk. What brand and size of SSD cache drive is recommended?

The cache drive won't spin down if you have your dockers installed on it, no matter what size or brand it is. That said, it seems the majority of users use Samsung 850 or 860 EVOs, as they are good for the price and very reliable. I would probably go for a 250GB one, but it depends on what you want to use it for.

-

On 5/4/2018 at 2:52 PM, Thiago Porto said:

Sure, I will!!!

Thanks

-

On 5/4/2018 at 4:17 PM, Tward2 said:

The heat is one of my main concerns; I think that was because the drives weren't spinning down. I installed a cache drive yesterday and now all of them are spinning down except the parity and drive 1. How do I figure out what is keeping them spun up?

Turn off all of your dockers to see whether the drives spin down or not, then start the dockers up again one at a time to see which one is writing to the array and keeping the drives spun up.
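The one-at-a-time approach can be checked from the console: on Linux, `hdparm -C /dev/sdX` prints each drive's power state (for example standby or active/idle). A minimal sketch, assuming that output format, that turns it into a device-to-state map so the spun-up drives can be compared after stopping all containers and again after starting each one. Device names and states in the sample are made up.

```python
"""Parse `hdparm -C` output to see which drives are currently spun up."""

import re

_STATE = re.compile(r"drive state is:\s*(\S+)")


def parse_hdparm_states(text: str) -> dict[str, str]:
    """Map each device named in `hdparm -C` output to its reported power state."""
    states: dict[str, str] = {}
    device = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("/dev/") and line.endswith(":"):
            device = line[:-1]  # e.g. "/dev/sdb:" introduces that drive's block
            continue
        match = _STATE.search(line)
        if match and device is not None:
            states[device] = match.group(1)
    return states


def spun_up(states: dict[str, str]) -> list[str]:
    """Return the devices reported as active/idle, i.e. not in standby."""
    return sorted(dev for dev, state in states.items() if state == "active/idle")


# Illustrative output in the format printed by `hdparm -C /dev/sdb /dev/sdc`
# (made-up states, not from the server in this thread).
SAMPLE = """
/dev/sdb:
 drive state is:  standby

/dev/sdc:
 drive state is:  active/idle
"""
```

Running the same check after each container is started makes it easy to spot the step at which a drive leaves standby.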

-

On 4/29/2018 at 2:29 PM, L0rdRaiden said:

Sonarr has been adding episodes to ruTorrent (they are added in stop mode, but I can live with that). When the download finishes, Sonarr says "episode missing from disk", but the files are there.

This was working in the past with other series, so now I don't understand why it doesn't work.

18-4-29 15:24:54.2|Info|SceneMappingService|Updating Scene mappings

18-4-29 15:24:55.3|Info|RefreshSeriesService|Updating Info for XXXXX

18-4-29 15:24:55.3|Info|RefreshEpisodeService|Starting episode info refresh for: [296762][XXXXX]

18-4-29 15:24:55.3|Info|RefreshEpisodeService|Finished episode refresh for series: [296762][XXXXX].

18-4-29 15:24:55.3|Info|DiskScanService|Scanning disk for XXXXX

18-4-29 15:24:55.3|Info|DiskScanService|Completed scanning disk for XXXXX

18-4-29 15:24:55.5|Info|MetadataService|Series folder does not exist, skipping metadata creation

XXXXX = hidden on purpose

I may be wrong, but I had similar issues when the files didn't have their extension in the file name (e.g. .mkv, or whatever the media type is).
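If missing extensions are the cause, one quick check on a series folder is to list every file whose name doesn't end in a video extension. A minimal sketch, assuming a hypothetical set of extensions; subtitle and .nfo files will show up too, so the output still needs a look by eye.

```python
"""Flag files whose name does not end in a recognised video extension."""

from pathlib import Path
from typing import Union

# Hypothetical extension list for illustration; extend it for your own library.
VIDEO_EXTENSIONS = {".mkv", ".mp4", ".avi", ".m4v", ".ts", ".wmv"}


def files_without_video_extension(folder: Union[str, Path]) -> list[Path]:
    """Return files under `folder` whose last suffix is not in VIDEO_EXTENSIONS.

    Path.suffix is the text after the final dot, so a release name with no
    extension, such as "Show.S01E02.720p", reports ".720p" and is flagged.
    """
    return sorted(
        p
        for p in Path(folder).rglob("*")
        if p.is_file() and p.suffix.lower() not in VIDEO_EXTENSIONS
    )
```

Pointing it at the completed-downloads folder that Sonarr imports from would show whether any episodes are arriving without an extension.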

-

On 5/5/2018 at 9:42 AM, bally12345 said:

Will be sticking with the WD Reds, but at £167.99 each I really wish I had another spare bay instead of taking out the perfectly functioning 4TB drive.


Keep hold of it; you may be able to find a use for it in a different build or something. You could also sell it on the forums here to make some money back towards the new drive.

-

On 5/5/2018 at 12:15 AM, John_M said:

Any differences there are mostly irrelevant for unRAID.

Yes, true. The IronWolf comes out on top by just a few MB/s, and if you really need the extra MB/s you would probably be looking at different drives anyway.

-

Please let me know whether it works, as I'm interested in a pair of these cards.

-


If you only have one network card hooked up and you configured pfSense to run through it, won't you need to disable that to allow the server to use that port again?

-

WD drive giving errors but not reallocated sectors. What should I do about it? (in General Support)

Okay, thank you. So what exactly is the problem?