Posts posted by casperse

-

Hi All

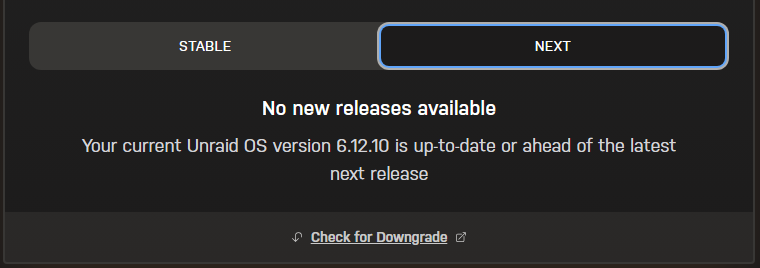

I finally have a backup/test Unraid server and would like to update it to the new 6.13.0-beta.1 preview.

But when I go to the Unraid Connect updater as instructed here:

https://unraid.net/blog/new-update-os-tool

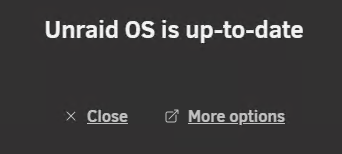

I just get this:

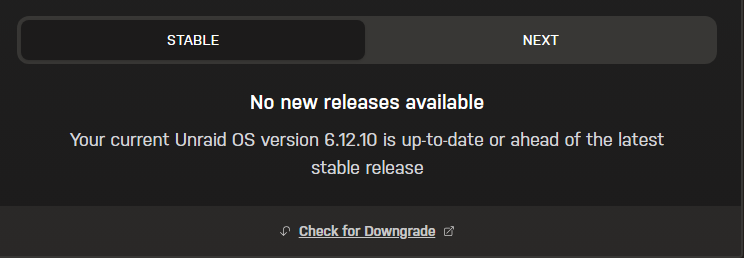

Going to Option I get this:

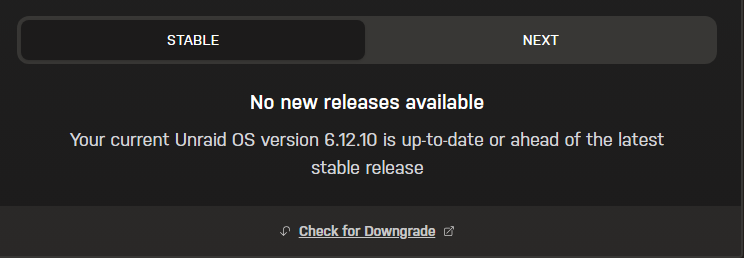

Pressing Next:

Please advise on how to test 6.13. (I would like to test Intel Arc support and need this release to do so 🙂)

Quote: Option 2 – Prereleases / Changing release branch

Many of our users like to run our release candidates (RCs), which are on our "next" branch of releases. This will be the method for you if you want to test these releases.

Go to Tools → Update OS or click Check for Update in the top-right dropdown, then choose More Options in the modal that appears.

Clicking one of these links will take you to account.unraid.net to look at new OS release options.

The new updater has an account sign-in requirement for downloading “next” releases. We made this change to ensure that users don’t accidentally swap into the next branch and that everyone running a prerelease has a forum account to provide feedback.

The new updater now supports multiple releases of a “mainline” branch. For example, we can potentially release a Stable 6.12.x along with a Stable 6.13.x release.

-

5 hours ago, Michael_P said:

The word is it's going to be in 6.13

Someone told me that there is a preview 6.13.0-beta.1 out and that it works 🙂

-

Just wondering: are we getting really close to native Arc support? (This thread was started in 2022, and we have ZFS now 😀) Reading all the posts, there are workarounds if you dare to build your own modified kernel, but I would really like to know whether we are close. I thought the new 14th-gen CPUs supported AV1 encoding, but it seems they only decode it. So native AV1 hardware encoding in Unraid is limited to Nvidia, yet every post here says Intel just does a better job? (And Nvidia is crazy expensive.)

I think that, like many others, we just dream about the cheap option: a 380 card…

Missed this post:

“Word on the street is it's in 6.13 whenever that gets released“

-

-

Hi All

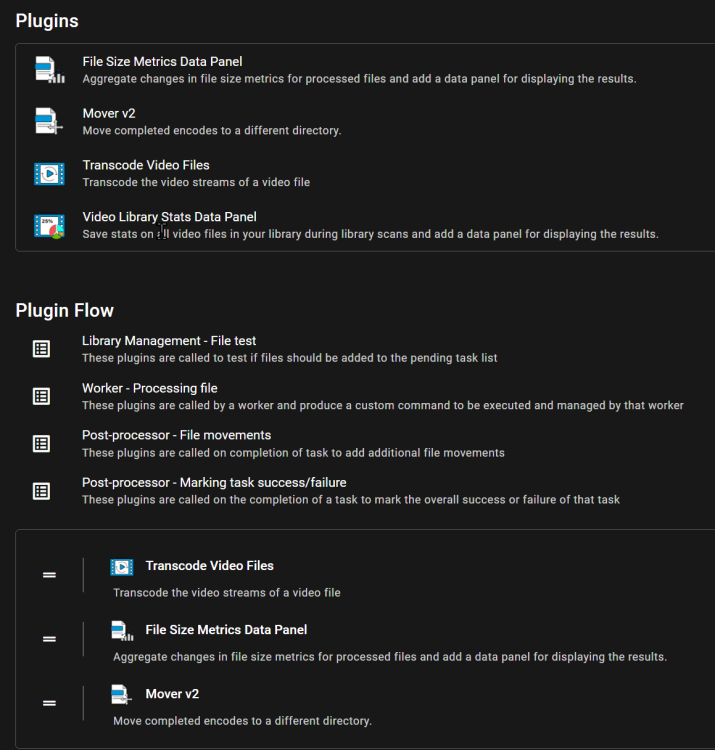

I just went back to Unmanic to try AV1 (I am a new Patreon supporter 🙂).

But I just can't get this working. I have had some help from the great Discord channel, but I think this is more of an Unraid problem!

I have built a new system using the new 14th-gen iGPU and removed the NVIDIA card.

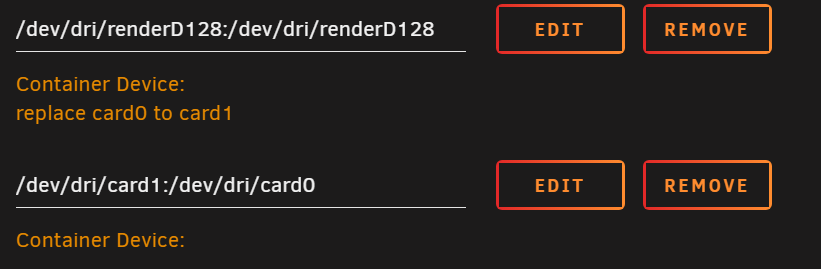

But when I list the devices, I see the following cards:

```
# ls /dev/dri
by-path/ card0 card1 renderD128
```

IS THERE ANY WAY TO DELETE CARD1 so I only have CARD0?

I can see that the iGPU is listed as card1, while Unmanic uses card0.
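To check which PCI device each node belongs to, the symlinks in `/dev/dri/by-path` can be resolved. A minimal Python sketch, assuming the usual udev link names `pci-<address>-card` and `pci-<address>-render` (the function name `dri_nodes_by_pci` is hypothetical):

```python
import os
from collections import defaultdict


def dri_nodes_by_pci(by_path_dir="/dev/dri/by-path"):
    """Group DRI nodes (card*, renderD*) by the PCI address their by-path links name."""
    nodes = defaultdict(list)
    for entry in sorted(os.listdir(by_path_dir)):
        # PCI entries look like "pci-0000:00:02.0-card" or "pci-0000:00:02.0-render";
        # anything else (e.g. platform framebuffers) is skipped.
        if not entry.startswith("pci-"):
            continue
        pci_addr = entry[len("pci-"):].rsplit("-", 1)[0]
        target = os.readlink(os.path.join(by_path_dir, entry))
        nodes[pci_addr].append(os.path.basename(target))
    return dict(nodes)
```

On the server, `dri_nodes_by_pci()` returns a mapping from PCI address to node names such as `["card1", "renderD128"]`. Intel iGPUs normally sit at `0000:00:02.0`, so that entry shows which nodes belong to the iGPU.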

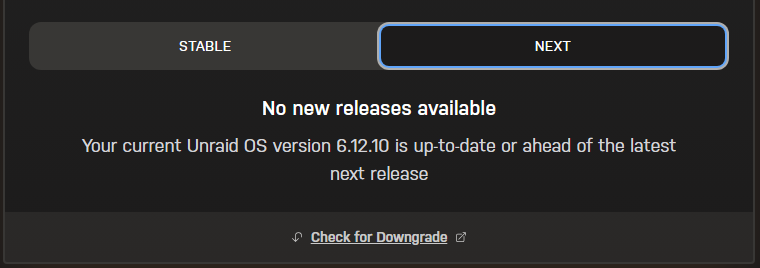

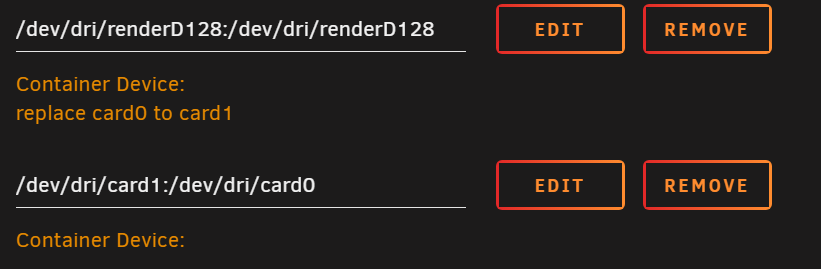

I have tried some suggested workarounds:

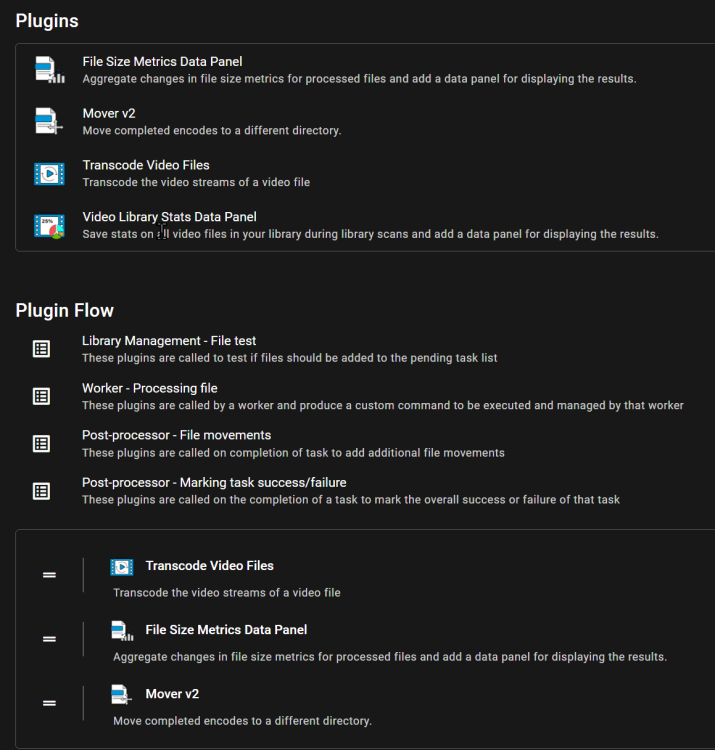

But no matter what I do, it does not encode anything, even with a very simple flow:

Generic auto on encoding settings:

It just runs through them and marks them as failed:

```
RUNNER: Transcode Video Files [Pass #1]
Executing plugin runner... Please wait
Plugin runner requested for a command to be executed by Unmanic
COMMAND:
ffmpeg -hide_banner -loglevel info -hwaccel qsv -hwaccel_output_format qsv -init_hw_device qsv=hw -filter_hw_device hw -i /library/movies/#unmanic_test/Season 05/Star Trek - Discovery (2017) - S05E03 - Jinaal [WEB-DL.1080p][8bit][x264][AC3 5.1]-EGEN.mkv -strict -2 -max_muxing_queue_size 4096 -map 0:v:0 -map 0:a:0 -map 0:s:0 -map 0:s:1 -map 0:s:2 -map 0:s:3 -c:v:0 av1_qsv -preset slow -global_quality 23 -look_ahead 1 -c:a:0 copy -c:s:0 copy -c:s:1 copy -c:s:2 copy -c:s:3 copy -y /tmp/unmanic/unmanic_file_conversion-lketm-1712931432/Star Trek - Discovery (2017) - S05E03 - Jinaal [WEB-DL.1080p][8bit][x264][AC3 5.1]-EGEN-lketm-1712931432-WORKING-1-1.mkv
LOG:
libva info: VA-API version 1.20.0
libva info: Trying to open /usr/lib/jellyfin-ffmpeg/lib/dri/iHD_drv_video.so
libva info: Found init function __vaDriverInit_1_20
libva info: va_openDriver() returns 0
libva info: VA-API version 1.20.0
libva info: Trying to open /usr/lib/jellyfin-ffmpeg/lib/dri/iHD_drv_video.so
libva info: Found init function __vaDriverInit_1_20
libva info: va_openDriver() returns 0
libva info: VA-API version 1.20.0
libva info: Trying to open /usr/lib/jellyfin-ffmpeg/lib/dri/iHD_drv_video.so
libva info: Found init function __vaDriverInit_1_20
libva info: va_openDriver() returns 0
libva info: VA-API version 1.20.0
libva info: Trying to open /usr/lib/jellyfin-ffmpeg/lib/dri/iHD_drv_video.so
libva info: Found init function __vaDriverInit_1_20
libva info: va_openDriver() returns 0
Input #0, matroska,webm, from '/library/movies/#unmanic_test/Season 05/Star Trek - Discovery (2017) - S05E03 - Jinaal [WEB-DL.1080p][8bit][x264][AC3 5.1]-EGEN.mkv':
  Metadata:
    encoder : libebml v1.4.4 + libmatroska v1.7.1
    creation_time : 2024-04-12T06:04:22.000000Z
  Duration: 00:53:22.16, start: 0.000000, bitrate: 8330 kb/s
  Stream #0:0: Video: h264 (High), yuv420p(tv, bt709, progressive), 1920x1080 [SAR 1:1 DAR 16:9], 25 fps, 25 tbr, 1k tbn (default)
    Metadata:
      BPS : 7879644
      DURATION : 00:53:22.160000000
      NUMBER_OF_FRAMES: 80054
      NUMBER_OF_BYTES : 3153985407
      _STATISTICS_WRITING_APP: mkvmerge v77.0 ('Elemental') 64-bit
      _STATISTICS_WRITING_DATE_UTC: 2024-04-12 06:04:22
      _STATISTICS_TAGS: BPS DURATION NUMBER_OF_FRAMES NUMBER_OF_BYTES
  Stream #0:1(eng): Audio: ac3, 48000 Hz, 5.1(side), fltp, 448 kb/s (default)
    Metadata:
      BPS : 448000
      DURATION : 00:53:22.144000000
      NUMBER_OF_FRAMES: 100067
      NUMBER_OF_BYTES : 179320064
      _STATISTICS_WRITING_APP: mkvmerge v77.0 ('Elemental') 64-bit
      _STATISTICS_WRITING_DATE_UTC: 2024-04-12 06:04:22
      _STATISTICS_TAGS: BPS DURATION NUMBER_OF_FRAMES NUMBER_OF_BYTES
  Stream #0:2(dan): Subtitle: subrip
    Metadata:
      BPS : 71
      DURATION : 00:51:03.855000000
      NUMBER_OF_FRAMES: 607
      NUMBER_OF_BYTES : 27347
      _STATISTICS_WRITING_APP: mkvmerge v77.0 ('Elemental') 64-bit
      _STATISTICS_WRITING_DATE_UTC: 2024-04-12 06:04:22
      _STATISTICS_TAGS: BPS DURATION NUMBER_OF_FRAMES NUMBER_OF_BYTES
  Stream #0:3(fin): Subtitle: subrip
    Metadata:
      BPS : 76
      DURATION : 00:51:03.855000000
      NUMBER_OF_FRAMES: 863
      NUMBER_OF_BYTES : 29194
      _STATISTICS_WRITING_APP: mkvmerge v77.0 ('Elemental') 64-bit
      _STATISTICS_WRITING_DATE_UTC: 2024-04-12 06:04:22
      _STATISTICS_TAGS: BPS DURATION NUMBER_OF_FRAMES NUMBER_OF_BYTES
  Stream #0:4(nor): Subtitle: subrip
    Metadata:
      BPS : 73
      DURATION : 00:51:03.855000000
      NUMBER_OF_FRAMES: 685
      NUMBER_OF_BYTES : 28096
      _STATISTICS_WRITING_APP: mkvmerge v77.0 ('Elemental') 64-bit
      _STATISTICS_WRITING_DATE_UTC: 2024-04-12 06:04:22
      _STATISTICS_TAGS: BPS DURATION NUMBER_OF_FRAMES NUMBER_OF_BYTES
  Stream #0:5(swe): Subtitle: subrip
    Metadata:
      BPS : 76
      DURATION : 00:51:03.855000000
      NUMBER_OF_FRAMES: 749
      NUMBER_OF_BYTES : 29446
      _STATISTICS_WRITING_APP: mkvmerge v77.0 ('Elemental') 64-bit
      _STATISTICS_WRITING_DATE_UTC: 2024-04-12 06:04:22
      _STATISTICS_TAGS: BPS DURATION NUMBER_OF_FRAMES NUMBER_OF_BYTES
Stream mapping:
  Stream #0:0 -> #0:0 (h264 (h264_qsv) -> av1 (av1_qsv))
  Stream #0:1 -> #0:1 (copy)
  Stream #0:2 -> #0:2 (copy)
  Stream #0:3 -> #0:3 (copy)
  Stream #0:4 -> #0:4 (copy)
  Stream #0:5 -> #0:5 (copy)
Press [q] to stop, [?] for help
[av1_qsv @ 0x555a64876e00] Selected ratecontrol mode is unsupported
[av1_qsv @ 0x555a64876e00] Current frame rate is unsupported
[av1_qsv @ 0x555a64876e00] Current picture structure is unsupported
[av1_qsv @ 0x555a64876e00] Current resolution is unsupported
[av1_qsv @ 0x555a64876e00] Current pixel format is unsupported
[av1_qsv @ 0x555a64876e00] some encoding parameters are not supported by the QSV runtime. Please double check the input parameters.
[vost#0:0/av1_qsv @ 0x555a64877540] Error initializing output stream: Error while opening encoder for output stream #0:0 - maybe incorrect parameters such as bit_rate, rate, width or height
Conversion failed!
```
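Because Unmanic prints the whole ffmpeg output as one block, it can help to pull out only the encoder's complaints. A small Python sketch; the helper name is hypothetical and the regex assumes ffmpeg's `[encoder @ 0x…] … is unsupported` message format seen in this log:

```python
import re

# Matches e.g. "[av1_qsv @ 0x555a64876e00] Current resolution is unsupported".
# [^\[\]] keeps a match from running past the next "[encoder @ ...]" prefix.
UNSUPPORTED = re.compile(r"\[(\w+) @ 0x[0-9a-f]+\] ([^\[\]]+?) (?:is|are) unsupported")


def unsupported_params(log_text):
    """Return (encoder, parameter) pairs that ffmpeg reported as unsupported."""
    return UNSUPPORTED.findall(log_text)
```

Run over the log above, it returns five `av1_qsv` entries (ratecontrol mode, frame rate, picture structure, resolution and pixel format). Every parameter being rejected at once suggests the encoder could not be initialized at all rather than one bad setting. That is a hedged reading, though it fits the earlier post noting that 14th-gen iGPUs decode AV1 but do not encode it.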

Also, the iGPU works fine in Plex/Emby/Jellyfin, just not in Unmanic.

-

17 hours ago, dlandon said:

Yes, when the remote share has issues, I've tried to set up UD to cleanly unmount and remount the remote share so it will not hang.

That's generally from a name resolution issue. Is your remote server on a static IP address? You might try using the IP address of the remote server but it needs to be static.

I have put a lot of time into sorting out name resolution issues and changing IP addresses when the server is not on a static IP address. It is pretty hard to test, and it may be missing a server IP address change.

Great, all my servers have a static IP, so I will just change that. THANKS!

-

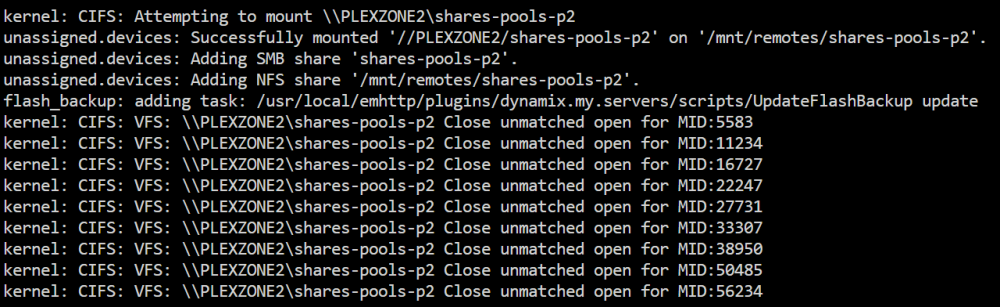

On 3/27/2024 at 11:57 AM, casperse said:

Okay, I went back to the PC mappings and found that they used the Windows user account matching an old Unraid one (old setup, local VM PC). After using this account instead of the administrator account, it connected.

I also made sure that the two local root shares were renamed, since server p2 is a clone of the old Unraid server and had the same old accounts.

I did find that the root share pool-shares was NOT listed on any server; I just had to type it manually in the UAD UI.

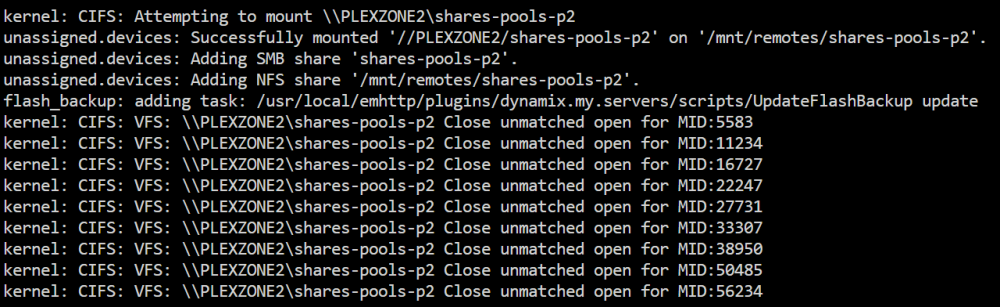

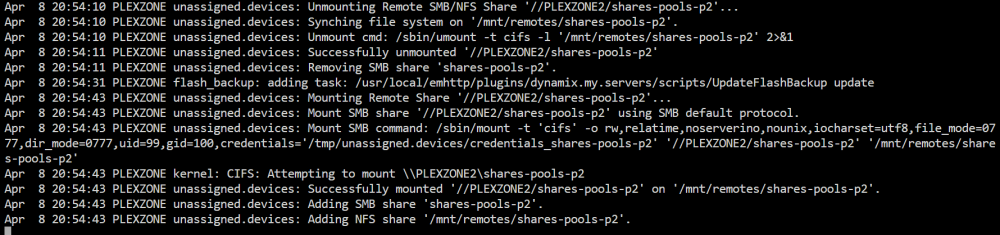

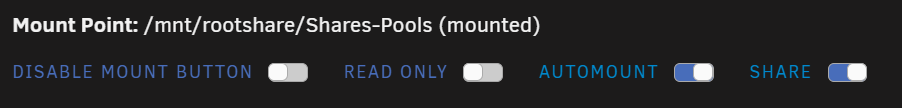

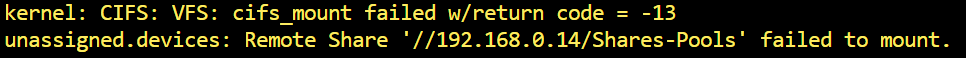

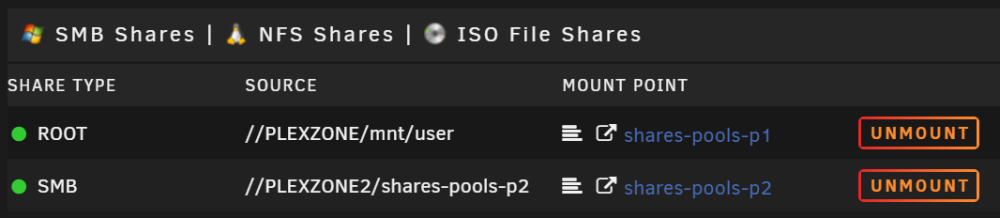

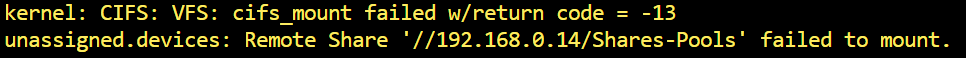

Looking at the logs I get this now:

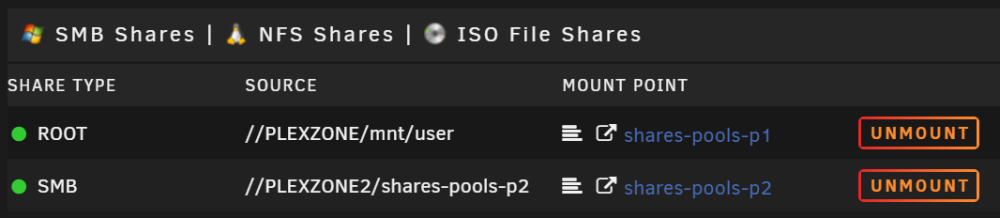

But it's working:

I haven't found the difference between the two accounts; both have access to the same directories.

The only difference is that the old one was created when I started using Unraid. I can't be sure I didn't do something to this account that long ago.

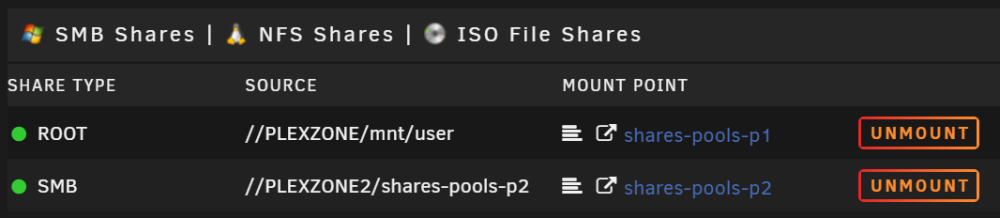

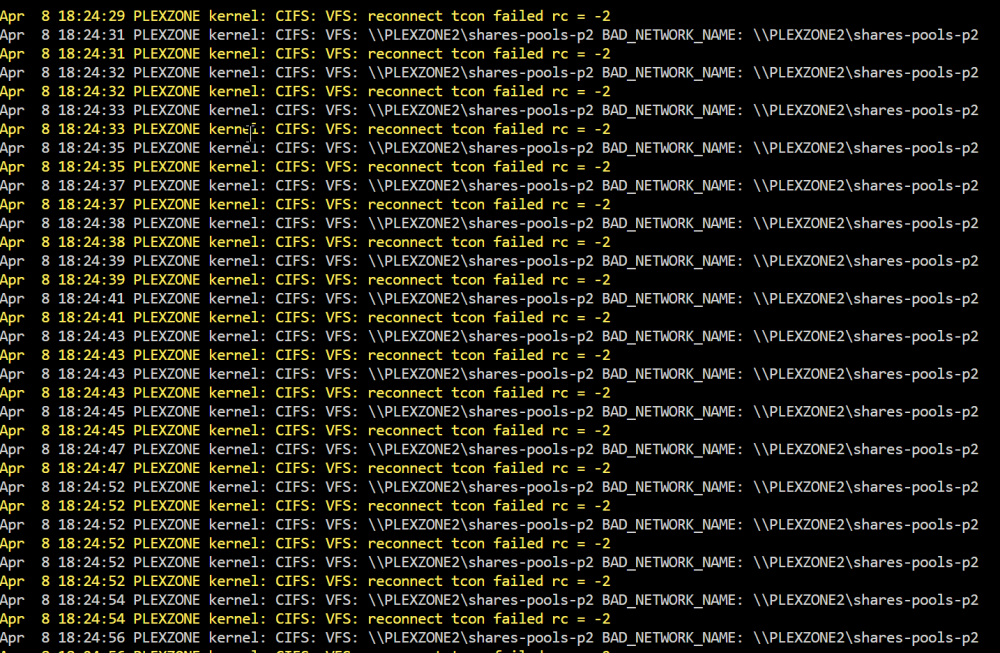

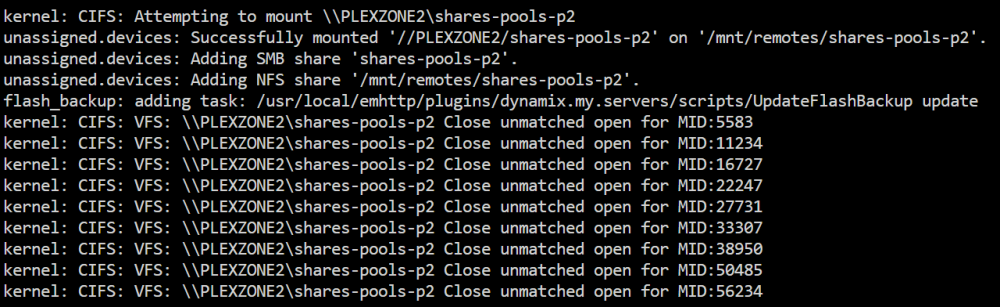

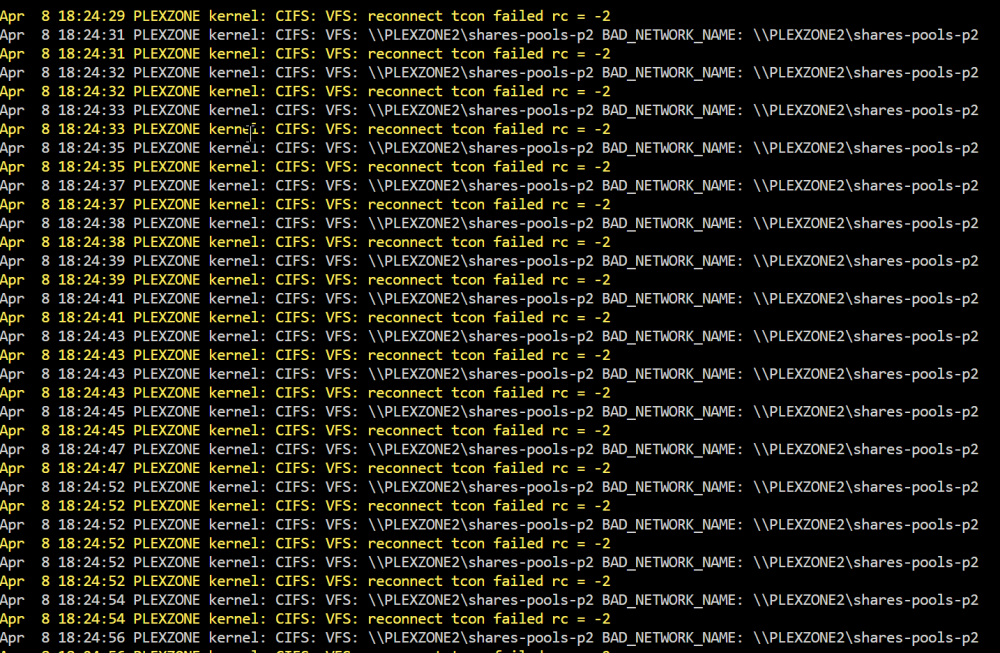

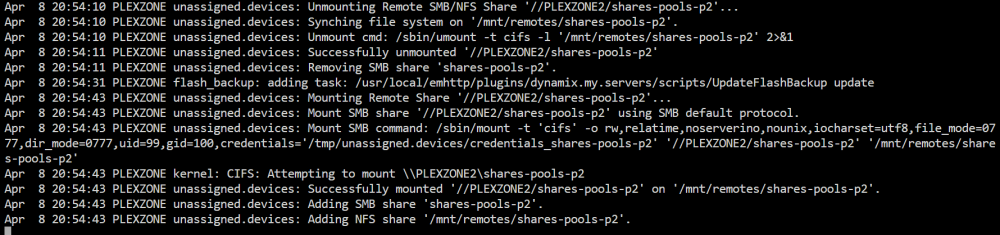

Thanks again for your help, this was a strange one 🙂

So everything is working, but after some time I get this error:

But the mount is working!

And I can easily unmount and mount the share again:

BAD_NETWORK_NAME?

Thanks, I really thought I had it now 🙂

-

This might be by design? 🙂

But I just noticed that any share I have created with "Exclusive access" on my cache drive is not accessible or visible when accessing the ROOT mount.

Changing it to preferred cache, I get it back.

-

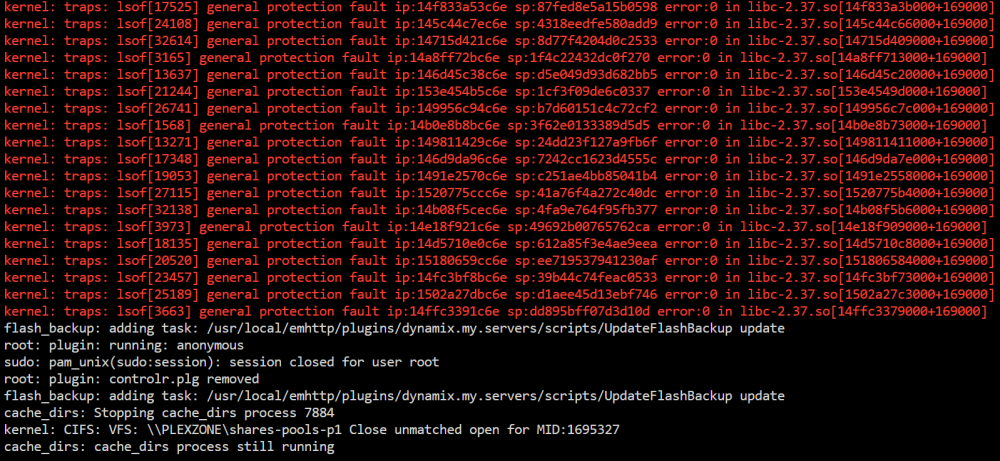

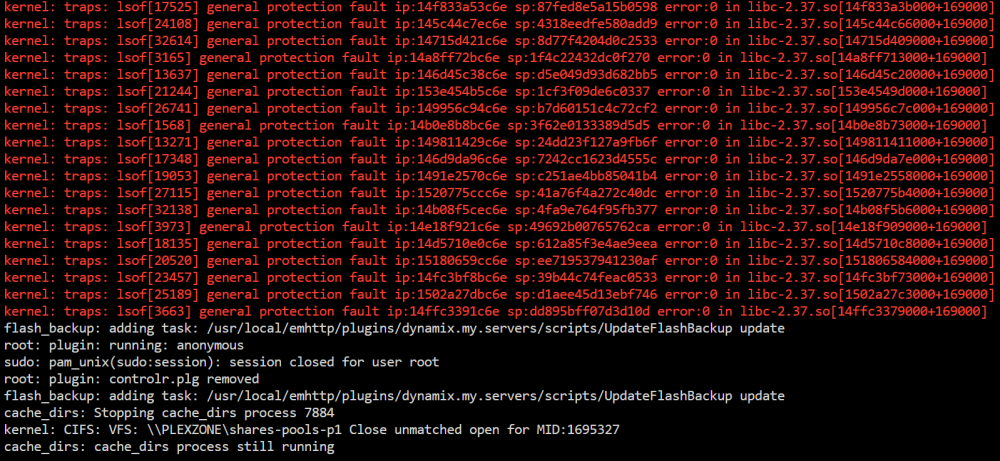

I disabled the cache dirs plugin but still keep getting errors.

I am copying between servers using the guide from SPX Labs.

It works great, but it seems I only get these errors when running a copy?

So far it's not crashing…

-

On 3/25/2024 at 1:51 PM, dlandon said:

You have the cache dirs plugin installed. Be sure you set it to include only the shares you want it to operate on. If you don't set up include shares, it will cache all shares including UD and cause issues like this.

Thanks, this post really helped me! Disabling it while transferring between servers stopped this.

I'm following the guide, using Luckybackup to move TBs of data.

-

16 hours ago, dlandon said:

This means the rootshare can be mounted and should be available to mount on an Unraid server.

Some more things to try:

- Try a simpler password on the administrator account. Letters and numbers only.

- You have multiple IP addresses listed in the testparm output. This is where Samba is listening:

interfaces = 192.168.0.6 192.XXX.XXX.6 127.0.0.1 100.XXX.XXX.115 fd7a:115c:a1e0::27a:ae73

Is the server visible on one of these interfaces? You have Tailscale installed. Confirm it is set up properly.

- Can you see the individual shares and can those be mounted?

- You have a huge number of plugins installed. One may be interfering. Boot your server in safe mode and try mounting the root share.
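To answer "is the server visible on one of these interfaces?", it helps to label each address in the `interfaces =` line. A Python sketch using the standard `ipaddress` module, assuming Tailscale's usual ranges (`100.64.0.0/10` and `fd7a:115c:a1e0::/48`); masked entries such as `192.XXX.XXX.6` come out as `unparsed`, and the function name is hypothetical:

```python
import ipaddress

# Address ranges Tailscale normally assigns from (IPv4 CGNAT space and its IPv6 ULA prefix).
TAILSCALE_NETS = [
    ipaddress.ip_network("100.64.0.0/10"),
    ipaddress.ip_network("fd7a:115c:a1e0::/48"),
]


def classify_interfaces(line):
    """Label each token of a testparm 'interfaces = ...' line."""
    _, _, value = line.partition("=")
    labels = {}
    for token in value.split():
        try:
            addr = ipaddress.ip_address(token)
        except ValueError:
            labels[token] = "unparsed"  # interface names or masked addresses
            continue
        if addr.is_loopback:
            labels[token] = "loopback"
        elif any(addr in net for net in TAILSCALE_NETS):  # v4/v6 mismatch is simply False
            labels[token] = "tailscale"
        elif addr.is_private:
            labels[token] = "lan"
        else:
            labels[token] = "other"
    return labels
```

For the `interfaces` line above, `192.168.0.6` is labelled `lan`, `127.0.0.1` `loopback`, the `fd7a:115c:a1e0::` address `tailscale`, and the two masked entries `unparsed`.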

Okay, I went back to the PC mappings and found that they used the Windows user account matching an old Unraid one (old setup, local VM PC). After using this account instead of the administrator account, it connected.

I also made sure that the two local root shares were renamed, since server p2 is a clone of the old Unraid server and had the same old accounts.

I did find that the root share pool-shares was NOT listed on any server; I just had to type it manually in the UAD UI.

Looking at the logs I get this now:

But it's working:

I haven't found the difference between the two accounts; both have access to the same directories.

The only difference is that the old one was created when I started using Unraid. I can't be sure I didn't do something to this account that long ago.

Thanks again for your help, this was a strange one 🙂

-

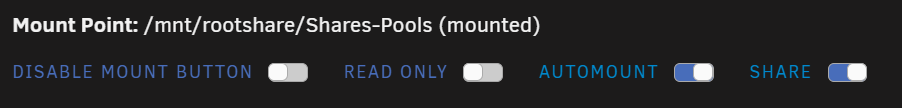

2 hours ago, dlandon said:

Did you turn on the share switch for the rootshare in device settings? It won't be shared unless you turn it on.

Not sure I follow you?

Yes, the share is enabled in the settings of the mount.

And root access works from a Windows PC. Is there some other place to enable it?

-

1 hour ago, dlandon said:

No, that is not your problem.

Possible reasons for this error could include:

- Incorrect credentials (username/password) provided for accessing the CIFS share.

- Insufficient permissions to access the CIFS share from the system where the error occurred.

- Network connectivity issues preventing access to the CIFS share.

- Misconfiguration of the mount command or options used for mounting the CIFS share.

I don't think 2 or 3 apply because UD shows the remote share as being online and available. 4 is not an issue.

That leaves 1 - credentials. Several things to look for:

- Use UD to search the server and select the correct server from the drop down. Don't enter the IP address.

- Let UD search for the remote server shares after entering the credentials in UD. If UD does not list the shares, the credentials are the issue.

- Watch out for special characters. They can cause PHP issues.

- Choose the share from the drop down. Don't enter it.

- You can't use the 'root' user credentials.

- You are probably overthinking this issue. Let UD work out the details for you. Let it find the server and the share for you and use what it finds.
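Two rules from this advice are concrete enough to check before typing credentials into UD: no `root` user, and (per the earlier suggestion) a password of letters and numbers only. A trivial Python sketch of just those two checks; the helper name is hypothetical and it says nothing about the other causes listed:

```python
def credential_problems(username, password):
    """Check a UD/CIFS login against two rules: no 'root' user, alphanumeric ASCII password."""
    problems = []
    if username.strip().lower() == "root":
        problems.append("the 'root' user cannot be used")
    # isascii() also rejects letters such as "æ" that isalnum() would accept.
    if password and not (password.isascii() and password.isalnum()):
        problems.append("password has characters other than letters and numbers")
    return problems
```

For example, `credential_problems("root", "p@ss")` returns both problems, while an `administrator` user with an alphanumeric password returns an empty list.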

You said you could map the rootshare in Unraid?

But the rootshare path is never listed in UAD, only all the normal shares?

I can confirm that UAD will list all the individual shares one by one, but what eludes me is the rootshare mapping between servers inside Unraid: it isn't listed as an option for a mapped share!

- UAD will never list the root access share, will it?

- I have created a separate user (administrator) with access to every shared folder, like you suggested, but I don't know how to give this new user access to the rootshare mapping. (Some command in a terminal?)

Again, thanks so much for helping out. Having two servers is a lot of work when you can't move things easily between them 🙂

-

1 hour ago, JorgeB said:

No.

No.

So the only difference, then, is that if I install a new docker in the future and keep (or forget to change) the /mnt/user/appdata path, the exclusive share option will make sure it's running without FUSE.

-

Hi All

I have read the nice write-up on the new "exclusive shares" feature here:

https://reddthat.com/post/224445

I have sometimes forgotten to change the path when installing a new docker, so I would actually like to set up an "Exclusive share" for my appdata cache. (I have plenty of spare cache space, and my cache pool is mirrored and set up to snapshot to the array with ZFS.)

-

What I am missing: do I have to change all my dockers from /mnt/[poolname]/appdata/ back to /mnt/user/appdata/...?

- And is there any other difference between enabling exclusive shares and having changed all the paths to /mnt/[poolname]/appdata/?

I hope someone can help answer my question; I didn't really get this from reading around the forum.
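To see what the setting changes on disk, a short Python check can report where `/mnt/user/appdata` really points. It assumes, as I understand the Unraid 6.12 implementation (worth verifying on your own server), that an exclusive share appears under `/mnt/user` as a symlink to the pool directory; the helper name is hypothetical:

```python
import os


def share_backing(share, user_root="/mnt/user"):
    """Return ("exclusive", real_path) if the user share is a symlink, else ("fuse", path).

    Assumption: exclusive shares are exposed as /mnt/user/<share> -> /mnt/<pool>/<share>.
    """
    path = os.path.join(user_root, share)
    if os.path.islink(path):
        return ("exclusive", os.path.realpath(path))
    return ("fuse", path)
```

If `share_backing("appdata")` returns `("exclusive", "/mnt/[poolname]/appdata")`, container paths under `/mnt/user/appdata/` and `/mnt/[poolname]/appdata/` lead to the same directory.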

-


On 3/24/2024 at 11:34 PM, dlandon said:

I might be doing it wrong, then?

Quote: The SMB mapping to a root share on Windows is working with:

Server A: \\192.168.0.6\Shares-Pools

Server B: \\192.168.0.14\Shares-Pools

But doing the same on Unraid, does not work.

Do I need the full path?

//192.168.0.14/mnt/rootshare/Shares-Pools

I get an error when trying to mount the share.

Diagnostic attached.

-

4 hours ago, dlandon said:

I think I understand what you are trying to accomplish here. It looks like a credentials issue. You can't use the "root" credentials. You should set up a user with privileges to read and write all of your shares on PLXEZONE2. I have one set up as what I call an "Administrator".

You will know this works when you go to set up the share on PLEXZONE and the shares are listed when you click the "Load Shares" button using your "Administrator" credentials. If the shares are not listed, your credentials are wrong.

Thanks! I did this and all my shares are listed.

But I can't map the SMB rootshare; I still get an error when trying the path:

Servername\Shares-Pools

And I don't want to map all 22 shares one by one.

Is it only from Windows SMB that you can map the rootshare, and not between Unraid servers?

Is there maybe some Linux magic to enable a mount between them?

I am also experimenting with two 10 Gbit LAN cards with a direct connection between eth2 and eth2.

But for some reason I am also running into errors when trying this.

-

Hi, I really need some input on how to accomplish this using UAD.

I have already enabled a rootshare on each of my Unraid servers. I really want a rootshare mapped between my two Unraid servers in UAD (so far I keep getting errors):

- I would really like this to use the direct 10Gb LAN connection between them (192.168.11.6 and 192.168.11.14), if possible.

- SMB or NFS?
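With a direct link, the kernel sends traffic out of the interface whose connected subnet contains the destination, so a mount only uses the 10Gb link if it targets a 192.168.11.x address. A simplified Python sketch of that choice (longest matching connected subnet; it ignores route metrics and policy routing), assuming /24 masks and `eth0` as a placeholder name for the LAN interface:

```python
import ipaddress


def egress_interface(destination, interfaces):
    """Pick the local interface whose directly connected subnet contains `destination`.

    `interfaces` maps names to CIDR addresses, e.g. {"eth2": "192.168.11.6/24"}.
    The longest prefix wins; None means traffic would follow the default gateway.
    """
    dest = ipaddress.ip_address(destination)
    best = None
    for name, cidr in interfaces.items():
        net = ipaddress.ip_interface(cidr).network
        if dest in net and (best is None or net.prefixlen > best[1]):
            best = (name, net.prefixlen)
    return best[0] if best else None
```

With `{"eth0": "192.168.0.6/24", "eth2": "192.168.11.6/24"}`, `192.168.11.14` maps to `eth2` and `192.168.0.14` to `eth0`.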

So far I haven't been able to accomplish this using UAD. Do I need some Linux terminal commands?

I am planning to use the "luckybackup" docker to move large amounts of data between them, but having a rootshare mount between them would be really helpful.

UPDATE:

Mapping the two rootshares on windows works!

But trying to mount an SMB rootshare between the Unraid servers does NOT work.

Same SMB path:

\\SERVERNAME\Shares-Pools or \\IP\Shares-Pools
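In an SMB path `//host/share/...`, the first component after the host is the share name Samba exports, and anything after it is treated as a folder inside that share. So `//192.168.0.14/mnt/rootshare/Shares-Pools` asks for a share called `mnt`, which probably does not exist. A Python sketch that only builds (does not run) a manual `mount -t cifs` command for the direct-link address; the function name and mount point are hypothetical, and UD may pass different options:

```python
def cifs_mount_argv(host, share, mountpoint, username, vers="3.0"):
    """Build, but do not run, a `mount -t cifs` command for //host/share.

    Only the top of the share is mounted; a path like "mnt/rootshare/..." is rejected
    because its first component would be taken as the share name.
    """
    share = share.strip("/")
    if "/" in share:
        raise ValueError(f"{share!r} looks like a path; pass the SMB share name only")
    # No password here: mount.cifs prompts for it, or use a credentials= file instead.
    options = f"username={username},vers={vers}"
    return ["mount", "-t", "cifs", f"//{host}/{share}", mountpoint, "-o", options]
```

`" ".join(cifs_mount_argv("192.168.11.14", "Shares-Pools", "/mnt/remotes/hypothetical", "administrator"))` gives `mount -t cifs //192.168.11.14/Shares-Pools /mnt/remotes/hypothetical -o username=administrator,vers=3.0`. This shows the form of a manual mount only; it does not explain why UD fails.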

-

So my troubleshooting has located my problem.

The BIOS is always set to use the internal iGPU (correct), and I see the whole boot sequence on my monitor, but when the GUI should start I get a prompt in the upper-left corner.

Removing my Nvidia card and placing my HBA controller in the first PCIe slot (removing all other cards) WORKED! After some time I finally got the GUI on my monitor. JUHU!

I then tried placing my Nvidia GPU (NVIDIA GeForce RTX 3060) in the third PCIe slot (x8), and after boot I am back to the prompt with no UI.

Something breaks during boot (the GPU has no output to my monitor).

Any suggestion on what I should do next?

UPDATE: I found a new BIOS from 2024 (my board is from 2019), updated the BIOS, and set everything up from scratch.

Same thing: a cursor in the top-left corner of the monitor and no UI.

-

SOLVED: I am an IDIOT....

Checking the network settings on the Ubuntu VM:

sudo nano /etc/netplan/00-installer-config.yaml

Somehow the gateway was wrong?
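A wrong gateway is easy to miss by eye. A default gateway normally has to sit inside the subnet of the interface's own address, which a Python sketch can check. Reading the YAML file needs a parser such as PyYAML, so this takes the already-loaded dictionary; the function name, interface name and addresses below are hypothetical:

```python
import ipaddress


def check_default_gateway(netplan):
    """Report, per ethernet in a parsed netplan dict, whether its default gateway
    lies inside one of its static address subnets.

    Handles the older `gateway4:` key and `routes: [{to: default, via: ...}]`;
    `on-link` routes and DHCP-only interfaces are not considered.
    Returns a list of (interface, gateway, reachable) tuples.
    """
    results = []
    ethernets = netplan.get("network", {}).get("ethernets", {})
    for name, cfg in ethernets.items():
        gateways = [cfg["gateway4"]] if "gateway4" in cfg else []
        gateways += [r["via"] for r in cfg.get("routes", []) if r.get("to") in ("default", "0.0.0.0/0")]
        networks = [ipaddress.ip_interface(a).network for a in cfg.get("addresses", [])]
        for gw in gateways:
            gw_ip = ipaddress.ip_address(gw)
            results.append((name, gw, any(gw_ip in net for net in networks)))
    return results
```

`check_default_gateway({"network": {"ethernets": {"enp1s0": {"addresses": ["192.168.0.50/24"], "gateway4": "192.168.1.1"}}}})` returns `[("enp1s0", "192.168.1.1", False)]`, flagging a gateway outside 192.168.0.0/24.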

I also installed the QEMU guest agent:

Step 1: Log in using SSH

You must be logged in via SSH as a sudo or root user. Please read this article for instructions if you don’t know how to connect.

Step 2: Install qemu guest agent

apt update && apt -y install qemu-guest-agent

Step 3: Enable and Start Qemu Agent

systemctl enable qemu-guest-agent

systemctl start qemu-guest-agent

Step 4: Verify

Verify that the QEMU guest agent is running.

-

Hi All

All my VMs work except the AMP VM for my gaming server.

I can see it doesn't get any IP. (This configuration is the same as my Windows VM's, and that one worked perfectly after moving.)

```xml
<?xml version='1.0' encoding='UTF-8'?>
<domain type='kvm'>
  <name>AMP_Game_server</name>
  <uuid>106257ad-bf64-1305-df79-880b565808af</uuid>
  <description>Ubuntu server 20.04LTS</description>
  <metadata>
    <vmtemplate xmlns="unraid" name="Ubuntu" icon="/mnt/user/domains/AMP/amp.png" os="ubuntu"/>
  </metadata>
  <memory unit='KiB'>16777216</memory>
  <currentMemory unit='KiB'>16777216</currentMemory>
  <memoryBacking>
    <nosharepages/>
  </memoryBacking>
  <vcpu placement='static'>10</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='12'/>
    <vcpupin vcpu='1' cpuset='13'/>
    <vcpupin vcpu='2' cpuset='14'/>
    <vcpupin vcpu='3' cpuset='15'/>
    <vcpupin vcpu='4' cpuset='16'/>
    <vcpupin vcpu='5' cpuset='17'/>
    <vcpupin vcpu='6' cpuset='18'/>
    <vcpupin vcpu='7' cpuset='19'/>
    <vcpupin vcpu='8' cpuset='20'/>
    <vcpupin vcpu='9' cpuset='21'/>
  </cputune>
  <os>
    <type arch='x86_64' machine='pc-q35-5.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
    <nvram>/etc/libvirt/qemu/nvram/106257ad-bf64-1305-df79-880b565808af_VARS-pure-efi.fd</nvram>
  </os>
  <features>
    <acpi/>
    <apic/>
  </features>
  <cpu mode='host-passthrough' check='none' migratable='on'>
    <topology sockets='1' dies='1' cores='5' threads='2'/>
    <cache mode='passthrough'/>
  </cpu>
  <clock offset='utc'>
    <timer name='rtc' tickpolicy='catchup'/>
    <timer name='pit' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/local/sbin/qemu</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source file='/mnt/user/domains/AMP/vdisk1.img'/>
      <target dev='hdc' bus='virtio'/>
      <boot order='1'/>
      <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
    </disk>
    <controller type='usb' index='0' model='ich9-ehci1'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci1'>
      <master startport='0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci2'>
      <master startport='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci3'>
      <master startport='4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
    </controller>
    <controller type='pci' index='0' model='pcie-root'/>
    <controller type='pci' index='1' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='1' port='0x10'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='2' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='2' port='0x11'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x1'/>
    </controller>
    <controller type='pci' index='3' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='3' port='0x12'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x2'/>
    </controller>
    <controller type='pci' index='4' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='4' port='0x13'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x3'/>
    </controller>
    <controller type='pci' index='5' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='5' port='0x14'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x4'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
    </controller>
    <controller type='sata' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/>
    </controller>
    <interface type='bridge'>
      <mac address='52:54:00:84:10:41'/>
      <source bridge='br0'/>
      <model type='virtio'/>
      <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
    </interface>
    <serial type='pty'>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
    </serial>
    <console type='pty'>
      <target type='serial' port='0'/>
    </console>
    <channel type='unix'>
      <target type='virtio' name='org.qemu.guest_agent.0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='tablet' bus='usb'>
      <address type='usb' bus='0' port='1'/>
    </input>
    <input type='mouse' bus='ps2'/>
    <input type='keyboard' bus='ps2'/>
    <graphics type='vnc' port='-1' autoport='yes' websocket='-1' listen='0.0.0.0' keymap='da'>
      <listen type='address' address='0.0.0.0'/>
    </graphics>
    <audio id='1' type='none'/>
    <video>
      <model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0'/>
    </video>
    <memballoon model='virtio'>
      <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </memballoon>
  </devices>
</domain>
```

I can connect to the web server UI, but it has no internet connection. So strange.
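Two parts of this VM definition matter for the symptom: the bridged `virtio` NIC on `br0` and the `org.qemu.guest_agent.0` channel, which only answers once the agent is installed inside the guest. A minimal Python sketch (hypothetical function names) that summarizes both from a domain XML string and, run on the Unraid host, pings the agent with `virsh qemu-agent-command`:

```python
import json
import subprocess
import xml.etree.ElementTree as ET


def _attr(parent, tag, name):
    """Return attribute `name` of child `tag`, or None if the child is missing."""
    child = parent.find(tag)
    return child.get(name) if child is not None else None


def vm_network_summary(domain_xml):
    """Summarize a libvirt domain's NICs and whether it defines a QEMU guest agent channel."""
    root = ET.fromstring(domain_xml)
    nics = [
        {
            "type": iface.get("type"),
            "bridge": _attr(iface, "source", "bridge"),
            "model": _attr(iface, "model", "type"),
            "mac": _attr(iface, "mac", "address"),
        }
        for iface in root.findall("./devices/interface")
    ]
    has_agent = any(
        target.get("name") == "org.qemu.guest_agent.0"
        for target in root.findall("./devices/channel/target")
    )
    return {"name": root.findtext("name"), "nics": nics, "guest_agent_channel": has_agent}


def agent_reply_ok(reply):
    """A successful guest-ping answer is a JSON object with a "return" key, e.g. {"return":{}}."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and "return" in data


def guest_agent_alive(domain):
    """Ping the guest agent through libvirt from the host; needs `virsh` on the PATH."""
    payload = json.dumps({"execute": "guest-ping"})
    proc = subprocess.run(
        ["virsh", "qemu-agent-command", domain, payload], capture_output=True, text=True
    )
    return proc.returncode == 0 and agent_reply_ok(proc.stdout)
```

For this VM's XML, `vm_network_summary(xml)["nics"]` is a single `bridge` NIC on `br0` with model `virtio`, and `guest_agent_channel` is True; `guest_agent_alive("AMP_Game_server")` should only return True once the agent runs inside Ubuntu.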

-

On 2/12/2024 at 5:53 PM, Veah said:

Did this work? I've had the same issue since about 6.12.1(ish)

This is actually strange: I cloned my Unraid flash drive for a second server (new license).

On the new server it now works (with the settings above): I get the GUI output on the iGPU HDMI port on the motherboard.

BUT!

The same cloned USB on my old server gives me a blinking "_" prompt in the top-left corner of the monitor.

On this motherboard the iGPU output is DisplayPort, and I get the full boot on the screen right up to the end?

Both servers have the iGPU as the primary and only output!

-

My problem is that it occurs every 10-12 hours, so with the number of dockers I have, this would be very hard to do.

Update: So this could be caused by a single docker that exceeds its memory limit?

Is there any way to identify the docker from the error message?

-

Can anyone please help me?

- I have installed the swapfile plugin.

- I have set a memory limit of 1G on all my dockers. (If all dockers obey the limit, I shouldn't see any more errors?)

- I have tried stopping all dockers, and only some dockers, but I still get the memory error.

Is there any way to find out what is causing this?

Would the syslog be able to tell?

This happened again today:

Systems are still running, but the error results in Unraid killing random processes.
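The kernel writes an `oom-kill:` line for each process it kills. Its `constraint` field separates a container hitting its own limit (`CONSTRAINT_MEMCG`) from the whole server running out (`CONSTRAINT_NONE`), and `task_memcg` names the cgroup, which for Docker usually contains the 64-character container ID. A Python sketch that tallies kills per container, assuming that line format (it differs between kernel versions) and Docker's usual cgroup naming; the function names are hypothetical:

```python
import re
from collections import Counter

OOM_KILL = re.compile(r"oom-kill:([^\n]+)")
CONTAINER_ID = re.compile(r"[0-9a-f]{64}")


def oom_kills(syslog_text):
    """Parse kernel 'oom-kill:' lines into dicts: constraint, task, pid, container id (or None)."""
    events = []
    for match in OOM_KILL.finditer(syslog_text):
        # Fields are comma-separated key=value pairs; bare flags like "global_oom" are skipped.
        fields = dict(item.split("=", 1) for item in match.group(1).strip().split(",") if "=" in item)
        container = CONTAINER_ID.search(fields.get("task_memcg", ""))
        events.append({
            "constraint": fields.get("constraint"),
            "task": fields.get("task"),
            "pid": fields.get("pid"),
            "container": container.group(0) if container else None,
        })
    return events


def kills_per_container(events):
    """Count kills per container ID; kills outside a Docker cgroup are counted under None."""
    return Counter(event["container"] for event in events)
```

Pass it the text of `/var/log/syslog` and match the IDs against `docker ps --no-trunc`.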

Connect access to preview releases? (in Connect Plugin Support)

Ok so my only option is to install this?

https://github.com/thor2002ro/unraid_kernel/releases