Posts posted by casperse

-

-

Hi All

Can anyone confirm that this card is supported by Unraid (latest version 6.8.3)?

Intel Ethernet Converged Network Adapter X710-T4 (RJ45 4x10GbE LAN)

I am looking to split it up: 2 x 10Gbit ports for a pfSense router and the other two for Unraid data transfer.

Hope someone has some experience with this card?

Or with another card with 4 x 10Gb ports (RJ45)?

Best regards to all the Unraid server builders!

UPDATE: I can't find the difference between these two:

Intel X520-T2 Dual Port - 10GbE RJ45 Full Height PCIe-X8 Ethernet

Intel X540-T2 Dual Port - 10GbE RJ45 Full Height PCIe-X8 Ethernet

Both are with RJ45!

-

On 10/17/2020 at 11:32 AM, JorgeB said:

The "Up to 6Gb/s" just means SAS2/SATA3 link for devices, that's never the bottleneck with disks, even 3Gb/s (SATA2) would be enough for most disks, see here for some benchmarks to give you a better idea of the possible performance increase going with a PCIe 3.0 HBA.

OK, thanks! So I am back to upgrading my cache instead. That would give some speed increase, since files could stay on the cache longer before being moved to the array. (But I have to wait for 4TB drives to become available at lower prices.)

And of course upgrade to a 10Gbit network (after talking with @debit lagos I think the money is better spent here, thanks!)

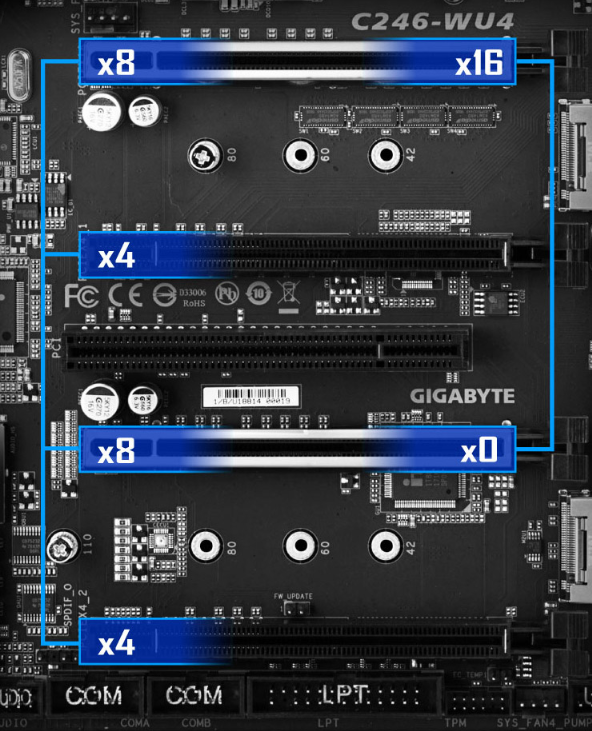

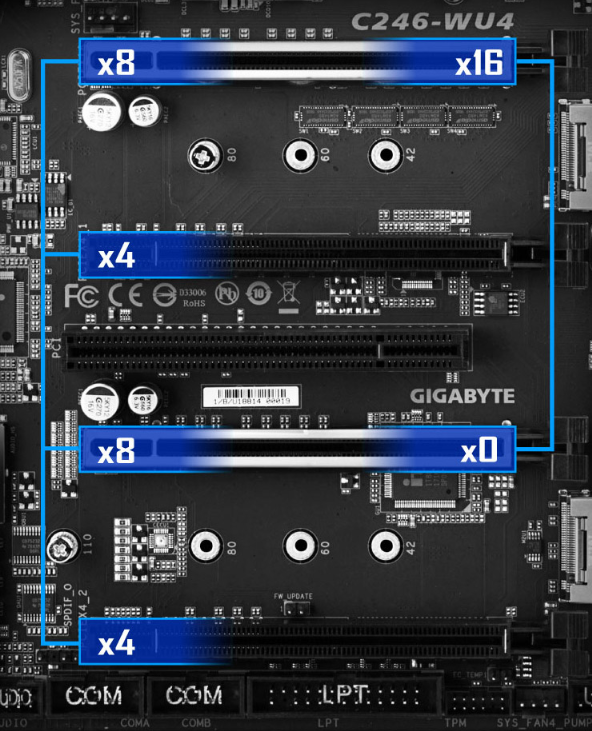

But since I am using the Xeon E-2176G CPU, I have limited PCIe lanes! 😩

So do you guys think I can run my Quadro P2000 in an x4 slot and swap my cards like this:

Slot 1 PCI x8 - LSI Logic SAS 9201-16i PCI-e 2.0 x8 SAS 6Gbs HBA Card

M2A x2: - N/A (PCIe Gen3 x2 /SATA Mode! - x2 = half speed? 1750MB/s) - Share BW Sata port 3_1 not available? (Not in use)

Slot 2 PCI x4 - Nvidia Quadro P2000 (VCQP2000-PB) (Manage with only x4 lanes?)

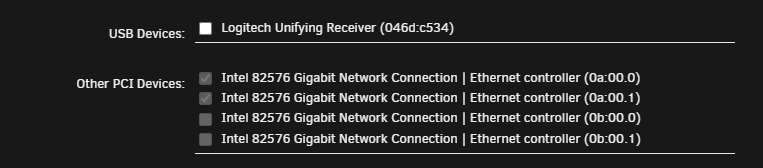

Slot 3 PCI x8 - Intel Ethernet Converged Network Adapter X710-T4 (RJ45 4x10GbE LAN) - Need two for pfSense router & two for local LAN

M2M x4: - Samsung MZ-V7S2T0BW 970 Evo Plus SSD [2 TB] (PCIe Gen3 x4) - SHARES BW with PCIEX_4x below (should be ok! not big impact)

Slot 4 PCIEX_x4 - Intel Pro 1000 VT Quad Port NIC (EXPI9404VTG1P20) - SHARES BW with M2M (4 x LAN for XPenology)

-

On 10/12/2020 at 9:33 PM, mgutt said:

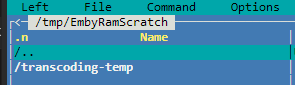

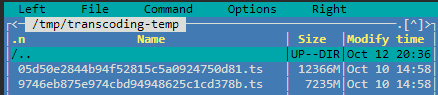

Yes, but why does Emby write those files only if you change the path of the docker.img? Where did Emby write those .ts files before you changed the path to "PlexRamScratch"?

Sorry for the delay in replying!

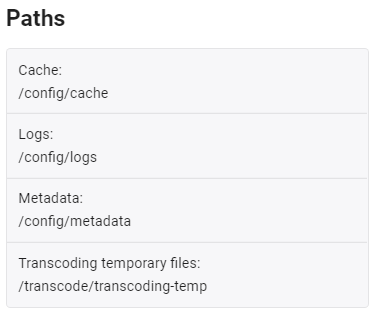

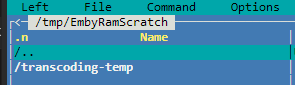

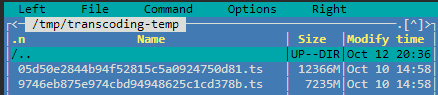

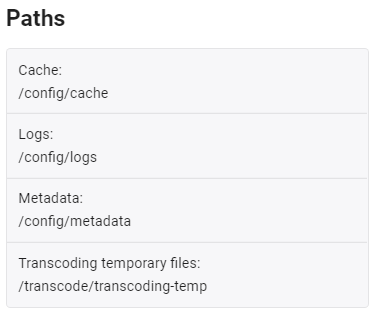

I found that no matter what path I enter in Emby, it creates a subdir called "transcoding-temp" in the tmp/RAM dir.

I deleted this and used your script to create an EmbyRamScratch, so Emby can create "transcoding-temp" under it.

So far no problems after this change! Works great!

Quote

Thanks, I also added this to my Docker settings running Krusader. Looks OK now!

Quote

Ok, now things become clearer. My original tweak is only to change the plex config path to /mnt/cache and not the general docker.img path. This means you quoted my tweak in your "warning", but you don't even use it:

Sorry, I have updated my "warning" in the tweaks topic that I created. The changes have made the biggest speed improvement to my setup so far!

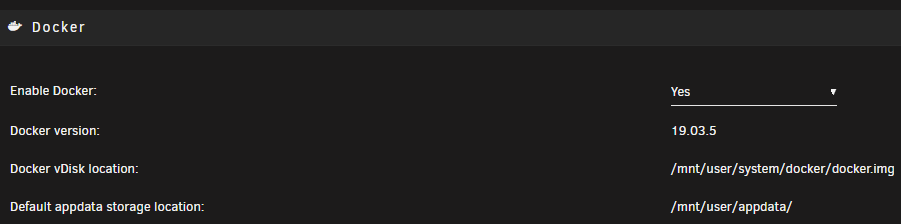

No, the docker.img has just always been on the cache (I just did not use the cache in the path before, only the "prefer" cache setting)

Quote

Changing the general docker.img path is a different tweak, but I didn't even write a guide for this. I use it, but wasn't able to test it with multiple dockers. So it's more a "beta" thing. Maybe Emby has a problem with that. If I find the time, I will test that. Can you send me a screenshot of your Emby settings so I can test this with the same settings?

Sure thing, this really utilizes the NVMe

(The only drawback is that I had to upgrade to a Samsung MZ-V7S2T0BW 970 Evo Plus [2 TB] $$$, and with my limited PCIe slots I can only have 1 NVMe)

Quote

I have no experience if Emby is able to clean up the dir as Plex does it. I would suggest to add an additional ramdisk for Emby alone. The steps are the same (could be added to your existing script):

Done! and thanks!

Quote

No idea. Maybe you find the answer here:

https://emby.media/community/index.php?/topic/54676-how-often-is-transcoding-temp-cleaned/

As stated before, Emby creates a "transcoding-temp" dir (must be hardcoded), but I have pointed it to the new "EmbyRamScratch/transcoding-temp/"

Quote

If it does, then you could do that. But do not forget. If your SSD becomes full, Plex is not able to write any more data (will probably crash). Because of that I wrote in my guide, that it is useful to set a "Min. free space" in the Global Share Settings > Cache Settings, so caching through usual shares will not fill up your NVMe. Of course it does not influence the Plex usage. If Plex alone fully utilizes the NVMe, then you should leave it to /mnt/user or buy a bigger NVMe.

No, only one NVMe, and so far this is okay. I use around 800-900G a day for cache, and the rest is for VM/Docker/Appdata/docker.img

Quote

Side note: I hope you set up a backup of your NVMe

Yes, I use the excellent community tools that create backups of the VMs/Appdata/docker.img

I have excluded the 800G Plex appdata folder. I have an old yearly backup, and the worst case is just that it needs to rescan the library

Again thanks for all your help! much appreciated! 🙏

BTW: I have created a local "temp" share that is set to prefer the cache. So if I want to keep something permanently on the cache drive I can put it there. It is also used for special files on Nextcloud that require fast access and transfer speeds 🙂 Would it also be good to change the path to /cache/ here?

-

23 hours ago, JorgeB said:

Should work fine, you need the appropriate cables, also you only need a 16port HBA for dual link (if supported by the backplanes), you can't use 24 ports with that chassis.

No gains in that part, unless used with an LSI expander that supports DataBolt; it might still be a little faster because the HBA is PCIe 3.0

This doesn't sound promising, but thanks for the info. My hope was that a newer PCIe 3.0 card would give me a speed increase (+20-30Mb), since the old card is rated "Up to 6Gb/s"

-

Hi All

I currently have the following setup:

- MB - Gigabyte C246-WU4 (PCI gen3) -> 10 x Sata 3 ports drives

- LSI Logic SAS 9201-16i PCI-e 2.0 x8 SAS 6Gbs HBA Card --> 16 drives

- Total of 26 drives + Nvme

- Case: Servercase UK SC-4324S - Norco RPC-4224 4U (Backplane with Mini-SAS 36 Pin SFF-8087 to Mini-SAS 36 Pin SFF-8087)

So, like most people, I have limited PCIe slots available, and I have a lot of older enterprise drives that I might (in the future) want to put in this bad boy of a case:

Chenbro-NR40700-4U-Storinator-48-bay - Maybe Unraid will support dual arrays in the future :-)

I have googled, and it looks like the SAS 9305-24i Host Bus Adapter (x8, PCIe 3.0, 8000 MB/s) is usable with the backplane of the above case.

Questions

- Can anyone confirm this controller will work with this case? (Chenbro-NR40700-4U-Storinator-48-bay + expansion module backplane)

- Would I see any speed increase when using this new controller in my existing system (parity time)? All the drives are spinners, but they are all 7200rpm and some are newer, faster drives. The old LSI Logic SAS 9201-16i says "Up to 6Gb/s" and the new one is 12Gb/s, but the case says "24x hot-swappable SATA/SAS 6G drive bays", so I am not really sure this will provide any gain.

Then again, it might be better to spend the money on newer and bigger drives replacing older and smaller ones, instead of adding a bunch of smaller drives (cost: case + controller card), which would also increase power consumption

As always your input is much appreciated

-

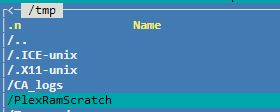

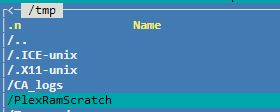

Can I just delete this? No idea where it's from?

Deleting these old transcode folders (shouldn't they be deleted automatically?)

Update: looks like this is from Emby:

After deleting this folder, and pointing the Emby transcode path to the same "PlexRamScratch"

I now get:

du -sh /tmp

183M /tmp

And my memory is now down to normal. JAHUU!

I don't know enough about this, but isn't memory temporary, so turning your PC off should flush /tmp, right?

-

du -h --max-depth=3 /tmp

0 /tmp/mc-root
0 /tmp/PlexRamScratch/Transcode/Detection
0 /tmp/PlexRamScratch/Transcode/Sessions
0 /tmp/PlexRamScratch/Transcode
0 /tmp/PlexRamScratch
0 /tmp/CA_logs
0 /tmp/Transcode/Detection
0 /tmp/Transcode/Sessions
0 /tmp/Transcode
20G /tmp/transcoding-temp <-------------------------------- Don't know why this is here?
8.0K /tmp/user.scripts/tmpScripts/PlexRamScratch
8.0K /tmp/user.scripts/tmpScripts
0 /tmp/user.scripts/running
4.0K /tmp/user.scripts/finished
20K /tmp/user.scripts
7.0M /tmp/fix.common.problems
0 /tmp/ca_notices
4.0K /tmp/emhttp
0 /tmp/unassigned.devices/scripts
16K /tmp/unassigned.devices/config
28K /tmp/unassigned.devices
16K /tmp/recycle.bin
0 /tmp/community.applications/tempFiles/templates-community-apps
7.9M /tmp/community.applications/tempFiles
7.9M /tmp/community.applications
0 /tmp/ca.backup2/tempFiles
0 /tmp/ca.backup2
4.0K /tmp/nvidia
0 /tmp/.X11-unix
0 /tmp/.ICE-unix
536K /tmp/plugins
20G /tmp

The "/tmp/transcoding-temp" might be Emby? Can I remove this folder from RAM? (Emby is just for testing and no one is using this server)
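A quick way to spot which folder under /tmp is eating the RAM is to sort the du output by size. This is only a minimal sketch, assuming GNU du and sort are available (sort -h understands sizes like 20G or 7.9M); the function name largest_entries is made up for illustration:

```shell
#!/bin/bash
# List the N largest first-level entries under a directory, biggest first.
# Hypothetical helper name; relies on GNU du and GNU sort.
largest_entries() {
    local dir="${1:-/tmp}" count="${2:-5}"
    # --max-depth=1 prints one line per direct subdirectory plus a total for $dir itself
    du -h --max-depth=1 "$dir" 2>/dev/null | sort -rh | head -n "$count"
}

# Example: largest_entries /tmp 5
```

The total for the directory itself is part of the listing, so a folder like the 20G one above would show up right next to it.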

I use the Plex container from Plexinc so far this has worked best for me (Have tried them all)

Don't know. I did the Krusader install following a spaceinvader video a long time ago.

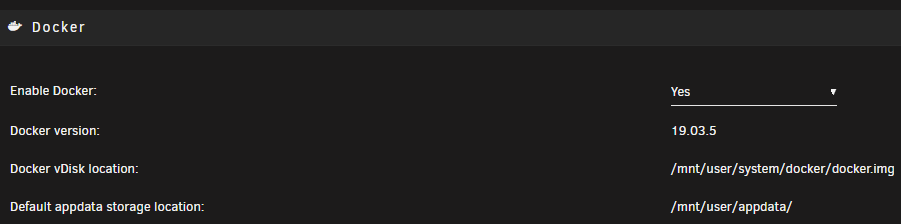

Docker:

Plex: I didn't think I needed to change this to cache, but I guess that would be best since I have all 800G in my cache NVMe

So I will change this now:

(I just changed this for Emby also, using the same transcoding dir in RAM)

-

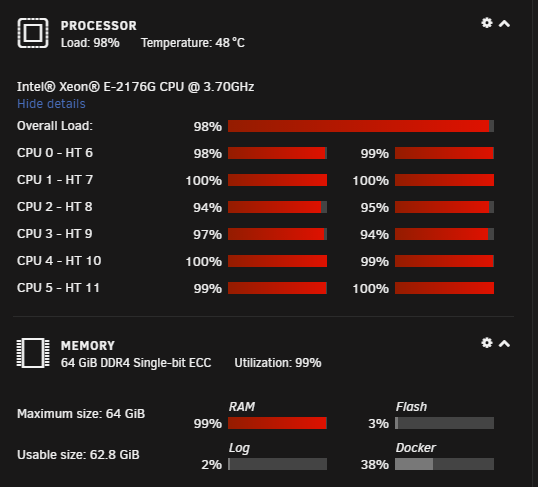

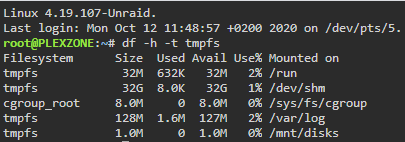

I went back and enabled the cache path for the docker.img :-)

Only activity I can see is Plex scanning libraries - no transcoding

So the CPU load could be from indexing and generating preview thumbnails?

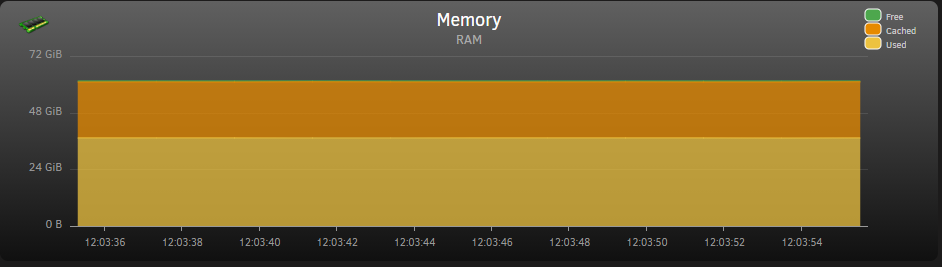

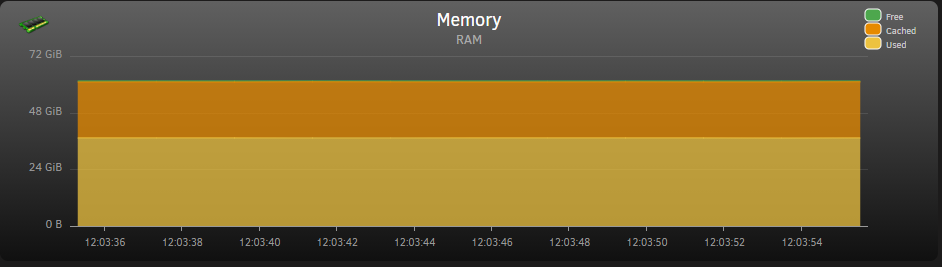

But memory is back to 99%-100%

du -sh /tmp

20G /tmp

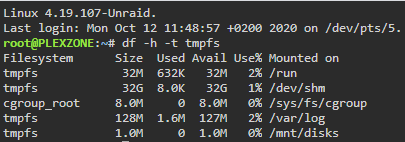

df -h -t tmpfs

Filesystem Size Used Avail Use% Mounted on

tmpfs 32M 632K 32M 2% /run

tmpfs 32G 8.0K 32G 1% /dev/shm

cgroup_root 8.0M 0 8.0M 0% /sys/fs/cgroup

tmpfs 128M 1.9M 127M 2% /var/log

tmpfs 1.0M 0 1.0M 0% /mnt/disks

tmpfs 8.0G 0 8.0G 0% /tmp/PlexRamScratch

(Yes, I did set the PlexRamScratch to 8G just to be sure... Like you, I have a P2000 doing the transcodes and I don't want the RAM to be a bottleneck)

ps aux | awk '{print $6/1024 " MB\t\t" $11}' | sort -n

0.00390625 MB sh
0.0546875 MB /usr/sbin/crond
0.0546875 MB /usr/sbin/crond
0.0625 MB /bin/sh
0.0625 MB /bin/sh
0.0625 MB curl
0.0664062 MB /bin/sh
0.0664062 MB /bin/sh
0.0703125 MB /bin/sh
0.0703125 MB /bin/sh
0.0703125 MB /bin/sh
0.0703125 MB /bin/sh
0.0703125 MB /bin/sh
0.0703125 MB xargs
0.0742188 MB /usr/bin/tini
0.0820312 MB /bin/sh
0.0820312 MB /usr/bin/tini
0.0820312 MB /usr/bin/tini
0.0820312 MB sleep
0.0859375 MB /bin/sh
0.0859375 MB /bin/sh
0.0859375 MB grep
0.09375 MB /bin/sh
0.09375 MB /bin/sh
0.09375 MB /bin/sh
0.09375 MB /bin/sh
0.09375 MB /bin/sh
0.09375 MB /bin/sh
0.09375 MB /usr/sbin/acpid
0.0976562 MB /bin/sh
0.101562 MB /bin/sh
0.101562 MB /usr/sbin/wsdd
0.105469 MB /bin/sh
0.109375 MB /bin/sh
0.109375 MB /usr/sbin/avahi-dnsconfd
0.117188 MB /bin/sh
0.152344 MB php7
0.15625 MB /bin/sh
0.167969 MB dbus-run-session
0.167969 MB logger
0.171875 MB pgrep
0.191406 MB curl
0.195312 MB bash
0.253906 MB bash
0.257812 MB grep
0.257812 MB grep
0.261719 MB avahi-daemon:
0.277344 MB /usr/sbin/crond
0.320312 MB /usr/bin/dbus-daemon
0.320312 MB xcompmgr
0.339844 MB dbus-daemon
0.339844 MB drill
0.417969 MB curl
0.417969 MB curl
0.417969 MB curl
0.425781 MB curl
0.457031 MB curl
0.457031 MB curl
0.46875 MB /bin/bash
0.46875 MB /bin/bash
0.492188 MB /bin/bash
0.492188 MB /bin/bash
0.496094 MB curl
0.53125 MB /usr/bin/privoxy
0.570312 MB /bin/bash
0.585938 MB /bin/bash
0.59375 MB /bin/bash
0.644531 MB /bin/bash
0.730469 MB /bin/timeout
0.734375 MB /bin/timeout
0.734375 MB /bin/timeout
0.734375 MB /bin/timeout
0.738281 MB /bin/timeout
0.738281 MB sleep
0.742188 MB sleep
0.753906 MB /usr/local/sbin/shfs
0.757812 MB Plex
0.792969 MB /bin/timeout
0.792969 MB /bin/timeout
0.792969 MB /bin/timeout
0.796875 MB /bin/timeout
0.800781 MB /bin/timeout
0.800781 MB /bin/timeout
0.824219 MB nginx:
0.851562 MB curl
0.851562 MB curl
0.851562 MB curl
0.855469 MB curl
0.910156 MB curl
0.945312 MB curl
0.980469 MB nginx:
0.980469 MB nginx:
0.980469 MB nginx:
1 MB find
1.01562 MB nginx:
1.17969 MB /usr/bin/privoxy
1.1875 MB nginx:
1.32031 MB nginx:
1.32812 MB /usr/bin/openvpn
1.33594 MB /usr/bin/openvpn
1.39453 MB nginx:
1.40234 MB nginx:
1.40625 MB /usr/bin/docker-proxy
1.40625 MB /usr/bin/docker-proxy
1.40625 MB /usr/bin/docker-proxy
1.40625 MB /usr/bin/docker-proxy
1.41016 MB /usr/bin/docker-proxy
1.41016 MB /usr/bin/docker-proxy
1.41016 MB /usr/bin/docker-proxy
1.41016 MB /usr/bin/docker-proxy
1.41016 MB /usr/bin/docker-proxy
1.41016 MB /usr/bin/docker-proxy
1.46875 MB /usr/sbin/atd
1.50781 MB nginx:
1.55078 MB /usr/sbin/inetd
1.64453 MB init
1.67578 MB /sbin/agetty
1.69531 MB /sbin/agetty
1.71875 MB /usr/lib/plexmediaserver/Plex
1.74219 MB /sbin/agetty
1.76562 MB sort
1.77734 MB /sbin/agetty
1.78125 MB /sbin/agetty
1.78516 MB /sbin/agetty
1.79297 MB /usr/sbin/crond
2.01562 MB /sbin/rpcbind
2.01562 MB kdeinit5:
2.07812 MB /usr/bin/dbus-daemon
2.17578 MB /usr/sbin/rpc.mountd
2.28125 MB find
2.29297 MB /usr/sbin/rsyslogd
2.30078 MB /usr/bin/docker-proxy
2.32031 MB /bin/bash
2.32422 MB ps
2.36328 MB /usr/bin/docker-proxy
2.36328 MB /usr/bin/docker-proxy
2.36719 MB find
2.37109 MB /usr/bin/docker-proxy
2.37109 MB /usr/bin/docker-proxy
2.39062 MB find
2.39453 MB /bin/bash
2.40625 MB find
2.42578 MB /usr/bin/docker-proxy
2.51562 MB find
2.54688 MB find
2.57422 MB find
2.57812 MB /bin/bash
2.57812 MB /bin/bash
2.59766 MB /usr/sbin/sshd
2.62891 MB find
2.63672 MB /bin/bash
2.63672 MB find
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.64062 MB /bin/bash
2.79297 MB /bin/bash
2.84375 MB /usr/lib/kf5/klauncher
2.89062 MB awk
2.92969 MB find
3.02344 MB /usr/bin/tint2
3.08594 MB php-fpm:
3.24219 MB /bin/bash
3.57812 MB avahi-daemon:
3.71875 MB /usr/bin/docker-proxy
3.72266 MB nginx:
3.74219 MB /usr/bin/docker-proxy
3.76562 MB /usr/bin/docker-proxy
3.76562 MB /usr/bin/docker-proxy
3.77344 MB /usr/bin/docker-proxy
3.78906 MB /usr/bin/docker-proxy
3.80469 MB /usr/bin/docker-proxy
3.83594 MB /usr/bin/docker-proxy
3.84375 MB /usr/bin/docker-proxy
3.87891 MB /usr/bin/docker-proxy
3.90625 MB /usr/sbin/virtlockd
3.92188 MB /usr/bin/docker-proxy
3.97266 MB /sbin/udevd
4.01953 MB -bash
4.08203 MB /usr/local/sbin/emhttpd
4.26562 MB /usr/bin/docker-proxy
4.30469 MB /usr/bin/openbox
4.61719 MB /usr/bin/docker-proxy
4.77344 MB /usr/bin/docker-proxy
4.78125 MB /app/unpackerr
4.85156 MB /usr/sbin/ntpd
5.16797 MB ttyd
5.66797 MB /sbin/rpc.statd
5.98047 MB containerd-shim
5.99219 MB /bitwarden_rs
6.04688 MB containerd-shim
6.05859 MB nginx:
6.09766 MB containerd-shim
6.10547 MB /sbin/haveged
6.19922 MB containerd-shim
6.21094 MB containerd-shim
6.23438 MB containerd-shim
6.30859 MB containerd-shim
6.36328 MB containerd-shim
6.44141 MB nginx:
6.44922 MB containerd-shim
6.44922 MB nginx:
6.45312 MB nginx:
6.46094 MB containerd-shim
6.50781 MB containerd-shim
6.5625 MB containerd-shim
6.62109 MB containerd-shim
6.62109 MB containerd-shim
6.88672 MB nginx:
6.98828 MB nginx:
7.03125 MB /usr/sbin/nmbd
7.28516 MB php-fpm:
7.29297 MB /usr/sbin/smbd
7.42578 MB containerd-shim
7.52734 MB /usr/sbin/virtlogd
7.86719 MB php-fpm:
7.86719 MB php-fpm:
8.125 MB /usr/sbin/smbd
8.22656 MB containerd-shim
8.42578 MB containerd-shim
8.43359 MB containerd-shim
8.4375 MB /usr/bin/slim
8.79297 MB containerd-shim
8.83594 MB containerd-shim
8.96094 MB containerd-shim
8.98828 MB nginx:
9.08203 MB containerd-shim
9.74609 MB containerd-shim
9.79297 MB /usr/sbin/winbindd
9.89062 MB php-fpm:
10.1094 MB php-fpm:
10.3555 MB /usr/bin/python3
10.9336 MB /usr/sbin/winbindd
11.3438 MB php-fpm:
12.2266 MB /usr/local/emhttp/plugins/unbalance/unbalance
12.5273 MB /usr/sbin/winbindd
13.0664 MB php-fpm:
13.0664 MB php-fpm:
13.0742 MB php-fpm:
13.3711 MB php-fpm:
13.4844 MB php-fpm:
13.5625 MB php-fpm:
13.5625 MB php-fpm:
13.5977 MB /usr/local/emhttp/plugins/controlr/controlr
14.6719 MB /usr/bin/python
14.7695 MB php-fpm:
14.8789 MB /usr/bin/python
14.9844 MB /usr/bin/python
15.0859 MB /usr/sbin/smbd
15.1484 MB /usr/sbin/smbd
15.1992 MB /usr/sbin/smbd
17.4922 MB /usr/bin/python
17.9062 MB /usr/sbin/smbd
20.4023 MB /usr/lib/plexmediaserver/Plex
20.6523 MB /usr/sbin/smbd
21.168 MB /usr/bin/python
22.4844 MB /usr/sbin/libvirtd
25.3398 MB Plex
26.5938 MB python
34.2461 MB /usr/libexec/Xorg
34.8789 MB Plex
35.5273 MB docker
37.1523 MB Plex
37.3242 MB Plex
37.9258 MB containerd
38.168 MB /usr/bin/python
39.0625 MB deluge-web
40.3359 MB php7
43.6914 MB php-fpm:
43.8945 MB php-fpm:
45.5078 MB php-fpm:
51.5078 MB /usr/sbin/python3
52.168 MB /usr/sbin/Xvnc
54.707 MB python3
64.25 MB Plex
79.7578 MB /usr/bin/dockerd
104.973 MB python3
110.766 MB /app/radarr/bin/Radarr
136.883 MB /usr/local/sbin/shfs
141.602 MB /app/Jackett/jackett
144.734 MB /opt/ombi/Ombi
146.723 MB mono
167.867 MB /app/radarr/bin/Radarr
188.879 MB mono
280.812 MB mono
293.574 MB system/EmbyServer
332.719 MB /usr/sbin/mysqld
359.27 MB java
788.543 MB rslsync
914.41 MB /usr/lib/plexmediaserver/Plex
2107.17 MB /usr/bin/qemu-system-x86_64
3276.16 MB /usr/bin/qemu-system-x86_64
4268.11 MB /usr/bin/qemu-system-x86_64
4302 MB /usr/bin/qemu-system-x86_64
5088.28 MB krusader
16605.5 MB /usr/bin/qemu-system-x86_64

Again thanks for your help! much appreciated

-

7 minutes ago, mgutt said:

I would say the guide was wrong since beginning. It was never clever to use the full ram size as transcoding path (which "/tmp" does as far as I know).

In my last post you find the link to the full guide to create a ramdisk with a specific size.

I have created the script in "User Scripts" and updated the path (thanks!). It's working.

The only thing I can't figure out is whether I have to enable a schedule for this script?

UPDATE: Excellent guide, thanks! All is running now!

-

1 hour ago, mgutt said:

It seems you did not setup a separate transcoding ramdisk. Do you use /dev/shm? Then this could be your problem. /dev/shm has a total size of 32GB and Plex will fill it over time. Not only for live transcoding, even for optimized versions and live tv streams etc. This is a general temp folder for all transcodings. Its better to define a separate folder with a specific size as described here:

I used 4GB in the past which works flawlessly up to 3 or 4 parallel running 4K transcodings. After I did a benchmark and hit the maximum of my iGPU, I changed it to 8GB as I received an "out of storage" error in Plex, but finally this shouldn't be needed in usual usage. 4GB is sufficient because Plex cleans up the folder automatically when it occupies >95% of the total size.

Plex uses multiple processes to load-balance them across multiple cores/threads. Maybe Emby has only one single-thread process?!

Repeat the command later, so we can compare the results.

Another command to check the size of your /tmp folder (which targets your RAM, too):

du -sh /tmp

I used this guide when I first created my Plex server to use my RAM for transcoding:

Guess things have evolved since then

UPDATE: Did the script and it's working

One question: should I schedule this to run in User Scripts?
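For reference, a fixed-size ramdisk like the one discussed above is basically a tmpfs mount with a size limit. The following is a minimal sketch and not necessarily identical to the script from the guide; the function name make_ramdisk and the DRY_RUN switch are assumptions added for illustration:

```shell
#!/bin/bash
# Sketch: create a size-limited tmpfs ramdisk for transcoding.
# make_ramdisk and DRY_RUN are illustrative names, not part of any guide.
make_ramdisk() {
    local path="$1" size="$2"
    local run=""
    # With DRY_RUN=1 the commands are only printed instead of executed.
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    $run mkdir -p "$path"
    # size= caps how much RAM the ramdisk can use
    $run mount -t tmpfs -o "size=$size" tmpfs "$path"
}

# Example (as root): make_ramdisk /tmp/PlexRamScratch 8g
```

A tmpfs mount does not survive a reboot, so the mount has to be repeated after every boot. That is why running such a script once at startup, rather than on a timer, seems the natural choice.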

-

58 minutes ago, mgutt said:

Next time you should investigate the RAM usage before restarting your server / containers. Use this command:

I don't get the error anymore, but memory usage is going up

.....
0.00390625 MB sh
0.0507812 MB /usr/sbin/crond
0.0664062 MB /bin/sh
0.0664062 MB /bin/sh
0.0664062 MB /usr/bin/tini
0.09375 MB /usr/sbin/acpid
0.101562 MB /usr/sbin/wsdd
0.109375 MB /usr/sbin/avahi-dnsconfd
0.167969 MB dbus-run-session
0.167969 MB logger
0.214844 MB /usr/sbin/crond
0.261719 MB avahi-daemon:
0.289062 MB xcompmgr
0.296875 MB dbus-daemon
0.300781 MB /bin/sh
0.316406 MB /usr/bin/dbus-daemon
0.347656 MB /usr/bin/tini
0.359375 MB bash
0.367188 MB bash
0.449219 MB /usr/sbin/crond
0.464844 MB /bin/bash
0.464844 MB /usr/bin/tini
0.5625 MB /bin/bash
0.679688 MB sleep
0.695312 MB sleep
0.710938 MB sleep
0.714844 MB sleep
0.726562 MB /usr/local/sbin/shfs
0.734375 MB /bin/timeout
0.738281 MB sleep
0.753906 MB sleep
0.984375 MB nginx:
0.984375 MB nginx:
0.984375 MB nginx:
1.01953 MB nginx:
1.1875 MB nginx:
1.21875 MB /usr/bin/privoxy
1.32812 MB /usr/bin/openvpn
1.39453 MB nginx:
1.39453 MB nginx:
1.39453 MB nginx:
1.46875 MB /usr/sbin/atd
1.53906 MB /bin/bash
1.55078 MB /usr/sbin/inetd
1.64453 MB init
1.67578 MB /sbin/agetty
1.69531 MB /sbin/agetty
1.74219 MB /sbin/agetty
1.77734 MB /sbin/agetty
1.78125 MB /sbin/agetty
1.78516 MB /sbin/agetty
1.78906 MB sort
1.79297 MB /usr/sbin/crond
2.01562 MB /sbin/rpcbind
2.03906 MB /bin/bash
2.04297 MB /bin/bash
2.07812 MB /usr/bin/dbus-daemon
2.14844 MB nginx:
2.15234 MB nginx:
2.17578 MB /usr/sbin/rpc.mountd
2.19141 MB /bin/bash
2.24609 MB /usr/lib/plexmediaserver/Plex
2.26562 MB /bin/bash
2.29297 MB /usr/sbin/rsyslogd
2.36328 MB /bin/bash
2.42188 MB /usr/bin/privoxy
2.42578 MB ps
2.51172 MB /bin/bash
2.59766 MB /usr/sbin/sshd
2.60156 MB /usr/bin/openvpn
2.80469 MB /bin/bash
2.84766 MB /usr/bin/tint2
2.89453 MB /bin/bash
2.93359 MB find
3.05469 MB awk
3.39844 MB /bin/bash
3.57812 MB avahi-daemon:
3.72266 MB nginx:
3.73438 MB /usr/bin/docker-proxy
3.73438 MB /usr/bin/docker-proxy
3.73438 MB /usr/bin/docker-proxy
3.73438 MB /usr/bin/docker-proxy
3.76953 MB /usr/bin/openbox
3.77344 MB /usr/bin/docker-proxy
3.80469 MB /usr/bin/docker-proxy
3.80469 MB /usr/bin/docker-proxy
3.80469 MB /usr/bin/docker-proxy
3.80469 MB /usr/bin/docker-proxy
3.83594 MB /usr/bin/docker-proxy
3.83594 MB /usr/bin/docker-proxy
3.83594 MB /usr/bin/docker-proxy
3.84375 MB /usr/bin/docker-proxy
3.85156 MB /usr/bin/docker-proxy
3.85156 MB /usr/bin/docker-proxy
3.85938 MB /usr/bin/docker-proxy
3.85938 MB /usr/bin/docker-proxy
3.86719 MB /usr/bin/docker-proxy
3.89062 MB /usr/bin/docker-proxy
3.89062 MB /usr/bin/docker-proxy
3.89062 MB /usr/bin/docker-proxy
3.96094 MB /usr/bin/docker-proxy
3.97266 MB /sbin/udevd
3.98828 MB /usr/bin/docker-proxy
3.98828 MB /usr/sbin/virtlockd
4.01953 MB -bash
4.07812 MB /usr/local/sbin/emhttpd
4.08594 MB /usr/bin/docker-proxy
4.08984 MB /usr/bin/docker-proxy
4.21875 MB /usr/bin/docker-proxy
4.29688 MB /usr/bin/docker-proxy
4.85156 MB /usr/sbin/ntpd
4.89844 MB /bitwarden_rs
4.98438 MB /usr/bin/docker-proxy
5.10938 MB php-fpm:
5.16016 MB ttyd
5.66797 MB /sbin/rpc.statd
5.91797 MB /usr/bin/docker-proxy
5.98047 MB nginx:
6.10547 MB /sbin/haveged
6.19531 MB /usr/bin/docker-proxy
6.24609 MB nginx:
6.24609 MB nginx:
6.24609 MB nginx:
6.90625 MB /usr/sbin/nmbd
7.23047 MB /usr/sbin/virtlogd
7.28125 MB /usr/sbin/smbd
7.51953 MB php-fpm:
7.90234 MB php-fpm:
8.04297 MB nginx:
8.08203 MB /usr/sbin/smbd
8.08203 MB nginx:
8.4375 MB /usr/bin/slim
8.93359 MB containerd-shim
8.96484 MB containerd-shim
8.98828 MB nginx:
9.29297 MB containerd-shim
9.30859 MB containerd-shim
9.34766 MB /usr/local/emhttp/plugins/unbalance/unbalance
9.38281 MB containerd-shim
9.39844 MB containerd-shim
9.4375 MB containerd-shim
9.48047 MB containerd-shim
9.48828 MB containerd-shim
9.51953 MB containerd-shim
9.51953 MB containerd-shim
9.51953 MB containerd-shim
9.53516 MB containerd-shim
9.54297 MB containerd-shim
9.57812 MB containerd-shim
9.58594 MB containerd-shim
9.61328 MB containerd-shim
9.61719 MB containerd-shim
9.66406 MB containerd-shim
9.82031 MB containerd-shim
9.92969 MB /usr/bin/python3
9.96484 MB /usr/sbin/winbindd
10.7812 MB php-fpm:
10.8203 MB /usr/sbin/winbindd
11.0234 MB /app/unpackerr
11.3438 MB php-fpm:
11.5898 MB containerd-shim
11.7188 MB containerd-shim
11.793 MB containerd-shim
11.9727 MB php-fpm:
12.3555 MB /usr/sbin/winbindd
12.7773 MB php-fpm:
12.8008 MB php-fpm:
13.3047 MB php-fpm:
13.418 MB /usr/local/emhttp/plugins/controlr/controlr
13.4375 MB php-fpm:
13.4375 MB php-fpm:
13.5039 MB php-fpm:
13.5039 MB php-fpm:
13.8086 MB php-fpm:
14.9961 MB /usr/sbin/smbd
15.0273 MB /usr/sbin/smbd
15.418 MB /usr/bin/python
16.5586 MB /usr/bin/python
16.6602 MB /usr/bin/python
17.1328 MB /usr/sbin/smbd
17.6523 MB /usr/bin/python
21.6797 MB /usr/sbin/smbd
22.8477 MB /usr/sbin/libvirtd
26.3164 MB python
26.4609 MB Plex
34 MB docker
34.2461 MB /usr/libexec/Xorg
35.1953 MB /usr/sbin/Xvnc
36.5742 MB krusader
38.4258 MB Plex
39.2656 MB /usr/bin/python
39.6094 MB containerd
39.6484 MB php-fpm:
39.7539 MB php-fpm:
39.9492 MB php-fpm:
41.4102 MB deluge-web
43.1602 MB Plex
51.0469 MB /usr/sbin/python3
54.082 MB python3
69.332 MB python3
81.8398 MB /usr/bin/dockerd
138.496 MB /app/radarr/bin/Radarr
146.586 MB /usr/local/sbin/shfs
158.039 MB /opt/ombi/Ombi
166.699 MB /usr/lib/plexmediaserver/Plex
167.602 MB /app/Jackett/jackett
178.035 MB /app/radarr/bin/Radarr
192.16 MB mono
197.223 MB mono
230.992 MB rslsync
291.141 MB mono
329.223 MB system/EmbyServer
331.602 MB /usr/sbin/mysqld
350.137 MB java
2120.78 MB /usr/bin/qemu-system-x86_64
3245.57 MB /usr/bin/qemu-system-x86_64
4245.45 MB /usr/bin/qemu-system-x86_64
4271.35 MB /usr/bin/qemu-system-x86_64
16604 MB /usr/bin/qemu-system-x86_64

The last 5 are the VMs, with a total of 30 Gigs. Is there anything else above, Docker-wise, that stands out?

(I was surprised that Emby uses so much and that Plex have so many different processes)
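Since programs like Plex are spread over many processes, it can help to add up the memory per command name instead of reading every line. This is a minimal sketch, assuming the usual `ps aux` column layout (RSS in KiB in column 6, command in column 11); the function name sum_rss_by_command is only illustrative:

```shell
#!/bin/bash
# Sum resident memory (RSS, column 6 of `ps aux`, in KiB) per command name (column 11),
# so that many small processes of one program show up as a single total.
sum_rss_by_command() {
    # NR > 1 skips the header line of `ps aux`
    awk 'NR > 1 { rss[$11] += $6 }
         END { for (cmd in rss) printf "%.1f MB\t%s\n", rss[cmd] / 1024, cmd }' | sort -n
}

# Example: ps aux | sum_rss_by_command | tail -n 15
```

Column 11 only holds the first word of the command line, so a name containing spaces is cut off at the first space. That is probably why entries such as /usr/lib/plexmediaserver/Plex look truncated in the lists above.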

-

45 minutes ago, mgutt said:

This tweak has nothing to do with your RAM usage. The source of your problem must be something else (or you used a path, that targeted your RAM). Do you use transcoding to RAM and use the /tmp or /shm folder? Then this is your problem.

Next time you should investigate the RAM usage before restarting your server / containers. Use this command:

ps aux | awk '{print $6/1024 " MB\t\t" $11}' | sort -n

Thanks! Much appreciated! I will try this next time I try the "cache" path again. (Yes, I transcode to RAM, but this was not the issue! I had no transcodes running and I even tried stopping Plex)

-

3 minutes ago, mgutt said:

This has nothing to do with the tweak as it does not even touch your RAM:

https://forums.unraid.net/topic/97726-memory-usage-very-high/?tab=comments#comment-901835

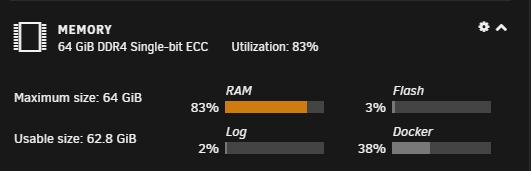

I can just say that after changing it back from cache to user my memory usage dropped. I have rebooted my server more than 10 times, and this is the only change?

Could it be because I have a plex appdata that is near 800G?

-

On 10/1/2020 at 12:54 AM, mgutt said:

I found by accident another tweak:

Direct disk access (Bypass Unraid SHFS)

Usually you set your Plex docker paths as follows:

/mnt/user/Sharename

For example this path for your Movies

/mnt/user/Movies

and this path for your AppData Config Path (which contains the thumbnails, frequently updated database file, etc):

/mnt/user/appdata/Plex-Media-Server

But instead, you should use this path as your Config Path:

/mnt/cache/appdata/Plex-Media-Server

By that you bypass unraid's overhead (SHFS) and write directly to the cache disk.
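The path change described above is just a prefix swap. Here is a small sketch, assuming the share lives entirely on the cache pool (files that sit on the array disks are not visible under /mnt/cache); the helper name to_cache_path is made up for illustration:

```shell
#!/bin/bash
# Turn a user-share path (/mnt/user/...) into the matching direct cache path (/mnt/cache/...).
# Paths that do not start with /mnt/user/ are returned unchanged.
to_cache_path() {
    case "$1" in
        /mnt/user/*) printf '/mnt/cache/%s\n' "${1#/mnt/user/}" ;;
        *)           printf '%s\n' "$1" ;;
    esac
}

# Example: to_cache_path /mnt/user/appdata/Plex-Media-Server
```

Before switching a container over, it is worth checking that the resulting folder really exists on the cache, for example with ls.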

UPDATE:

This works! - My high memory usage was related to the encoding to RAM and thanks to @mgutt this is not a problem anymore!

Actually I have never seen such speed scrolling through covers like I have now, both for Plex and Emby

(I will make a post about this just to share my experience running both Emby and Plex in this thread soon)

Again, thank you so much @mgutt, your tweak really helped me a lot. And also the troubleshooting here:

-

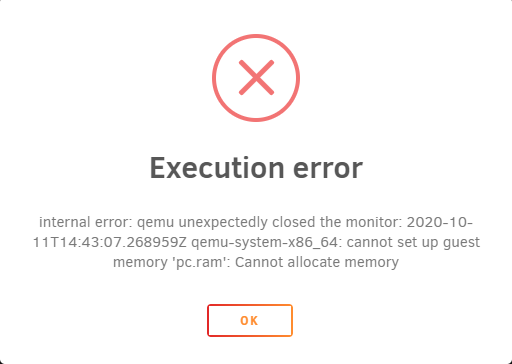

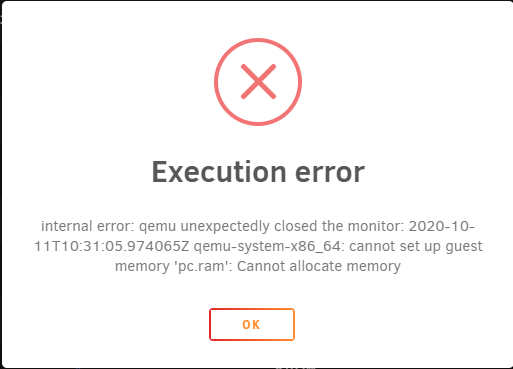

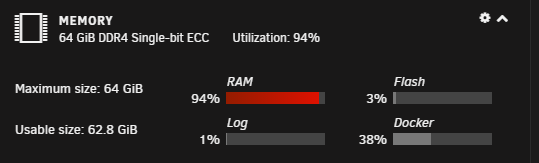

UPDATE

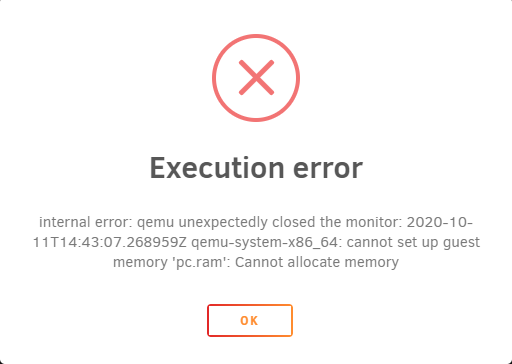

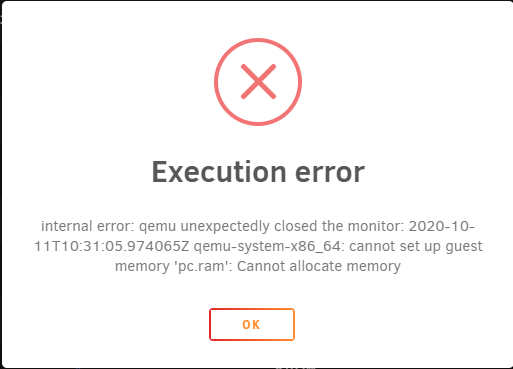

Found the problem - I can now run all the VM I have without getting above error after changing this back to "normal"

This was caused by changing the docker to cache instead of user. This used a lot of memory:

-

12 minutes ago, jonathanm said:

Keep in mind that you are excluding the host (containers included) from using any RAM you give to VM's. Many times it's better to give the VM absolute minimum required resources for performance and allow the host to manage everything you can give it.

Yes I Agree, but having:

My VM's

2 x Windows 10 = 3072 + 6144 MB = 9G

1 x pfSense = 3072 MB = 3G

1 x Hassio = 2048 MB = 2G

1 x Xpenology = 16384 MB = 16G (I found that I need to keep allocation = The orig. HW)

A Total of: 30G - out of a total of 64G?
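The total can be double-checked with a bit of shell arithmetic. A small sketch using the allocations listed above; the function name sum_vm_memory is only illustrative:

```shell
#!/bin/bash
# Add up VM memory allocations given in MB and print the total in MB and GiB.
sum_vm_memory() {
    local total=0 mb
    for mb in "$@"; do
        total=$((total + mb))
    done
    echo "${total} MB = $((total / 1024)) GiB"
}

# Allocations from the list above: 2 x Windows 10, pfSense, Hassio, Xpenology
sum_vm_memory 3072 6144 3072 2048 16384   # prints "30720 MB = 30 GiB"
```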

I can't even start the last WIN 10 with 3G now without getting the :

Shouldn't this be possible, letting the host use the remaining 34 Gigs?

What is the recommended memory value for a Windows 10 VM for office work?

-

Yes, but something is using more RAM than before. I never got this before?

-

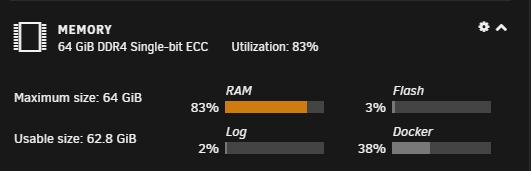

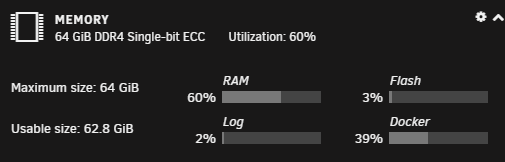

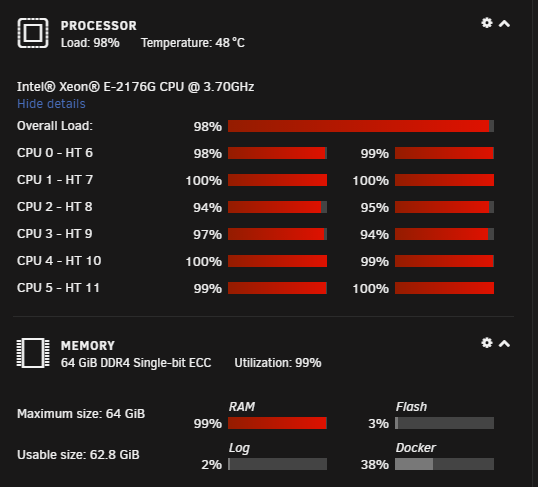

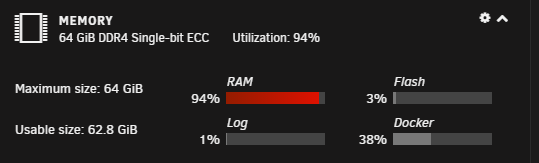

Hi All

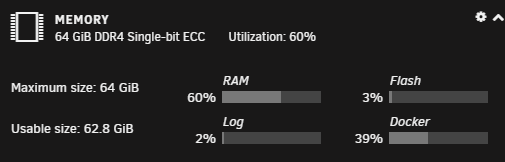

I have 64 Gigs of RAM (Useable 62.8 GiB)

I have multiple Dockers (this was not an issue before adding new VMs)

My VM's

2 x Windows 10 = 2 x 6144 MB = 12G

1 x pfSense = 3072 MB = 3G

1 x Hassio = 2048 MB = 2G

1 x Xpenology = 8192 MB = 8G

Total: 25G

Docker 38% of 62.8G = 24G

Left = 13G

The Load:

TOP:

Do I just need more RAM? (This system has a max of 64G 😞 )

Any tips to where I can cut memory usage?

I got the CPU usage down by selecting different cores for each system

As always your insight and expertise is much appreciated!

-

16 hours ago, mgutt said:

No, as my Plex config folder is located on the NVMe and I use the direct access tweak for the config folder AND the docker.img, everything feels really fast (/mnt/cache instead of /mnt/user):

Next step would be locking the covers in the RAM through vmtouch, but as the server has really much free RAM, they should be already cached, thanks to Linux.

Hi thanks for the Tips!

I have always had both Docker and Appdata on the same NVMe, and because of the size I had to upgrade to a 2TB 😞

I just can't see any difference in the loading time of the posters etc. between the above and the default.

This could be because I always had everything on the NVMe?

Is there any downside to keeping the changes using the cache path?

-

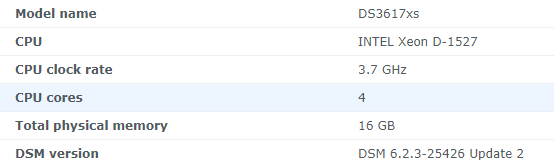

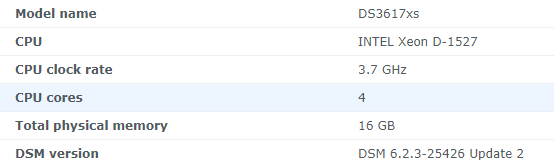

Finally got the update working also on the 3617x - You need to run this script in order to do the latest DSM updates:

https://xpenology.com/forum/topic/28183-running-623-on-esxi-synoboot-is-broken-fix-available/

However, if Jun's script is re-run after the system is fully started, everything is as it should be. So extracting the script from the loader, and adding it to post-boot actions appears to be a solution to this problem:

Download the attached FixSynoboot.sh script (if you cannot see it attached to this post, be sure you are logged in)

Copy the file to /usr/local/etc/rc.d

chmod 0755 /usr/local/etc/rc.d/FixSynoboot.sh

Thus, Jun's own code will re-run after the initial boot after whatever system initialization parameters that break the first run of the script no longer apply. This solution works with either 1.03b or 1.04b and is simple to install. This should be considered required for a virtual system running 6.2.3, and it won't hurt anything if installed or ported to another environment.

1) Check file is in temp dir

ls /volume1/Temp/FixSynoboot.sh

sudo -i

cp /volume1/Temp/FixSynoboot.sh /usr/local/etc/rc.d

chmod 0755 /usr/local/etc/rc.d/FixSynoboot.sh

ls -la /usr/local/etc/rc.d/FixSynoboot.sh

-rwxr-xr-x 1 root root 2184 May 18 17:54 FixSynoboot.sh

Ensure the file permissions are correct (as in the listing above), then reboot the NAS and FixSynoboot will be enabled.
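The check, copy and chmod steps above can also be wrapped in one small function so nothing gets skipped. This is only a sketch: the function name `install_rc_script` is made up, and it does nothing more than the commands already shown.

```shell
#!/bin/sh
# Hypothetical helper: copy a script into an rc.d folder and make it
# executable, mirroring the manual cp + chmod steps.
# Usage: install_rc_script SOURCE_FILE DEST_DIR
install_rc_script() {
    src="$1"
    dest_dir="$2"
    if [ ! -f "$src" ]; then
        echo "missing: $src" >&2
        return 1
    fi
    cp "$src" "$dest_dir/" || return 1
    chmod 0755 "$dest_dir/$(basename "$src")" || return 1
    # Show the result so the permissions can be checked by eye
    ls -la "$dest_dir/$(basename "$src")"
}

# On the NAS, as root, with the paths used in this post:
# install_rc_script /volume1/Temp/FixSynoboot.sh /usr/local/etc/rc.d
```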

That's it, I am now running:

I even did a test running the new migration tool, migrating all 8-9TB and all apps and configuration from my own Synology 3617.

I just wanted to see if I could create a full running backup on my Unraid server.

So far the only thing that doesn't seem to work is my

But again, I'm not sure I want to run virtual machines on a virtual NAS LOL!

Anyway, if someone knows how to fix this, it could be fun to do a benchmark.

-


3 hours ago, bastl said:

Today I got a notice from "Fix Common Problems" that the container "letsencrypt" is deprecated. So far so good; I had already read a couple of weeks ago that you guys had to switch the name of the container, but I never changed my settings until today.

What I did so far:

1. stopped the letsencrypt container

2. backed up the config folder in appdata (copied to a new folder called swag)

3. edited the old "letsencrypt" container

4. changed the name to swag

5. switched to the "linuxserver/swag" repo

6. adjusted the config path to the new folder

7. started the swag container

8. adjusted "trusted_proxies" in the nextcloud config.php in /appdata/nextcloud/www/nextcloud/config to swag

Did I miss something?

I did almost the same: I started by just changing the name and repository, and then renamed the app folder to swag.

Everything seems to work, but I can see differences between a new swag XML install and the old install.

For example, I have this in my "updated one" from the old one:

Which is from the old one, and I still have the old icon?

But everything works.

Oh, and I did apply the fix after running "Fix Common Problems" for some config path... (no errors anymore)
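For reference, the two file-level steps from the quoted list (copying the config folder and changing "trusted_proxies") can be sketched as shell functions. The function names are made up, the container stop/edit/start steps are left to the Unraid GUI, and the sed call is a blunt assumption: it replaces every quoted occurrence of the old name in the file, so check the result before starting Nextcloud.

```shell
#!/bin/sh
# Hypothetical helper: copy the old config folder to the new name,
# leaving the original in place as a backup.
# Usage: copy_appdata OLD_DIR NEW_DIR
copy_appdata() {
    if [ ! -d "$1" ]; then
        echo "no such folder: $1" >&2
        return 1
    fi
    if [ -e "$2" ]; then
        echo "target already exists: $2" >&2
        return 1
    fi
    cp -a "$1" "$2"
}

# Hypothetical helper: replace the quoted old proxy name with the new
# one in a Nextcloud config.php, keeping a .bak copy of the original.
# Usage: rename_trusted_proxy CONFIG_PHP OLD_NAME NEW_NAME
rename_trusted_proxy() {
    cp "$1" "$1.bak" || return 1
    sed "s/'$2'/'$3'/g" "$1.bak" > "$1"
}

# Example (the appdata paths are assumptions, adjust to your shares):
# copy_appdata /mnt/user/appdata/letsencrypt /mnt/user/appdata/swag
# rename_trusted_proxy /mnt/user/appdata/nextcloud/www/nextcloud/config/config.php letsencrypt swag
```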

-

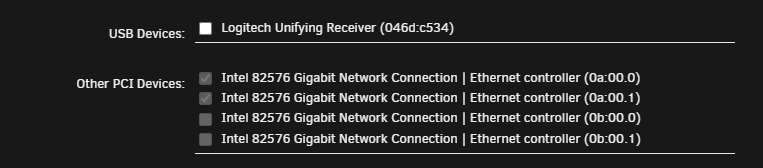

Hi All

I have a working passthrough of a 4-port Intel PCI LAN card to pfSense (2 ports of the PCI LAN group).

I wanted to use the other two for two Windows 10 VMs (I wanted them as separate machines on the network).

And it works, sort of: after 5 or 10 minutes it just disconnects. The install on the VM and the drivers look okay.

Does anyone have any idea what could be causing this? pfSense works perfectly, so maybe it is a Windows 10 passthrough problem.
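One thing that can be checked from the Unraid console is which other devices share an IOMMU group with the passed-through port. The sketch below turns the hostdev source address from the VM XML in this post (domain 0x0000, bus 0x0a, slot 0x00, function 0x0) into its sysfs path and lists the group members. The function names are made up, and this is only a diagnostic; it does not by itself explain the disconnects.

```shell
#!/bin/sh
# Hypothetical helper: build the sysfs path for a PCI device from the
# hex values used in the libvirt <address .../> element.
# Usage: pci_sysfs_path DOMAIN BUS SLOT FUNCTION
pci_sysfs_path() {
    printf '/sys/bus/pci/devices/%04x:%02x:%02x.%x\n' "$1" "$2" "$3" "$4"
}

# Hypothetical helper: list all devices in the same IOMMU group.
iommu_group_members() {
    dev=$(pci_sysfs_path "$1" "$2" "$3" "$4")
    if [ ! -d "$dev/iommu_group/devices" ]; then
        echo "no IOMMU group found for $dev" >&2
        return 1
    fi
    ls "$dev/iommu_group/devices"
}

# Example with the address from the VM XML:
# iommu_group_members 0x0000 0x0a 0x00 0x0
```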

I have already turned off PCI suspend in Windows 10. I added the PCI LAN port through the GUI, not the XML editor:

<?xml version='1.0' encoding='UTF-8'?>

<domain type='kvm'>

<name>03 Windows 10 - THO</name>

<uuid>b9d78a9a-2255-322f-db63-1656cbefe6e2</uuid>

<description>Windows 10 X64 Pro Version 2004 Build 19041.264</description>

<metadata>

<vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>

</metadata>

<memory unit='KiB'>6291456</memory>

<currentMemory unit='KiB'>6291456</currentMemory>

<memoryBacking>

<nosharepages/>

</memoryBacking>

<vcpu placement='static'>4</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='3'/>

<vcpupin vcpu='1' cpuset='9'/>

<vcpupin vcpu='2' cpuset='5'/>

<vcpupin vcpu='3' cpuset='11'/>

</cputune>

<os>

<type arch='x86_64' machine='pc-i440fx-4.2'>hvm</type>

<loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>

<nvram>/etc/libvirt/qemu/nvram/b9d78a9a-2255-322f-db63-1656cbefe6e2_VARS-pure-efi.fd</nvram>

</os>

<features>

<acpi/>

<apic/>

<hyperv>

<relaxed state='on'/>

<vapic state='on'/>

<spinlocks state='on' retries='8191'/>

<vendor_id state='on' value='none'/>

</hyperv>

</features>

<cpu mode='host-passthrough' check='none'>

<topology sockets='1' cores='2' threads='2'/>

<cache mode='passthrough'/>

</cpu>

<clock offset='localtime'>

<timer name='hypervclock' present='yes'/>

<timer name='hpet' present='no'/>

</clock>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>restart</on_crash>

<devices>

<emulator>/usr/local/sbin/qemu</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='raw' cache='writeback'/>

<source file='/mnt/user/domains/03 Windows 10/vdisk1.img'/>

<target dev='hdc' bus='virtio'/>

<boot order='1'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>

</disk>

<disk type='file' device='cdrom'>

<driver name='qemu' type='raw'/>

<source file='/mnt/user/isos/01-W10X64.2004.DA-DK.iso'/>

<target dev='hda' bus='ide'/>

<readonly/>

<boot order='2'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

<disk type='file' device='cdrom'>

<driver name='qemu' type='raw'/>

<source file='/mnt/user/isos/virtio-win-0.1.173-2.iso'/>

<target dev='hdb' bus='ide'/>

<readonly/>

<address type='drive' controller='0' bus='0' target='0' unit='1'/>

</disk>

<controller type='usb' index='0' model='ich9-ehci1'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci1'>

<master startport='0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci2'>

<master startport='2'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci3'>

<master startport='4'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>

</controller>

<controller type='pci' index='0' model='pci-root'/>

<controller type='ide' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<controller type='virtio-serial' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</controller>

<serial type='pty'>

<target type='isa-serial' port='0'>

<model name='isa-serial'/>

</target>

</serial>

<console type='pty'>

<target type='serial' port='0'/>

</console>

<channel type='unix'>

<target type='virtio' name='org.qemu.guest_agent.0'/>

<address type='virtio-serial' controller='0' bus='0' port='1'/>

</channel>

<input type='tablet' bus='usb'>

<address type='usb' bus='0' port='1'/>

</input>

<input type='mouse' bus='ps2'/>

<input type='keyboard' bus='ps2'/>

<graphics type='vnc' port='-1' autoport='yes' websocket='-1' listen='0.0.0.0' keymap='da'>

<listen type='address' address='0.0.0.0'/>

</graphics>

<sound model='ich9'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>

</sound>

<video>

<model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

<hostdev mode='subsystem' type='pci' managed='yes'>

<driver name='vfio'/>

<source>

<address domain='0x0000' bus='0x0a' slot='0x00' function='0x0'/>

</source>

<address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>

</hostdev>

<memballoon model='none'/>

</devices>

</domain>

-

11 hours ago, BigDaddyNehi said:

Just submitted a support ticket to PIA. Everyone put in a ticket and maybe we won't have to switch providers!

Yes, agreed. I just got a reply:

Quote:

Thank you for contacting PIA Support.

I am sorry to hear that you cannot connect to PIA's port-enabled Next-Generation server.

Our DevOps team is still on the process of updating the Port-Forwarding API to connect to the Next-Generation server. Unfortunately, we don't have an ETA about this.

We apologize for the inconvenience.

-


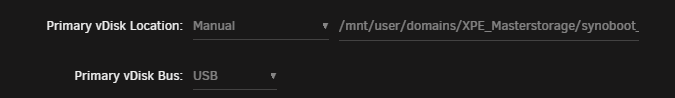

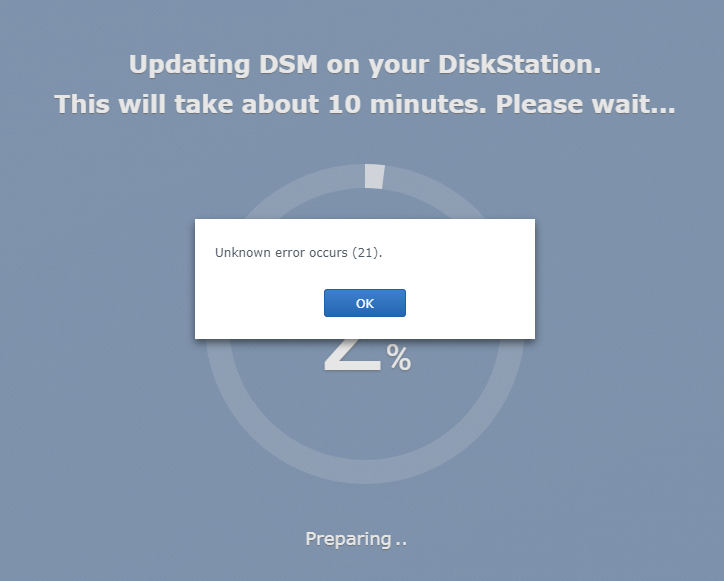

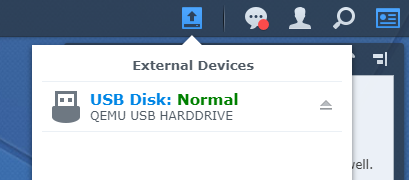

Update:

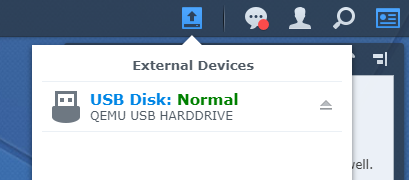

I wanted to get "real LAN speed", so I have now passed two Intel LAN ports directly through to the VM:

Seems to be working (the other two LAN ports are for a pfSense setup :-)

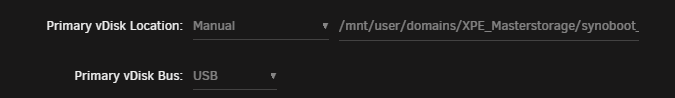

But I have problems updating. For some reason I can only get it working when I set the boot as USB (SATA does not work anymore)

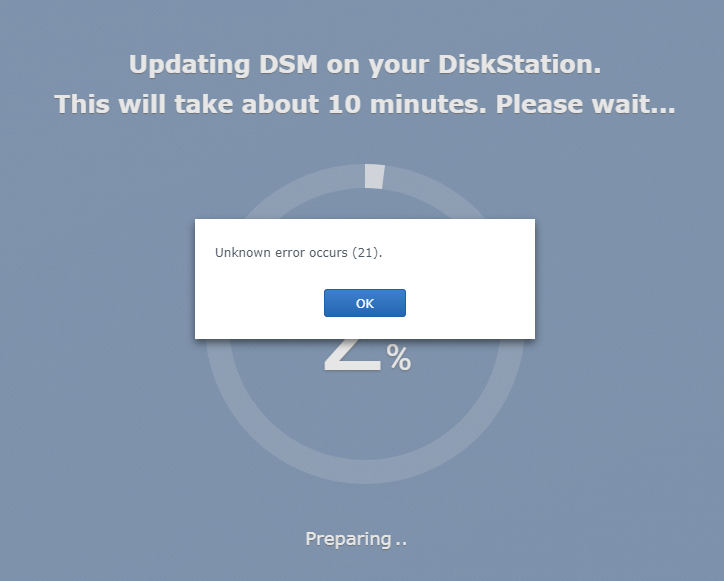

Also, I am stuck at DSM_DS3615xs_25423_6.2.3.pat

when I try to update to: DSM_DS3615xs_25426_6.2.3.pat

And I now have a USB device attached:

Does anyone have any input on solving this?

Memory usage very HIGH? [Solved]

in General Support

Posted

Thanks for all this information, I have to read more to grasp it all.

I'm not sure how you write directly to cache from a Windows PC... (For Nextcloud I just added the path as cache to the docker temp folder)

If I go to my \\IP\cache\temp I can't connect to it - do you have to modify the SMB config in order to make your cache drive directly accessible?