Posts: 810
Posts posted by casperse

-

-

On 6/4/2023 at 12:42 PM, SimonF said:

Connected out side means the device was connected outside USBM. Can you provide the output of

cat /usr/local/emhttp/state/usb.ini | grep error

or

cat /usr/local/emhttp/state/usb.ini | grep vir

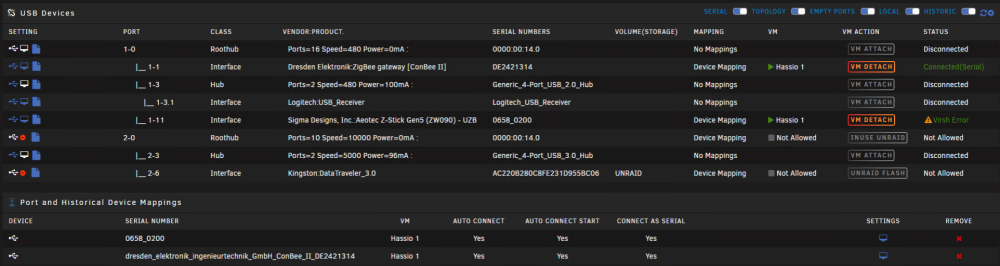

Thanks (Everything worked before the Unraid update)

root@:~# cat /usr/local/emhttp/state/usb.ini | grep error

virsherror = 1

virsh = "error: Failed to attach device from /tmp/libvirthotplugusbbybusHassio 1-001-059.xml

error: XML error: Duplicate USB address bus 0 port 4

root@~# cat /usr/local/emhttp/state/usb.ini | grep vir

virsherror = 1

virsh = "error: Failed to attach device from /tmp/libvirthotplugusbbybusHassio 1-001-059.xml
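The "Duplicate USB address bus 0 port 4" message suggests that two devices in the VM definition are claiming the same USB port. A minimal sketch for checking that, assuming the VM is named Hassio (taken from the XML file name in the error) and that the domain XML writes USB addresses as `<address type='usb' bus='..' port='..'/>`, which is how libvirt normally prints them. The function name is made up for illustration:

```shell
#!/bin/sh
# Print every USB <address> element that occurs more than once in a
# libvirt domain XML read from stdin. Empty output means no duplicates.
duplicate_usb_addresses() {
    grep -o "<address type='usb'[^>]*>" | sort | uniq -d
}

# Small inline sample so the sketch can be tried without a VM.
sample="<address type='usb' bus='0' port='4'/>
<address type='usb' bus='0' port='4'/>
<address type='usb' bus='0' port='2'/>"
printf '%s\n' "$sample" | duplicate_usb_addresses
```

On the server itself the input would come from `virsh dumpxml Hassio | duplicate_usb_addresses` instead of the sample.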

root@:~#

UPDATE: I am really trying to get this working; it is not a happy house when Hassio isn't running 🙂

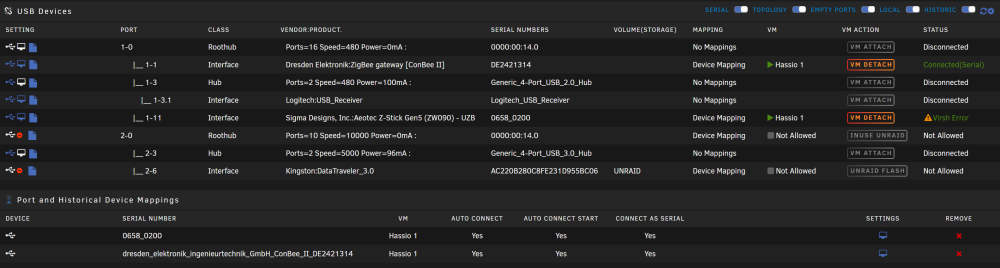

I have tried detaching and re-attaching, and now I get this:

The one that says connected doesn't work in HA?

I don't know what the correct configuration should be in HA.

If anyone knows what the blue line should be, please let me know 🙂

-

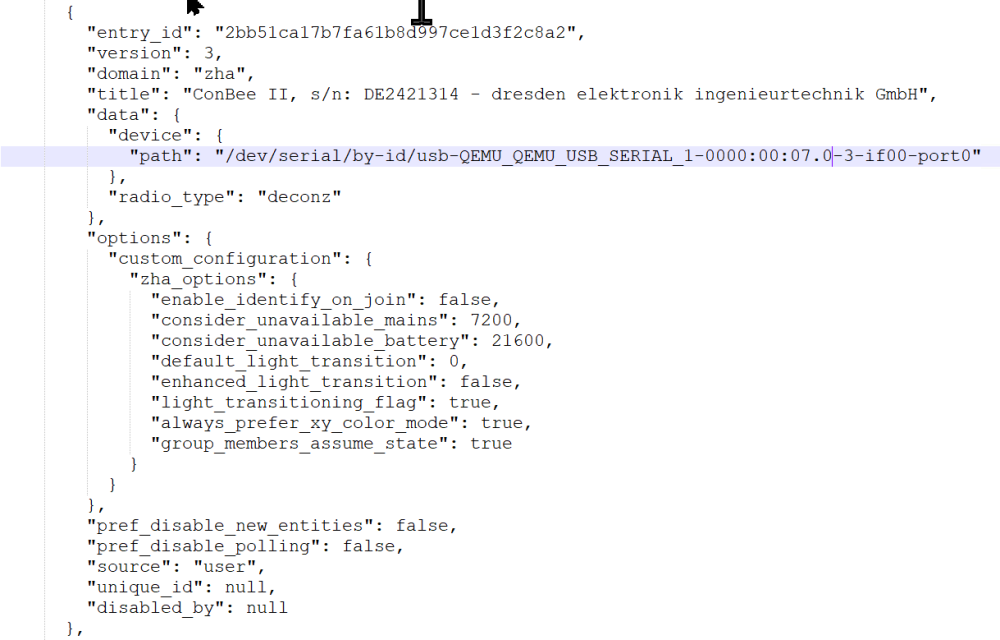

I found the HA settings, not sure what to change:

{ "entry_id": "2bb51ca17b7fa61b8d997ce1d3f2c8a2", "version": 3, "domain": "zha", "title": "ConBee II, s/n: DE2421314 - dresden elektronik ingenieurtechnik GmbH", "data": { "device": { "path": "/dev/serial/by-id/usb-QEMU_QEMU_USB_SERIAL_1-0000:00:07.0-4-if00-port0" }, "radio_type": "deconz" },

The mappings now look like this:

-

-

-

I am also looking at building with the i5, but I really want 128 GB of ECC RAM (4x32) and so far haven't found any modules.

If anyone has a link, please let me know.

I also just noticed that Corsair is coming out with 192 GB DDR5 kits. They are not ECC, but shouldn't DDR5 have some ECC functionality built in?

Pushing the Boundaries of DDR5 – CORSAIR® Launches New 48GB, 96GB and 192GB Memory Kits

-

On 5/13/2023 at 8:17 AM, OrneryTaurus said:

That's a beefy chassis. Expander backplanes should allow you to use a single cable per backplane to see the drives IIRC. You will need at least 2, maybe 3 cables to connect to it (not sure if they split the front into two backplanes or not).

I was looking at something similar from the same place for more storage with full height slots. However, I personally picked up a 36-bay supermicro superchassis and upgraded from a P2000 GPU to a Tesla P4 instead ($800 all in for the two).

Only issues I can think of would be related to shipping and availability of replacement parts / racking the damn thing.

$800, nice price! (I haven't found anything in that price range.) I didn't know about the Tesla P4 card, but I have other cards and I don't want to replace them all with half-height ones 🙂

Also, cooling for new CPUs looks like it will require bigger fans, so the extra height would allow me to use something like a Noctua NH-D15.

Yes, I agree the shipping and the quality of the case would be a risk. But so was buying my last case from the UK, and so far that has been working great.

Old Norco RPC-4224 4U (UK SC-4324S). I am talking to them about another case with 36 drives that they claim has full-height PCIe slots?

I have written to them again and they say it is full height?

I think this one has better build quality, and the price is a lot higher! But I will get 2x PSU (1200W) included in this buy! (Not sure these would have all the new power cables needed for new CPUs, motherboards, GPUs, etc.)

Drawbacks: "Only 36 drives and no room for the extremely tall CPU fan coolers"

I just can't believe that this is really full-height PCIe? (The seller claims this, but the pictures don't look like it? Or do they?)

So either go for this or try the Innovision case?

Thanks for your feedback! @OrneryTaurus much appreciated!

I just really want one new case to "rule them all". The 72-bay is a beast, and if the quality is OK I might dare to roll the dice on it 🙂 Or buy some spare parts when ordering?

I could even have two Unraid servers running in the same case, and use the old server for backup and for experimenting with Proxmox and VMs.

-

Hi All

I always wanted a Storinator case, but the cost is just too high!

Lately I might have found an alternative that could solve a lot of my problems.

Most cases with 36 or more drive bays only have half-height PCIe slots! (You can't even fit a P2000 in them!)

Also, the new CPUs require a lot more cooling, so having half the height for a fan cooler isn't really ideal.

So this might be a solution? (I would appreciate any input on possible problems.)

I am new to backplane expanders and not sure whether my existing HBA would be compatible with this case?

My HBA is an LSI Logic SAS 9305-24i Host Bus Adapter - x8, PCIe 3.0, 8000 MB/s

Would this be able to utilize all these bays?
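As a back-of-the-envelope check on that question, here is the per-drive share of the 8000 MB/s figure above if every bay is busy at the same time (a parity check, for example). This is only a rough sketch: it ignores expander and protocol overhead, and the function name is just for illustration.

```shell
#!/bin/sh
# Rough per-drive bandwidth when all drives behind one HBA are busy at once.
# $1 = HBA throughput in MB/s (8000 is the spec figure quoted above)
# $2 = number of drives sharing it
per_drive_mbps() {
    echo $(( $1 / $2 ))
}

per_drive_mbps 8000 72   # all 72 bays filled -> prints 111
per_drive_mbps 8000 24   # 24 drives          -> prints 333
```

So on paper a full 72-bay case would leave roughly 111 MB/s per drive when everything is read at once, which is below what large modern drives can do on their outer tracks.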

I also have, from my last build:

LSI Logic SAS 9201-8i PCI-e Controller / 9211-8i (IT-mode) / Full height bracket (2 connectors = 8 Sata)

LSI Logic SAS 9201-16i PCI-e 2.0 x8 SAS 6Gbs HBA Card (4 connectors = 16 Sata)

Again I really like the airflow and height of this case for a full-height CPU air cooler!

And the 8 x 120mm fans (according to the specs) - noise is not an issue.

High Dense Rack Mount 8U 72bay Top-loaded Storage Server Chassis

-

Hi Jorge B.

It just finished. It must have sped up at the end, and it says that the array is OK for both drives now?

While it was running it only stated that it was rebuilding the 18TB drive and not Drive 1 (12TB), but according to Unraid my array is fine now?

Anyway, I am now looking into building another Unraid server as a backup server. (This was kind of a wake-up call.)

Again, thanks for all your help. So happy to be up and running again with parity protection on all drives!

-

Hi JorgeB

Speed peaks at 120 MB/sec but is now pretty low, 8.6 MB/sec, most of the time:

Quote: You can try a different PCIe slot for the HBA, if it's in a CPU slot try a PCH one, or vice versa.

My current PCIe slots and placement of HW:

PCIe slot 1: x8 NVIDIA Quadro P2000

PCIe slot 2: x4 NVIDIA GeForce RTX 3060

PCIe slot 3: x8 LSI Logic SAS 9305-24i Host Bus Adapter

PCIe slot 4: x4 M.2. NVMe ICY BOX: IB-PCI215M2-HSL adapter

New placement?

PCIe slot 1: x8 LSI Logic SAS 9305-24i Host Bus Adapter

PCIe slot 2: x4 NVIDIA Quadro P2000

PCIe slot 3: x8 NVIDIA GeForce RTX 3060

PCIe slot 4: x4 M.2. NVMe ICY BOX: IB-PCI215M2-HSL adapter
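For a rough sense of what those two speeds mean for the 18TB rebuild: the time is about disk size divided by average speed. A small sketch, assuming decimal units (1 TB = 1,000,000 MB) and a constant speed, which a real rebuild never has. The function name is only illustrative.

```shell
#!/bin/sh
# Estimate rebuild time in hours.
# $1 = disk size in TB (decimal), $2 = average speed in MB/s
rebuild_hours() {
    awk -v tb="$1" -v mbps="$2" 'BEGIN { printf "%.1f\n", tb * 1000000 / mbps / 3600 }'
}

rebuild_hours 18 120   # at the peak speed    -> prints 41.7
rebuild_hours 18 8.6   # at the current speed -> prints 581.4
```

581 hours is about 24 days, so if the rebuild really stayed at 8.6 MB/sec it would take far longer than the 4-5 days Unraid estimated.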

-

4 hours ago, JorgeB said:

You can try a different PCIe slot for the HBA, if it's in a CPU slot try a PCH one, or vice versa.

Thanks, I will try that after the rebuild is done. I think the HBA is in one of the x8 slots.

Unfortunately it looks like it is going to take a very long time (4-5 days) for the 18TB + 12TB drives to rebuild.

Are there any tweaks I can use to do this faster? (Thinking of the disk settings; most of my drives are larger and faster ones.)

So far I think they are very standard values. (I have tried to search the forum but haven't found any newer posts about this subject.)

-

-

It only appeared at startup and I have not seen it since. I can see that it did a backup of the USB to "My server", so that also worked!

That's a good thing, right?

Like this:

So the error is not related to drive failures but to NVMe drive errors (because of the SMART transfer warnings).

Again, thanks for helping me out! I would never have guessed that my Platinum Corsair AX860i power supply would cause problems. I actually think they have a very long warranty; I have to check that.

-

Okay @JorgeB I got a brand new 1000W Corsair PSU and I just booted the server. Now I get a new error message:

I am pretty sure I have a backup on my Unraid account?

BUT I can see that drive 18 has started to rebuild, and the logs don't show any errors! So far so good!

Getting new errors again, but it's still rebuilding (ETA 4 days!)

So what should I do now? Wait for the two drives to rebuild?

Do I need a new USB for Unraid?

-

@JorgeB That could explain it! I don't believe it's the cables or the controller, so the PSU actually makes sense!

So I don't dare to turn it on before I have a replacement PSU. (I will report back when I have been out shopping for one.)

-

Cables look fine, temperature is fine, and the controller (LSI Logic SAS 9305-24i Host Bus Adapter) has a connection to all drives.

-

- I stopped the array, removed disabled drive 1 and started the array without VM & Docker

- I then added drive 1 and started the array again, and it started a rebuild of drive 18 and emulated drive 1

- BUT then it stopped, and when I looked in the log file I got this:

Now it's writing errors on drives 2 & 6

I have shut down the server and now I don't know how to proceed?

New diagnostic files attached here:

-

-

Exactly the same thing just happened to me: Disk 1 is disabled? (Could be a coincidence?)

ERRORS: Emulating two drives during a rebuild! :-( - General Support - Unraid

-

50 minutes ago, JorgeB said:

That NVMe device still looks good, SMART warning is because it's at 101% predicted life, doesn't mean it's going to fail soon, I have one close to 200% still going, and you cannot run SMART tests on NVMe devices.

Thanks! - I really didn't have the budget to buy one right now, but I thought this was the main reason for my other errors and I didn't want to risk it crashing.

Also, I didn't know that you couldn't run SMART tests on NVMe devices.

Thanks JorgeB, you just made my day! - I guess you then run with it until it fails, or do you have two in a RAID 1 setup?

-

Hi Everyone

When replacing my failed cache pool "cache_appdata", I was wondering if I can do it quickly by moving its contents to my other cache pool?

Moving all these small files back to the array and then back again to the new cache drive would take days!

If the mover can NOT do this between cache pools, could I then do it manually:

- Stop Dockers and VMs

- Change pool from cache_appdata to cache_shares

- Run: rsync -avX /mnt/cache_appdata/ /mnt/cache_shares/

- Remove failed cache pool "cache_appdata"

- Insert new replacement cache drive and name it cache_appdata

- Run: rsync -avX /mnt/cache_shares/ /mnt/cache_appdata/

- Change "select cache pool" back from cache_shares to cache_appdata

- Start VMs & Docker

Or is the only way to wait for all the files to be copied to the array and back again?
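The two rsync steps above could be sketched like this. One thing I noticed while writing it down: the second rsync in my list copies everything on cache_shares back, including whatever already lived there, so the sketch copies one share folder at a time instead. The share name `appdata` is an assumption (use whatever shares actually live on the pool), and the function only prints the command unless RUN=1 is set, so nothing is touched by accident.

```shell
#!/bin/sh
# Copy one share folder from one pool to another.
# $1 = source pool, $2 = destination pool, $3 = share folder (assumed name)
# By default the rsync command is only printed; set RUN=1 to execute it.
copy_share() {
    src="/mnt/$1/$3/"
    dst="/mnt/$2/$3/"
    if [ "${RUN:-0}" = "1" ]; then
        rsync -avX "$src" "$dst"
    else
        echo "rsync -avX $src $dst"
    fi
}

copy_share cache_appdata cache_shares appdata   # before pulling the failed pool
copy_share cache_shares cache_appdata appdata   # after the new drive is in place
```

Running it as shown just prints the two commands for review; `RUN=1` in front of the script would do the actual copy, with Docker and the VMs stopped first as in the list.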

-

It seems the Western Digital is "built for a NAS" and has 5100 TB of writes under warranty = 5.1 PB, so this might be my best option.

Compare that with the Samsung, which failed after 2.1 PB of writes.

Any recommendations would be most welcome 🙂

The only drawback is that this is Gen 3 and they are coming out with Gen 5, but speed isn't everything; reliability is more important.

-

-

Hi All

GHOST IN THE MACHINE?

I really need some help fixing my Unraid server. I am seeing many new errors and am not sure what's causing them.

So far Unraid has been pretty stable.

Most of this started during the rebuild of a new replacement drive, nr. 18, when I got this error:

And the log from Disk 1:

But I guess it is best to wait for drive 18 to finish rebuilding before trying a reboot and a rebuild of drive 1?

Since it looks like I am emulating 2 drives! Disk 1 and disk 18

Drive 1 now shows up under Unassigned drives?

On top of this I am getting some other strange errors and behaviors:

Run out of memory message:

Diagnostic attached

New Diag disk 1 was removed?

!diagnostics-20230421-1305.zip

-

On 4/15/2023 at 1:52 PM, ich777 said:

What did you upgrade?

The driver?

That makes no difference at all, both drivers are supporting both of your cards.

Yes I updated the driver to 5.30.....

- RED arrow to the latest version and got 2 GPU listed). 5.20. to 5.30

[Plugin] USB_Manager

in Plugin Support

Posted

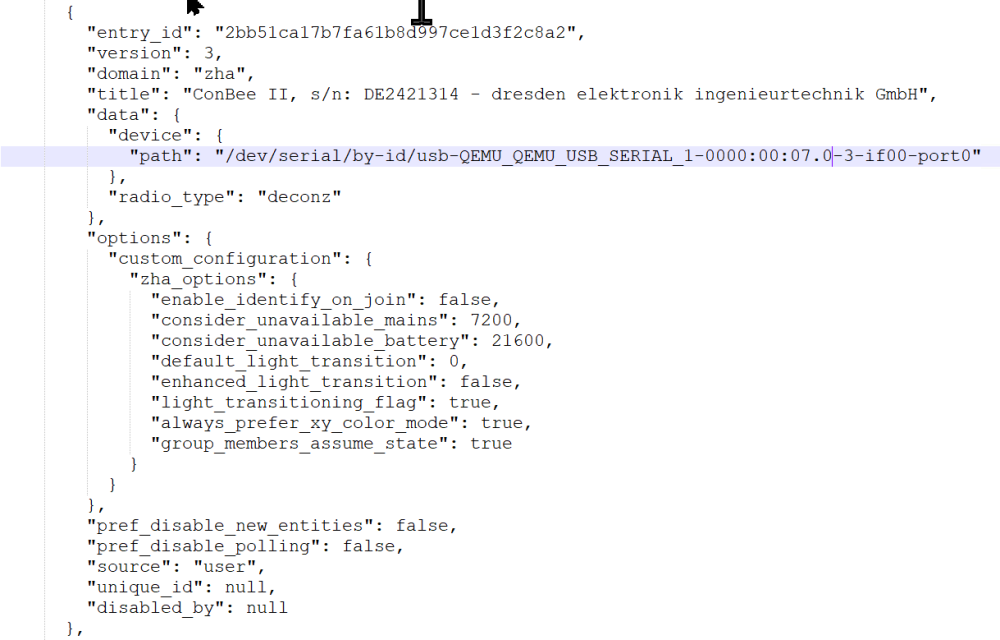

I finally took the courage to delete and re-add the ZHA integration in HA (Hoping it would recover all my devices again!)

And the new path is:

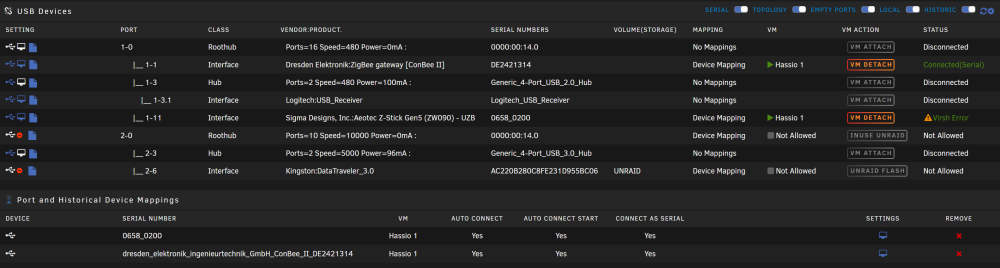

{ "entry_id": "1c7ac4a53b85198687c853f65f3a7fa6", "version": 3, "domain": "zha", "title": "/dev/ttyUSB0", "data": { "device": { "path": "/dev/serial/by-id/usb-QEMU_QEMU_USB_SERIAL_1-0000:00:07.0-4-if00-port0" }, "radio_type": "deconz" }, "options": {}, "pref_disable_new_entities": false, "pref_disable_polling": false, "source": "user", "unique_id": null, "disabled_by": null }

And it seems to be working for both USB devices now! 🙂

But only one is listed as connected? The other one still says "Virsh Error"?

Both use port 4 with "Connect as serial"; I have not been able to change them.

Can I just ignore this error? It looks like it is working OK in HA.
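To double-check which serial path ZHA is actually configured with, the path can be pulled out of the config entry and compared with what the VM sees. A minimal sketch using sed rather than jq, assuming the "path" key appears only once in the entry and its value contains no escaped quotes. The function name is made up for illustration.

```shell
#!/bin/sh
# Print the serial device path stored in a ZHA config entry (JSON on stdin).
zha_device_path() {
    tr -d '\n' | sed -n 's/.*"path"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# The entry quoted above, shortened to the relevant keys.
entry='{ "domain": "zha", "data": { "device": { "path": "/dev/serial/by-id/usb-QEMU_QEMU_USB_SERIAL_1-0000:00:07.0-4-if00-port0" }, "radio_type": "deconz" } }'
printf '%s\n' "$entry" | zha_device_path
```

If the printed path also shows up under /dev/serial/by-id/ inside the HA VM, the stick HA is using is the one that is attached, whatever the other entry reports.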