Kaizac

-

Posts

470 -

Joined

-

Days Won

2

Posts posted by Kaizac

-

13 minutes ago, Lebowski89 said:

This is what I'm aiming for, btw. Missed this post before I made mine. I'm just about there, just dealing with some permission issues with the docker containers trying to access the mergerfs folder. In my case, I have:

The remote gdrive files correctly show up on the remote mountpoint and are successfully merged in the mergerfs mount point. With radarr and co's docker data path pointed at: /mnt/user/data/

But I just tried to add the mergerfs folder as the root folder in radarr and it was a no-go. Permission issue: "Folder '/data/mergerfs/Media/Movies/' is not writable by user 'hotio'" (using hotio containers for the most part). Will double check permissions and mount points and go from there.

You're merging a subfolder with its parent folder. That doesn't work.

-

3 hours ago, Lebowski89 said:

I see. Well I assume deleting the files would be something Google would let me do as they're the ones wanting me to free-up space. I'm not actually at the read-only stage yet, but it happens eventually. To quote some random from Reddit:

So I'll call it 'read-only' in the "Google offered unlimited storage for years and years and is no longer doing so" sense of the word. What I'm thinking is, maybe I can have unionfs/mergerfs mount the local media folder and cloud files, so that radarr/lidarr/sonarr think they're all housed in the same directory on the same file system, and then just skip the next step that seedbox/cloud solutions (like Cloudbox/Saltbox) do, which is uploading the files to the cloud with cloudplow. I assume that radarr/lidarr/sonarr would still be able to delete the previous file (the one that would be located in the cloud), and then simply store the new one locally on UnRaid.

What @JonathanM means is the technically correct explanation of how read-only permissions work with file systems.

What Google is doing is just limiting uploading and calling it "read only". So if you have set up your system as written down in this topic, you can just disable your upload script. Everything will still show up as 1 folder, but your new files simply stay stored locally. Just make sure /mnt/user/local/ is not cache only, or you will run out of space eventually.

-

12 hours ago, xokia said:

file plays fine so I doubt it's corrupt. I can transfer it back to desktop and it plays fine. Only using 1 test file to limit complexity and the time it takes to do things. I suspect maybe a read-only issue is worth looking into. Not sure what it needs to do to the file to scan it, but I will check this later when I get back home.

*edit*

This is what I get when I try to analyze the video: it fails detecting credits and length, unsure why.

ok feel like a boob.........file was corrupted. Played when transferred over to desktop but I didn't go to the end of the file which appears to be missing. Which explains why it did not know EOF.

I retransferred a fresh copy from ubuntu directory and now its scanning the file as expected and streams correctly.

So had 2 issues:

1. Missing updated drivers -> Would help if this was in the driver directory

2. corrupted file

Appreciate all the help!

Glad it works! Does it now show up in your Dashboard with direct play? All problems solved?

-

9 minutes ago, xokia said:

Have tried that, it spins and completes quickly. That could potentially be where the issue is. This should force a scan of credits and a scan of the movie. I am not seeing it do that. Maybe a weird permission issue since I keep my movies separate from the plex install directory. Let me look into that.

I should be able to log into the container and change permissions to plex.plex

You don't set permissions within the container, but within Unraid, on the host path that is mapped to /movies in your docker template.

It could be that the files themselves are limited in access, but then Plex wouldn't be able to play them in the first place.

You could try the Unraid file explorer (top right icon): go to your Plex media folder, select it and set the permissions to read/write for everyone. The file could also be corrupt, so are all your test files having this issue?
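For those who prefer the terminal, here is a minimal sketch of roughly what "read/write for everyone" amounts to on the Unraid host. The function name `open_permissions` is made up for illustration, and the folder you pass in is an assumption: it should be the host path behind /movies, not a path inside the container.

```shell
#!/bin/sh
# Sketch: give everyone read/write on a media folder (plus traverse on directories).
# open_permissions is a hypothetical helper name, not part of Unraid.
open_permissions() {
    # $1 = folder on the Unraid host (the host side of the /movies mapping)
    if [ ! -d "$1" ]; then
        echo "Not a directory: $1" >&2
        return 1
    fi
    # a+rw: read/write for user, group and others
    # X: add execute only on directories (and files that are already executable)
    chmod -R a+rwX "$1"
}
```

Run it from the Unraid terminal, for example `open_permissions /mnt/user/Media`, where the share name is only an example.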

-

1 minute ago, xokia said:

In Plex it shows the correct runtime. As you can see, it shows 2 hours 42 min.

However the "play bar" shows 0.19 -> -0.19; this will change as the movie plays. If you try to skip forward, the movie restarts from time 0.

For reference, here is how the movie shows up under an Ubuntu box using the same hardware. Notice the time starts at 0 and goes to -2.41:52 as expected. The little circle in the play bar can be moved forward with no issue at all.

Try running analyze on the movie in Plex.

-

5 minutes ago, xokia said:

yes. Disk1 is just the host path. under plex it gets mapped to /movies

The movie would not show up at all if this was wrong.

Did you see my edit?

-

39 minutes ago, xokia said:

I have the Wan binding option unchecked. But yes I added the IP/subnet. So I don't think they would play at all if it tried to go to WAN. I also have a 1Gbps WAN connection so BW "shouldn't be" an issue anyway. Thanks for the suggestion though!

The video does direct play and it plays in 4k just fine. The issue is that the video does not show up in the Plex dashboard when direct playing. Also I get an incorrect video length when direct playing on the Shield Plex player. If I try to fast forward, the movie starts over from the beginning. If I force a transcode all these issues go away.

So I'm still doubtful about that Disk1. Normally you name shares after the files or category you store. A share name would be Media for example and the path would be /mnt/user/Media. So you are sure you have a share named Disk1 in which you have the folder plex-media?

And what library path do you use for the library which contains the files you're testing with?

EDIT: if you check the movie in Plex, does it show the correct runtime?

-

33 minutes ago, xokia said:

--

Oh and I forgot the Network settings within Plex. Did you put in your LAN networks there? Like 192.168.1.0/24 or whatever your subnet(s) are? The Shields are direct playing, but maybe they don't find the direct route to your Unraid box and go through the WAN back to your Unraid server, which can cause bandwidth limits.

-

19 minutes ago, xokia said:

What you are seeing is just a general frustration with getting it to work. I am fully willing to accept something wrong with my config. I am not new to plex or plex server I have been using it for a couple years. I am aware of how its supposed to work as I have set this up on ubuntu and have zero of these issues.

I am aware audio and subtitles can cause a CPU transcode of those files. However the video will still transcode on the GPU. I have no issue with these files transcoding on the CPU. It's a non-issue. The workload to do that is very minimal.

/dev/dri -> shows both card0 and renderD128

I use nvidia shield devices for playback because I have found they are the most compatible with video and audio codecs. These are all hardwired using 1GB ethernet. I am not trying to playback anything that hasn't been previously tested as fully functional under ubuntu plex-server. Just trying to replicate the same results under a different OS.

A question on the video driver. Does unraid 6.14 not come with updated video drivers preinstalled? I missed "Intel-GPU-TOP" as it did not show up under the "driver" tab. Maybe this is the issue.

Unraid 6.14? Probably a typo, but 6.12.4 is the latest (stable) version. And installing Intel_GPU_TOP isn't so much about a missing driver. It exposes the GPU readings to Unraid, so you can check them with the GPU Statistics plugin, for example. That way you know to what capacity your iGPU is being used.

For the internal Plex settings, try setting both the transcoder quality and the hardware transcoding device to auto, and the background preset to Very Fast.

In the Docker template change "plexpass" to "docker". And did you make sure to use the Plex claim function? Just checking, I expect you did and just put in the link for anonymization.

Another thing that is odd to me is that you are apparently using a Share called "Disk1"? Is that correct?

-

9 hours ago, xokia said:

I downloaded 1.32.5

If I stream a movie directly nothing shows in my dashboard as playing anyone know why? Even though the bandwidth clearly shows something streaming.

Also if I stream directly i'm unable to fast forward and the incorrect length of movie is shown.

+0.19 -> -0.19

If I force a transcode by changing the resolution then it correctly shows up in the dashboard. The correct movie length also shows up. I really want to like unraid, there are a bunch of features I actually like. But I can't seem to get ANY of the plex-server apps on unraid to work correctly. I think I have tried them all. Maybe it's a quirk of running in Docker? Running on linux these issues do not exist. Is there any stable version on unraid that works 1/2 correctly? Any help is appreciated.

Definitely a problem with your setup, not Unraid. Comments like "I really want to like Unraid, but......" are not going to encourage people to try and help out. We're not here to motivate you to use a product. Your comments, and what I've seen in your other topic, show some lack of understanding of the technology. Not a problem at all, but right now you're pointing at all other factors as the problem instead of your own lack of understanding.

I'm running Plex on the latest Unraid stable version and also the latest Plex version (Plex Pass), and even my HW transcodes still work. So I don't even have the issues the others here have.

- Firstly, in your own topic, I see that you're playing a file that has both transcoded audio and a PGS subtitle. PGS subtitles are always CPU transcodes, and single-core at that. So CPU spikes during playback of such files are normal.

- Second, I'm not seeing your GPU stats, so I wonder which plugins you actually installed for your GPU? You should have the plugin "Intel-GPU-TOP". And with the plugin "GPU Statistics" you can see your GPU workload.

- You're in the linuxserver Plex topic right now. So use that docker, not the other ones. After you've installed it properly and can access your Plex server, click on the Plex docker and then > console. Enter:

ls /dev/dri

What is the output in the console?

- How are you playing a video, like in the screenshot above? What device? What app?

- What are your Plex transcoder settings?
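The `ls /dev/dri` check from the list above can also be wrapped in a small script for the container console. This is only a sketch: `check_dri` is a made-up helper name, and it assumes the iGPU shows up as a `renderD*` node (typically renderD128), which is what Plex needs for hardware transcoding.

```shell
#!/bin/sh
# Sketch: report whether an iGPU render node is visible inside the container.
# check_dri is a hypothetical helper name.
check_dri() {
    # $1 = device directory, defaults to /dev/dri
    dir="${1:-/dev/dri}"
    if ls "$dir"/renderD* >/dev/null 2>&1; then
        echo "render node found"
    else
        echo "no render node"
    fi
}

check_dri
```

If this reports no render node, the /dev/dri device is most likely not passed through in the Docker template.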

-

10 hours ago, fzligerzronz said:

will I have to keep deleting this all the time or will it permanently stop the checking?

No, it usually happens after an unclean shutdown, or when the script didn't finish correctly. In that case you need to remove the file manually, or with some automation, for example at the start of the array.

If the script runs correctly it will delete the file at the end. The file is there to prevent the same script from starting a second instance of the same job.
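To show the idea, here is a minimal sketch of such a checker-file guard. It is not the actual upload script: `LOCK_FILE`, its path and `run_once` are made-up names for illustration.

```shell
#!/bin/sh
# Sketch of a "checker file" guard. LOCK_FILE and run_once are hypothetical names.
LOCK_FILE="${LOCK_FILE:-/tmp/upload_running_example}"

run_once() {
    # Refuse to start if a previous run left its checker file behind.
    if [ -f "$LOCK_FILE" ]; then
        echo "Script already running (found $LOCK_FILE)" >&2
        return 1
    fi
    touch "$LOCK_FILE"
    # Run the job passed as arguments, then remove the checker file again.
    "$@"
    rc=$?
    rm -f "$LOCK_FILE"
    return "$rc"
}
```

After a power loss or unclean shutdown the final `rm` never runs, so the file stays behind and the next run refuses to start. That is why it sometimes has to be deleted by hand.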

-

15 minutes ago, fzligerzronz said:

i have a problem. I have my uploader script set to run every 2 hours or so, to keep my drives not too full.

When it runs tho, it says that script is already running, and I have to run the rclone unmount script just to get the uploader to properly run.

Has anyone had this problem before?

It's a checker file in appdata/other/rclone/remotes/gdrive_media_vfs. Just delete that file and you can start the upload.

-

5 hours ago, remserwis said:

Thank you for taking the time, Kaizac.

I am sorry for the confusion... it's because I haven't mastered or completely understood the background mechanics of rclone or mergerfs.

I just learn by setting things up and watching the results.

So this cache I was talking about... it looks like it's this mergerfs mount, created with the accessed folder structure for fast access. I don't care too much about it (it's faking the system so the cloud files look offline). Originally I thought it was content and files downloaded by Plex or the RR's. It looks like my RR's, through sabnzbd, download properly to the local folder in my data share, and it's merged with the online files provided by rclone in the unionfs folder. LOVE IT.

I will check today, but my Unraid cache should be irrelevant... the data folder will keep freshly downloaded files in the Unraid cache until the mover puts them on the array, but that works invisibly at the share level, so it shouldn't affect the merged unionfs etc.

I will set up this mergerfs cache mount to stay in cache all the time (backed to array only) so the mover won't touch it and access should be very fast.

And finally I mentioned my 350TB library as in last 2 months Google shut down unlimited plan for cloud....Dropbox being only alternative is limiting/shutting it down as we speak....so I know (also from previous replies in this post) there is plenty of data hoarders like me with libraries between 100TB and 1PB for Plex purposes which are looking for a way out.

Yes, the only way is a costly investment, $8K-$10K minimum, in drives, SAS cards and better servers... My hybrid approach is supposed to be a "one disk at a time" solution for them, where you can upgrade your Unraid server gradually as you download data from Gdrive. I think Google will give some notice before deleting all the currently read-only accounts with hundreds of TBs on them.

Thank you also for the script to copy folders. It won't work or at least will be hard to use by folder for me. I have only 6 of them.

Hopefully there is other option, maybe moving (not copying) data until for ex 20TB quota (when one disk added) so when executed again month later with another disk added, it will move next batch of files.

I manage directories and files in plex for years now...and splitting into many folders while managing it with RR's is pain in the ass.

Constantly changing root folders to match RR, Plex and genre ( kids, movies etc) doesn't work well with 15K+ movies and 3K+ TV shows.

To make things funnier I intend to encode some old stuff to H265, after having them locally...some of it RR's will redownload in this format, but as library of 4K HDR UHD movies with lossless quality is growing (every one is 60-80GB). I am looking towards higher total space needed.

Anyway this post derailed from main topic...I am sorry for that...but as reading past replies to learn I've seen lot of similar interest from others.

I think you're talking about the rclone VFS cache? That's just to keep recently played files cached locally, so you don't have to download them every time you start accessing the files. It is not some file system you can access.

I was talking about the Unraid cache, which you use for a Share.

About the moving and Google deleting accounts. I think people are expecting too long of a period where they can keep access to the files. Maybe Google has some kind of legal obligation to keep access to the files as long as the user is paying, but I doubt it. I would count on 6 months maximum before accounts get deleted.

I think you are also one of the VERY few who will be going the full local route. The investment is just way too big, and there are much cheaper solutions which cause a lot less headaches.

Moving files when you have space again is still foreign to me. I think most will make a selection first of what data they want to save local, instead of just moving everything over without prioritizing. But if that's what you really want to do, you could use the rclone move script to move from your cloud to your /mnt/disks/diskXXX once you added a new one. Once that drive is full, the script won't work anymore.

I still believe the 2 root paths per folder with the RR's is the way to go for most people who want to move files locally, but selectively. Setting that up and adding the paths in Plex would take 15 minutes maximum. After that, you can go through Radarr and Sonarr per item.

Obviously, with your volume, this is time-consuming. But like I said, your plan is not something many will do. I don't know your personal and financial situation, so I don't want to make too many assumptions. But knowing that I just purchased 88 TB of storage with deals, and still spent 1300 euros, I don't think going to 300+ TB is reasonable. And I don't even plan to go local, I just needed drives for backups and such.

I don't think you need to worry about the main post anymore. It's not possible anymore, so now it's mostly about moving local again ;).

-

9 hours ago, remserwis said:

Moving from Google Drive to UnRaid - SaltBox

Problem:

I think I am not the only one with same problem as stated in the previous post.

Plex/Emby users with huge libraries got stuck after recent changes.

Google put Workspace accounts in read-only mode and surely will delete them at some point.

Dropbox was temp solution as they are limiting "unlimited" plan (after everyone started moving there) and will shut it down eventually also.

My local UnRaid server can't handle 350TB yet and will require significant investments to do so.

I've decided to do hybrid setup for now and start spending money to go local completely.

This script is the key for that. Thank you for creating it; before I found it I was hopeless, as I couldn't afford the one-time investment to move all the data.

I've had saltbox server (modern version of Cloudbox) so I was able to copy rclone config with SA accounts and it's mounting TeamDrives nicely.

Media were split within few TeamDrives which are mounting using "45.Union" option from Rclone and show up basically as one Media folder which is great. (same as SaltBox).

Idea:

Merge local folder with cloud drive under the name unionfs to keep all Plex/RR's/etc configs when moving Apps.

In that case:

1) all new files would be downloaded locally to "mnt/user/data/local/downloads" folder

2) RR's would rename it locally to "mnt/user/data/local/Media" folder and never got uploaded

3) old GDrive files would be mounted as "mnt/user/data/remote/Media"

4) Merged folder would be "mnt/user/data/unionfs/Media"

5) Plex and RR's would use "mnt/user/data/" linked as "/mnt" in Docker settings (this is just to keep folder scheme from SaltBox).

Questions:

- How to avoid cache mount ? I would love RR's or Plex write directly to "mnt/user/data/local/Media" folder. if I create there a file or directory in command line it works like intended being visible in merged "mnt/user/data/unionfs/Media". But when Plex scanned one library (using "mnt/user/data/unionfs/Media" path) it created metafiles with proper directory structure but in cache mount.

- What would be the script/command etc to start moving data from Gdrive to this "mnt/user/data/local/Media" folder which at the end of this long process will have all the Media? If it can be somehow manually controlled by folder or data cap it would be great as I would love to do it when adding one or few disks at the time (budget restricted).

So far the only thing I've had to change in the script was to delete "$RcloneRemoteName" from the path in all 3 variables (to have the local and Teamdrives root content directly in the merged "mnt/user/data/unionfs" folder):

RcloneMountLocation="$RcloneMountShare"

LocalFilesLocation="$LocalFilesShare"

MergerFSMountLocation="$MergerfsMountShare"

I hope my thoughts can be useful to someone... I can gladly help with the part of the process I was able to figure out (with my limited linux skills) and I am hoping for some insights/help also.

I'm a bit confused by your post, since you seem to be sharing info and also asking questions?

Firstly, I think people with big libraries should obviously first decide what they want to do with their cloud media. Do you need all those files, or can you sanitize? Secondly, I think something that many people now haven't done (there was no need to) is being more restrictive on media quality and file size. Another possibility now, that I think not many used, is to add something like Tdarr to your flow to re-encode your media.

I think the problem with moving local right now is that we have often nested the download location within our merge/union folder. Now that you disable the uploading, the files are stuck on your local drive (often a cache drive for speed). So when your mover starts moving your media to your array, your download folder structure also gets moved, breaking dockers like Sab/NZBget.

I've thought a lot about this and how to work around it. One idea might be to create a separate share and add it to the merged folder. But I still expect problems with the RR's not being able to move the files, because your local folder is still the write folder.

I think the easiest choice is to leave everything as it is. Install the Mover Tuning plugin from the app store. There you can supply a file listing the paths of the files/directories the mover should ignore. This way you can just keep native Unraid functionality with the mover, no need for rclone. And you can keep your structure intact whilst making your share cache to array instead of cache only.

And in case you didn't know, you can use the /mnt/user0/ path to write directly to your array, bypassing your cache. However, within the union/merger folder I don't see how we can use that and also have the speed advantages of using the merged folder with a root structure of /mnt/user/ as /user.

Regarding the script, I think you have no other option than to define the directories you want to move over manually. Something like this:

#!/bin/bash
rclone copy gdrive_media_vfs:Movies '/mnt/user0/local/gdrive_media_vfs/Movies' -P --buffer-size 128M --drive-chunk-size 32M --checkers 8 --fast-list --transfers 6 --bwlimit 80000k --tpslimit 12
exit

I don't see why you would want to pump all the media over without being selective, though. Getting 350TB local is not something you will be achieving without a big investment in drives and a place to put them. You'd almost certainly need to move to server equipment to store this big number of drives. And with current prices, you'd be looking at about 5k of drives you need.

So you could also add another folder for each category, like Movies_Keep. And then add that folder to your Plex libraries. Then within the RR's you can determine per item if you want it local or not. The RR's will take care of moving the files, and Plex won't notice a difference. And you can just run your rclone copy script on those folders' contents, without need for more specification.
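As a rough sketch of that selective approach, the copy can loop over a hand-picked list of folders. `Movies_Keep` comes from the suggestion above; `TV_Keep`, the `FOLDERS` list and the `DRY_RUN` switch are assumptions for illustration, and the folder names must not contain spaces for this simple loop to work.

```shell
#!/bin/sh
# Sketch: copy a hand-picked list of folders from the cloud remote to the array.
# FOLDERS, DRY_RUN and TV_Keep are hypothetical; adjust REMOTE and DEST to your setup.
REMOTE="gdrive_media_vfs"
DEST="/mnt/user0/local/gdrive_media_vfs"
FOLDERS="Movies_Keep TV_Keep"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really copy

copy_folder() {
    # $1 = folder name that exists on the remote
    if [ "$DRY_RUN" = "1" ]; then
        echo "rclone copy $REMOTE:$1 $DEST/$1 -P --transfers 6 --checkers 8"
    else
        rclone copy "$REMOTE:$1" "$DEST/$1" -P --transfers 6 --checkers 8
    fi
}

for folder in $FOLDERS; do
    copy_folder "$folder"
done
```

By default it only prints what it would run. Start it with `DRY_RUN=0` once the printed commands look right.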

-

8 minutes ago, aesthetic-barrage8546 said:

I mean I can't run two scripts on one port at the same time.

I need some help with how to map my scripts to different ports or merge two rclone mounts into one script.

Now I'm trying to add the custom command --rc-addr :5573 and it seems everything started, but I haven't tested this yet.

The port problem, you can fix it the way you do now. But I wonder if it's not another rclone script (maybe the upload?) that's using the --rc. I can't find the remote command in the mount script.

Anyway, that's not what you are trying to do. You want to combine multiple mounted remotes into 1 merged folder. That's perfectly possible, but not with this script. You will need a custom script which does another mount and then changes the merge. You could try to copy part of the script to the top for the first mounting.

The snippet for the merge would look like this:

mergerfs /mnt/user/local/gdrive_media_vfs:/mnt/user/mount_rclone/gdrive_media_vfs:/mnt/user/mount_rclone/onedrive /mnt/user/mount_mergerfs/gdrive_media_vfs -o rw,async_read=false,use_ino,allow_other,func.getattr=newest,category.action=all,category.create=ff,cache.files=partial,dropcacheonclose=true

Test this first with some separate test folders to make sure it works as you'd expect.
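For such a test, a small sketch like the one below can build the branch list and print the mergerfs command before you run anything. The `/mnt/user/test_*` folders and the `join_branches` helper are made up for illustration, and the option list is trimmed to a few of the options from the snippet above.

```shell
#!/bin/sh
# Sketch: build the ':'-separated branch list for a mergerfs mount.
# join_branches and the test_* folders are hypothetical.
join_branches() {
    out=""
    for branch in "$@"; do
        if [ -z "$out" ]; then
            out="$branch"
        else
            out="$out:$branch"
        fi
    done
    printf '%s\n' "$out"
}

# The local folder goes first so that category.create=ff picks it for new files.
BRANCHES="$(join_branches /mnt/user/test_local /mnt/user/test_remote1 /mnt/user/test_remote2)"
echo "mergerfs $BRANCHES /mnt/user/test_merged -o rw,allow_other,category.create=ff"
```

The script only prints the command. Once the printed line looks right, create the folders and run it by hand.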

-

11 minutes ago, KeyBoardDabbler said:

I just tried running the below command, it did copy the correct files from the shared drive to my local directory but the folder/ file names are now obscured in the local folder. Is it possible to remove the crypt on copy?

#!/bin/bash
# Relocate moviesclasic > local
rclone copy tdrivesportsppv:crypt/6uub5b0iurd8j74m2dddn77dcs /mnt/user0/sports-ppv \
--user-agent="transfer" \
-vv \
--buffer-size 512M \
--drive-chunk-size 512M \
--tpslimit 8 \
--checkers 8 \
--transfers 4 \
--order-by modtime,ascending \
--exclude *fuse_hidden* \
--exclude *_HIDDEN \
--exclude .recycle** \
--exclude .Recycle.Bin/** \
--exclude *.backup~* \
--exclude *.partial~* \
--drive-stop-on-upload-limit
exit

I tried the built in file explorer via the unraid webui and copied some files from "/mnt/user/mount_rclone/tdrive_sports_ppv_vfs/sports_ppv" to "/mnt/user0/sports-ppv". This worked as expected but i dont think this is the best method to transfer large folder.

Transferring from within your folder structure is more risky. You won't get the Google Drive feedback signals, and if your mount drops its connection the files might also get corrupted.

You need to change --drive-stop-on-upload-limit to --drive-stop-on-download-limit. You're not uploading but downloading, which has a 10TB limit per day. Not something you can achieve with a gigabit connection, so not really needed to put it in.

Regarding encrypted data, when you transfer from the crypt mount to your local storage it should be the decrypted data. I'm doing that as we speak. But your rclone mount and folder structure is strange to me. It seems you are copying from your regular mount, since you use an encrypted folder name. You need to copy from your crypt mount. So let's say you have gdrive: as the regular mount and a crypt named gdrive_crypt: that points to gdrive:, then you would transfer from gdrive_crypt: to your local storage.

-

6 hours ago, fzligerzronz said:

Thanks. Upload speed restored to full speed!

Just a heads up for you and others considering moving to Dropbox. Since 1 or 2 days ago Dropbox has adopted a policy of granting only 1TB of additional storage per user per month. So getting allocated storage beforehand and then increasing it rapidly for migrating will be impossible, or you must be very lucky with the support rep you get. Dropbox can't handle the big influx from Google refugees.

But there have also been strong rumors that Dropbox is moving to the same offering as Google, limiting to 10TB per user. So be warned, that you might end up in the same situation as now with Google.

-

14 hours ago, KeyBoardDabbler said:

So i have also recently received the email notice that my drive will change to read only due to being over the storage limit. It has been a great service over the last few years considering the cost, but i knew this day would come. Luckily i have been building up my local storage in this time.

What is the best way for me to start copying files from gdrive to local storage. Should i just use rsync and copy from the rclone mounts to the relevant local folder.

Thanks

Just rclone copy back from the mount to your local share. I would advise using the user0 path to bypass cache.

1 hour ago, fzligerzronz said:

So I've been using the upload script for a while, but I'm trying to optimise it for Dropbox now. Do I need to take off the -- in these for it to work?

# process files

rclone $RcloneCommand $LocalFilesLocation $RcloneUploadRemoteName:$BackupRemoteLocation $ServiceAccount $BackupDir \

--user-agent="$RcloneUploadRemoteName" \

-vv \

--dropbox-batch-mode sync

--buffer-size 5G \

--drive-chunk-size 128M \

--tpslimit 8 \

--checkers 8 \

--transfers 32 \

--order-by modtime,$ModSort \

--min-age $MinimumAge \

$Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \

--exclude *fuse_hidden* \

--exclude *_HIDDEN \

--exclude .recycle** \

--exclude .Recycle.Bin/** \

--exclude *.backup~* \

--exclude *.partial~* \

--drive-stop-on-upload-limit \

--bwlimit "${BWLimit1Time},${BWLimit1} ${BWLimit2Time},${BWLimit2} ${BWLimit3Time},${BWLimit3}" \

--bind=$RCloneMountIP $DeleteEmpty

Thanks

No, keep the --. You need a \ at the end of every line of the command except the last one. The --dropbox-batch-mode sync line is missing one, at least.
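One way to sidestep the backslash problem altogether is to collect the options step by step and hand them to rclone in one go. This is a minimal sketch and not the upload script itself: `run_upload` and the `RCLONE` override are made-up names, `move` is assumed as the rclone command, and the Google Drive specific --drive-* flags are left out since the target is Dropbox.

```shell
#!/bin/sh
# Sketch: build the option list step by step instead of one long
# backslash-continued command. run_upload and RCLONE are hypothetical names.
RCLONE="${RCLONE:-rclone}"   # set RCLONE=echo to preview without running rclone

run_upload() {
    # $1 = local source folder, $2 = remote destination
    src=$1
    dst=$2
    set -- move "$src" "$dst"
    set -- "$@" --user-agent="transfer" -vv
    set -- "$@" --dropbox-batch-mode sync
    set -- "$@" --buffer-size 5G
    set -- "$@" --tpslimit 8 --checkers 8 --transfers 32
    "$RCLONE" "$@"
}
```

With `RCLONE=echo`, a call like `run_upload /mnt/user/local/media dropbox_media:media` (paths are examples) prints the full command line, so a dropped option shows up right away.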

-

20 minutes ago, MonsterBandit04 said:

well that worked for all of 2 seconds, then docker didn't mount. second reboot back to not seeing anything at all. so lost

What do you mean by Docker didn't mount?

You could also try to use my simple mount script from 1 or 2 pages back. Just mount the cloud share first, see if that works. Only then continue with the merger part.

-

13 minutes ago, MonsterBandit04 said:

[gdrive-media]
type = drive
client_id = xxxxxxxxxxxx
client_secret = xxxxxxxxxx
scope = drive
root_folder_id = XXXXXXXXXXXXXX
token = XXXXXXXXXXXX
team_drive =

[gdrive-media-crypt]
type = crypt
remote = gdrive-media:/media
password = xxxxxxxxxx
password2 = xxxxxxxxxxx

[dropbox-media]
type = dropbox
client_id = xxxxxxxx
client_secret = xxxxxxxxxxxx
token = xxxxxxxxxxx

[dropbox-media-crypt]
type = crypt
remote = dropbox-media:/media
password = xxxxxxxxxxxxx
password2 = xxxxxxxxxxxxx

Yes, when I go to /mnt/user/gmedia and /mnt/user/gmedia-cloud I see encrypted stuff, not 'Movies', 'TV Shows' etc. But if I rclone lsd gdrive-media-crypt: it's unencrypted.

In the crypt remote for Google Drive, remove the "/" from the remote path, so it becomes gdrive-media:media instead of gdrive-media:/media. After that, reboot and run the mount script again, see if that helps.

-

28 minutes ago, Michel Amberg said:

So this is over now? I got an email from gdrive that states that I have ran out of storage space and all my files will be put in read only in 60 days. I setup a business account and pay for it and it has worked great in couple of years now. I only have 15.6TB on my gdrive and it is full. Can I do something to fix this or is it just bite the bullet and move on?

I've posted this before:

They already started limiting new Workspace accounts a few months ago to just 5TB per user. But recently they also started limiting older Workspace accounts with less than 5 users, sending them a message that they either had to pay for the used storage or the account would go into read-only within 30 days. Paying would be ridiculous, because it would be thousands per month. And even when you purchase more users, so you get the minimum 5, there isn't a guarantee you will actually get your storage limit lifted. People would have to chat with support to request 5TB additional storage, and would even be asked to explain the necessity, often refusing the request.

So yes, not much you can do at this point. And with 16TB, you're better off just buying the drives yourself. If it's for backup purposes, then you can look at Backblaze and other offerings. Don't expect to media stream from those though. You'll need Dropbox Advanced for that, with 3 accounts minimum.

-

3 minutes ago, MonsterBandit04 said:

Post your rclone config, but anonymize the important stuff before posting.

To be clear. When you go to both /mnt/user/gmedia and /mnt/user/gmedia-cloud you get shown encrypted files?

-

11 hours ago, MonsterBandit04 said:

First, thank you for everything you've done with these scripts. Maybe I'm doing something wrong, but I believe I have everything configured correctly. I run the script with my edits and can see my Google Drive folder in the Shares section, but it's not decrypted. When I "rclone lsd gdrive-media-crypt:" through terminal, everything is there and decrypted so I know it's not a password thing. Is the script pulling a different rclone.conf file or am I just missing something or doing something wrong? Any help would be greatly appreciated.

#!/bin/bash

######################
#### Mount Script ####
######################
## Version 0.96.9.3 ##
######################

####### EDIT ONLY THESE SETTINGS #######

# INSTRUCTIONS
# 1. Change the name of the rclone remote and shares to match your setup
# 2. NOTE: enter RcloneRemoteName WITHOUT ':'
# 3. Optional: include custom command and bind mount settings
# 4. Optional: include extra folders in mergerfs mount

# REQUIRED SETTINGS
RcloneRemoteName="gdrive-media-crypt" # Name of rclone remote mount WITHOUT ':'. NOTE: Choose your encrypted remote for sensitive data
RcloneMountShare="/mnt/user/gmedia-cloud" # where your rclone remote will be located without trailing slash e.g. /mnt/user/mount_rclone
RcloneMountDirCacheTime="1000h" # rclone dir cache time
LocalFilesShare="/mnt/user/plex-pool" # location of the local files and MountFolders you want to upload without trailing slash to rclone e.g. /mnt/user/local. Enter 'ignore' to disable
RcloneCacheShare="/mnt/user0/mount_rclone" # location of rclone cache files without trailing slash e.g. /mnt/user0/mount_rclone
RcloneCacheMaxSize="150G" # Maximum size of rclone cache
RcloneCacheMaxAge="12h" # Maximum age of cache files
MergerfsMountShare="/mnt/user/gmedia" # location without trailing slash e.g. /mnt/user/mount_mergerfs. Enter 'ignore' to disable
DockerStart="sabnzbd plex sonarr radarr overseerr" # list of dockers, separated by space, to start once mergerfs mount verified. Remember to disable AUTOSTART for dockers added in docker settings page
MountFolders=\{"movies,tv shows,kid shows,sports"\} # comma separated list of folders to create within the mount

# Note: Again - remember to NOT use ':' in your remote name above

# OPTIONAL SETTINGS

# Add extra paths to mergerfs mount in addition to LocalFilesShare
LocalFilesShare2="ignore" # without trailing slash e.g. /mnt/user/other__remote_mount/or_other_local_folder. Enter 'ignore' to disable
LocalFilesShare3="ignore"
LocalFilesShare4="ignore"

# Add extra commands or filters
Command1="--rc"
Command2="vfs-cache-mode=full"
Command3=""
Command4=""
Command5=""
Command6=""
Command7=""
Command8=""

CreateBindMount="N" # Y/N. Choose whether to bind traffic to a particular network adapter
RCloneMountIP="192.168.1.252" # My unraid IP is 172.30.12.2 so I create another similar IP address
NetworkAdapter="eth0" # choose your network adapter. eth0 recommended
VirtualIPNumber="2" # creates eth0:x e.g. eth0:1. I create a unique virtual IP addresses for each mount & upload so I can monitor and traffic shape for each of them

####### END SETTINGS #######

Command2="vfs-cache-mode=full"

There is your problem. It's missing "--". But I don't know why you are adding it, since it's already part of the default mount scripts from DZMM?
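To illustrate why the missing dashes matter: the mount script drops each `CommandN` value straight into the `rclone mount` command line, so every non-empty value has to be a complete flag. Without the leading `--`, rclone would most likely treat the value as a stray argument rather than an option. Below is a minimal sketch of a sanity check; `is_flag` is a hypothetical helper for illustration and is not part of the mount script.

```shell
#!/bin/bash
# Hypothetical helper (not part of the mount script): succeeds when a
# CommandN value is empty or starts with "--", i.e. looks like a long flag.
is_flag() {
    case "$1" in
        ""|--*) return 0 ;;
        *)      return 1 ;;
    esac
}

Command1="--rc"
Command2="vfs-cache-mode=full"   # value from the quoted script; no leading "--"

for cmd in "$Command1" "$Command2"; do
    if is_flag "$cmd"; then
        echo "ok:      '$cmd'"
    else
        echo "invalid: '$cmd' (did you mean '--$cmd'?)"
    fi
done
```

Since the mount command in the script already passes `--vfs-cache-mode full`, leaving `Command2` empty should be enough here.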

-

15 minutes ago, Playerz said:

@Kaizac

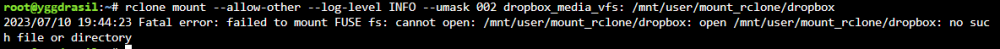

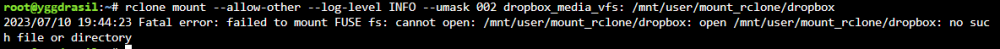

I rebooted my system without running my mount script, and this is what I got from it.

And this is my Dropbox config:

The base32768 is to shorten the naming scheme when uploading.

    #!/bin/bash

    ######################
    #### Mount Script ####
    ######################
    ## Version 0.96.9.2 ##
    ######################

    ####### EDIT ONLY THESE SETTINGS #######

    # INSTRUCTIONS
    # 1. Change the name of the rclone remote and shares to match your setup
    # 2. NOTE: enter RcloneRemoteName WITHOUT ':'
    # 3. Optional: include custom command and bind mount settings
    # 4. Optional: include extra folders in mergerfs mount

    # REQUIRED SETTINGS
    RcloneRemoteName="dropbox_media_vfs" # Name of rclone remote mount WITHOUT ':'. NOTE: Choose your encrypted remote for sensitive data
    RcloneMountShare="/mnt/user/mount_rclone" # where your rclone remote will be located without trailing slash e.g. /mnt/user/mount_rclone
    RcloneMountDirCacheTime="720h" # rclone dir cache time
    LocalFilesShare="/mnt/user/local" # location of the local files and MountFolders you want to upload without trailing slash to rclone e.g. /mnt/user/local. Enter 'ignore' to disable
    RcloneCacheShare="/mnt/disks/scratch" # location of rclone cache files without trailing slash e.g. /mnt/user0/mount_rclone
    RcloneCacheMaxSize="200" # Maximum size of rclone cache
    RcloneCacheMaxAge="480h" # Maximum age of cache files
    MergerfsMountShare="/mnt/user/mount_mergerfs" # location without trailing slash e.g. /mnt/user/mount_mergerfs. Enter 'ignore' to disable
    DockerStart="plex" # list of dockers, separated by space, to start once mergerfs mount verified. Remember to disable AUTOSTART for dockers added in docker settings page
    MountFolders=\{"downloads/complete,downloads/intermediate,movies,tv,anime,moviesanime"\} # comma separated list of folders to create within the mount

    # Note: Again - remember to NOT use ':' in your remote name above

    # OPTIONAL SETTINGS

    # Add extra paths to mergerfs mount in addition to LocalFilesShare
    LocalFilesShare2="ignore" # without trailing slash e.g. /mnt/user/other__remote_mount/or_other_local_folder. Enter 'ignore' to disable
    LocalFilesShare3="ignore"
    LocalFilesShare4="ignore"

    # Add extra commands or filters
    Command1="--rc"

    CreateBindMount="N" # Y/N. Choose whether to bind traffic to a particular network adapter
    RCloneMountIP="192.168.1.252" # My unraid IP is 172.30.12.2 so I create another similar IP address
    NetworkAdapter="eth0" # choose your network adapter. eth0 recommended
    VirtualIPNumber="1" # creates eth0:x e.g. eth0:1. I create a unique virtual IP addresses for each mount & upload so I can monitor and traffic shape for each of them

    ####### END SETTINGS #######

    ###############################################################################
    ##### DO NOT EDIT ANYTHING BELOW UNLESS YOU KNOW WHAT YOU ARE DOING #######
    ###############################################################################

    ####### Preparing mount location variables #######
    RcloneMountLocation="$RcloneMountShare/$RcloneRemoteName" # Location for rclone mount
    LocalFilesLocation="$LocalFilesShare/$RcloneRemoteName" # Location for local files to be merged with rclone mount
    MergerFSMountLocation="$MergerfsMountShare/$RcloneRemoteName" # Rclone data folder location

    ####### create directories for rclone mount and mergerfs mounts #######
    mkdir -p /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName # for script files
    mkdir -p $RcloneCacheShare/cache/$RcloneRemoteName # for cache files
    if [[ $LocalFilesShare == 'ignore' ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Not creating local folders as requested."
        LocalFilesLocation="/tmp/$RcloneRemoteName"
        eval mkdir -p $LocalFilesLocation
    else
        echo "$(date "+%d.%m.%Y %T") INFO: Creating local folders."
        eval mkdir -p $LocalFilesLocation/"$MountFolders"
    fi
    mkdir -p $RcloneMountLocation

    if [[ $MergerfsMountShare == 'ignore' ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Not creating MergerFS folders as requested."
    else
        echo "$(date "+%d.%m.%Y %T") INFO: Creating MergerFS folders."
        mkdir -p $MergerFSMountLocation
    fi

    ####### Check if script is already running #######
    echo "$(date "+%d.%m.%Y %T") INFO: *** Starting mount of remote ${RcloneRemoteName}"
    echo "$(date "+%d.%m.%Y %T") INFO: Checking if this script is already running."
    if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Exiting script as already running."
        exit
    else
        echo "$(date "+%d.%m.%Y %T") INFO: Script not running - proceeding."
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
    fi

    ####### Checking have connectivity #######
    echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if online"
    ping -q -c2 google.com > /dev/null # -q quiet, -c number of pings to perform
    if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
        echo "$(date "+%d.%m.%Y %T") PASSED: *** Internet online"
    else
        echo "$(date "+%d.%m.%Y %T") FAIL: *** No connectivity. Will try again on next run"
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
        exit
    fi

    ####### Create Rclone Mount #######

    # Check If Rclone Mount Already Created
    if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Success ${RcloneRemoteName} remote is already mounted."
    else
        echo "$(date "+%d.%m.%Y %T") INFO: Mount not running. Will now mount ${RcloneRemoteName} remote."
        # Creating mountcheck file in case it doesn't already exist
        echo "$(date "+%d.%m.%Y %T") INFO: Recreating mountcheck file for ${RcloneRemoteName} remote."
        touch mountcheck
        rclone copy mountcheck $RcloneRemoteName: -vv --no-traverse
        # Check bind option
        if [[ $CreateBindMount == 'Y' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if IP address ${RCloneMountIP} already created for remote ${RcloneRemoteName}"
            ping -q -c2 $RCloneMountIP > /dev/null # -q quiet, -c number of pings to perform
            if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
                echo "$(date "+%d.%m.%Y %T") INFO: *** IP address ${RCloneMountIP} already created for remote ${RcloneRemoteName}"
            else
                echo "$(date "+%d.%m.%Y %T") INFO: *** Creating IP address ${RCloneMountIP} for remote ${RcloneRemoteName}"
                ip addr add $RCloneMountIP/24 dev $NetworkAdapter label $NetworkAdapter:$VirtualIPNumber
            fi
            echo "$(date "+%d.%m.%Y %T") INFO: *** Created bind mount ${RCloneMountIP} for remote ${RcloneRemoteName}"
        else
            RCloneMountIP=""
            echo "$(date "+%d.%m.%Y %T") INFO: *** Creating mount for remote ${RcloneRemoteName}"
        fi
        # create rclone mount
        rclone mount \
        $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
        --allow-other \
        --umask 000 \
        --dir-cache-time $RcloneMountDirCacheTime \
        --log-level INFO \
        --poll-interval 15s \
        --cache-dir=$RcloneCacheShare/cache/$RcloneRemoteName \
        --vfs-cache-mode full \
        --vfs-cache-max-size $RcloneCacheMaxSize \
        --vfs-cache-max-age $RcloneCacheMaxAge \
        --bind=$RCloneMountIP \
        $RcloneRemoteName: $RcloneMountLocation &

        # Check if Mount Successful
        echo "$(date "+%d.%m.%Y %T") INFO: sleeping for 5 seconds"
        # slight pause to give mount time to finalise
        sleep 5
        echo "$(date "+%d.%m.%Y %T") INFO: continuing..."
        if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Successful mount of ${RcloneRemoteName} mount."
        else
            echo "$(date "+%d.%m.%Y %T") CRITICAL: ${RcloneRemoteName} mount failed - please check for problems. Stopping dockers"
            docker stop $DockerStart
            rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
            exit
        fi
    fi

    ####### Start MergerFS Mount #######

    if [[ $MergerfsMountShare == 'ignore' ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Not creating mergerfs mount as requested."
    else
        if [[ -f "$MergerFSMountLocation/mountcheck" ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Check successful, ${RcloneRemoteName} mergerfs mount in place."
        else
            # check if mergerfs already installed
            if [[ -f "/bin/mergerfs" ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs already installed, proceeding to create mergerfs mount"
            else
                # Build mergerfs binary
                echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs not installed - installing now."
                mkdir -p /mnt/user/appdata/other/rclone/mergerfs
                docker run -v /mnt/user/appdata/other/rclone/mergerfs:/build --rm trapexit/mergerfs-static-build
                mv /mnt/user/appdata/other/rclone/mergerfs/mergerfs /bin
                # check if mergerfs install successful
                echo "$(date "+%d.%m.%Y %T") INFO: *sleeping for 5 seconds"
                sleep 5
                if [[ -f "/bin/mergerfs" ]]; then
                    echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs installed successfully, proceeding to create mergerfs mount."
                else
                    echo "$(date "+%d.%m.%Y %T") ERROR: Mergerfs not installed successfully. Please check for errors. Exiting."
                    rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
                    exit
                fi
            fi
            # Create mergerfs mount
            echo "$(date "+%d.%m.%Y %T") INFO: Creating ${RcloneRemoteName} mergerfs mount."
            # Extra Mergerfs folders
            if [[ $LocalFilesShare2 != 'ignore' ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare2} to ${RcloneRemoteName} mergerfs mount."
                LocalFilesShare2=":$LocalFilesShare2"
            else
                LocalFilesShare2=""
            fi
            if [[ $LocalFilesShare3 != 'ignore' ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare3} to ${RcloneRemoteName} mergerfs mount."
                LocalFilesShare3=":$LocalFilesShare3"
            else
                LocalFilesShare3=""
            fi
            if [[ $LocalFilesShare4 != 'ignore' ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare4} to ${RcloneRemoteName} mergerfs mount."
                LocalFilesShare4=":$LocalFilesShare4"
            else
                LocalFilesShare4=""
            fi
            # make sure mergerfs mount point is empty
            mv $MergerFSMountLocation $LocalFilesLocation
            mkdir -p $MergerFSMountLocation
            # mergerfs mount command
            mergerfs $LocalFilesLocation:$RcloneMountLocation$LocalFilesShare2$LocalFilesShare3$LocalFilesShare4 $MergerFSMountLocation -o rw,async_read=false,use_ino,allow_other,func.getattr=newest,category.action=all,category.create=ff,cache.files=partial,dropcacheonclose=true
            # check if mergerfs mount successful
            echo "$(date "+%d.%m.%Y %T") INFO: Checking if ${RcloneRemoteName} mergerfs mount created."
            if [[ -f "$MergerFSMountLocation/mountcheck" ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Check successful, ${RcloneRemoteName} mergerfs mount created."
            else
                echo "$(date "+%d.%m.%Y %T") CRITICAL: ${RcloneRemoteName} mergerfs mount failed. Stopping dockers."
                docker stop $DockerStart
                rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
                exit
            fi
        fi
    fi

    ####### Starting Dockers That Need Mergerfs Mount To Work Properly #######

    # only start dockers once
    if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/dockers_started" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: dockers already started."
    else
        # Check CA Appdata plugin not backing up or restoring
        if [ -f "/tmp/ca.backup2/tempFiles/backupInProgress" ] || [ -f "/tmp/ca.backup2/tempFiles/restoreInProgress" ] ; then
            echo "$(date "+%d.%m.%Y %T") INFO: Appdata Backup plugin running - not starting dockers."
        else
            touch /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/dockers_started
            echo "$(date "+%d.%m.%Y %T") INFO: Starting dockers."
            docker start $DockerStart
        fi
    fi

    rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
    echo "$(date "+%d.%m.%Y %T") INFO: Script complete"

    exit

And thanks for the info about the code block; I don't usually use forums.

Sorry about the script. You need to create the folder first and then run the script.

But are you using Dropbox Enterprise or Advanced, whatever it's called, the business one? If so you need to put a / before your folder in your rclone config for the crypt.

So:

dropbox:/dropbox_media_vfs
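As a rough sketch of where that slash goes: the snippet below writes a sample crypt section to a temporary file and checks whether its `remote =` value has a `/` right after the colon. The section name, the base remote name `dropbox`, the helper `has_leading_slash`, and the temp file are all assumptions for illustration. A real rclone.conf also contains password lines, which are left out here.

```shell
#!/bin/bash
# Sample crypt section written to a temp file; names are assumptions
# based on the post above, not a copy of anyone's real rclone.conf.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
[dropbox_media_vfs]
type = crypt
remote = dropbox:/dropbox_media_vfs
EOF

# Hypothetical check: does the "remote =" path start with "/" after the colon?
has_leading_slash() {
    grep -Eq '^remote *= *[^:]+:/' "$1"
}

if has_leading_slash "$conf"; then
    echo "remote path starts with /"
else
    echo "remote path is missing the leading /"
fi
```

To run the same check against your own setup, point it at the file that `rclone config file` reports.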

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

in Plugins and Apps

Posted

Sorry, the mobile view screwed your Quote, and it seemed like you merged everything to /mnt/user/data/ which is the parent folder. But it merges to /mnt/user/data/mergerfs, which is fine.

The permission problems have been discussed a couple of times in this topic, you don't need to go back too far for it.

Can you show your docker container template for radarr? And are you downloading with Sabnzbd or something? What path mapping is used there?

Also, you can go to the separate /mnt/user/data/xx folders (local/remote/mergerfs) with your terminal:

cd /mnt/user/data/local/

and type

ls -la

It shows you the owners of the folder. I would expect it to say "nobody/users".
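A small sketch of the same check for all three folders at once, assuming GNU `stat` is available (as on most Linux systems) and that the folders sit under /mnt/user/data as in the quoted post. `show_owner` is a hypothetical helper name.

```shell
#!/bin/bash
# Print "owner:group  path" for a directory, or a note if it does not exist.
show_owner() {
    if [ -d "$1" ]; then
        printf '%s  %s\n' "$(stat -c '%U:%G' "$1")" "$1"
    else
        printf 'missing  %s\n' "$1"
    fi
}

# Folder names taken from the post above; adjust to your own layout.
for sub in local remote mergerfs; do
    show_owner "/mnt/user/data/$sub"
done
```

If a line starts with anything other than nobody:users, that folder is the first place I would look for the permission problem.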