Kaizac

Posts: 470

Days Won: 2
Posts posted by Kaizac

-

19 hours ago, 00b5 said:

did you mean rsync instead of copy? I've been using this "copy script" for multiple things for years.

The workflow is like this:

- *darr apps run on my home server, and request files

- requests are put into a folder, which syncs with a seedbox

- Seedbox downloads files to a specific folder, which then syncs back to home server

- *darr apps process/move/etc files and everything is good

- the copy script runs on a 3rd server that runs Plex and rclone to host a 2nd Plex server for sharing (I don't share my home Plex server). The copy script just grabs files (every xx mins) and copies them to the mergerFS folder so they are also available to the Plex cloud instance.

I don't run the *darr apps on the seedbox, it really only seeds, and moves files around with ResilioSync. I used to rent a server to host plex in the cloud tied to gdrive (for when I am remote, and for sharing) since my home upload bandwidth is subpar. Now I have been able to co-locate a server on a nice fiber connection, so I'm trying to move toward using it. The main difference is moving from an online rented server with linux to an owned server running unraid, and this rclone plugin to keep plex using the gdrive source files (at least until it gets killed off).

I was letting the copy script run every 2 mins to make sure it would grab any files in that sync folder before the other end cleaned up and processed them. I'll try slowing it down, or only letting it run every 10 mins or something and see if I can avoid these weird errors.

I'm not talking about rsync, but about rclone. Rclone can sync, copy, and move. The upload script from this topic uses rclone move, which transfers the file and then deletes it at the source once the transfer has been validated. With copy you keep the source file, which could be what you want. Rclone sync keeps two folders in sync in one direction only: the destination is made to match the source.

So am I understanding it right: you are using the copy script to copy directly into your rclone Google Drive mount? Or are you using an upload script for that as well? Copying directly into the rclone mount (that would be mount_rclone/) is problematic. Copying into mount_mergerfs/ and then using the rclone upload script is fine.
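A script can enforce that distinction with a small guard that rejects destinations under the raw rclone mount. This is only a sketch: the helper name `is_safe_destination` is hypothetical, and the two share paths are the ones from the mount script settings quoted in this thread, so adjust them if your shares differ.

```shell
#!/bin/bash
# Sketch: only allow writes that go through the mergerfs mount.
# is_safe_destination is a hypothetical helper, not part of the mount script.
is_safe_destination() {
    case "$1" in
        /mnt/user/mount_mergerfs/*) return 0 ;;  # goes through mergerfs: fine
        /mnt/user/mount_rclone/*)   return 1 ;;  # raw rclone mount: avoid
        *)                          return 1 ;;  # anything else: refuse, to be safe
    esac
}

dest="/mnt/user/mount_mergerfs/google/Media/Movies"
if is_safe_destination "$dest"; then
    echo "OK: $dest"
else
    echo "Refusing to write to $dest"
fi
```

Calling the guard before every copy means a typo in a path fails loudly instead of writing into the mount directly.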

In general, I would really advise against using cp, since it doesn't validate the transfer and is basically copy-paste. With rclone you can also set values such as a minimum file age before a file is transferred. Rsync is also a possibility if you are familiar with it, but for me rclone is easier because I know how to run it.
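As a rough illustration of swapping cp for rclone, the sketch below wraps `rclone copy` with the `--min-age` filter so files still being written by the sync are skipped. The source and destination paths come from the copy script in this thread; the 15m age, the `DRY_RUN` switch, and the function name `copy_with_rclone` are assumptions made for the example, not something from the original scripts.

```shell
#!/bin/bash
# Sketch: use rclone copy instead of cp -rv for a local-to-local transfer.
# MIN_AGE, DRY_RUN and copy_with_rclone are assumptions for illustration.
SRC="${SRC:-/mnt/user/data/Movies}"
DEST="${DEST:-/mnt/user/mount_mergerfs/google/Media/Movies}"
MIN_AGE="${MIN_AGE:-15m}"   # skip files modified more recently than this
DRY_RUN="${DRY_RUN:-1}"     # 1 = only print the command, 0 = really run it

copy_with_rclone() {
    # $1 = source folder, $2 = destination folder
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: rclone copy $1 $2 --min-age $MIN_AGE -v"
    else
        rclone copy "$1" "$2" --min-age "$MIN_AGE" -v
    fi
}

copy_with_rclone "$SRC" "$DEST"
```

Unlike `cp -rv`, `rclone copy` skips files that already exist unchanged at the destination, so repeated runs should not rewrite a file that was already copied.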

-

1 hour ago, 00b5 said:

You mean the main mount script, or the one that copies files into the merger folder?

Main Mount Script

#!/bin/bash

######################
#### Mount Script ####
######################
## Version 0.96.9.3 ##
######################

####### EDIT ONLY THESE SETTINGS #######

# INSTRUCTIONS
# 1. Change the name of the rclone remote and shares to match your setup
# 2. NOTE: enter RcloneRemoteName WITHOUT ':'
# 3. Optional: include custom command and bind mount settings
# 4. Optional: include extra folders in mergerfs mount

# REQUIRED SETTINGS
RcloneRemoteName="google" # Name of rclone remote mount WITHOUT ':'. NOTE: Choose your encrypted remote for sensitive data
RcloneMountShare="/mnt/user/mount_rclone" # where your rclone remote will be located without trailing slash e.g. /mnt/user/mount_rclone
RcloneMountDirCacheTime="720h" # rclone dir cache time
#LocalFilesShare="/mnt/user/local" # location of the local files and MountFolders you want to upload without trailing slash to rclone e.g. /mnt/user/local. Enter 'ignore' to disable
LocalFilesShare="ignore" # location of the local files and MountFolders you want to upload without trailing slash to rclone e.g. /mnt/user/local. Enter 'ignore' to disable
RcloneCacheShare="/mnt/user0/mount_rclone" # location of rclone cache files without trailing slash e.g. /mnt/user0/mount_rclone
RcloneCacheMaxSize="600G" # Maximum size of rclone cache
RcloneCacheMaxAge="336h" # Maximum age of cache files
MergerfsMountShare="/mnt/user/mount_mergerfs" # location without trailing slash e.g. /mnt/user/mount_mergerfs. Enter 'ignore' to disable
# DockerStart="nzbget plex sonarr radarr ombi" # list of dockers, separated by space, to start once mergerfs mount verified. Remember to disable AUTOSTART for dockers added in docker settings page
DockerStart="plex" # list of dockers, separated by space, to start once mergerfs mount verified
MountFolders=\{"downloads/complete,downloads/intermediate,downloads/seeds,movies,tv"\} # comma separated list of folders to create within the mount

# Note: Again - remember to NOT use ':' in your remote name above

# OPTIONAL SETTINGS

# Add extra paths to mergerfs mount in addition to LocalFilesShare
LocalFilesShare2="ignore" # without trailing slash e.g. /mnt/user/other__remote_mount/or_other_local_folder. Enter 'ignore' to disable
LocalFilesShare3="ignore"
LocalFilesShare4="ignore"

# Add extra commands or filters
Command1="--rc"
Command2=""
Command3=""
Command4=""
Command5=""
Command6=""
Command7=""
Command8=""

CreateBindMount="N" # Y/N. Choose whether to bind traffic to a particular network adapter
RCloneMountIP="192.168.1.252" # My unraid IP is 172.30.12.2 so I create another similar IP address
NetworkAdapter="eth0" # choose your network adapter. eth0 recommended
VirtualIPNumber="2" # creates eth0:x e.g. eth0:1. I create a unique virtual IP addresses for each mount & upload so I can monitor and traffic shape for each of them

####### END SETTINGS #######

###############################################################################
##### DO NOT EDIT ANYTHING BELOW UNLESS YOU KNOW WHAT YOU ARE DOING #######
###############################################################################

####### Preparing mount location variables #######
RcloneMountLocation="$RcloneMountShare/$RcloneRemoteName" # Location for rclone mount
LocalFilesLocation="$LocalFilesShare/$RcloneRemoteName" # Location for local files to be merged with rclone mount
MergerFSMountLocation="$MergerfsMountShare/$RcloneRemoteName" # Rclone data folder location

####### create directories for rclone mount and mergerfs mounts #######
mkdir -p /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName # for script files
mkdir -p $RcloneCacheShare/cache/$RcloneRemoteName # for cache files
if [[ $LocalFilesShare == 'ignore' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Not creating local folders as requested."
    LocalFilesLocation="/tmp/$RcloneRemoteName"
    eval mkdir -p $LocalFilesLocation
else
    echo "$(date "+%d.%m.%Y %T") INFO: Creating local folders."
    eval mkdir -p $LocalFilesLocation/"$MountFolders"
fi
mkdir -p $RcloneMountLocation

if [[ $MergerfsMountShare == 'ignore' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Not creating MergerFS folders as requested."
else
    echo "$(date "+%d.%m.%Y %T") INFO: Creating MergerFS folders."
    mkdir -p $MergerFSMountLocation
fi

####### Check if script is already running #######
echo "$(date "+%d.%m.%Y %T") INFO: *** Starting mount of remote ${RcloneRemoteName}"
echo "$(date "+%d.%m.%Y %T") INFO: Checking if this script is already running."
if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Exiting script as already running."
    exit
else
    echo "$(date "+%d.%m.%Y %T") INFO: Script not running - proceeding."
    touch /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
fi

####### Checking have connectivity #######
echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if online"
ping -q -c2 google.com > /dev/null # -q quiet, -c number of pings to perform
if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
    echo "$(date "+%d.%m.%Y %T") PASSED: *** Internet online"
else
    echo "$(date "+%d.%m.%Y %T") FAIL: *** No connectivity. Will try again on next run"
    rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
    exit
fi

####### Create Rclone Mount #######

# Check If Rclone Mount Already Created
if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Success ${RcloneRemoteName} remote is already mounted."
else
    echo "$(date "+%d.%m.%Y %T") INFO: Mount not running. Will now mount ${RcloneRemoteName} remote."
    # Creating mountcheck file in case it doesn't already exist
    echo "$(date "+%d.%m.%Y %T") INFO: Recreating mountcheck file for ${RcloneRemoteName} remote."
    touch mountcheck
    rclone copy mountcheck $RcloneRemoteName: -vv --no-traverse
    # Check bind option
    if [[ $CreateBindMount == 'Y' ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if IP address ${RCloneMountIP} already created for remote ${RcloneRemoteName}"
        ping -q -c2 $RCloneMountIP > /dev/null # -q quiet, -c number of pings to perform
        if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
            echo "$(date "+%d.%m.%Y %T") INFO: *** IP address ${RCloneMountIP} already created for remote ${RcloneRemoteName}"
        else
            echo "$(date "+%d.%m.%Y %T") INFO: *** Creating IP address ${RCloneMountIP} for remote ${RcloneRemoteName}"
            ip addr add $RCloneMountIP/24 dev $NetworkAdapter label $NetworkAdapter:$VirtualIPNumber
        fi
        echo "$(date "+%d.%m.%Y %T") INFO: *** Created bind mount ${RCloneMountIP} for remote ${RcloneRemoteName}"
    else
        RCloneMountIP=""
        echo "$(date "+%d.%m.%Y %T") INFO: *** Creating mount for remote ${RcloneRemoteName}"
    fi
    # create rclone mount
    rclone mount \
    $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
    --allow-other \
    --umask 000 \
    --dir-cache-time $RcloneMountDirCacheTime \
    --attr-timeout $RcloneMountDirCacheTime \
    --log-level INFO \
    --poll-interval 10s \
    --cache-dir=$RcloneCacheShare/cache/$RcloneRemoteName \
    --drive-pacer-min-sleep 10ms \
    --drive-pacer-burst 1000 \
    --vfs-cache-mode full \
    --vfs-cache-max-size $RcloneCacheMaxSize \
    --vfs-cache-max-age $RcloneCacheMaxAge \
    --vfs-read-ahead 1G \
    --bind=$RCloneMountIP \
    $RcloneRemoteName: $RcloneMountLocation &

    # Check if Mount Successful
    echo "$(date "+%d.%m.%Y %T") INFO: sleeping for 15 seconds"
    # slight pause to give mount time to finalise
    sleep 15
    echo "$(date "+%d.%m.%Y %T") INFO: continuing..."
    if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Successful mount of ${RcloneRemoteName} mount."
    else
        echo "$(date "+%d.%m.%Y %T") CRITICAL: ${RcloneRemoteName} mount failed - please check for problems. Stopping dockers"
        docker stop $DockerStart
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
        exit
    fi
fi

####### Start MergerFS Mount #######

if [[ $MergerfsMountShare == 'ignore' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Not creating mergerfs mount as requested."
else
    if [[ -f "$MergerFSMountLocation/mountcheck" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Check successful, ${RcloneRemoteName} mergerfs mount in place."
    else
        # check if mergerfs already installed
        if [[ -f "/bin/mergerfs" ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs already installed, proceeding to create mergerfs mount"
        else
            # Build mergerfs binary
            echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs not installed - installing now."
            mkdir -p /mnt/user/appdata/other/rclone/mergerfs
            docker run -v /mnt/user/appdata/other/rclone/mergerfs:/build --rm trapexit/mergerfs-static-build
            mv /mnt/user/appdata/other/rclone/mergerfs/mergerfs /bin
            # check if mergerfs install successful
            echo "$(date "+%d.%m.%Y %T") INFO: *sleeping for 5 seconds"
            sleep 5
            if [[ -f "/bin/mergerfs" ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs installed successfully, proceeding to create mergerfs mount."
            else
                echo "$(date "+%d.%m.%Y %T") ERROR: Mergerfs not installed successfully. Please check for errors. Exiting."
                rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
                exit
            fi
        fi
        # Create mergerfs mount
        echo "$(date "+%d.%m.%Y %T") INFO: Creating ${RcloneRemoteName} mergerfs mount."
        # Extra Mergerfs folders
        if [[ $LocalFilesShare2 != 'ignore' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare2} to ${RcloneRemoteName} mergerfs mount."
            LocalFilesShare2=":$LocalFilesShare2"
        else
            LocalFilesShare2=""
        fi
        if [[ $LocalFilesShare3 != 'ignore' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare3} to ${RcloneRemoteName} mergerfs mount."
            LocalFilesShare3=":$LocalFilesShare3"
        else
            LocalFilesShare3=""
        fi
        if [[ $LocalFilesShare4 != 'ignore' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare4} to ${RcloneRemoteName} mergerfs mount."
            LocalFilesShare4=":$LocalFilesShare4"
        else
            LocalFilesShare4=""
        fi
        # make sure mergerfs mount point is empty
        mv $MergerFSMountLocation $LocalFilesLocation
        mkdir -p $MergerFSMountLocation
        # mergerfs mount command
        mergerfs $LocalFilesLocation:$RcloneMountLocation$LocalFilesShare2$LocalFilesShare3$LocalFilesShare4 $MergerFSMountLocation -o rw,async_read=false,use_ino,allow_other,func.getattr=newest,category.action=all,category.create=ff,cache.files=partial,dropcacheonclose=true
        # check if mergerfs mount successful
        echo "$(date "+%d.%m.%Y %T") INFO: Checking if ${RcloneRemoteName} mergerfs mount created."
        if [[ -f "$MergerFSMountLocation/mountcheck" ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Check successful, ${RcloneRemoteName} mergerfs mount created."
        else
            echo "$(date "+%d.%m.%Y %T") CRITICAL: ${RcloneRemoteName} mergerfs mount failed. Stopping dockers."
            docker stop $DockerStart
            rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
            exit
        fi
    fi
fi

####### Starting Dockers That Need Mergerfs Mount To Work Properly #######

# only start dockers once
if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/dockers_started" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: dockers already started."
else
    # Check CA Appdata plugin not backing up or restoring
    if [ -f "/tmp/ca.backup2/tempFiles/backupInProgress" ] || [ -f "/tmp/ca.backup2/tempFiles/restoreInProgress" ] ; then
        echo "$(date "+%d.%m.%Y %T") INFO: Appdata Backup plugin running - not starting dockers."
    else
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/dockers_started
        echo "$(date "+%d.%m.%Y %T") INFO: Starting dockers."
        docker start $DockerStart
    fi
fi

rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
echo "$(date "+%d.%m.%Y %T") INFO: Script complete"

exit

Copy script (copies files from a folder that is syncing with another server via Resilio Sync), runs about every 5 mins or so

# btsync capture SCRIPT
#!/bin/bash
# exec 3>&1 4>&2
# trap 'exec 2>&4 1>&3' 0 1 2 3
# Everything below will go to the file 'rsync-date.log':

LOCKFILE=/tmp/lock.txt
if [ -e ${LOCKFILE} ] && kill -0 `cat ${LOCKFILE}`; then
    echo "[ $(date ${date_format}) ] Rsync already running @ ${LOCKFILE}"
    exit
fi

# make sure the lockfile is removed when we exit and then claim it
trap "rm -f ${LOCKFILE}; exit" INT TERM EXIT
echo $$ > ${LOCKFILE}

if [[ -f "/mnt/user/mount_rclone/google/mountcheck" ]]; then
    echo "[ $(date ${date_format}) ] INFO: rclone remote is mounted, starting copy"
    echo "[ $(date ${date_format}) ] #################################### ################"
    echo "[ $(date ${date_format}) ] ################# Copy TV Shows ################"
    echo "[ $(date ${date_format}) ] rsync-ing TV shows from resiloSync:"
    cp -rv /mnt/user/data/TV/* /mnt/user/mount_mergerfs/google/Media/TV/
    echo "[ $(date ${date_format}) ] ################# Copy Movies ################"
    echo "[ $(date ${date_format}) ] rsync-ing Movies from resiloSync:"
    cp -rv /mnt/user/data/Movies/* /mnt/user/mount_mergerfs/google/Media/Movies/
    echo "[ $(date ${date_format}) ] ###################################################"
else
    echo "[ $(date ${date_format}) ] INFO: Mount not running. Will now abort copy"
fi

sleep 30
rm -f ${LOCKFILE}

Here is 10 mins of the log where it tries to copy this file up:

2023/05/15 10:17:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:18:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:19:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
Script Starting May 15, 2023 10:20.01
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
15.05.2023 10:20:01 INFO: Not creating local folders as requested.
15.05.2023 10:20:01 INFO: Creating MergerFS folders.
15.05.2023 10:20:01 INFO: *** Starting mount of remote google
15.05.2023 10:20:01 INFO: Checking if this script is already running.
15.05.2023 10:20:01 INFO: Script not running - proceeding.
15.05.2023 10:20:01 INFO: *** Checking if online
15.05.2023 10:20:02 PASSED: *** Internet online
15.05.2023 10:20:02 INFO: Success google remote is already mounted.
15.05.2023 10:20:02 INFO: Check successful, google mergerfs mount in place.
15.05.2023 10:20:02 INFO: dockers already started.
15.05.2023 10:20:02 INFO: Script complete
Script Finished May 15, 2023 10:20.02
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
2023/05/15 10:20:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:21:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycds6tAjl-sjvsxWVtbO2hr6eHhfX58FibGCOIPFijx8n5_LhEaKRKVeLAmdM7rdxiIM6AnlhInp9n8Bl1IGxgz4oBg": context canceled
2023/05/15 10:21:02 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:21:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.419Gi (was 596.419Gi)
2023/05/15 10:22:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.515Gi (was 599.515Gi)
2023/05/15 10:22:44 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:22:47 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:22:47 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:23:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:24:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:25:01 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdtO-3iUahxqOPfrLtJvUGzKbVbC_jIet8MR1hSM4t-JDEvJGPEXYgjVyO3alao3Jira9AI0ZWLeDbVKmtRXvy9FdQ": context canceled
2023/05/15 10:25:01 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:25:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.522Gi (was 596.521Gi)
2023/05/15 10:26:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.625Gi (was 599.625Gi)
2023/05/15 10:26:41 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:27:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:28:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:29:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
Script Starting May 15, 2023 10:30.01
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
15.05.2023 10:30:01 INFO: Not creating local folders as requested.
15.05.2023 10:30:01 INFO: Creating MergerFS folders.
15.05.2023 10:30:01 INFO: *** Starting mount of remote google
15.05.2023 10:30:01 INFO: Checking if this script is already running.
15.05.2023 10:30:01 INFO: Script not running - proceeding.
15.05.2023 10:30:01 INFO: *** Checking if online
15.05.2023 10:30:02 PASSED: *** Internet online
15.05.2023 10:30:02 INFO: Success google remote is already mounted.
15.05.2023 10:30:02 INFO: Check successful, google mergerfs mount in place.
15.05.2023 10:30:02 INFO: dockers already started.
15.05.2023 10:30:02 INFO: Script complete
Script Finished May 15, 2023 10:30.02
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
2023/05/15 10:30:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:31:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdvY7OCd_9eS-M8zevNS2RwdMIUrvChpIfFvJbZwXkA3WTLZOSbQnxi03cunE_-VdMLlRHt4ElXs-7BokEs1s_V1yQ": context canceled
2023/05/15 10:31:02 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:31:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.452Gi (was 596.452Gi)
2023/05/15 10:32:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.526Gi (was 599.526Gi)
2023/05/15 10:32:44 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:33:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:34:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:35:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdsZfHAOT0kaMBaqBO-V9hllnnC1cj2FoFWxpu4k1ugT4MmBnWt5d-4ozDwEbcjp9STh-TGSnC9nmFamo3hhW1ueNw": context canceled
2023/05/15 10:35:02 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:35:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.583Gi (was 596.583Gi)
2023/05/15 10:36:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.645Gi (was 599.645Gi)
2023/05/15 10:36:41 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:37:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:38:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:39:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
Script Starting May 15, 2023 10:40.01
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
15.05.2023 10:40:01 INFO: Not creating local folders as requested.
15.05.2023 10:40:01 INFO: Creating MergerFS folders.
15.05.2023 10:40:01 INFO: *** Starting mount of remote google
15.05.2023 10:40:01 INFO: Checking if this script is already running.
15.05.2023 10:40:01 INFO: Script not running - proceeding.
15.05.2023 10:40:01 INFO: *** Checking if online
15.05.2023 10:40:02 PASSED: *** Internet online
15.05.2023 10:40:02 INFO: Success google remote is already mounted.
15.05.2023 10:40:02 INFO: Check successful, google mergerfs mount in place.
15.05.2023 10:40:02 INFO: dockers already started.
15.05.2023 10:40:02 INFO: Script complete
Script Finished May 15, 2023 10:40.02
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
2023/05/15 10:40:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:41:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdt5s0GvZo7nDCoSPIB_BwQwa-PU1FSe0i8UWPJDOQ_cwFYx6WL33iTkh85OnXiegp5yn9OoRJLn8xAbe94O0fXcZQ": context canceled
2023/05/15 10:41:02 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:41:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.444Gi (was 596.444Gi)
2023/05/15 10:42:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.494Gi (was 599.494Gi)
2023/05/15 10:42:44 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:43:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:44:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:45:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycds-MUxpNB4t2OVXgjxdH8u9gUF4gTbJb8x_MmVSimgBiAxIl-txOpkWeOKxkJ2NvpBqHTvvYDLC1KwidTegrCt7lA": context canceled
2023/05/15 10:45:02 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:45:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.457Gi (was 596.457Gi)
2023/05/15 10:46:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.576Gi (was 599.576Gi)
2023/05/15 10:46:42 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:47:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:48:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:49:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
Script Starting May 15, 2023 10:50.01
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
15.05.2023 10:50:01 INFO: Not creating local folders as requested.
15.05.2023 10:50:01 INFO: Creating MergerFS folders.
15.05.2023 10:50:01 INFO: *** Starting mount of remote google
15.05.2023 10:50:01 INFO: Checking if this script is already running.
15.05.2023 10:50:01 INFO: Script not running - proceeding.
15.05.2023 10:50:01 INFO: *** Checking if online
15.05.2023 10:50:02 PASSED: *** Internet online
15.05.2023 10:50:02 INFO: Success google remote is already mounted.
15.05.2023 10:50:02 INFO: Check successful, google mergerfs mount in place.
15.05.2023 10:50:02 INFO: dockers already started.
15.05.2023 10:50:02 INFO: Script complete
Script Finished May 15, 2023 10:50.02
Full logs for this script are available at /tmp/user.scripts/tmpScripts/unraid_rclone_mount/log.txt
2023/05/15 10:50:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:51:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdsy0omBKNyQQLp0swJqar7qlA531fiz4eHWL-ZtvsmkRTulOE9QsZkw_8RNZ4kHM8ZFoO220c3HDF06SM3K4nMcyg": context canceled
2023/05/15 10:51:02 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:51:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.540Gi (was 596.540Gi)
2023/05/15 10:52:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.569Gi (was 599.569Gi)
2023/05/15 10:52:42 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:53:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:54:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:55:01 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdsBnUc_AykzI7geN4fr0mzK34xZZkcuOCCDyX2SUFOl4GqYX80eS2xYcpVlqXqyqu3gnyYFJxYLNQbuW5_v1Bly6gJN1CG7": context canceled
2023/05/15 10:55:01 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: upload canceled
2023/05/15 10:55:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 596.478Gi (was 596.478Gi)
2023/05/15 10:56:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 0, total size 599.554Gi (was 599.554Gi)
2023/05/15 10:56:43 INFO : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: vfs cache: queuing for upload in 5s
2023/05/15 10:57:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:58:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
2023/05/15 10:59:40 INFO : vfs cache: cleaned: objects 831 (was 831) in use 1, to upload 0, uploading 1, total size 599.694Gi (was 599.694Gi)
Script Starting May 15, 2023 11:00.01

The "context canceled" error usually points to a timeout. So maybe the files can't be accessed yet, or can't be deleted?

I don't understand why you are using a simple copy instead of rclone to move the files. With rclone you can be sure the files arrive at their destination, and it has better error handling in case of problems.
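A minimal sketch of what such a move could look like. The source folder is taken from the copy log in this thread; the remote name `google:`, the destination folder and the flag values are assumptions, so adjust them to your own config. `--min-age` is there so files that are still being synced in are left alone until the next run.

```shell
#!/bin/bash
# Sketch only: SRC, DEST and the flag values are assumptions, not taken from the thread's scripts.
SRC="/mnt/user/data/Movies"
DEST="google:Media/Movies"

# Build the command as an array so paths with spaces survive intact.
move_cmd=(rclone move "$SRC" "$DEST"
  --min-age 10m              # skip files modified in the last 10 minutes (possibly still syncing)
  --delete-empty-src-dirs    # tidy up empty source folders after the move
  --log-level INFO)

# Show what would run, so it can be reviewed first.
echo "${move_cmd[*]}"

# Only execute when explicitly asked to and rclone is actually installed.
if [ "${RUN_MOVE:-0}" = "1" ] && command -v rclone >/dev/null 2>&1; then
  "${move_cmd[@]}"
fi
```

Adding `--dry-run` to the array is a safe way to rehearse the move before letting it touch any files.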

-

30 minutes ago, 00b5 said:

I give up, does anyone know what the hell is this error actually about?

2023/05/15 10:11:02 ERROR : Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv: Failed to copy: Post "https://www.googleapis.com/upload/drive/v3/files?alt=json&fields=id%2Cname%2Csize%2Cmd5Checksum%2Ctrashed%2CexplicitlyTrashed%2CmodifiedTime%2CcreatedTime%2CmimeType%2Cparents%2CwebViewLink%2CshortcutDetails%2CexportLinks%2CresourceKey&supportsAllDrives=true&uploadType=resumable&upload_id=ADPycdt-Lz90u9Nes1YU9fbno82aTyk9La51mu1QEnq3UbWL3Shb2lLaFGQvwDdR76XjFluBGLd02Gls5nR90LwR_qVyvg": context canceled

A script copies files into the merger_fs folder/share. Most stuff works fine, every now and again the above error happens.

'/mnt/user/data/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv' -> '/mnt/user/mount_mergerfs/google/Media/Movies/The.Covenant.2023.1080p.AMZN.WEB-DL.DDP5.1.Atmos.H.264-FLUX.mkv'

Hard to troubleshoot without the scripts you're running.

-

2 hours ago, fzligerzronz said:

With the impending demise of Google Drive in 2 months, looks like I'll be moving stuff over to Dropbox.

Would this be the same setup as we do for Google Drive?

I didn't get the e-mail (yet). I'm also actually using Google Workspace for my business, so maybe they check for private versus business accounts/bank accounts? Could be different factors, I have no clue. But my admin console still says unlimited storage, so I suppose they would be breaking the contract by disconnecting people. I've already anticipated the moment it would shut down though, as it has been happening with all the other services. I wouldn't count on Dropbox staying unlimited. You'll also have to ask yourself what your purpose for it is. Depending on your country's prices, the yearly cost of Dropbox buys you 50-100TB of drives. That's permanent storage you can use for years to come.

And for media consumption, I pretty much moved over to Stremio with Torrentio + Debrid (Real-Debrid is a preferred one). For 3-4 euros a month, you can watch all you want. The only caveat is that most Debrid services can only be used from 1 IP at a time, but there is no limit on the number of users from that same IP. There is already a project called plex_debrid which you can use to run your Debrid through Plex, so it will count as 1 IP for users from outside as well.

To answer your question, Dropbox has different API limits. I think (but it might have changed) they don't have the limits on how much you can upload and download, only on how often you hit the API. So using rotating service accounts and multiple mounts won't be needed (big plus). But you need to rate limit the API hits correctly to prevent temporary bans.
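As a rough sketch of that rate limiting: rclone's `--tpslimit` caps the number of API transactions per second and `--tpslimit-burst` controls how far it may burst above that. The remote name, mount point and the numbers below are assumptions to tune yourself, not recommended values.

```shell
#!/bin/bash
# Sketch only: the remote name, mount point and limit values are assumptions to tune yourself.
REMOTE="dropbox_crypt:"
MOUNT_POINT="/mnt/user/mount_rclone/dropbox_crypt"

mount_cmd=(rclone mount "$REMOTE" "$MOUNT_POINT"
  --tpslimit 8          # cap API transactions per second across the whole rclone process
  --tpslimit-burst 1    # do not allow bursts above that cap
  --allow-other
  --vfs-cache-mode writes
  --log-level INFO)

# Printed for review; run "${mount_cmd[@]}" instead to actually mount.
echo "${mount_cmd[*]}"
```

If you still see temporary bans in the log, lowering the `--tpslimit` value is the first thing to try.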

You can always request the trial and ask for more than the 5TB you get in the trial to see how things are. I've seen different experiences with Dropbox: sometimes it's very easy to get big amounts of storage immediately (100-200TB), other times you'll have to ask for every 5TB of extra storage. Sometimes it is added automatically, apparently. So everyone's mileage will vary, basically ;).

-

1 hour ago, JorgeB said:

Yes.

Thanks!

-

Currently, I have 1 cache pool of 2 SSDs mirrored in BTRFS. I'm thinking about the configuration of my new system, in which I expect to have at least 4 NVMe drives and perhaps 2 SATA SSDs.

I know it is possible to make multiple cache pools right now, but is that also possible with ZFS mirroring? So I would have 2 NVMe drives as 1 mirrored ZFS cache pool, then the 2 other NVMe drives would be another mirrored ZFS cache pool. And perhaps the SATA SSDs will be another ZFS cache pool, or just single drives. The array would stay on XFS as I want to keep the flexibility to grow my array easily.

Would this be possible, or is the cache pool with ZFS limited to 1?

-

On 4/27/2023 at 8:01 AM, Nanobug said:

The dropbox:/crypt part works, I can use the rclone commands in the terminal with it, so I'm happy with it. I'm not sure it's done the right/best way, but it works, and it took me a while to get it to work, so I don't want to mess with it

Regarding the /user path, why not just point it to /user/mount_mergerfs/crypt/movies instead of pointing it to /mnt/user?

It kinda makes sense so it sees it as one disk instead.

If someone knows a deeper explanation, I'd love to hear it and learn from it

Regarding the docker start, there are no commas in between. There's a dash ( - ) though, which is part of the container/docker name that I use.

I'm reading your post back, and I now see I'm missing the conclusion. Did you get it to work as you wanted? If not, let me know what your issue is.

By the way, regarding /mnt/user and the performance increase when moving files: it's called "atomic moving". You can look that up if you want to know more about it.

-

2 hours ago, bubbadk said:

i just looked at my upload script log.

it apparently not uploading to google. can any1 see why

what files and logs do you need.

06.05.2023 09:30:03 INFO: *** Rclone move selected. Files will be moved from /mnt/user/local/gdrive_vfs for gdrive_vfs ***

06.05.2023 09:30:03 INFO: *** Starting rclone_upload script for gdrive_vfs ***

06.05.2023 09:30:03 INFO: Exiting as script already running.

Script Finished May 06, 2023 09:30.03

Full logs for this script are available at /tmp/user.scripts/tmpScripts/rclone_upload/log.txt

You probably rebooted your server and the checker file has not been deleted. It should be in your /mnt/user/appdata/other/rclone/remotes/gdrive_vfs/ directory, named upload_running_daily (or something along those lines). Delete it and run the script again.
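A small sketch of how you could spot such a leftover automatically, assuming a checker file that was last touched before the most recent boot cannot belong to a running script. The file name below is a guess (see above), and the check relies on /proc/uptime and GNU stat, so Linux only.

```shell
#!/bin/bash
# Returns 0 when the checker file exists and was last modified before the most recent boot.
is_stale_checker() {
  local file="$1" now uptime_s boot_epoch mtime
  [ -f "$file" ] || return 1
  now=$(date +%s)
  uptime_s=$(cut -d. -f1 /proc/uptime)   # whole seconds since boot (Linux only)
  boot_epoch=$((now - uptime_s))
  mtime=$(stat -c %Y "$file")            # GNU stat: modification time as epoch seconds
  [ "$mtime" -lt "$boot_epoch" ]
}

# The file name below is a guess; check the directory for the real one.
CHECKER="/mnt/user/appdata/other/rclone/remotes/gdrive_vfs/upload_running_daily"

if is_stale_checker "$CHECKER"; then
  echo "Removing stale checker file: $CHECKER"
  rm -f "$CHECKER"
fi
```

Run at array start, before the upload script's first scheduled run, this would clear the file after an unclean shutdown while leaving a checker from a genuinely running upload alone.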

-

Same problem here on 6.11.5. It's not a problem on the Linuxserver dockers for me, but on the non-linuxserver ones mostly. Connectivity is fine, so no idea why that's pointed to as the issue.

-

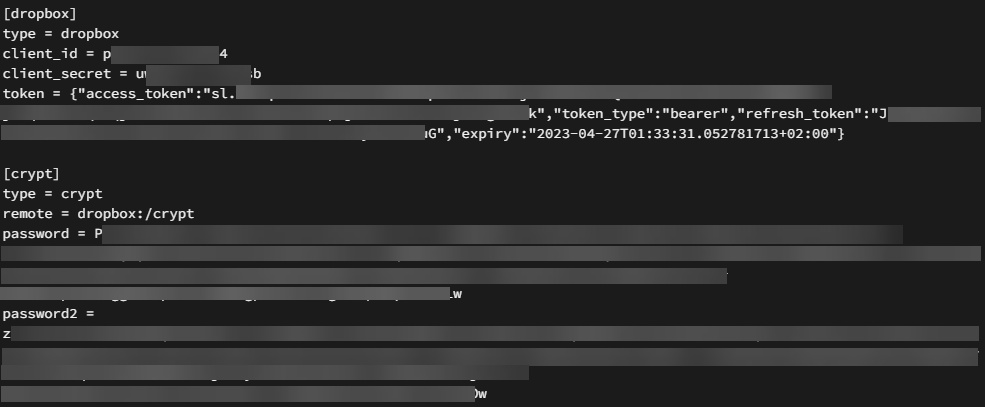

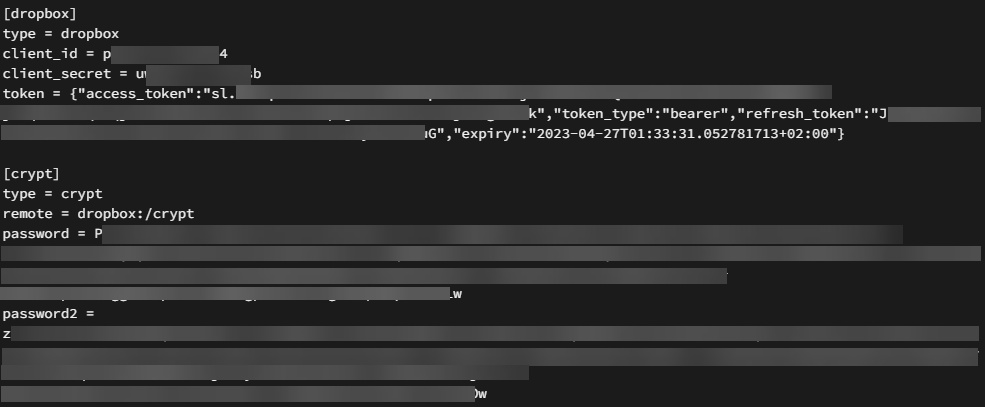

5 minutes ago, Nanobug said:

Hello,

I'm kinda stuck on this, and I read through the guide multiple times, but it seems like something is implicit that I don't get.

For the rclone config, this is my setup (details are blurred):

The terminal rclone commands work just fine.

I'm not sure if this is done right:

(I know there's a lot of containers and paths)

and I read this sentence: "To get the best performance out of mergerfs, map dockers to /user --> /mnt/user". I'm not sure what this means. Should I add a new path to Plex with "/mnt/user/", and the same for the Sonarr, Radarr and Deluge containers, or what am I supposed to do?

Also, why? I don't quite get it.

I know how to setup the cronjob, but I want to make sure I get it right before I use it.

Maybe someone could walk me through it?

In your mount script change RcloneRemoteName to "crypt".

I'm wondering about your crypt config though. I'm not sure if the / in front of the folder name (dropbox:/crypt) works. Did you test that? I would just use dropbox:crypt for the crypt remote; the / is not needed afaik.

Regarding the /user path: in your docker templates you have to create a path named /user which points to /mnt/user on your filesystem.

Then within the docker containers (the actual software like Plex) you start your file mappings from /user. For example /user/mount_mergerfs/crypt/movies for the movies path in Plex. You do the same for Radarr.
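To make the mapping concrete, here is a small sketch that translates a host path into the path you would enter inside the container. It assumes the /user to /mnt/user mapping described above (the equivalent of `docker run -v /mnt/user:/user`); the function name is made up for illustration.

```shell
#!/bin/bash
# Assumed mapping in each docker template: host /mnt/user -> container /user
# (the equivalent of `docker run -v /mnt/user:/user ...`).
HOST_ROOT="/mnt/user"
CONTAINER_ROOT="/user"

# Translate a host path into the path the container should be configured with.
to_container_path() {
  local host_path="$1"
  case "$host_path" in
    "$HOST_ROOT"/*) echo "${CONTAINER_ROOT}${host_path#"$HOST_ROOT"}" ;;
    *) echo "outside $HOST_ROOT, not visible via $CONTAINER_ROOT: $host_path" >&2; return 1 ;;
  esac
}

to_container_path "/mnt/user/mount_mergerfs/crypt/movies"
# prints /user/mount_mergerfs/crypt/movies
```

The same translated paths go into Plex, Sonarr, Radarr and the download client, so all of them refer to a file by the same name.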

This helps because the containers are isolated: each has its own paths and file system, and those paths are also what they use when communicating with each other. So if Plex uses /Films but Radarr uses /Movies, they won't be able to find each other's path because they don't know it.

It also gives the system the idea that it's 1 share/folder/disk, so it will move files directly instead of creating overhead by copying the file and then deleting the original. This is not a 100% technically accurate explanation, but I hope it makes some sense.

Another thing: I'm not sure if the dockers for the docker start need a comma in between. You might want to test/double check that first as well.

-

6 minutes ago, bubbadk said:

No, why would that be needed? You're just mounting all the folders you have in this Google Drive folder. They will also be available through Windows Explorer, for example. So if that is not a problem, you can just leave it as is.

-

1 minute ago, bubbadk said:

holy monkey...u are a god..

it worked.

when i download. where do i point to. and what directory do i point plex too

You have to create a path in your docker templates to /mnt/user/ as /user. So within the dockers you have to point to /user/mount_mergerfs/gdrive_vfs/XX for the folder you want to be in. But if you want to download to /user/downloads/ that's also fine, just make sure you start all paths from /user so the file system will see it as the same storage. Otherwise it will copy + paste then delete instead of just moving straight away.
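If you want to check this from inside a container, a rough sketch is to compare which mount each folder lives on. It assumes GNU `df` (for `--output`), and the two example paths are placeholders. It is only an approximation of what the kernel checks when it decides whether a move can be a plain rename.

```shell
#!/bin/bash
# Print the mount point a path lives on (GNU df; --output needs coreutils 8.21 or newer).
mount_of() {
  df --output=target "$1" | tail -n 1
}

# Succeeds when both paths sit on the same mount, so a move can be a plain rename.
same_mount() {
  [ "$(mount_of "$1")" = "$(mount_of "$2")" ]
}

# Example paths as seen from inside a container; both are assumptions.
SRC_DIR="/user/downloads"
DEST_DIR="/user/mount_mergerfs/gdrive_vfs/Movies"

if [ -d "$SRC_DIR" ] && [ -d "$DEST_DIR" ]; then
  if same_mount "$SRC_DIR" "$DEST_DIR"; then
    echo "Same mount: moves should be instant"
  else
    echo "Different mounts: moves will copy and then delete"
  fi
fi
```

With separate /downloads and /movies mappings the two folders would typically show up as different mounts, which is exactly the case to avoid.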

For Plex you use the media folders, for example /user/mount_mergerfs/gdrive_vfs/Movies for your movies and /user/mount_mergerfs/gdrive_vfs/Shows for your TV shows. Make sure you read up in the start post and on the GitHub about which settings you should disable in Plex to limit your API hits.

-

5 minutes ago, bubbadk said:

ahh ok. well i'm running the script now. made sure all was deleted before running the script. crossing fingers.

i have run the script 20 times now. all with the same output. no mount.

Are you checking the logs?

And do you run the script through User Scripts as "run in background"?

-

7 minutes ago, bubbadk said:

ok just to be clear. when i use the script from here. I can't see my gdrive. what does it do to be exact. does it make directories on my google drive. am i suppose to move my existing folders with movies and tv to another directory or ?!?!?

i'm a bit confused as what it does.

What do you mean by "script from here"? When you run the mount script, it should show your Google Drive files in /user/mount_rclone/gdrive_vfs/ and your Google Drive files and local files combined in /user/mount_mergerfs/gdrive_vfs/. Your mount_mergerfs and mount_rclone folders should be empty before the mount script runs. Only /user/local/ is allowed to have files in it before the mount script is started.
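A quick way to verify that before mounting, as a sketch: the host paths below are assumptions based on the folder names above, and the check only makes sense while nothing is mounted yet. It uses GNU find.

```shell
#!/bin/bash
# Succeeds when the directory is missing or contains no entries at all (hidden files count).
is_empty_dir() {
  local dir="$1"
  [ -d "$dir" ] || return 0
  [ -z "$(find "$dir" -mindepth 1 -maxdepth 1 -print -quit)" ]
}

# Folders that should be empty before the mount script runs (host paths are assumptions).
for dir in /mnt/user/mount_rclone/gdrive_vfs /mnt/user/mount_mergerfs/gdrive_vfs; do
  if is_empty_dir "$dir"; then
    echo "OK: $dir is empty"
  else
    echo "WARNING: $dir is not empty, clean it up before mounting"
  fi
done
```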

-

3 hours ago, bubbadk said:

ok.. this is odd..

when i do this : rclone mount --allow-other --buffer-size 256M --dir-cache-time 720h --drive-chunk-size 512M --fast-list --log-level INFO --vfs-read-chunk-size 128M --vfs-read-chunk-size-limit off --vfs-cache-mode writes gdrive_vfs: /mnt/user/mount_rclone/google_vfs &

then i can see it.

but the script does nothing for me.

then i can't use the script and all the features...bummer

You're mounting /mnt/user/mount_rclone/ instead of /mnt/user/mount_mergerfs/ in that command, so that's the reason why it does work. It's just mounting the Gdrive.

I think the problem lies in that you added --allow-non-empty in your mount script. That's not recommended and I see no reason why you would use it. Try removing that from the script, disable your dockers (from the settings menu, so they won't autostart at reboot). Then restart your server and run the mount script. See what it does, check the logging.

-

53 minutes ago, bubbadk said:

i'm going crazy here..

i followed every step. i don't use crypted because i have movies and tv shows at google.

first time it worked. then i had to reboot. now nothing works. i tried deleting everything that had to do with rclone. every directory.

i can do a rclone lsd gdrive:

every directories show up. but when i run the script no mount's now...

i don't get it

Paste your mount script. And explain which folders you use. Does the mount script give any errors?

-

20 hours ago, 00b5 said:

I seem to have messed up this install/etc.

My main issue is that files seem to be left in the .../mount_rclone/google/.... and the script won't mount again, since the path isn't empty.

I've noticed it twice, one was files in the "movies" folder, which I just deleted and let it mount. Second time it was the "TV" folder, and I updated the main script to just mount if not empty. The issue "occurred" because the server rebooted/hard powered off (power issues that I can't really remedy with getting a UPS for this backup unraid server).

The only thing I think I do out of the ordinary is copy files directly into the /mount/mergerfs/google/TV or Movies folder with an automated script.

I'm only running the main script: unraid_rclone_mount

I don't run any "upload script". I'm pretty sure that the files "broken" (left in the mount_rclone) folder never upload to gdrive, they are available to "plex" for example, and listed in mergerfs folder, but not actually in the cloud.

Any ideas or directions?

This normally happens when a docker starts up before the mount script has finished, so it already creates folders in the mount_rclone/gdrive folder. Dockers that download, like Sabnzbd and torrent clients, often do this: they create the folder structure they need when it's not available. So try booting without autostart of your dockers and then see if it happens again.
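One way to enforce that order, as a sketch: only start the containers once the rclone folder really is a mount point. It uses `mountpoint` from util-linux; the mount path and container names are placeholders, and nothing is started unless you set START_DOCKERS=1.

```shell
#!/bin/bash
# Wait until a path is an active mount point, or give up after a timeout (seconds).
wait_for_mount() {
  local path="$1" timeout="${2:-60}" waited=0
  until mountpoint -q "$path" 2>/dev/null; do
    [ "$waited" -ge "$timeout" ] && return 1
    sleep 1
    waited=$((waited + 1))
  done
}

# Mount path and container names are placeholders.
MOUNT="/mnt/user/mount_rclone/google"
CONTAINERS=(sabnzbd plex)

if [ "${START_DOCKERS:-0}" = "1" ]; then
  if wait_for_mount "$MOUNT" 120; then
    docker start "${CONTAINERS[@]}"
  else
    echo "Mount not ready, leaving containers stopped" >&2
  fi
fi
```

Combined with autostart disabled in the Docker settings, this keeps download clients from creating folders in an unmounted path.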

-

17 hours ago, privateer said:

Well finally did enough testing etc and after walking through it with the sonarr team, it's a bug!

Nice find then! Hope they fix it soon so you can finally set and forget again :).

-

On 4/11/2023 at 9:58 PM, privateer said:

Discovered a new problem! If I attempt to manually import something that's on my cloud drive (Manage episodes -> import, due to misnamed files), it can't execute the command but keeps the task running.

This is interesting because the automatic import works on the cloud drive, it's only for files that aren't correctly named and have to be manually added.

Encounter this before, or have any thoughts?

No, not when Sonarr uses the /mnt/user base and not direct paths. If you use direct paths like /downloads it will first download files from your cloud and then re-upload them again when importing.

Your logs should mention something about it though.

-

On 4/9/2023 at 9:22 PM, Nindahr said:

Hello together. Recently a small problem occurred, and I'm not sure what caused it or how to get rid of it. I can't open the Web-GUI anymore on my main PC and Notebook with Firefox. I only see the empty KasmVNC-Screen (see Screenshot). The only device where I see the Calibre-GUI with Firefox is my Steamdeck.

I've tried opening the GUI with Edge, which worked on my Notebook, but not on my Desktop. Using the GUI over https doesn't help either. I've tried disabling all plug-ins in Firefox and using Private mode. Clearing the Cache and saved Website-Data changed nothing. I've reinstalled Firefox completely to make sure it's not a problem with settings or something I've configured. I've also reinstalled the docker multiple times, without installing it from previous apps, and removed the appdata folder.

I'm running Unraid Ver. 6.11.5, the Docker-Container is the newest version (log: Xvnc KasmVNC 1.1.0 - built Apr 9 2023 00:38:52).

Here are all the Browsers I've tested:

Win 11 Pro Ver. 22H2

Firefox Ver. 111.0.1 (64-Bit) doesn't work

Edge Ver. 112.0.1722.34 (64-Bit) doesn't work

Win 10 Pro Ver. 22H2

Firefox Ver. 111.0.1 (64-Bit) doesn't work

Edge Ver. 111.0.1661.62 (64-Bit) WORKS

SteamOS Ver. 3.4.6

Firefox Ver. 110.0.1 (64-Bit) Flatpak WORKS

Android 13

Firefox Ver. 111.1.1 doesn't work

It seems like there is a bug in the newer browser-versions, or something changed that hinders viewing the Calibre-GUI. Anyone else noticed the same behavior or has an idea how to solve this?

If you need any further information, please let me know and I'll try to provide it.

Looking forward to reading your reply,

nindahr

It's a common issue if you read the GitHub issues. Sometimes Firefox works, but 90% of the time it doesn't. Firefox-based browsers do seem to work (like Mullvad Browser). So no clue what it is. I'm not happy at all with this decision to switch to KasmVNC, and the devs don't seem to acknowledge the Firefox issues. I'll give it a few weeks and then switch to another docker if it's not resolved. And with Linuxserver's decision to move away from Guacamole and towards KasmVNC, more dockers might become unusable.

-

1 hour ago, privateer said:

Do you do anything with the "Rescan Series Folder After Refresh" option in Sonarr? I've moved mine to after manual refresh, but I'm not sure if I needed to do that.

Yes, it should be on "always", otherwise Sonarr/Radarr won't pick up changes or new downloads you made. Using manual is only useful for libraries that never change.

-

9 hours ago, privateer said:

@Kaizac FIXED!!!!

Solution was permissions were FUBAR majorly, and I finally moved enough of the file structure to 99:100 which solved it. Thank you so much for working through this with me.

Weirdly, I still have had an error a few times with sonarr container not stopping because of mono as shown above.

Glad to hear!

Did the permissions persist now through mounting again?

I still want to advise not using the analyze video options in Sonarr/Radarr. They don't give you anything you should need, and they cost time and API hits. I don't see any advantage in them.

Mono is what Sonarr is built upon/dependent on, so it could be that it was still running jobs, or maybe the docker already had errors/crashes; in my experience that is often when you get this error. Now that it's running well, does stopping it still give you this error?

I would advise adding UMASK back to your docker template in case you removed it. That way you stick close to the templates of the docker repo (linuxserver in this case).

Regarding your mount script not working the first time: it is because it sees the file "mount_running" in /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName. This is a checker to prevent the script from running multiple times simultaneously. So apparently this file doesn't get deleted when your script finishes. Maybe your unmount script doesn't remove the file? Or are you running the script twice while your system/array is starting? Maybe once on startup of the array and once more from a cron job?
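To illustrate how such a checker behaves, here is a generic sketch (not the actual code from the mount script): the guarded job runs in a subshell whose EXIT trap removes the checker again, even when the job itself fails. The function name and the demo directory are made up for the example.

```shell
#!/bin/bash
# Run a command guarded by a checker file; the subshell's EXIT trap removes the file
# again even if the command fails. Names are illustrative, not the thread's actual script.
run_with_checker() (
  checker="$1"; shift
  if [ -e "$checker" ]; then
    echo "Exiting as script already running."
    exit 1
  fi
  mkdir -p "$(dirname "$checker")"
  touch "$checker"
  trap 'rm -f "$checker"' EXIT
  "$@"
)

# Example: guard a placeholder job with a checker in a temporary directory.
demo_dir=$(mktemp -d)
run_with_checker "$demo_dir/mount_running" echo "mount job would run here"
```

A trap like this cannot fire on a hard power-off, so a leftover file after an unclean shutdown still has to be removed some other way, for example at array start.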

I would check your rclone scripts and the checker files they use and the way they are scheduled. Something must be conflicting there.

-

46 minutes ago, privateer said:

The analyze files is checked.

How are you installing mergerfs? Mine runs through the script as noted above. In order to run mergerfs like you have I can run the partial set of commands in the script but I want to make sure we're doing things 1:1 since this is so problematic.

EDIT: additional permissions question. File path is /mnt/user/mount_unionfs/gdrive_media_vfs/tv_shows/. Should /mount_unionfs read 99/100 and /gdrive_media_vfs read 99/100 etc all the way down to the individual file?

Yes I have it all on 99/100 down to the file.
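If you want to check that quickly, a sketch like this lists everything that is not owned by 99:100 (nobody:users on a stock Unraid install). The path is the one from your question; the function name is made up.

```shell
#!/bin/bash
# List everything under a path that is not owned by uid 99 / gid 100
# (nobody:users on a stock Unraid install).
list_wrong_owner() {
  find "$1" \( ! -uid 99 -o ! -gid 100 \) -print
}

# Path taken from the question above; only runs if it exists.
MEDIA_PATH="/mnt/user/mount_unionfs/gdrive_media_vfs/tv_shows"
if [ -d "$MEDIA_PATH" ]; then
  list_wrong_owner "$MEDIA_PATH" | head -n 20
fi
```

On local folders, `chown -R 99:100 <path>` fixes whatever this finds. For the rclone mount itself, ownership comes from the mount options (`--uid` / `--gid`) rather than from chown.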

Can you try the library import with analyze video unchecked? It causes a lot of CPU strain and also API hits, because it reads the file, which is like streaming your files.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

in Plugins and Apps

Posted

Crypt shouldn't matter. Post your mount and upload scripts please. What do you mean with "can't access the file anymore"?