Report Comments posted by jbartlett

-

I can reproduce. I have three VMs configured. Everything is OK in the Web UI until all three are started, then they vanish from the Web UI. If I shut one of the VMs down, they display again.

-

The default label could probably use a change, something like adding "non-UEFI only", to prevent confusion.

-


I'd take your unraid stick and plug it in your regular PC to test it for errors.

-

@limetech - I've been experiencing network drops on my 10G network card and from my investigation, it's due to a memory leak in the driver which was patched in the 1.6.13 version. It looks like unraid is loading 1.5.44.

Ref: https://bugzilla.redhat.com/show_bug.cgi?id=1499321 - The meat of the discussion is just a little past halfway. Affects onboard NICs & PCIe cards.

Nov 12 01:42:57 VM1 kernel: atlantic: link change old 10000 new 0
Nov 12 01:42:57 VM1 kernel: br0: port 1(eth0) entered disabled state
Nov 12 01:43:11 VM1 kernel: atlantic: link change old 0 new 10000
Nov 12 01:43:11 VM1 kernel: br0: port 1(eth0) entered blocking state
Nov 12 01:43:11 VM1 kernel: br0: port 1(eth0) entered forwarding state

root@VM1:~# ethtool -i eth0
driver: atlantic
version: 5.3.8-Unraid-kern
firmware-version: 1.5.44
expansion-rom-version:
bus-info: 0000:0a:00.0
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: yes
supports-priv-flags: no
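For anyone wanting to check their own box, here is a rough Python sketch of the comparison I did by eye: parse the `ethtool -i` output above and see whether the reported version is older than 1.6.13. The helper names are made up for illustration, and comparing the `firmware-version` field is an assumption on my part, since that is where 1.5.44 shows up in the output.

```python
# Trimmed copy of the `ethtool -i eth0` output from this comment.
SAMPLE = """\
driver: atlantic
version: 5.3.8-Unraid-kern
firmware-version: 1.5.44
expansion-rom-version:
bus-info: 0000:0a:00.0
"""

def parse_ethtool_info(text):
    """Turn 'key: value' lines into a dict (hypothetical helper)."""
    info = {}
    for line in text.splitlines():
        # Split on the first colon only, so bus-info keeps its own colons.
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info

def version_tuple(version):
    """'1.5.44' -> (1, 5, 44) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))

def is_older(found, required):
    """True when 'found' is strictly older than 'required'."""
    return version_tuple(found) < version_tuple(required)

info = parse_ethtool_info(SAMPLE)
# Assumption: 1.6.13 is the fixed version, as stated in the comment above.
print(info["firmware-version"], is_older(info["firmware-version"], "1.6.13"))
```

Comparing tuples of integers avoids the string-comparison trap where "1.10.0" would sort before "1.6.13".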

-


I'll take this off-thread for any further notes and share it on my thread for this MB.

-

9 hours ago, bastl said:

@jbartlett Which "CPU Scaling Governor" are u using?

I don't really even know what that is other than I've seen it posted in the forums a few times. Did some searching, installed the Tips & Tweaks plugin. Governor was set to "On Demand". I set it to Performance, rebooted & restarted the VM to make sure it still loaded as intended, then OC'ed the CPU by 6% using its wizard. It took a couple of minutes before I saw the BIOS load, and I could see the text outputting character by character, probably a quarter second per line. It reminds me of the old 2400 baud modem days.

Did some tinkering around, creating a blank VM with no drives attached, 2 CPUs, and 2GB of RAM. It was all slow until I removed the Quadro P2000; after that, the VNC client showed it booting real fast.

I actually DO want to squeeze as much juice out of this as I can. I'm actually a little stoked because I found a combination that allowed my multiple Brio 4K cams to work without flickering. My goal with this build is to have a 32-CPU VM running Livestream Studio for my 24x7 Foster Kitten Cam on the two main NUMA nodes and smaller Ubuntu VMs running OBS on the off-nodes feeding other streams to YouTube (multi-cam viewing, y'all!).

I'm going to dig up the MB manual and dig into the BIOS to see if I can see anything causing the slowdown on OC with video passthrough.

-

Meh, I've got something going on here. Tried OC'ing with SeaBIOS and got the same laggy thing. I'm going to wash my hands of this and stick to stock speeds.

-

Just now, bastl said:

@jbartlett Maybe you have set some cores which are not directly attached to the memory? Did you manually change the topology for the VM?

<topology sockets='1' cores='8' threads='2'/>

I always do this for all my VMs to match the actual core/thread count. Default is always all selected cores and 1 thread.

I had the VM pinned directly to the two numa nodes that had the memory attached (all CPUs).

Hrm, the emulatorpin'ed CPU was on one of the other NUMA nodes, but it didn't pose any other issues, if that is the root cause. The Infinity Fabric on the Threadripper 2 chips seems to be so fast that I couldn't find any degradation even when pinning a VM off-node, forcing everything to go through the Infinity Fabric.
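As a quick sanity check on the topology line quoted above, here is a small Python sketch that works out how many vCPUs it describes. It assumes the usual reading that the guest's vCPU count is sockets × cores × threads; the function name is just for illustration.

```python
import xml.etree.ElementTree as ET

def vcpus_from_topology(xml_fragment):
    """Multiply sockets, cores, and threads from a <topology/> element."""
    elem = ET.fromstring(xml_fragment)
    sockets = int(elem.get("sockets"))
    cores = int(elem.get("cores"))
    threads = int(elem.get("threads"))
    return sockets * cores * threads

# The topology line quoted in this exchange.
print(vcpus_from_topology("<topology sockets='1' cores='8' threads='2'/>"))
```

If the product doesn't match the number of CPUs pinned to the VM, the topology and the pinning are out of step with each other.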

-

2 minutes ago, bastl said:

So you're only experiencing this with i440fx VMs? Maybe I'll have some time tomorrow to test i440 on 6.8 RC. I've been running all my VMs on Q35 for quite some time now without any problems.

Q35-4.0.1 + OVMF + Emulated CPU. I just started tinkering with i440fx VMs and haven't gotten far enough through my test scenarios to even try an OC.

-

Just now, bastl said:

@jbartlett Never experienced this issue on my 1950x with OC. Windows VMs with and without passthrough, default template or tweaked settings, I never noticed something you described. Maybe an temp issue?! What cooling solution are you using?

Noctua cooler & fans. Had no issues with other types of VMs with OVMF BIOS or bare metal, even with a much higher OC. Just started tinkering with SeaBIOS to test if ingesting two Brio 4K cams set to 1080p@60 is more steady via a passthrough USB3 4 controller card. A Quadro P2000 is also passed through.

-

Just an observation on my Threadripper 2990WX with a test VM using QEMU emulated CPUs. If I overclock the CPU via the BIOS, even just a teeny little bit, my Win10 VM becomes extremely sluggish, even just to start the spinning circles at boot. The one time it actually managed to get to the Desktop, the mouse pointer was jumping around like it was running at 5fps. Go back to stock settings in the BIOS, no issues.

-


Now here's something interesting. Switching bond from N to Y worked. Immediately changing it from Y to N also worked. Repeated successfully several times. Rebooted, was able to still change it successfully.

vm1-diagnostics-20191108-1140.zip

Removed the two network cfg files and rebooted. I was once again not able to change bonding from Y to N as above. Rebooted and was able to repeat the issues above - after rebooting, I was able to switch between bonding & non-bonding dynamically at-will with no issues.

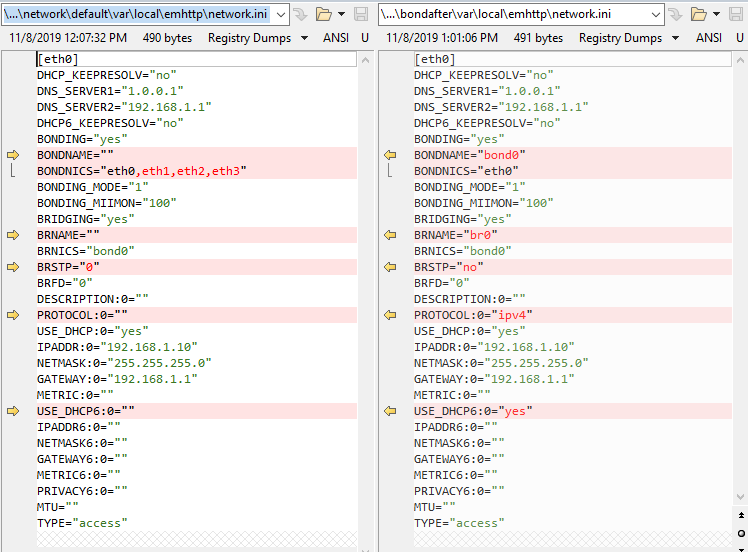

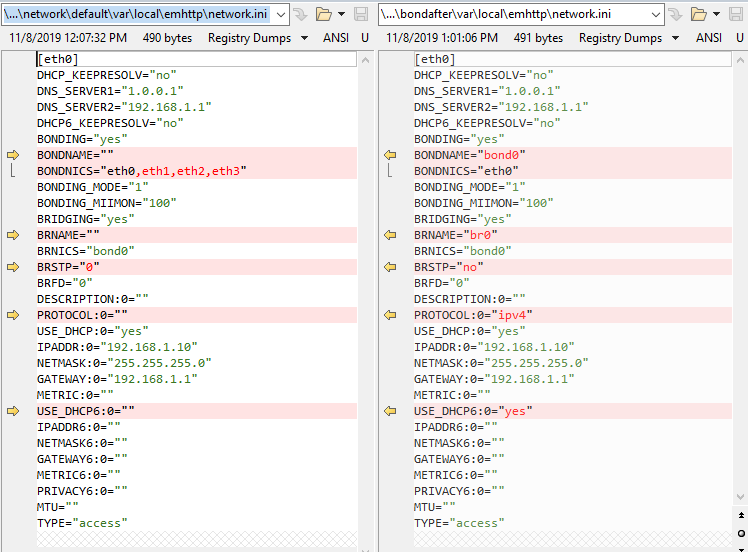

So I looked into what's different between the two. I took a snapshot of /boot/config, /usr/local/emhttp, and /var/local/emhttp and compared them before & after.

Rebooting after removing the network*.cfg files caused things to default. network.cfg did not exist after booting, probably because no changes had been made yet. The notable difference that I could see before & after was in network.ini, with bonding enabled again.

I don't think there is anything wrong with your code. I suspect the issue is in the defaults applied if no network.cfg file exists.
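For reference, the before & after comparison can be done with something like the Python sketch below. This is not the exact tooling I used, just a minimal illustration; it assumes each snapshot is a plain directory copy, and the function name and the returned dict layout are made up for the example.

```python
import filecmp

def diff_snapshots(before, after):
    """Recursively list relative paths that were added, removed, or changed."""
    result = {"added": [], "removed": [], "changed": []}

    def walk(cmp, prefix=""):
        # left = "before" snapshot, right = "after" snapshot
        result["removed"] += [prefix + name for name in cmp.left_only]
        result["added"] += [prefix + name for name in cmp.right_only]
        # diff_files: present on both sides but with differing contents.
        # Note dircmp uses shallow comparison, so files with identical
        # type, size, and mtime are treated as equal without being read.
        result["changed"] += [prefix + name for name in cmp.diff_files]
        for name, sub in cmp.subdirs.items():
            walk(sub, prefix + name + "/")

    walk(filecmp.dircmp(before, after))
    return result
```

Called as `diff_snapshots("snap-before/config", "snap-after/config")` (hypothetical paths), it would flag a file like network.cfg as added and network.ini as changed if that is what happened between the two snapshots.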

-

It was already set to eth0.

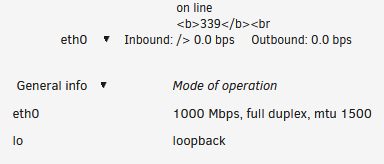

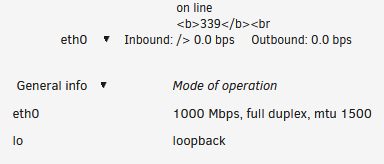

Changing it to "lo" and then back to "eth0" corrected it. It worked properly again after a reboot. I'm assuming that there's a persistent setting that gets updated when the interface to monitor is changed but when changing the bonding from Y to N, that setting isn't updated to something other than "bond0".
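If my assumption about the stale "bond0" setting is right, a read of the counters under /sys/class/net/bond0/statistics/ would fail as soon as the bond is removed. Here is a minimal sketch of a guarded read, written in Python rather than the PHP the dashboard uses; the function names and the fallback behavior are my own illustration, not how the Web UI actually does it.

```python
from pathlib import Path

def pick_interface(preferred, fallback, sysfs_root="/sys/class/net"):
    """Use the saved interface if it still exists, otherwise the fallback."""
    if (Path(sysfs_root) / preferred).exists():
        return preferred
    return fallback

def read_counter(iface, counter, sysfs_root="/sys/class/net"):
    """Return a statistics counter as an int, or None if it cannot be read."""
    path = Path(sysfs_root) / iface / "statistics" / counter
    try:
        return int(path.read_text().strip())
    except (FileNotFoundError, NotADirectoryError, ValueError):
        # Interface was removed (e.g. bond0 after disabling bonding)
        # or the file did not contain a number.
        return None

# Hypothetical usage: the saved setting still says bond0, bonding is now off.
iface = pick_interface("bond0", "eth0")
print(iface, read_counter(iface, "rx_bytes"))
```

Returning None instead of raising lets the caller show a blank or zero rate rather than dumping a warning into the page.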

-

Spoke too soon. Pulled up the Dashboard after rebooting and I see this.

Warning: file_get_contents(/sys/class/net/bond0/statistics/rx_bytes): failed to open stream: No such file or directory in /usr/local/emhttp/plugins/dynamix/include/DashUpdate.php on line 338

Warning: file_get_contents(/sys/class/net/bond0/statistics/tx_bytes): failed to open stream: No such file or directory in /usr/local/emhttp/plugins/dynamix/include/DashUpdate.php on line 339

0.0 bps

-

2 minutes ago, IamSpartacus said:

Just tried GUI mode, no dice. Reset the CMOS, no dice. I'm on the latest version of my BIOS which is pretty new. I'm stuck on RC3 for the foreseeable future.

If you're feeling spunky, try downgrading your BIOS a version.

-

15 minutes ago, bonienl said:

The above is the 1G interface. Didn't you disable the 10G interface?

Sorry, I missed that. I did disable the 10G interface; there wasn't an option in the BIOS to disable the 1G. The 1G is indeed active and accessible as eth0 prior to changing bonding from Y to N. The diagnostics were taken after I made the change.

Understanding the potential confusion there, I created two diagnostics, one prior, one after, changing the bonding from Y to N. I gave it roughly 15 minutes after hitting the Apply button before taking the diagnostics for due diligence. The last comment in the syslog was deleting the interface for br0 and then nothing for the 15 minutes I waited.

I issued a reboot via the command line without removing the network cfg files and the system booted with an IP and with bonding disabled. So it seems the setting is valid, but switching between the two live isn't working.

Before settings change:

vm1-diagnostics-20191108-1005.zip

After settings change:

vm1-diagnostics-20191108-1020.zip

After reboot:

vm1-diagnostics-20191108-1023.zip

-

I don't recall reading if you had or not but did you check for a BIOS update?

-

8 hours ago, bonienl said:

It looks like something hardware related. The interface isn't active.

I disabled it in the BIOS.

-

1 minute ago, bonienl said:

Note: it is not recommended to bond interfaces with different speeds, in general this doesn't work reliably.

I kinda don't gotta choice. The motherboard has two NICs and they're different speeds.

But back to the original issue. I removed the network cfg files and disabled the 10G NIC via the BIOS. On boot, the 1G NIC was assigned to eth0, no sign of eth1. All's looking good, right? Bonding is still enabled. I disable bonding, hit apply, and I lose network connectivity though this time the 1G port doesn't go solid orange. The GUI says it's acquiring an IP but doesn't.

-

eth0 is binding to the 10G NIC, which has no cable connected because that port is tricky and temporarily disables itself if the cable is jiggled. I have the cable plugged into the 1G NIC, which Unraid is binding to eth1.

Shouldn't it bind eth0 to the one with the cable connected to it if the system has more than one NIC and not all have cables? You know other people are going to do the same thing, thinking "Why should I have bonding enabled if I'm only using one of the NICs?" and then lose access because they have their LAN cable plugged into the secondary NIC.

I tried switching which MAC ID was tied to which port in the "Interface Rules" selection and that seemed to work. The 1G port was being assigned to eth0. However, when I disabled bonding, eth0 simply went away. The 10G switch's LED for that cable turned off and then went solid orange a few seconds later. ifconfig showed no eth0. Looking at the syslog, it seemed that unsetting the bond was not successful. I've attached the diagnostics from after that happened.

-

This might be related to the qcow on XFS issue, which was sort of resolved in RC5.

-

Sounds like it was to eliminate disk spin up delays. If they're backup copies and not your live version, it doesn't matter how you store it or where.

-

Verified. Was able to successfully create a VM with Ubuntu on a dynamically expanding qcow2 drive hosted on a UD-mounted XFS SSD.

-

3 minutes ago, limetech said:

So it looks like that as long as the qcow2 vdrive doesn't need to expand, there's no corruption. Thus why my Win10 VM didn't have any issues under RC4.

6.8.0 RC6 - Starting "ALL" VM's causes VM's to disappear from Dashboard and VM tab

in Prereleases

Having just one VM configured and running counts as having all VMs running.