
Posts: 105


Posts posted by Roudy

-


On 10/5/2023 at 9:52 AM, m1rc0 said:

Hi everybody,

Sorry if it's a stupid question, but I'm trying to set up Keycloak behind a reverse proxy (NginxProxyManager in my case). On my local network everything is fine, but if I try to access Keycloak via my domain, the admin GUI won't load. A bit of googling got me the following reasonable hint:

docker exec keycloak /opt/jboss/keycloak/bin/jboss-cli.sh --connect "/subsystem=undertow/server=default-server/http-listener=default:write-attribute(name=proxy-address-forwarding, value=true)"

Sadly, in the Docker container jboss-cli.sh isn't present under /opt/bitnami/keycloak/bin, nor can I find it anywhere else. Any hints, please?

Did you ever get this working, m1rc0? I may have a fix for you if you haven't.

-

That's simple to add, but it's not necessary: if you're downloading at less than 1 Gbit/s, you won't hit the download cap.

But if you have an unstable connection, you can run this on a cron schedule.

#!/bin/bash

####### Check if script already running ##########
if [[ -f "/mnt/user/appdata/other/rclone/rclone_download_gdrive_vfs" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Exiting as script already running."
    exit
else
    touch /mnt/user/appdata/other/rclone/rclone_download_gdrive_vfs
fi
####### End Check if script already running ##########

####### check if rclone installed ##########
# (this actually checks that the rclone mount is up, via its mountcheck file)
if [[ -f "/mnt/user/mount_rclone/gdrive_vfs/mountcheck" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: rclone installed successfully - proceeding with download."
else
    echo "$(date "+%d.%m.%Y %T") INFO: rclone not installed - will try again later."
    rm /mnt/user/appdata/other/rclone/rclone_download_gdrive_vfs
    exit
fi
####### end check if rclone installed ##########

# sync files (-vv, not --vv; globs quoted so bash does not expand them)
rclone move XXMOUNTXX /mnt/user/local -vv --buffer-size 512M --drive-chunk-size 512M --tpslimit 8 --checkers 8 --fast-list --transfers 4 --exclude '*fuse_hidden*' --exclude '*_HIDDEN' --exclude '.recycle**' --exclude '.Recycle.Bin/**' --exclude '*.backup~*' --exclude '*.partial~*' --drive-stop-on-download-limit

# remove dummy file
rm /mnt/user/appdata/other/rclone/rclone_download_gdrive_vfs
exit

Yeah, I was running it without but we’ve been having a lot of rolling electrical outages where I’m at so I kept getting input/output errors. Had it nearly complete before leaving. I appreciate you knocking it out for everyone. If it works well, add it to GitHub and request a merge. I’m sure there will be more people who’ll need to mass download their data soon. I’ve been syncing for the time being since I haven’t received my notice yet.

-

The download limit for Google is 10TB per day; the upload limit is 750GB. I think you need to replace the --drive-stop-on-upload-limit flag with --drive-stop-on-download-limit. I think the script will then switch to the next service account once you hit 10TB. But with a gigabit line you won't be able to hit 10TB per day, so you're better off just using a simple script and keeping it running.

Something like:

rclone move XXMOUNTXX /mnt/user/local -vv --buffer-size 512M --drive-chunk-size 512M --tpslimit 8 --checkers 8 --fast-list --transfers 4 --exclude '*fuse_hidden*' --exclude '*_HIDDEN' --exclude '.recycle**' --exclude '.Recycle.Bin/**' --exclude '*.backup~*' --exclude '*.partial~*' --drive-stop-on-download-limit
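As a rough check on the gigabit point: a day is 86,400 seconds, so a link saturated at 1 Gbit/s for the full 24 hours moves about 10.8 TB, ignoring protocol overhead. The cap is therefore only reachable if the line sustains roughly 926 Mbit/s all day. The helper below is a hypothetical illustration of that arithmetic and assumes the 10TB figure is in decimal terabytes.

```shell
#!/bin/bash
# Hypothetical helper: the most a link could download in 24 hours, in decimal GB,
# assuming it runs at the given rate all day with zero protocol overhead.
max_gb_per_day() {
    local mbps="$1"
    # Mbit/s x 86,400 s/day, divided by 8,000 Mbit per GB (8 bits/byte, 1,000 MB/GB)
    echo $(( mbps * 86400 / 8000 ))
}

max_gb_per_day 1000   # prints 10800 (about 10.8 TB at full gigabit line rate)
max_gb_per_day 926    # prints 10000 (roughly the break-even rate for a 10TB cap)
```

Anything that averages below about 926 Mbit/s over the day stays under the cap, which is why a plain `rclone move` is usually enough.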

I was working on it but left for vacation. The upload script has a few extra measures that prevent it from running twice: it creates a file and checks for it before running. I'll try to finish it up in the next few days if no one beats me to it. Then you can set it to run every 10 minutes or so to make sure it keeps running all day.
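The marker-file approach has one weak spot: if the machine loses power mid-run, the file is never removed, and every later run exits as "already running". Here is a minimal sketch of an alternative, assuming `flock` from util-linux is available. The kernel drops the lock automatically when the process exits, so a crash cannot leave a stale lock behind. `run_exclusive` and the lock path are hypothetical names, not part of the existing scripts.

```shell
#!/bin/bash
# Hypothetical helper: run a command only if no other instance holds the lock.
# Assumes flock(1) from util-linux is installed.
# The kernel releases the lock when the process exits (or the box reboots),
# so a crash or power cut cannot leave a stale "already running" marker.
run_exclusive() {
    local lockfile="$1"
    shift
    (
        # -n: fail immediately instead of waiting if another run holds the lock.
        if ! flock -n 9; then
            echo "$(date "+%d.%m.%Y %T") INFO: Exiting as script already running." >&2
            exit 1
        fi
        "$@"
    ) 9>"$lockfile"
}

# Example (lock path and script path are placeholders):
# run_exclusive /tmp/rclone_download.lock bash /path/to/download_script.sh
```

If you schedule the wrapped script with a custom cron entry such as `*/10 * * * *`, a run that starts while the previous one is still going simply exits.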

-

On 6/25/2023 at 3:51 AM, DZMM said:

I actually think if I do move, I'll try and encourage people to use the same collection e.g. just have one pooled library and if anyone wants to add anything, just use the web-based sonarr etc. It seems silly all of us maintaining separate libraries when we can have just one.

I actually do this with a friend of mine at the moment. Shared cloud storage that both of us can access makes things so easy, since we are geographically separated. We mainly use Overseerr for requests, and we both have access to the Starr apps to fix anything. I have one box that does all the media management and has the read/write service accounts, and he has a few read-only service accounts so it doesn't get out of sync. It's been working well for years.

-


I personally store everything on a team drive, but I don't have as much data on there as most (around 30TB). Everything is still working for me and I haven't received any emails about it. I've added some drives to my setup and have been converting video files to H.265 and moving them locally, but I'm still using the mount to stream. I'll continue to use it as long as I can. Once it's gone, I have a friend who is interested in splitting the cost of unlimited Dropbox with me. Just need one more haha!

-

5 hours ago, binhex said:

Hi all, I have made some nice changes to the core code used for all the VPN docker images I produce, details as follows:-

- Randomly rotate between multiple remote endpoints (openvpn only) on disconnection - Less possibility of getting stuck on a defunct endpoint

- Manual round-robin implementation of IP addresses for endpoints - On disconnection all endpoint IPs are rotated in /etc/hosts, reducing the possibility of getting stuck on a defunct server on the endpoint.

I also have a final piece of work around this (not done yet), which is to refresh IP addresses for endpoints on each disconnect/reconnect cycle, further reducing the possibility of getting stuck on defunct servers.

In short the work above should help keep the connection maintained for longer periods of time (hopefully months!) without the requirement to restart the container.

The work was non-trivial and it is possible I have introduced some bugs (though it has been extensively tested), so please keep an eye out for unexpected issues as I roll out this change (currently rolled out to SABnzbdVPN and PrivoxyVPN). If you see a new image released, it will include the new functionality.

We appreciate all the hard work and effort you put into your containers!
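The two rotation ideas in the quote can be illustrated with a few lines of shell. For round-robin, each reconnect moves the first endpoint IP to the back of the list, so the next attempt tries a different server. The random endpoint choice is a modulo over the list. This is a hypothetical sketch, not binhex's actual code: the function names are made up, and the real containers apply the new order to /etc/hosts, which this sketch does not touch.

```shell
#!/bin/bash
# Hypothetical illustration only; this is not the code used in binhex's images.

# Round-robin: print the endpoint IPs with the first one moved to the end,
# so the next connection attempt starts with a different server.
rotate_ips() {
    (( $# > 0 )) || return 0
    local first="$1"
    shift
    printf '%s\n' "$@" "$first"
}

# Random choice among several remote endpoints.
pick_random_endpoint() {
    (( $# > 0 )) || return 1
    local endpoints=("$@")
    echo "${endpoints[RANDOM % ${#endpoints[@]}]}"
}

# Example with documentation-range IPs: prints 192.0.2.11, 192.0.2.12, 192.0.2.10
rotate_ips 192.0.2.10 192.0.2.11 192.0.2.12
```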

-


On 1/19/2023 at 9:21 PM, BudgetAudiophile said:

Thanks! Sorry, one more dumb question...in this scenario would I replace all of the IPs in the NAME_SERVERS variable with the new DNS servers or just add in the additional IPs?

Replace all of the IPs with the DNS servers you want to use. I currently have 1.1.1.1,1.0.0.1 in mine.
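NAME_SERVERS takes a comma-separated list, so replacing it means overwriting the whole value, e.g. `1.1.1.1,1.0.0.1`. The helper below is a hypothetical sanity check, not part of the container. It assumes IPv4 addresses only, treats any space as an error to be safe, and checks only the dotted shape of each address, not the 0-255 range.

```shell
#!/bin/bash
# Hypothetical sanity check for a NAME_SERVERS value (not part of the container).
# Assumes IPv4 addresses only and treats any space as an error.
valid_name_servers() {
    local ip
    local -a servers
    IFS=',' read -ra servers <<< "$1"
    (( ${#servers[@]} > 0 )) || return 1
    for ip in "${servers[@]}"; do
        # Shape check only: four dot-separated groups of 1-3 digits.
        [[ $ip =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]] || return 1
    done
}

valid_name_servers "1.1.1.1,1.0.0.1" && echo "ok"
```

Outside the Unraid template, the same value would be passed to `docker run` as `-e NAME_SERVERS=1.1.1.1,1.0.0.1`.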

-


2 hours ago, BudgetAudiophile said:

I'm a bit of a networking noob, did you change your DNS settings on your router or is this a configuration specific to your Unraid machine/Sab docker?

You change them in the Docker settings under the NAME_SERVERS variable.

-

Did you ever find a solution? I'm trying to play with my son after a long break and getting the same error. Just curious if you had found a fix.

-

On 1/2/2023 at 8:51 AM, Nanobug said:

Hello,

I've got Rclone to work, and I'm currently uploading my content to the provider.

There's one thing I can't get to work though.

That's getting Sonarr (and the other *arr's) to see the files on the folder I've got in the cloud.

How do I make it show the encrypted files and folders, without the encryption so I can hardlink it and read the files and folders?

Do you have MergerFS set up? You should be able to see the rclone mount there and all the files unencrypted.

-


5 hours ago, coltonc18 said:

Good morning,

I have been using sabnzbdvpn for a while without any problems, but recently I have started getting "connection failed"... I searched for it, but issues in the past seem to tie to the cipher issue, and I don't see that in my log.

I was thinking maybe I should change my endpoint, but the FAQ said different endpoints should show in the supervisord log, and I don't see any in there. Would someone mind taking a look and helping me out here?

Thank you

-Chase

First, please delete the file attached to your message. Your username and password are throughout the file. I was having issues as well, and updating the DNS fixed it for me. Try updating your name servers to Cloudflare (1.1.1.1,1.0.0.1) and see if you still have the issue.

-

1 hour ago, workermaster said:

Thanks! Good to know that the shared drive I have already created is a team drive. That clears up some confusion for me.

While I have created the group, I have trouble adding the service accounts to it. I hope that this is the last of the problems I encounter, so I can start the upload process. As I mentioned, I need to enable an SDK API, and then run a command to open quickstart.py and give permission to something. But I get an error when I do that.

I hope @Kaizac or someone else can help with that error. I get the feeling that this is the last of the problems still in my way. Then again, I am a professional idiot and tend to find all the problems that you can find, so who knows.

You don't have to do the sample part of that website, just enable the API. You should be able to continue on.

-

On 10/8/2022 at 6:04 PM, francrouge said:

I did try right now and it does not seem to be effective.

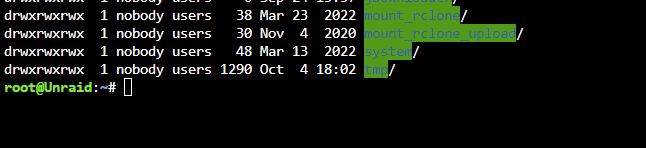

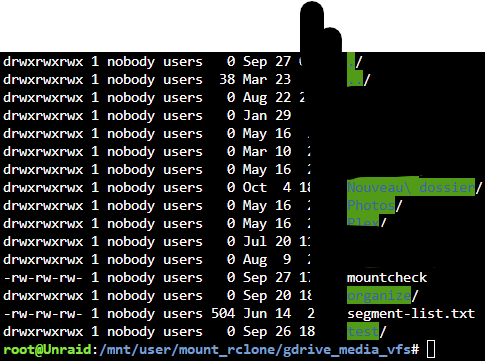

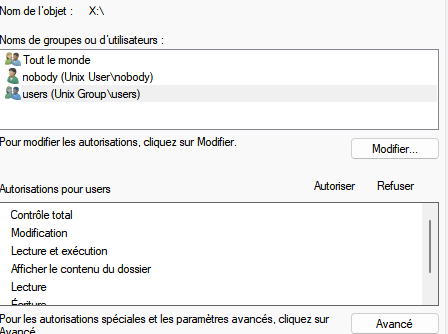

I can create files and folders, but I can't delete or rename them, and this only happens in my mount_rclone folder.

thx

Trying to circle back on what I've missed. Have you gotten it to work? You might try running the "Docker Safe New Perms" located under the "Tools" tab and see if that helps at all. You might want to give it a restart as well. If that still doesn't work, we can try to look at your SMB settings.

-

On 10/8/2022 at 10:20 AM, FabrizioMaurizio said:

#!/bin/bash

######################
#### Mount Script ####
######################
## Version 0.96.9.2 ##
######################

####### EDIT ONLY THESE SETTINGS #######

# INSTRUCTIONS
# 1. Change the name of the rclone remote and shares to match your setup
# 2. NOTE: enter RcloneRemoteName WITHOUT ':'
# 3. Optional: include custom command and bind mount settings
# 4. Optional: include extra folders in mergerfs mount

# REQUIRED SETTINGS
RcloneRemoteName="gdrive_t1_1" # Name of rclone remote mount WITHOUT ':'. NOTE: Choose your encrypted remote for sensitive data
RcloneMountShare="/mnt/user/mount_rclone" # where your rclone remote will be located without trailing slash e.g. /mnt/user/mount_rclone
RcloneMountDirCacheTime="720h" # rclone dir cache time
LocalFilesShare="/mnt/user/gdrive_upload" # location of the local files and MountFolders you want to upload without trailing slash to rclone e.g. /mnt/user/local. Enter 'ignore' to disable
RcloneCacheShare="/mnt/user0/mount_rclone" # location of rclone cache files without trailing slash e.g. /mnt/user0/mount_rclone
RcloneCacheMaxSize="100G" # Maximum size of rclone cache
RcloneCacheMaxAge="336h" # Maximum age of cache files
MergerfsMountShare="/mnt/user/mount_mergerfs" # location without trailing slash e.g. /mnt/user/mount_mergerfs. Enter 'ignore' to disable
DockerStart="" # list of dockers, separated by space, to start once mergerfs mount verified. Remember to disable AUTOSTART for dockers added in docker settings page
#MountFolders=\{"downloads/complete,downloads/intermediate,downloads/seeds,movies,tv"\} # comma separated list of folders to create within the mount

# Note: Again - remember to NOT use ':' in your remote name above

# OPTIONAL SETTINGS

# Add extra paths to mergerfs mount in addition to LocalFilesShare
LocalFilesShare2="ignore" # without trailing slash e.g. /mnt/user/other__remote_mount/or_other_local_folder. Enter 'ignore' to disable
LocalFilesShare3="ignore"
LocalFilesShare4="ignore"

# Add extra commands or filters
Command1="--vfs-read-ahead 30G"
Command2=""
Command3=""
Command4=""
Command5=""
Command6=""
Command7=""
Command8=""

CreateBindMount="N" # Y/N. Choose whether to bind traffic to a particular network adapter
RCloneMountIP="192.168.1.252" # My unraid IP is 172.30.12.2 so I create another similar IP address
NetworkAdapter="eth0" # choose your network adapter. eth0 recommended
VirtualIPNumber="2" # creates eth0:x e.g. eth0:1. I create a unique virtual IP addresses for each mount & upload so I can monitor and traffic shape for each of them

####### END SETTINGS #######

###############################################################################
##### DO NOT EDIT ANYTHING BELOW UNLESS YOU KNOW WHAT YOU ARE DOING #######
###############################################################################

####### Preparing mount location variables #######
RcloneMountLocation="$RcloneMountShare/$RcloneRemoteName" # Location for rclone mount
LocalFilesLocation="$LocalFilesShare/$RcloneRemoteName" # Location for local files to be merged with rclone mount
MergerFSMountLocation="$MergerfsMountShare/$RcloneRemoteName" # Rclone data folder location

####### create directories for rclone mount and mergerfs mounts #######
mkdir -p /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName # for script files
mkdir -p $RcloneCacheShare/cache/$RcloneRemoteName # for cache files
if [[ $LocalFilesShare == 'ignore' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Not creating local folders as requested."
    LocalFilesLocation="/tmp/$RcloneRemoteName"
    eval mkdir -p $LocalFilesLocation
else
    echo "$(date "+%d.%m.%Y %T") INFO: Creating local folders."
    eval mkdir -p $LocalFilesLocation
fi
mkdir -p $RcloneMountLocation

if [[ $MergerfsMountShare == 'ignore' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Not creating MergerFS folders as requested."
else
    echo "$(date "+%d.%m.%Y %T") INFO: Creating MergerFS folders."
    mkdir -p $MergerFSMountLocation
fi

####### Check if script is already running #######
echo "$(date "+%d.%m.%Y %T") INFO: *** Starting mount of remote ${RcloneRemoteName}"
echo "$(date "+%d.%m.%Y %T") INFO: Checking if this script is already running."
if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Exiting script as already running."
    exit
else
    echo "$(date "+%d.%m.%Y %T") INFO: Script not running - proceeding."
    touch /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
fi

####### Checking have connectivity #######
echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if online"
ping -q -c2 google.com > /dev/null # -q quiet, -c number of pings to perform
if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
    echo "$(date "+%d.%m.%Y %T") PASSED: *** Internet online"
else
    echo "$(date "+%d.%m.%Y %T") FAIL: *** No connectivity. Will try again on next run"
    rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
    exit
fi

####### Create Rclone Mount #######

# Check If Rclone Mount Already Created
if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Success ${RcloneRemoteName} remote is already mounted."
else
    echo "$(date "+%d.%m.%Y %T") INFO: Mount not running. Will now mount ${RcloneRemoteName} remote."
    # Creating mountcheck file in case it doesn't already exist
    echo "$(date "+%d.%m.%Y %T") INFO: Recreating mountcheck file for ${RcloneRemoteName} remote."
    touch mountcheck
    rclone copy mountcheck $RcloneRemoteName: -vv --no-traverse
    # Check bind option
    if [[ $CreateBindMount == 'Y' ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if IP address ${RCloneMountIP} already created for remote ${RcloneRemoteName}"
        ping -q -c2 $RCloneMountIP > /dev/null # -q quiet, -c number of pings to perform
        if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
            echo "$(date "+%d.%m.%Y %T") INFO: *** IP address ${RCloneMountIP} already created for remote ${RcloneRemoteName}"
        else
            echo "$(date "+%d.%m.%Y %T") INFO: *** Creating IP address ${RCloneMountIP} for remote ${RcloneRemoteName}"
            ip addr add $RCloneMountIP/24 dev $NetworkAdapter label $NetworkAdapter:$VirtualIPNumber
        fi
        echo "$(date "+%d.%m.%Y %T") INFO: *** Created bind mount ${RCloneMountIP} for remote ${RcloneRemoteName}"
    else
        RCloneMountIP=""
        echo "$(date "+%d.%m.%Y %T") INFO: *** Creating mount for remote ${RcloneRemoteName}"
    fi
    # create rclone mount
    rclone mount \
        $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
        --allow-other \
        --dir-cache-time $RcloneMountDirCacheTime \
        --log-level INFO \
        --poll-interval 15s \
        --cache-dir=$RcloneCacheShare/cache/$RcloneRemoteName \
        --vfs-cache-mode full \
        --vfs-cache-max-size $RcloneCacheMaxSize \
        --vfs-cache-max-age $RcloneCacheMaxAge \
        --bind=$RCloneMountIP \
        $RcloneRemoteName: $RcloneMountLocation &

    # Check if Mount Successful
    echo "$(date "+%d.%m.%Y %T") INFO: sleeping for 5 seconds"
    # slight pause to give mount time to finalise
    sleep 5
    echo "$(date "+%d.%m.%Y %T") INFO: continuing..."
    if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Successful mount of ${RcloneRemoteName} mount."
    else
        echo "$(date "+%d.%m.%Y %T") CRITICAL: ${RcloneRemoteName} mount failed - please check for problems. Stopping dockers"
        docker stop $DockerStart
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
        exit
    fi
fi

####### Start MergerFS Mount #######

if [[ $MergerfsMountShare == 'ignore' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Not creating mergerfs mount as requested."
else
    if [[ -f "$MergerFSMountLocation/mountcheck" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Check successful, ${RcloneRemoteName} mergerfs mount in place."
    else
        # check if mergerfs already installed
        if [[ -f "/bin/mergerfs" ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs already installed, proceeding to create mergerfs mount"
        else
            # Build mergerfs binary
            echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs not installed - installing now."
            mkdir -p /mnt/user/appdata/other/rclone/mergerfs
            docker run -v /mnt/user/appdata/other/rclone/mergerfs:/build --rm trapexit/mergerfs-static-build
            mv /mnt/user/appdata/other/rclone/mergerfs/mergerfs /bin
            # check if mergerfs install successful
            echo "$(date "+%d.%m.%Y %T") INFO: *sleeping for 5 seconds"
            sleep 5
            if [[ -f "/bin/mergerfs" ]]; then
                echo "$(date "+%d.%m.%Y %T") INFO: Mergerfs installed successfully, proceeding to create mergerfs mount."
            else
                echo "$(date "+%d.%m.%Y %T") ERROR: Mergerfs not installed successfully. Please check for errors. Exiting."
                rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
                exit
            fi
        fi
        # Create mergerfs mount
        echo "$(date "+%d.%m.%Y %T") INFO: Creating ${RcloneRemoteName} mergerfs mount."
        # Extra Mergerfs folders
        if [[ $LocalFilesShare2 != 'ignore' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare2} to ${RcloneRemoteName} mergerfs mount."
            LocalFilesShare2=":$LocalFilesShare2"
        else
            LocalFilesShare2=""
        fi
        if [[ $LocalFilesShare3 != 'ignore' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare3} to ${RcloneRemoteName} mergerfs mount."
            LocalFilesShare3=":$LocalFilesShare3"
        else
            LocalFilesShare3=""
        fi
        if [[ $LocalFilesShare4 != 'ignore' ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Adding ${LocalFilesShare4} to ${RcloneRemoteName} mergerfs mount."
            LocalFilesShare4=":$LocalFilesShare4"
        else
            LocalFilesShare4=""
        fi
        # make sure mergerfs mount point is empty
        mv $MergerFSMountLocation $LocalFilesLocation
        mkdir -p $MergerFSMountLocation
        # mergerfs mount command
        mergerfs $LocalFilesLocation:$RcloneMountLocation$LocalFilesShare2$LocalFilesShare3$LocalFilesShare4 $MergerFSMountLocation -o rw,async_read=false,use_ino,allow_other,func.getattr=newest,category.action=all,category.create=ff,cache.files=partial,dropcacheonclose=true
        # check if mergerfs mount successful
        echo "$(date "+%d.%m.%Y %T") INFO: Checking if ${RcloneRemoteName} mergerfs mount created."
        if [[ -f "$MergerFSMountLocation/mountcheck" ]]; then
            echo "$(date "+%d.%m.%Y %T") INFO: Check successful, ${RcloneRemoteName} mergerfs mount created."
        else
            echo "$(date "+%d.%m.%Y %T") CRITICAL: ${RcloneRemoteName} mergerfs mount failed. Stopping dockers."
            docker stop $DockerStart
            rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
            exit
        fi
    fi
fi

####### Starting Dockers That Need Mergerfs Mount To Work Properly #######

# only start dockers once
if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/dockers_started" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: dockers already started."
else
    # Check CA Appdata plugin not backing up or restoring
    if [ -f "/tmp/ca.backup2/tempFiles/backupInProgress" ] || [ -f "/tmp/ca.backup2/tempFiles/restoreInProgress" ] ; then
        echo "$(date "+%d.%m.%Y %T") INFO: Appdata Backup plugin running - not starting dockers."
    else
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/dockers_started
        echo "$(date "+%d.%m.%Y %T") INFO: Starting dockers."
        docker start $DockerStart
    fi
fi

rm /mnt/user/appdata/other/rclone/remotes/$RcloneRemoteName/mount_running
echo "$(date "+%d.%m.%Y %T") INFO: Script complete"

exit

Sorry for the late reply, I've been away. Have you gotten it to work yet? I noticed you're on version 0.96.9.2 instead of 0.96.9.3, which fixed some permission issues with the umask. Update your script to the latest version and let us know if you're still having issues.

-

7 hours ago, FabrizioMaurizio said:

Hi all, I updated from 3.9 to 3.11 recently and now I don't have write permission to the mergerfs folder. I tried Docker Safe New Perm but it didn't help. Any suggestions?

Can you post your mount script and file permissions of your directories?
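One quick way to collect those permissions is to run `stat` on each share the mount script uses. The helper below is a hypothetical sketch and assumes GNU `stat`. The paths in the commented example come from the mount script posted earlier on this page and may differ on your system. On Unraid, user shares are normally owned by nobody:users.

```shell
#!/bin/bash
# Hypothetical helper: print mode, owner:group and path for each argument.
# Uses GNU coreutils stat (-c format string).
show_perms() {
    local path
    for path in "$@"; do
        if [[ -e "$path" ]]; then
            stat -c '%A %U:%G %n' "$path"
        else
            echo "missing: $path"
        fi
    done
}

# Paths from the posted mount script; adjust to your own shares:
# show_perms /mnt/user/mount_rclone /mnt/user/mount_mergerfs /mnt/user/gdrive_upload
```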

-

14 hours ago, DZMM said:

Is anyone else having a problem (I think since 6.11) where files become briefly unavailable? I used to run my script via cron every couple of mins, but I've found over the last couple of days or so that the mount will be up but Plex etc need manually restarting i.e. I think the files became unavailable for such a short period of time, that my script missed the event and didn't stop and restart my dockers?

I'm wondering if there's a better way than looking for the mountcheck file e.g. write a plex script that sits alongside the current script that says stop plex if files become unavailable and they rely on the existing script to restart plex?

I haven't been having issues with that. It's been running pretty well, actually. Maybe add a log file and change the log level to DEBUG to see if there is any indication of what's going on when it happens? It could also be an issue with mergerfs.

--log-level DEBUG \
--log-file=/var/log/rclone \
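DZMM's alternative is a small watcher that reacts as soon as the files disappear instead of waiting for the next cron run. It could look roughly like the hypothetical sketch below. The function names, poll interval, and example path are assumptions, and the `docker stop` call is left as a comment for you to fill in with your own containers. One caveat: on a hung FUSE mount, even the file test can block rather than fail. This approach catches files disappearing, but not necessarily a frozen mount.

```shell
#!/bin/bash
# Hypothetical watchdog, meant to run alongside (not replace) the mount script.

# Succeeds while the mountcheck file is visible under the given directory.
mount_ok() {
    [[ -f "$1/mountcheck" ]]
}

# Polls every <interval> seconds and calls <handler> once the file is gone.
watch_mount() {
    local dir="$1" interval="$2" handler="$3"
    while mount_ok "$dir"; do
        sleep "$interval"
    done
    "$handler" "$dir"
}

# Example handler: log the event; stopping containers is left to you.
on_mount_lost() {
    echo "$(date "+%d.%m.%Y %T") CRITICAL: mountcheck missing under $1"
    # docker stop plex
}

# Example (path is an assumption; adjust to your mount):
# watch_mount /mnt/user/mount_rclone/gdrive_vfs 10 on_mount_lost
```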

-


On 10/4/2022 at 6:07 PM, francrouge said:

Hi, yes, in Krusader I have no problem, but on Windows with network shares it's not working anymore.

I can't edit, rename, delete, etc. on the gdrive mount from Windows; my local shares are OK.

I will also add my mount and upload scripts.

Maybe I'm missing something.

Should I try the new permissions feature, do you think?

thx

Attachments: upload.txt (10.07 kB), mount.txt (11.42 kB), unraid-diagnostics-20221004-1818.zip (295.72 kB)

Are you accessing your content through the "mount_rclone" folder? Is it shown under the unRAID shares tab? If so, what are the share settings for the folder?

-

Has anybody had any problems running the scripts on 6.11? Does the mergerfs install still work without NerdPack?

So far, I haven’t had any issues. I actually thought it was loading some content faster, but that’s just my opinion with what I’ve tested. No proof in that statement.

-

23 hours ago, DZMM said:

Wow! That's taking advantage of the service haha! I hope the unlimited plan sticks. If they switch to 5TB per user, it's going to kind of suck. We may have to look at establishing a library and sharing the encryption keys among people who pay to have access. I have something like that set up for a remote server at the moment.

-


7 hours ago, DZMM said:

Did you notice they updated the services summary to 5TB for 5 or more users instead of unlimited? I feel like restrictions will be coming in the future...

-

1 hour ago, francrouge said:

1 Gbps up / 1 Gbps down fiber, 1080p


How long is the loading time for your movie? Is the file over 30GB? Could you post your mount script?

[Support] A75G Repo

in Docker Containers


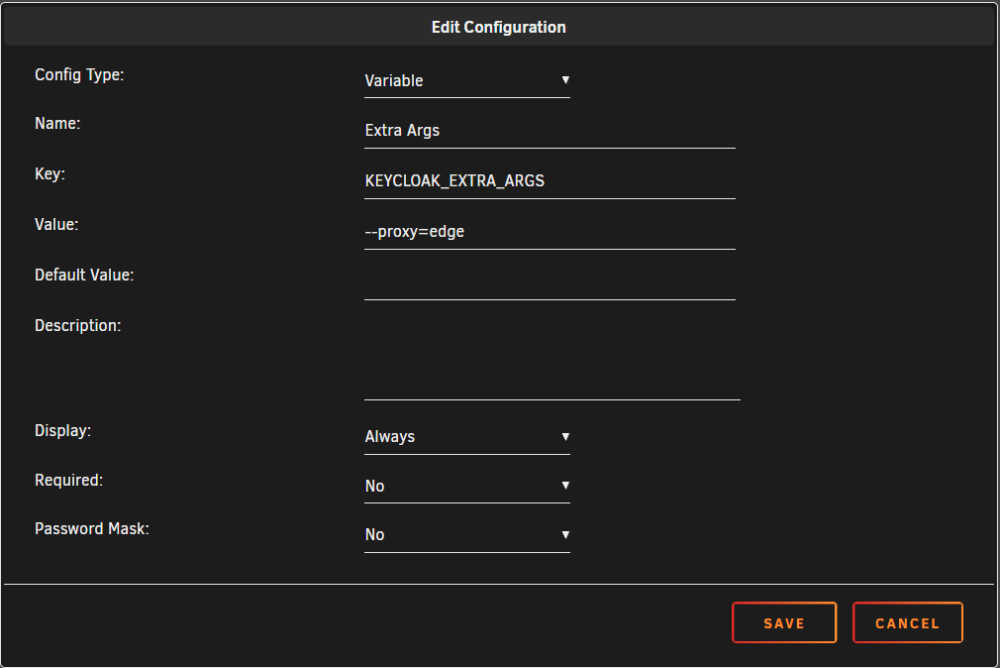

If you have everything else working and only the Admin page fails to load, you need to add the Extra Argument variable mentioned in the bitnami documentation, with the value --proxy=edge. Here is a screenshot of it for reference.