gberg

Posts: 132


Posts posted by gberg

-

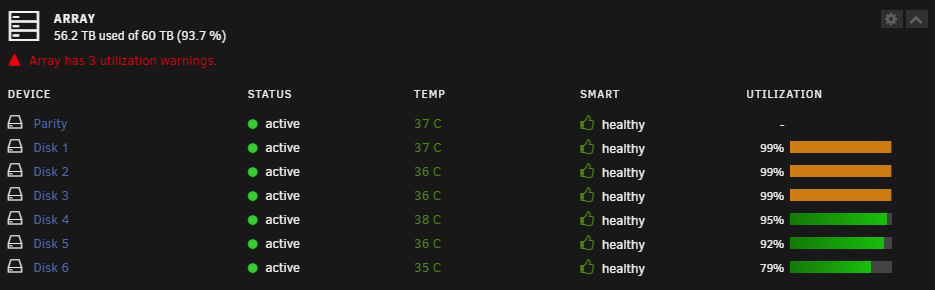

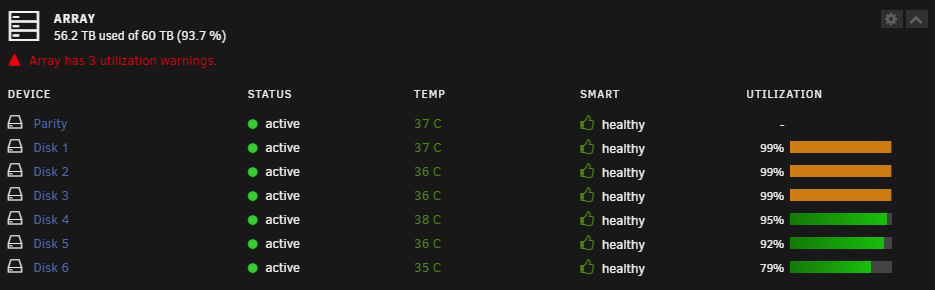

Since upgrading Unraid from version 6.10.3 to 6.12.6, I get array utilization warnings.

I get the warning on three disks. I have set "Default warning disk utilization threshold (%):" to 99%, and the three disks getting the warning are 10TB with 9.88TB used, which means there is more than 1% free space.

Still, the dashboard says these disks are 99% used.

Does this mean I need more than 150GB of free space for the utilization not to be rounded up to 99%?
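A minimal sketch of the rounding hypothesis, assuming the dashboard rounds the used percentage to the nearest whole number (half up) and treating a "10TB" disk as 10,000 GB. Neither detail is taken from Unraid's source, and both function names are hypothetical.

```python
import math

def displayed_utilization(used_gb: float, size_gb: float) -> int:
    # Assumption: the used percentage is rounded half up to a whole number,
    # which matches what the dashboard showed here (98.8 % -> 99 %).
    return math.floor(used_gb / size_gb * 100 + 0.5)

def min_free_gb_to_show(target_pct: int, size_gb: float) -> float:
    # The rounded value only drops to target_pct once used % < target_pct + 0.5,
    # so free space must be strictly MORE than the returned value.
    return size_gb * (100 - (target_pct + 0.5)) / 100

size_gb = 10_000  # nominal 10TB disk in decimal GB (formatted capacity is a bit smaller)
print(displayed_utilization(9_880, size_gb))  # 99, which meets a 99 % warning threshold
print(min_free_gb_to_show(98, size_gb))       # 150.0
```

Under this assumption, 9.88TB used out of 10TB is 98.8%, which displays as 99%, and the display only drops to 98% with more than 150GB free.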

I think it would be nice to be able to set the utilization thresholds in GB rather than as a percentage. What do you think?
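To illustrate the suggestion, a hypothetical comparison of a percentage check with a GB-based one. `Disk`, `warn_by_percent` and `warn_by_free_gb` are made-up names, the GB threshold is only the proposal above (not an existing Unraid setting), and the rounding again assumes round half up.

```python
import math
from dataclasses import dataclass

@dataclass
class Disk:
    name: str
    size_gb: float
    used_gb: float

    @property
    def free_gb(self) -> float:
        return self.size_gb - self.used_gb

def warn_by_percent(disk: Disk, threshold_pct: int) -> bool:
    # Rounded percentage compared with the threshold (assumed dashboard behaviour).
    pct = math.floor(disk.used_gb / disk.size_gb * 100 + 0.5)
    return pct >= threshold_pct

def warn_by_free_gb(disk: Disk, min_free_gb: float) -> bool:
    # Proposed alternative: warn only when absolute free space drops below a limit.
    return disk.free_gb < min_free_gb

disk = Disk("disk1", size_gb=10_000, used_gb=9_880)  # 120 GB free
print(warn_by_percent(disk, 99), warn_by_free_gb(disk, 100))  # True False
```

With a GB limit, the same 120GB of free space would not trigger a warning on a 100GB threshold, regardless of how the percentage is rounded.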

-

I just upgraded to version 6.12.6 and everything seems to have gone alright.

-

I haven't updated my Unraid in a while and I'm currently running 6.10.3.

I read about the out-of-date plugins issue in the 6.12.6 release notes, and I have three plugins that I can't update because my Unraid version is too low. The out-of-date plugins are:

Community Applications (2023.02.17), SNMP (2021.05.21) and Unassigned Devices (2023.02.20)

Do you think this will cause any issues?

-

It would be really nice if the APC UPS Daemon dashboard widget could show a historical graph of the UPS load, and also the average load.

Maybe it's possible to set that up somehow?
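Until the widget supports this, one possible workaround is to sample the load yourself. A minimal sketch, assuming the `LOADPCT` line printed by apcupsd's `apcaccess status` (for example `LOADPCT  : 14.0 Percent`); `parse_load_pct` and `LoadHistory` are hypothetical names, and the graphing itself is left out.

```python
from collections import deque
from statistics import mean
from typing import Deque, Optional

def parse_load_pct(apcaccess_output: str) -> Optional[float]:
    """Extract the LOADPCT value from `apcaccess status` text, if present."""
    for line in apcaccess_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "LOADPCT" and value.split():
            return float(value.split()[0])
    return None

class LoadHistory:
    """Keeps the most recent samples so a graph and an average can be drawn."""

    def __init__(self, max_samples: int) -> None:
        self.samples: Deque[float] = deque(maxlen=max_samples)

    def add(self, pct: float) -> None:
        self.samples.append(pct)  # the oldest sample is dropped once full

    def average(self) -> float:
        return mean(self.samples) if self.samples else 0.0

sample = "STATUS   : ONLINE\nLOADPCT  : 14.0 Percent\nBCHARGE  : 100.0 Percent\n"
history = LoadHistory(max_samples=1440)  # e.g. one sample per minute for 24 hours
load = parse_load_pct(sample)
if load is not None:
    history.add(load)
print(history.average())  # 14.0
```

On the server, the text could come from something like `subprocess.run(["apcaccess", "status"], capture_output=True, text=True).stdout` on a schedule; the sample string just keeps the sketch self-contained.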

-

No idea. It's not a very powerful CPU, but should it be this bad?

-

Hi,

Is it normal to have really high CPU utilization when copying files from one share to another?

From a Windows computer on the network, I'm copying files from one share to another on my Unraid NAS, and this is what the CPU utilization looks like:

-

Well, you can run pfSense without AES-NI, but AES-NI sure helps, especially if you're running VPNs.
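On a Linux host, one quick way to check whether a CPU advertises AES-NI is to look for the `aes` flag in `/proc/cpuinfo`. A minimal sketch; `has_aes_ni` is a hypothetical helper, and since pfSense itself runs on FreeBSD (no `/proc/cpuinfo`), this only applies when checking the CPU from a Linux system such as Unraid.

```python
def has_aes_ni(cpuinfo_text: str) -> bool:
    """Return True if the first x86 'flags' line lists the 'aes' flag."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # e.g. "flags\t\t: fpu vme ... aes avx ..."
            return "aes" in line.split(":", 1)[1].split()
    return False
```

Usage on the host: `with open("/proc/cpuinfo") as f: print(has_aes_ni(f.read()))`. Splitting on whitespace avoids false matches on longer flag names that merely contain "aes".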

-

I would also like to see this, perhaps there is a plugin that can do this?

-

I'm planning to add a new, larger parity drive to my array.

Today all my drives are 10TB: one parity drive and six data drives. I plan to add a new 18TB parity drive so I can swap my existing data drives for larger ones in the future, and after the parity check is done I'll add the old parity drive as a data drive.

My question is how the parity check will work when the parity drive is 18TB. Will it do an 18TB parity check, taking almost twice as long as before even though the data drives are only 10TB, or will it do a 10TB parity check until I actually add a larger data drive?

-

Earlier I bought WD My Books because they were the cheapest option, but now they are not.

I'm thinking about getting Seagate Exos X18 18TB drives, which are about the same price per TB as I paid for the 10TB WD My Books.

-

No, I don't currently use it, but I'm planning to add a new, larger parity drive as I'm running out of drive bays.

But what would the Parity Check Tuning plugin do? I mean, it can't make the disks faster.

-

Is anyone using an 18TB parity drive? If so, how long does a parity check take to complete?

With my 10TB parity drive, the parity check usually takes about 19 hours and 30 minutes if there is not too much disk activity during the check.
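For comparison, the 10TB figure above works out to an average of roughly 142 MB/s. A rough sketch of that arithmetic, plus a naive linear estimate for an 18TB parity drive that assumes the same average throughput (decimal units). Newer, denser drives may well be faster, so treat the estimate as a ballpark rather than a prediction; both helpers are hypothetical.

```python
def avg_throughput_mb_s(size_tb: float, hours: float) -> float:
    # Decimal units: 1 TB = 1,000,000 MB.
    return size_tb * 1_000_000 / (hours * 3600)

def naive_estimate_hours(size_tb: float, ref_size_tb: float, ref_hours: float) -> float:
    # Assumes the check time scales linearly with size at the same throughput.
    return ref_hours * size_tb / ref_size_tb

ref_hours = 19.5  # measured 10TB parity check (19 h 30 min)
print(round(avg_throughput_mb_s(10, ref_hours)))  # 142
print(naive_estimate_hours(18, 10, ref_hours))    # 35.1
```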

-

Ok, that sounds promising, thanks!

-

I have 7 shucked white WD My Book 10TB drives running in my Unraid. The oldest 4 of them are just over two years old now and running just fine, and the newest one has been running for about 6 months.

How long have you had yours running, and how long do you think one can expect them to last?

-

I'm not sure there are any issues with the NUT plugin; many people use it.

Personally I prefer APCUPSD, but it mainly works with APC UPS models.

-

If you can't read the flash content from Unraid, I guess you have an issue there. Have you tried it in another USB port?

But I'm not really sure how Unraid reacts when you unplug and replug the flash drive while it's running. Maybe Unraid needs to be rebooted for the flash drive to be detected?

-

What do you mean by "disk 2 cannot be displayed in the disk selection in the sharing"?

-

Do you have any flash drive backups? I always try to make one once a month or whenever I make config changes.

-

It would be great if /mnt/user and/or /mnt/user0 were also reported over SNMP. Is that possible?
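For reference, the numbers such a report would need can be read locally with `os.statvfs`. A minimal sketch, assuming it runs on the Unraid server and that the `/mnt/user` mount reports aggregate figures for the array (as `df /mnt/user` appears to); `usage_gb` is a hypothetical helper, and wiring it into SNMP is not shown.

```python
import os
from typing import Tuple

def usage_gb(path: str) -> Tuple[float, float, float]:
    """Return (size, used, free) in decimal GB for the filesystem holding path."""
    st = os.statvfs(path)
    size = st.f_blocks * st.f_frsize
    used = (st.f_blocks - st.f_bfree) * st.f_frsize
    free = st.f_bavail * st.f_frsize  # space available to unprivileged users
    return size / 1e9, used / 1e9, free / 1e9
```

Usage on the server: `size, used, free = usage_gb("/mnt/user")`, or the same call with `/mnt/user0` to leave out the cache pool.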

-

Is it possible for Unraid to report the total size and free space of the entire array over SNMP, instead of just the individual disks?

This is what it looks like using Observium:

-

14 hours ago, Frank1940 said:

I use the preclear Docker and I try to get about 70+ hours on the hard drive. My objective is to make sure that the HD is not going to fail because of infant mortality. I do this for two reasons.

1-- I test as soon as I get the disk. I only purchase from vendors who provide for 'no-hassle' returns within X days of purchase for DOA drives. This means I don't have to deal with warranty issues with the manufacturer.

2-- I have a policy that I want a cold spare ready for installation if a disk 'red-balls'. I pull the disk and replace with the cold spare and start the rebuild. When that is complete and the server is working normally again, I can take my time to test and determine what to do with 'defective' disk...

Ok, that seems like a smart strategy.

For my 10TB WD My Book it takes about 56 hours for each pass (pre-read, zeroing and post-read), so two passes might be more than enough.
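The arithmetic behind "two passes": at 56 hours per pass, one pass falls short of the 70+ hours Frank1940 aims for, while two passes give 112 hours. A trivial sketch; `passes_needed` is a hypothetical helper.

```python
import math

def passes_needed(target_hours: float, hours_per_pass: float) -> int:
    # Smallest whole number of full passes that reaches the burn-in target.
    return math.ceil(target_hours / hours_per_pass)

hours_per_pass = 56  # pre-read + zeroing + post-read on a 10TB WD My Book
target_hours = 70    # Frank1940's "70+ hours" of testing
n = passes_needed(target_hours, hours_per_pass)
print(n, n * hours_per_pass)  # 2 112
```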

And I have also thought about having a cold spare at hand; maybe not a bad idea.

Disk utilization - is the percentage rounded?

in General Support


I've tested this now by freeing up some more space on the disks. Once there is more than 150GB free on my 10TB disks, the utilization drops to 98% and the warnings go away.