Posts posted by timekiller

-

Ok, this started with issues last week discussed in this thread. Well, today I rebooted my server and it took WAY too long to boot up. I attached a monitor and saw the attached screen. When it did come up the array started ok, but after a few minutes I got a notification saying

USB flash drive failure: 20-09-2019 17:19 Alert [STORAGE] - USB drive is not read-write Ultra_Fit (sda)

So... time to replace my USB stick?

Diagnostics attached.
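A FAT filesystem that runs into errors is often remounted read-only by the kernel, which would match the "not read-write" alert. A minimal sketch for confirming how the flash drive is currently mounted, assuming it is mounted at `/boot` (as the plugin paths in these posts suggest). The function name `mount_mode` is hypothetical, and the mounts file is a parameter only so the function can be tried against sample data.

```shell
# Hypothetical helper: print "ro" or "rw" for a mount point, reading a
# /proc/mounts-style file (fields: device, mount point, type, options, ...).
mount_mode() {
    mount_point=$1
    mounts_file=${2:-/proc/mounts}
    awk -v mp="$mount_point" '
        $2 == mp {
            # the 4th field is a comma-separated option list
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++)
                if (opts[i] == "ro" || opts[i] == "rw") mode = opts[i]
        }
        END { if (mode != "") print mode }
    ' "$mounts_file"
}

# Usage on the server (assumes the flash drive is mounted at /boot):
#   mount_mode /boot
```

If this prints `ro`, the stick may still be fine physically; a filesystem check from another machine is a reasonable next step before replacing it.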

-

Ok, final update:

The system finished booting and the rclone plugin was back in the plugins section. I removed it (again) and the `rclone.plg` file disappeared. For good measure I also deleted the 2 rclone folders in the plugins directory on the USB stick. I rebooted (non safe mode) and the system came up in a normal time frame. All the drives mounted ok, and the shares are back! So now it looks like I'm ok. Going to let the parity sync complete before I mess around anymore. Should be about 2 days to finish.

I'm assuming USB stick corruption is the root cause here. Not sure how that happened. When the system settles down I'll try the rclone plugin again.

Thanks for all the help!

-

After the fsck I reinserted the usb drive and rebooted without safe mode and the boot is still hung where it was hanging before. I took a picture of the screen where it's stuck. I also was able to ssh in at this point so I got a process list:

root@Storage:~# ps auxwwwww USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND root 1 22.1 0.0 2460 764 ? Ss 09:42 0:16 init root 2 0.0 0.0 0 0 ? S 09:42 0:00 [kthreadd] root 3 0.0 0.0 0 0 ? I< 09:42 0:00 [rcu_gp] root 4 0.0 0.0 0 0 ? I< 09:42 0:00 [rcu_par_gp] root 5 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/0:0-events] root 6 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/0:0H-kblockd] root 7 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/u16:0-events_freezable_power_] root 8 0.0 0.0 0 0 ? I< 09:42 0:00 [mm_percpu_wq] root 9 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/0] root 10 0.0 0.0 0 0 ? I 09:42 0:00 [rcu_sched] root 11 0.0 0.0 0 0 ? I 09:42 0:00 [rcu_bh] root 12 0.0 0.0 0 0 ? S 09:42 0:00 [migration/0] root 13 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/0] root 14 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/1] root 15 0.0 0.0 0 0 ? S 09:42 0:00 [migration/1] root 16 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/1] root 17 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/1:0-events] root 18 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/1:0H-kblockd] root 19 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/2] root 20 0.0 0.0 0 0 ? S 09:42 0:00 [migration/2] root 21 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/2] root 22 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/2:0-events] root 23 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/2:0H-kblockd] root 24 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/3] root 25 0.0 0.0 0 0 ? S 09:42 0:00 [migration/3] root 26 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/3] root 27 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/3:0-rcu_gp] root 28 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/3:0H-kblockd] root 29 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/4] root 30 0.0 0.0 0 0 ? S 09:42 0:00 [migration/4] root 31 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/4] root 32 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/4:0-rcu_gp] root 33 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/4:0H] root 34 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/5] root 35 0.0 0.0 0 0 ? S 09:42 0:00 [migration/5] root 36 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/5] root 37 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/5:0-events] root 38 0.0 0.0 0 0 ? 
I< 09:42 0:00 [kworker/5:0H-kblockd] root 39 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/6] root 40 0.0 0.0 0 0 ? S 09:42 0:00 [migration/6] root 41 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/6] root 42 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/6:0-pm] root 43 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/6:0H-kblockd] root 44 0.0 0.0 0 0 ? S 09:42 0:00 [cpuhp/7] root 45 0.0 0.0 0 0 ? S 09:42 0:00 [migration/7] root 46 0.0 0.0 0 0 ? S 09:42 0:00 [ksoftirqd/7] root 47 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/7:0-events] root 48 0.0 0.0 0 0 ? I< 09:42 0:00 [kworker/7:0H-kblockd] root 49 0.0 0.0 0 0 ? S 09:42 0:00 [kdevtmpfs] root 50 0.0 0.0 0 0 ? I< 09:42 0:00 [netns] root 51 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/u16:1-nvme-wq] root 55 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/u16:2-nvme-wq] root 60 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/1:1-mm_percpu_wq] root 64 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/u16:3-nvme-wq] root 65 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/0:1] root 71 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/2:1-mm_percpu_wq] root 90 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/5:1-mm_percpu_wq] root 97 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/4:1-mm_percpu_wq] root 101 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/7:1-mm_percpu_wq] root 152 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/6:1-mm_percpu_wq] root 321 0.0 0.0 0 0 ? S 09:42 0:00 [oom_reaper] root 322 0.0 0.0 0 0 ? I< 09:42 0:00 [writeback] root 324 0.0 0.0 0 0 ? S 09:42 0:00 [kcompactd0] root 325 0.0 0.0 0 0 ? SN 09:42 0:00 [ksmd] root 326 0.0 0.0 0 0 ? SN 09:42 0:00 [khugepaged] root 327 0.0 0.0 0 0 ? I< 09:42 0:00 [crypto] root 328 0.0 0.0 0 0 ? I< 09:42 0:00 [kintegrityd] root 330 0.0 0.0 0 0 ? I< 09:42 0:00 [kblockd] root 333 0.0 0.0 0 0 ? I 09:42 0:00 [kworker/3:1-mm_percpu_wq] root 655 0.0 0.0 0 0 ? I< 09:42 0:00 [ata_sff] root 677 0.0 0.0 0 0 ? I< 09:42 0:00 [edac-poller] root 679 0.0 0.0 0 0 ? I< 09:42 0:00 [devfreq_wq] root 829 0.0 0.0 0 0 ? S 09:43 0:00 [kswapd0] root 955 0.0 0.0 0 0 ? I< 09:43 0:00 [kthrotld] root 1064 0.0 0.0 0 0 ? 
I< 09:43 0:00 [vfio-irqfd-clea] root 1146 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:4-events_power_efficient] root 1165 0.0 0.0 0 0 ? I< 09:43 0:00 [ipv6_addrconf] root 1194 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/6:2-events] root 1197 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_0] root 1198 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_0] root 1199 0.2 0.0 0 0 ? D 09:43 0:00 [usb-storage] root 1359 0.3 0.0 9132 3652 ? Ss 09:43 0:00 /sbin/udevd --daemon root 1426 0.0 0.0 0 0 ? S< 09:43 0:00 [loop0] root 1427 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/2:1H-kblockd] root 1428 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/5:1H-kblockd] root 1429 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/4:1H-kblockd] root 1430 0.0 0.0 0 0 ? S< 09:43 0:00 [loop1] root 1431 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/3:1H-kblockd] root 1454 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/0:1H-kblockd] root 1469 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/1:1H-kblockd] root 1481 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/7:1H-kblockd] root 1488 0.0 0.0 0 0 ? I< 09:43 0:00 [kworker/6:1H-kblockd] root 1489 0.0 0.0 0 0 ? I< 09:43 0:00 [acpi_thermal_pm] root 1490 0.0 0.0 0 0 ? I< 09:43 0:00 [nvme-wq] root 1491 0.0 0.0 0 0 ? I< 09:43 0:00 [nvme-reset-wq] root 1492 0.0 0.0 0 0 ? I< 09:43 0:00 [nvme-delete-wq] root 1496 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_1] root 1497 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_1] root 1498 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_2] root 1499 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_2] root 1500 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_3] root 1501 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_3] root 1502 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_4] root 1503 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_4] root 1504 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:5-events_unbound] root 1505 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:6-events_freezable_power_] root 1506 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:7-events_freezable_power_] root 1507 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:8-events_power_efficient] root 1508 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_5] root 1509 0.0 0.0 0 0 ? 
I< 09:43 0:00 [scsi_tmf_5] root 1510 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_6] root 1511 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_6] root 1512 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_7] root 1513 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_7] root 1514 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_8] root 1515 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_8] root 1516 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:9-events_freezable_power_] root 1517 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:10-events_freezable_power_] root 1518 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:11-events_freezable_power_] root 1519 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:12-events_freezable_power_] root 1520 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_9] root 1521 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_9] root 1522 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_10] root 1523 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_10] root 1524 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_11] root 1525 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_11] root 1526 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_12] root 1527 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_12] root 1528 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:13-events_freezable_power_] root 1529 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:14-events_freezable_power_] root 1530 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:15-events_unbound] root 1531 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:16-events_freezable_power_] root 1532 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:17-events_unbound] root 1533 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_13] root 1534 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_13] root 1535 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_14] root 1536 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_14] root 1537 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_15] root 1538 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_15] root 1539 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_16] root 1540 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_16] root 1541 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:18-events_unbound] root 1542 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:19-events_unbound] root 1543 0.0 0.0 0 0 ? 
I 09:43 0:00 [kworker/u16:20-events_unbound] root 1544 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_17] root 1545 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_17] root 1546 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_18] root 1547 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_18] root 1548 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_19] root 1549 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_19] root 1550 0.0 0.0 0 0 ? S 09:43 0:00 [scsi_eh_20] root 1551 0.0 0.0 0 0 ? I< 09:43 0:00 [scsi_tmf_20] root 1552 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:21-events_freezable_power_] root 1553 0.0 0.0 0 0 ? D 09:43 0:00 [kworker/u16:22+flush-8:0] root 1554 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:23-events_freezable_power_] root 1555 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:24-nvme-wq] root 1580 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/u16:25] root 1613 0.0 0.0 0 0 ? S 09:43 0:00 [nv_queue] root 1614 0.0 0.0 0 0 ? S 09:43 0:00 [nv_queue] root 1618 0.0 0.0 0 0 ? S 09:43 0:00 [nvidia-modeset/] root 1619 0.0 0.0 0 0 ? S 09:43 0:00 [nvidia-modeset/] root 1659 0.0 0.0 3956 3168 ? Ss 09:43 0:00 /bin/sh /etc/rc.d/rc.M root 1677 0.0 0.0 215500 2216 ? Ssl 09:43 0:00 /usr/sbin/rsyslogd -i /var/run/rsyslogd.pid root 1774 0.3 0.0 8212 6392 ? Ss 09:43 0:00 /sbin/haveged -w 1024 -v 1 -p /var/run/haveged.pid root 1790 0.0 0.0 0 0 ? I< 09:43 0:00 [bond0] message+ 1829 0.0 0.0 3508 240 ? Ss 09:43 0:00 /usr/bin/dbus-daemon --system rpc 1838 0.0 0.0 3336 1952 ? Ss 09:43 0:00 /sbin/rpcbind -l -w rpc 1843 0.0 0.0 7268 5884 ? Ss 09:43 0:00 /sbin/rpc.statd root 1853 0.0 0.0 2524 1572 ? Ss 09:43 0:00 /usr/sbin/inetd root 1861 0.0 0.0 8996 2400 ? Ss 09:43 0:00 /usr/sbin/sshd ntp 1873 0.0 0.0 76988 4348 ? Ssl 09:43 0:00 /usr/sbin/ntpd -g -u ntp:ntp root 1879 0.0 0.0 2464 92 ? Ss 09:43 0:00 /usr/sbin/acpid root 1894 0.0 0.0 2532 1640 ? Ss 09:43 0:00 /usr/sbin/crond daemon 1898 0.0 0.0 2520 104 ? Ss 09:43 0:00 /usr/sbin/atd -b 15 -l 1 root 1905 0.0 0.0 23000 5060 ? Ss 09:43 0:00 /usr/sbin/nmbd -D root 1907 0.0 0.0 44692 14000 ? 
Ss 09:43 0:00 /usr/sbin/smbd -D root 1910 0.0 0.0 41560 5368 ? S 09:43 0:00 /usr/sbin/smbd -D root 1911 0.0 0.0 41552 3740 ? S 09:43 0:00 /usr/sbin/smbd -D root 1913 0.0 0.0 30956 8736 ? Ss 09:43 0:00 /usr/sbin/winbindd -D root 1915 0.0 0.0 31504 10832 ? S 09:43 0:00 /usr/sbin/winbindd -D root 1917 0.0 0.0 3932 3232 ? S 09:43 0:00 /bin/sh /etc/rc.d/rc.local root 2682 0.0 0.0 0 0 ? I 09:43 0:00 [kworker/4:2] root 5152 0.0 0.0 31456 8824 ? S 09:43 0:00 /usr/sbin/winbindd -D root 7000 0.0 0.0 3836 128 ? Ss 09:43 0:00 /usr/sbin/vnstatd --config /etc/vnstat.conf --daemon root 7776 0.0 0.0 3752 2940 ? S 09:43 0:00 /bin/bash /usr/local/sbin/installplg /boot/config/plugins/rclone.plg root 7777 0.0 0.0 2540 1604 ? S 09:43 0:00 logger root 7778 0.0 0.0 113580 24556 ? S 09:43 0:00 /usr/bin/php -q /usr/local/sbin/plugin install /boot/config/plugins/rclone.plg root 7901 0.0 0.0 3784 2760 ? S 09:43 0:00 sh -c echo ' ping -q -c2 8.8.8.8 >/dev/null if [ $? -eq 0 ] then echo "Downloading rclone" curl --connect-timeout 5 --retry 3 --retry-delay 2 --retry-max-time 30 -o /boot/config/plugins/rclone-beta/install/rclone-beta-latest.zip https://beta.rclone.org/rclone-beta-latest-linux-amd64.zip echo "Downloading certs" curl --connect-timeout 5 --retry 3 --retry-delay 2 --retry-max-time 30 -o /boot/config/plugins/rclone-beta/install/ca-certificates.new.crt https://raw.githubusercontent.com/bagder/ca-bundle/master/ca-bundle.crt else ping -q -c2 1.1.1.1 >/dev/null if [ $? 
-eq 0 ] then echo "Downloading rclone" curl --connect-timeout 5 --retry 3 --retry-delay 2 --retry-max-time 30 -o /boot/config/plugins/rclone-beta/install/rclone-beta-latest.zip https://beta.rclone.org/rclone-beta-latest-linux-amd64.zip echo "Downloading certs" curl --connect-timeout 5 --retry 3 --retry-delay 2 --retry-max-time 30 -o /boot/config/plugins/rclone-beta/install/ca-certificates.new.crt https://raw.githubusercontent.com/bagder/ca-bundle/master/ca-bundle.crt else echo "No internet - Skipping download and using existing archives" fi fi; if [ -f /boot/config/plugins/rclone-beta/install/ca-certificates.new.crt ]; then rm -f $(ls /boot/config/plugins/rclone-beta/install/ca-certificates.crt 2>/dev/null) mv /boot/config/plugins/rclone-beta/install/ca-certificates.new.crt /boot/config/plugins/rclone-beta/install/ca-certificates.crt fi; if [ -f /boot/config/plugins/rclone-beta/install/rclone-beta-latest.zip ]; then unzip /boot/config/plugins/rclone-beta/install/rclone-beta-latest.zip -d /boot/config/plugins/rclone-beta/install/ rm -f $(ls /boot/config/plugins/rclone-beta/install/rclone-beta-latest.old.zip 2>/dev/null) mv /boot/config/plugins/rclone-beta/install/rclone-beta-latest.zip /boot/config/plugins/rclone-beta/install/rclone-beta-latest.old.zip elif [ -f /boot/config/plugins/rclone-beta/install/rclone-beta-latest.old.zip ]; then unzip /boot/config/plugins/rclone-beta/install/rclone-beta-latest.old.zip -d /boot/config/plugins/rclone-beta/install/ else echo "Download failed - No existing archive found - Try again later" exit 1 fi; if [ -f /boot/config/plugins/rclone-beta/install/rclone-v*/rclone ]; then cp /boot/config/plugins/rclone-beta/install/rclone-v*/rclone /usr/sbin/rcloneorig if [ "$?" -ne "0" ]; then echo "Copy failed - is rclone running?" 
if [ -d /boot/config/plugins/rclone-beta/install/rclone-v*/ ]; then rm -rf /boot/config/plugins/rclone-beta/install/rclone-v*/ fi; rm -f $(ls /boot/config/plugins/rclone-beta/install/rclone*.txz 2>/dev/null | grep -v '\''2018.08.25'\'') exit 1 fi; else echo "Unpack failed - Please try installing/updating the plugin again" rm -f $(ls /boot/config/plugins/rclone-beta/install/rclone-beta-latest.old.zip 2>/dev/null) exit 1 fi; rm -f $(ls /boot/config/plugins/rclone-beta/install/rclone*.txz 2>/dev/null | grep -v '\''2018.08.25'\'') if [ -d /boot/config/plugins/rclone-beta/install/rclone-v*/ ]; then rm -rf /boot/config/plugins/rclone-beta/install/rclone-v*/ fi; chown root:root /usr/sbin/rcloneorig chmod 755 /usr/sbin/rcloneorig mkdir -p /etc/ssl/certs/ cp /boot/config/plugins/rclone-beta/install/ca-certificates.crt /etc/ssl/certs/ if [ ! -f /boot/config/plugins/rclone-beta/.rclone.conf ]; then touch /boot/config/plugins/rclone-beta/.rclone.conf; fi; mkdir -p /boot/config/plugins/rclone-beta/logs; mkdir -p /boot/config/plugins/rclone-beta/scripts; cp /boot/config/plugins/rclone-beta/install/scripts/* /boot/config/plugins/rclone-beta/scripts/ -R -n; mkdir -p /mnt/disks/; echo "" echo "-----------------------------------------------------------" echo " rclone-beta has been installed." echo "-----------------------------------------------------------" echo "" ' | /bin/bash root 7903 0.0 0.0 3916 3112 ? S 09:43 0:00 /bin/bash root 7913 0.7 0.0 5684 2592 ? D 09:43 0:00 unzip /boot/config/plugins/rclone-beta/install/rclone-beta-latest.zip -d /boot/config/plugins/rclone-beta/install/ root 7916 0.0 0.0 113580 24500 ? SN 09:43 0:00 php /etc/rc.d/rc.diskinfo root 8035 0.0 0.0 3840 3048 ? SN 09:43 0:00 sh -c timeout -s 9 60 smartctl --info --attributes -d sat,auto '/dev/sda' 2>/dev/null root 8036 0.0 0.0 2640 816 ? SN 09:43 0:00 timeout -s 9 60 smartctl --info --attributes -d sat,auto /dev/sda root 8037 0.0 0.0 6724 4272 ? 
DN 09:43 0:00 smartctl --info --attributes -d sat,auto /dev/sda root 8038 0.0 0.0 9216 5616 ? Rs 09:43 0:00 sshd: root@pts/0 root 8040 0.0 0.0 8136 4984 pts/0 Ss 09:43 0:00 -bash root 8047 0.0 0.0 3744 2836 ? S 09:44 0:00 /bin/sh -c /usr/local/emhttp/plugins/dynamix/scripts/monitor &> /dev/null root 8048 0.0 0.0 113516 23892 ? D 09:44 0:00 /usr/bin/php -q /usr/local/emhttp/plugins/dynamix/scripts/monitor root 8049 0.0 0.0 5856 2352 pts/0 R+ 09:44 0:00 ps auxwwwww
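In a listing this long, the rows most relevant to a hung boot are the ones in uninterruptible sleep (a STAT value starting with `D`): here `[usb-storage]`, the `flush-8:0` kworker, `unzip`, `smartctl`, and the dynamix `monitor` script, all of which touch the flash drive. A small sketch for filtering a `ps aux` listing down to those rows; the function name `d_state` is hypothetical.

```shell
# Hypothetical helper: keep the header line plus every row whose STAT
# column (the 8th field of `ps aux` output) starts with D, meaning the
# process is blocked in uninterruptible sleep, usually waiting on I/O.
d_state() {
    awk 'NR == 1 || $8 ~ /^D/'
}

# Usage on a live system:
#   ps auxww | d_state
```

Processes stuck in this state for minutes at a time usually point at the device they are waiting on rather than at the processes themselves.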

What's interesting about this is it's still running rclone related commands. I uninstalled the rclone plugin, and when I did the fsck, it purged the rclone plugin directory (at least it seemed to). But the files are still there:

    root@Storage:/boot/config/plugins# ls rclone*
    rclone.plg*

    rclone:
    logs/  scripts/

    rclone-beta:
    install/  logs/  scripts/

When the system finally finishes booting I'll be able to tell if the plugin is still showing in the UI.

Is it safe to delete these rclone files manually?

-

Yes, I created the usb on a linux machine. fsck.vfat handles fat32 just fine. I ran it and it did find some issues:

    jdonahue@desktop:~$ sudo fsck.vfat /dev/sdd1
    fsck.fat 4.1 (2017-01-24)
    0x41: Dirty bit is set. Fs was not properly unmounted and some data may be corrupt.
    1) Remove dirty bit
    2) No action
    ? 1
    /CONFIG/PLUGINS/rclone-beta/install/rclone-v1.49.0-074-gc49a71f4-beta-linux-amd64
    Start does point to root directory. Deleting dir.
    Perform changes ? (y/n) y
    /dev/sdd1: 683 files, 48116/1876010 clusters
    jdonahue@desktop:~$ sudo fsck.vfat /dev/sdd1
    fsck.fat 4.1 (2017-01-24)
    Orphaned long file name part "rclone-v1.49.0-074-gc49a71f4-beta-linux-amd64"
    1: Delete.
    2: Leave it.
    ? 1
    Perform changes ? (y/n) y
    /dev/sdd1: 682 files, 48116/1876010 clusters
    jdonahue@desktop:~$ sudo fsck.vfat /dev/sdd1
    fsck.fat 4.1 (2017-01-24)
    /dev/sdd1: 682 files, 48116/1876010 clusters

-

No windows machines, just linux. Will a fsck do the trick?

Edit: Also, why do I need to shut down? I should be able to pull the USB stick while the system is up since unraid runs in memory, right?

-

I should also mention all my UI settings have been lost. Dark mode, banner, etc. I'm looking at the default UI (even when not in safe mode).

-

Thanks ti-ti jorge. I rebooted in safe mode and was able to get the shares back. I should also note that when not booting in safe mode, it takes a LONG time to finish booting - something like 15 minutes. But booting in safe mode is nice and fast. I assume whatever plugin is blocking the mount is also delaying the boot.

I suspected it might be rclone-beta, but after removing it I still have the same issues. I've attached another diagnostic. Is there any way to disable, but not remove all the plugins (to enable one at a time)? Or is there a better way to track down the culprit?
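One way to test plugins one at a time without uninstalling them, sketched under an assumption: that plugins are installed at boot from the `.plg` files in `/boot/config/plugins` (the `installplg /boot/config/plugins/rclone.plg` call seen during boot suggests this). The helper names and the `plugins-disabled` holding directory are hypothetical; the plugins' own settings folders are left in place.

```shell
# Hypothetical helpers; the directory layout is an assumption based on the
# /boot/config/plugins/rclone.plg path seen in these posts.

# Move every .plg file from the plugin directory ($1) into a holding
# directory ($2) so none of them is picked up on the next boot.
park_plugins() {
    plugin_dir=$1
    holding_dir=$2
    mkdir -p "$holding_dir"
    for plg in "$plugin_dir"/*.plg; do
        if [ -e "$plg" ]; then
            mv "$plg" "$holding_dir"/
        fi
    done
}

# Put a single plugin ($3, given without the .plg suffix) back in place
# so that only it is installed on the next boot.
restore_plugin() {
    plugin_dir=$1
    holding_dir=$2
    mv "$holding_dir/$3.plg" "$plugin_dir"/
}

# Example (not run here):
#   park_plugins /boot/config/plugins /boot/config/plugins-disabled
#   restore_plugin /boot/config/plugins /boot/config/plugins-disabled rclone
```

Rebooting after each `restore_plugin` call and timing the boot should narrow down which plugin is responsible.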

-

4 hours and nothing?

-

ok, sorry for the drama, as far as I can tell my data still exists, but my shares are all gone.

Background:

I've been doing some upgrading and the most recent step was to remove some old drives I have little faith in. I followed the steps on the unraid wiki for shrinking the array at https://wiki.unraid.net/Shrink_array. Specifically I followed the "Rebuild Parity" method since I removed 3 drives.

Everything went as expected and when I started the array it started to rebuild parity (and yes, I verified the drives were in the right order, especially the parity drives).

Everything is great, right? NOPE. No shares, and docker/libvirt services were unable to start!

I ssh'd in and checked `/mnt/user` and it is EMPTY. I went into full on panic mode!!! I calmed down when I started looking at the individual disks and saw the data is still there (Thank god!).

Now the parity drives are rebuilding, but I don't know how to get the data back!

diagnostic info attached. Please help!

-

Never mind! I just needed to be patient. It finished booting, but took a REALLY long time!

-

Hello!

I have an unraid pro install with a single 1TB SSD cache and 16 platter hard drives for data. I bought 2 M.2 drives with the intention of replacing the single SSD. My plan was to mirror the SSD to an M.2 drive, remove the SSD, then remirror to the other M.2.

I installed both M.2 drives, now the server doesn't fully come up. I attached a monitor to see where it gets stuck and have attached a picture.

The system pings, and I can SSH in and see both nvme drives, but the unraid web interface never becomes available and none of the dockers/vms start.

Obviously without the web gui, I can't attach diagnostic files.

-

Reboot did it, thanks. I'll get new cables and swap them out.

-

I just realized my cache disk is mounted read-only. Not sure what happened, but I need to know the proper procedure here. I have not rebooted and I'm attaching my diagnostics output. What are the right steps to troubleshoot/repair the problem here?

-

Perfect! Thanks for the quick turn around!

-

1 minute ago, dmacias said:

9 minutes ago, timekiller said:

Getting an error trying to use strace:

    root@Storage:~# strace
    strace: error while loading shared libraries: libdw.so.1: cannot open shared object file: No such file or directory

Is there another tool I need to install?

What version of UnRAID?

6.7.0-rc4

-

Getting an error trying to use strace:

    root@Storage:~# strace
    strace: error while loading shared libraries: libdw.so.1: cannot open shared object file: No such file or directory

Is there another tool I need to install?

-

5 minutes ago, trurl said:

This was already discussed on this very page.

Apologies, I searched the topic for '6.6.7', but not '6.7.0-rc5' before posting.

-

Any ETA on when this plugin will get 6.6.7 and 6.7.0-rc5 support?

-

Hello!

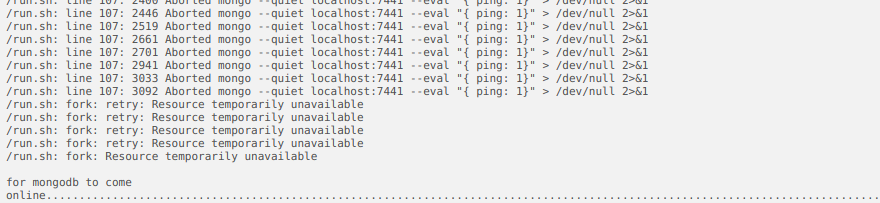

I'm having issues getting unifi-video to start. I got the service started initially, got my cameras configured, etc. My issue is that when I stop/start the array or reboot, the service starts fine, but unifi-video gets stuck on starting the Database service. I checked the service log and I see a lot of

    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...
    (unifi-video) checking for system.properties and truststore files...

Sometimes restarting the service fixes this, but currently it's not helping.

-

5 hours ago, brainbudt said:

does your cpu need to have VT-D in order to get this to work ?

No, my server has VT-D disabled (incompatible with my sata card) and I can still do hardware transcoding just fine.

-

Thanks Constructor - I definitely have unraid open on several laptops, so I'm sure that explains the csrf errors. As for the docker size, I knew I would have a bunch of containers and didn't want to run out of space. Besides, I have a 1TB SSD, I figured I had the room.

As for the actual problem. I had a feeling this was not a common problem as searching turned up nothing unraid related. I think Unifi-Video is pushing me over whatever limit I am hitting. I stopped the container and haven't seen any errors for a few hours. The app is the NVR for my 8 security cameras, so there is a lot of disk i/o there. Still, this is nothing different from what I had running on my previous (Ubuntu) server, and I checked the ulimits there and they were actually much lower in a lot of cases.

My only thought here is it has something to do with how the unraid fuse file system works. I know as data is written to a drive, it also has to read from all drives to calculate parity. Maybe the strain of 8 video streams plus my disk to disk rsync is just too much? I did not have any problems when I copied the bulk of my data from the old server (over gigabit ethernet).

My concern here is that if I schedule a regular parity check I have to knock my security system offline for the 24+ hours it takes to finish. I am still on the trial license as I wanted to give the system time to show me any potential issues, and this is a pretty big one for me. I'm hoping I'm not going to be given the runaround on this - I could easily open the unifi-video support thread, but we both know the maintainer just packages Ubiquiti's video app in a docker, so he's not going to know much about that proprietary application. Ubiquiti is a large company (that honestly is focusing on their new hardware NVR) and they are just going to blame the OS since it works on Ubuntu without hitting a resource limit - and they'd be right, they can't support every OS under the sun. Hitting a resource limit is an OS restriction placed on user space applications. Being Linux, my go-to was to look at ulimit, but that didn't seem to help.

Is there anything unraid specific I can look at to try and resolve this?

-

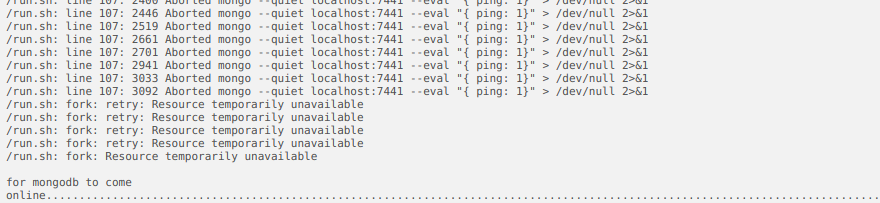

I am almost done setting up my first unraid server and have a problem. As the title says, I'm getting

fork: retry: Resource temporarily unavailable

EVERYWHERE.

A little background:

High level:

i7-6700K 4GHz

32GB RAM

13 Drives totaling 42TB usable, 1TB SSD Cache

Bunch of dockers, no running VMs (yet)

Details:

So that's the setup. Now a little history: I am migrating from a home-built storage server that was running on hardware RAID 6. I cobbled together enough old drives on the unraid server to move my data. I set up the new server and everything was looking good. I rsync'd my data from the old server (about 19TB) without issue. Once the rsync was done, I decommissioned the old server and pulled its drives for the new server.

Here is where the problems started. While preclearing the new drives I started seeing 'fork: retry: Resource temporarily unavailable' when trying to tab-complete from the terminal. Then I started seeing issues with my dockers. Unifi-video especially would be stopped every night. I'd look at the log for the docker and see

I'm getting a ton of emails from cron with the subject 'cron for user root /etc/rc.d/rc.diskinfo --daemon &> /dev/null' with the same fork error.

I got through the preclear and added the new drives, but then I had more data to sync back. I added a 10TB drive and mounted it with unassigned drives so I could rsync the data directly. The fork error got WAY out of control, to the point that no docker containers are working. I did some googling and found the error means I'm hitting a resource limit (obvious) and to look at `ulimit -a` to see what my limits are:

    root@Storage:~# ulimit -a
    core file size          (blocks, -c) 0
    data seg size           (kbytes, -d) unlimited
    scheduling priority             (-e) 0
    file size               (blocks, -f) unlimited
    pending signals                 (-i) 127826
    max locked memory       (kbytes, -l) unlimited
    max memory size         (kbytes, -m) unlimited
    open files                      (-n) 40960
    pipe size            (512 bytes, -p) 8
    POSIX message queues     (bytes, -q) 819200
    real-time priority              (-r) 0
    stack size              (kbytes, -s) unlimited
    cpu time               (seconds, -t) unlimited
    max user processes              (-u) 127826
    virtual memory          (kbytes, -v) unlimited
    file locks                      (-x) unlimited

I suspected open files was the issue and doubled (then doubled again) the number. I also increased max user processes and tried to increase pipe size, but it wouldn't let me. I thought this helped, but now I'm having the same issues and I'm not sure if it helped, or if I was just looking at a time when the issue had temporarily stopped...
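The `fork: retry: Resource temporarily unavailable` message is the shell reporting `EAGAIN` from `fork()`, and per-user `ulimit` values are only one of the limits that can cause it. The kernel-wide `pid_max` and `threads-max` settings (and, inside containers, a cgroup pids limit) can also be hit. A hedged sketch for comparing the number of live tasks against those two kernel caps; the function names are hypothetical, and the proc root is a parameter only so the functions can be tried against a fake tree. This is one line of investigation, not a diagnosis.

```shell
# Hypothetical helper: count every thread under a /proc-style tree.
# Each thread consumes one PID, so threads are counted, not just processes.
count_tasks() {
    proc_root=${1:-/proc}
    n=0
    for task in "$proc_root"/[0-9]*/task/[0-9]*; do
        if [ -e "$task" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}

# Hypothetical helper: print current usage next to the kernel-wide caps
# that fork() can run into regardless of the per-user ulimit values.
report_fork_headroom() {
    proc_root=${1:-/proc}
    echo "tasks in use: $(count_tasks "$proc_root")"
    echo "pid_max:      $(cat "$proc_root/sys/kernel/pid_max")"
    echo "threads-max:  $(cat "$proc_root/sys/kernel/threads-max")"
}

# Usage on the server, ideally while the errors are occurring:
#   report_fork_headroom
```

If the task count sits close to `pid_max` while the errors appear, raising open files or max user processes would not be expected to help, which would be consistent with what is described here.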

The problem seems to be tied to high disk I/O. I've seen the issue now during preclear, disk to disk sync, and during a parity rebuild (I rearranged my drives after installing the new ones).

It COULD be that this will all go away when I'm done with the massive data moves, but I want to know why it's happening and fix it now.

Any help is appreciated.

-

Any way to share an rclone mount? (in General Support)

I am using the rclone plugin to mount my Google Drive. I would love to be able to share the mount through unraid so I can access my Google Drive over my local network. Any way to accomplish this?