Posts: 152


Posts posted by Mihle

-

So I did this with the Nextcloud (LinuxServer) /tmp folder (not SWAG) a while ago by remapping it to a folder inside Nextcloud's own appdata folder. It worked fine at the time. But after a container/Nextcloud (v28) update, I started having issues with the Photos app: viewing photos in Nextcloud made Nextcloud stop responding, with few logs, until I restarted my NAS, and then the Docker service had to be turned on again manually.

After a lot of testing, I figured out that removing the path remapping fixed the freezing/stop-responding issue.

But now I am back to /tmp being inside the Docker image. Has anyone heard about this before and knows how to fix it or work around it? What about remapping a folder could cause problems with Nextcloud?

-

So, a question: I am on Unraid 6.12 and haven't updated to the new one yet because I didn't get around to it, but I am having problems and want to roll back appdata.

Will the old version still work, even if it is not supported anymore?

-

On 1/17/2024 at 10:16 PM, Mihle said:

Lately I have been getting this error now and then:

Allowed memory size of 536870912 bytes exhausted (tried to allocate 608580088 bytes) at /app/www/public/lib/private/Files/Storage/Local.php#327

How do I fix that?

How do I increase that limit to something higher? And how do I find the file at the path the error mentions? I have tried looking for it in appdata but haven't found it yet. Is it somewhere else?

I am no longer getting that warning, but Nextcloud stops responding every time the Photos app in Nextcloud tries to generate previews of photos. Nothing helps except restarting the whole NAS. I have looked for logs about it everywhere (nextcloud.log, docker.log, other logs, the Unraid syslog), but there are zero logs that tell me anything about what is going on. At this point I think my only option is to try to roll back to an earlier appdata version.

-

Lately I have been getting this error now and then:

Allowed memory size of 536870912 bytes exhausted (tried to allocate 608580088 bytes) at /app/www/public/lib/private/Files/Storage/Local.php#327

How do I fix that?

How do I increase that limit to something higher? And how do I find the file at the path the error mentions? I have tried looking for it in appdata but haven't found it yet. Is it somewhere else?
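The two numbers in the error can be compared directly. 536870912 bytes is exactly 512 MiB (the PHP `memory_limit`), and the failed request was a single allocation of roughly 580 MiB, so it could not fit even with nothing else loaded; a new limit would have to sit well above that. Local.php itself is Nextcloud code under /app/www/public inside the container, as mentioned elsewhere in this thread, not a file in appdata. A small arithmetic check follows; the php-local.ini location in the comment is an assumption about the LinuxServer.io image layout, not something confirmed here.

```shell
#!/bin/bash
# Convert the byte counts from the PHP error message to MiB (1 MiB = 1048576 bytes).
bytes_to_mib() {
  echo $(( $1 / 1048576 ))   # integer division, rounds down
}

limit=536870912     # "Allowed memory size of ... bytes"
request=608580088   # "tried to allocate ... bytes" (one single allocation)

echo "memory_limit:      $(bytes_to_mib "$limit") MiB"
echo "single allocation: $(bytes_to_mib "$request") MiB"

# Assumed fix for LinuxServer.io images (verify against the image docs):
# set a higher limit in /config/php/php-local.ini, i.e. in the Nextcloud
# appdata folder, for example
#   memory_limit = 1024M
# and then restart the container.
```

Whether raising the limit is the right fix, rather than finding the large file that triggers the allocation, is a separate question.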

-

On 8/7/2023 at 8:59 PM, Mihle said:

I just updated (27.0.1, the latest Docker image I think), and I am getting the same errors. Should I delete them manually, do something else, or just wait?

After another update, it has indeed fixed itself.

-

I just had an issue where Jellyfin on a custom network would not start, with an error that the port was already in use. After a lot of trying different things, I figured out that it was conflicting with itself. This started after some update.

In the configuration, it had both a field named "Webui - HTTPS:" and one named "WebUI (HTTPS) [optional]:", and both were set to 8920. I changed the last one to something else, and it then started up fine.
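With both template fields set to 8920, the container was asked to publish the same host port twice, which is why it looked like it was conflicting with itself. A quick way to spot that is to list the port values from the template and print any that repeat. This is a minimal sketch; the values are typed in by hand, and 8096 is only an assumed stand-in for the HTTP port.

```shell
#!/bin/bash
# Print every port value that appears more than once in the list given.
duplicate_ports() {
  printf '%s\n' "$@" | sort | uniq -d
}

# Port values copied from the container template (8096 is an assumed example):
duplicate_ports 8096 8920 8920
```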

Is this an issue caused by it being an older container that has been updated (since the two fields have basically the same name), or is it an issue with the Docker container itself?

EDIT: I just noticed my Docker is not the LinuxServer one, oops.

-

On 7/16/2023 at 12:58 AM, bumpkin said:

I have another EXTRA_FILE question. I'd happily delete the files below, but I can't seem to find them using the container's console. Any tips?

```
Technical information
=====================
The following list covers which files have failed the integrity check. Please read the previous linked documentation to learn more about the errors and how to fix them.

Results
=======
- core
    - EXTRA_FILE
        - core/js/tests/specs/appsSpec.js
        - dist/files_trashbin-files_trashbin.js
        - dist/files_trashbin-files_trashbin.js.LICENSE.txt
        - dist/files_trashbin-files_trashbin.js.map
        - lib/private/Files/ObjectStore/NoopScanner.php
        - lib/private/Updater/ChangesResult.php
        - lib/public/WorkflowEngine/IEntityCompat.php
        - lib/public/WorkflowEngine/IOperationCompat.php

Raw output
==========
Array
(
    [core] => Array
        (
            [EXTRA_FILE] => Array
                (
                    [core/js/tests/specs/appsSpec.js] => Array ( [expected] => [current] => cf1ff76b5129943a1ffd6ea068ce6e8bc277718b9d0c81dccce47d723e1f290be20f197b6543f17f3b2ac78d8d4986354db4103de2b5e31c76e00e248984605b )
                    [dist/files_trashbin-files_trashbin.js] => Array ( [expected] => [current] => 24e537aff151f18ae18af31152bcfd7de9c96f0f6fdcca4c1ad975ece80bb35a2ab7d51c257af6a9762728d7688c9ba37a5359a950eb1e9f401d4b9d875d92b2 )
                    [dist/files_trashbin-files_trashbin.js.LICENSE.txt] => Array ( [expected] => [current] => 2e40e4786aa1f3a96022164e12a5868e0c6a482e89b3642e1d5eea6502725061e2077786c6cd118905e499b56b2fc58e4efc34d6810ff96a56c54bf990790975 )
                    [dist/files_trashbin-files_trashbin.js.map] => Array ( [expected] => [current] => 65c2a7ddc654364d8884aaee8af4e506da87e54ae82f011989d6b96a625b0dbfba14be6d6af545fa074a23ccf2cc29043a411dc3ac1f80a24955c8a9faa28754 )
                    [lib/private/Files/ObjectStore/NoopScanner.php] => Array ( [expected] => [current] => 62d6c5360faf2c7fca90eaafa0e92f85502794a42a5993e2fe032c58b4089947773e588ad80250def78f268499e0e1d9b6b05bc8237cc946469cd6f1fb0b590c )
                    [lib/private/Updater/ChangesResult.php] => Array ( [expected] => [current] => d2e964099dfd4c6d49ae8fc2c6cbc7d230d4f5c19f80804d5d28df5d5c65786a37ea6f554deacecad679d39dbba0d6bd6e4edadca292238f45f18f080277dad0 )
                    [lib/public/WorkflowEngine/IEntityCompat.php] => Array ( [expected] => [current] => ea1856748e5fcf8a901597871f1708bdf28db69d6fa8771c70f38212f028b2ff744b04019230721c64b484675982d495a2c96d1175453130b4563c6a61942213 )
                    [lib/public/WorkflowEngine/IOperationCompat.php] => Array ( [expected] => [current] => 6c09c15e9d855343cc33a682b95a827546fa56c20cc6a249774f7b11f75486159ebfe740ffcba2c9fa9342ab115e7bf99b8c25f71bb490c5d844da251b9751ed )
                )
        )
)
```

On 7/16/2023 at 2:37 AM, iXNyNe said:

Core files are in /app/www/public inside the container. The best thing to do is recreate the container. I don't know of a short way to do this on unRAID, but when the container image updates (usually weekly) it should solve itself.

I just updated (27.0.1, the latest Docker image I think), and I am getting the same errors. Should I delete them manually, do something else, or just wait?
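Since the core files live under /app/www/public inside the container, the paths in the EXTRA_FILE report are relative to that folder. If you do decide to delete them by hand instead of waiting for the next image, a cautious sketch is below. It assumes it is run from the container's console; `NC_ROOT` and `DRY_RUN` are names made up for this sketch, and by default it only prints what it would remove.

```shell
#!/bin/bash
# Sketch: remove files listed as EXTRA_FILE by Nextcloud's integrity check.
# Assumption: run inside the container, where core lives in /app/www/public.
NC_ROOT="${NC_ROOT:-/app/www/public}"   # hypothetical override for testing
DRY_RUN="${DRY_RUN:-1}"                 # 1 = only print, 0 = really delete

remove_extra_files() {
  local rel path
  for rel in "$@"; do
    path="$NC_ROOT/$rel"
    if [ ! -e "$path" ]; then
      echo "missing: $path"
    elif [ "$DRY_RUN" = "1" ]; then
      echo "would remove: $path"
    else
      rm -f -- "$path" && echo "removed: $path"
    fi
  done
}

# Example (paths taken from the report above):
#   remove_extra_files core/js/tests/specs/appsSpec.js lib/private/Updater/ChangesResult.php
```

After deleting, the core check can be re-run with `occ integrity:check-core`, assuming the `occ` wrapper that the LinuxServer.io image provides.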

-

On 1/26/2023 at 4:40 AM, emeybee said:

For those having issues with the stream error, check your Swag logs and it will tell you which config files need to be updated. Most likely you need to update your nginx.conf and ssl.conf files using the new sample files in appdata. That fixed the issue for me.

Same, though I only needed to do it with nginx.conf, not ssl.conf.

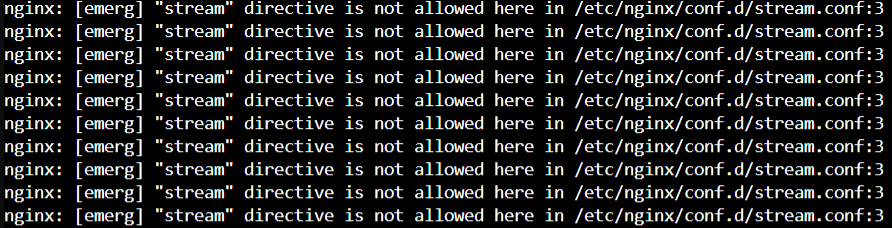

For reference for other people, is is how the warning looks like in Swag log:

I shut SWAG down and replaced the .conf file with the .sample, and it's working again.
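The nginx.conf swap described here (keep the old file, then put the sample in its place) can be scripted. This is a minimal sketch, assuming the SWAG config folder is /mnt/user/appdata/swag/nginx and the container is named swag; both are assumptions to adjust.

```shell
#!/bin/bash
# Keep the old nginx.conf as nginx.conf.old and replace it with a copy of
# nginx.conf.sample. Stop the container first (e.g. docker stop swag;
# the container name is an assumption) and start it again afterwards.
refresh_nginx_conf() {
  local dir="$1"   # e.g. /mnt/user/appdata/swag/nginx (assumed path)
  if [ ! -f "$dir/nginx.conf.sample" ]; then
    echo "no nginx.conf.sample in $dir" >&2
    return 1
  fi
  if [ -f "$dir/nginx.conf" ]; then
    mv -- "$dir/nginx.conf" "$dir/nginx.conf.old"
  fi
  # Copy rather than rename, so the .sample stays for future comparisons.
  cp -- "$dir/nginx.conf.sample" "$dir/nginx.conf"
}
```

Copying instead of renaming is a deliberate choice: SWAG's log compares the active file against the sample, so leaving the sample in place keeps that comparison possible later.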

-

On 1/26/2023 at 5:18 AM, Alemiser said:

This is what I did to fix it.

Stop Swag docker

Go to \\<server>\appdata\swag\nginx folder

rename original nginx.conf to nginx.conf.old

copy nginx.conf.sample to nginx.conf

rename ssl.conf to ssl.conf.old

copy ssl.conf.sample to ssl.conf

restart swag docker

This worked for me

I only did nginx.conf and did not touch ssl.conf, and it worked.

Thanks!

-

On 1/25/2023 at 9:30 AM, DrBobke said:

Anyone that can chime in on this? Would like to get it sorted as soon as possible...

I figured it out: it's not an issue with MariaDB at all. The issue is with SWAG.

1. Stop the SWAG docker (I don't know if this is required, but it seems like good practice?)

2. Go into appdata/swag/nginx

3. Rename nginx.conf, for example by adding .old (so you still have it, just in case)

4. Rename nginx.conf.sample to just nginx.conf

5. Start the SWAG docker

6. Profit.

EDIT: Found this thread after the fact right now:

-

Maybe a stupid question, but where is $LOGFILE, and how do you view it? (Krusader?)

EDIT: I see it's mentioned that it is in /tmp, but the /tmp I am looking in doesn't have it. Maybe there are multiple ones and I looked in the wrong one?

-

1 minute ago, dlandon said:

When unmounting the disk, $DEVICE is set to '/dev/mapper/mountpoint' and this is what gets unmounted on an encrypted disk. An Unraid api call is used to spin down disks and it does this by the 'sdX' device designation.

Like I said, when the disk is encrypted, use the $LUKS variable. This is the physical device. The $DEVICE variable is the mounted device on an encrypted disk.

Why is it better to use an alias than, for example, $LUKS?

-

15 minutes ago, dlandon said:

Your command is wrong. It should be:

```shell
/usr/local/sbin/rc.unassigned spindown $DEVICE
```

If the disk is an encrypted disk, use $LUKS; if the disk is not encrypted, use $DEVICE. An encrypted disk's device is /dev/mapper/mountpoint and not /dev/sdX.

The best way to handle disk spin down is to set a disk name (alias) and use that.
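The rule described here (on an encrypted disk $DEVICE is the /dev/mapper/... device, so spin down $LUKS instead) can be written as a small helper for a device script. This is a sketch that decides by looking at the /dev/mapper/ prefix mentioned above; `spindown_target` is a hypothetical name, and setting a disk alias, as suggested, avoids the question entirely.

```shell
#!/bin/bash
# Pick the device to spin down in an Unassigned Devices device script.
# Encrypted disk: $DEVICE is /dev/mapper/<mountpoint>, so use $LUKS
# (the physical device). Otherwise $DEVICE is already the physical device.
spindown_target() {
  case "$DEVICE" in
    /dev/mapper/*) echo "$LUKS" ;;
    *)             echo "$DEVICE" ;;
  esac
}

# In the 'REMOVE' ) branch this would be used as:
#   /usr/local/sbin/rc.unassigned spindown "$(spindown_target)"
```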

My mistake, the command is what you wrote there; the error happened when I copied it over.

But yes, it is encrypted. $DEVICE does work for unmount, though, so it's weird that it doesn't for spindown, while using sde works?

When using sde, it does say /dev/sde and not /dev/mapper/mountpoint? Even though it's supposed to be an encrypted disk?

EDIT: $LUKS works for spin down. Why is it better to use the disk alias?

-

I have a problem. In the device script I have this:

```shell
'REMOVE' )
  # Spinning down disk
  logger Spinning disk down -t$PROG_NAME
  /usr/local/sbin/rc.unassigned $DEVICE
```

But the disk does not spin down. It does send a notification I have after that part, and the system log says:

```
Dec 23 21:45:36 NAS OfflineBackup: Spinning disk down
```

But if I replace "$DEVICE" with "sde", it does spin down and the system log says:

```
Dec 23 21:50:03 NAS OfflineBackup: Spinning disk down
Dec 23 21:50:03 NAS emhttpd: spinning down /dev/sde
```

Why doesn't "$DEVICE" work? It's probably not ideal to use "sde" in that script?

-

On 11/24/2016 at 9:26 AM, HellDiverUK said:

I'd also recommend swapping out the 140mm fan in the front, while also losing the dust filter. I also tend to not use the top fan, it's not at all required for a NAS box. I've had great results from the Noctua NF P14s Redux 1200 http://noctua.at/en/nf-p14s-redux-1200-pwm fans, which are quiet and plenty of airflow.

Sorry for the necro, but I have a question:

I have the case with the stock fans, but I don't yet know if it's worth changing them to lower HDD temperatures. But the question is:

Do you HAVE to remove the dust filter when you change the front fan, or is it just something you chose to do?

-

On 7/8/2022 at 4:59 PM, JorgeB said:

That's an unassigned disk; the danger is with user shares and disk shares for array/pool disks.

Unassigned disks don't have any user shares anyway.

-

On 8/19/2019 at 6:54 PM, willmx said:

After a ton of troubleshooting, yes. Unfortunately, Cloudflare official documentation is at least 16 months outdated. Also, the official README for the ddclient Docker container says to look at Cloudflare documentation.

Anyway, here's the ddclient.conf that FINALLY worked for me! This works with the latest linuxserver/ddclient as of August 19th, 2019.

Pay close attention when commas are used and when they are not.

```
##
## CloudFlare (www.cloudflare.com)
##
use=web
protocol=cloudflare, \
zone=mydomain.com, \
ttl=10, \
[email protected], \
password=CLOUDFLARE-GLOBAL-API-KEY \
sub1.mydomain.com,sub2.mydomain.com
```

The standard config in ddclient doesn't have the use=web line in the Cloudflare section, but after struggling to figure it out (the ddclient log only said something about a missing IP), I came across this, and adding that line seemed to make it work.

I had an easier time setting it up for Namecheap, which I used before, than for Cloudflare.

-

I'm trying to make a script that I can run to back stuff up to an Unassigned Devices HDD.

```shell
#!/bin/bash
#Destination of the backup:
destination="/mnt/disks/OfflineBackup"
#Name of Backup disk
drive=dev1
########################################################################
#Backup with docker containers stopped:
#Stop Docker Containers
echo 'Stopping Docker Containers'
docker stop $(docker ps -a -q)
#Backup Docker Appdata via rsync
echo 'Making Backup of Appdata'
rsync -avr --delete "/mnt/user/appdata" $destination
#Backup Flash drive backup share via rsync
echo 'Making Backup of Flash drive'
rsync -avr --delete "/mnt/user/NAS Flash drive backup" $destination
#Backup Nextcloud share via rsync
echo 'Making Backup of Nextcloud'
rsync -avr --delete "/mnt/user/NCloud" $destination
#Start Docker Containers
echo 'Starting up Docker Containers'
/etc/rc.d/rc.docker start
########################################################################
#Backup of the other stuff:
#Backup Game Backup share via rsync
echo 'Making Backup Game Backup share'
rsync -avr --delete "/mnt/user/Game Backups" $destination
#Backup Photos and Videos share via rsync
echo 'Making Backup of Photos and Videos share'
rsync -avr --delete "/mnt/user/Photos and Videos" $destination
########################################################################
#Umount destination
echo 'Unmounting and spinning down Disk....'
/usr/local/sbin/rc.unassigned umount $drive
/usr/local/sbin/rc.unassigned spindown $drive
#Notify about backup complete
/usr/local/emhttp/webGui/scripts/notify -e "Unraid Server Notice" -s "Backup to Unassigned Disk" -d "A copy of important data has been backed up" -i "normal"
echo 'Backup Complete'
```
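The script stops every container with `docker stop $(docker ps -a -q)` and later calls `/etc/rc.d/rc.docker start`. Because the Docker service itself is still running at that point, it is not certain that this call brings the stopped containers back. One alternative, sketched here under the assumption that exactly the containers that were running before the backup should be started again, is to remember their IDs (the function names are hypothetical):

```shell
#!/bin/bash
# Stop only the containers that are running right now and print their IDs,
# so exactly those can be started again after the backup.
stop_running_containers() {
  local ids
  ids=$(docker ps -q)              # running containers only (no -a)
  if [ -n "$ids" ]; then
    docker stop $ids > /dev/null   # unquoted on purpose: one ID per argument
  fi
  echo "$ids"
}

# Start the containers whose IDs are passed as arguments.
start_containers() {
  if [ "$#" -gt 0 ]; then
    docker start "$@" > /dev/null
  fi
}

# Usage inside the backup script:
#   running=$(stop_running_containers)
#   ... rsync appdata, flash drive backup, NCloud ...
#   start_containers $running
```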

I am not sure whether I should use echo or logger.

Also, in a test script, I didn't manage to get the `2>&1 >> $LOGFILE` that I see some people use at the end of rsync commands to do anything. What does it do, and should I try to get it to work? Some use `>> var/log/[something].log` (together with some date command). What do they do?

For reference, I set the drive to automount. Then I want to start the script manually, but when it's done running I want the drive to be ready to just unplug without having to press anything more (and then take it to another location until it's time to update it, as an offline backup).
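In `rsync ... 2>&1 >> $LOGFILE`, the redirections are applied left to right: `2>&1` first points stderr at wherever stdout goes at that moment (the terminal), and only then is stdout sent to the log, so rsync's error messages never reach the file. Writing `>> $LOGFILE 2>&1` captures both. `>>` appends, while a single `>` (as in the `Started:` line of the Unassigned Devices script) truncates the file first. Also, `$LOGFILE` is one of the variables Unassigned Devices sets for device scripts; in a standalone test script it is empty, and bash then rejects `>> $LOGFILE` with an "ambiguous redirect" error, which may be why it did nothing. A small demonstration:

```shell
#!/bin/bash
# noisy writes one line to stdout and one line to stderr.
noisy() {
  echo "to stdout"
  echo "to stderr" >&2
}

log=$(mktemp)

# 2>&1 first: stderr goes to the *current* stdout (the terminal),
# then only stdout is appended to the log.
noisy 2>&1 >> "$log"      # "to stderr" still shows up on the terminal

# >> first: stdout goes to the log, then stderr follows it there.
noisy >> "$log" 2>&1      # both lines end up in the log

cat "$log"
```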

-

This is my current script; I haven't tested the whole script at once yet:

```shell
#!/bin/bash
#Destination of the backup:
destination="/mnt/disks/OfflineBackup"
#Name of Backup disk
drive=dev1
########################################################################
#Backup with docker containers stopped:
#Stop Docker Containers
echo 'Stopping Docker Containers'
docker stop $(docker ps -a -q)
#Backup Docker Appdata via rsync
echo 'Making Backup of Appdata'
rsync -avr --delete "/mnt/user/appdata" $destination
#Backup Flash drive backup share via rsync
echo 'Making Backup of Flash drive'
rsync -avr --delete "/mnt/user/NAS Flash drive backup" $destination
#Backup Nextcloud share via rsync
echo 'Making Backup of Nextcloud'
rsync -avr --delete "/mnt/user/NCloud" $destination
#Start Docker Containers
echo 'Starting up Docker Containers'
/etc/rc.d/rc.docker start
########################################################################
#Backup of the other stuff:
#Backup Game Backup share via rsync
echo 'Making Backup Game Backup share'
rsync -avr --delete "/mnt/user/Game Backups" $destination
#Backup Photos and Videos share via rsync
echo 'Making Backup of Photos and Videos share'
rsync -avr --delete "/mnt/user/Photos and Videos" $destination
########################################################################
#Umount destination
echo 'Unmounting and spinning down Disk....'
/usr/local/sbin/rc.unassigned umount $drive
/usr/local/sbin/rc.unassigned spindown $drive
#Notify about backup complete
/usr/local/emhttp/webGui/scripts/notify -e "Unraid Server Notice" -s "Backup to Unassigned Disk" -d "A copy of important data has been backed up" -i "normal"
echo 'Backup Complete'
```

I am not sure whether I should use echo or logger.

Also, in a test script, I didn't manage to get the `2>&1 >> $LOGFILE` that I see some people use at the end of rsync commands to do anything. What does it do, and should I try to get it to work?

For reference, I set the drive to automount. Then I want to start the script manually, but when it's done running I want the drive to be ready to just unplug without having to press anything more (and then take it to another location until it's time to update it, as an offline backup).

-

I have seen some scripts use "echo" and "logger", and after some commands they write `2>&1 >> [some address to a log file]` and a date command. What do they do?

Is there any website I haven't found yet that explains it? (I might be searching for the wrong things.)
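Roughly: `echo` only writes to the script's standard output (the terminal, or wherever the output is redirected), while `logger` sends a message to the system log, and its `-t` option sets the tag, which is why the syslog lines in these scripts look like `NAS OfflineBackup: Spinning disk down`. `date` simply prints the current time, so ``echo "Started: `date`"`` stamps the log (`$(date)` is the same thing in newer syntax). A minimal helper that prints a timestamped message and appends it to a log file is below; the log path is hypothetical.

```shell
#!/bin/bash
# Print a timestamped message and append it to a log file.
LOG_FILE="${LOG_FILE:-/tmp/offline-backup.log}"   # hypothetical path

log() {
  local line
  line="$(date '+%Y-%m-%d %H:%M:%S') $*"
  echo "$line"                  # visible when the script is run by hand
  echo "$line" >> "$LOG_FILE"   # kept in the script's own log file
  # To also send it to the Unraid system log, one could add:
  #   logger -t OfflineBackup "$*"
}

log "Making Backup of Appdata"
```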

-

On 12/5/2021 at 6:11 PM, trurl said:

User shares includes data from any pools.
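In other words, a share's files can sit on the cache pool and on one or more array disks at the same time, while /mnt/user/<share> shows them merged. The sketch below lists where one share physically lives. `MNT_ROOT` is a made-up override so it can be tried on a test folder, and anything else mounted under /mnt (for example unassigned disks) would also be listed if it contains a folder with the same name.

```shell
#!/bin/bash
# List the per-pool / per-disk folders that make up one user share.
# /mnt/user/<share> (and /mnt/user0/<share>) are merged views, so skip them.
share_locations() {
  local share="$1" root="${MNT_ROOT:-/mnt}" dir
  for dir in "$root"/*/"$share"; do
    [ -d "$dir" ] || continue          # glob did not match anything
    case "$dir" in
      "$root"/user/*|"$root"/user0/*) ;;
      *) echo "$dir" ;;
    esac
  done
}

# Example: share_locations Ncloud
# might print /mnt/cache/Ncloud and /mnt/disk1/Ncloud.
```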

So, if I used:

```shell
rsync -avr --delete /mnt/cache/Ncloud
```

it would only copy the data on the cache; if I did:

```shell
rsync -avr --delete /mnt/disk1/Ncloud
```

it would only copy the data on the array disk; but if I do:

```shell
rsync -avr --delete /mnt/user/Ncloud
```

it will copy everything, no matter where it's located?

(Same with appdata.)

-

Would it work fine to just back up the Nextcloud share and the Nextcloud appdata? Do you need to turn off Nextcloud while it runs?

-

On 12/29/2020 at 5:03 AM, Hoopster said:

You don't create anything; let rsync do it. I just formatted the destination drive as NTFS and let it run the backup script when plugged in. The first time the destination folders (same name as the shares) are created.

I only made minor modifications to the UD backup script by adding the rsync commands for backing up the additional shares.

/mnt/user/Pictures for example just becomes Pictures on the USB drive and all these folders appear just fine in Windows.

```shell
#!/bin/bash
PATH=/usr/local/sbin:/usr/sbin:/sbin:/usr/local/bin:/usr/bin:/bin
## Available variables:
# AVAIL      : available space
# USED       : used space
# SIZE       : partition size
# SERIAL     : disk serial number
# ACTION     : if mounting, ADD; if unmounting, UNMOUNT; if unmounted, REMOVE; if error, ERROR_MOUNT, ERROR_UNMOUNT
# MOUNTPOINT : where the partition is mounted
# FSTYPE     : partition filesystem
# LABEL      : partition label
# DEVICE     : partition device, e.g /dev/sda1
# OWNER      : "udev" if executed by UDEV, otherwise "user"
# PROG_NAME  : program name of this script
# LOGFILE    : log file for this script

case $ACTION in
  'ADD' )
    #
    # Beep that the device is plugged in.
    #
    beep -l 200 -f 600 -n -l 200 -f 800
    sleep 2

    if [ -d $MOUNTPOINT ]
    then
      if [ $OWNER = "udev" ]
      then
        beep -l 100 -f 2000 -n -l 150 -f 3000
        beep -l 100 -f 2000 -n -l 150 -f 3000

        logger Started -t$PROG_NAME
        echo "Started: `date`" > $LOGFILE

        logger Pictures share -t$PROG_NAME
        rsync -a -v /mnt/user/Pictures $MOUNTPOINT/ 2>&1 >> $LOGFILE

        logger Videos share -t$PROG_NAME
        rsync -a -v /mnt/user/Videos $MOUNTPOINT/ 2>&1 >> $LOGFILE

        logger Movies share -t$PROG_NAME
        rsync -a -v /mnt/user/Movies $MOUNTPOINT/ 2>&1 >> $LOGFILE

        logger Family Videos share -t$PROG_NAME
        rsync -a -v /mnt/user/FamVideos $MOUNTPOINT/ 2>&1 >> $LOGFILE

        logger Syncing -t$PROG_NAME
        sync

        beep -l 100 -f 2000 -n -l 150 -f 3000
        beep -l 100 -f 2000 -n -l 150 -f 3000
        beep -r 5 -l 100 -f 2000

        logger Unmounting Backup -t$PROG_NAME
        /usr/local/sbin/rc.unassigned umount $DEVICE

        echo "Completed: `date`" >> $LOGFILE
        logger Backup drive can be removed -t$PROG_NAME

        /usr/local/emhttp/webGui/scripts/notify -e "Unraid Server Notice" -s "Server Backup" -d "Backup completed" -i "normal"
      fi
    else
      logger Backup Drive Not Mounted -t$PROG_NAME
    fi
  ;;

  'REMOVE' )
    #
    # Beep that the device is unmounted.
    #
    beep -l 200 -f 800 -n -l 200 -f 600
  ;;

  'ERROR_MOUNT' )
    /usr/local/emhttp/webGui/scripts/notify -e "Unraid Server Notice" -s "Server Backup" -d "Could not mount Backup" -i "normal"
  ;;

  'ERROR_UNMOUNT' )
    /usr/local/emhttp/webGui/scripts/notify -e "Unraid Server Notice" -s "Server Backup" -d "Could not unmount Backup" -i "normal"
  ;;
esac
```

How would I go about backing up the Nextcloud database in a script like this, where part of the data could be on the cache? Would just selecting the whole share work? Would Nextcloud have to be stopped?
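The Nextcloud admin manual's backup procedure puts Nextcloud into maintenance mode, copies the folders, and dumps the database with `mysqldump --single-transaction`, so Nextcloud does not have to be stopped entirely. Using the /mnt/user path covers the parts of a share on both the cache and the array. The sketch below only shows the order of operations. Every name in it is an assumption: containers called `nextcloud` and `mariadb`, database and DB user both `nextcloud`, the password in `DB_PASS`, the `occ` wrapper from the LinuxServer.io image, and the `NCloud` share from the script earlier in this thread. By default it only prints the commands.

```shell
#!/bin/bash
# Order of operations for a consistent Nextcloud backup (sketch).
DRY_RUN="${DRY_RUN:-1}"                    # 1 = only print the commands
DEST="${DEST:-/mnt/disks/OfflineBackup}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

dump_db() {
  # The output redirection cannot go through run(), so handle dry-run here.
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ docker exec mariadb mysqldump --single-transaction -u nextcloud -p*** nextcloud > $DEST/nextcloud-db.sql"
  else
    docker exec mariadb mysqldump --single-transaction \
      -u nextcloud -p"$DB_PASS" nextcloud > "$DEST/nextcloud-db.sql"
  fi
}

backup_nextcloud() {
  run docker exec nextcloud occ maintenance:mode --on
  dump_db
  run rsync -a --delete /mnt/user/appdata/nextcloud "$DEST"
  run rsync -a --delete /mnt/user/NCloud "$DEST"
  run docker exec nextcloud occ maintenance:mode --off
}
```

If a step fails in a real run, maintenance mode stays on until `occ maintenance:mode --off` is run by hand.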

-

Announcing New Unraid OS License Keys (in Announcements)

If I currently have a Basic licence, will I be able to upgrade to Plus after the change has happened, or would I be forced to either stay on Basic or upgrade all the way to Pro?