Posts posted by StanC

-

-

Are there any updated internal photos of the server?

In particular if the current motherboard being used is the SuperMicro X10SL7, I would like to see how the drives are connected - which ones are connected to the SATA3, SATA2 and SAS connectors.

The X10SL7 has 14 SATA ports:

2 x SATA3 and

4 x SATA2 provided by chipset

8 x SAS provided by onboard LSI chip (compatible with SATA2/3 devices)

The two SATA3 ports are connected to the far left 3.5" slot and the far right 3.5" slot.

The four SATA2 ports are connected to the next four 3.5" slots from the left.

One set of four SAS ports is connected to the four 2.5" slots.

The other set of four SAS ports is connected to the remaining four 3.5" slots on the right.

There's a diagram of all this somewhere, which I can't find at the moment.

It was set up this way on the assumption that the P drive would be on the far left, the Q drive on the far right, and SSDs installed in the 4x 2.5" slots.
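
For anyone who wants the wiring above in a form they can paste into notes or a script, here is a minimal sketch in Python. The bay numbering (3.5" bays 1 to 10 from left to right, 2.5" bays 1 to 4) and the function name `build_slot_map` are assumptions made for illustration; only the port types per group of slots come from the description above.

```python
# Hypothetical bay numbering: 3.5" bays counted 1..10 from left to right,
# 2.5" bays counted 1..4. Only the port types come from the post above.

def build_slot_map():
    """Return {bay label: port type} for the wiring described above."""
    slots = {}
    slots['3.5" bay 1'] = "SATA3"            # far left (P drive)
    for n in range(2, 6):                     # next four bays from the left
        slots[f'3.5" bay {n}'] = "SATA2"
    for n in range(6, 10):                    # remaining four bays on the right
        slots[f'3.5" bay {n}'] = "SAS"
    slots['3.5" bay 10'] = "SATA3"           # far right (Q drive)
    for n in range(1, 5):                     # four 2.5" bays for SSDs
        slots[f'2.5" bay {n}'] = "SAS"
    return slots


if __name__ == "__main__":
    for bay, port in build_slot_map().items():
        print(f"{bay}: {port}")
```

The totals line up with the board's port count: 2 SATA3, 4 SATA2 and 8 SAS, 14 in all.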

Unfortunately AVS-10/4 is sold out with reorder lead time of about 6 weeks.

So the P drive is the parity drive on the far left, and the Q drive is the cache drive or the first data drive on the far right? Then the 2.5" SSDs are used for the cache/app drives?

I currently own the LimeTech D-316 case, which is a very nice case. I am looking at replacing my current motherboard with the X10SL7, due to some intermittent issues that I am seeing. So I was looking at this board and wanted to understand the connections.

-

What is the hard drive setup for that motherboard?

Parity Drive - SATA3

Cache Drive - SATA3

Data 1-4 - SATA2

Data 5 - 12 - SAS

-

Update to 6.0 went smoothly. Loving the Plex docker and working on migrating from Kodi to Plex. I may need to rethink and move my dockers or get a bigger cache drive; the current drive is 512 GB. I just noticed my cache usage shot up to 85% while I was scanning in my libraries. I will know more once I move the shows off the cache onto the array.

-

I am getting a continuous "Connection to server lost" red box in the upper left corner. Why is this?

Also, can we take add-ons and add them to the docker? Is it a matter of copying the add-on folder and settings over and then restarting the docker? In particular, I would like to use this headless docker to run the daily library update and artwork downloader.

-

Trying to install the KODI-headless docker and nothing happens when I click on the Create button. I set my host path to /mnt/user/appdata/kodi-server/, which is on my cache drive, and changed the host port to 8081 so as not to interfere with unMenu. I am running unRAID v6-RC4. I have tried a reboot and still get the same results. Is there something wrong with the template?

Nothing wrong with the template. Click the advanced view button in the template and fill in the variables.

Thanks, that did the trick.

-

Hey, sparkly. Thank you for this. This is one awesome docker. I installed it, made the change to my pfSense firewall and it was immediately up and running.

-

Am I doing something wrong?

Under Data, the only device that I see is my thumbdrive. None of the hard drives are populating into it.

What does

ls -las /dev/disk/by-id

show?

The disk list is built out of those devices whose names start with sata- (and don't contain -part).

If your device names began with ata-, for example, they wouldn't be found.

Why are ata devices being skipped?

Mine are all SATA devices (in a SAS Expander Tower)

theone - all SATA devices show as "ata" devices when you run the command:

ls -las /dev/disk/by-id

You need to update the code in your /usr/local/emhttp/plugins/serverlayout/php/serverlayout_constants.php file on line 198 from "sata-" to "ata-". As a test I did that on my server, and now all of my drives are showing up and I am able to assign them to the layout.
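
To see why the prefix matters, here is a rough sketch in Python of the kind of filter described above: keep names that start with a given prefix and drop anything containing -part. This is not the plugin's actual PHP code; the function name `list_disks` and its `prefixes` parameter are made up for illustration.

```python
import os

def list_disks(names, prefixes=("ata-",)):
    """Keep whole-disk entries: name starts with a prefix and has no '-part'."""
    return sorted(
        n for n in names
        if n.startswith(tuple(prefixes)) and "-part" not in n
    )


if __name__ == "__main__":
    by_id = "/dev/disk/by-id"
    # Fall back to an empty list on systems without this directory.
    names = os.listdir(by_id) if os.path.isdir(by_id) else []
    for name in list_disks(names):
        print(name)
```

With `prefixes=("sata-",)` a name beginning with ata- never matches, which is consistent with the hard drives not showing up until the prefix was changed.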

-

Am I doing something wrong?

Under Data, the only device that I see is my thumbdrive. None of the hard drives are populating into it.

I too can only see my thumb drive and one USB drive that I am testing with the Unassigned Devices plugin. This plugin shows promise, but also it would be nice if it could be configured with some different case layouts such as LimeTech's own AVS 10/4, which is the case that I am using.

-

Fixed ssh and denyhosts plugins for v6b12+

Fully compatible with the unRaid plugins page.

Install via unraid plugins -> install plugins tab.

Enter either of the following URLs to install;

SSH : https://raw.githubusercontent.com/overbyrn/unraid-v6-plugins/master/ssh.plg

Denyhosts : https://raw.githubusercontent.com/overbyrn/unraid-v6-plugins/master/denyhosts.plg

Check for updates and/or remove plugins from the Unraid plugins -> installed plugins page.

I have installed Denyhosts and changed the working directory to /mnt/cache/services/denyhosts but it will not start. I am running v6b14b.

Overbyrn - never got an answer to this?

No answer??? I am removing the Denyhosts plugin, since it does not work.

-

Upgrade via the webgui was successful.

-

@Jencryzthers - any updates about updating DenyHosts?

-

Just upgraded with no issues.

Thank you.

-

How do I take a backup of my docker images just in case this happens again?

Stop docker and then copy the docker image file to another device.

Along with the app folder where I put the containers? In my case, that is the /mnt/cache/appdata folder.
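
A minimal sketch of the copy step in Python, assuming Docker has already been stopped as advised above. The function names and any paths passed to them are hypothetical; the location of the docker image file is not given in this thread, so it has to be supplied by whoever runs this.

```python
import filecmp
import shutil
from pathlib import Path

def backup_docker_image(image_path, dest_dir):
    """Copy the docker image file into dest_dir and verify the copy.

    Assumes the Docker service has already been stopped.
    """
    src = Path(image_path)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    target = dest / src.name
    shutil.copy2(src, target)                        # copies data and timestamps
    if not filecmp.cmp(src, target, shallow=False):  # byte-for-byte comparison
        raise IOError(f"backup of {src} does not match the original")
    return target


def backup_appdata(appdata_dir, dest_dir):
    """Copy the whole appdata folder into dest_dir (requires Python 3.8+)."""
    target = Path(dest_dir) / Path(appdata_dir).name
    shutil.copytree(appdata_dir, target, dirs_exist_ok=True)
    return target
```

Calling `backup_appdata("/mnt/cache/appdata", ...)` with a destination on another device would cover the app folder mentioned above; the destination is up to you.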

-

Thanks, Eric.

I was not able to recover anything. So I decided to start over and scrap the cache pool and go back to a single cache drive. Which is what I have been wanting to do for a while now.

How do I take a backup of my docker images just in case this happens again?

-

As a follow-up, I was able to resolve the 'unformatted' problem of my second cache drive with a program called TestDisk after reverting back to beta12.

http://www.cgsecurity.org/wiki/TestDisk

It detected the partition and let me repair the partition table by setting the partition to the proper type. I haven't noticed any data loss yet, so I think it's good.

Did you install TestDisk on your unRAID server, or did you pull the cache drive out and put it into another system? If it was installed on the unRAID server, how is that done? I am a Windows user, so this is all still new to me.

-

I have upgraded and my cache drive pool is also showing as unformatted. System log attached. What do I need to do to get it back?

-

Not sure where to report this, but since Dynamix is the new WebGUI I will place it here. If I am posting it in the wrong place let me know.

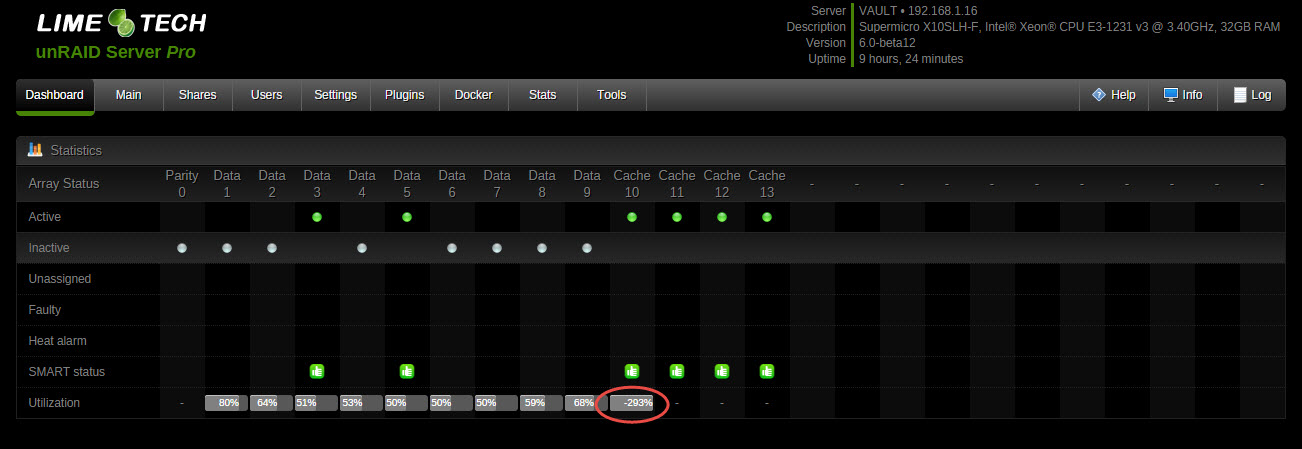

On the Dashboard, the Utilization percentage for the Cache drive is incorrect: it shows -293%. This could be because I use a cache pool and the code does not account for that. The same was true for the Stats page, but bonienl has already corrected that. Attached is a screenshot showing the error.

Odd. Can you click on the "Main" tab, then "Cache Devices", and then click on your first cache device. From that page, please copy and paste what you see under "Pool info." Thanks!

- Jon

Pool Info:

Label: none uuid: 88719609-11f1-42c8-a6f6-aef0a9e2ad3a

Total devices 4 FS bytes used 28.85GiB

devid 1 size 476.94GiB used 346.00GiB path /dev/sdl1

devid 3 size 476.94GiB used 346.03GiB path /dev/sdn1

devid 5 size 476.94GiB used 346.00GiB path /dev/sdm1

devid 6 size 476.94GiB used 0.00B path /dev/sdo1

Btrfs v3.17.2

Ok a few things real quick:

1) It looks like you need to do a btrfs balance on your pool because devid 6 is showing no utilization yet.

2) I think the issue may have to do with a combination of the balance along with btrfs extents showing space consumption that isn't really an accurate reflection of your true btrfs free space.

3) Did you used to have other devices in this cache pool before? I find it odd that the devids are 1, 3, 5, and 6 as opposed to 1, 2, 3, 4. This can happen if you have added and then removed devices from the pool. Not an issue, just wanted to confirm my suspicion.

- Jon

Yes, I did remove one of the drives and added it back in a while back when I was trying out virtualization.

I have done a rebalance, but the dashboard still shows a negative number: -286%.
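
For reference, here is a small Python sketch that reads the "Pool Info" text quoted above and works out how much of the pool is allocated. It is not the Dynamix code and does not explain the negative number; it only shows one way to parse the devid lines and keep a percentage between 0 and 100. The names `parse_pool_info` and `allocation_percent` are made up for this example. Note that the per-device "used" figure is space allocated to btrfs chunks, which is why it is so much larger than "FS bytes used".

```python
import re

UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}

# Matches lines such as:
#   devid 1 size 476.94GiB used 346.00GiB path /dev/sdl1
DEVID = re.compile(
    r"devid\s+(\d+)\s+size\s+([\d.]+)(\w+)\s+used\s+([\d.]+)(\w+)\s+path\s+(\S+)"
)

def to_bytes(value, unit):
    """Convert a number and a unit suffix (B, KiB, MiB, GiB, TiB) to bytes."""
    return float(value) * UNITS[unit]

def parse_pool_info(text):
    """Return one dict per devid line found in the pool info text."""
    devices = []
    for m in DEVID.finditer(text):
        devid, size, size_unit, used, used_unit, path = m.groups()
        devices.append({
            "devid": int(devid),
            "size": to_bytes(size, size_unit),
            "used": to_bytes(used, used_unit),   # allocated chunk space
            "path": path,
        })
    return devices

def allocation_percent(devices):
    """Allocated space as a percentage of raw pool size, clamped to 0..100."""
    total = sum(d["size"] for d in devices)
    allocated = sum(d["used"] for d in devices)
    if total == 0:
        return 0.0
    return max(0.0, min(100.0, 100.0 * allocated / total))
```

Fed the four devid lines above, this reports a little over 54% of the raw pool space as allocated, with devid 6 contributing nothing.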

-

Question about AVS-10/4X (in Pre-Sales Support)

So with P on the far left as the parity drive (sdb), does Q become data drive 1 (sdc) until Q is implemented, or does it not really matter?

I will be making modifications to my system in a few weeks; I have my new X10SL7 motherboard. I also have a SAS expander, so I may route SAS ports 0-3 from the motherboard to the expander using a reverse breakout cable that I have, then run the SSDs off the last four SAS ports, 4-7. My preference is to run most of the data drives off the SAS controller.