Posts: 161


Posts posted by StanC

-

-

-

AWESOME

:)

:)

Downloading now. I would recommend that these be added as a sticky in the unRAID 6 Development or General Support forum so that we don't lose sight of them.

-

As dlandon mentioned in the announcement post, Disk Health is already available from the 'Main' tab. Just click on a disk and the Disk Health tab is there.

-

Awesome

Thanks to everyone for their hard work.


-

All these plugins are still under construction, not final yet.

StanC: can you share your disk array content (see Tools -> Vars)? That would help me correct the stats calculations.

Do you need the whole content of the Vars or just a portion?

I have captured just the Disk Array variables, and they are attached. If you need more from the Vars file, let me know.

-

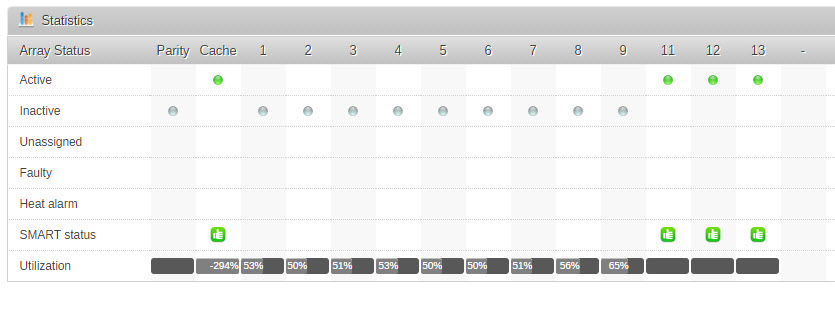

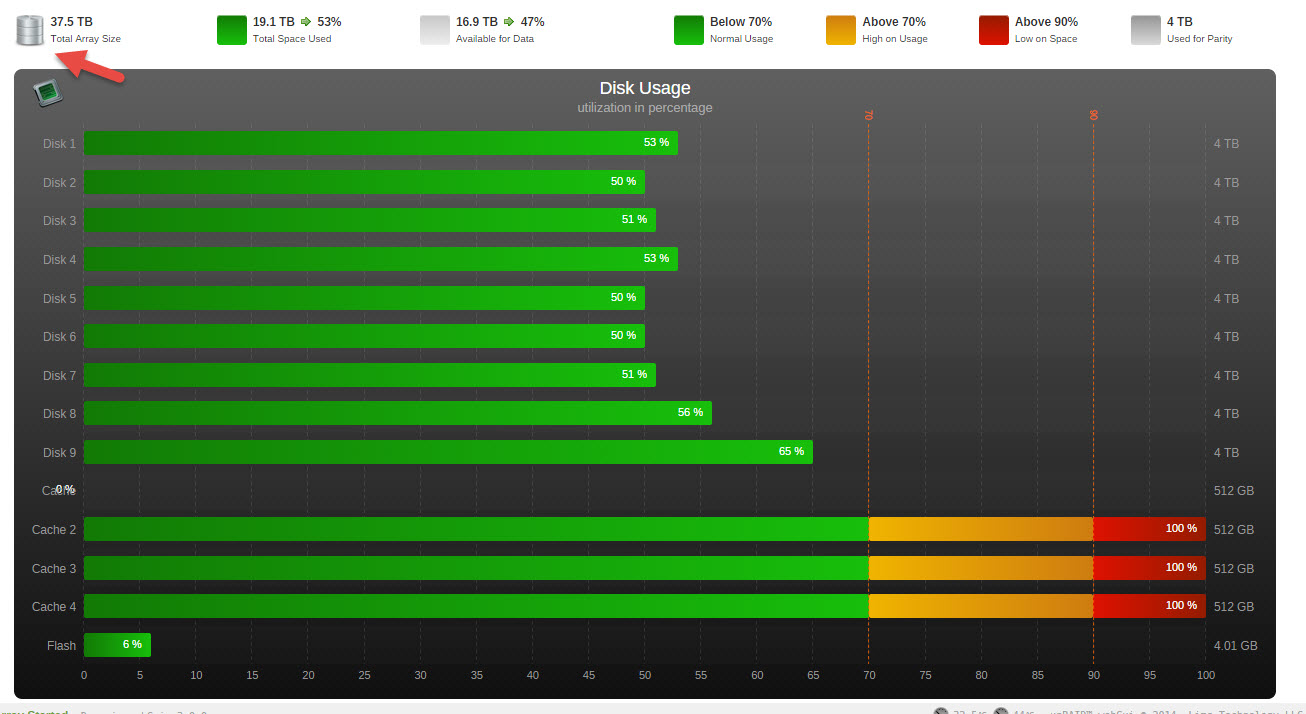

One concern is with System Stats - Disk Usage for the cache pool. I have 4 SSDs in my cache pool; the first disk shows 0% usage, and disks 2, 3 and 4 show 100%. Actually, my cache pool is using 32GB out of 2TB.

Also, the total size seems to be off a bit; I'm not sure what it is counting. My array total is 36TB, whereas System Stats reports 37.5TB. It may be counting the cache pool as well.

I know that these plugins are still being worked on, I am just giving feedback at this point.

-

I just tried it, and it works (not sure how stable it is, though).

Can you please outline which steps you took to get it working? I would be VERY keen to have a play.

I am also on v6b10a, and all I did was go to the Dynamix site (https://github.com/bergware/dynamix/tree/master/unRAIDv6), look inside the text of each plugin, copy the URL inside it, and install the plugin from there.

Example for the WebGUI: https://raw.github.com/bergware/dynamix/master/unRAIDv6/webGui.plg

So now I have WebGui, Active Streams, Cache Dirs, Disk Health, Email Notify, System Info, System Stats and System Temp installed and all are running fine.

-

Can someone please explain why I am receiving this email from my unRAID system every day at 4:40 AM?

cron for user root /usr/bin/run-parts /etc/cron.daily 1> /dev/null

How do I correct this?
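In the crontab line quoted in the email subject, `1> /dev/null` throws away only standard output; cron mails whatever a job writes to standard error. The sketch below uses a made-up `noisy_job` function (hypothetical, standing in for one of the scripts in /etc/cron.daily) to show which output survives that redirection.

```shell
#!/bin/bash
# Illustration only: a stand-in for a cron.daily script that writes to
# both stdout and stderr.
noisy_job() {
  echo "normal progress message"       # stdout
  echo "warning: something odd" >&2    # stderr
}

# Same redirection as the crontab line: "1> /dev/null" discards stdout only,
# so whatever is left on stderr is what crond captures and mails to root.
mailed=$( { noisy_job 1> /dev/null; } 2>&1 )
echo "crond would mail: $mailed"

# Discarding stderr as well (> /dev/null 2>&1) leaves nothing to mail,
# but it would also hide genuine errors from the daily jobs.
silenced=$( { noisy_job > /dev/null 2>&1; } 2>&1 )
echo "output left to mail: '$silenced'"
```

To find the script responsible, running `/usr/bin/run-parts /etc/cron.daily 1> /dev/null` by hand as root should print the same text that arrives in the email. Note that this runs the daily jobs one extra time.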

-

I've had two hard crashes while on 6b7 while rsyncing 1.2 TB of data from a reiserfs array volume to an xfs array volume. Both happened after about 120 GB of data had been transferred; I have 4 GB of RAM. I got a screenshot the first time, but not the second (see attached).

Oddly, both times a reboot via the power button failed to mount the drives. I had to log in and use powerdown -r, after which it restarted apparently normally.

I scrolled through my logs but don't see a copy of the log from either time in /boot/logs.

I've restarted the rsync and will see if it happens again.

Dennis

I had a crash today on version 6b7, with a screen similar to Dennis's. I did not get a screen capture, sorry. I was running an rsync from a reiserfs drive (disk1) to an empty xfs drive (disk9) as part of my conversion from reiserfs to xfs. At the same time, I was also running the mover to move files from the cache to a different xfs drive (disk6) when it crashed.

I did a quick Google search and found this link in the 5.x forums - http://lime-technology.com/forum/index.php?topic=32563.0, which talks about this error in version 6b5. Maybe a moderator can move that thread into this forum. So I came into this forum and found this thread, which discusses the error a little further.

Right now I am running a Memtest (SMP) to see if I have any RAM issues. I will update this post when it is complete.

Memory test completed with no errors. Restarting unRAID.

-

Damn!

You're right! I was looking for a Format button on the Disk detail page... Thanks

Now I have three 3TB XFS drives in the array. What would be the easiest/fastest way to move the data? Take the content of the first 3TB drive and rsync it to the new drive via SSH? Any recommendations for switches to use, or other commands?

I have been using rsync with:

rsync -av --progress --remove-from-source /mnt/diskX/ /mnt/diskY/

This allows you to restart if it gets interrupted for any reason and removes the source file once it is successfully copied.

Just a warning: I wouldn't use the user shares when copying, since it was found that in certain cases copying from a disk to a user share can truncate the data, so you can lose data. It's also best to run from screen so you won't have to worry about the session getting terminated.

It would seem that --remove-from-source is not an option:

rsync -av --progress --remove-from-source /mnt/disk8 /mnt/disk9
rsync: --remove-from-source: unknown option
rsync error: syntax or usage error (code 1) at main.c(1554) [client=3.1.0]
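rsync rejects `--remove-from-source` because no such option exists; the option that deletes each source file after it has been transferred successfully is `--remove-source-files`. A minimal sketch wrapping the corrected command in a hypothetical `move_disk_contents` helper, with disk8/disk9 taken from the failing command above. It also appends a trailing slash to the source: `/mnt/disk8/` copies the contents of disk8, whereas `/mnt/disk8` (as in the failing command) would create `/mnt/disk9/disk8/`.

```shell
#!/bin/bash
# Make sure a path ends in "/": with the slash, rsync copies the *contents*
# of the source directory instead of the directory itself.
with_trailing_slash() {
  case "$1" in
    */) printf '%s\n' "$1" ;;
    *)  printf '%s\n' "$1/" ;;
  esac
}

# Hypothetical helper: move everything from one array disk to another.
move_disk_contents() {
  local src dst
  src=$(with_trailing_slash "$1")
  dst=$(with_trailing_slash "$2")
  # --remove-source-files deletes each source file only after it has been
  # transferred; directories are left behind (empty) on the source disk.
  rsync -av --progress --remove-source-files "$src" "$dst"
}

# Example (start screen first so a dropped SSH session does not kill it):
#   move_disk_contents /mnt/disk8 /mnt/disk9
```

Because rsync skips files that already match on the destination, re-running the same command after an interruption should pick up where it left off.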

-

I am migrating data from my v5.05 system to my v6.0-beta6 system, and during the mover job I am seeing the following:

Jul 28 21:18:20 VAULT kernel: ata6.00: exception Emask 0x10 SAct 0x0 SErr 0x280100 action 0x6 frozen (Errors)
Jul 28 21:18:20 VAULT kernel: ata6.00: irq_stat 0x08000000, interface fatal error (Errors)
Jul 28 21:18:20 VAULT kernel: ata6: SError: { UnrecovData 10B8B BadCRC } (Errors)
Jul 28 21:18:20 VAULT kernel: ata6.00: failed command: READ DMA EXT (Minor Issues)
Jul 28 21:18:20 VAULT kernel: ata6.00: cmd 25/00:00:c0:28:00/00:04:00:00:00/e0 tag 22 dma 524288 in (Drive related)
Jul 28 21:18:20 VAULT kernel: res 50/00:00:57:a4:1c/00:00:87:01:00/e0 Emask 0x10 (ATA bus error) (Errors)
Jul 28 21:18:20 VAULT kernel: ata6.00: status: { DRDY } (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6: hard resetting link (Minor Issues)
Jul 28 21:18:20 VAULT kernel: ata6: SATA link up 6.0 Gbps (SStatus 133 SControl 300) (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: ACPI cmd ef/10:06:00:00:00:00 (SET FEATURES) succeeded (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: ACPI cmd f5/00:00:00:00:00:00 (SECURITY FREEZE LOCK) filtered out (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: ACPI cmd b1/c1:00:00:00:00:00 (DEVICE CONFIGURATION OVERLAY) filtered out (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: ACPI cmd ef/10:06:00:00:00:00 (SET FEATURES) succeeded (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: ACPI cmd f5/00:00:00:00:00:00 (SECURITY FREEZE LOCK) filtered out (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: ACPI cmd b1/c1:00:00:00:00:00 (DEVICE CONFIGURATION OVERLAY) filtered out (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6.00: configured for UDMA/133 (Drive related)
Jul 28 21:18:20 VAULT kernel: ata6: EH complete (Drive related)

So far I have received 4 of these within an hour. Should I be concerned that a problem is developing on my drive, or is it a bug?
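The log marks this as an ATA bus error on ata6 (`interface fatal error`, `BadCRC`), which points at the SATA link between controller and drive rather than at the disk surface; a loose or faulty SATA cable is a common suspect. To see whether the errors keep coming, a hypothetical `count_badcrc` helper can tally them per ATA port. It assumes the raw syslog at /var/log/syslog, without the `(Errors)` / `(Drive related)` tags, which appear to be added by the log viewer.

```shell
#!/bin/bash
# Tally "BadCRC" link errors per ATA port in a syslog file.
# Defaults to unRAID's live syslog; pass a path to check a saved copy instead.
count_badcrc() {
  local log=${1:-/var/log/syslog}
  grep 'SError:.*BadCRC' "$log" |
    sed -n 's/.*kernel: \(ata[0-9]*\): SError.*/\1/p' |
    sort | uniq -c
}

# Example:
#   count_badcrc
#   count_badcrc /boot/logs/syslog.txt   # hypothetical saved copy
```

On most drives, SMART attribute 199 (`UDMA_CRC_Error_Count` in `smartctl -A /dev/sdX` output) counts the same kind of error, so a rising value there after reseating or replacing the cable would suggest the link is still at fault.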

-

I used the default setting. It was the easiest install I had.

Okay, so are the user and password the defaults (user: root, password: root)?

I'm just trying to figure out how to connect to it so that I can get it set up for XBMC.

-

I had trouble with this, so it was the first plugin that I moved to a docker instance. The docker plugin works perfectly.

Can you share your docker file? I am having issues with the one in gfjardim's Docker Plugin - http://lime-technology.com/forum/index.php?topic=33965.0. I am not able to connect to the database.

I use gfjardim's docker.

Okay, did you use the default settings? What network did you pick - bridge or host?
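The network choice matters for connecting: on the default bridge network, MariaDB's port 3306 is reachable from other machines only if it is published with `-p`, while with `--net=host` the container listens directly on the server's own address and no mapping is needed. A minimal sketch of a bridge-mode start; the image name `example/mariadb` and the `start_mariadb` helper are hypothetical placeholders, not what gfjardim's template uses. Setting `DOCKER=echo` prints the command instead of running it.

```shell
#!/bin/bash
# Sketch only: image and container names are hypothetical placeholders.
IMAGE=${IMAGE:-example/mariadb}
DOCKER=${DOCKER:-docker}   # set DOCKER=echo for a dry run

start_mariadb() {
  # Bridge mode: -p HOST_PORT:CONTAINER_PORT publishes MariaDB's default
  # port 3306 on the unRAID host's IP address.
  # With --net=host instead, -p is unnecessary because the container
  # shares the host's network stack.
  "$DOCKER" run -d --name mariadb -p 3306:3306 "$IMAGE"
}

# Example:
#   start_mariadb
```

XBMC would then point at the unRAID server's IP on port 3306. Depending on the image, MariaDB may also need a user that is allowed to connect from other hosts, since a default `root` account is often limited to local connections.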

-

I have attached the xml for my LMS docker install. I think you can change the .txt extension to .xml, put it in /boot/config/plugins/Docker, and you will be able to select it as a template and make any changes you need.

I am new to docker and see that you have LMS working; would you mind sharing how you got it working?
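The rename-and-copy step described above can be scripted. A minimal sketch, assuming the attachment was saved to the flash drive under a name such as /boot/LMS.txt (a hypothetical path): the hypothetical `install_template` helper strips the `.txt` extension and copies the file into /boot/config/plugins/Docker with an `.xml` extension.

```shell
#!/bin/bash
# Copy a template attachment saved as NAME.txt into the Docker plugin's
# template folder as NAME.xml. The default folder is the one given in the post.
install_template() {
  local src=$1
  local dest_dir=${2:-/boot/config/plugins/Docker}
  local name
  name=$(basename "$src" .txt)
  mkdir -p "$dest_dir"
  cp "$src" "$dest_dir/$name.xml"
}

# Example (hypothetical file name):
#   install_template /boot/LMS.txt
```

After copying, the template should then be selectable in the Docker plugin as described above.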

Awesome! It works, thank you.

:)

:)

-

Finally got around to dockerizing the apps I was running in a VM. This plugin made it very easy. Thanks.

I did have an issue with trying to run a logitechmediaserver I found on docker hub. I think the problem was that the docker run line was too long with this plugin. The repository was quite a long bit of text. I got it working by making some of the other parameters shorter.

I struggled for a couple of days trying to get transmission working. I know needo has a deluge container, but I am used to transmission, and I don't need any of the fancy functionality of deluge.

Long story short, I tried both the botez and gfjardim dockers for transmission, and they mostly worked, but announcing wouldn't work with one of my trackers. This was working fine with the old-style plugin, and also on my archVM. I finally figured out that these dockers couldn't announce over https. I worked around the problem by reconfiguring my profile at the tracker to announce over http. Not ideal, but I can live with it unless anyone has any other ideas.

Anyway, thanks again for this great plugin!

I am new to docker and see that you have LMS working; would you mind sharing how you got it working?

I am struggling to get my head around this new environment. I have tried to install MariaDB using the template in the plugin, but how does one connect to it? Do we use the bridge or host network?

The apps that I am interested in are:

AirVideo - I believe the template will give me what I need. But if I need to make changes after it is installed, how do we do that?

MySQL or MariaDB - this will be used as my XBMC library

LMS

-

Love the plugin. But maybe I am missing something. I installed the MariaDB template, but I am not able to access it. What am I missing?

-

Yes, but the free space for that drive should maybe show triple. Mine shows double, and used shows NaN. The webGUI isn't set up to handle a cache pool.

Yes, that is what I show as well. Thanks.

-

So it sounds like if I follow the commands posted by dmacias, I should be golden? Since I have 4 SSDs for the cache pool, I would use a RAID 0 or 5 instead of RAID 1, right?

The 'cache pool' feature is only going to support raid1 to start, which is slightly different from 'traditional' raid1; e.g., you can have 3 devices in the pool. raid0 is not recommended because the loss of a single disk could mean losing all your data. raid5 is not recommended because that feature is not yet mature in btrfs.

If you want to remain compatible then you should create a partition on your cache disks and use them to form a btrfs raid1 pool.
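Some quick arithmetic shows what raid1 costs in space. btrfs raid1 keeps two copies of every chunk on two different devices regardless of how many devices are in the pool, so usable space is roughly half the raw total, and never more than the raw space outside the largest device. For four 500GB SSDs that is about 1000GB usable rather than 2000GB. The hypothetical `raid1_usable_gb` helper below does that estimate; it ignores metadata overhead, so the real figure will be somewhat lower.

```shell
#!/bin/bash
# Rough usable capacity of a btrfs raid1 pool, in the same unit as the inputs.
# Ignores metadata and allocation overhead.
raid1_usable_gb() {
  local total=0 largest=0 size
  for size in "$@"; do
    total=$((total + size))
    if (( size > largest )); then largest=$size; fi
  done
  local half=$((total / 2))
  local rest=$((total - largest))   # raw space on all devices but the largest
  if (( half < rest )); then echo "$half"; else echo "$rest"; fi
}

raid1_usable_gb 500 500 500 500   # four 500GB SSDs; prints 1000
```

The second limit only matters with mismatched sizes: with one 250GB and one 1000GB device, every chunk still needs a copy on the small device, so only about 250GB is usable.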

Okay, I have my pool set up now following dmacias's steps. However, I noticed that the webGUI lists only the drive I picked as the cache drive, while the remaining drives in the pool are shown as "new disks not in array". Is that normal in the webGUI? I just want to make sure that I did not mess something up.

-

After you create your pool, type the following, replacing sdx with the device letter of one of the drives in your pool:

btrfs filesystem show /dev/sdx

You will get output like the following:

Label: 'VM-store' uuid: 569b8d06-5676-4e2d-9a22-12d85dd1648d
Total devices 3 FS bytes used 1.96GiB
devid 1 size 465.76GiB used 2.02GiB path /dev/sdf
devid 2 size 465.76GiB used 3.01GiB path /dev/sdh
devid 3 size 465.76GiB used 3.01GiB path /dev/sdg

You will then mount your cache pool in the go script by its UUID (for example through /dev/disk/by-uuid) rather than by its /dev/sdX name.
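Because sdX letters can change between boots, the UUID is the stable way to refer to the pool. A minimal sketch, assuming the pool should be mounted at /mnt/cache: the hypothetical `pool_uuid` helper pulls the UUID out of `btrfs filesystem show` output, and `mount_cache_pool` holds the lines you would put in the go script, using the example UUID from the output above as a placeholder.

```shell
#!/bin/bash
# Read `btrfs filesystem show` output on stdin and print the pool UUID.
pool_uuid() {
  sed -n 's/.*uuid: \([0-9a-fA-F-]*\).*/\1/p' | head -n 1
}
# Run once by hand, e.g.:  btrfs filesystem show /dev/sdf | pool_uuid

# Lines for the go script (wrapped in a function here so nothing runs by
# accident). POOL_UUID is the example value from the output above;
# replace it with the UUID printed for your own pool.
mount_cache_pool() {
  local POOL_UUID=569b8d06-5676-4e2d-9a22-12d85dd1648d
  btrfs device scan              # register every pool member with the kernel
  mkdir -p /mnt/cache
  mount -t btrfs "/dev/disk/by-uuid/$POOL_UUID" /mnt/cache
}
```

Looking the UUID up once and hard-coding it is deliberate: reading it from /dev/sdf at every boot would reintroduce the dependency on drive letters that the UUID is meant to avoid.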

I removed the other quotes from the reply. Is there a set of commands I can run to combine 4 SSDs into one pool? I spent about an hour looking around and could not find anything helpful. What I am looking for is:

I have 4 500GB SSDs (/dev/sdf /dev/sdg /dev/sdh /dev/sdi) and I want to combine them into one pool (the RAID level doesn't matter, but those commands would be helpful too). So I am looking for the complete command set to do this.
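For a raid1 pool, the core step is a single `mkfs.btrfs` call that lists every device. This is a destructive sketch: it erases all listed devices. `create_cache_pool` and `POOL_LABEL` are hypothetical names, the device names come from the post, and if you follow the earlier advice to partition the disks first, pass the partitions (for example /dev/sdf1) instead. Setting `MKFS=echo` prints the command instead of running it.

```shell
#!/bin/bash
# DESTRUCTIVE: formats every device passed in as one btrfs raid1 pool.
MKFS=${MKFS:-mkfs.btrfs}   # set MKFS=echo for a dry run

create_cache_pool() {
  if [ "$#" -lt 2 ]; then
    echo "usage: create_cache_pool DEV1 DEV2 [DEV3 ...]" >&2
    return 1
  fi
  # -d raid1 / -m raid1: two copies of data and metadata, on different devices
  # -f: overwrite any existing filesystem signature on the devices
  # -L: filesystem label (hypothetical default "cache")
  "$MKFS" -f -L "${POOL_LABEL:-cache}" -d raid1 -m raid1 "$@"
}

# Example, after double-checking the device names:
#   create_cache_pool /dev/sdf /dev/sdg /dev/sdh /dev/sdi
#   btrfs filesystem show /dev/sdf
```

Keeping raid1 for both data and metadata matches the profile the upcoming cache pool feature is described as supporting first.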

Thanks.

-

I am confused about all of the various steps in this thread for adding the additional drives to the cache pool. I have 4 SSDs that I would like to combine into a pool. How would I do that?

That's what I was wondering too, but given it's on the roadmap, I don't think it's possible. I can add drives to make a cache pool, but on reboot I have to redo it. I think you'll have to wait for the next beta.

There are ways to do it now but the next beta will make cache pooling an option where it wasn't before.

Thanks. I will wait for it then.

Dynamix - V6 Plugins

in Plugin Support

Attached is my disk array.

disk_array.txt