Posts posted by mgutt

-

-

18 minutes ago, Unraidmule said:

Is there a solution to reduce it further

Yes. Read this post:

-

I found another container that was constantly writing to my SSD:

-

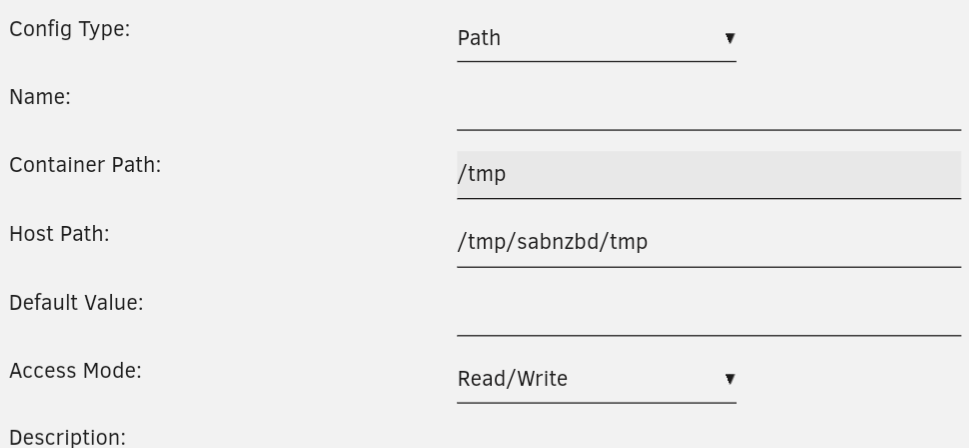

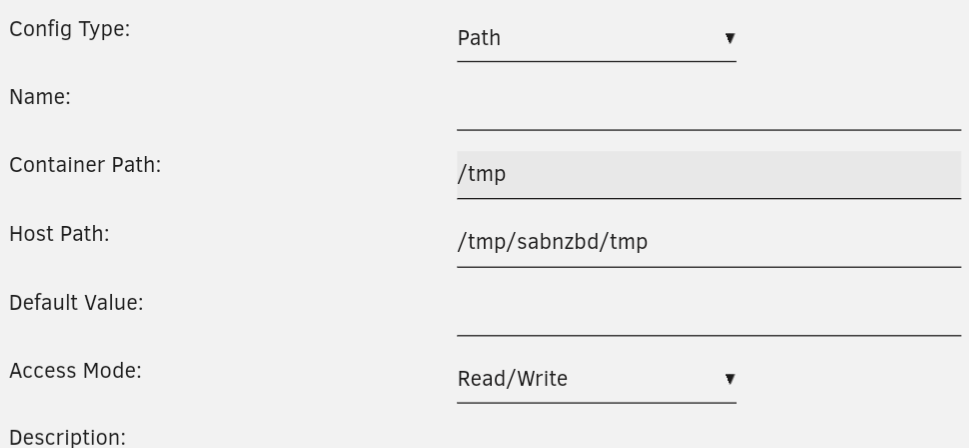

Could you please add this path to the default config to reduce wear on the SSD:

SABnzbd is constantly writing to these files:

root@thoth:~# find /tmp/sabnzbd/
/tmp/sabnzbd/
/tmp/sabnzbd/tmp
/tmp/sabnzbd/tmp/vpngatewayip
/tmp/sabnzbd/tmp/vpnip
/tmp/sabnzbd/tmp/getiptables
/tmp/sabnzbd/tmp/watchdog-script-stderr---supervisor-jgbmg455.log
/tmp/sabnzbd/tmp/watchdog-script-stdout---supervisor-dva2j1vt.log
/tmp/sabnzbd/tmp/start-script-stderr---supervisor-5i8155s4.log
/tmp/sabnzbd/tmp/start-script-stdout---supervisor-whva770j.log
/tmp/sabnzbd/tmp/endpoints

And by using /tmp, these files are written to unRAID's RAM disk.

-

On 4/10/2024 at 12:15 PM, Unraidmule said:

Should they be removed so they don't interfere with the script?

This won't interfere, but those options already prevent several log files from being written. If those logs are useful to you, you can re-enable them by removing the "--log-driver none" and "--log-driver syslog --log-opt syslog-address=udp://127.0.0.1:541" parts.

"--no-healthcheck" could be removed as well.

-

On 2/13/2024 at 5:40 AM, Mainfrezzer said:

6.12.8

Could you change this in your post to "6.12.8 to 6.12.10"? The link in the first post was updated.

-

-

-

Just as an FYI: I have a Samsung 990 Pro 4TB installed and my logs are now full of AER errors as well. So "Samsung" alone is not enough as a recommendation; it also depends on the specific model. Annoying 😒

By the way, here is the latency table of the 990 Pro:

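Supported Power States

| St | Op | Max | Active | Idle | RL | RT | WL | WT | Ent_Lat | Ex_Lat |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | + | 9.39W | - | - | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | + | 9.39W | - | - | 1 | 1 | 1 | 1 | 0 | 0 |
| 2 | + | 9.39W | - | - | 2 | 2 | 2 | 2 | 0 | 0 |
| 3 | - | 0.0400W | - | - | 3 | 3 | 3 | 3 | 4200 | 2700 |
| 4 | - | 0.0050W | - | - | 4 | 4 | 4 | 4 | 500 | 21800 |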

I'm now trying this kernel option to put a stop to it:

nvme_core.default_ps_max_latency_us=15000
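To see what a limit of 15000 µs would mean for the table above, here is a small sketch. It assumes that the Linux NVMe driver only allows automatic transitions into a non-operational power state when that state's entry latency plus exit latency stays within the configured limit; that rule is an assumption here, not something stated in the post. `allowed_states` is a made-up helper name.

```shell
#!/bin/sh
# Latency limit from the kernel option, in microseconds.
MAX_LATENCY_US=15000

# allowed_states LIMIT
# Reads "state entry_latency exit_latency" rows from stdin and prints the
# states whose entry + exit latency is within LIMIT (assumed driver rule).
allowed_states() {
    awk -v limit="$1" '$2 + $3 <= limit { print $1 }'
}

# Non-operational states (Op "-") taken from the 990 Pro table.
POWER_STATES='3 4200 2700
4 500 21800'

printf '%s\n' "$POWER_STATES" | allowed_states "$MAX_LATENCY_US"
```

Under that assumption only state 3 (4200 + 2700 = 6900 µs) stays within the limit, while state 4 (500 + 21800 = 22300 µs) exceeds it and would no longer be entered automatically.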

-

-

-

I replaced my SSD with a bigger one. I prepared for this by moving appdata from the SSD to the array (while Docker was set to "no"). After replacing the SSD I was disappointed by the extremely low speed of the mover:

As Docker was set to "no", I stopped the mover with the "mover stop" command and used this command instead:

rsync --remove-source-files --archive /mnt/disk6/appdata/ /mnt/cache/appdata & disown

This sped up the move enormously, because a plain rsync, unlike the mover, does not check for every single file whether it is in use:

Feature Request:

If the Docker service has been stopped and "appdata" files are moved, the mover should skip the "fuser" overhead and move the files directly without any additional checks. This should not happen if "appdata" is an SMB/NFS share, and starting the Docker service should be greyed out as long as the mover is active in this special mode.

The same could be done for the docker path and all other shares which are not smb/nfs shares I think.

Or:

Add a checkbox to the mover button, which allows starting the mover manually in "performance mode", which disables smb/nfs, docker and vm services.
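The difference between the two approaches can be sketched roughly as follows. This is not the mover's actual code, which is not shown in this post; `move_tree` is a hypothetical helper that only illustrates why one `fuser` call per file adds overhead compared with moving everything directly.

```shell
#!/bin/sh
# Sketch only: contrasts a per-file "in use" check with a direct move.
# move_tree SRC DST CHECK
#   CHECK=yes  skip files that fuser reports as open (one fuser call per file)
#   CHECK=no   move every file without asking
move_tree() {
    src=$1
    dst=$2
    check=$3
    (cd "$src" && find . -type f) | while IFS= read -r rel; do
        if [ "$check" = "yes" ] && fuser -s "$src/$rel" 2>/dev/null; then
            continue    # file is open somewhere, leave it in place
        fi
        mkdir -p "$dst/$(dirname "$rel")"
        mv "$src/$rel" "$dst/$rel"
    done
}
```

With many small appdata files, the `CHECK=yes` path spawns one extra process per file, which is the kind of cost a "performance mode" would avoid once Docker and the other services are known to be stopped.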

-

In principle there is nothing wrong with Velcro tape. Many people use it in desktop PCs too, to mount SSDs in unusual places. I would just pick a type made for outdoor use, or one that is heat-resistant. Otherwise the adhesive comes off.

-

-

-

44 minutes ago, The_Holocron said:

thoughts?

Does this work?

https://community.synology.com/enu/forum/1/post/140590

Quote: This is very well possible. The backup job is started as the "root" user on the Synology, so the only thing needed is a key pair in ~root/.ssh/

To make one, I did as follows:

go to Control Panel

select Terminal & SNMP

check "Enable SSH service" (port 22)

Now you SSH to the server and do:

use "sudo" to become root

ssh-keygen -t ed25519 #or just run "ssh-keygen" without the ed25519 option

cd to /root/.ssh/ and copy the public key. This is the pub key that the root user is using in Hyper Backup.

To check, you can create a Hyper Backup task with rsync as the destination, set the correct server name, set "Transfer encryption" to "on", the port to 22 and the user name to the user that is going to receive the files. Leave "password" empty and click "shared folder". If everything goes well, the receiving server will log something like "Accepted publickey for $your-username from $ip-address port $something ssh2: ED25519 SHA256:asdjf298euro23ji4iweuhroweijfaetceteraetceteraetcetera"

-

Keep in mind that the Docker service is running. It may have been writing data to the image file at that moment. The backup of that file would then be broken anyway.

-

The docker.img could not be read, which may be caused by Docker running at the time. However, backing it up makes no sense anyway. The Docker environment (i.e. the image) contains no user data. That lives in appdata.

-

1 hour ago, derjp said:

Can anyone tell me why it failed 3 times?

Just search the log for "fail" or "error".

An example:

Quote: rsync: [sender] change_dir "/mnt/cache/syslog" failed: No such file or directory (2)

So that path does not exist.
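Such a search could look like the sketch below. `find_failures` is a made-up helper name, and the log file path you pass to it depends on where your backup script writes its log.

```shell
#!/bin/sh
# find_failures LOGFILE
# Prints every line (prefixed with its line number) that mentions
# "fail" or "error", ignoring upper/lower case.
find_failures() {
    grep -inE 'fail|error' "$1"
}
```

Call it as `find_failures /path/to/your/backup.log`; every hit is printed with its line number, so you can jump to the surrounding context in the log.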

-

2 hours ago, jockebq said:

But even if I set NPM to 443 and 8080, and let Unraid WebUI use port 80. It won't start NPM:

bind() to 0.0.0.0:80 failed (98: Address already in use)

You are probably using the host network for your container. In that case the container's port mapping settings have no effect; they only apply to the bridge network.

Feel free to open a feature request on the official NPM GitHub page and ask for:

- an option to disable port 80 / HTTP

- a new variable to change the default ports 80 and 443

And/or use the bridge network.

-

1 hour ago, Maitresinh said:

Trying to figure out how to reset it

Delete the container and then delete the directory /mnt/user/appdata/nginx-bla-bla

The file manager plugin is useful for this.

-

On 3/10/2024 at 10:05 AM, HumanTechDesign said:

I can report: the C-state hunt was worth it: with the disks spun down (fans, IPMI, various running Docker containers etc.) it now goes down to 22W

You didn't say how low the C-state goes now, or why?!

I would first take a measurement with nothing attached. Sometimes it's individual slots and sometimes specific hardware.

-

10 hours ago, Cryd said:

Please choose an account

I would say: Delete everything and restart from the beginning. Everything else sounds complicated:

https://community.letsencrypt.org/t/certbot-renew-error-please-choose-an-account/206600/9

https://community.letsencrypt.org/t/please-choose-an-account-how-to-delete-an-account/212902

-

Yes, I can pick out components for you and also assemble them if needed. It's best if we talk on the phone and you describe your requirements. You can find my contact details on my website:

-

-

-

-

Nextcloud and Filebrowser would also be options.

-

Just google the model name. There is something on eBay, for example.

-

2 hours ago, eivissa said:

80W

Well, those surely don't come from the board and the CPU. Something else must be running at idle. What exactly do you have installed?

-

BTRFS does not damage sectors on its own. Either the RAM is defective or the PCIe link of the NVMe is unstable. The latter would also show up in the logs. Please post your diagnostics.

-

-

-

3 hours ago, genesisdoeswhatnintendont said:

I don't think this is really helpful. I tested the Seasonic Titanium power supply and it's far from being the best:

https://gutt.it/seasonic-titanium-netzteil-bei-niedriglast-keine-option/

-

18 minutes ago, Sptz87 said:

Has anyone used this at all in unraid?

It has no impact according to my tests:

How to mount an ISO file?

in Deutsch

Please run this in the terminal:

find /mnt/user/isos -ls

Result?