S1dney


Posts posted by S1dney

-


8 minutes ago, deltaexray said:

So basically, all I have to do is compose my Bitwarden docker and get it to run, and not tinker with any MSSQL installation, given that Bitwarden installs that itself, right?

You don't really need to compose a docker either.

Just download/curl the build script and run the commands; when you run ./bitwarden.sh start, it will issue the compose commands needed to start everything.

The override settings will let you override the default settings.

-

13 hours ago, deltaexray said:

But speaking of putting in the work: I'm not stupid, I can handle my fair share of work. But how did you all get Bitwarden to run on Unraid? With docker-compose in a docker, on a VM, or how did you do it?

Because I cannot get it set up or find a way to do it that works for me in one way or another. I'd like to not run a VM, but then how does Bitwarden run on a system it doesn't support?

I'm a bit out of my "I know what to do" zone here, so if anyone has a starting point, I'd be more than grateful for it.

Maybe I am just overcomplicating things again, but yeah, that's the state.

Thanks

You need the NerdPack plugin, which includes docker-compose; you are right that Bitwarden uses docker-compose to start everything up.

Then with that installed just follow the steps mentioned in the Bitwarden docs.

You have to complete:

- step 1

- step 2 is already done by running unraid (docker onboard) and installing the nerdpack for docker-compose

- Create a directory in step 3 (I have mine at /mnt/user/appdata/bitwarden, since appdata resides on the cache only). Note that I did not create a bitwarden user at the time; I may do that later for additional security. This has been running for 3+ years lol.

- Get your key in step 4

- Install Bitwarden in step 5 by curling the build script into the directory you have created and then invoke some commands.

- Add the overrides I gave you in the mentioned post in step 6 so it will actually be able to start on unRAID

- Then start it and enjoy Bitwarden.
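A rough sketch of the steps above as a script. It assumes the /mnt/user/appdata/bitwarden directory from step 3 and the install-script URL `https://go.btwrdn.co/bw-sh` that the Bitwarden self-hosting docs used at the time (check it against the current docs before running anything). The `run` helper and the `DRY_RUN` switch are hypothetical: by default the script only prints each command so you can compare it with the docs. The override file from step 6 is not reproduced here.

```shell
#!/usr/bin/env bash
# Sketch of the Bitwarden install steps on Unraid (not an official script).
# Assumptions: docker-compose is installed (NerdPack) and BW_URL matches the
# install-script URL in the Bitwarden docs you are following.
BW_DIR="${BW_DIR:-/mnt/user/appdata/bitwarden}"  # directory from step 3
BW_URL="${BW_URL:-https://go.btwrdn.co/bw-sh}"     # verify against the docs
DRY_RUN="${DRY_RUN:-1}"                            # 1 = print only

run() {
  # Always print the command; execute it only when DRY_RUN=0.
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

bitwarden_install() {
  run mkdir -p "$BW_DIR" || return 1
  run cd "$BW_DIR" || return 1
  # Step 5: fetch the helper script and run the installer, which asks for
  # the installation id and key obtained in step 4.
  run curl -Lso bitwarden.sh "$BW_URL" || return 1
  run chmod 700 bitwarden.sh || return 1
  run ./bitwarden.sh install
}

bitwarden_start() {
  # Step 6 (adding the override file) has to be done before this.
  run cd "$BW_DIR" || return 1
  # bitwarden.sh issues the docker-compose commands itself.
  run ./bitwarden.sh start
}
```

Once the printed commands match the docs, `DRY_RUN=0 bitwarden_install`, then the overrides, then `DRY_RUN=0 bitwarden_start` would do the real thing.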

GL!

-

6 hours ago, deltaexray said:

Is anyone who was previously interacting with this thread still on this?

I'm in the process of setting up Bitwarden on Unraid and I'm not quite sure *how* to do it properly. Given this thread covers a lot of the same ground, it may make sense to ask? :D

Also: do I really need to run an MSSQL database for Bitwarden, or does it handle that by itself?

Thanks in advance

I think I still have Bitwarden running using the override file specified in my comment:

I don’t recall touching that since, and it has been working very well for me.

You cannot choose another SQL version; the template from Bitwarden pulls it for you.

Good luck!

-

Hmm, did not get a notification on these replies. Guess I wasn’t following my own topic yet lol. Following now.

Good suggestions tho! I’ll check those out and report back later on.

Cheers!

-

Hey Y'all!

I'm using a custom built bash script to update all my self built docker containers every once in a while.

It still requires me to login to the GUI and press the "Update All" button from the Docker tab tho..

I'm running these updates from the terminal so would rather have the code trigger this as well.

Have been searching through the php files etc to see if I can see how this is done but no luck yet.

I also have a script that allows my UPS to shutdown unraid in a clean manner which basically uses the CSRF token to control it.

    echo 'Stopping unraid array.'
    CSRF=$(grep -oP 'csrf_token="\K[^"]+' /var/local/emhttp/var.ini)
    # Send this command to the background so we can check the status of the unmount on the array (the last step unraid takes)
    # We send everything to /dev/null because we're not interested in nohup's output
    nohup curl -k --data "startState=STARTED&file=&csrf_token=${CSRF}&cmdStop=Stop" https://localhost:5443/update.htm >/dev/null 2>&1 &

I assume that something similar must be possible for the Update All button?
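The request behind the Update All button is not known here, so this only sketches the first half of the approach above: reading the CSRF token from var.ini. It uses `sed` instead of `grep -oP`, on the assumption that PCRE support in grep shouldn't be relied on; the function name `get_csrf_token` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read the webGUI CSRF token from var.ini, as the UPS script does,
# but with sed instead of grep -P (PCRE support is not guaranteed in grep).
# The default path is the one used in the post.
VAR_INI="${VAR_INI:-/var/local/emhttp/var.ini}"

get_csrf_token() {
  # var.ini holds a line like: csrf_token="<value>"
  # Print only the value of the first match.
  sed -n 's/.*csrf_token="\([^"]*\)".*/\1/p' "${1:-$VAR_INI}" | head -n 1
}
```

`CSRF=$(get_csrf_token)` can then replace the grep line in the script above.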

Anyone able to assist here?

Appreciate the efforts!

Best regards,

Sidney

-

24 minutes ago, spgill said:

I've been using docker-compose successfully for a few weeks now, but I'm curious (to those that also use it) what is your strategy for auto-starting containers on boot / on array start? It's not a huge imposition to log in and do a `docker-compose up -d` in the rare instance when my server reboots, but it would be nice for it to be more hands-off.

Just add the lines that download docker-compose to the go file, and have the User Scripts plugin run a few docker-compose commands after the array starts.

I haven’t set it up tho as I manually unlock my encrypted array anyways.
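A sketch of the User Scripts side, assuming each compose project sits in its own sub-directory of a hypothetical /mnt/user/appdata/compose folder and the script is scheduled "At Startup of Array". `COMPOSE_CMD` is a made-up variable so the same function works with `docker-compose` or a stub; it is left unquoted on purpose so it is word-split.

```shell
#!/usr/bin/env bash
# Sketch for a User Scripts entry scheduled "At Startup of Array".
# Assumption: every project lives in its own sub-directory of COMPOSE_ROOT
# (hypothetical path) and contains a docker-compose.yml.
COMPOSE_ROOT="${COMPOSE_ROOT:-/mnt/user/appdata/compose}"
COMPOSE_CMD="${COMPOSE_CMD:-docker-compose}"

start_compose_projects() {
  local root="${1:-$COMPOSE_ROOT}" dir
  for dir in "$root"/*/; do
    # Skip anything without a compose file (and the unexpanded glob).
    [ -f "${dir}docker-compose.yml" ] || continue
    echo "Starting project in ${dir}"
    # Subshell so the cd does not leak into the loop; COMPOSE_CMD is left
    # unquoted on purpose so "docker compose" would also work.
    ( cd "$dir" && $COMPOSE_CMD up -d ) || echo "Failed to start ${dir}" >&2
  done
}
```

The scheduled script then only needs to call `start_compose_projects` after these definitions.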

Cheers

-


7 hours ago, maxstevens2 said:

Really have to give props already to @Niklas for the update suggestion. Otherwise I would have needed to apply the solution in the 'report' page 2.

The go file (and service.d file) modifications are not needed anymore in Unraid 6.9, as they basically created a way to do exactly that from the GUI.

The GUI should now allow you to host the docker files in their own directory directly on the cache (which is what the workaround did via scripts instead of the GUI).

Haven't moved there myself yet as I still see enough issues with rc2 for now.

I believe that reformatting the cache is also advisable, because they have made some changes in the partition alignment there as well (you may or may not see fewer writes because of it; I'm using Samsung EVOs, so I'm considering wiping and reformatting mine after the upgrade).

Cheers!

-


2 hours ago, Zentachi said:

Many thanks for your detailed answer and advice.

I have watched a few videos (mainly from the great Spaceinvader One) about the DockerMan GUI and it does seem like a nice tool. I definitely won't be able to transfer everything, since I do have linked network interfaces for some. I don't know if it's worth splitting my docker-compose file, migrating some and leaving the rest. However, I might try it for a couple of containers initially to check whether it will be convenient for me.

Which method do you use to make your docker-compose permanent?

The one below from a previous post, or is there another one available nowadays?

I've looked at my go file, I still have the pip3 way in place and it's working nice:

    # Since the NerdPack does not include this anymore we need to download docker-compose ourselves.
    # pip3, python3 and the setuptools are all provided by the NerdPack
    # See: https://forums.unraid.net/index.php?/topic/35866-unRAID-6-NerdPack---CLI-tools-(iftop,-iotop,-screen,-kbd,-etc.&do=findComment&comment=838361)
    pip3 install docker-compose

The second way, via curl, also works well; I equipped my Duplicati container with it.

You don't have to set up any of the dependencies that pip3 needs, so for simplicity this may be preferable.

Cheers!

-


2 hours ago, Zentachi said:

Hey everyone,

First of all wishing you a happy 2021!

In the next couple of weeks I am going to build my first unraid server (migrating from ubuntu). I have a question in regards to docker-compose in unraid.

Currently I have 29 docker containers running which are managed with docker-compose as it makes it very convenient.

Going forward, do you guys believe it is worth migrating those to Unraid's docker template system, or is it better to install docker-compose?

If I use the latter will I still be able to use the unraid docker management to do some managing or everything will be based on my docker-compose config file?

Thanks

Best wishes to you too 👍

Whether to use docker-compose or Unraid’s own DockerMan depends on what you want and/or need.

The DockerMan templates give you an easy option to redeploy containers from the GUI, and you’ll also be notified when a docker container has an update available. I like Unraid’s tools, so I default to these and create most containers from the GUI. However, certain scenarios cannot be set up via DockerMan (at least not when I checked), like linking the network interfaces of containers together (so that all the containers’ traffic flows through one of them).

Therefore I use docker-compose for those, and I also have some containers I’ve built myself that I want to set up with some dependencies. I update them via a bash script I wrote and redeploy them afterwards, as the GUI is not able to modify their properties. This answers your second question: containers deployed outside of the DockerMan GUI do not get a template and cannot be managed/updated from it, though you can start and stop them.

My advice would be: stay with the Unraid default tooling unless you run into a limitation that makes you want to deviate from it.
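For the "linked network interfaces" case mentioned above, plain Docker uses `--network container:<name>` (the docker-compose equivalent is `network_mode: "service:<name>"`). A minimal sketch, assuming a hypothetical gateway container called `vpn` that is already running; setting `DOCKER=echo` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: start a container that shares another container's network stack,
# so all of its traffic flows through that container (e.g. a VPN client).
# Assumptions: the gateway container ("vpn" by default) is already running;
# DOCKER=echo prints the command instead of running it.
DOCKER="${DOCKER:-docker}"

run_behind() {
  local name="$1" image="$2" gateway="${3:-vpn}"
  # --network container:<name> reuses the gateway's network namespace.
  # Ports cannot be published here; publish them on the gateway instead.
  $DOCKER run -d --name "$name" --network "container:$gateway" "$image"
}
```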

-


3 hours ago, wazabees said:

Hm, probably because I had no idea that I could! Thanks, that's a great tip!

You're welcome.

3 hours ago, wazabees said:

Is this the best solution though? Or does a reverse proxy make more sense?

Hahah, well, you're basically answering yourself.

If you were exposing the services to the outside world, it would make sense to send the traffic through a reverse proxy so you would only have to open up one port.

Another use case for a reverse proxy would be hosting two containers on the host's address that require the same port to function (like the common 80 or 443): the reverse proxy can route traffic to each of them based on hostname, so every client application can still reach its server on the port it expects.

I have also looked at (and actually implemented) the nginx reverse proxy, but decided to just put the container on a different IP and call it a day.

My todo list still has Traefik on it hahah, but too much on there atm

Also, I can so much relate to this statement hahah:

3 hours ago, wazabees said:

I must admit that I enjoy poking at stuff for edutainment

That's why unraid is so much fun!

Cheers man.

-


Why don't you put the docker containers on br0 and assign each of them its own IP address, so DNS can tell them apart by IP?

If you want to route traffic to a different port on the same IP you would have to inspect the DNS address queried and route accordingly, which is where a reverse proxy would come into play.

That's the easiest solution for you (one that does not require you to dive into the reverse proxy stuff as a networking n00b).
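On the command line that looks roughly like this sketch, assuming custom networks are enabled in Unraid's Docker settings so the `br0` network exists; the container name, image and 192.168.1.53 address are placeholders for your own LAN. In DockerMan it corresponds to picking the Custom: br0 network type and entering a fixed IP.

```shell
#!/usr/bin/env bash
# Sketch: give a container its own LAN address on Unraid's custom br0
# network, so it can listen on port 80/443 without clashing with the host.
# Assumptions: custom networks are enabled in Unraid's Docker settings and
# the address is free in your subnet. DOCKER=echo gives a dry run.
DOCKER="${DOCKER:-docker}"

run_on_br0() {
  local name="$1" ip="$2" image="$3"
  $DOCKER run -d --name "$name" --network br0 --ip "$ip" "$image"
}
```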

-


On 4/23/2020 at 5:45 PM, juan11perez said:

docker-compose is available in community applications > nerd pack

edit: it's not there anymore ??

anyway adding the below to /boot/config/go will make it permanent

    COMPOSE_VERSION=$(curl -s https://api.github.com/repos/docker/compose/releases/latest | grep 'tag_name' | cut -d\" -f4)
    curl -L https://github.com/docker/compose/releases/download/${COMPOSE_VERSION}/docker-compose-`uname -s`-`uname -m` -o /us>
    chmod +x /usr/local/bin/docker-compose

It's not anymore.

You can now select some prerequisites from the NERDpack and then use pip3 to handle the install.

I've added this to my go file (/boot/config/go):

    # Since the NerdPack does not include this anymore we need to download docker-compose ourselves.
    # pip3, python3 and the setuptools are all provided by the NerdPack
    # See: https://forums.unraid.net/index.php?/topic/35866-unRAID-6-NerdPack---CLI-tools-(iftop,-iotop,-screen,-kbd,-etc.&do=findComment&comment=838361)
    pip3 install docker-compose

I must say that your curl solution looks clean and doesn't require setting up additional dependencies via the NerdPack in advance.

Bookmarked in case the pip3 solution might fail later on.

EDIT: The curl solution seems to have been truncated @juan11perez. I wanted to use it in my Duplicati container but noticed that the second line is missing its end. The Docker docs list the full command:

    COMPOSE_VERSION=$(curl -s https://api.github.com/repos/docker/compose/releases/latest | grep 'tag_name' | cut -d\" -f4)
    curl -L https://github.com/docker/compose/releases/download/${COMPOSE_VERSION}/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose
    chmod +x /usr/local/bin/docker-compose

You may want to edit your example in case anyone wants to use it.
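A sketch of the same curl install split into small functions, so a failed version lookup stops the install instead of producing a broken download URL. It assumes the release assets are still named `docker-compose-<OS>-<arch>` as in the command above; the function names are hypothetical. `curl -f` makes curl fail on HTTP errors instead of saving the error page as the binary.

```shell
#!/usr/bin/env bash
# Sketch of the curl-based install, split into testable steps.
# Assumption: release assets are named docker-compose-<OS>-<arch>,
# exactly as in the original command.

latest_tag() {
  # Reads the GitHub "latest release" JSON on stdin and prints tag_name,
  # using the same grep/cut trick as the original one-liner.
  grep '"tag_name"' | head -n 1 | cut -d'"' -f4
}

compose_url() {
  local version="$1" os="${2:-$(uname -s)}" arch="${3:-$(uname -m)}"
  echo "https://github.com/docker/compose/releases/download/${version}/docker-compose-${os}-${arch}"
}

install_compose() {
  local dest="${1:-/usr/local/bin/docker-compose}" version
  version=$(curl -s https://api.github.com/repos/docker/compose/releases/latest | latest_tag)
  # Bail out when the API call failed (no network at boot, rate limiting).
  [ -n "$version" ] || { echo "could not determine version" >&2; return 1; }
  curl -fL "$(compose_url "$version")" -o "$dest" && chmod +x "$dest"
}
```

In the go file, a single `install_compose` line after these definitions would replace the three commands.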

Thanks!

-


8 hours ago, Moz80 said:

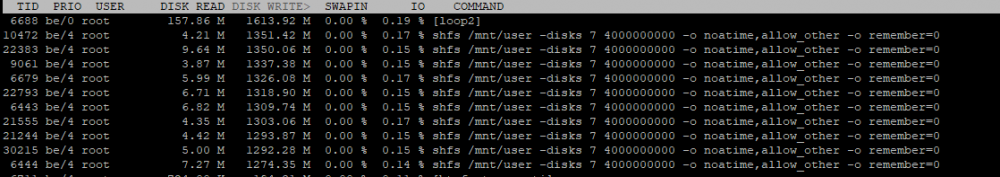

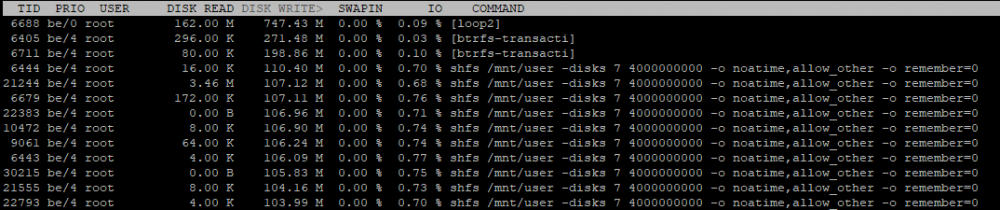

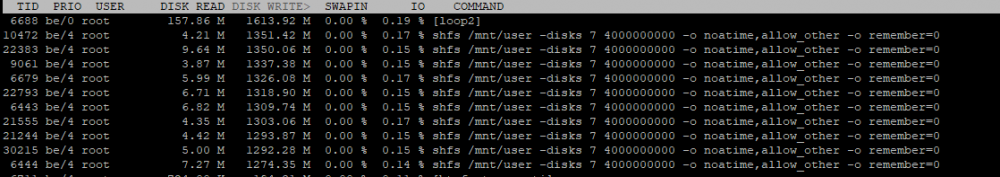

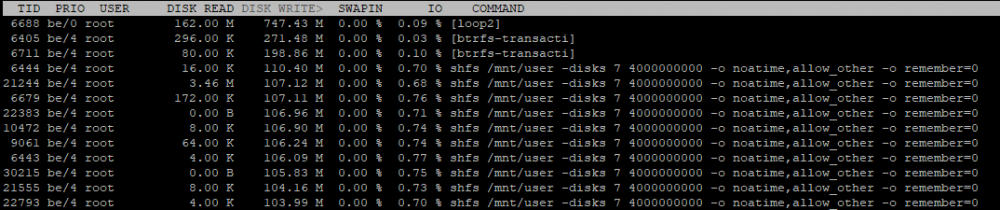

So now with 24 hours of iotop data, I have 120GB on loop2 (much better than the TBs I would have had on the official Plex container).

Interestingly, I can see a few Plex Media Server entries (that seem consistent with some watch activity that occurred overnight).

44GB of transmission activity seems a bit up there, there was a single 8GB torrent overnight.

But if all this activity is separate from the loop2 entry, what exactly is going on with loop2?

    Actual DISK READ:       0.00 B/s | Actual DISK WRITE:     350.07 K/s
      TID  PRIO  USER     DISK READ  DISK WRITE  SWAPIN     IO>    COMMAND
     5041  be/0  root     1936.26 M    119.93 G  0.00 %  0.31 %  [loop2]
     5062  be/4  root     1968.00 K      3.21 G  0.00 %  0.08 %  [btrfs-transacti]
     5110  be/4  root        0.00 B     65.55 M  0.00 %  0.04 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5090  be/4  root        4.00 K     75.10 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5112  be/4  root        4.00 K     72.13 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5115  be/4  root       16.00 K     79.57 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5114  be/4  root       24.00 K     67.71 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5109  be/4  root      212.00 K     80.83 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5086  be/4  root        0.00 B     67.98 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     9424  be/4  root      484.43 M     54.46 M  0.00 %  0.03 %  python Tautulli.py --datadir /config
     4706  be/4  root        0.00 B      0.00 B  0.00 %  0.03 %  [unraidd4]
     5075  be/4  root       48.00 K     58.54 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5120  be/4  root       20.00 K     81.87 M  0.00 %  0.03 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5123  be/4  root       52.00 K     64.18 M  0.00 %  0.02 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5119  be/4  root      224.00 K     51.89 M  0.00 %  0.02 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5107  be/4  root       12.00 K     60.95 M  0.00 %  0.02 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     5087  be/4  root       48.00 K     55.74 M  0.00 %  0.02 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     7538  be/4  nobody     44.87 G   1104.00 K  0.00 %  0.02 %  transmission-daemon -g /config -c /watch -f
     8870  be/4  nobody    488.26 M      2.27 M  0.00 %  0.02 %  mono --debug /usr/lib/sonarr/NzbDrone.exe -nobrowser -data=/config
     5113  be/4  root        0.00 B     26.85 M  0.00 %  0.01 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     8267  be/4  nobody      3.07 G     20.00 K  0.00 %  0.00 %  Plex Media Server
     8268  be/4  nobody      3.02 G     12.00 K  0.00 %  0.00 %  Plex Media Server
     8869  be/4  nobody      3.51 G     27.51 M  0.00 %  0.00 %  mono --debug /usr/lib/sonarr/NzbDrone.exe -nobrowser -data=/config
     5108  be/4  root        0.00 B      4.16 M  0.00 %  0.00 %  dockerd -p /var/run/dockerd.pid --storage-driver=btrfs --log-level=error
     4865  be/4  root      252.00 K     67.11 M  0.00 %  0.00 %  [btrfs-transacti]
     8952  be/4  nobody     86.22 M      7.18 M  0.00 %  0.00 %  mono --debug /usr/lib/radarr/Radarr.exe -nobrowser -data=/config
    28612  be/4  root       90.55 M      0.00 B  0.00 %  0.00 %  homebridge
    31972  be/4  root       15.64 M   1756.00 K  0.00 %  0.06 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
    32221  be/4  root       11.69 M   1528.00 K  0.00 %  0.06 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
     8868  be/4  nobody     78.38 M   2040.00 K  0.00 %  0.00 %  mono --debug /usr/lib/sonarr/NzbDrone.exe -nobrowser -data=/config
     8953  be/4  nobody     79.08 M   1432.00 K  0.00 %  0.00 %  mono --debug /usr/lib/radarr/Radarr.exe -nobrowser -data=/config
     3728  be/4  root        4.11 M    352.00 K  0.00 %  0.14 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
     5076  be/4  root       76.00 K    252.00 K  0.00 %  0.37 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
     8160  be/4  nobody    298.57 M    624.00 K  0.00 %  0.00 %  Plex Media Server
     9133  be/4  nobody    781.49 M   1476.00 K  0.00 %  0.00 %  mono --debug /usr/lib/lidarr/Lidarr.exe -nobrowser -data=/config
     9134  be/4  nobody    317.68 M   1300.00 K  0.00 %  0.00 %  mono --debug /usr/lib/lidarr/Lidarr.exe -nobrowser -data=/config
     9135  be/4  nobody    212.10 M   1568.00 K  0.00 %  0.00 %  mono --debug /usr/lib/lidarr/Lidarr.exe -nobrowser -data=/config
     5077  be/4  root      236.00 K    140.00 K  0.00 %  0.28 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
    28633  be/4  root       16.68 M      0.00 B  0.00 %  0.00 %  homebridge-config-ui-x
     8954  be/4  nobody     77.48 M   1108.00 K  0.00 %  0.00 %  mono --debug /usr/lib/radarr/Radarr.exe -nobrowser -data=/config
     8638  be/4  nobody      2.34 M     32.00 K  0.00 %  0.00 %  tint2 -c /home/nobody/tint2/theme/tint2rc
     4324  be/4  root        6.19 M      8.28 M  0.00 %  0.12 %  qemu-system-x86_64 -name guest=Windows 10,debug-thread~n=deny,resourcecontrol=deny -msg timestamp=on [worker]
     4931  be/4  root        5.45 M    128.00 K  0.00 %  0.18 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
    28613  be/4  root      428.00 K      0.00 B  0.00 %  0.00 %  tail -f /dev/null
    18758  be/4  root      948.00 K     11.84 M  0.00 %  0.00 %  [kworker/u12:3-btrfs-scrub]
     4922  be/4  root     1608.00 K    104.00 K  0.00 %  0.15 %  shfs /mnt/user -disks 63 2048000000 -o noatime,allow_other -o remember=0
     9423  be/4  root        2.70 M    764.00 K  0.00 %  0.00 %  python Tautulli.py --datadir /config
    27586  be/4  root      110.12 M     68.00 K  0.00 %  0.00 %  python Tautulli.py --datadir /config
     9441  be/4  root        6.93 M    244.00 K  0.00 %  0.00 %  python Tautulli.py --datadir /config
     8114  be/4  root        0.00 B    304.00 K  0.00 %  0.00 %  containerd --config /var/run/docker/containerd/containerd.toml --log-level error
     8415  be/4  root        9.79 M    544.00 K  0.00 %  0.00 %  python /usr/bin/supervisord -c /etc/supervisor.conf -n
    24792  be/4  root      110.03 M     64.00 K  0.00 %  0.00 %  python Tautulli.py --datadir /config

I see you’re using the btrfs storage driver.

The loop2 activity is probably related to this open bug report.

-

What are you trying to accomplish exactly? Docker containers on the default bridge are able to reach each other by IP address.

If you want them to be able to reach each other by name, you have to use a custom (user-defined) network.
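A sketch of that with the standard `docker network create` and `docker network connect` commands; the network and container names are hypothetical, and `DOCKER=echo` turns it into a dry run.

```shell
#!/usr/bin/env bash
# Sketch: put containers on a user-defined bridge network so they can
# resolve each other by container name via Docker's embedded DNS
# (the default bridge only offers IP connectivity).
# Network and container names are hypothetical; DOCKER=echo = dry run.
DOCKER="${DOCKER:-docker}"

create_named_network() {
  local net="$1" c
  shift
  $DOCKER network create "$net" || return 1
  for c in "$@"; do
    $DOCKER network connect "$net" "$c" || return 1
  done
}
```

After `create_named_network appnet nextcloud mariadb`, the nextcloud container could reach the database simply as `mariadb`.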

Now for Pi-hole, I've put mine on the custom: br0 bridge and gave it an IP in my own segment, since this allows me to use port 80 of the host and still have Pi-hole available on that same port. No VLAN was required at all for this.

Note that I do use VLANs, but that's mainly to be able to reach the host from a docker container.

I've created a macvlan network on that VLAN without giving the host an IP in that same VLAN; this allows me to reach the unRAID host on its usual address from containers on the newly created network.
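A sketch of such a network with `docker network create -d macvlan`. The parent interface name (br0.20), subnet, gateway and network name are placeholders for your own VLAN setup, not values from the post; `DOCKER=echo` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: create a macvlan network on a VLAN interface. The parent
# interface name (e.g. br0.20), subnet and gateway are placeholders; the
# host is assumed to have no IP of its own in this VLAN, so traffic from
# these containers to the host's usual address goes via the router.
DOCKER="${DOCKER:-docker}"

create_vlan_macvlan() {
  local name="$1" parent="$2" subnet="$3" gateway="$4"
  $DOCKER network create -d macvlan \
    --subnet="$subnet" --gateway="$gateway" \
    -o parent="$parent" "$name"
}
```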

-

33 minutes ago, dmacias said:

No mistake sorry. Newer versions started requiring too many dependencies. However with any python package that doesn't require compiling, you can install it with all its depends through pip or pip3. See here https://forums.unraid.net/index.php?/topic/35866-unRAID-6-NerdPack---CLI-tools-(iftop,-iotop,-screen,-kbd,-etc.&do=findComment&comment=838361)

Ok understood, I'll give that a go.

Sad that it had to go tho.

Thanks for the alternative way! Appreciate it.

-

Hey Guys,

I was wondering what happened to the docker-compose command?

Rebooted my server after 39 days today and the nerdpack apparently got an update, but this left me without docker-compose.

Running on 6.8.0rc7; I did notice some commits on the GitHub less than 39 days ago.

My docker setup greatly depends on docker-compose, so I'd like to have it back 🙂

Appreciate the effort!

Best regards.

EDIT:

Noticed that it was still listed at "https://github.com/dmacias72/unRAID-NerdPack/tree/master/packages/6.6", but not on

"https://github.com/dmacias72/unRAID-NerdPack/tree/master/packages/6.7" and above.

So I was able to get it for now by putting version 6.6 in the NerdPackHelper script.

I imagine this was a mistake?

Thanks again!

-

On 3/14/2020 at 11:47 AM, sdamaged said:

Old thread but yes this is indeed a problem. My 2 year old Samsung 850 Pro SSD (which was used for cache for only 12 months!) has over 450TB written which effectively means the warranty is now void...

There's a bug report for this now:

-


10 minutes ago, Niklas said:

loop2 is the mounted docker.img, right? I have it pointed to /mnt/cache/system/docker/docker.img

What did you change in your rc.docker?

Yeah, it should indeed.

You can check with:

    df -h /dev/loop2

This will probably show it mounted on /var/lib/docker.

Now the fact that you're moving the docker image between the user share appdata and the mountpoint directly doesn't really help, since docker is still running inside an image file, mounted via a loop device on your cache.

There seems to be a bug with using docker in this kind of setup, although I wasn't able to reproduce this on another linux distro. Might be a Slackware thing.

The only way (I could come up with) to get the writes down is to create a symlink between /mnt/cache/docker (or whatever cache only dir you create) and /var/lib/docker and then start docker.

The start_docker() function I've modified inside the rc.docker script does that and some other things, like checking whether the docker image is already mounted, and if so, unmount it.
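The modified start_docker() itself isn't shown here, so this is only a hypothetical sketch of the symlink idea it describes: release the loop-mounted docker.img from /var/lib/docker if needed, then point /var/lib/docker at a cache-only directory. It would have to run before dockerd starts. `ROOT`, `CACHE_DIR`, `DOCKER_DIR` and the function names are made up, so the logic can be tried in a temporary directory first.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the symlink approach, NOT the actual rc.docker change.
# Must run before dockerd starts. ROOT allows a dry run in a temp directory.
ROOT="${ROOT:-}"
CACHE_DIR="${CACHE_DIR:-$ROOT/mnt/cache/docker}"  # assumed cache-only dir
DOCKER_DIR="${DOCKER_DIR:-$ROOT/var/lib/docker}"

is_mounted() {
  # Field 2 of /proc/mounts is the mount point.
  awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' /proc/mounts 2>/dev/null
}

link_docker_dir() {
  # If docker.img is still loop-mounted on /var/lib/docker, unmount it first.
  if is_mounted "$DOCKER_DIR"; then
    umount "$DOCKER_DIR" || return 1
  fi
  mkdir -p "$CACHE_DIR" "$(dirname "$DOCKER_DIR")" || return 1
  # Replace the (now empty) mount point directory with a symlink;
  # rmdir refuses to delete a non-empty directory, so no data is lost.
  if [ -d "$DOCKER_DIR" ] && [ ! -L "$DOCKER_DIR" ]; then
    rmdir "$DOCKER_DIR" || return 1
  fi
  ln -sfn "$CACHE_DIR" "$DOCKER_DIR"
}
```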

-

13 hours ago, Niklas said:

Running the MariaDB docker pointed to /mnt/cache/appdata/mariadb or /mnt/user/appdata/mariadb (Use cache: Only) generates LOTS of writes to the cache drive(s), between 15-20 GB/h. iotop shows almost all of that writing coming from mariadb. With the databases moved to the array, the writes go down a lot (using "iotop -ao"). I use MariaDB for light use of Nextcloud and Home Assistant, nothing else.

This is iotop for an hour with the mariadb databases on the cache drive, /mnt/cache/appdata or /mnt/user/appdata with cache only:

When /mnt/cache/appdata is used, the shfs processes will show as mysql(d?). Missing screenshot.

This is iotop for about an hour with the databases on the array, /mnt/user/arraydata:

Still a bit much (given my light usage), but nowhere near the writing when on cache.

I don't know if this is a bug in Unraid, btrfs or something else, but I will keep my databases on the array to save some SSD life. I will lose speed, but as I said, this is with very light use of mariadb.

I checked three different ways to enter the path to the location for the databases (/config) and let each sit for an hour, with a freshly started iotop between the different paths. To calculate the data written, I checked and compared the SMART value "233 Lifetime wts to flsh GB" for the SSD(s). Running mirrored drives. I guess other stuff writing to the cache drive, or to a share with cache set to only, will also have unnecessarily high writes.

Sorry for my ramble. I get like that when I'm interested in a specific area.

Not a native English speaker. Please just ask if anything is unclear.

Edit: My docs

On /mnt/cache/appdata/mariadb (direct cache drive)

2020-02-08, 22:02-23:04: 15 (!) GB written.

On /mnt/user/arraydata/mariadb (user share on array only)

2020-02-08, 23:04-00:02: 2 GB written.

On /mnt/user/appdata/mariadb (Use cache: Only)

2020-02-09, 00:02-01:02: 22 GB (!) written.

Just ran this again to really see the difference, and look at it.

On /mnt/user/arraydata/mariadb (array only, spinning rust)

2020-02-09, 01:02-02:02: 4 GB written.
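The measurement described in the quote (compare a SMART "lifetime writes" counter before and after each hour) can be scripted. A sketch, assuming `smartctl -A` output with the raw value in the 10th column and that attribute 233 counts GB as in the quote; other SSDs use other IDs and units (some count LBAs), so check your drive's attribute list first. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the measurement in the quote: read a SMART attribute's raw value
# before and after a time window and subtract. Attribute 233 in GB is taken
# from the quote; other SSDs use other IDs and units.

smart_raw() {
  # Usage: smartctl -A /dev/sdX | smart_raw 233
  # Columns: ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
  awk -v id="$1" '$1 == id { print $10; exit }'
}

written_delta() {
  # Difference between two raw readings, e.g. GB written in one hour.
  echo $(( $2 - $1 ))
}
```

For example: take `before=$(smartctl -A /dev/sdX | smart_raw 233)`, wait an hour, take `after` the same way, then run `written_delta "$before" "$after"`; /dev/sdX stands for your cache SSD.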

You're saying you're moving the mariadb location between the array, the cache location (/mnt/user/appdata) and directly onto the mountpoint (/mnt/cache).

The screenshots seem to point out that the loop device your docker image is using is still the source of a lot of writes though (loop2)?

One way to avoid this behavior would be to have docker mount on the cache directly, bypassing the loop device approach.

I had similar issues and I'm running directly on the BTRFS cache now for quite some time. Still really happy with it.

I wrote about my approach in the bug report I did here:

Note that in order to have it working automatically on boot, I copied the entire /etc/rc.d/rc.docker file to /boot/config/docker-service-mod/rc.docker and modified the start_docker() function in that copy. My go file copies it back over the original rc.docker file, so that when the docker daemon is started, the script sets up docker to use the cache directly.

Haven't got any issues so far 🙂

My /boot/config/go file looks like this now (only the cp command to rc.docker is relevant here; the lines before it are for hardware acceleration in Plex):

    #!/bin/bash
    # Load the i915 driver and set the right permissions on the Quick Sync device so Plex can use it
    modprobe i915
    chmod -R 777 /dev/dri
    # Place the modified docker rc.d script over the original one to make it not use the docker.img
    cp /boot/config/docker-service-mod/rc.docker /etc/rc.d/rc.docker
    # Start the Management Utility
    /usr/local/sbin/emhttp &

Cheers.

-

11 minutes ago, limetech said:

what patch?

Well, if I read your initial post from 01-11-2020 correctly, a security vulnerability was discovered in the forms-based authentication.

If all users are strongly encouraged to patch this flaw, I'd of course really like to deploy it as well.

I wasn't able to find any of the releases that included the kernel 5.4 with it, they all seem to revert me back to a kernel version below 5.

Thanks again!

REF:

On 1/11/2020 at 9:36 AM, limetech said:

This is a bug fix and security update release. Due to a security vulnerability discovered in forms-based authentication:

ALL USERS ARE STRONGLY ENCOURAGED TO UPGRADE

-

Hey Guys,

Is this patch also available for those holding on 6.8rc7?

I want to stay on kernel 5.4, but leaving my host vulnerable is also not that appealing.

Appreciate the help!

Cheers

-

18 hours ago, Mizerka said:

Thanks for flagging this, wasn't aware of it.

No problem! Cheers.

-

12 minutes ago, szymon said:

Thanks @S1dney, I was affected by the cache pool bug and just fixed it! Cheers!

You're welcome!

I think a lot of us are, without knowing it.

If you're not troubleshooting the cache you'll likely not notice this, unless you start wondering why the metrics are way off between disks.

-

Also you might want to run "btrfs balance start -mconvert=raid1 /mnt/cache" against your pool cause your setup isn't that redundant at the moment 🙂

                       Data       Metadata   System
        Id Path        RAID1      single(!)  single(!)  Unallocated
        -- ---------   ---------  ---------  ---------  -----------
         1 /dev/sdt1   250.00GiB  2.01GiB    4.00MiB    213.75GiB
         2 /dev/sdu1   250.00GiB  2.00GiB    -          213.76GiB
        -- ---------   ---------  ---------  ---------  -----------
           Total       250.00GiB  4.01GiB    4.00MiB    427.51GiB
           Used        219.61GiB  1.96GiB    64.00KiB

If one of your drives fails now, you're out of luck.

See:
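A small check for that situation: parse `btrfs filesystem df` output and print every block-group type that is not RAID1. `GlobalReserve` is always reported as `single`, so it is skipped. The sample values in the test mirror the usage table above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list btrfs block-group types that are not RAID1, from the output
# of `btrfs filesystem df <mount>`. GlobalReserve is always "single" and
# is ignored.

non_raid1_profiles() {
  # Input lines look like: "Metadata, single: total=4.01GiB, used=1.96GiB"
  awk -F'[,:]' 'NF > 1 && $1 != "GlobalReserve" && $2 !~ /RAID1/ {
    sub(/^ +/, "", $2)
    print $1 ": " $2
  }'
}
```

Running `btrfs filesystem df /mnt/cache | non_raid1_profiles` before and after the balance shows what is left; empty output means every block-group type except GlobalReserve is RAID1.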

-


MSSQL Docker on UnRaid (posted in Docker Engine)

No, you will indeed do a lot of this from the terminal, as you can't do it from the unRAID DockerMan GUI.

And you can go as deep as you want with unRAID; it doesn't really hide anything from you, which is what I love about it.