Posts posted by JustOverride (180 posts)

-

I don't have the specific details of what it does, other than that it fixes a kernel issue with GPU and other device passthrough. I can only speculate, to be honest. I'm just happy the issue was fixed.

-

Isn't the address something that is set after the firmware update? I kind of don't want to keep trying to remove stuff from it, but at this point I guess I'll try it.

If this doesn't work out, I may try the SAS 9207-8i, as it appears to come pre-flashed to IT mode. However, I would like to know which card is better for Unraid and/or overall: the SAS 9207-8i or the LSI 9211-8i?

-

I have tried using a different keyboard and renaming the flash file to "it.bin", in case the issue was caused by the file name being too long or containing numbers, with no luck. I've also tried 'sas2flash.efi -o -f it.bin' and 'sas2flash.efi -o -b mptsas2.rom'. I still get the syntax error. Any other suggestions?

-

I bought an LSI 9211-8i and I've been trying to flash it using my X79 motherboard, following a few guides like the ones below:

https://nguvu.org/freenas/Convert-LSI-HBA-card-to-IT-mode/

https://nullsec.us/raid-on-home-esxi-lab/

The one that got me the farthest is the last one, as I have to use UEFI. I've gotten all the way to the "fs0:/> sas2flash.efi -o -e 6" command; however, "sas2flash.efi -o -f 2118ir.bin" (I'm not trying to install the .rom) isn't working, even if I use the full "sas2flash.efi -o -f 2118ir.bin -b mptsas2.rom" command. I have my server up and can't turn it off, as that will brick the card. Please advise what I should do here. Another question: which card is better for Unraid and/or overall, the SAS 9207-8i already flashed to IT mode or the LSI 9211-8i?

-

I guess this is for anyone who finds this thread in the future.

I finally FIXED IT

Special thanks to @peter_sm

I followed a bunch of his threads and the information from the Lv1Forums, where I found that adding "pcie_no_flr=1022:149c,1022:1487" would solve this issue. Two of them there suspected that this is related to the X79 platform (which is also what I have).
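The fix above is a single kernel command-line argument whose value is a comma-separated list of PCI `vendor:device` IDs. On Unraid such arguments go on the `append` line of the Syslinux configuration. A typo in an ID is easy to miss, so here is a minimal Python sketch that only validates and joins the IDs. `build_no_flr_param` is a hypothetical helper, not part of Unraid. It does not check whether the running kernel actually recognises `pcie_no_flr`.

```python
import re

# A PCI vendor:device ID: two 4-digit hex numbers joined by a colon, e.g. "1022:149c".
PCI_ID = re.compile(r"[0-9a-f]{4}:[0-9a-f]{4}")


def build_no_flr_param(ids: list[str]) -> str:
    """Validate vendor:device IDs and join them into one 'pcie_no_flr=' argument.

    Hypothetical helper: it only formats the string. It does not check that the
    running kernel understands pcie_no_flr.
    """
    cleaned: list[str] = []
    for raw in ids:
        pci_id = raw.strip().lower()
        if not PCI_ID.fullmatch(pci_id):
            raise ValueError(f"not a vendor:device ID: {raw!r}")
        if pci_id not in cleaned:  # drop duplicates, keep the original order
            cleaned.append(pci_id)
    if not cleaned:
        raise ValueError("at least one ID is required")
    return "pcie_no_flr=" + ",".join(cleaned)


if __name__ == "__main__":
    # The two IDs quoted in the post above
    print(build_no_flr_param(["1022:149c", "1022:1487"]))
    # prints: pcie_no_flr=1022:149c,1022:1487
```

The same validate-and-join approach applies to any other comma-separated ID list on the kernel command line.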

Anyway, I have shut it down, restarted it, and updated it more times than I care to count without issues. I've also left the VM on overnight and played heavy games on it, and there was still no issue. Now that everything is working as it should, I'm back to loving Unraid.

-

1 hour ago, clinkydoodle said:

I just had this same thing, although killing the VM didn't resolve it. I then disabled each docker container in turn to see what it was, and still nothing. Then I finally disabled Docker and enabled it again, and it quietened down. It's the first time it's happened for me though, so it could just be a one-time thing. I did also have a container that got corrupted, and I had to re-install it not long before this behaviour started.

Try this:

-

I'm still experiencing this issue, which is making it impossible for me to even have VMs. I have read around the forum and can't find a solution, as similar problems just don't get replies and slowly disappear. Anyone have any ideas?

At this time, I'm testing whether this is due to the GPU passthrough by using VNC only, as I've tried almost everything else. If this fails, I'm considering running ESXi with Unraid on top solely for the data array.

(Edit) I have been turning it off and on without issues after using VNC only.

-

So it's normal for it to say "ERROR Registers 3 (rev 04)"?

-

IOMMU group 36: [8086:0ea8] ff:0f.0 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 0 Target Address/Thermal Registers (rev 04)

[8086:0e71] ff:0f.1 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 0 RAS Registers (rev 04)

[8086:0eaa] ff:0f.2 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 0 Channel Target Address Decoder Registers (rev 04)

[8086:0eab] ff:0f.3 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 0 Channel Target Address Decoder Registers (rev 04)

[8086:0eac] ff:0f.4 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 0 Channel Target Address Decoder Registers (rev 04)

[8086:0ead] ff:0f.5 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 0 Channel Target Address Decoder Registers (rev 04)

IOMMU group 37: [8086:0eb0] ff:10.0 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 Thermal Control 0 (rev 04)

[8086:0eb1] ff:10.1 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 Thermal Control 1 (rev 04)

[8086:0eb2] ff:10.2 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 ERROR Registers 0 (rev 04)

[8086:0eb3] ff:10.3 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 ERROR Registers 1 (rev 04)

[8086:0eb4] ff:10.4 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 Thermal Control 2 (rev 04)

[8086:0eb5] ff:10.5 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 Thermal Control 3 (rev 04)

[8086:0eb6] ff:10.6 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 ERROR Registers 2 (rev 04)

[8086:0eb7] ff:10.7 System peripheral: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 Integrated Memory Controller 1 Channel 0-3 ERROR Registers 3 (rev 04)
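Every line of the listing above follows the same pattern: an optional `IOMMU group N:` header, the `[vendor:device]` ID, the bus address, and a description. In this listing, "ERROR Registers" is part of the device description (the name of a memory-controller function), not an error message. Below is a minimal Python sketch that parses such a listing into records. It assumes exactly the text format shown above, and `parse_iommu_listing` and `PciDevice` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Optional "IOMMU group N:" header, then "[vvvv:dddd]", then "bus:dev.fn", then the description.
LINE = re.compile(
    r"(?:IOMMU group (?P<group>\d+):\s*)?"
    r"\[(?P<ids>[0-9a-fA-F]{4}:[0-9a-fA-F]{4})\]\s+"
    r"(?P<addr>[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s+"
    r"(?P<desc>.+)"
)


@dataclass
class PciDevice:
    group: int
    ids: str          # vendor:device, e.g. "8086:0eb7"
    address: str      # bus:device.function, e.g. "ff:10.7"
    description: str  # text as printed in the listing


def parse_iommu_listing(text: str) -> list[PciDevice]:
    """Turn a listing in the format above into PciDevice records (hypothetical helper)."""
    devices: list[PciDevice] = []
    group: Optional[int] = None
    for raw in text.splitlines():
        m = LINE.fullmatch(raw.strip())
        if m is None:
            continue  # blank or unrelated line
        if m.group("group") is not None:
            group = int(m.group("group"))  # a new group starts here
        if group is None:
            continue  # device line before any group header: group unknown
        devices.append(
            PciDevice(group, m.group("ids").lower(), m.group("addr").lower(), m.group("desc"))
        )
    return devices
```

For example, filtering the result with `"ERROR Registers" in d.description` picks out the four ERROR Registers functions of group 37 (ff:10.2, ff:10.3, ff:10.6 and ff:10.7).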

-

I'm a bit new, but I did look over your diagnostics file and couldn't figure out what is causing your issue. I would suggest turning your VMs/Dockers off one at a time until you can pinpoint the root cause a bit better. Hope someone can help you a little more.

-

Update:

[FIX to VM crashing Unraid] Unraid was sometimes crashing when you reset/shut down a VM, due to the short 'disconnect' timer in Unraid.

Go to Settings->VM Manager->VM shutdown time-out, and set it to 300 (5 minutes).

Go to Settings->Disk Settings->Shutdown time-out, and change the time-out to 420 (7 minutes).

I'm not sure why the time-out would outright crash Unraid; something must be wrong. If you're interested in why this happens and want the full details, please read the post below:

(Edit) Update 4/24/2020 - 12:20am - While they were shutting down without issue, today the whole server crashed...

There appears to have been some corruption... looks bad... I can't even see my shares...

Contemplating setting the server on fire and putting it outside at this point.

-

I have been on the forums posting about an issue with 100% CPU usage, and I have seen other people with THAT issue. However, yours is different. I had an issue like yours. It turned out to be a Docker container or a VM with an incorrect path, which kept throwing an error into the log until it eventually filled up.

When does this start?

-

Update: So far, this is what I've found:

I actually have two separate issues: the VM crashing Unraid, and the core(s) going to 100%. I thought it was one.

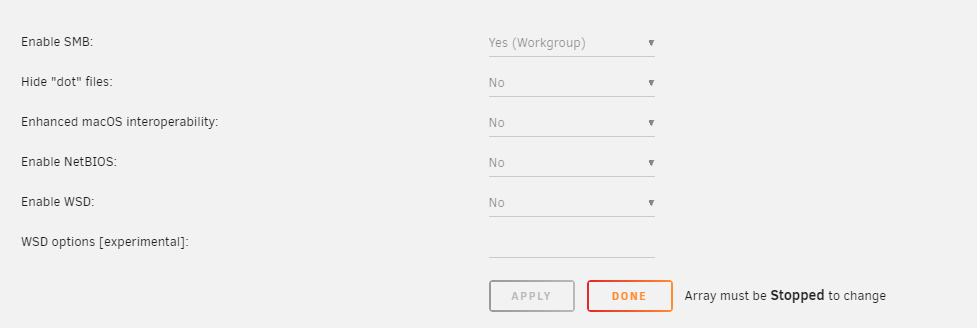

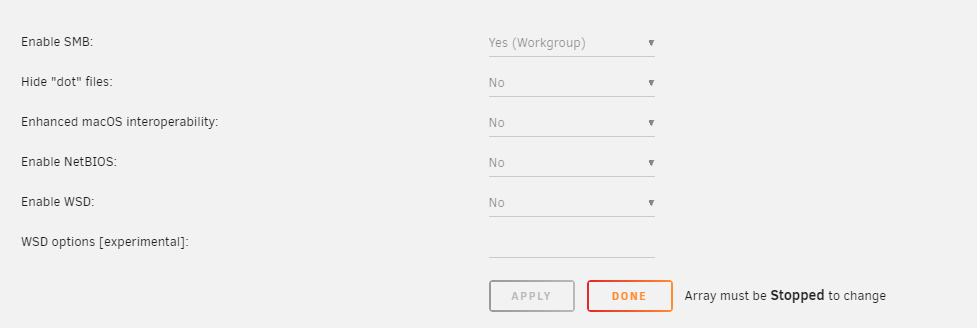

The core(s) going to 100% appear to be caused by WSD, so I've disabled it, which fixed this issue. Before you ask: yes, the server still appears under Network in Windows... after you've browsed to it directly, and it disappears once you close File Explorer. But who cares? You can still access it, and if you have it mapped, it's still there and still works; it just isn't discovered automatically. Yes, it's not great, as we only got this feature recently (it wasn't quite working on previous Unraid versions unless you enabled SMB1 or something).

WSD can be disabled under Settings -> SMB.

I'm now looking at the issue of the VM crashing unraid upon shutting down. I found an old post here on the forums about a few things to try which is what I'm testing, so I will update on that soon.

-

@bastl That ISO was from a totally legitimate website. 😁 I was having the issue with both my W10 and Windows Server 2019 VMs, which use different ISOs (obviously) acquired from different places. I think I may have fixed this issue. I just want to run it for 24-48 hrs before posting my findings and how I resolved it.

-

I would like to add that I would actually like to see a schedule section, so that you can schedule Unraid/Dockers/VMs to restart/start/stop.

-

Oh, that is something I missed. I'm going to try changing to the Q35 machine type and see if it resolves an issue I'm having as well.

-

I'm having a similar issue. Now that you mention it, the issue with the cores does appear to happen when the VM is being pegged at 100% by some task. Here is what I'm experiencing.

Looking forward to this being looked into as well.

-

Right after starting a VM (a game-oriented VM with GPU passthrough), one of my cores goes to 100% usage. It stays like that even if I shut down the VM. Sometimes starting the VM is fine, but then shutting it down causes the whole Unraid server to crash.

I noticed I can temporarily "fix" the issue of one or more cores going to 100% by going to Shares and editing ANY share at all, even if it's just the share's comment section. Basically, as long as I can hit 'Apply' when editing a share, it 'resolves' the issue and the CPU core(s) go back to normal. Sometimes more cores do this, eventually leading to Unraid crashing and needing a forced shutdown. All dockers were turned off. The VM works fine, by the way. This doesn't appear to happen with the second VM, which doesn't have a passed-through GPU.

It may be connected to something that gets 'restarted/cleared' upon editing one of the shares. I've looked around and the cases I've found were not resolved.

These are my current diagnostics after removing the USB PCIe card and a sound card I wasn't using. Currently, one of the cores is stuck at 100%. This happened after I started the VM. I shut it down, and it is still at 100%.

The server is currently running a parity check from the last server crash.
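To confirm from the console which core is stuck at 100%, you can sample the per-core counters in `/proc/stat` twice and compare them. Below is a minimal Python sketch of that approach. It assumes a Linux host with the standard `/proc/stat` layout (counter order as documented in proc(5)). The helper names and the 99% threshold are hypothetical choices. It only detects the symptom; it says nothing about the cause.

```python
# Minimal sketch: detect CPU cores that stayed (near) 100% busy between two
# samples of /proc/stat. Assumes a Linux host; helper names are hypothetical.


def read_proc_stat(path: str = "/proc/stat") -> dict[str, list[int]]:
    """Return the first eight time counters of every per-core 'cpuN' line."""
    counters: dict[str, list[int]] = {}
    with open(path) as f:
        for line in f:
            fields = line.split()
            # Per-core lines are cpu0, cpu1, ...; the aggregate line is plain "cpu".
            if fields and fields[0].startswith("cpu") and fields[0] != "cpu":
                # user nice system idle iowait irq softirq steal
                # (guest and guest_nice are already counted in user and nice)
                counters[fields[0]] = [int(x) for x in fields[1:9]]
    return counters


def busy_percent(before: list[int], after: list[int]) -> float:
    """Percentage of non-idle time for one core between two samples."""
    deltas = [a - b for a, b in zip(after, before)]
    total = sum(deltas)
    if total <= 0:
        return 0.0
    idle = deltas[3] + deltas[4]  # idle + iowait
    return 100.0 * (total - idle) / total


def stuck_cores(
    before: dict[str, list[int]],
    after: dict[str, list[int]],
    threshold: float = 99.0,  # hypothetical cut-off for "stuck at 100%"
) -> list[str]:
    """Names of cores whose busy share reached the threshold, in numeric order."""
    hot = [
        cpu
        for cpu in after
        if cpu in before and busy_percent(before[cpu], after[cpu]) >= threshold
    ]
    return sorted(hot, key=lambda name: int(name[3:]))
```

Usage: call `first = read_proc_stat()`, wait a few seconds, call `second = read_proc_stat()`, then `stuck_cores(first, second)` lists the cores that were busy for practically the whole interval.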

-

I disconnected the USB controller PCIe card and the sound card (which wasn't being used), and I'm still experiencing disconnects and crashes on Unraid when the VM is shut down. There's also this error in the VM logs:

-blockdev '{"driver":"file","filename":"/mnt/disks/Windows_10/Windows 10/vdisk1.img","node-name":"libvirt-1-storage","cache":{"direct":false,"no-flush":false},"auto-read-only":true,"discard":"unmap"}' \
-blockdev '{"node-name":"libvirt-1-format","read-only":false,"cache":{"direct":false,"no-flush":false},"driver":"raw","file":"libvirt-1-storage"}' \

-

I forgot to add: Pi-hole was also installed but had crashed and burned to the point that I had to remove it. Not sure if this was causing the issue or part of it.

I'm also experiencing Unraid crashing when I shut down the VM with the graphics card, same as described here:

I appear to be having the same issue as described below, which sadly does not appear to have ever been solved.

-

12 minutes ago, trurl said:

Your keys are in the config folder on the flash drive. You should only have the pro key.

Your system share, where your docker image and libvirt image are stored, has files on disk1 instead of all on cache, so your dockers and VMs could keep parity and disk1 spun up since they will be using those files.

Thanks! I had the Trial, Basic, and Pro keys. I saved all 3 keys just in case, but deleted the Trial and Basic ones. That error is now gone!

8 minutes ago, Squid said:

If it's not in IT mode, cross flash it to be such. Better results.

Yup yup, I read that somewhere and also in Amazon's comments. I'll be sure to check the mode it's in. Thanks for mentioning it!

-

I'm running into a few problems and I'm not sure how to resolve them. Issues below can be easily replicated after a server restart.

1 ) 'Multiple registration keys found' - This appeared after I upgraded to Unraid Pro from basic. Not sure where to address this issue.

2 ) Right after starting one of my VMs (a game-oriented VM with GPU passthrough), one of my hyperthreads (always the same one) goes to 100%. It stays like that even if I shut down the VM. I noticed I can "fix" this issue temporarily by going to Shares and editing ANY share at all, even if just the share's comment section. Basically, as long as I can hit 'Apply' when editing a share, it 'resolves' the issue and the CPU core goes back to normal. Sometimes more cores do this, eventually leading to Unraid crashing and needing a forced shutdown via the power button on the computer. All dockers were turned off. The VM works fine, by the way. This doesn't appear to be the case with the second VM.

VMs are running from an Unassigned Device: an SSD with 3 partitions, 1 per VM (only 2 VMs actually made).

3 ) Just a few random questions.

3A ) Is the parity hard drive always supposed to be active, even when the rest are spun down due to no use?

My disk 1 does not spin down if a VM is on, which is odd since the VM is not using it. Disk 2 spins down without issue, VMs on or not.

3B ) I will be adding more hard drives, and I was wondering: if I use 2 hard drives for my Steam library, can more than 1 computer use the Steam folder to play a game without issues? Can 2 or more play the SAME game from this Steam folder/share?

4 ) Random info of stuff I've just purchased and will be installed in the next week; thoughts?

SAS9211-8I 8PORT Int 6GB Sata+SAS Pcie 2.0 - for adding more hard drives.

Intel EXPI9404PTLBLK-1PK PRO/1000PT 4PORT - OEM SINGLE 10/100/1000 GBE PCIE LP QUAD NIC - To install pfSense and run it from unraid, and sell my router.

HP 530SFP+ 10Gb Dual Port PCIe Ethernet Adapter Card 656244-001 w/ SFP - For fast connections from Unraid to my main computer.

Unraid information below.

Syslinux configuration: I already added the IDs for the video and audio of both GPUs in my system (one is not being used, as it is needed for Unraid). I also added the ID of the USB controller card I installed.

Please advise, thanks!

-

Just wanted to share I have upgraded to the Pro version from Basic.

I can't recommend Unraid enough. Keep up the great work, guys!

-

I would really like to see L2ARC implemented.

-

wsdd 100% using 1 core (in General Support):

Yeah, I just disabled WSDD.