gray squirrel

Posts: 61

Posts posted by gray squirrel

-

Try passing only one of the USB controllers and nothing else: no GPU, no sound, etc.

Use VNC to run the test, then add one device at a time.

Out of curiosity, why do you need the Wi-Fi and the audio? I have just noticed that the audio device is not reset-capable.
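If you want to check reset support from the Unraid console rather than the GUI, here is a minimal Python sketch. It assumes a standard Linux sysfs layout: the kernel only creates a device's `reset` file when it knows at least one way to reset that function, and newer kernels (5.15 onwards, as far as I know) also list the methods in `reset_method`. `reset_info` is a hypothetical helper name, and the address you pass is whatever your device shows in System Devices.

```python
import os

def reset_info(pci_addr, sysfs_root="/sys/bus/pci/devices"):
    """Report whether the host kernel can reset a PCI function.

    Returns (can_reset, methods). 'reset' only exists when the kernel has
    at least one reset method; 'reset_method' (newer kernels) names them.
    """
    dev = os.path.join(sysfs_root, pci_addr)
    can_reset = os.path.exists(os.path.join(dev, "reset"))
    methods = []
    method_file = os.path.join(dev, "reset_method")
    if os.path.exists(method_file):
        with open(method_file) as f:
            methods = f.read().split()   # e.g. ["flr", "bus"]
    return can_reset, methods
```

A result of `(False, [])` for the audio function would line up with what the GUI is showing.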

-

Have you got ACS overrides enabled? Was that device in another IOMMU group?

You might have luck removing the overrides and passing the non-essential instrumentation part as well.

Also, did you try “VFIO allow unsafe interrupts” in the VM Manager settings?

-

Also, are you able to boot your VM's OS as a native (bare-metal) machine? It might be worth checking that you don't get the same behaviour in a non-virtualized environment.

Edit: just re-read your OP. Did you benchmark on the same hardware?

-

So I'm still a little suspicious of the AMD controller. Can you try unbinding it and retesting?

I notice that in your template you have the USB emulation set to 3.0 (qemu XHCI). This is different from the default, which is 2.0 (EHCI).

With a SATA SSD in a USB caddy this made no difference to the speed for me. I don't know, but it might be something to test.

I don't have an NVMe in an external drive to try and replicate, unfortunately.

Are you seeing anything in the Unraid or VM logs? Maybe try enabling “allow unsafe interrupts”.

-

Yes this is completely possible.

You will need to provide a vBIOS if you're using it as the primary GPU.

I recommend dumping your own vBIOS using this method:

But there is a newer method (this didn't work for me).

So here is what I would do. Put your 1080 in the second slot and ensure Unraid grabs the other GPU.

Dump your vBIOS (for the 1080).

Build a Windows 10 VM using VNC.

(Old guide, but it works.)

Check everything works as required.

Now add your GPU without a vBIOS and reboot the VM to check passthrough works with the 1080 as the secondary.

Now add your vBIOS to check that works.

Move it to your primary slot (note your VFIO config might change), rebuild the VM template from scratch (pointing at the old vdisk), and start your VM.
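For reference, the command-line dump boils down to toggling the card's `rom` file in sysfs while it is the secondary GPU. Below is a minimal Python sketch of the same steps, assuming you run it as root on the host and pass the 1080's PCI address; `dump_vbios`, `looks_like_option_rom` and the output path are hypothetical names of my own. The 0x55 0xAA check only catches an empty or garbage dump; it does not prove the ROM will work in the VM.

```python
import os

def dump_vbios(pci_addr, out_path, sysfs_root="/sys/bus/pci/devices"):
    """Copy a GPU's ROM out of sysfs (run as root on the host).

    Same as: echo 1 > rom; cat rom > out_path; echo 0 > rom
    """
    rom = os.path.join(sysfs_root, pci_addr, "rom")
    with open(rom, "w") as f:
        f.write("1\n")        # make the ROM readable
    try:
        with open(rom, "rb") as f:
            data = f.read()
    finally:
        with open(rom, "w") as f:
            f.write("0\n")    # the kernel only disables on exactly "0\n"
    with open(out_path, "wb") as f:
        f.write(data)
    return data

def looks_like_option_rom(data):
    """PCI option ROM images begin with the signature bytes 0x55 0xAA."""
    return data[:2] == b"\x55\xaa"
```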

-

11 minutes ago, SimonF said:

You can define port(s) in my plugin to hotplug into a VM without passing the complete controller.

That's a cool project, as I struggle to pass my AMD USB controllers on my 3900x, so I have a lot of unused ports. Can you post a link? However, the quickest way to fix this issue is just to start again with a new VM template so nothing old is in there.

Edit: is it this:

If so, I will take a look.

-

So I think that is the USB controller on the CPU. If I remember correctly, it sometimes struggles with passthrough. On my systems it locks the server up if I try to use it.

I notice you have another USB controller isolated. As a troubleshooting step, can you try testing on that one and seeing if you get the same results?

Edit: can you post your complete VFIO settings?

-

Are you passing the drives or a whole USB controller? Can you show the rest of your template, please?

-

On 8/11/2021 at 2:14 PM, ghost82 said:

Use ACS override set to "both" and see if they split.

If they split, you can pass one through to the VM.

If they don't split, you can't do anything.

Sometimes in the motherboard BIOS there are multiple settings for IOMMU. Mine had Auto, Disabled and Enabled, and Auto and Enabled were different. An old server board I was using for my first server had even more settings.

Mix and match to see if you get the results you want.
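To see whether the override (or a different IOMMU BIOS setting) actually split things, you can list the groups before and after the change. Below is a minimal Python sketch, assuming the standard `/sys/kernel/iommu_groups/<n>/devices/` layout; the helper names are hypothetical. Anything returned by `group_mates` has to go to the VM together with the device, or be left unused by the host.

```python
import os

def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses inside it."""
    groups = {}
    for name in os.listdir(root):
        devices = os.path.join(root, name, "devices")
        if os.path.isdir(devices):
            groups[int(name)] = sorted(os.listdir(devices))
    return dict(sorted(groups.items()))

def group_mates(pci_addr, groups):
    """Other devices sharing pci_addr's group; an empty list means it is alone."""
    for members in groups.values():
        if pci_addr in members:
            return [d for d in members if d != pci_addr]
    raise KeyError(f"{pci_addr} is not in any IOMMU group")
```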

-

What is your system config?

Is it the only GPU in the system? Have you dumped your own vBIOS?

-

You need to load the VirtIO driver during the install, otherwise the installer can't see the vdisk.

Follow this guide and watch it all, but I guess you are at the 12:20 stage.

-

Just rebuild the template from scratch, pointing it at the original vdisk.

You can edit out the old ID and put the new one in using the XML editor, but then you can't use the template editor.

Rebuilding will be much quicker than fiddling with it.

Edit: it takes me about a minute to fill out a new VM template now if I just need to change something for testing. I then add my custom XML edits later, when I'm happy with the config.

Also, it is much better to pass a whole USB controller (even an add-in one), as this gives you simple hot-plug support: the whole controller belongs to the VM.
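If you are hunting for a controller to pass whole, PCI class `0x0c03` marks USB controllers and the last byte of the class tells you the type. Below is a minimal Python sketch, assuming the standard `/sys/bus/pci/devices` layout (each device has a `class` file, plus an `iommu_group` link when IOMMU is on); `usb_controllers` is a hypothetical helper name. A controller that sits alone in its IOMMU group is the easy one to pass through.

```python
import os

# Programming-interface byte of PCI class 0x0c03 (USB controller).
USB_TYPES = {0x00: "UHCI", 0x10: "OHCI", 0x20: "EHCI", 0x30: "xHCI"}

def usb_controllers(sysfs_root="/sys/bus/pci/devices"):
    """List (PCI address, controller type, IOMMU group) for each USB controller."""
    found = []
    for addr in sorted(os.listdir(sysfs_root)):
        dev = os.path.join(sysfs_root, addr)
        with open(os.path.join(dev, "class")) as f:
            pci_class = int(f.read().strip(), 16)   # e.g. 0x0c0330
        if pci_class >> 8 != 0x0C03:                # base class 0x0c, subclass 0x03
            continue
        kind = USB_TYPES.get(pci_class & 0xFF, "other")
        link = os.path.join(dev, "iommu_group")
        group = os.path.basename(os.path.realpath(link)) if os.path.exists(link) else None
        found.append((addr, kind, group))
    return found
```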

-

Again, good that you found a solution, but I would advise watching some of SpaceInvader One's videos on the subject. It is super easy when you know the process.

I would personally recommend running the VM's GPU as a secondary video device first to check it works. Then dump your own vBIOS using the command-line method. This has proven so much more reliable for me.

A few of Ed's videos on the topic. Some are a little outdated now, but still relevant:

Dumping your own vBIOS

Newer method (didn't work for my RTX 3080)

Advanced GPU passthrough

VFIO config and binding (now built into Unraid)

-

I would always recommend dumping your own vBIOS via the command line over editing one.

-

Disabling SMT is a bad idea: very poor multi-core performance, with no improvement to single-core or gaming performance.

Unraid also doesn't like this, with very high utilization across the non-isolated cores, even with the Docker service off.

I have noticed that assigning more emulator pins does provide about a 15% uplift in CSGO.

However, GPU usage is still very low in COD and F1 2019 (in the 50-70% range), but no CPU core goes over 60% utilization.

-

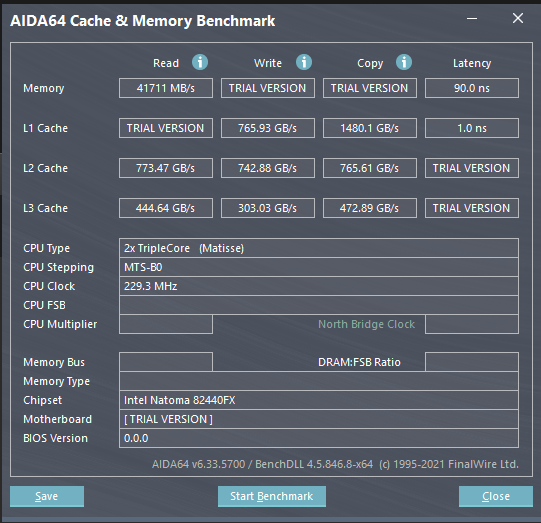

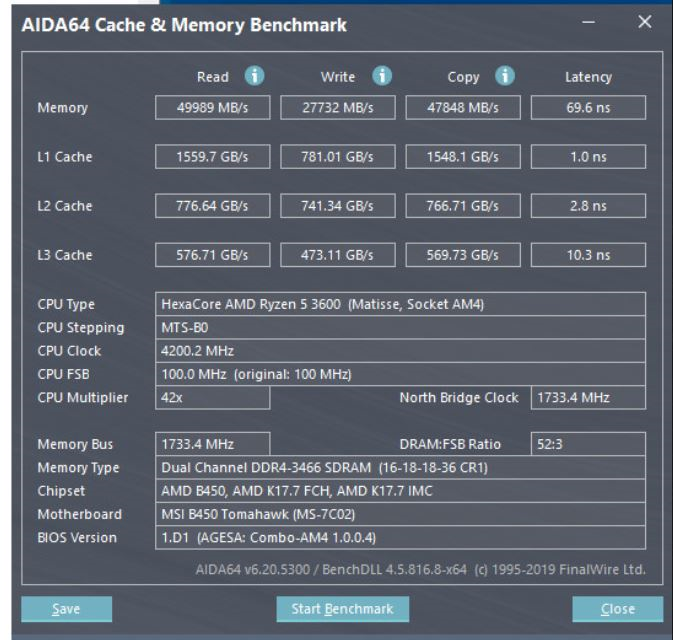

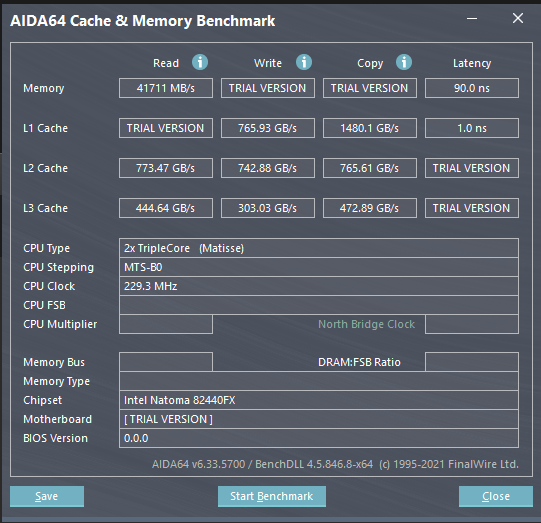

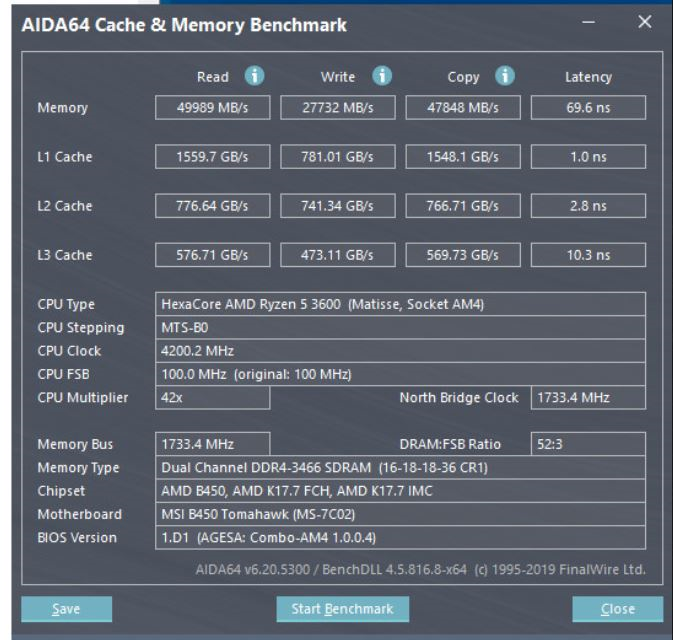

So I have taken a look at cache and memory performance within the VM and compared it against results I have found online for a 3600x (effectively what I have passed through to the VM).

Results in VM:

Example 3600x (note: memory is running at 3466; I'm using 3200 RAM).

Future work:

Disable SMT to see if that makes a difference.

Benchmark one die to create a close representation of my VM.

-

I have a 3080 and the only thing that worked was dumping my own V-BIOS. I found the old method using a temporary GPU the most reliable.

But this is the newer version (this didn't work for me).

Edit: don't put the loopback dongle in until you get it working with the monitor, as it might be grabbing that as the primary monitor and be set to display on it only. I have one, and if I need to reconfigure Unraid or my VM there is a risk it grabs it first.

-

I don't know much about passing AMD cards, and I believe there is a reset bug that needs to be worked around.

However, I would strongly advise installing Windows using VNC graphics first to ensure the VM works and is configured correctly.

Info on the reset bug

-

Install windows using VNC graphics first.

Follow this guide.

-

Dump your own V-BIOS. I never had any luck with hex editing one.

This is the method I use.

However, this is a newer method.

I would check that you can pass the GPU to a VM as a secondary card first, as this is easier because you don't need a V-BIOS file. Can you RDP into the VM (assuming Windows)? Is the GPU detected in Device Manager?

-

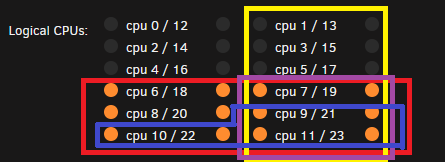

So after reading some more troubleshooting threads, I have also added the following options.

Added iothreads:

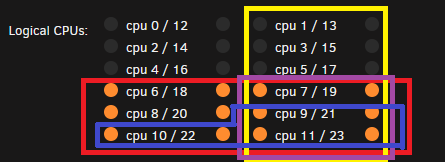

```xml
<vcpu placement='static'>12</vcpu>
<iothreads>2</iothreads>
<cputune>
  <vcpupin vcpu='0' cpuset='6'/>
  <vcpupin vcpu='1' cpuset='18'/>
  <vcpupin vcpu='2' cpuset='7'/>
  <vcpupin vcpu='3' cpuset='19'/>
  <vcpupin vcpu='4' cpuset='8'/>
  <vcpupin vcpu='5' cpuset='20'/>
  <vcpupin vcpu='6' cpuset='9'/>
  <vcpupin vcpu='7' cpuset='21'/>
  <vcpupin vcpu='8' cpuset='10'/>
  <vcpupin vcpu='9' cpuset='22'/>
  <vcpupin vcpu='10' cpuset='11'/>
  <vcpupin vcpu='11' cpuset='23'/>
  <emulatorpin cpuset='0,12'/>
  <iothreadpin iothread='2' cpuset='1,13'/>
</cputune>
```
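The pinning above pairs each physical core with its SMT sibling (6/18, 7/19 and so on up to 11/23). Below is a minimal Python sketch that generates the same `<cputune>` pins, assuming siblings are numbered N and N + 12 as on this 3900x. Check `/sys/devices/system/cpu/cpuN/topology/thread_siblings_list` on your own host before trusting that offset. `cputune_xml` is a hypothetical helper, and it leaves out the iothread lines.

```python
def cputune_xml(cores, sibling_offset=12, emulator_cpuset="0,12"):
    """Build a <cputune> block pinning vCPU pairs to a core and its SMT sibling."""
    lines = ["<cputune>"]
    vcpu = 0
    for core in cores:
        # Even vCPU -> physical core, odd vCPU -> its assumed SMT sibling.
        for host_cpu in (core, core + sibling_offset):
            lines.append(f"  <vcpupin vcpu='{vcpu}' cpuset='{host_cpu}'/>")
            vcpu += 1
    lines.append(f"  <emulatorpin cpuset='{emulator_cpuset}'/>")
    lines.append("</cputune>")
    return "\n".join(lines)
```

`print(cputune_xml(range(6, 12)))` reproduces the twelve `<vcpupin>` lines and the `emulatorpin` shown above.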

Changed the topology to 2 dies to represent the 2 CCXs I have passed through to the OS.

```xml
<cpu mode='host-passthrough' check='none' migratable='on'>
  <topology sockets='1' dies='2' cores='3' threads='2'/>
  <cache mode='passthrough'/>
  <feature policy='require' name='topoext'/>
</cpu>
```
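A quick sanity check for the topology change: sockets × dies × cores × threads is the number of vCPUs the guest sees, so it should equal the `<vcpu>` count (1 × 2 × 3 × 2 = 12 here). Below is a minimal Python sketch using only the standard library's ElementTree. `check_topology` is a hypothetical helper, and the fragment is trimmed to the two elements it reads.

```python
import xml.etree.ElementTree as ET

def check_topology(domain_xml):
    """Return (<vcpu> count, sockets*dies*cores*threads) from libvirt domain XML."""
    root = ET.fromstring(domain_xml)
    vcpus = int(root.findtext("vcpu"))
    topo = root.find("cpu/topology").attrib
    product = 1
    for key in ("sockets", "dies", "cores", "threads"):
        product *= int(topo.get(key, "1"))   # a missing attribute counts as 1
    return vcpus, product

fragment = """<domain>
  <vcpu placement='static'>12</vcpu>
  <cpu mode='host-passthrough'>
    <topology sockets='1' dies='2' cores='3' threads='2'/>
  </cpu>
</domain>"""

print(check_topology(fragment))  # (12, 12)
```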

No impact on single-core performance.

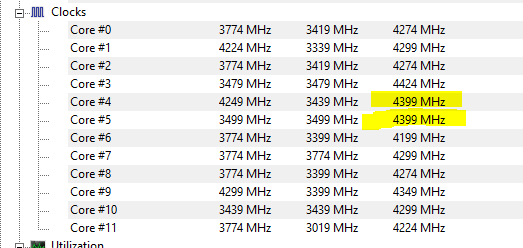

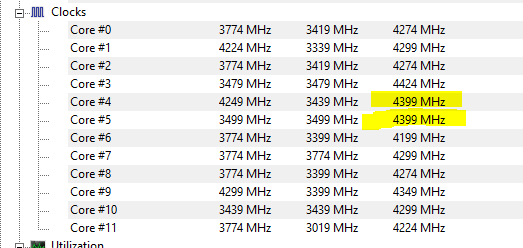

I have noticed that my CPU doesn't boost past 4.2GHz, even in the single-core R15 test. I need to check if it's the same on my BM (bare-metal) install. However, I thought a 3900x was good for 4.6GHz single-core. It would be useful if anybody else with a 3900x could run the same test and report back the frequency. My cooling is more than adequate for this chip.

I get the same results with the performance and on-demand governors, and boosting is enabled.

```
root@Megatron:~# grep MHz /proc/cpuinfo
cpu MHz : 3631.139
cpu MHz : 2954.867
cpu MHz : 2688.229
cpu MHz : 2471.153
cpu MHz : 2918.217
cpu MHz : 2566.181
cpu MHz : 2799.979
cpu MHz : 1866.616
cpu MHz : 2176.914
cpu MHz : 2524.751
cpu MHz : 2484.969
cpu MHz : 4196.191
cpu MHz : 3308.898
cpu MHz : 2822.740
cpu MHz : 2706.690
cpu MHz : 2804.207
cpu MHz : 2732.578
cpu MHz : 2215.498
cpu MHz : 2799.974
cpu MHz : 1866.641
cpu MHz : 2264.219
cpu MHz : 2163.178
cpu MHz : 2569.725
cpu MHz : 4216.841
```

Edit: a quick BM boot into Windows shows cores at 4.4GHz.
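To compare host clocks more easily than eyeballing the grep output, here is a minimal Python sketch that pulls the `cpu MHz` readings out of `/proc/cpuinfo` text and summarises them. It assumes the usual `cpu MHz : <value>` line format. The readings are only an instantaneous snapshot, so sample while the single-core test is running. The helper names are hypothetical.

```python
import re

def core_mhz(cpuinfo_text):
    """Return the 'cpu MHz' readings from /proc/cpuinfo text, in CPU order."""
    return [float(v) for v in
            re.findall(r"^cpu MHz\s*:\s*([\d.]+)", cpuinfo_text, re.MULTILINE)]

def summarize(readings):
    """Peak, lowest and mean clock across all logical CPUs."""
    return {"max": max(readings), "min": min(readings),
            "mean": round(sum(readings) / len(readings), 1)}
```

On the host: `with open("/proc/cpuinfo") as f: print(summarize(core_mhz(f.read())))`.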

-

So, a bit of history.

I was planning to move house in the middle of last year and consolidated my Unraid server and my gaming rig into one box. I was shocked that the performance was almost identical at the time, so I stuck with it when I moved, as the convenience was much better and I was getting more out of my PC. This was with a 3900x and a GTX 1080.

Fast-forward to now: I have an RTX 3080 FE in my box, and it looks like I'm starting to lose an unacceptable amount of performance to virtualization overhead. This is most noticeable in CPU-bound games, where the GPU now sits at 60% utilization and I have no performance uplift over my GTX 1080.

Full system spec:

X470 Gaming Plus Max

3900x - 6 cores (one die) isolated for VM use

32GB 3200MHz RAM

6 array drives

1TB Sabrent NVMe - stubbed to VM

I have tried a lot of different configs.

Most of them have come from this thread:

CPU Scaling Governor: On Demand vs Performance = no noticeable difference

Isolating first 6 cores vs last 6 cores = marginally better performance on the last 6

Assigned different combinations of cores - same CCX vs cross-CCX, including a 3C/6T system

I have pinned the emulator off and on the isolated threads, and now leave it on 0/12.

I have benchmarked i440fx vs Q35 with little to no difference.

I am still seeing a significant drop in single-core performance, but no core is overutilized in game.

My benchmark results (each run 3 times; BM = bare metal, VM = virtual machine, percentages give the VM result relative to BM):

Cinebench

R20 Multi - BM = 6912.3 VM = 3393.0 (49.09%)

R20 Single - BM = 502.3 VM = 461.0 (91.77%)

R15 Multi - BM = 254.4 VM = 1484.7 (48.6%)

R15 Single - BM = 201.7 VM = 184.0 (91.24%)

3DMark - Fire Strike Extreme

Score - BM = 17375.3 VM = 16468.0 (94.78%)

Graphics - BM = 18300.7 VM = 18574.3 (101.50%)

Physics - BM = 19214.0 VM = 17701.7 (92.13%)

Combined - BM = 9005.7 VM = 8430.3 (93.61%)

3DMark - Time Spy

Score - BM = 14876.0 VM = 13238 (88.99%)

Graphics - BM = 16085.7 VM = 16495 (102.54%)

CPU - BM = 10432.0 VM = 6248 (59.89%)

Mankind Divided - Ultra preset 1440p

Average BM = 74.3 VM = 72.8 (97.9%)

RDR2 - All ultra 1440p

Average BM = 72.7 VM = 67.5 (92.8%)

Civ 6

Graphics ultra

Av FPS BM = 197.3 VM = 136.0 (68.96%)

Turn time

BM = 6.64 VM = 7.14 (93%)

F1 2019

Av FPS BM = 170.7 VM = 127.0 (74.41%) - note low GPU usage

CSGO

Av FPS Benchmark Map BM = 402.1 VM = 306.7 (76.3%) - note low GPU usage

Control

Average framerate BM = 82.2 VM = 75.8 (92.21%) - max GPU usage

Call of Duty: Warzone

Not benchmarked, but I can happily run the game at 140-160 FPS on BM, versus around 100 FPS in the VM.
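The percentages above are the VM result as a share of the BM result, except for Civ 6 turn time, where lower is better and the ratio flips. Below is a minimal Python sketch of that arithmetic; `vm_vs_bm` is a hypothetical helper name.

```python
def vm_vs_bm(bm, vm, higher_is_better=True):
    """VM result as a percentage of the bare-metal (BM) result.

    For lower-is-better metrics such as turn time the ratio is inverted,
    so 100% still means "as fast as bare metal".
    """
    ratio = vm / bm if higher_is_better else bm / vm
    return round(100 * ratio, 2)
```

For example, `vm_vs_bm(6912.3, 3393.0)` gives the 49.09 shown for R20 Multi, and `vm_vs_bm(6.64, 7.14, higher_is_better=False)` gives 93.0 for turn time.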

My KVM config:

```xml
<?xml version='1.0' encoding='UTF-8'?>
<domain type='kvm' id='4'>
  <name>XXXXXXXXX</name>
  <uuid>XXXXXXXXX</uuid>
  <description>Dual Boot WIN 10 VM</description>
  <metadata>
    <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
  </metadata>
  <memory unit='KiB'>16777216</memory>
  <currentMemory unit='KiB'>16777216</currentMemory>
  <memoryBacking>
    <nosharepages/>
  </memoryBacking>
  <vcpu placement='static'>12</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='6'/>
    <vcpupin vcpu='1' cpuset='18'/>
    <vcpupin vcpu='2' cpuset='7'/>
    <vcpupin vcpu='3' cpuset='19'/>
    <vcpupin vcpu='4' cpuset='8'/>
    <vcpupin vcpu='5' cpuset='20'/>
    <vcpupin vcpu='6' cpuset='9'/>
    <vcpupin vcpu='7' cpuset='21'/>
    <vcpupin vcpu='8' cpuset='10'/>
    <vcpupin vcpu='9' cpuset='22'/>
    <vcpupin vcpu='10' cpuset='11'/>
    <vcpupin vcpu='11' cpuset='23'/>
    <emulatorpin cpuset='0,12'/>
  </cputune>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type arch='x86_64' machine='pc-i440fx-5.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
    <nvram>/etc/libvirt/qemu/nvram/5f45766e-314f-6714-c52b-7b7a581ab713_VARS-pure-efi.fd</nvram>
    <boot dev='hd'/>
  </os>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state='on'/>
      <vapic state='on'/>
      <spinlocks state='on' retries='8191'/>
      <vendor_id state='on' value='none'/>
    </hyperv>
  </features>
  <cpu mode='host-passthrough' check='none' migratable='on'>
    <topology sockets='1' dies='1' cores='6' threads='2'/>
    <cache mode='passthrough'/>
    <feature policy='require' name='topoext'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='hypervclock' present='yes'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/local/sbin/qemu</emulator>
    <controller type='usb' index='0' model='ich9-ehci1'>
      <alias name='usb'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci1'>
      <alias name='usb'/>
      <master startport='0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci2'>
      <alias name='usb'/>
      <master startport='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci3'>
      <alias name='usb'/>
      <master startport='4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
    </controller>
    <controller type='pci' index='0' model='pci-root'>
      <alias name='pci.0'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <alias name='virtio-serial0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </controller>
    <interface type='bridge'>
      <mac address='52:54:00:1e:9d:c0'/>
      <source bridge='br0'/>
      <target dev='vnet0'/>
      <model type='virtio'/>
      <alias name='net0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </interface>
    <serial type='pty'>
      <source path='/dev/pts/0'/>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
      <alias name='serial0'/>
    </serial>
    <console type='pty' tty='/dev/pts/0'>
      <source path='/dev/pts/0'/>
      <target type='serial' port='0'/>
      <alias name='serial0'/>
    </console>
    <channel type='unix'>
      <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-4-Sideswipe/org.qemu.guest_agent.0'/>
      <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/>
      <alias name='channel0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='mouse' bus='ps2'>
      <alias name='input0'/>
    </input>
    <input type='keyboard' bus='ps2'>
      <alias name='input1'/>
    </input>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x27' slot='0x00' function='0x0'/>
      </source>
      <alias name='hostdev0'/>
      <rom file='/mnt/user/domains/vbios/RTX3080FE.rom'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x27' slot='0x00' function='0x1'/>
      </source>
      <alias name='hostdev1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
      </source>
      <alias name='hostdev2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x26' slot='0x00' function='0x0'/>
      </source>
      <alias name='hostdev3'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
    </hostdev>
    <memballoon model='none'/>
  </devices>
  <seclabel type='dynamic' model='dac' relabel='yes'>
    <label>+0:+100</label>
    <imagelabel>+0:+100</imagelabel>
  </seclabel>
</domain>
```

Any pointers on how to liberate a bit more CPU performance?

-

I found this, but it did not provide a solution.

Whenever I navigate to the VM tab, the GUI pauses for a few moments while a disk spins up. As I have created a vdisk on the array, whenever I navigate to the tab Unraid checks the file paths of the VM images. This is with the VM stopped.

A snapshot from the File Activity plugin shows my Xpenology vdisk is spinning up disk 3. I assume the other items are on the cache, so no spin-up is required.
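To confirm which physical disk a vdisk lives on, you can look for the same relative path under each disk mount. Below is a minimal Python sketch, assuming Unraid's default layout: `/mnt/user` as the merged view, array disks as `/mnt/diskN`, and a pool called `cache` (add other pool names to the pattern if you have them). `backing_disks` is a hypothetical helper. Looking the path up could itself wake a sleeping disk if its directory entries are not cached.

```python
import os
import re

# Assumed mount names: array disks are diskN, the pool is "cache".
CANDIDATE = re.compile(r"^(disk\d+|cache)$")

def backing_disks(user_path, mnt_root="/mnt"):
    """Return the disks/pools that physically hold a file seen under /mnt/user."""
    rel = os.path.relpath(user_path, os.path.join(mnt_root, "user"))
    return [name for name in sorted(os.listdir(mnt_root))
            if CANDIDATE.match(name)
            and os.path.exists(os.path.join(mnt_root, name, rel))]
```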

Is this expected behaviour when the VM tab is navigated to?

GPU Passthrough With GB 3070 Gaming OC

in VM Templates

Try the command-line method in my first linked video. The new method did not work for me. You need two GPUs so that your new card is the secondary.