
Posts posted by mattw

-

You can disregard this... I did not realize the added path had to exactly match the listing provided.

So, I'm not sure what I am doing wrong. I have my SSD (NVMe) in a pool of one drive. I would like to check it, but I get the following message:

Unable to benchmark for the following reason

* Docker volume mount not detected

You will need to restart the DiskSpeed docker after making changes to mounted drives for changes to take effect.

For more information how the benchmarks work, view the FAQ

I have added the path variable Unraid with /mnt as the path. I assume this is wrong?

-

I found the issue... I run SmokePing in Docker. I killed the container and reloaded it, and all of the messages stopped.

-

Sorry to bring this back up, but my logs are useless because of these entries. Could unraid-api or Unraid Connect be the cause of this? I have Home Assistant monitoring the server via unraid-api.

Crazy rate!
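The entries below arrive roughly every 33 seconds. To check that rate across a whole log (for example before and after restarting a suspect container), a small Bash helper can print the gap between consecutive matching lines. This is a sketch: `log_intervals` is a hypothetical name, it assumes GNU `date` and a classic syslog timestamp at the start of each line, and the syslog path in the usage comment is an assumption.

```shell
#!/bin/bash
# Print the gap in seconds between consecutive log lines that match a pattern.
# Assumes GNU date and lines starting with a "Mon DD HH:MM:SS" timestamp;
# date fills in the current year.
log_intervals() {
  local pattern="${1:-Stop running nchan processes}"
  local line stamp now prev=""
  while IFS= read -r line; do
    [[ "$line" == *"$pattern"* ]] || continue
    # The first three fields are month, day and time, e.g. "Feb 14 18:51:01".
    stamp=$(awk '{ print $1, $2, $3 }' <<< "$line")
    now=$(date -d "$stamp" +%s)
    if [[ -n "$prev" ]]; then
      echo $(( now - prev ))
    fi
    prev=$now
  done
}

# Usage on the server (syslog location assumed to be /var/log/syslog);
# prints how often each gap length occurs:
#   log_intervals < /var/log/syslog | sort -n | uniq -c
```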

```
Feb 14 18:51:01 Tower monitor: Stop running nchan processes
Feb 14 18:51:34 Tower monitor: Stop running nchan processes
Feb 14 18:52:08 Tower monitor: Stop running nchan processes
Feb 14 18:52:41 Tower monitor: Stop running nchan processes
Feb 14 18:53:14 Tower monitor: Stop running nchan processes
Feb 14 18:53:47 Tower monitor: Stop running nchan processes
Feb 14 18:54:20 Tower monitor: Stop running nchan processes
Feb 14 18:54:54 Tower monitor: Stop running nchan processes
Feb 14 18:55:27 Tower monitor: Stop running nchan processes
Feb 14 18:56:00 Tower monitor: Stop running nchan processes
Feb 14 18:56:33 Tower monitor: Stop running nchan processes
Feb 14 18:57:06 Tower monitor: Stop running nchan processes
```

-

I would love to find a way to leave a card reader plugged into Unraid so that, when a card is inserted, the contents are automatically copied to (maybe) my cache drive for processing on another workstation. If the files are not processed within a certain time window, they would then be moved to the array.

Does such a container exist? Is it even possible to do this in Linux?
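It should be doable on Linux. One possible split: something that fires when the card is mounted (a udev rule, or a per-device script if you use the Unassigned Devices plugin and it supports that in your setup) runs a copy step, and a scheduled job (for example via the User Scripts plugin) moves anything not processed in time to the array. A minimal sketch of the two steps, with the trigger side left out; the function names and all paths are hypothetical, and it treats anything still in the staging folder after the window as unprocessed, which assumes the workstation moves or deletes files once it is done with them.

```shell
#!/bin/bash
# Hypothetical paths, adjust to your own shares, e.g.
#   ingest_card /mnt/disks/CARD /mnt/cache/ingest
#   age_out /mnt/cache/ingest /mnt/user/photos 1440

# Copy everything from a mounted card into a staging folder on the cache.
# cp -R (not -a) on purpose: copies get a fresh modification time, so the
# age-out step measures time since ingest rather than since the shot was taken.
ingest_card() {
  local card="$1" staging="$2"
  mkdir -p "$staging"
  cp -R "$card"/. "$staging"/
}

# Move files older than N minutes from staging to the array share,
# keeping the relative folder layout.
age_out() {
  local staging="$1" archive="$2" minutes="$3" f
  ( cd "$staging" && find . -type f -mmin +"$minutes" -print0 ) |
    while IFS= read -r -d '' f; do
      mkdir -p "$archive/$(dirname "$f")"
      mv "$staging/$f" "$archive/$f"
    done
}
```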

-

So, it appears that I have it fixed... The Unraid Connect plugin was out of date and could not update. I was finally able to force it to update and now my plugin screen is responding properly and quickly. I was on a version from January of this year.

-

Today, my plugins were stuck on checking for updates, and there was a notice in the banner that a Community Applications update was ready. I did a shift-reload on the Plugins page and now have this banner... What is going on? I was in Firefox when this happened. I tried Edge and did not get the banner, but the Plugins page there also seems to be stuck on checking for updates. The check just spins endlessly.

Logs seem to have way too many of the following entries...

Feb 7 17:43:14 Tower monitor: Stop running nchan processes

tower-diagnostics-20240207-1909.zip

It finally came up wanting Unraid Connect to be updated... Now it is stuck here.

-

On 1/28/2024 at 8:05 AM, ChrisW1337 said:

How did you manage to get multiple pings into one graph?

I can't find any info or example to get e.g. 192.168.1.1 and 192.168.1.2 into ONE graph.

FPing says it can't resolve the hostname "192.168.1.1 192.168.1.2"... Another example:

https://smokeping.oetiker.ch/?target=multi

So, the magic is in the Targets file... You build the following with paths to the queries you want to include in the multigraph, like so...

```
++ MultiHostAPPings
menu = MultiHost AP ICMP
title = Consolidated AP Ping Responses
host = /Local/AP/AP1 /Local/AP/AP2 /Local/AP/AP4 /Local/AP/AP5
```

-

That is worth a try... I am not sure that I can reliably detect it, but I may be able to, as the CPU goes to a heavy load during the process. Thanks for the idea!
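If the CPU-load route pans out, the check could run on the server itself rather than in Home Assistant. A minimal sketch, assuming the backup keeps the 1-minute load average (the first field of `/proc/loadavg`) clearly above an idle baseline; the threshold, sample count and the `wait_for_idle` name are hypothetical and would need tuning. Load average lags by design, so several consecutive quiet samples are required before treating the backup as finished.

```shell
#!/bin/bash
# Overridable so the logic can be tried against a fake file.
LOADAVG_FILE="${LOADAVG_FILE:-/proc/loadavg}"

# Block until the 1-minute load average stays below $1 for $2 consecutive
# samples taken $3 seconds apart.
wait_for_idle() {
  local threshold="$1" needed="$2" interval="$3"
  local quiet=0 load
  while (( quiet < needed )); do
    # The first field of /proc/loadavg is the 1-minute load average.
    read -r load _ < "$LOADAVG_FILE"
    if awk -v l="$load" -v t="$threshold" 'BEGIN { exit !(l < t) }'; then
      quiet=$(( quiet + 1 ))
    else
      quiet=0   # any busy sample restarts the count
    fi
    (( quiet < needed )) && sleep "$interval"
  done
  echo "idle: load $load below $threshold for $needed samples"
}

# Usage (values are guesses): wait_for_idle 1.0 5 60
```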

-

52 minutes ago, ich777 said:

Sorry, but I really don't understand what you are trying to accomplish, and I think you may be overthinking what you want or need.

Why not add a timeout of 10 minutes at the end (or maybe 5 if you are more comfortable with that) and abort the script when you want to run the server as usual?

I'm not really sure what you want to do since this seems like a unique issue that you have to solve on your own I think.

I am very close to having it work perfectly...

1) turn on Unraid backup server with Home Assistant. check

Turns it on, sets a dummy switch indicating the automation was responsible for the turnon.

2) trigger automatic backup. check

Done in the start up script on Unraid X minutes after boot.

3) allow backup to complete, currently leave Unraid backup server running for 2 hours. check

4) turn off Unraid backup server with Home Assistant. check

Power down the system via the automation and set the dummy switch to off. Used as a condition, the dummy switch prevents Home Assistant from shutting down the system at the time designated in the backup automation unless the switch is in the expected state.

The part that remains a mystery to Home Assistant is: did the backup complete within 2 hours, or are we shutting down the backup server in the middle of a backup? It should only run this long if a large amount of data is missing from the backup server, since the two servers are connected at 10 gig.
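ich777's timeout idea can be sketched as a cancellable grace period at the end of the at-boot script: after the backup command returns, wait N seconds and power down unless a cancel marker has appeared. The marker path and `grace_then_poweroff` are hypothetical; `powerdown` is assumed to be the Unraid shutdown command, and `POWEROFF_CMD` exists only so the logic can be tried without shutting anything down.

```shell
#!/bin/bash
# Hypothetical cancel marker: create it (touch, or from Home Assistant over
# SSH) when you want the server to stay up after the backup.
CANCEL_FILE="${CANCEL_FILE:-/tmp/cancel_auto_shutdown}"
POWEROFF_CMD="${POWEROFF_CMD:-powerdown}"

# Wait up to $1 seconds, checking once per second for the cancel marker,
# then power down if it never appeared.
grace_then_poweroff() {
  local seconds="$1" i
  for (( i = 0; i < seconds; i++ )); do
    if [[ -e "$CANCEL_FILE" ]]; then
      echo "shutdown cancelled"
      return 0
    fi
    sleep 1
  done
  # Final check in case the marker appeared during the last second.
  [[ -e "$CANCEL_FILE" ]] && { echo "shutdown cancelled"; return 0; }
  echo "grace period over; powering down"
  $POWEROFF_CMD
}

# Usage at the end of the at-boot script, e.g. a 10-minute window:
#   grace_then_poweroff 600
```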

-

30 minutes ago, ich777 said:

My bad, try this:

```
#!/bin/bash
sleep 60
docker exec -i <CONTAINERNAME> /bin/bash -c "/usr/bin/luckybackup -c --no-questions --skip-critical /root/.luckyBackup/profiles/default.profile > /root/.luckyBackup/logs/default-LastCronLog.log 2>&1"
```

That did it! Is there a way to use the exit status or completion to shut down the system? I see how this is done now and it makes sense! I knew there had to be a way to access docker exec, but I had no idea how to do it.

I think I could add a small delay and powerdown to the script. But that would make it difficult to power the server up and use it normally, since the script would always complete and shut it down.
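One way to use the exit status without always shutting down: power off only if the backup succeeded and no "stay up" marker exists on the flash drive. Because a marker under /boot survives reboots, creating it switches the server to normal use and deleting it returns it to auto-backup mode. This is a sketch under stated assumptions: the container name `luckybackup` and the marker path are hypothetical, `powerdown` is assumed to be the Unraid shutdown command, and it assumes `luckybackup -c` returns a non-zero exit code on failure, which has not been verified here.

```shell
#!/bin/bash
# Assumptions (hypothetical, adjust): container name, stay-up marker path on
# the flash drive, and that luckybackup's exit code reflects success/failure.
CONTAINER="${CONTAINER:-luckybackup}"
STAY_UP_FILE="${STAY_UP_FILE:-/boot/config/stay_up}"
POWEROFF_CMD="${POWEROFF_CMD:-powerdown}"

# Run the default profile inside the container. The redirect is inside
# bash -c, so the log path is resolved in the container, not on the host.
run_backup() {
  docker exec -i "$CONTAINER" /bin/bash -c \
    "/usr/bin/luckybackup -c --no-questions --skip-critical /root/.luckyBackup/profiles/default.profile > /root/.luckyBackup/logs/default-LastCronLog.log 2>&1"
}

# Decide what to do from the backup's exit status ($1).
after_backup() {
  local rc="$1"
  if [[ "$rc" -ne 0 ]]; then
    echo "backup failed (exit $rc); staying up"
  elif [[ -e "$STAY_UP_FILE" ]]; then
    echo "backup ok; stay-up marker present"
  else
    echo "backup ok; powering down"
    $POWEROFF_CMD
  fi
}

# Usage in the at-boot user script:
#   sleep 60
#   run_backup
#   after_backup $?
```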

-

9 hours ago, ich777 said:

Sorry, I thought that would be obvious...

Maybe add a bit of sleep at the beginning, 60 seconds or so that your server can fully start up and after that start the sync:

```
#!/bin/bash
sleep 60
docker exec -i <CONTAINERNAME> /usr/bin/luckybackup -c --no-questions --skip-critical /root/.luckyBackup/profiles/default.profile > /root/.luckyBackup/logs/default-LastCronLog.log 2>&1
```

(I think that should work)

So, the basic script works if I do not try to write the log. I touched the log file and set the same permissions as the rest of the files in the directory.

When I run it in the foreground, I get the following:

```
/tmp/user.scripts/tmpScripts/LuckyBackup_at_boot/script: line 3: /root/.luckyBackup/logs/default-LastCronLog.log: No such file or directory
```

-

Will work on this today... BTW, about the name: I ran Home Assistant as the non-OS install for years. It used Docker, the container names were difficult to change, and at the command prompt they showed up as the assigned name followed by a long hex number. That is why I asked a stupid question.

-

How do I find the real container name? Is it the prompt on the container console?
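The name `docker exec` expects is the container name, which `docker ps` lists in its NAMES column; on Unraid it should match the `--name='...'` value in the docker run command the GUI prints when a container is created or updated. The console prompt shows the container's hostname, and Docker's default hostname is the short container ID, so the prompt may not match the name. A small helper, with hypothetical function names, that looks up the name from part of the image name:

```shell
#!/bin/bash
# List running containers as "name<TAB>image"; the name is what docker exec takes.
list_containers() {
  docker ps --format '{{.Names}}\t{{.Image}}'
}

# Print the name(s) of running containers whose image contains $1
# (case-insensitive), e.g. find_container luckybackup
find_container() {
  list_containers | awk -F'\t' -v p="$1" 'index(tolower($2), tolower(p)) { print $1 }'
}
```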

-

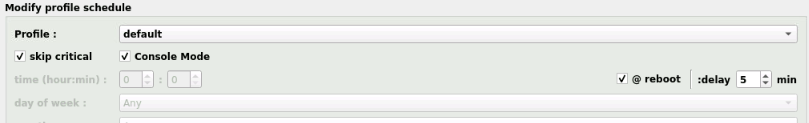

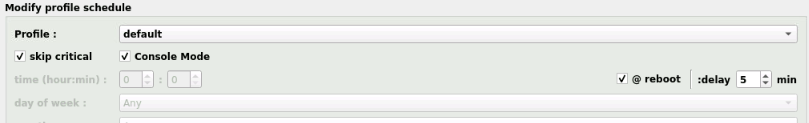

And another Luckybackup question... I think I know why this is not working, but would love to know if there is a solution to the problem. I use WOL to wake my backup Unraid server and want LB to wait a few minutes and then run the default profile. I have LB set up as follows:

This is in the container crontab file:

```
# ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ luckybackup entries ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
@reboot sleep 300; /usr/bin/luckybackup -c --no-questions --skip-critical /root/.luckyBackup/profiles/default.profile > /root/.luckyBackup/logs/default-LastCronLog.log 2>&1
# ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ end of luckybackup entries ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
```

I have LB set to start on boot as well.

So, the profile never executes... I suspect it is because the crontab in the docker container never executes. If this is the case, is there a way around it?

Logs from LB after the attempt:

```
---Resolution check---
---Checking for old logfiles---
---Starting TurboVNC server---
---Starting Fluxbox---
---Starting noVNC server---
---Starting ssh daemon---
---Starting luckyBackup---
```

-

Any luck? I am trying to do the same thing.

-

20 hours ago, ich777 said:

What does the log say?

Stop the container, open the Logs from the container and post a screenshot or a textfile with the contents from the log.

The container is running and not restarting, correct?

It looks like I got it running... I wanted to install a larger cache drive, and after a complete reinstall it is now working.

-

My primary server is working very well with luckybackup, running 6.11.5 on it. My new backup server is having an odd issue. When I try to open the luckybackup console to do the password stuff, the console closes a few seconds later.

I have tried a browser that had never connected to the server, and I have tried clearing the Firefox cache. No change. I am not running any proxies either. Network and DNS settings are the same between the servers.

What on earth is wrong?

-

On 11/25/2023 at 5:25 AM, skank said:

I just noticed that all are called buttons now instead of switches.

So you should use the service call: button.press, I think.

So then you can turn it off.

So, the button service call works to shut down the server... and the WOL component turns it on just fine.

This has allowed me to create an Android widget that will power up/down my backup server!

Here is my HA config that creates switch.backup:

~~~
- platform: wake_on_lan
  mac: "BC:5F:F4:xx:xx:xx"
  name: "Backup"
  host: 192.168.1.101
  broadcast_address: 192.168.1.255
  turn_off:
    service: button.press
    data: {}
    target:
      entity_id: button.backup_server_backup_power_off
~~~

-

I have had my old server running 6.11.5 forever and it runs fine, but I really feel like I should upgrade to 6.12. I have read forum posts until I am almost blind, and I have to assume that the vast majority of the complaints are caused by user-installed stuff. When I run Update Assistant, everything comes back green. I have backups of my important stuff and a solid install running XFS-formatted drives and a BTRFS-formatted cache. My Docker is currently set to ipvlan.

Should I shut down all of my Docker containers before doing the upgrade? Should I stop the array before upgrading?

My backup server is new (running on old hardware), and it is running 6.12.6 flawlessly.

Thanks Matt

-

I use the HA Companion app on Android. I can wake my main and backup servers from a widget, but because there is no switch for shutdown, I am unable to power off my servers. I assume I am going to have to add an SSH command to HA for power off?

Is there a better way?

I would also still like to get the CPU temperature in via the API... is that a roadmap item? Otherwise it works really well.

-

I do not store that much, but I want it to be reliable. I can find many "new" drives on eBay in the 4 TB range. I tend to buy data center drives, which have always held up well for me in the past. In this size range, what is the preferred drive? I currently have a mix of WD Gold Re drives and a Hitachi or two.

-

That is what I figured... good to know.

-

So, I am putting together an Unraid backup system. I cannot afford new drives, but I acquired four WD 4000 Re drives. They have fairly high hours, out of a data center environment. I have been running them through preclear; so far one passed and two have two pending sectors each.

My question... I intend to set the passed drive up as parity and use the others as data drives. My main server has good drives in it, so not so critical here. I see loads of people installing used drives, I assume most used drives do not pass a preclear. What do you guys do in that case, use them or lose them?
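Whatever you decide, it helps to compare the counters before and after each preclear pass: whether pending sectors clear after a write pass or turn into reallocations is useful input for the use-or-lose decision. A minimal sketch that pulls the commonly watched raw values out of `smartctl -A` output; `smart_counts` is a hypothetical name, and it assumes the usual ATA attribute table where RAW_VALUE is the tenth column.

```shell
#!/bin/bash
# Read `smartctl -A` style output on stdin and print name + raw value for the
# attributes usually watched on used drives. RAW_VALUE is the 10th column.
smart_counts() {
  awk '$2 == "Reallocated_Sector_Ct" || $2 == "Current_Pending_Sector" || $2 == "Offline_Uncorrectable" { print $2, $10 }'
}

# Usage (device name is an example):
#   smartctl -A /dev/sdb | smart_counts
```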

[Support] selfhosters.net's Template Repository

in Docker Containers

Posted · Edited by mattw

[scrutiny]

Just tried to update from the last version, and not only did it not update, it was removed from Unraid. I get the following error:

```
docker run -d --name='scrutiny' --net='bridge' --privileged=true -e TZ="America/Chicago" -e HOST_OS="Unraid" -e HOST_HOSTNAME="Tower" -e HOST_CONTAINERNAME="scrutiny" -l net.unraid.docker.managed=dockerman -l net.unraid.docker.webui='http://[IP]:[PORT:8080]' -l net.unraid.docker.icon='https://raw.githubusercontent.com/selfhosters/unRAID-CA-templates/master/templates/img/scrutiny.png' -p '8080:8080/tcp' -v '/run/udev':'/run/udev':'ro' -v '/dev/disk':'/dev/disk':'ro' -v '':'/opt/scrutiny/config':'rw' -v '':'/opt/scrutiny/influxdb':'rw' 'ghcr.io/analogj/scrutiny:master-omnibus'

docker: invalid spec: :/opt/scrutiny/config:rw: empty section between colons.
See 'docker run --help'.

The command failed.
```