StarsLight

Posts posted by StarsLight

-

Does anyone have experience with a motherboard that meets the requirements below?

1. Supports the 3900X

2. Supports 128GB of RAM

3. Has at least 5-6 PCIe slots, if possible, for 24+ hard disks

4. Works with the latest unRAID

From my research, most X570 motherboards support 128GB, but I am hesitant about X570 because of its heat and power consumption. Hence, I think X470 would be a better choice.

-

After reinstalling diskover, the WebGUI comes up.

Now I have another question: the page shows "No diskover indices found in Elasticsearch. Please run a crawl and come back." when I visit it. Any suggestions?

-

On 12/15/2019 at 3:10 AM, raqisasim said:

First -- I'm no expert. I'm just a newbie around this, too! I cannot make any promises I can support deep debugging.

But always try looking at logs, first. Each Docker container has a log you can look at for troubleshooting.

Second, I noted in Step 7 a point where I did fail to see the GUI, so I'd triple-check that Elasticsearch actually is up and functional, as well as carefully re-check the other steps. If that doesn't work, re-start from ground zero (none of the relevant Dockers installed, appdata for those Dockers completely deleted as per Step 2) to ensure you've got the right config setup from jump.

In short, this is all a bit complex, and in fact since I also haven't gotten re-scanning to work per Surgikill's comment above I've set it all aside for now, myself. But hopefully this'll help you!

I checked the diskover log:

[cont-init.d] 10-adduser: exited 0.

[cont-init.d] 20-config: executing...

[cont-init.d] 20-config: exited 0.

[cont-init.d] 30-keygen: executing...

using keys found in /config/keys

[cont-init.d] 30-keygen: exited 0.

[cont-init.d] 50-diskover-config: executing...

Initial run of dispatcher in progress

[cont-init.d] 50-diskover-config: exited 0.

[cont-init.d] 60-diskover-web-config: executing...

ln: failed to create symbolic link '/app/diskover-web/public/customtags.txt': File exists

[cont-init.d] 60-diskover-web-config: exited 0.

[cont-init.d] 99-custom-files: executing...

[custom-init] no custom files found exiting...

[cont-init.d] 99-custom-files: exited 0.

[cont-init.d] done.

[services.d] starting services

[services.d] done.

There is no special error message. Could the "failed to create symbolic link" line be causing the problem?
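One way to answer this from the log itself is to list every init script that exited with a non-zero code, plus every line that mentions a failure. The sketch below assumes the `[cont-init.d] NAME: exited CODE.` line format seen in the log above; the function name `summarize_init_log` is made up for illustration and is not part of diskover or the container.

```python
import re

# Assumed line format, taken from the log above: "[cont-init.d] NAME: exited CODE."
EXIT_RE = re.compile(r"^\[cont-init\.d\] (?P<script>[\w.-]+): exited (?P<code>\d+)\.")


def summarize_init_log(text):
    """Return (failed_scripts, warning_lines) for a container init log."""
    failed = []    # (script name, exit code) for every non-zero exit
    warnings = []  # any other line that mentions "failed" or "error"
    for raw in text.splitlines():
        line = raw.strip()
        match = EXIT_RE.match(line)
        if match:
            code = int(match.group("code"))
            if code != 0:
                failed.append((match.group("script"), code))
        elif "failed" in line.lower() or "error" in line.lower():
            warnings.append(line)
    return failed, warnings


# A few lines copied from the log above.
sample = """\
[cont-init.d] 50-diskover-config: exited 0.
[cont-init.d] 60-diskover-web-config: executing...
ln: failed to create symbolic link '/app/diskover-web/public/customtags.txt': File exists
[cont-init.d] 60-diskover-web-config: exited 0.
[cont-init.d] 99-custom-files: exited 0.
"""

failed, warnings = summarize_init_log(sample)
print("non-zero exits:", failed)
print("warning lines:", warnings)
```

For the lines above this reports no non-zero exits and a single warning line (the `ln:` message), which suggests the symlink message did not stop the init sequence. It says nothing about whether Elasticsearch is reachable, so that still needs to be checked separately.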

-

On 10/5/2019 at 4:32 AM, raqisasim said:

So, after reviewing this thread, I followed OFark's steps, and finally got it working! (i.e. Diskover GUI loads and workers are running against my disks)

For those who are deeply confused, I wanted to expand on that worthy's notes, as there are a couple points where I got stuck, and suspect others have/are in similar situations:

- Check if you have the Redis, ElasticSearch, and/or Diskover Docker containers already installed

- If you do, note where your appdata folder is hosted for each, then remove the containers, then remove the appdata (removing the appdata is crucial! An older config file really tripped me up, at one point). You'll likely need to SSH or use the UnRAID Terminal in the GUI to cd to the appdata folder location and rm -rf them.

- This may not be required, however -- to ensure the OS setting for ElasticSearch was set before I installed, I followed the steps at this comment: that start at "You must follow these steps if using Elasticsearch 5 or above". IMPORTANT NOTE: I did NOT install this ElasticSearch container, just used the script instructions. For which container I did install, see Step 5, below.

- Install the Redis container via Community Apps. I used the copy of the official Redis Docker in jj9987's Repository (Author and Dockerhub are both "Redis"). It should not need any special config, unless you already have a container running on Port 6379.

- To install ElasticSearch version 5, go to https://github.com/OFark/docker-templates/ and follow the steps in the README on that page; it'll have you setup an additional Docker Repository first. Note that, so far as I can tell so far, I did not need to do anything in OFark's Step 6, on that page, around adding values in Advanced View. If that turns out to be a mistake, I'll update this.

- At this point, I recommend checking your Redis and ElasticSearch 5 container logs just to ensure there's no weird errors, or the like.

- If it all looks good, install the Diskover container via Community Apps. To avoid the issues I ran into, ensure you have all the ports open to use (edit if not), and that you provide the right IP address and ports for both your Redis and ElasticSearch 5 containers. Also make sure you provide RUN_ON_START=true, and set the Elasticsearch user and password -- if you don't give the latter, you'll get no Diskover GUI and be confused, like me.

- Once Diskover starts, give it a minute or so, then go to its Workers GUI (usually at port 4040). You should see a set of workers starting to run thru your disk and pull info.

- From there, you should be able to go to the Diskover main GUI and see some data, eventually! As I wrap this, so did my scan; now to pass thru some parameters to get duplication and tagging working (I hope!)

I hope this helps -- Good luck, everyone! And thanks to Linuxserver for the Container, and OFark for templates and guidance that helped immensely in setting it up for UnRAID!

Thanks for your guide.

I went through the steps, but the diskover GUI didn't display. Where should I start troubleshooting?
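Since the steps above stress giving Diskover the right IP address and ports for Redis and Elasticsearch, a reasonable first check is whether those ports accept connections at all. This is a minimal sketch, not a diagnostic tool from any of the containers. Port 6379 comes from the steps above; port 9200 is the usual Elasticsearch HTTP port and the host address is a placeholder, so treat both as assumptions and substitute the values from your own container settings.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Placeholder values: replace with your unRAID host IP and the ports you mapped.
SERVICES = {
    "redis": ("192.168.1.100", 6379),
    "elasticsearch": ("192.168.1.100", 9200),  # assumed default HTTP port
}

if __name__ == "__main__":
    for name, (host, port) in SERVICES.items():
        state = "reachable" if port_open(host, port) else "NOT reachable"
        print(f"{name} at {host}:{port} is {state}")
```

An open port only shows that something is listening there. The container logs are still the place to confirm that the service behind it started cleanly.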

-

I am not sure whether it matters that the upload which fails contains 10,000+ individual files. Could that affect the proftpd upload process?

-

On 12/1/2019 at 5:46 AM, SlrG said:

The functionality to edit the proftpd.conf file from the plugins settings page is sadly currently broken. You have to open a shell window and use nano (or any other editor of your choice) to edit /etc/proftpd.conf.

If you have your log files on the flash, like your config file suggests, the server will probably run into problems and not start, as the file system of the flash doesn't support access rights and is therefore deemed insecure by proftpd. If you don't want to log into the syslog, I recommend to create a folder on the array to host the log files and set the correct access rights for that. (not world writeable)

Make sure you can access the ftp by a normal client (e.g. FileZilla) first before trying more advanced stuff like trying to run some backup software.

It might be necessary to enable Debug Logging, to maybe get more information on the problem. See here.

Thanks for your advice. I can finally upload files through proftpd to a destination folder that was created by another user account (not the nobody user).

a) I can upload to the newly created folder below:

drwxrwxrwx 1 starslight users 28 Dec 3 22:54 DCIM/

But when I change the destination folder and try to upload a file again, it fails.

b) I cannot upload to the existing folder below:

drwxrwxrwx 1 nobody users 96 Dec 1 22:07 DCIM/

c) error in slog

2019-12-03 23:29:15,436 Tower proftpd[30059] 127.0.0.1: ProFTPD 1.3.6 (stable) (built Thu Mar 14 2019 17:45:29 CET) standalone mode STARTUP

2019-12-03 15:30:03,039 Tower proftpd[30649] 127.0.0.1 (192.168.1.43[192.168.1.43]): notice: user twftp: aborting transfer: Broken pipe

2019-12-03 15:30:26,155 Tower proftpd[30649] 127.0.0.1 (192.168.1.43[192.168.1.43]): notice: user twftp: aborting transfer: Data connection closed

Is there anything I missed in the proftpd setup?

-

I can finally find the log file after changing the folder permissions.

But when I use the Android app "FolderSync", I find an error message in the slog file (aborting transfer: Broken pipe).

I cannot see the "Edit proftpd.conf" section in unRAID.

-

Hi,

I cannot start the FTP server and have no idea why. Could someone help me?

In addition, I cannot see the "Edit proftpd.conf" section in unRAID.

=======Configuration file=====

# Server Settings

ServerName ProFTPd

ServerType standalone

DefaultServer on

PidFile /var/run/ProFTPd/ProFTPd.pid

# Port 21 is the standard FTP port. You propably should not connect to the

# internet with this port. Make your router forward another port to

# this one instead.

Port 21

# Set the user and group under which the server will run.

User nobody

Group users

# Prevent DoS attacks

MaxInstances 30

# Speedup Login

UseReverseDNS off

IdentLookups off

# Control Logging - comment and uncomment as needed

# If logging Directory is world writeable the server won't start!

# If no SystemLog is defined proftpd will log to servers syslog.

#SystemLog NONE

SystemLog /boot/config/plugins/ProFTPd/slog

#TransferLog NONE

TransferLog /boot/config/plugins/ProFTPd/xferlog

#WtmpLog NONE

WtmpLog /boot/config/plugins/ProFTPd/WtmpLog

# As a security precaution prevent root and other users in

# /etc/ftpuser from accessing the FTP server.

UseFtpUsers on

RootLogin off

# Umask 022 is a good standard umask to prevent new dirs and files

# from being group and world writable.

Umask 022

# "Jail" FTP-Users into their home directory. (chroot)

# The root directory has to be set in the description field

# when defining an user:

# ftpuser /mnt/cache/FTP

# See README for more information.

DefaultRoot ~

# Shell has to be set when defining an user. As a security precaution

# it is set to "/bin/false" as FTP-Users should not have shell access.

# This setting makes proftpd accept invalid shells.

RequireValidShell no

# Normally, we want files to be overwriteable.

AllowOverwrite on

# Added by Tom Wong 20191130

# Allow to restart a download

AllowStoreRestart on

=== Webserver configuration in unraid====

Enable ProFTPd: Yes

Webserver available: Yes

Webserver Path: /mnt/user/appdata/

Webserver Port: 8088

Regards,

Tom
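The config comment "If logging Directory is world writeable the server won't start!" points at one thing worth checking before anything else. The sketch below pulls the active log paths out of a proftpd.conf and tests whether each log's directory has the world-writable bit set. The helper names `log_paths` and `is_world_writable` are made up for illustration, and the permission test is only an approximation of whatever proftpd checks internally.

```python
import os
import stat

LOG_DIRECTIVES = ("SystemLog", "TransferLog", "WtmpLog")


def log_paths(conf_text):
    """Collect paths from active (uncommented) logging directives."""
    paths = {}
    for line in conf_text.splitlines():
        parts = line.split()
        # Commented lines start with "#SystemLog" etc. and are skipped here.
        if len(parts) == 2 and parts[0] in LOG_DIRECTIVES and parts[1].upper() != "NONE":
            paths[parts[0]] = parts[1]
    return paths


def is_world_writable(directory):
    """Return True if the 'other' write bit is set on the directory."""
    return bool(os.stat(directory).st_mode & stat.S_IWOTH)


if __name__ == "__main__":
    conf_file = "/etc/proftpd.conf"  # location mentioned elsewhere in this thread
    if os.path.exists(conf_file):
        with open(conf_file) as handle:
            for directive, path in log_paths(handle.read()).items():
                folder = os.path.dirname(path)
                if not os.path.isdir(folder):
                    print(f"{directive}: {folder} does not exist")
                elif is_world_writable(folder):
                    print(f"{directive}: {folder} is world writable")
                else:
                    print(f"{directive}: {folder} looks fine")
```

With the configuration above, all three logs point into /boot/config/plugins/ProFTPd, so that single folder on the flash drive is the one to inspect.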

-

Hi everyone,

I get this kind of message after entering my login and password:

"This page isn’t working

xxx.xxx.xxx.xxx is currently unable to handle this request.

HTTP ERROR 500"

The log file shows:

"PHP Fatal error: Declaration of OC\Files\Storage\Local::copyFromStorage(OCP\Files\Storage $sourceStorage, $sourceInternalPath, $targetInternalPath) must be compatible with OC\Files\Storage\Common::copyFromStorage(OCP\Files\Storage $sourceStorage, $sourceInternalPath, $targetInternalPath, $preserveMtime = false) in /config/www/nextcloud/lib/private/Files/Storage/Local.php on line 43"

What should I do next? 🙄

-

13 hours ago, binhex said:

i personally think blocklists are a waste of time, they generally are out of date, and as you're using a vpn there is no point.

the banned by ip filter message is not related to pia btw, this is purely telling you the site you are trying to connect to is in the blocklist, you would get the same message whether you were connected to a vpn provider or not, it makes no difference.

Thanks, binhex!

-

7 minutes ago, binhex said:

yes indeed, most probably that blocklist (which is pretty useless btw) has in its list the sites your trying to use to get your external ip.

Thanks!!! After removing this plugin, I can see the Tracker status and IP. (btw, I cannot find the external IP inside the blocklist file)

Should I keep using the "blocklist" plugin, and what causes the "banned by IP filter" message when using PIA?

-

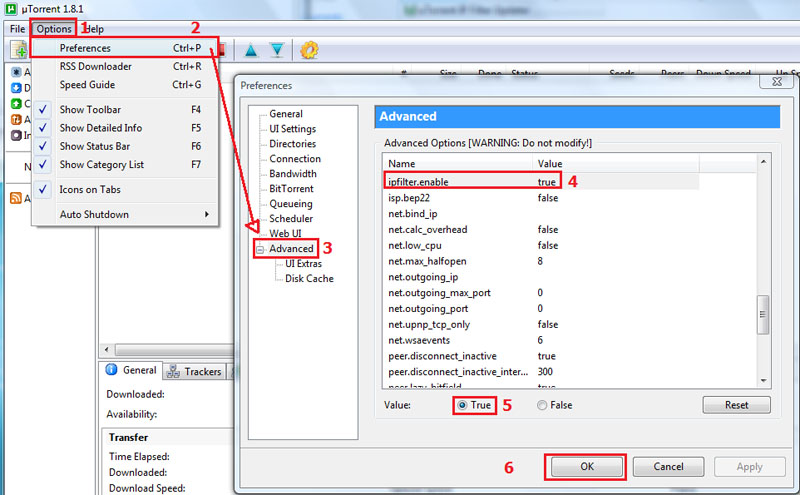

2 minutes ago, binhex said:

ahh hehe i think i get the issue, so are you using ipfilter built into deluge? maybe something like this:-

or perhaps a third party plugin you have installed?.

I have been using blocklist with "http://john.bitsurge.net/public/biglist.p2p.gz". Could this cause the problem?

-

6 minutes ago, binhex said:

ok that looks clean, so what is your unraid host ip address?

192.168.1.100

-

33 minutes ago, binhex said:

ok follow the procedure below:-

https://forums.lime-technology.com/topic/44108-support-binhex-general/?do=findComment&comment=435831

Thanks. Here is the log file.

-

57 minutes ago, binhex said:

try a restart of the container, it could be the ip address you got handed was previously used for DDoS attacks perhaps.

Tried, but I still get the same message.

-

13 hours ago, death.hilarious said:

I have tried checkmyIPtorrent and ipmagnet, but I keep getting the block message....

-

Hi,

I just downloaded the updated DelugeVPN, enabled port_forward, and added the PIA OpenVPN certificate.

After that, I tried to check my IP by downloading a torrent check file, and it returns "checkmytorrentip.net: Error: banned by IP filter" in Tracker Status. Is this normal for DelugeVPN?

-

1 hour ago, binhex said:

if you restart unraid then you need to re-run that command again before you start the container, or put it in the /boot/config/go script so that its executed on boot (what i do)

Thanks!!! That makes my life easy!!

-

22 hours ago, binhex said:

that command needs to be run on the host (as in unraid) not inside the container, so SSH to unraid and then run the command exactly as below, then restart the container and you should then see in the log that its successfully loaded the iptable mangle module, then try transdrone.:-

/sbin/modprobe iptable_mangle

Tried, but I cannot get through after restarting the server.... ?

-

46 minutes ago, binhex said:

dont do this, you dont need to port forward on your router, all traffic inbound and outbound is via the vpn tunnel.

you will always see "iptables" in the log, this is NOT the same as "iptable mangle" look for the keyword "mangle" in your supervisord.log that will tell if its loaded or not, if it isnt then you cannot access the webui outside of your lan until you fix this.

I found this message in the log file:

"[info] unRAID users: Please attempt to load the module by executing the following on your host:- '/sbin/modprobe iptable_mangle'"

Should I run this in unRAID itself, or is there a UI setting that can do it?
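To see whether the module is actually loaded on the host, the list of loaded kernel modules can be read from /proc/modules, where each line starts with the module name. This is a minimal sketch meant to run on the unRAID host rather than inside the container; the function name `module_loaded` is made up for illustration.

```python
import os


def module_loaded(name, modules_text):
    """Return True if `name` is the first field of any line in /proc/modules-style text."""
    for line in modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            return True
    return False


if __name__ == "__main__":
    proc_modules = "/proc/modules"  # standard Linux location for loaded modules
    if os.path.exists(proc_modules):
        with open(proc_modules) as handle:
            loaded = module_loaded("iptable_mangle", handle.read())
        print("iptable_mangle loaded:", loaded)
    else:
        print("No /proc/modules here; run this on the Linux host.")
```

If it prints False, running the modprobe command from the log message on the host and then restarting the container is the step the message itself asks for.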

-

On 8/7/2017 at 5:22 PM, binhex said:

you only need to port forward 8112 if your accessing deluge using transdrone outside of your lan, you also need to have ip table mangle support for this to work (see the /config/supervisord.log)

I would like to use Transdrone / the web portal from outside my LAN, but I cannot do so after setting up port forwarding with the TCP & UDP protocols on my router.

I have found an iptable message in the log file. Is there anything I need to configure?

-

On 8/3/2017 at 3:05 AM, binhex said:

i use transdroid to connect to delugevpn and rtorrentvpn without issue so i know this does work fine. i would guess (without logs im guessing) that you either dont have your lan_network defined correctly, see here if your unsure:-

or your using the wrong port number, are you sure you have port 8112 port mapped to 8112 on your host side?, take a look at your config in the unraid web ui.

Hi, I have set lan_network to "192.168.1.0/24". Do I need to forward port 8112 on my router as well?
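A quick sanity check on a lan_network value is to test whether the address of the device running the client falls inside that range. The sketch below uses Python's standard `ipaddress` module; the two client addresses are placeholders for illustration, not values taken from any log.

```python
import ipaddress


def in_lan(client_ip, lan_network):
    """Return True if client_ip belongs to the CIDR range lan_network."""
    network = ipaddress.ip_network(lan_network, strict=False)
    return ipaddress.ip_address(client_ip) in network


LAN_NETWORK = "192.168.1.0/24"

# Placeholder addresses: one phone on home Wi-Fi, one on a mobile connection.
print(in_lan("192.168.1.43", LAN_NETWORK))  # True
print(in_lan("203.0.113.7", LAN_NETWORK))   # False
```

A client outside the range, such as a phone on mobile data, is not covered by lan_network, which is the situation where the port forward and the iptable mangle module come into play.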

-

Hi,

I cannot use Transdrone to connect to DelugeVPN (192.168.1.XXX:8112) with PIA. Any ideas?

-

Has anyone encountered a disk that completes the "build" process with a green tick, but then shows a "circle" on the same day?

With "Automatically protect new and modified files" enabled, the green tick should mean the integrity build is complete, so why do I get a "circle" later on?

[Support] binhex - DelugeVPN

in Docker Containers

Posted

I have found that DelugeVPN recently cannot connect to the Internet.

I tried running the command (curl ifconifg.io), and it returned "curl: (6) Could not resolve host: ifconifg.io"

Where should I start to investigate the problem?
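A "Could not resolve host" error is a name lookup failure, so a first step is to check whether name resolution works at all from the same environment. The sketch below compares the hostname exactly as typed with ifconfig.io, which may be the name that was intended since the typed one transposes two letters. That second name is an assumption, and `check_names` is a made-up helper for illustration.

```python
import socket


def check_names(hostnames, lookup=socket.getaddrinfo):
    """Map each hostname to True if it resolves, False on a lookup failure."""
    results = {}
    for name in hostnames:
        try:
            lookup(name, None)
            results[name] = True
        except socket.gaierror:
            results[name] = False
    return results


if __name__ == "__main__":
    # First name is copied from the command above; second is the assumed intended one.
    for name, ok in check_names(["ifconifg.io", "ifconfig.io"]).items():
        print(f"{name}: {'resolves' if ok else 'does not resolve'}")
```

If neither name resolves, the problem is more likely DNS or the VPN tunnel inside the container. If only the first one fails, the spelling alone would explain the curl error.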