FrostyWolf

Posts: 36

Posts posted by FrostyWolf

-

-

4 hours ago, frodr said:

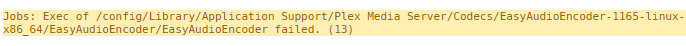

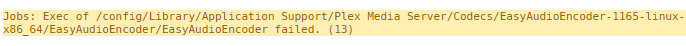

I have a problem playing some files, and get this error in the logs:

Jobs: Exec of /config/Library/Application Support/Plex Media Server/Codecs/EasyAudioEncoder-1165-linux-x86_64/EasyAudioEncoder/EasyAudioEncoder failed. (13)

Anyone experience the same?

It's because the mount is flagged NOEXEC. You can chmod it yourself to fix it, but the problem will keep coming back every time it tries to update the codec. I have no idea what causes this and I am in the same boat as you.
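To tell the possible causes apart, here is a minimal Python sketch (not from the original posts) that checks both the file's execute bits and the mount flags. It assumes Linux, where `os.ST_NOEXEC` is available, and `diagnose_exec_failure` is a hypothetical helper name. The `(13)` in the log line is errno 13, EACCES ("Permission denied"), which either condition can produce.

```python
import errno
import os
import sys


def diagnose_exec_failure(path: str) -> list:
    """Return likely reasons why executing `path` could fail with EACCES."""
    reasons = []
    # No execute bit for owner, group or other: chmod +x would address this.
    if not os.stat(path).st_mode & 0o111:
        reasons.append("file has no execute bit")
    # Filesystem mounted noexec: chmod cannot help, the mount options must change.
    if os.statvfs(path).f_flag & os.ST_NOEXEC:
        reasons.append("filesystem is mounted noexec")
    return reasons


if __name__ == "__main__":
    print("errno", errno.EACCES, "=", os.strerror(errno.EACCES))
    # Pass the path(s) to check on the command line.
    for target in sys.argv[1:]:
        print(target, diagnose_exec_failure(target) or "no obvious cause found")
```

If only the first reason shows up, a chmod should be enough; if the second one does, the fix is more likely in how the filesystem is mounted.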

-


10 minutes ago, trurl said:

I don't have these problems with my Transmission downloads.

Maybe I'm not explaining this correctly.

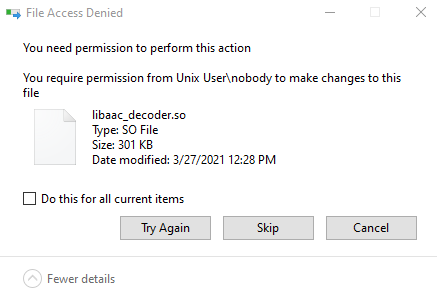

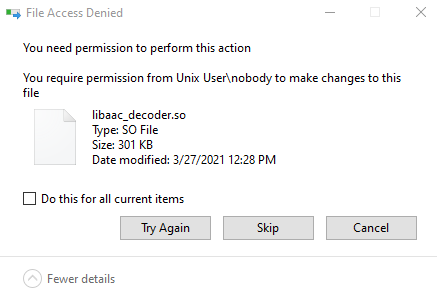

Any file that gets written to any share on the server, by anything, I cannot access until I run New Permissions. After I run New Permissions, I still cannot access any file written after that last run.

It does not matter what creates/writes the file. It happens in all my shares. SWAG updates and gets new conf files? I can't edit them. Deluge/nzbget download a file? I can't touch it. Sonarr/Radarr move that file to the array and rename it? I still can't touch it there.

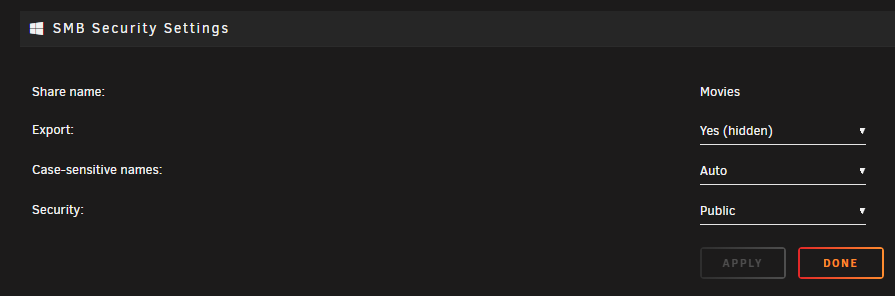

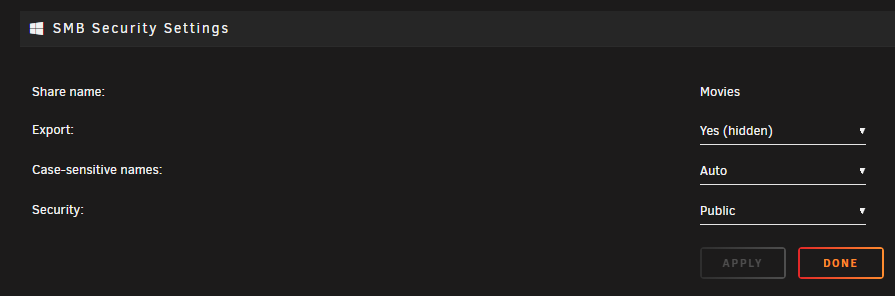

All of my shares are public. My guest account in unraid is showing Read/Write for all of them.

-

Yes.

I keep them secure by having them on a VLAN segregated on my home network that only one admin laptop can access.

-

I understand where you are coming from, but my server ran without an issue for a couple of years, and recently it has started doing this for all files, from 15 different dockers. I have to run New Permissions twice a week to fix things. I feel like something is wrong deeper than a misconfigured docker container. I've never messed with permissions in any container before. They are all pretty much stock installs.

If everything is causing it, wouldn't the common factor be the account trying to access the files, not what's writing them? This is in the array, on both cache arrays. It's all appdata files. It's everything. I cannot delete/edit files that have been written since the last time I ran New Permissions. Everything I try to do gives me the "You Require Permission from Unix User\nobody" error.

Is it possible my guest account or whatever account gets used for Samba shares has been corrupted?

-

I dunno if this is related, but I'm having other issues that seem to tie into permissions.

Plex for instance is throwing this error cause it can't replace the codec files with the new ones.

I have to stop the docker, run new permissions, then start it back up again so it can properly replace the files.

Also, this goes for all my files, not just some. Literally any new file on my array or appdata.

-

Whenever a new file or folder gets added to appdata or my array, when I try to access it via samba share I get this:

I then have to go to Tools and run "New Permissions" to fix it.

This happens no matter what docker creates the file or folder. I don't even know where to begin tracking down what is going on.

-

On 2/5/2021 at 2:52 AM, JorgeB said:

https://forums.unraid.net/topic/46802-faq-for-unraid-v6/?do=findComment&comment=480418

Note that latest releases have a bug and cache replacement doesn't work, don't remember any reports about removal not working, but also didn't confirm it's working.

That's what I was following, and things def don't work as I expected on 6.9.0-rc2.

I started with 1TB cache and wanted to upgrade it to 2 x 2TB in a Raid 1.

I added one 2TB to the 1TB, and let it complete its balance operation. What I expected was a 1TB cache, now mirrored across the 1TB and 2TB drive. What I got was a 2.5TB cache?

I then tried to drop the 1TB and replace it with the 2TB, which led to an error telling me too many missing disks.

I then added the 1TB back, and then added the 2nd 2TB, so the cache pool was 2 x 2TB and 1 x 1TB. This resulted in a 3.5TB cache (?????).

After that, I tried to drop the 1TB, and still got the same too many missing disks error.

I fixed it by converting it to a Raid1c3, then dropping the 1TB, then converting it back to a Raid 1, which resulted in a 2TB Raid 1 cache.
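For comparison with the sizes reported above, here is a small Python sketch of a commonly used estimate for usable space in a two-copy (raid1) btrfs pool with mixed drive sizes. It is a rule of thumb that ignores metadata overhead, it is not taken from the thread, and `raid1_usable_tb` is a hypothetical helper name.

```python
def raid1_usable_tb(device_sizes_tb):
    """Approximate usable space (TB) for a two-copy raid1 pool of mixed-size devices."""
    total = sum(device_sizes_tb)
    largest = max(device_sizes_tb)
    # Each chunk needs a second copy on a different device, so usable space is
    # capped by half the total and by what the other devices can mirror.
    return min(total / 2, total - largest)


if __name__ == "__main__":
    # The three pool layouts described in the post above.
    for pool in ([1, 2], [2, 2, 1], [2, 2]):
        print(pool, "->", raid1_usable_tb(pool), "TB usable (estimate)")
```

By this estimate a 1TB + 2TB mirror gives about 1TB, which matches what was expected rather than what the GUI displayed.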

-

1 hour ago, sparklyballs said:

that's something you need to sort on the host and is not anything to do with our image specifically

Ok thank you, I'll ask in main unraid support then.

-

I asked about this over on

and they say it's more of an Unraid issue than a Transmission issue.

-

I am getting a bunch of:

I believe this info might be helpful reading other people that have this same issue:

Not sure how to proceed.

-

I installed Tautulli and it did its thing but it never showed up under Dockers. Now if I try to install it, I get this message:

root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='tautulli' --net='bridge' -e TZ="America/New_York" -e HOST_OS="unRAID" -e 'PUID'='99' -e 'PGID'='100' -v '/mnt/user/appdata/tautulli/config/':'/config':'rw' -v '':'/log':'rw' 'tautulli/tautulli'

/usr/bin/docker: Error response from daemon: invalid volume spec ":/log:rw": invalid volume specification: ':/log:rw'.

See '/usr/bin/docker run --help'.

The command failed.

-
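The error points at the second `-v` argument, where the host side of the `/log` mapping is empty. As an illustration only, this Python sketch flags that kind of mistake before a command is run. It is a simplified check, not Docker's actual parser, and `check_volume_spec` is a hypothetical helper name.

```python
from typing import Optional


def check_volume_spec(spec: str) -> Optional[str]:
    """Return a problem description for a 'host:container[:mode]' spec, or None if it looks fine."""
    parts = spec.split(":")
    if len(parts) not in (2, 3):
        return "expected host:container or host:container:mode"
    host, container = parts[0], parts[1]
    if not host:
        # This is the case in the failing command: '' mapped to '/log'.
        return "host path is empty"
    if not container.startswith("/"):
        return "container path must be absolute"
    return None


if __name__ == "__main__":
    for spec in ("/mnt/user/appdata/tautulli/config/:/config:rw", ":/log:rw"):
        print(repr(spec), "->", check_volume_spec(spec) or "ok")
```

In the template, that would correspond to a path mapping for `/log` whose host path was left blank.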

29 minutes ago, trurl said:

And you must not allow yourself to have multiple shares with the same name except for case. SMB and Windows can't distinguish them and will only show you one of them. This has caused a lot of people to think their files are lost. And you also must not allow any user shares to have the same name as a disk, such as 'cache', 'disk1', etc. Same reason.

You should investigate why you had these in the first place. Often it is caused by a misconfigured docker, where it has specified the volume mappings using the wrong case.

A user share is simply the collection of all top level folders on all disks with the same name, so that explains why you got a share by having the folder. Even if you don't create a share, if the top level folder exists then there is a share with the same name with default settings unless you change them.

My old array was drivepool. These drives are from that. Since drivepool was in Windows, it was probably just me accidentally missing the shift key when I made the folder on that particular drive. It never got noticed cause Windows doesn't care about case. The drive is an 8-year-old 3TB WD Green, one of the oldest drives in my previous array. After the data is off of it I'm just going to be formatting/testing it and then reusing it elsewhere in the house.

-

So I'm in the middle of transferring data over from my old array (drivepool) using Dolphin and I didn't notice till I started the copy that one of the Movies directories had a lowercase "m".

It's still in the middle of the transfer now (2.95TB) but when it is done, how do I go about fixing this? It seems like it also made a new share as well. Can I just use Dolphin and move the contents from "movies" to "Movies" then delete the directory/share?
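To check whether any other folders have the same problem, a rough Python sketch like this one lists top-level folder names that differ only by case across a set of disk mount points. The function name is hypothetical, and the mount points are passed in as arguments rather than assumed.

```python
import os
import sys
from collections import defaultdict


def find_case_collisions(disk_roots):
    """Map lowercased name -> sorted spellings, for top-level folders that differ only by case."""
    spellings = defaultdict(set)
    for root in disk_roots:
        for entry in os.scandir(root):
            if entry.is_dir():
                spellings[entry.name.lower()].add(entry.name)
    # Keep only names that appear with more than one spelling.
    return {name: sorted(found) for name, found in spellings.items() if len(found) > 1}


if __name__ == "__main__":
    # Example: python3 collisions.py <disk mount point> <disk mount point> ...
    for name, found in find_case_collisions(sys.argv[1:]).items():
        print(name, "appears as:", ", ".join(found))
```

Anything it prints is a pair like "Movies" and "movies" that would show up as two separate user shares.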

-

2 hours ago, dlandon said:

The best I can tell from the log is that the disk you unplugged without un-mounting caused the problem.

Thank you! I haven't had it happen again and I was pretty sure that it was my fault that it happened, but thank you for clarifying for me.

While I am here in the Unassigned Devices thread, I plan on having a disk off-array for downloading/processing before moving the final files to the array. Is there a particular format for my disk that I should use for that?

-

2 minutes ago, trurl said:

Yeah I just realized that cache-prefer might be exactly what you intend. Many expect that setting to result in files getting moved from cache to the array for some reason. All of those settings aren't easily understood from their one-word specifiers.

Hey no worries, I'm still glad you said something. I probably reread the help description for cache options 10 times before it clicked for me when I first started going through the options. I still have 6 more drives to empty to the array before I start messing more with plugins/shares/dockers and all that, including the download drive. Thank you for your help, now I have some stuff to think about in the meantime.

-

Cache prefer keeps the data in the cache unless the cache is full, then writes it to the array. When mover runs, it moves anything on the array back to the cache, provided there is space, no? I would like my Temp directory to be on the SSD for speed, when possible.
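As a toy model of the behaviour described in that paragraph, and nothing more, the following Python sketch shows where a new write would land and what a mover run would bring back. It models only the description in this post, not Unraid's real mover; both function names are hypothetical and sizes are in GB.

```python
def place_new_file(size_gb, cache_free_gb):
    """Where a new write lands under the 'prefer' behaviour described above."""
    return "cache" if size_gb <= cache_free_gb else "array"


def mover_pass(files_on_array_gb, cache_free_gb):
    """Move array files back to cache while they fit; return (moved, left_on_array)."""
    moved, left = [], []
    for size in files_on_array_gb:
        if size <= cache_free_gb:
            moved.append(size)
            cache_free_gb -= size  # each moved file uses up cache space
        else:
            left.append(size)
    return moved, left


if __name__ == "__main__":
    print(place_new_file(50, 240))
    print(place_new_file(300, 240))
    print(mover_pass([100, 200, 30], 240))
```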

EDIT: I just read my own quote, I'm doing a lot of things at once and I mistyped there, I'll go back and edit it.

-

43 minutes ago, trurl said:

It is certainly possible to use an Unassigned Device for your downloads. You just can't use mover to get files to/from it.

I think some of the apps you may be using have ways to move files around, or at least download to one place and unpack to another, for example. So maybe some of these apps could do some of the work depending on how you configure them.

There is also User Scripts plugin which will allow you to schedule a script. Maybe something for you in the sample scripts there or the Unassigned Devices sample scripts.

And, you might even make a copy of mover and modify it to your purposes. It is just a bash script at /usr/local/sbin/mover.

I could do the unassigned drive for downloads, which will allow sonarr/radarr/etc to do their thing to them on that drive, then move them to their final location on the array.

Then, I could do a Temp share with cache=prefer, which should keep it on the SSD unless it's full. After some more thought and your comments, there doesn't seem to be a reason to move the temp data anywhere else. I can just delete it if I need more room, cause that's why it is temp in the first place.

Editing mover is a cool idea, I am going to have to build a test rig so I can mess with stuff like that.

I'm sure you have far more experience in data storage than I do. While I promise you I am not lying about how unlucky I have been with my download disks in the past, I understand that my personal experience is not fact, and it could entirely be something other than "too much downloading is killing them". I'm just trying to explain things as they happened to me, not contradict anything you are saying.

Thank you for all your help so far. I think, at least for now, Temp on cache, unassigned disk for downloading.

Do you think it matters what format the download disk is? And if so, do you have a recommendation? I'm really only familiar with FAT, FAT32, exFAT and NTFS myself.

-

33 minutes ago, trurl said:

I think it likely that you have misdiagnosed the problem. In what way was the disk "killed"? There are a number of ways to have disk problems, and most of them don't mean there is anything actually wrong with the disk itself. If you have a situation in the future please ask for help.

As in the drive died, and was no longer usable at all. There wasn't anything to misdiagnose, flex raid and drive pool store the files in a way where it's trivial to see what files are on what disk, and both times the disk that died was the one handling torrents/usenet downloads/unpacks. It could be a coincidence I guess, but after that I just moved the downloads to their own drive. I've burned out 2 more since then over the course of 5 years. Both times the download drive. As far as the specifics behind the exact failure, all the drives failed their respective diag tools (seatools, etc). 2 I was able to send out for RMA. I have had 4 drives die over the course of 10 years or so, and all 4 were drives that were handling download/unpacking. I download about 4TB a month to it, and that 4TB gets unpacked on it and processed (renamed/etc) before being moved to its final location in the array.

39 minutes ago, trurl said:

How big is your cache? You can have a cache pool which allows you to choose from several btrfs raid configurations, including raid0 which will add their size together. There is no way to treat cache as separate disks.

You might also reconsider how you are currently using cache. I only use it for cache-only shares, such as dockers or temporary files. There is no requirement to cache any user share writes, and most of mine go directly to the array. They are usually written to by automated processes such as downloads and backup, so it is unattended and I am not really waiting for them to complete.

Each user share has cache settings. Here is a good reference of the effect of each of these settings:

https://lime-technology.com/forums/topic/46802-faq-for-unraid-v6/?page=2#comment-537383

I edited my post with that info, but 240GB. Right now I am only using cache for docker/plex/appdata. My plex metadata is 70GB and 2 million+ files. It is a massive pain to back up and restore and I would like to have to do that as little as possible, which is why I don't want to use it for downloads. (Also, sometimes I'm downloading more than the free space available on the cache drive, so I don't want it to spill over to the array.) I'm not done setting up unraid yet tho (no sonarr/jackett/radarr/plexrequests/torrents/etc/etc) so I'm sure more will end up on it.

As far as putting a Temp share as cache-only/preferred, that could work. Sometimes I'm tossing data between computers that is bigger than the available space on the cache, but if that spilled over to the array in those situations it would be ok. The only reason I am talking about backing up and putting the temp on an unassigned drive though is cause I am assuming I will have one for downloads. So it's safe to ignore the temp/backup part of why I want to use it, the main reason is just I'm afraid to download directly to the array or cache drive for fear of shortening its life span.

If this isn't actually a problem and has just been one major coincidence in my life since I started data hoarding, I can just get a 500/1TB SSD and replace the cache and use it for downloads and the like, which I would honestly prefer because not only is it easier to set up it frees up a slot in the server for another array drive.

-

2 hours ago, trurl said:

mover only works with user shares, and unassigned devices are not part of user shares.

Personally I would just do it all on cache

Besides my worry about stressing the SSD with all the extra read/write/etc, I'm not sure I actually have enough space on it. Would it be possible to add say a 4TB drive as a second cache for downloads/temp/CA Backup? I push 3-4 TBs of downloads a month, and I don't want it killing off a drive in the array or my main cache when it fails. Because of what's stored on it (downloads/etc) I don't need it backed up cause I won't really lose anything I need if it dies.

EDIT: Honestly, I'm not sure if I am even asking the right questions here. I'm still wrapping my head around everything while I transfer all my data over to the array. I previously used a Drobo, then Windows + Flexraid and then Windows + stablebit drivepool + snapraid. So having a disk off the array for downloads and temp junk to throw around the network and stuff like that was pretty trivial. It's entirely possible I'm asking for something that doesn't even need to be done, so don't be afraid to just tell me I don't know what I'm talking about. I'm here to learn, so let me just try to explain one more time.

My main array is going to be ~80TB across 13 drives.

My SSD cache is 240GB.

I'll have one drive left in the server (probably 3~4TB, not done testing the drives from the old build yet) not part of the array.

I'm afraid to do my downloading directly to the array. When I first started I did this, and I ended up killing a drive in the array in a month, and then again in 6 months. Both times it was the drive with the download folders on it. Because of that, I moved it to a separate, off array disk.

I'm also afraid to put it on the main cache drive for the same reason. If that drive dies, there goes my plex metadata, and whatever else I will be storing on it (via Prefer option for cache). While this isn't as big of a pain in the ass as rebuilding the array, it's still not something I want to worry about having to do.

If it is off array, and it dies, it's as simple as just slapping a new drive in. Any data (unfinished downloads) on it is easily replaceable, cause sonarr/radarr will just redownload it.

Then, because I have a drive already that is kind of a "throw away" disk, I also keep a folder on it called "Temp" which I throw files and stuff in to easily share on my local network. I have 8-9 computers in the house and countless cell phones/laptops/other devices, and I allow temp to be open to everyone to easily transfer items between devices without having to set up shares on each device. "Temp" works the same way for me, if I lose everything in it, no big deal. Same thing with my CA Backup. Unless the download/temp/backup drive dies at the same time as the flash drive or whatever other thing I am backing up, I'm not losing anything I can't just easily replace.

Since this is what I'm used to, I'm trying to recreate something similar in unRaid. If there is a better way to do it than unassigned devices, please just point me in the right direction.

Sorry for the long post, but I'm just trying to explain it as best I can.

-

I'm still wrapping my head around how linux/unraid does things. In my old set up, I had an extra drive in the rig but not a part of the array for downloads (sab/torrents/etc) to go to. That way the download/unrar/etc operations were not taking place on the array or the OS drive. I also had a "temp" folder set up on the drive so that anything I just need to pass around my local network could get tossed into it.

In unRaid, the OS drive is a flash drive (well, stored in RAM while running). Still, the SSD cache I have in the rig is holding all my plex metadata, docker stuff, etc and I'd rather not do downloads etc on it if possible.

Now, I've been playing around and I think I figured out a solution, but I wanted to ask if what I was doing was "best practice" or if I was missing a better way to handle things.

I was thinking about mounting a drive with "unassigned devices", and pointing sab/torrents to download and handle the files there, before radarr/sonarr/etc pick them up and move them to the array. I could also create a temp directory on the drive as before. I was thinking about making a "temp" share that used cache, so that it stored whatever files I threw in it on the SSD, then moved it to the unassigned drive that night via mover. I'm pretty sure I understand how to set that up on my own. My question is...should I be handling it differently? I also was going to make a Backup directory on the drive to back up the flash drive and other relevant stuff using CA Appdata Backup / Restore.

I'm just trying to minimize the amount of time the drives in the array need to be used.

-


6 hours ago, dlandon said:

UD will unmount all devices when unRAID is shut down. You wouldn't have to unmount your device before you shut down unRAID. What probably happened is that the device you pulled and didn't unmount hung the shutdown.

When unRAID is shutting down there is a timer that runs that will force a shutdown if things are hanging. When that happens, unRAID will attempt to write diagnostics to the /boot/log/ folder. See if the diagnostics were written there and post them and we can see more of what happened.

These?

-

I recently had an unclean shutdown and I thought it might have had something to do with UD.

What happened was I had mounted a drive to copy data off of, and when the transfer was done I forgot to unmount it before I pulled it. I plugged another drive into the same spot, got nothing, moved it a slot over, and continued transferring data from the next drive. After the transfer started I had that "...damn I'm an idiot" moment and realized what I did. So after the second transfer finished, I unmounted that drive and then hit "power down". This is when the unclean shutdown happened.

I'm not sure if it had anything to do with UD but it's the only weird thing that happened during that session that I can think of. I posted on general support and they pointed me this way so here I am. I'm too stupid to figure out how to dig through the diag zip it gave me, and I can't seem to figure out exactly why I had the bad shutdown.

-

24 minutes ago, Frank1940 said:

Why don't you post over in the UD support thread and ask if the plugin unmounts drives when the operating system sends the shutdown message to the plugins?

Thank you, I will give that a try.

Share permissions

in General Support

Posted · Edited by FrostyWolf

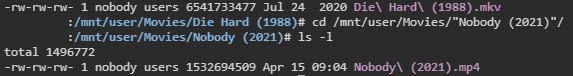

I'm still having this issue. I messed around a bit today and I still can't figure this out...

Die Hard (1988).mkv - When I connected to my server via samba share, I can edit, delete, etc this file

Nobody (2021).mp4 - When I connected to my server via samba share, I get the you need permission error

If I run Fix Permissions, I can then access both.

I'm not a Linux vet...but don't they have the same permissions? What is Fix Permissions doing that lets me then access it?
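One general point that may be relevant here: on Unix-like systems, deleting or renaming a file is governed by the permissions of the directory that contains it, not by the file's own mode, so two files with identical modes can behave differently if their parent folders differ. A small Python sketch of that check follows. `others_can_delete` is a hypothetical helper, and it assumes the accessing account is neither the owner nor in the group, ignoring the sticky bit and ACLs.

```python
import os
import stat


def others_can_delete(path: str) -> bool:
    """True if 'other' users have write and search permission on the parent directory."""
    parent = os.path.dirname(os.path.abspath(path))
    mode = os.stat(parent).st_mode
    # Removing a directory entry needs both write and execute on the directory.
    needed = stat.S_IWOTH | stat.S_IXOTH
    return (mode & needed) == needed
```

Running it against one file that can be deleted and one that cannot would show whether the containing folders are what differ.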

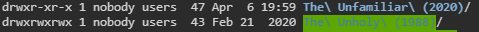

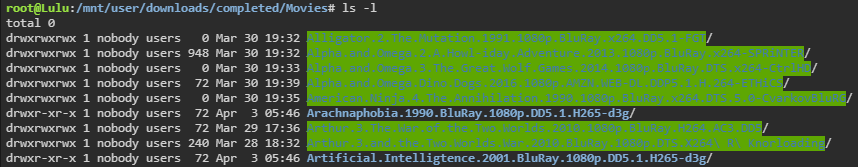

EDIT: After some more digging, the issue seems to be with the directory permissions:

And just to show:

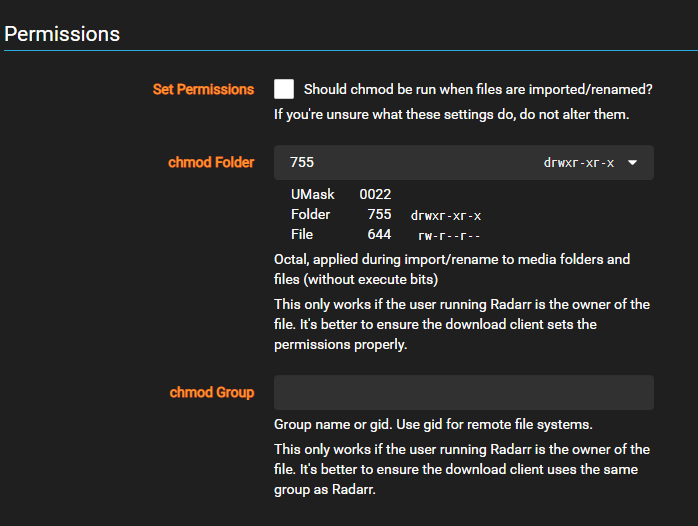

I am not doing anything with permissions with radarr (or any container) and this happens on all files, not just movies.

Also:

Umask is off in nzbget, which is where the files came from.

When I run the umask command in terminal in unraid, it is set to 0000 for /mnt/, /mnt/user, and /mnt/user/Movies

I do not understand where these permissions on new directories are coming from, or why they are being set.
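Worth noting when checking this: the umask is a per-process setting, so the value a terminal on the host reports is that shell's own umask and does not say what a container process uses when it creates a directory. The sketch below (Python, illustrative only, with `mode_after_umask` as a hypothetical helper) shows how a requested mode and a umask combine, which can help when working backwards from the modes seen on new directories.

```python
import stat


def mode_after_umask(requested: int, umask: int) -> str:
    """Return the ls-style mode string a new directory gets for a requested mode and umask."""
    # The kernel clears every permission bit that is set in the umask.
    return stat.filemode(stat.S_IFDIR | (requested & ~umask))


if __name__ == "__main__":
    for umask in (0o000, 0o002, 0o022):
        print(f"umask {umask:04o}:", mode_after_umask(0o777, umask))
```

A directory that shows up without group/other write access was therefore created either by a process with a non-zero umask or by one that set the mode explicitly.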

You can see the same thing is happening in /mnt/user/downloads/

March 30th was the last day I ran fix permissions, everything after that doesn't have write access.
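To see how widespread it is, a rough Python sketch like the following lists everything under a folder that lacks group or other write access. The function name is hypothetical and the folder to scan is passed on the command line.

```python
import os
import stat
import sys


def lacking_write(root):
    """Yield (path, mode string) for entries under root without group and other write access."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode
            if not (mode & stat.S_IWGRP and mode & stat.S_IWOTH):
                yield path, stat.filemode(mode)


if __name__ == "__main__":
    # Example: python3 lacking_write.py <share path>
    for root in sys.argv[1:]:
        for path, mode in lacking_write(root):
            print(mode, path)
```

Comparing the output against the date of the last permissions run should show whether only newer entries are affected.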

A Band-Aid fix would be to go through radarr/sonarr/etc and every other container that has a permissions option and set it in each one, but I want to understand why this is suddenly happening for everything.