technorati

-

Posts

25 -

Joined

-

Last visited

Posts posted by technorati

-

-

I have seen a few other posts about this, but nothing that was said there has fixed this for me. I have a GPU that I need to pass-thru to a VM. The device shows up in the PCI list (under Linux)/Device Manager (under Windows), but I get no signal out the HDMI port.

Things I have already checked/tried:

0) I have confirmed the GPU, monitor, and HDMI cable all work when swapped to bare-metal devices, and this setup was working fine until I upgraded to 6.9.2.

1) I have tried switching OSes from Linux to Windows

2) I have tried switching "Machine" type; I have tried every version of both machine types under both OSes

3) I have confirmed that the GPU and its associated sound device are part of an otherwise-isolated IOMMU group, and I have tried both with and without that IOMMU group assigned to VFIO at boot.

4) I have tried both SeaBIOS and OVMF

5) I have tried using SpaceInvaderOne's vbios dump script, but it always generates an error, even with Docker and VMs disabled. (See https://pastebin.com/ejFpvmPi for output); I have done as suggested in the video and rebooted the machine with the GPU bound to VFIO at boot, to no avail.

Any ideas or suggestions would be appreciated; without this HDMI port working, we are unable to monitor our surveillance cameras in real time.
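For anyone wanting to repeat the check in item 3, here is a minimal Python sketch that lists every device sharing an IOMMU group with a given PCI address, along with the driver each one is bound to. It assumes the standard sysfs layout (`/sys/bus/pci/devices/<address>/iommu_group/devices/`, with a `driver` symlink on bound devices). The function name `iommu_group_members` and the `sysfs_root` parameter are hypothetical, introduced only for this illustration.

```python
from pathlib import Path


def iommu_group_members(pci_address, sysfs_root="/sys"):
    """Map each device in the IOMMU group of `pci_address` to its bound driver.

    Returns a dict such as {"0000:02:00.0": "vfio-pci", "0000:02:00.1": None}.
    A value of None means no driver is currently bound to that device.
    `sysfs_root` is a hypothetical parameter that makes the function testable
    against a fake directory tree; on a real system leave it as "/sys".
    """
    group_dir = (
        Path(sysfs_root) / "bus/pci/devices" / pci_address / "iommu_group/devices"
    )
    members = {}
    for entry in sorted(group_dir.iterdir()):
        driver_link = entry / "driver"
        # `driver` is a symlink to the bound driver's directory; it is absent
        # when the device has no driver bound.
        if driver_link.exists():
            members[entry.name] = driver_link.resolve().name
        else:
            members[entry.name] = None
    return members
```

On the machine described here, calling `iommu_group_members("0000:02:00.0")` would be expected to show both the GPU and its audio function; if either reports something other than `vfio-pci`, the binding did not take effect for that function.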

    Loading config from /boot/config/vfio-pci.cfg
    BIND=0000:02:00.0|10de:1d01 0000:02:00.1|10de:0fb8
    ---
    Processing 0000:02:00.0 10de:1d01
    Vendor:Device 10de:1d01 found at 0000:02:00.0
    IOMMU group members (sans bridges):
    /sys/bus/pci/devices/0000:02:00.0/iommu_group/devices/0000:02:00.0
    /sys/bus/pci/devices/0000:02:00.0/iommu_group/devices/0000:02:00.1
    Binding...
    Successfully bound the device 10de:1d01 at 0000:02:00.0 to vfio-pci
    ---
    Processing 0000:02:00.1 10de:0fb8
    Vendor:Device 10de:0fb8 found at 0000:02:00.1
    IOMMU group members (sans bridges):
    /sys/bus/pci/devices/0000:02:00.1/iommu_group/devices/0000:02:00.0
    /sys/bus/pci/devices/0000:02:00.1/iommu_group/devices/0000:02:00.1
    Binding...
    0000:02:00.0 already bound to vfio-pci
    0000:02:00.1 already bound to vfio-pci
    Successfully bound the device 10de:0fb8 at 0000:02:00.1 to vfio-pci
    ---
    vfio-pci binding complete
    Devices listed in /sys/bus/pci/drivers/vfio-pci:
    lrwxrwxrwx 1 root root 0 Sep 5 17:22 0000:02:00.0 -> ../../../../devices/pci0000:00/0000:00:02.0/0000:02:00.0
    lrwxrwxrwx 1 root root 0 Sep 5 17:22 0000:02:00.1 -> ../../../../devices/pci0000:00/0000:00:02.0/0000:02:00.1
    ls -l /dev/vfio/
    total 0
    crw------- 1 root root 249, 0 Sep 5 17:22 33
    crw-rw-rw- 1 root root 10, 196 Sep 5 17:22 vfio

-

Apologies - you'd think that by my age I'd be aware that correlation does not imply causation, but ¯\_(ツ)_/¯

-

Ever since the update to 1.6.2, my unRAID machine no longer joins my ZT network, and when I try to debug inside the container, I get errors from the zerotier-cli tool:

    zerotier-cli info
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    200 info XXXXXXXXXXX 1.6.2 OFFLINE

I have tried deleting the container and reinstalling from CA, but it comes back with the same issue.

Rolling back to spikhalskiy/zerotier:1.4.6 has fixed the issue for now.

-

What is the process for switching the email address attached to my unraid purchase?

-

I have had the "extended tests" running for almost 20 hours, which is longer than it takes me to do a full parity rebuild. I attached strace to the PID identified in "/tmp/fix.common.problems/extendedPID" and for more than 4 hours it's been looping continuously over the same 2856 directories under my Radarr:

    stat("/mnt/user/darr/radarr/MediaCover/XXXX", {st_mode=S_IFDIR|0755, st_size=158, ...}) = 0

Is this expected behaviour?
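To put a number on how often the same directories are being revisited, a saved strace log can be tallied per path. Below is a minimal Python sketch, assuming the trace was written to a text file with one syscall per line (for example via strace's `-o` option). The function name `count_stat_paths` is hypothetical, and the regular expression only covers the `stat`, `lstat`, and `newfstatat` calls.

```python
import re
from collections import Counter

# Matches lines such as:
#   stat("/mnt/user/...", {st_mode=S_IFDIR|0755, ...}) = 0
# optionally prefixed with "[pid 1234] " when strace follows several processes.
STAT_CALL = re.compile(
    r'^(?:\[pid\s+\d+\]\s+)?(?:stat|lstat|newfstatat)\(.*?"([^"]+)"'
)


def count_stat_paths(strace_lines):
    """Count how many times each path appears in stat-family syscalls."""
    counts = Counter()
    for line in strace_lines:
        match = STAT_CALL.match(line.strip())
        if match:
            counts[match.group(1)] += 1
    return counts
```

Feeding it the lines of the saved log and looking at `count_stat_paths(lines).most_common(10)` would show whether a fixed set of directories is being stat'ed over and over (a loop) or whether the scan is slowly making progress through new paths.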

-

This used to be working for me, but recently stopped. I now see it stuck forever in REQUESTING_CONFIGURATION. Obviously something has changed, but I cannot for the life of me determine WHAT, and I don't know where to go next to debug.

    / # zerotier-cli info
    200 info ca96d2e10c 1.4.6 OFFLINE
    / # zerotier-cli listnetworks
    200 listnetworks <nwid> <name> <mac> <status> <type> <dev> <ZT assigned ips>
    200 listnetworks 8056c2e21c000001 02:ca:96:ce:03:ce REQUESTING_CONFIGURATION PRIVATE ztmjfmfyq5 -
    / # zerotier-cli listpeers
    200 listpeers <ztaddr> <path> <latency> <version> <role>
    200 listpeers 34e0a5e174 - -1 - PLANET
    200 listpeers 3a46f1bf30 - -1 - PLANET
    200 listpeers 992fcf1db7 - -1 - PLANET
    200 listpeers de8950a8b2 - -1 - PLANET
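One thing that stands out in the listpeers output is that every PLANET peer has `-` for its path and `-1` for latency. A small Python sketch that pulls out such peers is below. It assumes the column layout shown in the header row above and one record per line; the function name `peers_without_path` is hypothetical.

```python
def peers_without_path(listpeers_output):
    """Return (ztaddr, role) for every peer whose path column is "-".

    Expects text in the layout printed by the header row:
        200 listpeers <ztaddr> <path> <latency> <version> <role>
    with one record per line.
    """
    unreachable = []
    for line in listpeers_output.splitlines():
        fields = line.split()
        if len(fields) != 7 or fields[:2] != ["200", "listpeers"]:
            continue  # not a listpeers record
        ztaddr, path, _latency, _version, role = fields[2:]
        if ztaddr.startswith("<"):
            continue  # header row
        if path == "-":
            unreachable.append((ztaddr, role))
    return unreachable
```

If all root (PLANET) peers come back in this list, that would be consistent with the node being unable to exchange traffic with the ZeroTier root servers at all, which is probably worth ruling out (firewall, container network mode) before looking at the network configuration itself.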

-

It was formatted once before, but when shfs crashed it may never have come back. I have created a new config and removed the cache drive from the array to see whether the problem occurs again. Thank you both for looking into this.

-

-

"Fix Common Problems" warned me about the SSD in the array after booting, so I made a new config with no SSD in the array.

I never got any alerts from the system that cache was full, and the only thing going in the cache should be the one docker container's appdata...I guess I'll just watch and see if it happens again at this point. Thanks, @trurl.

-

@trurl is the docker config still useful, seeing now that it was a crash of shfs that seemingly took everything south with it?

-

I had to physically reboot the machine - I hope that doesn't mean the diagnostics are worthless, but here they are just in case.

-

Actually, the webUI is now completely unresponsive, and my SSH sessions have all been terminated.

-

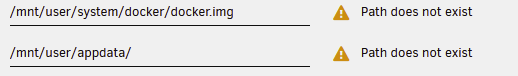

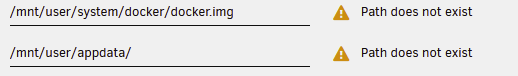

Turns out, the problem is much bigger than I thought at first:

-

I was just checking in on my unraid box, and found that my docker container had crashed, and the data on a volume that was mapped into the container is completely hosed. The only message in the syslog was

    [32085.673877] shfs[5258]: segfault at 0 ip 0000000000403bd6 sp 0000149d0d945930 error 4 in shfs[403000+c000]
    [32085.673882] Code: 89 7d f8 48 89 75 f0 eb 1d 48 8b 55 f0 48 8d 42 01 48 89 45 f0 48 8b 45 f8 48 8d 48 01 48 89 4d f8 0f b6 12 88 10 48 8b 45 f0 <0f> b6 00 84 c0 74 0b 48 8b 45 f0 0f b6 00 3c 2f 75 cd 48 8b 45 f8

Now, my data is ultimately fine - I just recovered the original data from backup. But I'm anxious now about continuing to use unRAID in this circumstance. Is there any way to discover what happened?
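The segfault line itself carries a bit of information that can be decoded. Here is a minimal Python sketch, assuming the usual x86 kernel message layout (`segfault at ADDR ip IP sp SP error CODE in OBJECT[BASE+SIZE]`) and the x86 page-fault error-code bits (bit 0: page was present, bit 1: write access, bit 2: user mode). The function name `decode_segfault` is hypothetical.

```python
import re

SEGFAULT = re.compile(
    r"segfault at (?P<addr>[0-9a-f]+) ip (?P<ip>[0-9a-f]+) sp (?P<sp>[0-9a-f]+) "
    r"error (?P<err>[0-9a-f]+) in (?P<obj>[^\[\s]+)"
    r"\[(?P<base>[0-9a-f]+)\+(?P<size>[0-9a-f]+)\]"
)


def decode_segfault(line):
    """Decode a kernel 'segfault at ...' log line into its components.

    Returns None if the line does not match the expected layout.
    """
    m = SEGFAULT.search(line)
    if m is None:
        return None
    err = int(m["err"], 16)
    return {
        "object": m["obj"],
        "fault_address": int(m["addr"], 16),
        # Instruction pointer relative to the start of the mapped region.
        "offset_in_mapping": int(m["ip"], 16) - int(m["base"], 16),
        "page_present": bool(err & 1),
        "write_access": bool(err & 2),
        "user_mode": bool(err & 4),
    }
```

For the line above this gives a fault address of 0, an offset of 0xbd6 into the mapped region of shfs, and error code 4 (user-mode read of a page that was not present). Taken together, that looks like a read through a NULL pointer inside shfs itself, rather than something in the container.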

-

No, they are part of the array.

-

3 hours ago, trurl said:

The yellow highlight will not go away when you access the drives Attributes. It is the Dashboard SMART warning icon and associated Notifications that are supposed to no longer happen once you have acknowledged, until the count increases. Are you still getting those?

Yes, I'm still getting them - there's a screenshot a few posts above here showing that it came back again this morning.

Quote: Nothing obvious in diagnostics that makes me think there is a Flash problem. Has Fix Common Problems ever told you there was a Flash problem?

No, I just ran "Fix Common Problems" and it doesn't report any issues with flash; nor have I ever seen anything in the logs or other notifications suggesting there was.

Quote: Not ideal, but you can configure each disk regarding which SMART attributes get monitored by clicking on the disk to get to its page, then go to SMART Settings and uncheck the box for the attribute. Of course that means it will never check that attribute again, though you can always take a look at it yourself in the Attributes section as before.

I'd prefer to live with it, so at least I get notified if the count does increase, indicating that a larger problem exists. Mostly, I was just curious why it would keep notifying me of something I'd already acknowledged, but it sounds like there's not a ready/obvious answer to that.

-

3 minutes ago, trurl said:

From Main, click on a drive to get to its page, then go to the Attributes section. Do any of the other attributes have yellow highlight?

No - that's exactly what I meant by "no other attribute on either drive shows any indicators of an alert" - the only field in yellow on either drive is that UDMA CRC Count.

juggernaut-diagnostics-20200511-1001.zip

Attached is an updated diagnostics.zip from when I had the error this morning.

-

15 minutes ago, trurl said:

I have one disk with 2 CRC errors. I acknowledged them long ago by clicking on the "thumbs down" on the Dashboard. Since they haven't increased I don't get any further warnings.

Is that what you have done to acknowledge them? Have they increased?

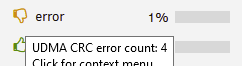

Yes, I clicked the "Thumbs down" icon and chose "Acknowledge" from the menu. The values (UDMA CRC Count) have not increased on the drives, but certain events (stopping the array, rebooting the server) sometimes cause unRAID to alert me again on the same value in the same attribute. No other attribute on either drive shows any indicators of an alert.

-

On 5/9/2020 at 7:23 PM, trurl said:

So have you tried it again after fixing that problem?

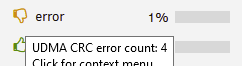

Yes, I am still seeing the SMART status come back to error again intermittently:

(I just confirmed there have been no csrf_token errors in my logs since my last reboot)

-

Based on that FAQ, I believe this is because there was another computer on the network that still had the unRAID web UI open across multiple reboots. It wasn't clear to me, though, whether you're saying this is the reason that it's not saving the SMART acks?

Thanks for looking into this!

-

Other settings seem to stick OK, but here's my Diagnostics.

-

Yes, that's what I'm doing, but it seems to come back and alert me again every time I stop the array or reboot the machine.

-

I have pulled two drives from a system that had some bad/cheap SATA cables in it, and added them to my unRAID. When I pulled the drives, the SATA cables were obviously coming apart where they mount to the drive, so I was not surprised to find that unRAID alerted me these drives had positive values in "UDMA CRC error count" (12 on one drive, 4 on the other).

As I'm pretty confident this was due to the bad linkage they previously had, I'd like to just acknowledge this error, unless those values start increasing all of a sudden. But it seems every time I stop / start the array, I have to re-acknowledge those errors. Is there any way to permanently let the system know "Yep - 12 and 4 are acceptable values for those two drives"?
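To illustrate the behaviour being asked for (stay quiet at 12 and 4, alert only if the count goes up), here is a minimal Python sketch. It is not how unRAID stores acknowledgements. It assumes the attribute table printed by `smartctl -A`, where UDMA CRC errors are reported as attribute ID 199 with the raw value in the tenth column; the function names `udma_crc_count` and `crc_increased` are hypothetical.

```python
def udma_crc_count(smartctl_attributes_text):
    """Extract the raw UDMA CRC error count (attribute 199) from the text of
    a `smartctl -A` attribute table. Returns None if the row is not found."""
    for line in smartctl_attributes_text.splitlines():
        fields = line.split()
        # Columns: ID# NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW
        if len(fields) >= 10 and fields[0] == "199":
            return int(fields[9])
    return None


def crc_increased(current, acknowledged):
    """True only when the current count exceeds the acknowledged baseline."""
    return current is not None and current > acknowledged
```

With baselines of 12 and 4 for the two drives, `crc_increased(12, 12)` stays False across array stops and reboots, and only a reading of 13 or more on the first drive would trigger a new alert.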

-

Can we get mmv added? Just in case you need a source distribution, I've grabbed the latest distribution from Debian and applied all their patches, and merged it into a single git repo at https://github.com/technoratii/mmv.git

No signal to HDMI with pass-thru GPU after upgrading to 6.9.2

in VM Engine (KVM)

Posted

I am not using vbios because I have been unable to dump it.