technorati

No signal to HDMI with pass-thru GPU after upgrading to 6.9.2

technorati replied to technorati's topic in VM Engine (KVM)

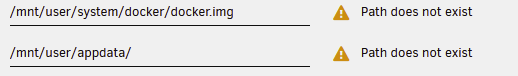

I am not using vbios because I have been unable to dump it.

I have seen a few other posts about this, but nothing that was said there has fixed this for me. I have a GPU that I need to pass-thru to a VM. The device shows up in the PCI list (under Linux)/Device Manager (under Windows), but I get no signal out of the HDMI port.

Things I have already checked/tried:

0) I have confirmed the GPU, monitor, and HDMI cable all work when swapped to bare-metal devices, and this setup was working fine until I upgraded to 6.9.2.
1) I have tried switching OSes from Linux to Windows.
2) I have tried switching "Machine" type; I have tried every version of both machine types under both OSes.
3) I have confirmed that the GPU and its associated sound device are part of an otherwise-isolated IOMMU group, and have tried both with and without that IOMMU group being assigned to VFIO at boot.
4) I have tried both SeaBIOS and OVMF.
5) I have tried using SpaceInvaderOne's vbios dump script; it always generates an error, even with Docker and VMs disabled (see https://pastebin.com/ejFpvmPi for output). I have done as suggested in the video and rebooted the machine with the GPU bound to VFIO at boot, to no avail.

Any ideas or suggestions would be appreciated. Without this HDMI port working, we are unable to monitor our surveillance cameras in real time.

    Loading config from /boot/config/vfio-pci.cfg
    BIND=0000:02:00.0|10de:1d01 0000:02:00.1|10de:0fb8
    ---
    Processing 0000:02:00.0 10de:1d01
    Vendor:Device 10de:1d01 found at 0000:02:00.0
    IOMMU group members (sans bridges):
    /sys/bus/pci/devices/0000:02:00.0/iommu_group/devices/0000:02:00.0
    /sys/bus/pci/devices/0000:02:00.0/iommu_group/devices/0000:02:00.1
    Binding...
    Successfully bound the device 10de:1d01 at 0000:02:00.0 to vfio-pci
    ---
    Processing 0000:02:00.1 10de:0fb8
    Vendor:Device 10de:0fb8 found at 0000:02:00.1
    IOMMU group members (sans bridges):
    /sys/bus/pci/devices/0000:02:00.1/iommu_group/devices/0000:02:00.0
    /sys/bus/pci/devices/0000:02:00.1/iommu_group/devices/0000:02:00.1
    Binding...
    0000:02:00.0 already bound to vfio-pci
    0000:02:00.1 already bound to vfio-pci
    Successfully bound the device 10de:0fb8 at 0000:02:00.1 to vfio-pci
    ---
    vfio-pci binding complete
    Devices listed in /sys/bus/pci/drivers/vfio-pci:
    lrwxrwxrwx 1 root root 0 Sep 5 17:22 0000:02:00.0 -> ../../../../devices/pci0000:00/0000:00:02.0/0000:02:00.0
    lrwxrwxrwx 1 root root 0 Sep 5 17:22 0000:02:00.1 -> ../../../../devices/pci0000:00/0000:00:02.0/0000:02:00.1
    ls -l /dev/vfio/
    total 0
    crw------- 1 root root 249, 0 Sep 5 17:22 33
    crw-rw-rw- 1 root root 10, 196 Sep 5 17:22 vfio
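For anyone wanting to double-check a binding like the one in the log, here is a minimal Python sketch. It assumes the `BIND=` line has the form shown in the log (space-separated `address|vendor:device` entries) and that the driver bound to a device is exposed as the `driver` symlink under `/sys/bus/pci/devices/<address>/`. The function names `parse_vfio_bind` and `bound_driver` are made up for illustration and are not part of Unraid.

```python
import os
from typing import List, Optional, Tuple


def parse_vfio_bind(line: str) -> List[Tuple[str, str]]:
    """Split a BIND= line into (pci_address, vendor:device) pairs."""
    key, _, value = line.strip().partition("=")
    if key != "BIND":
        raise ValueError(f"not a BIND line: {line!r}")
    pairs = []
    for entry in value.split():
        address, _, ids = entry.partition("|")
        pairs.append((address, ids))
    return pairs


def bound_driver(address: str, sysfs_root: str = "/sys") -> Optional[str]:
    """Return the name of the driver bound to a PCI device, or None.

    Assumption: the kernel exposes the binding as a 'driver' symlink.
    """
    link = os.path.join(sysfs_root, "bus", "pci", "devices", address, "driver")
    if not os.path.islink(link):
        return None
    return os.path.basename(os.path.realpath(link))


bind_line = "BIND=0000:02:00.0|10de:1d01 0000:02:00.1|10de:0fb8"
for address, ids in parse_vfio_bind(bind_line):
    # On the host this should report vfio-pci if the boot-time bind worked.
    print(address, ids, bound_driver(address))
```

If both functions report `vfio-pci`, the host side of the binding matches the log, and the missing signal is more likely a guest-side or vbios question than a binding problem.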

-

[Support] spikhalskiy - ZeroTier

technorati replied to Dmitry Spikhalskiy's topic in Docker Containers

Apologies - you'd think that by my age I'd be aware that correlation does not imply causation, but ¯\_(ツ)_/¯

[Support] spikhalskiy - ZeroTier

technorati replied to Dmitry Spikhalskiy's topic in Docker Containers

Ever since the update to 1.6.2, my unRAID machine no longer joins my ZT network, and when I try to debug inside the container, I get errors from the zerotier-cli tool:

    zerotier-cli info
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
    200 info XXXXXXXXXXX 1.6.2 OFFLINE

I have tried deleting the container and reinstalling from CA, but it comes back with the same issue. Rolling back to spikhalskiy/zerotier:1.4.6 has fixed the issue for now.
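The libstdc++ lines look like dynamic-linker warnings rather than the failure itself; the line that matters is the final `200 info ... OFFLINE`. As a rough sketch, the status can be pulled out of that noise in Python as below. It assumes the `200 info <address> <version> <status>` layout seen in the output; `NodeInfo` and `parse_info` are illustrative names, not part of ZeroTier.

```python
from typing import NamedTuple, Optional


class NodeInfo(NamedTuple):
    address: str
    version: str
    status: str


def parse_info(output: str) -> Optional[NodeInfo]:
    """Find the '200 info' line and ignore everything else (e.g. linker warnings)."""
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 5 and fields[0] == "200" and fields[1] == "info":
            return NodeInfo(fields[2], fields[3], fields[4])
    return None


sample = """\
zerotier-cli: /usr/lib/libstdc++.so.6: no version information available (required by zerotier-cli)
200 info XXXXXXXXXXX 1.6.2 OFFLINE
"""
info = parse_info(sample)
print(info)
```

Comparing the parsed status between the 1.6.2 and 1.4.6 images would confirm whether the rollback is what actually brings the node back online.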

What is the process for switching the email address attached to my unraid purchase?

-


I have had the "extended tests" running for almost 20 hours, which is longer than it takes me to do a full parity rebuild. I attached strace to the PID identified in "/tmp/fix.common.problems/extendedPID" and for more than 4 hours it's been looping continuously over the same 2856 directories under my Radarr:

    stat("/mnt/user/darr/radarr/MediaCover/XXXX", {st_mode=S_IFDIR|0755, st_size=158, ...}) = 0

Is this expected behaviour?
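One way to confirm that the scan really is revisiting the same directories is to save the strace output to a file and count how often each path is stat'ed. The Python sketch below assumes strace lines of the form shown in the post; the sample lines, including the `YYYY` path, are made up for illustration.

```python
import re
from collections import Counter
from typing import Iterable

# Matches stat("..."), lstat("...") and newfstatat(AT_FDCWD, "...") calls.
STAT_RE = re.compile(r'\b(?:l?stat|newfstatat)\((?:AT_FDCWD,\s*)?"([^"]+)"')


def count_stat_paths(lines: Iterable[str]) -> Counter:
    """Count how many times each path appears in stat-family calls."""
    counts: Counter = Counter()
    for line in lines:
        match = STAT_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


sample_lines = [
    'stat("/mnt/user/darr/radarr/MediaCover/XXXX", {st_mode=S_IFDIR|0755, st_size=158, ...}) = 0',
    'stat("/mnt/user/darr/radarr/MediaCover/YYYY", {st_mode=S_IFDIR|0755, st_size=158, ...}) = 0',
    'stat("/mnt/user/darr/radarr/MediaCover/XXXX", {st_mode=S_IFDIR|0755, st_size=158, ...}) = 0',
]
counts = count_stat_paths(sample_lines)
print(len(counts), "distinct paths;", counts.most_common(1))
```

If the number of distinct paths stays at 2856 while the per-path counts keep climbing, that is decent evidence of a loop rather than slow forward progress.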

-

[Support] spikhalskiy - ZeroTier

technorati replied to Dmitry Spikhalskiy's topic in Docker Containers

This used to be working for me, but recently stopped. I now see it stuck forever in REQUESTING_CONFIGURATION. Obviously something has changed, but I cannot for the life of me determine WHAT, and I don't know where to go next to debug.

    / # zerotier-cli info
    200 info ca96d2e10c 1.4.6 OFFLINE
    / # zerotier-cli listnetworks
    200 listnetworks <nwid> <name> <mac> <status> <type> <dev> <ZT assigned ips>
    200 listnetworks 8056c2e21c000001 02:ca:96:ce:03:ce REQUESTING_CONFIGURATION PRIVATE ztmjfmfyq5 -
    / # zerotier-cli listpeers
    200 listpeers <ztaddr> <path> <latency> <version> <role>
    200 listpeers 34e0a5e174 - -1 - PLANET
    200 listpeers 3a46f1bf30 - -1 - PLANET
    200 listpeers 992fcf1db7 - -1 - PLANET
    200 listpeers de8950a8b2 - -1 - PLANET
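In the listpeers output, every PLANET peer shows `-` for its path and `-1` for latency, which suggests the node has no working path to any root server. That would be consistent with the OFFLINE status, and blocked outbound UDP (ZeroTier's default port is 9993) is one common cause, though that is a guess here. A small Python sketch for checking this from captured output follows; it assumes the five-column listpeers layout shown above, and `parse_listpeers` is an illustrative name.

```python
from typing import Dict, List


def parse_listpeers(output: str) -> List[Dict[str, object]]:
    """Parse '200 listpeers' rows, skipping the <header> row and shell prompts."""
    peers = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 7 or fields[:2] != ["200", "listpeers"]:
            continue
        if fields[2].startswith("<"):  # column header row
            continue
        peers.append({
            "address": fields[2],
            "path": fields[3],
            "latency": int(fields[4]),
            "version": fields[5],
            "role": fields[6],
        })
    return peers


sample = """\
/ # zerotier-cli listpeers
200 listpeers <ztaddr> <path> <latency> <version> <role>
200 listpeers 34e0a5e174 - -1 - PLANET
200 listpeers 3a46f1bf30 - -1 - PLANET
200 listpeers 992fcf1db7 - -1 - PLANET
200 listpeers de8950a8b2 - -1 - PLANET
"""
peers = parse_listpeers(sample)
with_path = [p for p in peers if p["path"] != "-"]
print(f"{len(with_path)} of {len(peers)} peers have a path")
```

Zero peers with a path points at connectivity between the container and the roots, not at the network's own configuration.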


segfault killed my docker container and corrupted data

technorati replied to technorati's topic in General Support

It was formatted once before, but when shfs crashed it may never have come back. I have created a new config and removed the cache drive from the array to see whether the problem occurs again. Thank you both for looking into this.

segfault killed my docker container and corrupted data

technorati replied to technorati's topic in General Support

Got another shfs segfault. Diagnostics attached: hyperion-diagnostics-20200617-1313.zip

segfault killed my docker container and corrupted data

technorati replied to technorati's topic in General Support

"Fix Common Problems" warned me about the SSD in the array after booting, so I made a new config with no SSD in the array. I never got any alerts from the system that cache was full, and the only thing going in the cache should be the one docker container's appdata...I guess I'll just watch and see if it happens again at this point. Thanks, @trurl. -

segfault killed my docker container and corrupted data

technorati replied to technorati's topic in General Support

@trurl, is the docker config still useful, now that it seems to have been a crash of shfs that took everything south with it?

segfault killed my docker container and corrupted data

technorati replied to technorati's topic in General Support

I had to physically reboot the machine - I hope that doesn't mean the diagnostics are worthless, but here they are just in case: hyperion-diagnostics-20200616-1452.zip

segfault killed my docker container and corrupted data

technorati replied to technorati's topic in General Support

-

I was just checking in on my unraid box, and found that my docker container had crashed, and the data on a volume that was mapped into the container is completely hosed. The only message in the syslog was:

    [32085.673877] shfs[5258]: segfault at 0 ip 0000000000403bd6 sp 0000149d0d945930 error 4 in shfs[403000+c000]
    [32085.673882] Code: 89 7d f8 48 89 75 f0 eb 1d 48 8b 55 f0 48 8d 42 01 48 89 45 f0 48 8b 45 f8 48 8d 48 01 48 89 4d f8 0f b6 12 88 10 48 8b 45 f0 <0f> b6 00 84 c0 74 0b 48 8b 45 f0 0f b6 00 3c 2f 75 cd 48 8b 45 f8

Now, my data is ultimately fine - I just recovered the original data from backup. But I'm anxious now about continuing to use unRAID in this circumstance. Is there any way to discover what happened?
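The segfault line itself carries a fair amount of information. As a rough sketch, the Python below decodes it under two assumptions: that the line follows the usual Linux x86 `segfault at ... ip ... sp ... error ... in name[base+size]` layout, and that the error value is the hex x86 page-fault error code (bit 0 = page was present, bit 1 = write, bit 2 = user mode). `decode_segfault` is an illustrative name.

```python
import re
from typing import Dict, Optional

SEGFAULT_RE = re.compile(
    r"(?P<comm>\S+)\[(?P<pid>\d+)\]: segfault at (?P<addr>[0-9a-f]+) "
    r"ip (?P<ip>[0-9a-f]+) sp (?P<sp>[0-9a-f]+) error (?P<err>[0-9a-f]+) "
    r"in (?P<obj>[^\[]+)\[(?P<base>[0-9a-f]+)\+(?P<size>[0-9a-f]+)\]"
)


def decode_segfault(line: str) -> Optional[Dict[str, object]]:
    """Decode a kernel segfault line into its fields (x86 layout assumed)."""
    m = SEGFAULT_RE.search(line)
    if m is None:
        return None
    err = int(m["err"], 16)
    ip = int(m["ip"], 16)
    base = int(m["base"], 16)
    return {
        "process": m["comm"],
        "pid": int(m["pid"]),
        "fault_address": int(m["addr"], 16),
        # Offset of the faulting instruction inside the mapped region.
        "offset_in_mapping": ip - base,
        "page_present": bool(err & 0x1),
        "access": "write" if err & 0x2 else "read",
        "mode": "user" if err & 0x4 else "kernel",
    }


line = ("[32085.673877] shfs[5258]: segfault at 0 ip 0000000000403bd6 "
        "sp 0000149d0d945930 error 4 in shfs[403000+c000]")
info = decode_segfault(line)
print(info)
print(hex(info["offset_in_mapping"]))
```

Read this way, the line describes shfs reading from address 0 in user mode, i.e. what looks like a NULL pointer dereference at offset 0xbd6 into the mapped region of the shfs binary. That does not say what triggered it, but it does point to a bug in shfs itself.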