Posts posted by mickh18

-

3 hours ago, dlandon said:

Do any of the other tabs show anything? Like Dashboard?

Dashboard and others are OK

-

1 hour ago, mickh18 said:

Hi Josh

Not sure what's changed, but I've been using the staging branch perfectly fine for a few weeks, and since 18th April it has just stopped processing files even though the watcher and run on start are enabled. Even though there are new files for processing, it's not adding them to the queue.

I've changed to the latest build, and it then found the outstanding files that require processing, but none of the workers begin to work.

Can you please help?

Ignore this. I've just read the previous post and have deleted the db. It's sprung back into life :)

-

Hi Josh

Not sure what's changed, but I've been using the staging branch perfectly fine for a few weeks, and since 18th April it has just stopped processing files even though the watcher and run on start are enabled. Even though there are new files for processing, it's not adding them to the queue.

I've changed to the latest build, and it then found the outstanding files that require processing, but none of the workers begin to work.

Can you please help?

-

5 hours ago, Josh.5 said:

Sorry, just double checked. I only pushed the fix to the master branch. I've just applied it to the staging branch also. Give it 10 mins and then there should be an update for you to pull.

Sorry again.

Many thanks, Josh. I've now managed to install it, but I'm still losing access to the UI as soon as it starts encoding.

Log attached.

-

14 minutes ago, Josh.5 said:

Sorry, just double checked. I only pushed the fix to the master branch. I've just applied it to the staging branch also. Give it 10 mins and then there should be an update for you to pull.

Sorry again.

Many thanks, will test.

-

3 hours ago, Josh.5 said:

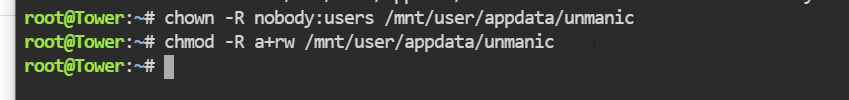

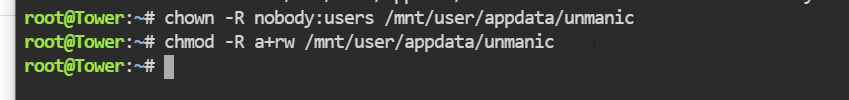

Could you please try modifying the permission on your appdata/unmanic directory to:

chown -R nobody:users

chmod -R a+rw

Then restart the container

No joy
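In case it helps anyone checking whether the suggested `chmod -R a+rw` actually took effect, here is a minimal Python sketch that lists anything under a folder still missing a read or write bit. The function name `missing_rw` is made up for illustration, and the path you pass in is an assumption (on my box the appdata folder is `/mnt/user/appdata/unmanic`, as in the docker command further down). It only looks at permission bits, not ownership.

```python
import os
import stat
import tempfile

# The six permission bits that `chmod a+rw` switches on.
RW_ALL = (stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP | stat.S_IWGRP
          | stat.S_IROTH | stat.S_IWOTH)


def missing_rw(root):
    """Return paths under root (root included) that lack any a+rw bit."""
    bad = []
    for dirpath, _dirnames, filenames in os.walk(root):
        # Check the directory itself, then every file inside it.
        candidates = [dirpath] + [os.path.join(dirpath, f) for f in filenames]
        for path in candidates:
            mode = os.lstat(path).st_mode
            if stat.S_ISLNK(mode):
                continue  # symlink permissions are not meaningful here
            if mode & RW_ALL != RW_ALL:
                bad.append(path)
    return bad


if __name__ == "__main__":
    # Demo on a throwaway directory; point it at your own appdata path instead.
    demo = tempfile.mkdtemp()
    print(missing_rw(demo))
```

An empty list means every file and folder has read and write set for user, group and other. Ownership could be checked the same way by comparing `st_uid` and `st_gid` against the PUID and PGID the container runs with.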

-

21 minutes ago, Josh.5 said:

could you also post your config

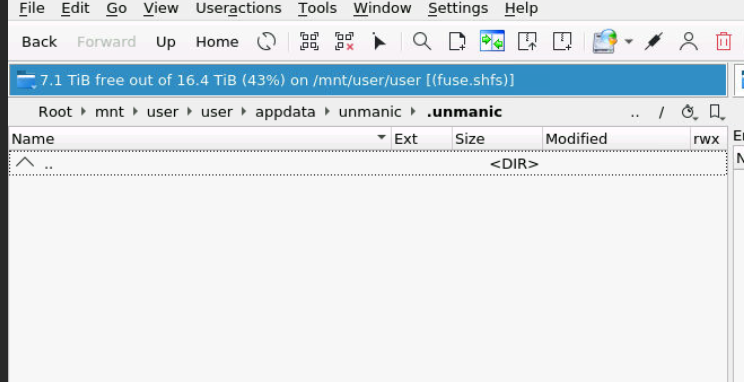

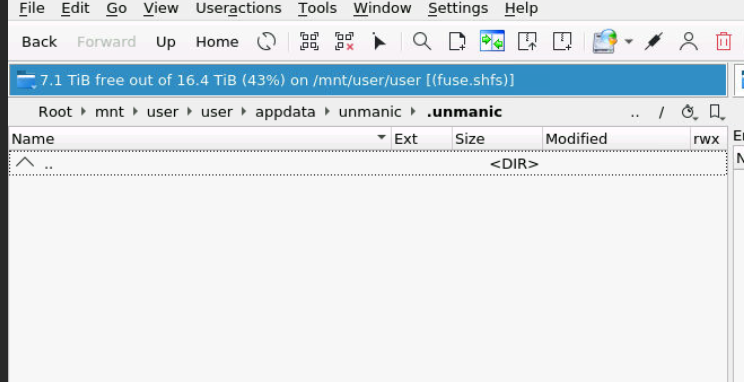

Many thanks for the quick response. I'm looking in the appdata folder but nothing is visible. I've tried reinstalling again.

Below is a screenshot and also the docker template output from the install. I just can't figure this out.

Command:root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='unmanic' --net='host' -e TZ="Europe/London" -e HOST_OS="Unraid" -e 'TCP_PORT_8888'='8888' -e 'PUID'='99' -e 'PGID'='100' -e 'NVIDIA_VISIBLE_DEVICES'='false' -v '/mnt/user/appdata/unmanic':'/config':'rw' -v '/mnt/user/media/movies':'/library/movies':'rw' -v '/mnt/user/media/tv':'/library/tv':'rw' -v '/mnt/user/transcode/unmanic':'/tmp/unmanic':'rw' 'josh5/unmanic'

ad7c21b71d2838341f57032be5656a733087da0847bc9155a1f99e71d3ae982c

The command finished successfully!

-

I can't access the UI at all and don't know what's happened. I've deleted all files and started over several times, and tried staging and latest, with and without GPU, on bridged and host networks. I'm pulling my hair out.

Logs attached.

-

Cool. Excited to try this out. How do I install it while it's in staging?

-

On 3/23/2021 at 3:09 PM, rmeaux said:

Happy to say I have fixed my issue. It's due to Unmanic not being compatible with the latest NVIDIA drivers. I had to downgrade to v460.39. Unmanic is happily transcoding again.

Hope this helps others.

-

On 3/18/2021 at 4:35 PM, rmeaux said:

Your symptoms sound close to mine. I was trying staging and my RAM and CPU would max out about 15% into the first transcode. I would lose web UI response for a bit and it would come back but be stuck on the same current transcode not doing anything. I went back to 0.0.1 and been running fine that way. Josh.5 thinks it may be related to Audio transcode. I tried disabling that while on staging but still had the same issue. I don't know anything about the backend of this so I am at his mercy. I love the work Josh is doing on unManic but I am perfectly happy on 0.0.1 for the time being. He'll get it. He's done nothing but improve it since its birth.

Interesting. How can I downgrade to a previous version of Unmanic on Unraid to see if it resolves my issues?

-

Hi, I need help please. Unmanic just isn't working how it used to. I'm on the latest Unraid OS 6.9 and have tried with 2 NVIDIA GPUs with the same results. I also keep losing access to the web UI. It's really weird, as I've not had any problems like this before.

I've deleted and cleared the data several times and retried installing, to no avail. Logs below:

        onerror(os.rmdir, path, sys.exc_info())
      File "/usr/lib/python3.6/shutil.py", line 488, in rmtree
        os.rmdir(path)
    OSError: [Errno 39] Directory not empty: '/tmp/unmanic/unmanic_file_conversion-1615972102.5668645'
    Running Unmanic from installed module
    Starting migrations
    There is nothing to migrate
    UnmanicLogger - SETUP LOGGER
    Clearing cache path - /tmp/unmanic/unmanic_file_conversion-1615972102.5668645
    Traceback (most recent call last):
      File "/usr/local/bin/unmanic", line 11, in <module>
        sys.exit(main())
      File "/usr/local/lib/python3.6/dist-packages/unmanic/service.py", line 403, in main
        service.run()
      File "/usr/local/lib/python3.6/dist-packages/unmanic/service.py", line 388, in run
        self.start_threads()
      File "/usr/local/lib/python3.6/dist-packages/unmanic/service.py", line 350, in start_threads
        common.clean_files_in_dir(settings.CACHE_PATH)
      File "/usr/local/lib/python3.6/dist-packages/unmanic/libs/common.py", line 105, in clean_files_in_dir
        shutil.rmtree(root)
      File "/usr/lib/python3.6/shutil.py", line 490, in rmtree
        onerror(os.rmdir, path, sys.exc_info())
      File "/usr/lib/python3.6/shutil.py", line 488, in rmtree
        os.rmdir(path)
    OSError: [Errno 39] Directory not empty: '/tmp/unmanic/unmanic_file_conversion-1615972102.5668645'
    Running Unmanic from installed module
    Starting migrations
    There is nothing to migrate
    UnmanicLogger - SETUP LOGGER
    Clearing cache path - /tmp/unmanic/unmanic_file_conversion-1615972102.5668645
    Traceback (most recent call last):
      File "/usr/local/bin/unmanic", line 11, in <module>
        sys.exit(main())
      File "/usr/local/lib/python3.6/dist-packages/unmanic/service.py", line 403, in main
        service.run()
      File "/usr/local/lib/python3.6/dist-packages/unmanic/service.py", line 388, in run
        self.start_threads()
      File "/usr/local/lib/python3.6/dist-packages/unmanic/service.py", line 350, in start_threads
        common.clean_files_in_dir(settings.CACHE_PATH)
      File "/usr/local/lib/python3.6/dist-packages/unmanic/libs/common.py", line 105, in clean_files_in_dir
        shutil.rmtree(root)
      File "/usr/lib/python3.6/shutil.py", line 490, in rmtree
        onerror(os.rmdir, path, sys.exc_info())
      File "/usr/lib/python3.6/shutil.py", line 488, in rmtree
        os.rmdir(path)
    OSError: [Errno 39] Directory not empty: '/tmp/unmanic/unmanic_file_conversion-1615972102.5668645'

-
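For the `OSError: [Errno 39] Directory not empty` traceback in the Unmanic log above: the crash comes from `shutil.rmtree` failing on the cache folder at startup, after which the container starts again and hits the same error. Below is a rough Python sketch of a cleanup that retries instead of crashing. This is not Unmanic's actual code; `clear_cache_dir`, `retries` and `delay` are names invented for illustration. It also assumes the directory is only temporarily non-empty (for example because something is still writing into it), which may not be the real cause.

```python
import os
import shutil
import tempfile
import time


def clear_cache_dir(path, retries=3, delay=0.5):
    """Remove a cache directory tree, retrying if removal fails.

    Returns True once the path no longer exists, False if it is still
    there after all attempts.
    """
    for attempt in range(retries):
        try:
            shutil.rmtree(path)
        except FileNotFoundError:
            # Already gone, so there is nothing left to clean up.
            return True
        except OSError as err:
            # e.g. [Errno 39] Directory not empty: wait briefly, then retry.
            print("attempt {} failed: {}".format(attempt + 1, err))
            time.sleep(delay)
        else:
            return True
    return not os.path.exists(path)


if __name__ == "__main__":
    # Demo on a throwaway directory rather than a real cache path.
    demo = os.path.join(tempfile.mkdtemp(), "unmanic_file_conversion-demo")
    os.makedirs(demo)
    print(clear_cache_dir(demo))
```

If the folder still cannot be removed after the retries, the function returns `False` rather than raising, so the caller can decide what to do next instead of exiting.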

Agreed, it's not best practice, but I use Cloudflare DNS and a reverse proxy, and I have a robust firewall.

Is there a way I can remove the unraid.net DNS element from my box?

-

I have added my server to unraid.net DNS, so my server is *****************.unraid.net, but I'm now unable to access my server remotely using my domain name.

How can I remove the unraid.net DNS entry to restore external access?

Thanks in advance 🙏

Code 43 with GPU passthrough on my Windows 11 VM

in VM Engine (KVM)

I've just tried the below and it's not working for me. Can you offer any further assistance please, as this is driving me crazy?

    <features>
      <acpi/>
      <apic/>
      <hyperv mode='custom'>
        <relaxed state='on'/>
        <vapic state='on'/>
        <spinlocks state='on' retries='8191'/>
        <vendor_id state='on' value='1234567890ab'/>
      </hyperv>
      <kvm>
        <hidden state='on'/>
      </kvm>
      <vmport state='off'/>
      <ioapic driver='kvm'/>
    </features>