aiden

Posts: 951


Posts posted by aiden

-


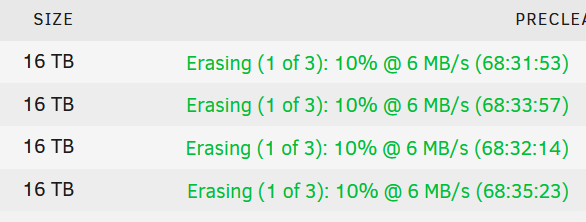

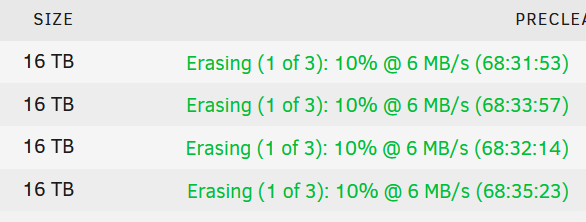

Hello,

I am trying to preclear 4 new 16TB Seagate Exos X16 drives. Read speeds averaged 100 MB/s, but the write speed is a horrible 6 MB/s. I have verified that write caching is enabled on all drives.

Ideas?
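To put those numbers in perspective, here is a rough estimate of how long one full pass takes at each speed. It assumes the speeds stay constant across the whole drive (in practice they drop toward the inner tracks) and that one preclear cycle is a pre-read, a zeroing write, and a post-read. At 6 MB/s the zeroing pass alone on a 16TB drive works out to about a month, versus under two days per read pass at 100 MB/s.

```python
SECONDS_PER_HOUR = 3600

def pass_hours(capacity_tb: float, mb_per_s: float) -> float:
    """Hours to read or write the whole drive once at a constant average speed.

    Uses decimal units, as drive vendors do: 1 TB = 1,000,000 MB.
    """
    return capacity_tb * 1_000_000 / mb_per_s / SECONDS_PER_HOUR

def cycle_hours(capacity_tb: float, read_mb_s: float, write_mb_s: float) -> float:
    # Assumption: one preclear cycle = pre-read + zeroing write + post-read.
    read_pass = pass_hours(capacity_tb, read_mb_s)
    return 2 * read_pass + pass_hours(capacity_tb, write_mb_s)

for label, speed in (("read @ 100 MB/s", 100), ("write @ 6 MB/s", 6)):
    hours = pass_hours(16, speed)
    print(f"{label}: {hours:.1f} h ({hours / 24:.1f} days)")
print(f"one full cycle: {cycle_hours(16, 100, 6) / 24:.1f} days")
```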

-

Okay, I've been absent for a while, and suddenly we're on v6.5.3 with 6.6 looming. The sysadmin in me just doesn't trust the "Check for Updates" easy button in the unRAID GUI, especially given how complicated past updates have been (I've been using unRAID since the 4.x days).

I've been reading countless update threads for the past hour, trying to discern which version is currently the generally accepted stable one (6.4.1, 6.5.0, 6.5.1...?), and I just can't get a good handle on what to upgrade to. What is the recommended update procedure from v6.3? Which version should I go to?

System Config

- Single parity

- All drives are ReiserFS, including cache

I noted several variations of upgrade issues for different users depending on their configuration, so I'm listing my add-ons below in case anything stands out as a "definitely disable / delete and reinstall" situation.

Docker

- MySQL

Plugins

- Community Applications (Andrew Zawadzki) 2017.11.23

- CA Auto Update Applications (Andrew Zawadzki) 2017.10.28

- CA Backup/Restore App Data (Andrew Zawadzki) 2017.01.28

- CA Cleanup App Data (Andrew Zawadzki) 2017.11.24a

- Dynamix System Buttons (Bergware) 2017.06.07

- Dynamix System Information (Bergware) 2017.8.23

- Dynamix System Statistics (Bergware) 2017.10.02b

- Dynamix WebGUI (Bergware) 2017.03.30

- Fix Common Problems (Andrew Zawadzki) 2017.10.15a

- Nerd Tools (dmacias72) 2017.10.03a

- Plex Media Server (PhAzE) 2016.09.17.1

- SABnzbd (PhAzE) 2016.11.29.1

- Sick Beard (PhAzE) 2016.09.17.1

- Unassigned Devices (dlandon) 2017.10.17

I have been totally stable with this build, and the only reason I want to upgrade is that Community Applications will no longer update unless I'm at least on version 6.4.

I appreciate any advice and experiences with these versions to help me formulate an upgrade path.

-

I find myself needing to upgrade my hardware to a new chassis. I have been looking hard at 45 Drives, but they are spendy. I've always loved the look of the Xserve design, and it's possible to purchase SEVERAL of them for the price of one empty Storinator case.

I realize that it would take 10U (3 Xserve RAIDs + 1 Xserve) to equal the capacity of a 4U Storinator. But the aesthetics are a different story. I've noticed cooling is a concern, but that seems to depend on fan placement and channeling. So my question is: is it worth spending the time and money to buy some of these old girls and rip their guts out? For those of you who have embarked on this journey, do all the status indicators still work? Any other gotchas I should consider?

Thanks!
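The 10U figure checks out if each Xserve RAID is 3U with 14 bays and the Xserve is 1U with 3 bays, compared against a 45-bay Storinator in 4U. Those per-model figures are my assumptions for this sketch, so verify them against the exact units you find. It just totals rack units and bays and shows the density gap.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Chassis:
    name: str
    rack_units: int
    drive_bays: int

# Assumed figures for illustration; check the exact models you buy.
XSERVE_RAID = Chassis("Xserve RAID", 3, 14)
XSERVE = Chassis("Xserve", 1, 3)
STORINATOR = Chassis("Storinator (45-bay)", 4, 45)

def totals(parts: list[Chassis]) -> tuple[int, int]:
    """Return (total rack units, total drive bays) for a stack of chassis."""
    return sum(c.rack_units for c in parts), sum(c.drive_bays for c in parts)

xserve_build = [XSERVE_RAID] * 3 + [XSERVE]
units, bays = totals(xserve_build)
print(f"Xserve build: {bays} bays in {units}U ({bays / units:.1f} bays per U)")
print(f"Storinator: {STORINATOR.drive_bays} bays in {STORINATOR.rack_units}U "
      f"({STORINATOR.drive_bays / STORINATOR.rack_units:.2f} bays per U)")
```

Same capacity, but roughly 2.5x the rack space, which matters less if aesthetics are the deciding factor.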

-

To my mind, the biggest advantage of ZFS is its self-healing, which protects against bit rot. That is ideal for a hard disk archive. But the downsides have already been mentioned: no mixed drives, multiple drives per pool means all drives spin up together, etc. The merits of ReiserFS have been discussed in ancient threads on here, but the primary reason it's still in use is its robust journaling. BTRFS is most likely the path forward, though there has been no mention of it beyond the cache drives.
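The self-healing idea rests on keeping a checksum for every block, stored apart from the block itself. A plain mirror or parity check can tell that copies disagree, but without a checksum it can't tell which copy is right. The toy model below shows the idea with a two-way mirror and SHA-256. It is a sketch of the technique, not how ZFS is implemented; ZFS has its own checksum algorithms and on-disk layout.

```python
import hashlib

class ToyMirror:
    """Two-way mirror that keeps a checksum per block, stored apart from the data.

    A teaching model of checksum-based self-healing, not ZFS's implementation.
    """

    def __init__(self) -> None:
        self.disks: list[dict[int, bytes]] = [{}, {}]  # block id -> data, one dict per disk
        self.checksums: dict[int, bytes] = {}          # block id -> expected SHA-256 digest

    @staticmethod
    def _digest(data: bytes) -> bytes:
        return hashlib.sha256(data).digest()

    def write(self, block_id: int, data: bytes) -> None:
        for disk in self.disks:
            disk[block_id] = data
        self.checksums[block_id] = self._digest(data)

    def read(self, block_id: int) -> bytes:
        expected = self.checksums[block_id]
        for disk in self.disks:
            data = disk[block_id]
            if self._digest(data) == expected:
                # Found a good copy: rewrite any copy that fails verification.
                for other in self.disks:
                    if self._digest(other[block_id]) != expected:
                        other[block_id] = data
                return data
        raise OSError(f"every copy of block {block_id} fails its checksum")

mirror = ToyMirror()
mirror.write(0, b"family photos")
mirror.disks[0][0] = b"family phot0s"   # simulate bit rot on the first disk
print(mirror.read(0))                   # b'family photos', served from the second disk
print(mirror.disks[0][0])               # b'family photos', first disk repaired
```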

-

I generally run 2 cycles of pre-clear, although some like to run 3. The reality is the vast majority of issues (if any) will occur on the 1st pass, so I think 2 is plenty.

I suspect it will be a LONG time before you need more space than the 4TB drives will provide.

I would agree with you, if I hadn't detected errors on the third pass on 2 drives in the past few years. If I had only done 2 cycles, they would have passed. I know there's a point of diminishing returns with repeated cycles, and someone could argue that 4 cycles caught a failure. But after my experiences, 3 passes are worth the wait in my mind.

-

Very nice... thanks for posting this update.

-

... The cost on these kinds of cases is very prohibitive. Could you provide a link to where you are sourcing yours?

He listed the case he's using with the parts list -- HARDIGG CASES 413-665-2163

... and a quick Google for this case shows they are available on eBay for exactly what he's listed ($184.23), so I suspect that's where he purchased it. http://www.ebay.com/itm/HARDIGG-CASES-413-665-2163-SHIPPING-STORAGE-HARD-CASE-/190709478495

Interesting, because my "quick" Google search came back with 413-665-2163 as the TELEPHONE number for Hardigg. That's why I asked the question. My guess is that it is in fact NOT the part number for the actual item, and that the eBay listing got it wrong. I appreciate your attempt to answer for him, but you of all people should know I do plenty of my own research before I start asking questions. If that is where the OP found the item, then so be it. But I would rather hear it from him, just the same.

-

Very nice. Looks like you are off to a good start. Which piece will be next? If you think it is heavy now, wait until you add in the UPS.

More importantly, how will he/she move it? I wouldn't want that thing sitting in my living room, lol.

This is no joke. With 24 hard drives in the unRAID server, another server, a rackmount UPS, and the weight of the case, this will weigh a LOT. Why one large case instead of several smaller ones? I would think that when going mobile, several smaller cases would make the whole system easier to move.

EDIT: Never mind. The cost on these kinds of cases is very prohibitive. Could you provide a link to where you are sourcing yours?

I like the idea of a modular setup like this, because it's a completely self-contained, enterprise-quality infrastructure in a half-height rack. I will watch this DIY with interest.

-

That's definitely a proof of concept. The airflow is completely wrong, however. You need the fans to blow across ALL the drives, not hit the first one flat and disperse around the rest. If you follow that layout exactly, you would need to put a fan on the bottom and top, pulling air from the bottom and exhausting out the top. Whatever you decide to do, keep that in mind.

-

I went with an ammo can after seeing a similar build, and because I can't find any super small cases. I did find this:

http://www.kustompcs.co.uk/acatalog/info_0790.html

But I'm not sure I can find it in the US, and I don't want to sink a ton of money into this system anyway.

It IS available in the US, but it is out of stock right now.

http://www.u-nas.com/xcart/product.php?productid=17617&cat=249

-

A more efficient board with a good PCIe x16 24-port controller would be more efficient...

I agree. Plus, redundancy would make things more redundant, which is always more durable on dedicated systems.

-

Love my Coolspins. They are also the drives of choice for Backblaze.

-

There's something to be said for having some extra power on board if you need it. When the 5TB drives come along and you get that upgrade itch, the drives may be more power hungry than your current drives. Then you'd have to upgrade the PSU as well. Just more food for your brain.

-

Anyone have a way I can get back to rc12a? This one will not sleep and resume properly. Once it sleeps and wakes up, it is not accessible, and I don't have time to troubleshoot. 12a worked perfectly for me.

Cheers, Dan

http://download.lime-technology.com/download/

EDIT: Lol... Lainie beat me to it.

-

I hate cross-posting, but this seems relevant to this discussion as well:

Remember that Backblaze is currently using two 760W PSUs for their 45-drive Storage Pods, plus one more OS drive. From the blog post:

There is more to power than just Watts. ATX power supplies deliver power at several voltages or ‘rails’ (12V, 5V, 3.3V, etc). Each vendor imposes unique limits on the amount of power you can draw off of each rail and unused power on one rail cannot be used on another. In particular, most high end power supplies are designed to deliver most of their power on the 12V rail because that is what high end gamer PCs use. Unfortunately, hard drives draw a lot of power off the 5V rail and can easily overwhelm a high wattage power supply. You will hit serious problems if power requirements for each component are not met so be careful if you don’t use the power supplies we recommend.

The specs for their recommended power supply, the Zippy 760W PSM-5670V:

VOLTAGE: 90 ~ 264 VAC FULL RANGE

FREQUENCY : 47 ~ 63 HZ

INPUT CURRENT: 12 A ( RMS ) FOR 115VAC / 6A ( RMS ) FOR 230VAC

INRUSH CURRENT: 20A MAX. FOR 115 VAC / 40A MAX. FOR 230 VAC

PFC CAN REACH THE TARGET OF 95% @230V, FULL LOAD

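| Output Voltage | Output Current Min. (A) | Output Current Max. (A) | Output Current Peak (A) | Regulation (Load) | Regulation (Line) | Output Ripple & Noise Max. [P-P] |
|---|---|---|---|---|---|---|
| +5V | 0.5 | 25.00 | | ±5% | ±1% | 50mV |
| +12V | 1 | 55.00 | | ±5% | ±1% | 120mV |
| -12V | 0 | 0.8 | | ±5% | ±1% | 120mV |
| +3.3V | 0.5 | 25 | | ±5% | ±1% | 50mV |
| +5VSB | 0.1 | 3.5 | | ±5% | ±1% | 50mV |

* TEMPERATURE RANGE: OPERATING 0C ~ 50C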

* HOLD UP TIME: WHEN POWER SHUTDOWN DC OUTPUT 5V MUST BE MAINTAIN 16MSEC IN REGULATION LIMIT AT NORMAL INPUT VOLTAGE

* HUMIDITY: OPERATING:20%-80%, NON-OPERATING:10%-90%

* EFFICIENCY: TYPICAL 80-85% AT 25C 115V FULL LOAD

This is in a production environment designed for long term storage, where customers will not tolerate a lot of "poof" type failures.

They are effectively running twice as many drives as we can on one unRAID installation, on about 1500W worth of PSUs. Halving their requirements suggests that one sub-1000W PSU with a beefy 5V rail would be sufficient for 20+ drives, the motherboard, and the processor. Clearly they avoided the surprisingly 5V-hungry WD drives.

Based on their recommendations, I would submit that the Zippy 860W PSM-5860V (the bigger brother) could probably handle the job of a 24-drive, non-WD-based unRAID server with a moderate processor.
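Backblaze's point about the 5V rail is easy to check with a little arithmetic. This sketch spreads the drives across the PSUs and compares their combined +5V draw against the 25 A maximum listed for the Zippy's +5V rail. The even split and the per-drive currents are assumptions for illustration, not measured values, and it ignores the motherboard's own +5V load.

```python
import math

ZIPPY_5V_MAX_A = 25.0  # +5V maximum output current from the PSM-5670V spec sheet

def drives_on_busiest_psu(total_drives: int, psu_count: int) -> int:
    # Assumption: drives are spread across the PSUs as evenly as possible.
    return math.ceil(total_drives / psu_count)

def rail_headroom_a(drives: int, amps_per_drive: float, rail_limit_a: float) -> float:
    """Amps left on the rail after the drives; negative means overloaded.

    Ignores motherboard, fans, and other +5V loads, so real headroom is lower.
    """
    return rail_limit_a - drives * amps_per_drive

per_psu = drives_on_busiest_psu(45, 2)  # 23 drives on the busier PSU
print(f"{per_psu} drives per PSU -> at most {ZIPPY_5V_MAX_A / per_psu:.2f} A of +5V per drive")

# Hypothetical per-drive +5V draws; look up the real figure on your drive's datasheet.
for amps in (0.5, 0.7, 1.2):
    print(f"{amps} A/drive: {rail_headroom_a(per_psu, amps, ZIPPY_5V_MAX_A):+.1f} A headroom")
```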

-

Gary, you talk to yourself a lot, don't you?

-


There is wisdom in Jonathan's post about having enough power to cover a simultaneous startup of all components in the event the OS fails to stagger the drives.

Having said all of the above, it would be much less taxing on the system as a whole if the OS could natively stagger the startup of the drives.

-

Yes, you can use the array while it is rebuilding. That's part of the design. I would suggest you not write a lot to the array during the rebuild, as this will slow things down. Plus it always makes me a little nervous.

-

That is definitely food for thought, Jonathan.

-

What happens when a drive fails and all drives have to be spinning to rebuild the failed drive? If the PSU doesn't have enough juice in that situation, you will be completely SOL and more stuff will "blow up".

No problem -- the PSU has to have enough power for all drives to spin... but that's FAR less than the spinup current, which is typically 2-3 times the operating current.

I posted this in another thread that no doubt spawned Gary's desire to create this thread...

Here's a great breakdown of common high capacity drives and their power states (taken from Storage Review):

You can see just how much more drives consume at startup than during reads and writes. Staggered spin-up would allow smaller (by a factor of at least 2x) and more efficient PSUs to be used in our systems.
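The staggered spin-up argument can be put in numbers. This sketch computes the worst-case 12V current while an array starts, either all at once or in groups, where drives that have already spun up draw their running current while the next group draws spin-up current. The per-drive figures are hypothetical (spin-up at 2.5x the running current, inside the 2-3x range mentioned in this thread), as are the 24-drive array and the group size of 4; substitute your drives' datasheet values.

```python
def peak_12v_amps(n_drives: int, spinup_a: float, running_a: float, group_size: int) -> float:
    """Worst-case 12V current while the array spins up in groups.

    group_size == n_drives models every drive starting at once.
    """
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    peak = 0.0
    spinning = 0
    while spinning < n_drives:
        starting = min(group_size, n_drives - spinning)
        # The new group draws spin-up current; earlier groups draw running current.
        peak = max(peak, starting * spinup_a + spinning * running_a)
        spinning += starting
    return peak

# Hypothetical per-drive 12V figures.
SPINUP_A, RUNNING_A = 2.0, 0.8
all_at_once = peak_12v_amps(24, SPINUP_A, RUNNING_A, group_size=24)
staggered = peak_12v_amps(24, SPINUP_A, RUNNING_A, group_size=4)
print(f"all at once: {all_at_once:.1f} A ({all_at_once * 12:.0f} W on 12V)")
print(f"groups of 4: {staggered:.1f} A ({staggered * 12:.0f} W on 12V)")
```

With these made-up numbers, staggering in groups of 4 halves the peak (48 A vs 24 A). The real ratio depends on your drives and on how many start at once.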

-

If you measure your system's typical draw (with a Kill-a-Watt) I suspect you'll see that it's well below those numbers ... except for a parity check when all drives are spinning.


-

Yes, a few of us contributed to a script over the years. It started with Starcat, then me, then dstroot, then Guzzi. I don't know where that falls in line with this one, time-wise. The thread is here, and in my signature.

-

Wow, this is a very nice little case! Hopefully someone can do a full review on it soon.

EDIT: Found a user review - http://forums.overclockers.com.au/showthread.php?t=1084681

Seems like a very tight fit, which means it's likely about as small as you can get with that number of drives. Very efficient use of space, and surprisingly good cooling considering how crammed everything is. There are some QC issues with a little plastic and metal warping, but that's standard fare with these kinds of imports.

You need a super-low-profile CPU cooler, like the Noctua he's using. Since you'd have to add offboard SATA channels (like an M1015), you could use a 4-port mini-ITX board and still have enough ports for the job.

I'm starting to like this box quite a bit as a replacement for the Microserver, which is sadly removing a lot of mod-friendly features in the next generation.

-

FWIW...

Partition format: MBR: 4K-aligned

File sytem type: unknown

All of my disks show this until I start the array...

After the array is online, they show

Partition format: MBR: 4K-aligned

File sytem type: reiserfs

+1... every upgraded machine I have done from the "5bX" series to RC has exhibited the exact same behavior - MBR: 4K-aligned partition, unknown file system.

Since the wiki specifically states that MBR: unknown partition is the danger, I took the risk initially and started the array. All of the data drives had backups, so I was comfortable I could recover if needed. I've seen this over a half-dozen times now on various systems, and every time, the data drives stay intact when the array is started.

My guess is that something in the initial config phase is not correctly reading the filesystem, or is not relaying the correct string value to the GUI. Because if you take a look at the drives themselves, you can see the reiserfs partitions.

I'm not advocating starting the array if the experts tell you to wait. I'm just relaying my personal experience.
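If you want to look past what the GUI reports, the partition table itself is easy to inspect. This sketch parses the four primary entries of a 512-byte MBR and tests whether each partition's start is 4K-aligned. It assumes, from my understanding of unRAID's convention rather than anything in this thread, that "4K-aligned" means partition 1 starts at sector 64 instead of 63. Note that the MBR only records a partition type byte (0x83 is the generic Linux type), not the filesystem, so a correct-looking partition says nothing about whether the GUI can identify the ReiserFS inside it.

```python
import struct

SECTOR = 512

def mbr_partitions(mbr: bytes):
    """Yield (number, type_byte, start_lba, sector_count) for each used primary entry."""
    if len(mbr) < SECTOR or mbr[510:512] != b"\x55\xaa":
        raise ValueError("no MBR boot signature")
    for i in range(4):
        entry = mbr[446 + 16 * i: 446 + 16 * (i + 1)]  # 16-byte partition entries
        ptype = entry[4]
        start_lba, sectors = struct.unpack_from("<II", entry, 8)  # little-endian uint32s
        if ptype != 0:
            yield i + 1, ptype, start_lba, sectors

def is_4k_aligned(start_lba: int) -> bool:
    # A partition is 4K-aligned when its byte offset is a multiple of 4096.
    return (start_lba * SECTOR) % 4096 == 0
```

To run it against a real disk, read the first 512 bytes of the device (for example `/dev/sdX`, a placeholder) opened read-only as root and pass them to `mbr_partitions`. A start sector of 64 passes `is_4k_aligned`; 63 does not.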

Unassigned Devices Preclear - a utility to preclear disks before adding them to the array

in Plugin Support


All the logs look like this...

Mar 15 07:12:05 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:12:05 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:14:17 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:14:17 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:16:29 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:16:29 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:18:41 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:18:41 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:20:51 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:20:51 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:23:03 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:23:03 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:25:15 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:25:15 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:27:28 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:27:28 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:29:39 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:29:39 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:31:49 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:31:49 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:34:01 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:34:01 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:36:13 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:36:13 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:38:26 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:38:26 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:40:38 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:40:38 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:42:49 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

Mar 15 07:42:49 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
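Every entry is the same pair: `cp` fails to create `ZL2KAPEB.resume.tmp` with "No such file or directory", and `mv` then can't find the temp file that was never created. That `cp` message usually means the destination directory, here `/boot/preclear_reports`, doesn't exist when the copy runs; this is an inference from the error text, not from the plugin's source. The sketch below shows the write-temp-then-rename pattern the log implies, with the directory created first. `save_resume_state` is a hypothetical helper, not the plugin's code, and the final `.resume` file name is an assumption, since the log never shows the `mv` target. On the server itself, the shell equivalent of the first step is `mkdir -p /boot/preclear_reports`.

```python
import os
import tempfile

def save_resume_state(report_dir: str, serial: str, state: str) -> str:
    """Write <serial>.resume via a temp file, creating report_dir if needed.

    Hypothetical helper mirroring the cp-then-mv pattern in the log.
    """
    os.makedirs(report_dir, exist_ok=True)  # the step the log suggests is missing
    final_path = os.path.join(report_dir, f"{serial}.resume")  # assumed final name
    tmp_path = final_path + ".tmp"
    with open(tmp_path, "w") as f:
        f.write(state)
        f.flush()
        os.fsync(f.fileno())  # make sure the data is on disk before the rename
    os.replace(tmp_path, final_path)  # swap the new file in with a single rename
    return final_path

with tempfile.TemporaryDirectory() as scratch:
    # Stand-in for /boot; preclear_reports does not exist yet, as in the log.
    path = save_resume_state(os.path.join(scratch, "preclear_reports"), "ZL2KAPEB", "hypothetical state\n")
    print(os.path.basename(path), os.listdir(os.path.dirname(path)))
```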